1、找出当前CPU资源消耗最大的进程

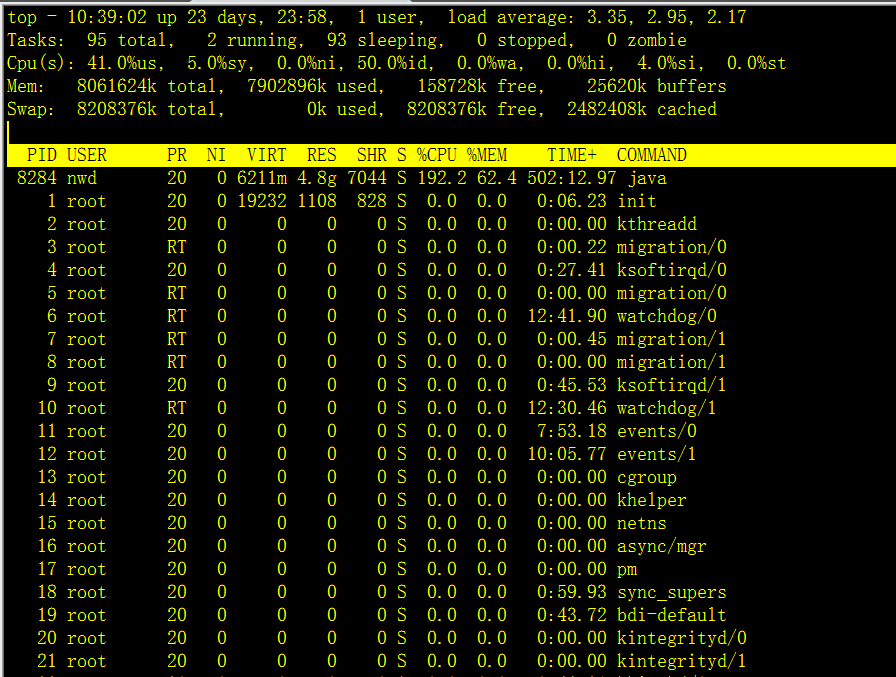

用top命令,发现java进程(pid:8424)CPU资源消耗达到192%,如下图:

确定java资源消耗过多。

2、打印当前JVM堆栈

jstack 8424 > /tmp/jstack.txt

堆栈信息见附件1

3、找到当前堆栈资源消耗多的线程

命令如下:

top -H -p8424

结果如下:

8320 nwd 20 0 6275m 4.8g 7044 R 44.6 62.7 3:57.00 java

8365 nwd 20 0 6275m 4.8g 7044 R 32.4 62.7 4:06.09 java

19016 nwd 20 0 6275m 4.8g 7044 R 22.5 62.7 0:24.47 java

8289 nwd 20 0 6275m 4.8g 7044 S 14.1 62.7 16:27.13 java

8356 nwd 20 0 6275m 4.8g 7044 S 13.5 62.7 6:40.18 java

8333 nwd 20 0 6275m 4.8g 7044 R 10.3 62.7 5:21.39 java

从上面查询出来的结果可以找到cpu消耗靠前的线程的pid,下一步根据pid从堆栈里面查找相应的线程。注意:这里pid是十进制的,线程nid是十六进制,要做进制转换。进制转换网址:http://tool.oschina.net/hexconvert/, 或者参考如下命令:

[ylp@ylp-web-01 ~]$ printf "%x\n" 14065

36f1

4、分析资源消耗靠前的线程

下面分析cpu资源消耗靠前的三个线程。

- 这里是列表文本线程pid=8320(0x2080)的线程堆栈如下:

"epollEventLoopGroup-3-5" prio=10 tid=0x00007fed00272000 nid=0x2080 runnable [0x00007fece29e6000]

java.lang.Thread.State: RUNNABLE

at sun.misc.Unsafe.unpark(Native Method)

at java.util.concurrent.locks.LockSupport.unpark(LockSupport.java:152)

at java.util.concurrent.locks.AbstractQueuedSynchronizer.unparkSuccessor(AbstractQueuedSynchronizer.java:662)

at java.util.concurrent.locks.AbstractQueuedSynchronizer.release(AbstractQueuedSynchronizer.java:1263)

at java.util.concurrent.locks.ReentrantLock.unlock(ReentrantLock.java:460)

at java.util.concurrent.LinkedBlockingQueue.signalNotEmpty(LinkedBlockingQueue.java:174)

at java.util.concurrent.LinkedBlockingQueue.offer(LinkedBlockingQueue.java:429)

at com.dianping.cat.message.io.DefaultMessageQueue.offer(DefaultMessageQueue.java:22)

at com.dianping.cat.analysis.PeriodTask.enqueue(PeriodTask.java:44)

at com.dianping.cat.analysis.Period.distribute(Period.java:85)

at com.dianping.cat.analysis.RealtimeConsumer.consume(RealtimeConsumer.java:53)

at com.dianping.cat.analysis.DefaultMessageHandler.handle(DefaultMessageHandler.java:28)

at com.dianping.cat.analysis.TcpSocketReceiver$MessageDecoder.decode(TcpSocketReceiver.java:147)

at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:247)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:147)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:333)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:319)

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:787)

at io.netty.channel.epoll.EpollSocketChannel$EpollSocketUnsafe.epollInReady(EpollSocketChannel.java:722)

at io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:326)

at io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:264)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:116)

at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:137)

at java.lang.Thread.run(Thread.java:745)

Locked ownable synchronizers:

- None

当前线程是消息接收器,从客户端传过来消息,然后交给consumer消费。

- 这里是列表文本线程pid=8365(0x20ad)堆栈信息如下:

"cat-Message-Gzip-19" daemon prio=10 tid=0x00007fed1c203800 nid=0x20ad runnable [0x00007fece0eb9000]

java.lang.Thread.State: RUNNABLE

at sun.misc.Unsafe.unpark(Native Method)

at java.util.concurrent.locks.LockSupport.unpark(LockSupport.java:152)

at java.util.concurrent.locks.AbstractQueuedSynchronizer.unparkSuccessor(AbstractQueuedSynchronizer.java:662)

at java.util.concurrent.locks.AbstractQueuedSynchronizer.release(AbstractQueuedSynchronizer.java:1263)

at java.util.concurrent.locks.ReentrantLock.unlock(ReentrantLock.java:460)

at java.util.concurrent.LinkedBlockingQueue.signalNotEmpty(LinkedBlockingQueue.java:174)

at java.util.concurrent.LinkedBlockingQueue.offer(LinkedBlockingQueue.java:429)

at com.dianping.cat.consumer.dump.LocalMessageBucketManager$MessageGzip.gzipMessage(LocalMessageBucketManager.java:303)

at com.dianping.cat.consumer.dump.LocalMessageBucketManager$MessageGzip.run(LocalMessageBucketManager.java:332)

at java.lang.Thread.run(Thread.java:745)

at org.unidal.helper.Threads$RunnableThread.run(Threads.java:294)

Locked ownable synchronizers:

- None

当前线程是处理消息分析结果压缩和解压的。

- 这里是列表文本线程pid=19016(0x4a48)的堆栈信息如下:

"Cat-RealtimeConsumer-ProblemAnalyzer-10-1" daemon prio=10 tid=0x00007fed181ed800 nid=0x4a48 runnable [0x00007fecd9c1c000]

java.lang.Thread.State: TIMED_WAITING (parking)

at sun.misc.Unsafe.park(Native Method)

- parking to wait for <0x0000000711fd73b8> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)

at java.util.concurrent.locks.LockSupport.parkNanos(LockSupport.java:226)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(AbstractQueuedSynchronizer.java:2082)

at java.util.concurrent.LinkedBlockingQueue.poll(LinkedBlockingQueue.java:467)

at com.dianping.cat.message.io.DefaultMessageQueue.poll(DefaultMessageQueue.java:49)

at com.dianping.cat.analysis.AbstractMessageAnalyzer.analyze(AbstractMessageAnalyzer.java:40)

at com.dianping.cat.analysis.PeriodTask.run(PeriodTask.java:80)

at java.lang.Thread.run(Thread.java:745)

at org.unidal.helper.Threads$RunnableThread.run(Threads.java:294)

Locked ownable synchronizers:

- None

该线程是分析当前消息是否是异常消息,如果是异常消息,要统计异常数及异常堆栈信息。

分析发现,资源消耗靠前的线程都是在正常处理业务,怀疑是不是消息负载太大导致CPU效果过高。想起来Cat自身有个state报表,查看一下当前消息处理的量。

5、总结

处理这种问题,首先在合适的时机打印出堆栈信息,找到资源消耗靠前的线程重点分析。

附件: 附件1

6、附打印top线程及jstack信息的脚本

#!/bin/sh

cd `dirname $0`

APP_HOME=`pwd`

typeset top=${1:-10}

#typeset pid=${2:-$(pgrep -u $USER java)}

typeset pid=`ps -ef | grep java | grep "${APP_HOME}" |awk '{print $2}'`

typeset tmp_file=./java${pid}.trace

top -H -p $pid -b -d 1 -n 1 | head -20 > $tmp_file

$JAVA_HOME/bin/jstack -l $pid >> $tmp_file

ps H -eo user,pid,ppid,tid,time,%cpu --sort=%cpu --no-headers\

| tail -$top\

| awk -v "pid=$pid" '$2==pid{print $4"\t"$6}'\

| while read line;

do

typeset nid=$(echo "$line"|awk '{printf("0x%x",$1)}')

typeset cpu=$(echo "$line"|awk '{print $2}')

awk -v "cpu=$cpu" '/nid='"$nid"'/,/^$/{print $0"\t"(isF++?"":"cpu="cpu"%");}' $tmp_file

done

1291

1291

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?