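A common setup: Filebeat collects the log data and ships it to Redis, which acts as a queue; Logstash then pulls the logs from Redis in one central place and filters them into a structured format.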
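The division of labor above can be sketched in-process. This is a minimal illustration under stated assumptions: a Python deque stands in for the Redis list named by the key "test", no Redis server or client library is involved, and the event shape {"message": ...} is a simplification (real Filebeat events carry more metadata fields). The point is only the queue semantics: the producer appends at the tail, the consumer reads from the head, so events come out in the order they were shipped.

```python
import json
from collections import deque

# Hypothetical in-memory stand-in for the Redis list named by key "test".
# No Redis server or client library is used in this sketch.
queues = {"test": deque()}


def ship(key, line):
    """Producer role (Filebeat): append one JSON-encoded event to the tail of the list."""
    # Assumed, simplified event shape; real Filebeat events carry more fields.
    queues[key].append(json.dumps({"message": line}))


def consume(key):
    """Consumer role (Logstash redis input): take events from the head, oldest first."""
    queue = queues[key]
    while queue:
        yield json.loads(queue.popleft())


# Hypothetical log lines, used only to show FIFO ordering.
ship("test", "20170401 19:55:09,247 INFO first event")
ship("test", "20170401 19:55:11,306 INFO second event")
messages = [event["message"] for event in consume("test")]
print(messages)
```

Because the queue sits between the two sides, Filebeat can keep shipping while Logstash is slow or restarting; the list simply grows until the consumer catches up.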

Filebeat configuration:

vim /etc/filebeat/filebeat.yml

filebeat.prospectors:
- input_type: log
  paths:
    - /tmp/test
  multiline.pattern: ^201
  multiline.negate: true
  multiline.match: after
output.redis:
  hosts: ["10.30.146.208"]
  port: 6379
  key: "test"

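This configuration is meant for Java-style logs, where one event often spans several lines. With multiline.negate: true and multiline.match: after, every line that does not start with 201 (the year prefix of the timestamp) is appended to the preceding line that does, so a multi-line entry such as an exception stack trace is shipped as a single event.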
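The grouping rule can be approximated in a few lines of Python. This is a sketch of the configured case only (negate: true, match: after), not Filebeat's implementation: it ignores options such as max_lines and timeout, and the Java-style sample lines are hypothetical, not taken from the original post.

```python
import re

# multiline.pattern from the Filebeat config: a new event starts with "201".
START = re.compile(r"^201")


def group_multiline(lines):
    """Approximate multiline.negate: true + multiline.match: after.

    Lines matching START open a new event; every other line is appended
    to the event opened most recently. max_lines and timeout are not modeled.
    """
    events = []
    for line in lines:
        # Sketch choice: a non-matching line with no open event starts one.
        if START.search(line) or not events:
            events.append(line)
        else:
            events[-1] += "\n" + line
    return events


# Hypothetical Java-style log excerpt (not from the original post).
sample = [
    "20170401 19:55:09,247 ERROR request failed",
    "java.lang.IllegalStateException: example",
    "\tat com.example.Demo.run(Demo.java:42)",
    "20170401 19:55:11,306 INFO next request",
]
events = group_multiline(sample)
for event in events:
    print(repr(event))
```

The four input lines collapse into two events: the exception message and stack frame stay attached to the ERROR line that precedes them.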

Using grok in Logstash:

[root@elk~]vim /etc/logstash/conf.d/test.conf

input {
  redis {
    host => "10.30.146.208"
    port => 6379
    key => "test"
    type => "message"
    data_type => "list"
  }
}

filter {
  grok {
    match => {
      "message" => "%{REQUEST_TIME:request_time} %{LOGLEVEL:log_level} Key:\[%{WORD:system}%{INT:sequence_id}%{SPACE}%{TRADE_TYPE:trade_type}%{SPACE}%{TRADE_ID:trade_id}%{SPACE}\],Information:%{GREEDYDATA}\[%{GREEDYDATA:method}\]"
    }
  }
}

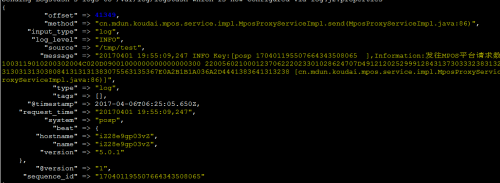

output {
  stdout {
    codec => "rubydebug"
  }
}

REQUEST_TIME: a custom pattern, i.e. a regular expression defined by the user rather than one shipped with Logstash.

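GREEDYDATA: the stock pattern .*, which greedily matches any run of characters. Use it for parts of a line that don't need to be searched on or split into separate fields.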
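The effect of "greedy" matters in the match expression above, where %{GREEDYDATA}\[%{GREEDYDATA:method}\] is expected to capture the last bracketed segment of the line. The sketch below compares GREEDYDATA (.*) with the lazy stock pattern DATA (.*?) using Python's re module; the input string is hypothetical, and Logstash's own regex engine may differ from Python's in other details, though greedy versus lazy quantifiers behave the same way here.

```python
import re

GREEDYDATA = r".*"  # stock grok definition: greedy
DATA = r".*?"       # stock grok definition: lazy

text = "a[b]c[d]"  # hypothetical input with two bracketed parts

greedy = re.search(rf"({GREEDYDATA})\[({GREEDYDATA})\]", text).groups()
lazy = re.search(rf"({DATA})\[({DATA})\]", text).groups()
print(greedy)  # ('a[b]c', 'd')
print(lazy)    # ('a', 'b')
```

With the greedy version, the leading unnamed part swallows everything up to the final "[", so the named capture gets the last bracketed segment; the lazy version stops at the first one.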

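To match content, you can use the ready-made patterns in /usr/share/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-4.0.2/patterns/, or define your own in a file in that directory. Note that pattern names should be written in uppercase.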
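How a pattern file and a %{NAME:field} expression fit together can be sketched in Python: each pattern file line is "NAME regex", and grok replaces every %{NAME:field} reference with the named regex wrapped in a capture group. This is an illustration of the idea, not Logstash's implementation (Python writes named groups as (?P<name>...)). Assumptions: the REQUEST_TIME definition is hypothetical, chosen to fit the sample timestamps; LOGLEVEL is a simplified stand-in for the much longer stock pattern; and the match expression is simplified to capture the whole Key:[...] part as one field, because the post does not give the definitions of TRADE_TYPE and TRADE_ID.

```python
import re

# Hypothetical pattern file content in the "NAME regex" format.
PATTERN_FILE = r"""
# assumed definition fitting timestamps like 20170401 19:55:11,306
REQUEST_TIME \d{8} \d{2}:\d{2}:\d{2},\d{3}
# simplified stand-in for the stock LOGLEVEL pattern
LOGLEVEL (?:TRACE|DEBUG|INFO|WARN|ERROR|FATAL)
GREEDYDATA .*
"""

REFERENCE = re.compile(r"%\{(\w+)(?::(\w+))?\}")  # %{NAME} or %{NAME:field}


def load_patterns(text):
    """Parse 'NAME regex' lines, skipping blank lines and # comments."""
    patterns = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            name, regex = line.split(None, 1)
            patterns[name] = regex
    return patterns


def expand(pattern, patterns):
    """Recursively replace %{NAME:field} with named groups (no cycle detection)."""
    def substitute(match):
        name, field = match.group(1), match.group(2)
        body = expand(patterns[name], patterns)
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return REFERENCE.sub(substitute, pattern)


# Simplified version of the match expression from the Logstash config.
GROK = (r"%{REQUEST_TIME:request_time} %{LOGLEVEL:log_level} "
        r"Key:\[%{GREEDYDATA:key}\],Information:%{GREEDYDATA}\[%{GREEDYDATA:method}\]")

# Second sample line from the post, with its hex payload shortened.
line = ("20170401 19:55:11,306 INFO Key:[xyz 170401195507664343508065 WX 1234 ],"
        "Information:test2:60000300006031003119010210003A00000ED000[abcdefg.java:88)]")

fields = re.search(expand(GROK, load_patterns(PATTERN_FILE)), line).groupdict()
print(fields)
```

Unnamed references such as the bare %{GREEDYDATA} become non-capturing groups, so only the named fields appear in the result, which mirrors how grok only adds named captures to the event.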

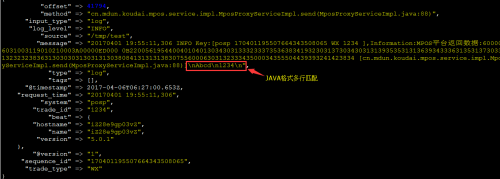

Test input:

20170401 19:55:09,247 INFO Key:[xyz170401195507664343508065 ],Information:test据:60000300006031003119010200302004C020D09001000000000000000300220056021000123706222023301028624707D49121202529991284313730333238313232323836313030303130313130380841313131383075563135367E0A2B1B1A036A2D4441383641313238[abcdefg.java:86)]

20170401 19:55:11,306 INFO Key:[xyz 170401195507664343508065 WX 1234 ],Information:test2:60000300006031003119010210003A00000ED000 0B220056195440040104013034303133323337353638341932303137303430313139353531313639343336313531373033323831323232383631303030313031313038084131313138307556000630313233343500034355504439393241423834[abcdefg.java:88)]

Abcd

1234

Reposted from: https://blog.51cto.com/landanhero/1913536
