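If you clicked into this article, you have probably run into some problem with Python crawlers, but... I can't help you with that! Hahaha! What I can do is walk you through exactly how I scraped temperatures from Moji Weather (墨迹天气), and I hope that helps!

Enough small talk. Thanks for reading!

(Python version: Python 3.) To write a Python crawler we need to understand the page's source code, but first let me give you the URL so you don't end up on the wrong page (which is unlikely anyway). The URL: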

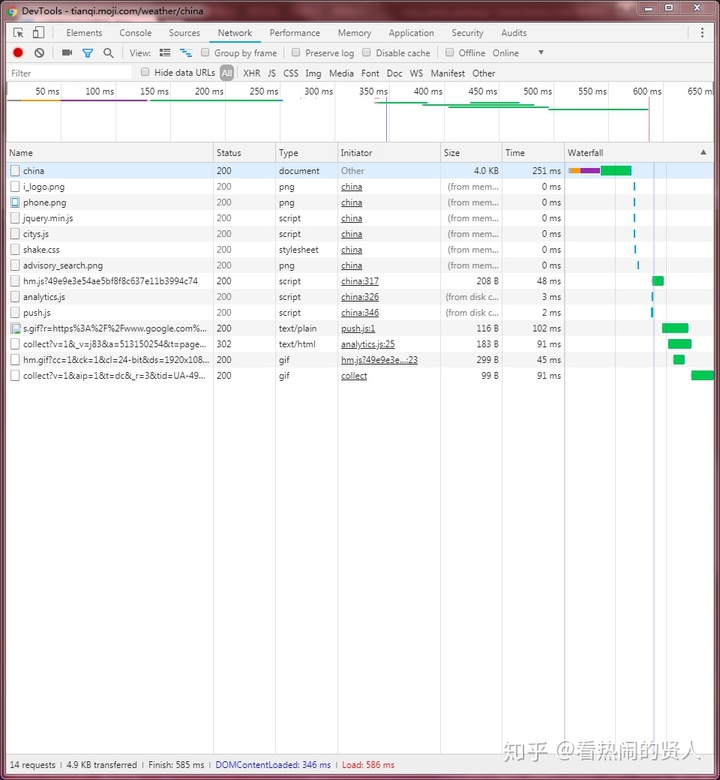

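全国景区天气预报_相关查询 - 墨迹天气 (tianqi.moji.com)

On that page, press F12 to open the developer tools, then press F5 to reload the page so you can inspect its source. It should now look like this: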

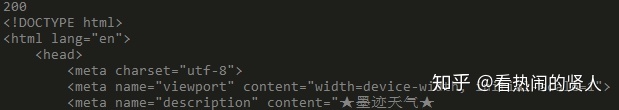

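Click the `china` entry and you will see some "code". Can't read it? No problem, neither can I, really! Fine, never mind it. Open your Python and import a few libraries:

    import requests
    import time
    import json
    from bs4 import BeautifulSoup as bs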

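(A quick explanation of these libraries; skip it if you already know them:

1. requests: a library commonly used for simple Python crawlers

2. time: used to measure how long the crawl takes

3. json: meant for recording what you scrape. In the full script at the end, the results are actually written with a plain `open()` to a txt file next to your crawler (covered below), so you can leave this import out

4. The `from bs4 import BeautifulSoup as bs` line is what parses the page you fetched)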

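As you probably know, servers tend to reject crawlers, so let's disguise ours:

Define `root_url` as the URL from the link card above (https://tianqi.moji.com/weather/china).

Remember dictionaries? If not, time to go back and review! In the developer tools, open the Headers tab and find Host and User-Agent under Request Headers (after repeated trials, my conclusion is that these two are all this site needs), then write them as a dictionary. Since we found them under Headers, let's name it `headers`.

Written out, it should look like this: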

    root_url = 'https://tianqi.moji.com/weather/china'
    headers = {
        'Host': 'tianqi.moji.com',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.105 Safari/537.36',
    }

Now our crawler is disguised as a browser. Next we need to find the provinces:

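Let's look at the Response tab for the page:

(Taking Anhui (安徽) as an example.) Notice that every province is wrapped in an `<a>` tag, and each of those is wrapped in an `<li>` tag. Keep working outward like this until you reach a `<div>` tag that carries a class attribute. That is the one we are looking for, because it wraps all of the provinces.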
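To make that nesting concrete, here is a minimal sketch that walks a trimmed copy of the province markup (taken from the output shown later in this post) and keeps only the links inside the `<div>` whose class list contains `city`. It is an illustration, not the code this post goes on to write: it uses the standard library's `html.parser` instead of BeautifulSoup so that it runs offline, and the class name `ProvinceLinkParser`, the sample string, and the extra `/elsewhere` link are assumptions made up for the example.

```python
from html.parser import HTMLParser


class ProvinceLinkParser(HTMLParser):
    """Collect (name, href) pairs for <a> tags inside <div class="city ...">."""

    def __init__(self):
        super().__init__()
        self.div_depth = 0        # 0 = outside the target div, 1+ = inside it
        self.current_href = None  # href of the <a> currently open, if any
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            classes = (attrs.get("class") or "").split()
            if self.div_depth > 0:
                self.div_depth += 1   # a nested div inside the target
            elif "city" in classes:
                self.div_depth = 1    # entered the target div
        elif tag == "a" and self.div_depth > 0:
            self.current_href = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "div" and self.div_depth > 0:
            self.div_depth -= 1
        elif tag == "a":
            self.current_href = None

    def handle_data(self, data):
        if self.current_href and data.strip():
            self.links.append((data.strip(), self.current_href))


# Trimmed sample; the final <a> is a made-up link outside the target div.
SAMPLE_HTML = """
<div class="city clearfix">
  <div class="city_title">全部省份</div>
  <dl class="city_list clearfix"><dt>A</dt><dd><ul>
    <li><a href="/weather/china/anhui">安徽</a></li>
  </ul></dd></dl>
  <dl class="city_list clearfix"><dt>B</dt><dd><ul>
    <li><a href="/weather/china/beijing">北京</a></li>
  </ul></dd></dl>
</div>
<a href="/elsewhere">not a province</a>
"""

parser = ProvinceLinkParser()
parser.feed(SAMPLE_HTML)
parser.close()
province_links = parser.links
print(province_links)
```

The link outside the `city` div is ignored, which is the whole point of locating the wrapping `<div>` first.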

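Once you've found it, let's keep coding. Just as we did in the browser, define `response` as the result of asking requests for the full content of the URL. (Apologies if that isn't described clearly; please read the code to see what I mean, thanks!) Written out, it looks like this:

    response = requests.get(root_url, headers=headers)

We should check our work after every step. How do we check this one? Look at Status Code in the Headers tab: it shows a green dot and 200 OK. That 200 is the key. The other thing to check is the content. To check something you have to be able to see it, so use `print()` to look at the details:

    print(response.status_code)
    print(response.text[:200])

This should print 200, followed by the first 200 characters of the page.

Once that checks out, comment these two lines out; they are no longer needed.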
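The two checks above (the status code, then a peek at the content) can also be wrapped in a small helper, so that a bad response stops the script instead of being parsed by mistake. This is a minimal sketch under assumptions: `check_response` is a hypothetical helper name, and the values passed in below are stand-ins for `response.status_code` and `response.text`.

```python
def check_response(status_code, text, preview_chars=200):
    """Return a short preview of the body, or raise if the status is not 200."""
    if status_code != 200:
        raise RuntimeError(f"unexpected status code: {status_code}")
    return text[:preview_chars]


# Stand-in values; in the crawler they would come from the response object.
preview = check_response(200, "<!DOCTYPE html><html>...</html>", preview_chars=15)
print(preview)
```

In the crawler itself the call would look like `check_response(response.status_code, response.text)`.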

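Now that we have the full page source, it's time to parse it and find the provinces:

The parsed result is conventionally named `soup`. Once the page is parsed, look inside it for the `<div>` tag (remember to include the class), then check the result. Passing `class_='city'` matches any `<div>` whose class list contains `city`, which is why the output below shows `class="city clearfix"`:

    soup = bs(response.text, 'lxml')
    shenfen_div = soup.find('div', class_='city')
    print(str(shenfen_div))  # comment this out once the output looks right

Output: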

<div class="city clearfix">

<div class="city_title">全部省份</div>

<dl class="city_list clearfix">

<dt>A</dt>

<dd>

<ul>

<li><a href="/weather/china/anhui">安徽</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>B</dt>

<dd>

<ul>

<li><a href="/weather/china/beijing">北京</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>C</dt>

<dd>

<ul>

<li><a href="/weather/china/chongqing">重庆</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>F</dt>

<dd>

<ul>

<li><a href="/weather/china/fujian">福建</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>G</dt>

<dd>

<ul>

<li><a href="/weather/china/gansu">甘肃</a></li>

<li><a href="/weather/china/guangdong">广东</a></li>

<li><a href="/weather/china/guangxi">广西</a></li>

<li><a href="/weather/china/guizhou">贵州</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>H</dt>

<dd>

<ul>

<li><a href="/weather/china/hainan">海南</a></li>

<li><a href="/weather/china/hebei">河北</a></li>

<li><a href="/weather/china/henan">河南</a></li>

<li><a href="/weather/china/hubei">湖北</a></li>

<li><a href="/weather/china/hunan">湖南</a></li>

<li><a href="/weather/china/heilongjiang">黑龙江</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>J</dt>

<dd>

<ul>

<li><a href="/weather/china/jilin">吉林</a></li>

<li><a href="/weather/china/jiangsu">江苏</a></li>

<li><a href="/weather/china/jiangxi">江西</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>L</dt>

<dd>

<ul>

<li><a href="/weather/china/liaoning">辽宁</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>N</dt>

<dd>

<ul>

<li><a href="/weather/china/inner-mongolia">内蒙古</a></li>

<li><a href="/weather/china/ningxia">宁夏</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>Q</dt>

<dd>

<ul>

<li><a href="/weather/china/qinghai">青海</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>S</dt>

<dd>

<ul>

<li><a href="/weather/china/shandong">山东</a></li>

<li><a href="/weather/china/shaanxi">陕西</a></li>

<li><a href="/weather/china/shanxi">山西</a></li>

<li><a href="/weather/china/shanghai">上海</a></li>

<li><a href="/weather/china/sichuan">四川</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>T</dt>

<dd>

<ul>

<li><a href="/weather/china/tianjin">天津</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>X</dt>

<dd>

<ul>

<li><a href="/weather/china/tibet">西藏</a></li>

<li><a href="/weather/china/xinjiang">新疆</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>Y</dt>

<dd>

<ul>

<li><a href="/weather/china/yunnan">云南</a></li>

</ul>

</dd>

</dl>

<dl class="city_list clearfix">

<dt>Z</dt>

<dd>

<ul>

<li><a href="/weather/china/zhejiang">浙江</a></li>

</ul>

</dd>

</dl>

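</div>

Finding the `<a>` tags works the same way (this time keep the last two lines, because you need to know which place each temperature belongs to):

    citys = shenfen_div.find_all('a')
    for city in citys:
        print(city.text)

Next, move on to the next level of pages (taking Anhui as an example) by collecting each province's link:

    city_hrefs = []
    for city in citys:
        city_hrefs.append(city['href'])
    # print('\n'.join(city_hrefs))

Output: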

安徽

北京

重庆

福建

甘肃

广东

广西

贵州

海南

河北

河南

湖北

湖南

黑龙江

吉林

江苏

江西

辽宁

内蒙古

宁夏

青海

山东

陕西

山西

上海

四川

天津

西藏

新疆

云南

浙江

/weather/china/anhui

/weather/china/beijing

/weather/china/chongqing

/weather/china/fujian

/weather/china/gansu

/weather/china/guangdong

/weather/china/guangxi

/weather/china/guizhou

/weather/china/hainan

/weather/china/hebei

/weather/china/henan

/weather/china/hubei

/weather/china/hunan

/weather/china/heilongjiang

/weather/china/jilin

/weather/china/jiangsu

/weather/china/jiangxi

/weather/china/liaoning

/weather/china/inner-mongolia

/weather/china/ningxia

/weather/china/qinghai

/weather/china/shandong

/weather/china/shaanxi

/weather/china/shanxi

/weather/china/shanghai

/weather/china/sichuan

/weather/china/tianjin

/weather/china/tibet

/weather/china/xinjiang

/weather/china/yunnan

/weather/china/zhejiang

These links are relative paths, so prepend the host to get full URLs:

    host_url = 'https://tianqi.moji.com'
    full_city_hrefs = []
    for city in city_hrefs:
        full_city_hrefs.append(host_url + city)
    # print('\n'.join(full_city_hrefs))
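As an aside, the same joining step can be sketched with `urllib.parse.urljoin` from the standard library, which also leaves an already absolute link untouched. This is an alternative for illustration, not what the post's script does; the two-item `city_hrefs` list is a shortened sample, and the `hefei` URL is a hypothetical example of an absolute link.

```python
from urllib.parse import urljoin

host_url = "https://tianqi.moji.com"
city_hrefs = ["/weather/china/anhui", "/weather/china/beijing"]  # shortened sample

# Relative paths are resolved against the host.
full_city_hrefs = [urljoin(host_url, href) for href in city_hrefs]

# An absolute URL passes through unchanged (hypothetical city link).
absolute = urljoin(host_url, "https://tianqi.moji.com/weather/china/anhui/hefei")
```

Plain string concatenation is fine as long as every link is a relative path starting with `/`, which is the case for the province links here.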

Output (the province names, then the full URLs):

安徽

北京

重庆

福建

甘肃

广东

广西

贵州

海南

河北

河南

湖北

湖南

黑龙江

吉林

江苏

江西

辽宁

内蒙古

宁夏

青海

山东

陕西

山西

上海

四川

天津

西藏

新疆

云南

浙江

https://tianqi.moji.com/weather/china/anhui

https://tianqi.moji.com/weather/china/beijing

https://tianqi.moji.com/weather/china/chongqing

https://tianqi.moji.com/weather/china/fujian

https://tianqi.moji.com/weather/china/gansu

https://tianqi.moji.com/weather/china/guangdong

https://tianqi.moji.com/weather/china/guangxi

https://tianqi.moji.com/weather/china/guizhou

https://tianqi.moji.com/weather/china/hainan

https://tianqi.moji.com/weather/china/hebei

https://tianqi.moji.com/weather/china/henan

https://tianqi.moji.com/weather/china/hubei

https://tianqi.moji.com/weather/china/hunan

https://tianqi.moji.com/weather/china/heilongjiang

https://tianqi.moji.com/weather/china/jilin

https://tianqi.moji.com/weather/china/jiangsu

https://tianqi.moji.com/weather/china/jiangxi

https://tianqi.moji.com/weather/china/liaoning

https://tianqi.moji.com/weather/china/inner-mongolia

https://tianqi.moji.com/weather/china/ningxia

https://tianqi.moji.com/weather/china/qinghai

https://tianqi.moji.com/weather/china/shandong

https://tianqi.moji.com/weather/china/shaanxi

https://tianqi.moji.com/weather/china/shanxi

https://tianqi.moji.com/weather/china/shanghai

https://tianqi.moji.com/weather/china/sichuan

https://tianqi.moji.com/weather/china/tianjin

https://tianqi.moji.com/weather/china/tibet

https://tianqi.moji.com/weather/china/xinjiang

https://tianqi.moji.com/weather/china/yunnan

https://tianqi.moji.com/weather/china/zhejiang

The code from here on is much the same as above, so I won't explain it in detail. For each province page, collect the city links:

    for distinct_url in full_city_hrefs:
        response = requests.get(distinct_url, headers=headers)
        # print(response.status_code)
        soup = bs(response.text, 'lxml')
        fanchang_div = soup.find('div', class_='city_hot')
        print(fanchang_div.text)
        cat = fanchang_div.find_all('a')
        dog_hrefs = []
        for dog in cat:
            dog_hrefs.append(dog['href'])
        # print('\n'.join(dog_hrefs))

Once those are found, look up the temperature in the same way (this loop sits inside the `for distinct_url` loop above):

        for local in dog_hrefs:
            response = requests.get(local, headers=headers)
            # print(response.status_code)
            soup = bs(response.text, 'lxml')
            dizhi_div = soup.find('div', class_='search_default')
            zishu = dizhi_div.find('em')
            qiwen_div = soup.find('div', class_='wea_weather')
            # print(str(qiwen_div))
            shuzi = qiwen_div.find('em')
            print("{} {}".format(shuzi.text, zishu.text))

That's it. Finally, add saving to a file, timing, some tidying up, and exception handling, and the result looks like this:

    import requests
    import time
    import json  # not used below; safe to remove
    from bs4 import BeautifulSoup as bs


    def run():
        start = time.time()
        root_url = 'https://tianqi.moji.com/weather/china'
        host_url = 'https://tianqi.moji.com'
        headers = {
            'Host': 'tianqi.moji.com',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.105 Safari/537.36',
        }

        # Province index page: collect the full URL of every province.
        response = requests.get(root_url, headers=headers)
        soup = bs(response.text, 'lxml')
        shenfen_div = soup.find('div', class_='city')
        citys = shenfen_div.find_all('a')
        full_city_hrefs = [host_url + city['href'] for city in citys]

        for distinct_url in full_city_hrefs:
            response = requests.get(distinct_url, headers=headers)
            soup = bs(response.text, 'lxml')
            jianggan_div = soup.find('div', class_='city_hot')
            if jianggan_div is None:
                # find() returns None when the block is missing: fetch once more.
                response = requests.get(distinct_url, headers=headers)
                soup = bs(response.text, 'lxml')
                jianggan_div = soup.find('div', class_='city_hot')
            if jianggan_div is None:
                print("Something went wrong")
                continue

            han_hrefs = [han['href'] for han in jianggan_div.find_all('a')]
            print('\n'.join(han_hrefs))

            for local in han_hrefs:
                try:
                    response = requests.get(local, headers=headers)
                except requests.RequestException:
                    # Wait a second and retry once before skipping this city.
                    try:
                        time.sleep(1)
                        response = requests.get(local, headers=headers)
                    except requests.RequestException:
                        print("Something went wrong")
                        continue

                soup = bs(response.text, 'lxml')
                dizhi_div = soup.find('div', class_='search_default')
                qiwen_div = soup.find('div', class_='wea_weather')
                if dizhi_div is None or qiwen_div is None:
                    print("Something went wrong")
                    continue
                zishu = dizhi_div.find('em')  # place name
                shuzi = qiwen_div.find('em')  # temperature
                if zishu is None or shuzi is None:
                    print("Something went wrong")
                    continue

                print("{} {}".format(shuzi.text, zishu.text))
                # Append one "temperature place" line per city to the txt file.
                with open('mojideqiwen.txt', 'a', encoding='utf-8') as fo:
                    fo.write(shuzi.text + " ")
                    fo.write(zishu.text.replace(" ", "") + "\n")
                # time.sleep(1)

        end = time.time()
        print("time = {}".format(end - start))


    run()

And that's the whole process I used to scrape temperatures from Moji Weather. I hope it helps!
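One pattern in the script above, retrying a request once after a short pause, could also be pulled out into a small helper instead of nesting `try` blocks. The following is only a sketch under assumptions: `call_with_retry` and `flaky_fetch` are hypothetical names, and the failing function simulates a network error so the example runs offline.

```python
import time


def call_with_retry(func, retries=1, delay=1.0):
    """Call func(); on an exception, wait `delay` seconds and try again.

    Up to `retries` extra attempts are made; the last exception is re-raised.
    """
    for attempt in range(retries + 1):
        try:
            return func()
        except Exception:
            if attempt == retries:
                raise
            time.sleep(delay)


# Stand-in for a request that fails on the first call and then succeeds.
calls = []


def flaky_fetch():
    calls.append(1)
    if len(calls) == 1:
        raise ConnectionError("simulated network error")
    return "ok"


result = call_with_retry(flaky_fetch, delay=0)
print(result, len(calls))
```

In the crawler, the call could look like `call_with_retry(lambda: requests.get(local, headers=headers))`, with a surrounding `try` to print the error message and `continue` when the retry also fails.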

Don't go yet! One small request: give this post an upvote!
