原标题:Hive分析统计离线日志信息

关注公众号:分享电脑学习

回复"百度云盘" 可以免费获取所有学习文档的代码(不定期更新)

云盘目录说明:

tools目录是安装包

res 目录是每一个课件对应的代码和资源等

doc 目录是一些第三方的文档工具

承接上一篇文档《新增访客数量MR统计之MR数据输出到MySQL》

hive-1.2.1的版本可以直接映射HBase已经存在的表

如果说想在hive创建表,同时HBase不存在对应的表,也想做映射,那么采用编译后的hive版本hive-1.2.1-hbase

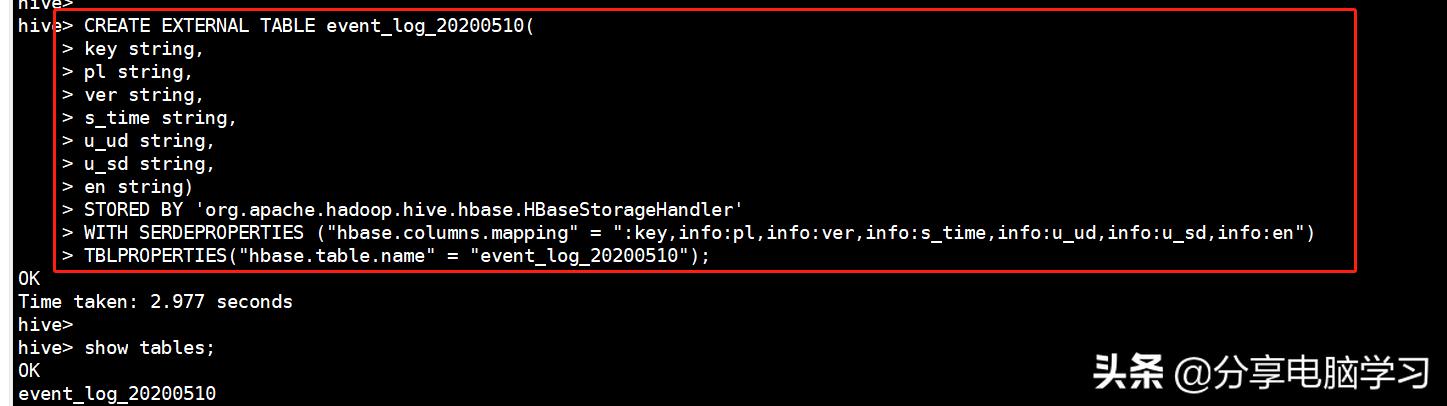

1. Hive中创建外部表,关联hbase

CREATE EXTERNAL TABLE event_log_20180728(

key string,

pl string,

ver string,

s_time string,

u_ud string,

u_sd string,

en string)

STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,info:pl,info:ver,info:s_time,info:u_ud,info:u_sd,info:en")

TBLPROPERTIES("hbase.table.name" = "event_log_20180728");

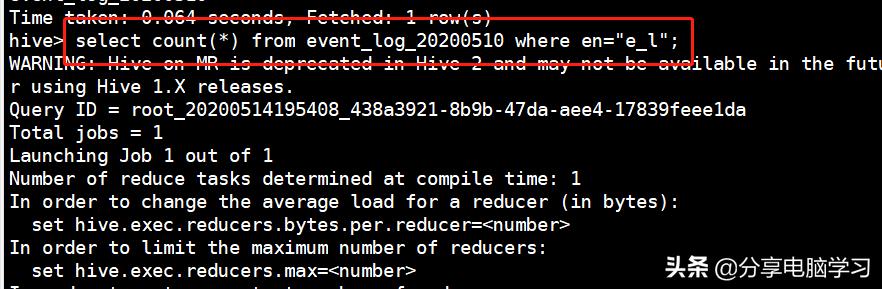

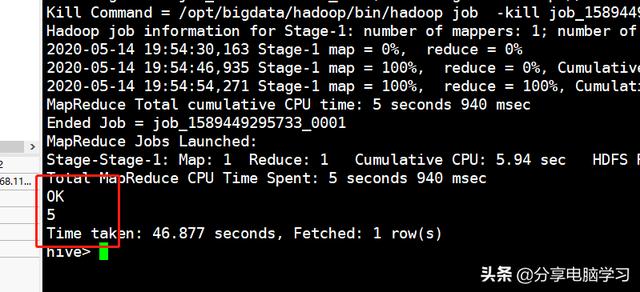

统计多少个新用户:

select count(*) from event_log_20180728 where en="e_l";

2. 提取数据,进行初步的数据过滤操作,最终将数据保存到临时表

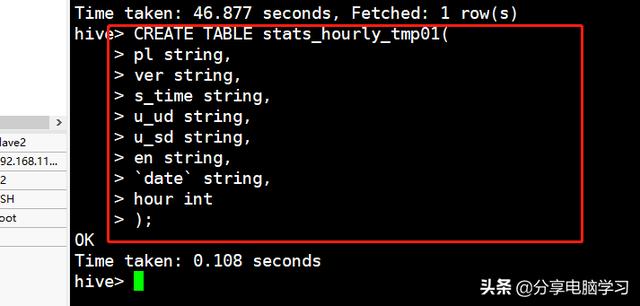

创建临时表

CREATE TABLE stats_hourly_tmp01(

pl string,

ver string,

s_time string,

u_ud string,

u_sd string,

en string,

`date` string,

hour int

);

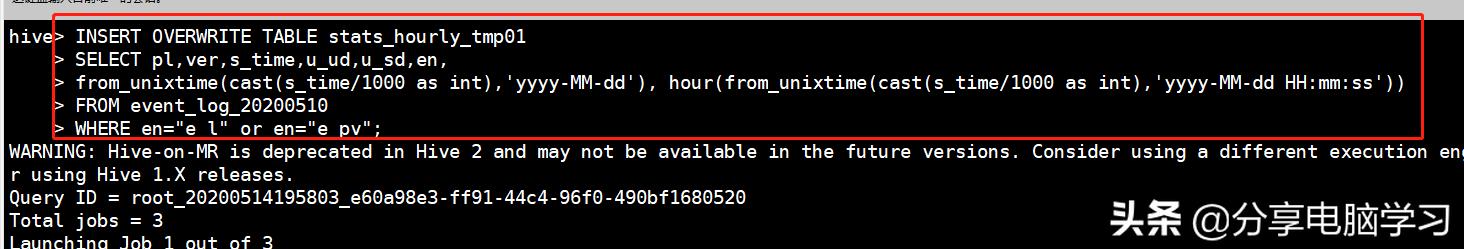

将原始数据提取到临时表中

INSERT OVERWRITE TABLE stats_hourly_tmp01

SELECT pl,ver,s_time,u_ud,u_sd,en,

from_unixtime(cast(s_time/1000 as int),'yyyy-MM-dd'), hour(from_unixtime(cast(s_time/1000 as int),'yyyy-MM-dd HH:mm:ss'))

FROM event_log_20200510

WHERE en="e_l" or en="e_pv";

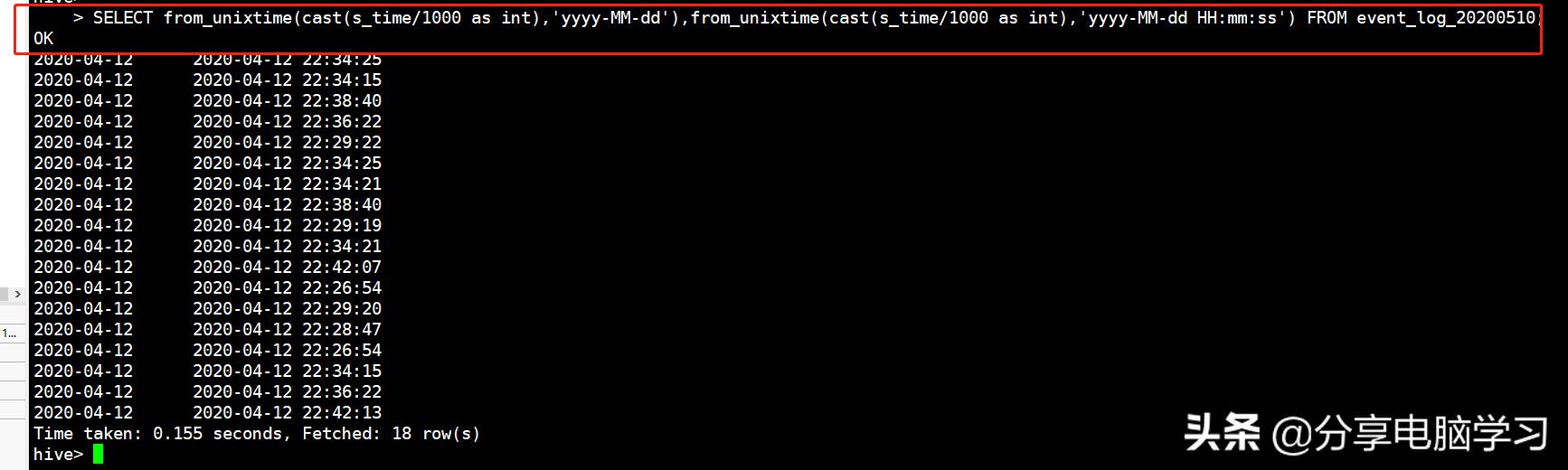

SELECT from_unixtime(cast(s_time/1000 as int),'yyyy-MM-dd'),from_unixtime(cast(s_time/1000 as int),'yyyy-MM-dd HH:mm:ss') FROM event_log_20180728;

查看结果

3. 具体kpi的分析

创建临时表保存数据结果

CREATE TABLE stats_hourly_tmp02(

pl string,

ver string,

`date` string,

kpi string,

hour int,

value int

);

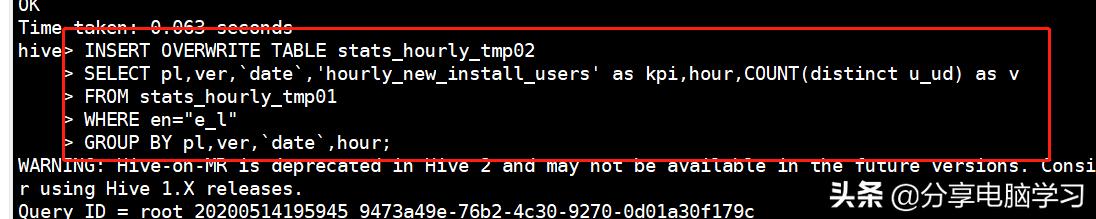

统计活跃用户 u_ud 有多少就有多少用户

统计platform维度是:(name,version)

INSERT OVERWRITE TABLE stats_hourly_tmp02

SELECT pl,ver,`date`,'hourly_new_install_users' as kpi,hour,COUNT(distinct u_ud) as v

FROM stats_hourly_tmp01

WHERE en="e_l"

GROUP BY pl,ver,`date`,hour;

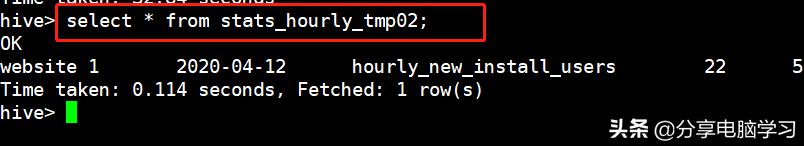

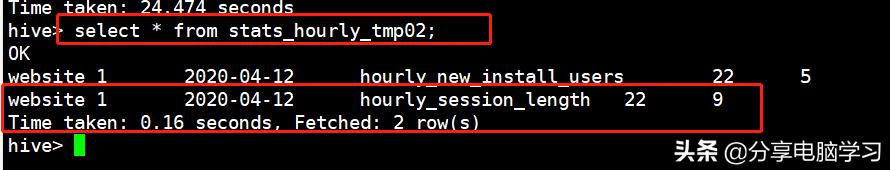

查看结果:

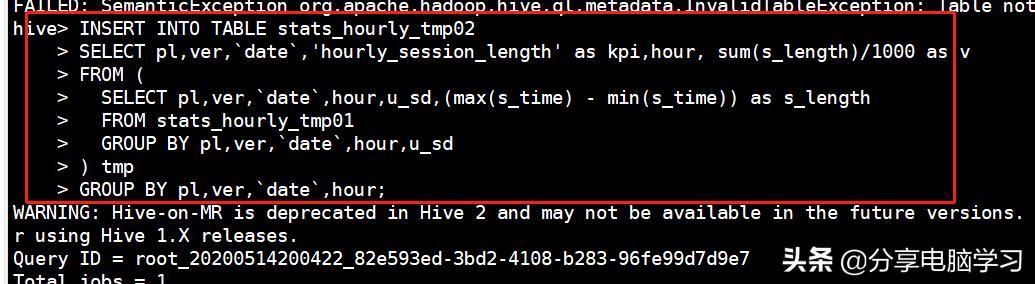

统计会话长度指标

会话长度 = 一个会话中最后一条记录的时间 - 第一条的记录时间 = maxtime - mintime

步骤:

1. 计算出每个会话的会话长度 group by u_sd

2. 统计每个区间段的总会话长度

统计platform维度是:(name,version)

INSERT INTO TABLE

SELECT pl,ver,`date`,'hourly_session_length' as kpi,hour, sum(s_length)/1000 as v

FROM (

SELECT pl,ver,`date`,hour,u_sd,(max(s_time) - min(s_time)) as s_length

FROM stats_hourly_tmp01

GROUP BY pl,ver,`date`,hour,u_sd

) tmp

GROUP BY pl,ver,`date`,hour;

查看结果

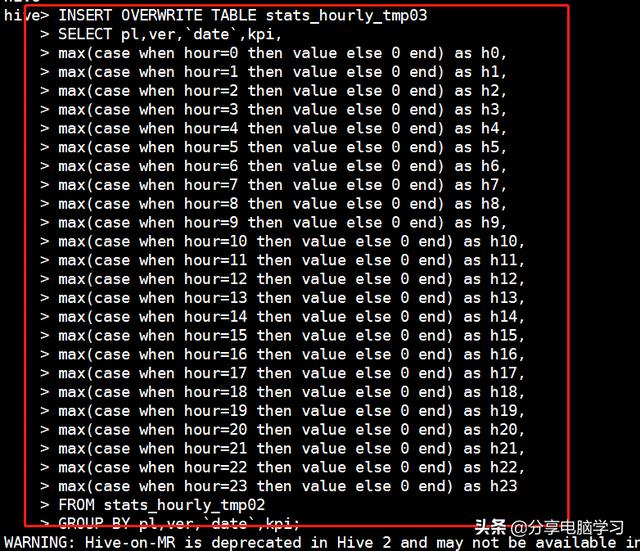

将tmp02的数据转换为和mysql表结构一致的数据

窄表转宽表 => 转换的结果保存到临时表中

CREATE TABLE stats_hourly_tmp03(

pl string, ver string, `date` string, kpi string,

hour00 int, hour01 int, hour02 int, hour03 int,

hour04 int, hour05 int, hour06 int, hour07 int,

hour08 int, hour09 int, hour10 int, hour11 int,

hour12 int, hour13 int, hour14 int, hour15 int,

hour16 int, hour17 int, hour18 int, hour19 int,

hour20 int, hour21 int, hour22 int, hour23 int

);

INSERT OVERWRITE TABLE stats_hourly_tmp03

SELECT pl,ver,`date`,kpi,

max(case when hour=0 then value else 0 end) as h0,

max(case when hour=1 then value else 0 end) as h1,

max(case when hour=2 then value else 0 end) as h2,

max(case when hour=3 then value else 0 end) as h3,

max(case when hour=4 then value else 0 end) as h4,

max(case when hour=5 then value else 0 end) as h5,

max(case when hour=6 then value else 0 end) as h6,

max(case when hour=7 then value else 0 end) as h7,

max(case when hour=8 then value else 0 end) as h8,

max(case when hour=9 then value else 0 end) as h9,

max(case when hour=10 then value else 0 end) as h10,

max(case when hour=11 then value else 0 end) as h11,

max(case when hour=12 then value else 0 end) as h12,

max(case when hour=13 then value else 0 end) as h13,

max(case when hour=14 then value else 0 end) as h14,

max(case when hour=15 then value else 0 end) as h15,

max(case when hour=16 then value else 0 end) as h16,

max(case when hour=17 then value else 0 end) as h17,

max(case when hour=18 then value else 0 end) as h18,

max(case when hour=19 then value else 0 end) as h19,

max(case when hour=20 then value else 0 end) as h20,

max(case when hour=21 then value else 0 end) as h21,

max(case when hour=22 then value else 0 end) as h22,

max(case when hour=23 then value else 0 end) as h23

FROM stats_hourly_tmp02

GROUP BY pl,ver,`date`,kpi;

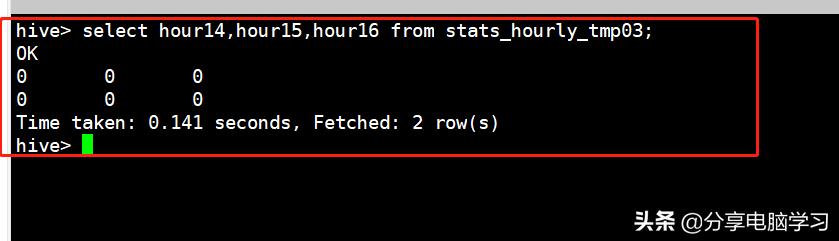

select hour14,hour15,hour16 from stats_hourly_tmp03;

结果:

将维度的属性值转换为id,使用UDF进行转换

1. 将udf文件夹中的所有自定义HIVE的UDF放到项目中

2. 使用run maven install环境进行打包

3. 将打包形成的jar文件上传到HDFS上的/jar文件夹中

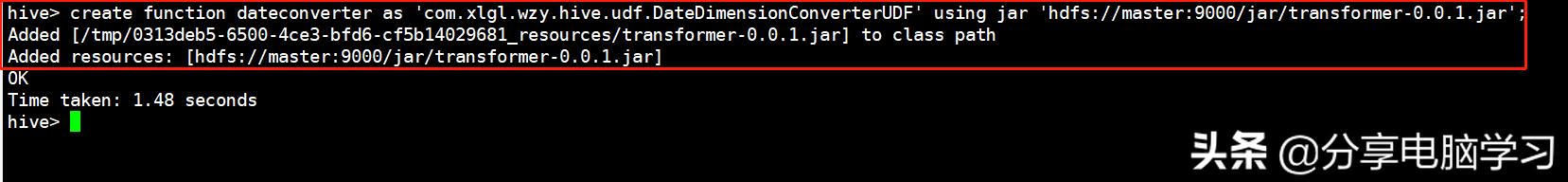

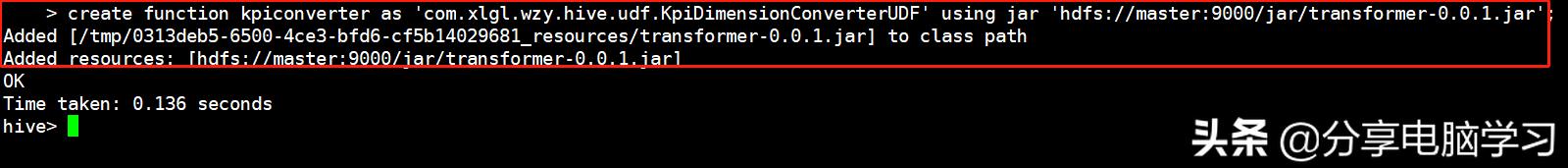

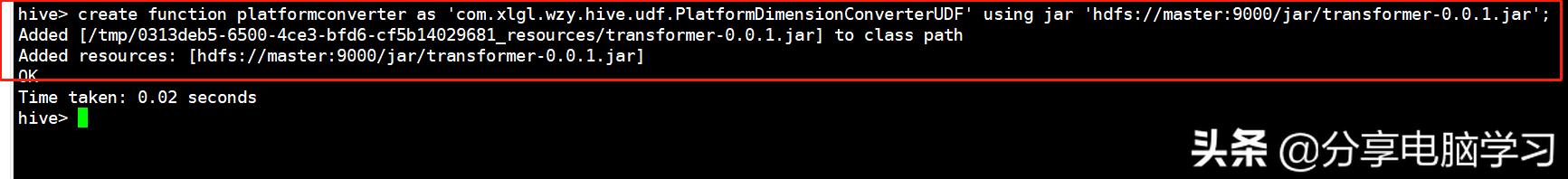

4. hive中创建自定义函数,命令如下:

create function dateconverter as 'com.xlgl.wzy.hive.udf.DateDimensionConverterUDF' using jar 'hdfs://master:9000/jar/transformer-0.0.1.jar';

create function kpiconverter as 'com.xlgl.wzy.hive.udf.KpiDimensionConverterUDF' using jar 'hdfs://master:9000/jar/transformer-0.0.1.jar';

create function platformconverter as 'com.xlgl.wzy.hive.udf.PlatformDimensionConverterUDF' using jar 'hdfs://master:9000/jar/transformer-0.0.1.jar';

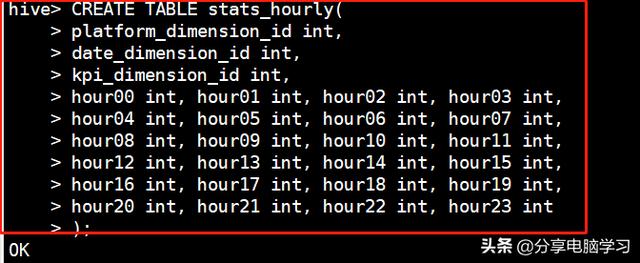

创建hive中对应mysql的最终表结构

CREATE TABLE stats_hourly(

platform_dimension_id int,

date_dimension_id int,

kpi_dimension_id int,

hour00 int, hour01 int, hour02 int, hour03 int,

hour04 int, hour05 int, hour06 int, hour07 int,

hour08 int, hour09 int, hour10 int, hour11 int,

hour12 int, hour13 int, hour14 int, hour15 int,

hour16 int, hour17 int, hour18 int, hour19 int,

hour20 int, hour21 int, hour22 int, hour23 int

);

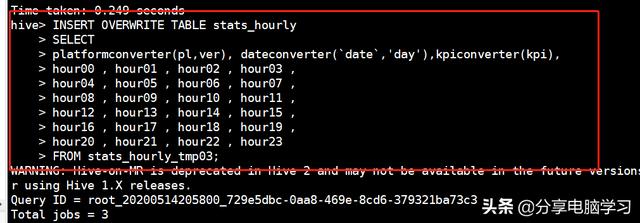

INSERT OVERWRITE TABLE stats_hourly

SELECT

platformconverter(pl,ver), dateconverter(`date`,'day'),kpiconverter(kpi),

hour00 , hour01 , hour02 , hour03 ,

hour04 , hour05 , hour06 , hour07 ,

hour08 , hour09 , hour10 , hour11 ,

hour12 , hour13 , hour14 , hour15 ,

hour16 , hour17 , hour18 , hour19 ,

hour20 , hour21 , hour22 , hour23

FROM stats_hourly_tmp03;

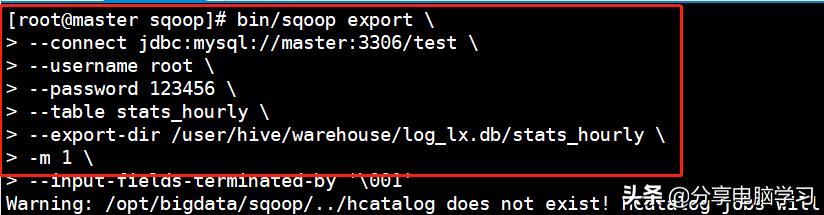

导出sqoop-》mysql

bin/sqoop export \

--connect jdbc:mysql://master:3306/test \

--username root \

--password 123456 \

--table stats_hourly \

--export-dir /user/hive/warehouse/log_lx.db/stats_hourly \

-m 1 \

--input-fields-terminated-by '\001'

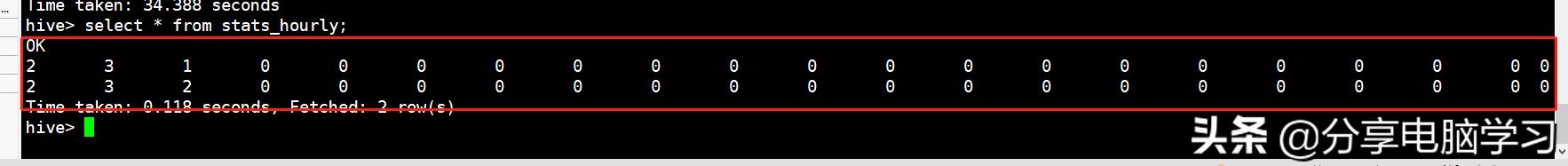

查询mysql

责任编辑:

444

444

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?