1.mapreduce的join运算

需求:

订单数据表t_order:

| id | date | pid | amount |

| 1001 | 20150710 | P0001 | 2 |

| 1002 | 20150710 | P0001 | 3 |

| 1002 | 20150710 | P0002 | 3 |

商品信息表t_product

| id | pname | category_id | price |

| P0001 | 小米5 | 1000 | 2 |

| P0002 | 锤子T1 | 1000 | 3 |

假如数据量巨大,两表的数据是以文件的形式存储在HDFS中,需要用mapreduce程序来实现一下SQL查询运算:

select a.id,a.date,b.name,b.category_id,b.price from t_order a join t_product b on a.pid = b.idId date pname category_id price

1001 20150710 小米5 1000 2

1002 20150710 小米5 1000 2

1002 20150710 锤子T1 1000 3

2.实现方法:

采用面向对象的思想首先将文件封装成对象,这个对象的属性就是需要查询的字段,对象还需要一个属性来表明是哪一个文件,在map中根据文件名字区分不同的文件提取想要的字段,然后再在reduce端进行组合。

懒得启动虚拟机,本例子就在本地运行

首先写一个驱动类:

package com.wx.mapreduce3;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**

* 订单表和商品表合到一起

order.txt(订单id, 日期, 商品编号, 数量)

1001 20150710 P0001 2

1002 20150710 P0001 3

1002 20150710 P0002 3

1003 20150710 P0003 3

product.txt(商品编号, 商品名字, 价格, 数量)

P0001 小米5 1001 2

P0002 锤子T1 1000 3

P0003 锤子 1002 4

*/

public class RJoin {

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

//本地运行

conf.set("mapreduce.framework.name", "local");

Job job = Job.getInstance(conf);

// 指定本程序的jar包所在的本地路径

job.setJarByClass(RJoin.class);

job.setJar("f:\\learnhadoop-1.0-SNAPSHOT.jar");

// 指定本业务job要使用的mapper/Reducer业务类

job.setMapperClass(RJoinMapper.class);

job.setReducerClass(RJoinReducer.class);

// 指定mapper输出数据的kv类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(InfoBean.class);

// 指定最终输出的数据的kv类型

job.setOutputKeyClass(InfoBean.class);

job.setOutputValueClass(NullWritable.class);

// 指定job的输入原始文件所在目录

FileInputFormat.setInputPaths(job, new Path(args[0]));

// 指定job的输出结果所在目录

FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 将job中配置的相关参数,以及job所用的java类所在的jar包,提交给yarn去运行

/* job.submit(); */

boolean res = job.waitForCompletion(true);

System.exit(res ? 0 : 1);

}

}

bean:

package com.wx.mapreduce3;

import org.apache.hadoop.io.Writable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

public class InfoBean implements Writable {

private int order_id;

private String dateString;

private String p_id;

private int amount;

private String pname;

private int category_id;

private float price;

// flag=0表示这个对象是封装订单表记录

// flag=1表示这个对象是封装产品信息记录

private String flag;

public InfoBean() {

}

public void set(int order_id, String dateString, String p_id, int amount, String pname, int category_id, float price, String flag) {

this.order_id = order_id;

this.dateString = dateString;

this.p_id = p_id;

this.amount = amount;

this.pname = pname;

this.category_id = category_id;

this.price = price;

this.flag = flag;

}

public int getOrder_id() {

return order_id;

}

public void setOrder_id(int order_id) {

this.order_id = order_id;

}

public String getDateString() {

return dateString;

}

public void setDateString(String dateString) {

this.dateString = dateString;

}

public String getP_id() {

return p_id;

}

public void setP_id(String p_id) {

this.p_id = p_id;

}

public int getAmount() {

return amount;

}

public void setAmount(int amount) {

this.amount = amount;

}

public String getPname() {

return pname;

}

public void setPname(String pname) {

this.pname = pname;

}

public int getCategory_id() {

return category_id;

}

public void setCategory_id(int category_id) {

this.category_id = category_id;

}

public float getPrice() {

return price;

}

public void setPrice(float price) {

this.price = price;

}

public String getFlag() {

return flag;

}

public void setFlag(String flag) {

this.flag = flag;

}

/**

* private int order_id; private String dateString; private int p_id;

* private int amount; private String pname; private int category_id;

* private float price;

*/

public void write(DataOutput out) throws IOException {

out.writeInt(order_id);

out.writeUTF(dateString);

out.writeUTF(p_id);

out.writeInt(amount);

out.writeUTF(pname);

out.writeInt(category_id);

out.writeFloat(price);

out.writeUTF(flag);

}

public void readFields(DataInput in) throws IOException {

this.order_id = in.readInt();

this.dateString = in.readUTF();

this.p_id = in.readUTF();

this.amount = in.readInt();

this.pname = in.readUTF();

this.category_id = in.readInt();

this.price = in.readFloat();

this.flag = in.readUTF();

}

@Override

public String toString() {

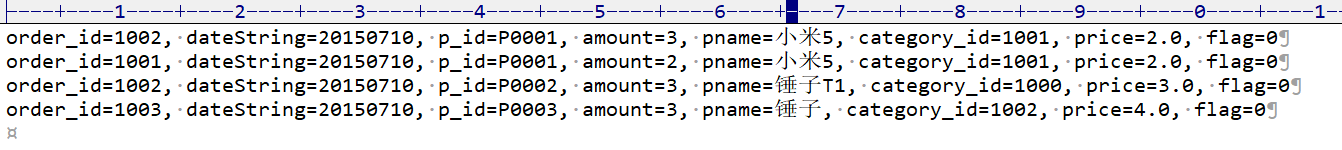

return "order_id=" + order_id + ", dateString=" + dateString + ", p_id=" + p_id + ", amount=" + amount + ", pname=" + pname + ", category_id=" + category_id + ", price=" + price + ", flag=" + flag;

}

}

mapper:

package com.wx.mapreduce3;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import java.io.IOException;

import java.io.UnsupportedEncodingException;

public class RJoinMapper extends Mapper<LongWritable, Text, Text, InfoBean> {

InfoBean bean = new InfoBean();

Text k = new Text();

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

Text newvalue = transformTextToUTF8(value, "GBK");

String line = newvalue.toString();

System.out.println(line);

FileSplit inputSplit = (FileSplit) context.getInputSplit();

System.out.println(inputSplit);

String name = inputSplit.getPath().getName();

/***通过文件名判断是哪种数据*/

String pid = "";

if (name.startsWith("order")) {

String[] fields = line.split(",");

// id date pid amount

pid = fields[2];

bean.set(Integer.parseInt(fields[0]), fields[1], pid, Integer.parseInt(fields[3]), "", 0, 0, "0");

} else {

String[] fields = line.split(",");

// id pname category_id price

pid = fields[0];

bean.set(0, "", pid, 0, fields[1], Integer.parseInt(fields[2]), Float.parseFloat(fields[3]), "1");

}

k.set(pid);

context.write(k, bean);

}

public static Text transformTextToUTF8(Text text, String encoding) {

String value = null;

try {

value = new String(text.getBytes(), 0, text.getLength(), encoding);

} catch (UnsupportedEncodingException e) {

e.printStackTrace();

}

return new Text(value);

}

}

reduce:

package com.wx.mapreduce3;

import org.apache.commons.beanutils.BeanUtils;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

import java.util.ArrayList;

public class RJoinReducer extends Reducer<Text, InfoBean, InfoBean, NullWritable> {

@Override

protected void reduce(Text pid, Iterable<InfoBean> beans, Context context) throws IOException, InterruptedException {

InfoBean pdBean = new InfoBean();

ArrayList<InfoBean> orderBeans = new ArrayList<InfoBean>();

for (InfoBean bean : beans) {

if ("1".equals(bean.getFlag())) { //产品的

try {

BeanUtils.copyProperties(pdBean, bean);

} catch (Exception e) {

e.printStackTrace();

}

} else {

InfoBean odbean = new InfoBean();

try {

BeanUtils.copyProperties(odbean, bean);

orderBeans.add(odbean);

} catch (Exception e) {

e.printStackTrace();

}

}

}

// 拼接两类数据形成最终结果

for (InfoBean bean : orderBeans) {

bean.setPname(pdBean.getPname());

bean.setCategory_id(pdBean.getCategory_id());

bean.setPrice(pdBean.getPrice());

context.write(bean, NullWritable.get());

}

}

}

配置启动参数:

maven 打包:mvn clean package

准备数据:

运行:

刚刚开始出现中文乱码:

Hadoop处理GBK文本时,发现输出出现了乱码,原来HADOOP在涉及编码时都是写死的UTF-8,如果文件编码格式是其它类型(如GBK),则会出现乱码。

此时只需在mapper或reducer程序中读取Text时,使用transformTextToUTF8(text, "GBK");进行一下转码,以确保都是以UTF-8的编码方式在运行。

想要在本地运行必须将windos下编译好的hadoop配置到环境变量中,具体配置:

https://www.cnblogs.com/wxplmm/p/7253934.html

然后启动参数配置好,启动后报错

Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

可能需要重启系统,重启后可以

2.数据倾斜问题:

Map端join算法,就可以解决数据全局问题,但是产品表不能太大,产品表就放在mapreduce 提供的缓存机制中

package com.wx.mapreduce4;

import java.io.BufferedReader;

import java.io.FileInputStream;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.URI;

import java.util.HashMap;

import java.util.Map;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class Mapjoin {

/**

* 利用mapreduce的缓存机制在map端进行多表的join运算

*/

static class MapJoinmap extends Mapper<LongWritable, Text, Text, NullWritable>

{

//用一个hashmap来保存加载产品的信息

Map<String, String> pdinfo=new HashMap<String, String>();

//通过阅读mapper源码发现,setup方法是maptask处理数据之前调用一次,可以用来做一些初始化 的工作

@Override

protected void setup( Context context) throws IOException, InterruptedException {

BufferedReader br = new BufferedReader(new InputStreamReader(new FileInputStream("pds.txt")));

String line;

while(StringUtils.isNotEmpty(line=br.readLine()))

{

String[] split = line.split(",");

pdinfo.put(split[0], split[1]);

}

br.close();

}

Text k=new Text();

@Override

protected void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

String[] split = value.toString().split(",");

String name = pdinfo.get(split[1]);

k.set(value.toString()+"\t"+name);

context.write(k, NullWritable.get());

}

}

public static void main(String[] args) throws Exception {

// TODO Auto-generated method stub

Configuration conf=new Configuration();

Job job = Job.getInstance(conf);

job.setJarByClass(Mapjoin.class);

//设置执行map的类以及输出的数据的类型

job.setMapperClass(MapJoinmap.class);

job.setMapOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

//设置读取处理文件的路径

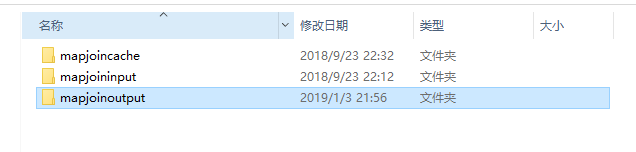

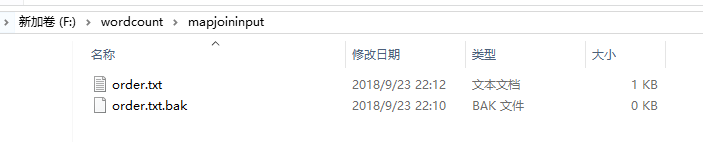

FileInputFormat.setInputPaths(job, new Path("F:/wordcount/mapjoininput"));

FileOutputFormat.setOutputPath(job, new Path("F:/wordcount/mapjoinoutput"));

//指定一个文件需要缓存到maptask的工作目录

//job.addArchiveToClassPath(archive);//缓存jar包到maptask运行节点的classpath中

//job.addFileToClassPath(file);//缓存普通文件到maptask的运行节点的calsspath中

//job.addCacheArchive(uri);//缓存压缩包文件到calsspath中

job.addCacheFile(new URI("file:/F:/wordcount/mapjoincache/pds.txt"));//将产品表的产品文件缓存到task的工作目录中去

//设置不需要reduce

job.setNumReduceTasks(0);

boolean re = job.waitForCompletion(true);

System.exit(re?0:1);

}

}

运行程序,系统报错:

java.lang.Exception: java.io.FileNotFoundException: pds.txt (系统找不到指定的文件。)

仔细找到错误:

failed 1 with: CreateSymbolicLink error (1314): ???????????

Failed to create symlink: \tmp\hadoop-cxdn\mapred\local\1537718956274\pds.txt <- F:\JAVAEE_jdk1.7\workspacemars2\workspacebigdata2\mapperreduce/pds.txt

网上解决办法:

https://blog.csdn.net/wangzy0327/article/details/78786410

1. Hadoop 毕竟是LINUX的东西,所以安装目录(包括JDK) 都别用太特别的字符或者空格; 2. CreateSymbolicLink error (1314): A required privilege is not held by the client. Windows 的执行权限问题,望这方面找就对了。 网上找到的具体解决办法:

1.打开本地策略(按win+R 打开 cmd,输入 gpedit.msc(win10家庭版没有这个,必须要其专业或者企业版才有))

2.打开本地计算机策略 - window设置 - 安全设置 - 本地策略 - 用户权限配置

3.找到创建符号链接(右侧),查看是否你的用户在里面(启动idea或者eclipse 运行程序的用户),如果不在添加进去

4.需要重启后才能生效

实在不行可以用管理员身份运行程序

仔细排查错误,发现不能运行成功的原因是eclipse运行的账户cxdn没有权限,当我添加权限以后就运行成功了

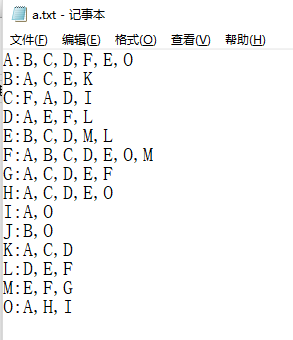

3.求出共同好友

思路:

此题目计算共同的QQ好友,分两步走,首先找到

第一步 map

读一行 A:B,C,D,F,E,O

输出

<B,A><C,A><D,A><F,A><E,A><O,A>

再读一行 B:A,C,E,K

输出 <A,B><C,B><E,B><K,B>

<B,A><C,A><D,A><F,A><E,A><O,A>

<A,B><C,B><E,B><K,B>

。。。。。。。。。。

读完过后key相同的聚在一起

<C,A><C,B> 。。。

....

这样就找到A和B的好友中都有C C: A,B ...

然后再来一个mapreduce:

C是A,B,....的共同好友

读一行:C:A,B,.....

拿到的数据是上一个步骤的输出结果

A I,H,K,D,F,G,C,O,B,

I,H,K,D,F,G,C,O,B, 进行两两组合

<A-B C> <A-B E>

package com.wx.mapreduce6;

import java.io.IOException;

import java.util.Iterator;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class Fensi1 {

/*

* 此题目计算共同的QQ好友,分两步走,首先找到

* 第一步 map

* 读一行 A:B,C,D,F,E,O

* 输出

* <B,A><C,A><D,A><F,A><E,A><O,A>

* 在读一行 B:A,C,E,K

* 输出 <A,B><C,B><E,B><K,B>

*

*/

static class Fensi1Mappper extends Mapper<LongWritable, Text, Text, Text>

{

Text k=new Text();

Text v=new Text();

@Override

protected void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

String[] split = value.toString().split(":");

v.set(split[0]);

String[] split2 = split[1].split(",");

for(String val:split2)

{

k.set(val);

context.write(k, v);

}

}

}

//<B,A><C,A><D,A><F,A><E,A><O,A> <A,B><C,B><E,B><K,B>

//<C,A><C,B> <c,D><C,F> ....

//这样就找到A和B的好友中都有C C: A,B ...

//

static class Fensi1Reduce extends Reducer<Text, Text, Text, Text>

{

Text v=new Text();

@Override

protected void reduce(Text key, Iterable<Text> value, Context context) throws IOException, InterruptedException {

//String str="";

//使用高效字符串拼接

StringBuilder sb=new StringBuilder();

for(Text v1:value) //value是A,B,D,F现在需要两两组合,但是在这里做拼接不好拼,所以去下一个map里面拼接

{

sb.append(v1).append(",");

}

v.set(sb.toString());

context.write(key, v);

}

}

public static void main(String[] args) throws Exception {

// TODO Auto-generated method stub

Configuration conf=new Configuration();

Job job = Job.getInstance(conf);

job.setJarByClass(Fensi1.class);

job.setMapperClass(Fensi1Mappper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

job.setReducerClass(Fensi1Reduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileInputFormat.setInputPaths(job, new Path("F:/bigdata/fensi/input"));

FileOutputFormat.setOutputPath(job, new Path("F:/bigdata/fensi/output1"));

boolean re = job.waitForCompletion(true);

System.exit(re?0:1);

}

}

package com.wx.mapreduce6;

import java.io.IOException;

import java.util.Arrays;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import com.wx.mapreduce6.Fensi1.Fensi1Mappper;

import com.wx.mapreduce6.Fensi1.Fensi1Reduce;

public class Fensi2 {

/*

* C是A,B,D,F的共同好友

* 读一行:C:A,B,D,F

* 拿到的数据是上一个步骤的输出结果

* A I,H,K,D,F,G,C,O,B,

*/

static class Fensi2Mappper extends Mapper<LongWritable, Text, Text, Text>

{

Text k=new Text();

Text v=new Text();

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] split = value.toString().split("\t");

v.set(split[0]);

String[] split2 = split[1].split(",");

Arrays.sort(split2);

//I,H,K,D,F,G,C,O,B, 进行两两组合

for(int i=0;i<split2.length-1;i++)

{

for(int j=i+1;j<split2.length-1;j++)

{

k.set(split2[i]+"-"+split2[j]);

context.write(k, v);

}

}

}

}

//<A-B C> <A-B E>

static class Fensi2Reduce extends Reducer<Text, Text, Text, Text>

{

Text v=new Text();

@Override

protected void reduce(Text key, Iterable<Text> values,Context context) throws IOException, InterruptedException {

StringBuilder sb=new StringBuilder();

for(Text value:values)

{

sb.append(value).append(",");

v.set(sb.toString());

}

context.write(key, v);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = Job.getInstance(conf);

job.setJarByClass(Fensi2.class);

job.setMapperClass(Fensi2Mappper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

job.setReducerClass(Fensi2Reduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileInputFormat.setInputPaths(job, new Path("F:/bigdata/fensi/output1"));

FileOutputFormat.setOutputPath(job, new Path("F:/bigdata/fensi/output2"));

boolean re = job.waitForCompletion(true);

System.exit(re ? 0 : 1);

}

}

结果:

249

249

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?