Preface

In most cases, V4L2 video devices are used in single-plane mode. V4L2 also supports a multi-planar (mplane) mode, and this article walks through how to use it in detail.

I. Structures related to mplane

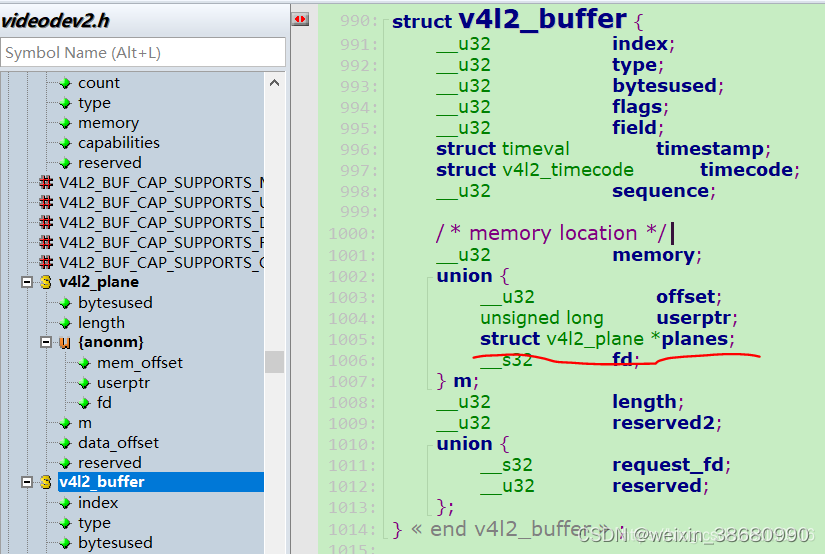

1. struct v4l2_buffer

struct v4l2_buffer is defined as shown in the figure below. Its union member m contains a struct v4l2_plane *planes pointer, which is the core structure of the multi-planar API.

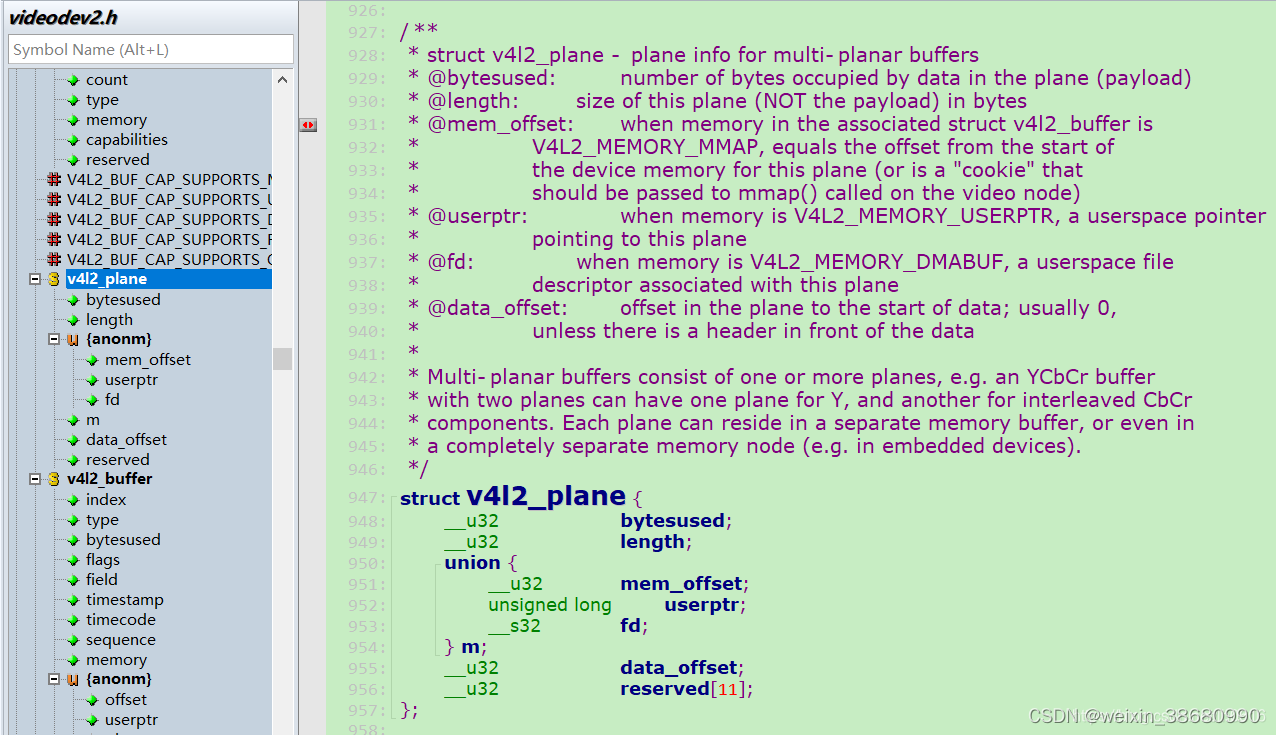

2. struct v4l2_plane


In mplane mode, each struct v4l2_plane describes one plane of a buffer.

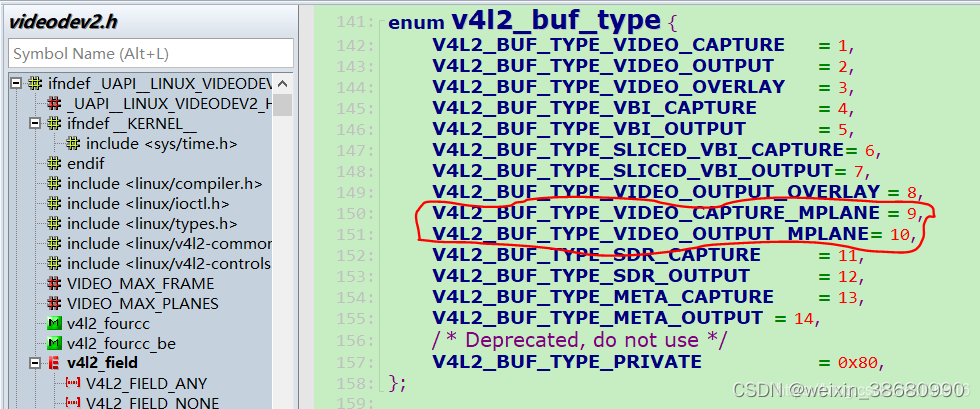

3. enum v4l2_buf_type

In mplane mode, a capture device should use V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE; likewise, an output device should use V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE.

II. Using mplane from user space: examples

1. Memory via mmap

1. A scenario using three planes:

#define BUFFER_NUM 4

unsigned char *buffer1[BUFFER_NUM];

unsigned char *buffer2[BUFFER_NUM];

struct v4l2_capability cap;

struct v4l2_format fmt;

enum v4l2_buf_type type;

int i, ret; // fd is assumed to be an already opened video node

struct v4l2_buffer buf;

struct v4l2_plane planes[VIDEO_MAX_PLANES];

ret = ioctl(fd,VIDIOC_QUERYCAP,&cap);

if(ret < 0)

{

printf("VIDIOC_QUERYCAP failed\n");

return -1;

}

fmt.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

ret = ioctl(fd,VIDIOC_G_FMT,&fmt);

if(ret < 0)

{

printf("VIDIOC_G_FMT failed\n");

return -1;

}

memset(&fmt,0,sizeof(fmt));

fmt.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

fmt.fmt.pix_mp.width = 1280;

fmt.fmt.pix_mp.height = 720;

fmt.fmt.pix_mp.pixelformat = V4L2_PIX_FMT_NV12; //input data format

fmt.fmt.pix_mp.field = V4L2_FIELD_NONE;

fmt.fmt.pix_mp.num_planes = 3; //plane_num

fmt.fmt.pix_mp.plane_fmt[0].bytesperline = 1280; // unused

fmt.fmt.pix_mp.plane_fmt[0].sizeimage = 1280 * 720 * 3 / 2; // unused

fmt.fmt.pix_mp.plane_fmt[1].bytesperline = 1280; //nv12 data bytesperline size

fmt.fmt.pix_mp.plane_fmt[1].sizeimage = 1280 * 720 * 3 / 2; //nv12 data sizeimage size

fmt.fmt.pix_mp.plane_fmt[2].bytesperline = 1280 / 4; // 1/16 Y data bytesperline size

fmt.fmt.pix_mp.plane_fmt[2].sizeimage = 1280 * 720 / 16 ; // 1/16 Y data sizeimage size

ret = ioctl(fd, VIDIOC_S_FMT,&fmt);

struct v4l2_requestbuffers reqbufs;

memset(&reqbufs,0,sizeof(reqbufs));

reqbufs.count = BUFFER_NUM;

reqbufs.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

reqbufs.memory = V4L2_MEMORY_MMAP;

ret = ioctl(fd,VIDIOC_REQBUFS,&reqbufs);

if(ret < 0)

{

printf("VIDIOC_REQBUFS failed\n");

return -1;

}

for(i=0;i<BUFFER_NUM;i++)

{

memset(&buf,0,sizeof(buf));

memset(planes,0,sizeof(planes));

buf.index = i;

buf.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

buf.memory = V4L2_MEMORY_MMAP;

buf.length = VIDEO_MAX_PLANES;

buf.m.planes = planes;

buf.m.planes[0].bytesused = 0;

buf.m.planes[1].bytesused = 0;

buf.m.planes[2].bytesused = 0;

ret = ioctl(fd,VIDIOC_QUERYBUF,&buf);

if(ret < 0)

{

printf("VIDIOC_QUERYBUF failed\n");

return -1;

}

buffer1[i] = mmap(NULL,buf.m.planes[1].length,PROT_READ | PROT_WRITE, MAP_SHARED,fd,buf.m.planes[1].m.mem_offset);

if(buffer1[i] == MAP_FAILED)

{

printf("mmap failed 0 \n");

}

buffer2[i] = mmap(NULL,buf.m.planes[2].length,PROT_READ | PROT_WRITE,MAP_SHARED,fd,buf.m.planes[2].m.mem_offset);

if(buffer2[i] == MAP_FAILED)

{

printf("mmap failed 1 \n");

}

printf("buffer1[%d] = %p \n",i,(void *)buffer1[i]);

printf("buffer2[%d] = %p \n",i,(void *)buffer2[i]);

printf("buf.m.planes[1].length = %u, buf.m.planes[1].m.mem_offset = %u\n",buf.m.planes[1].length

,buf.m.planes[1].m.mem_offset);

printf("buf.m.planes[2].length = %u, buf.m.planes[2].m.mem_offset = %u\n",buf.m.planes[2].length

,buf.m.planes[2].m.mem_offset);

}

type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

ret = ioctl(fd,VIDIOC_STREAMON,&type);

if(ret < 0)

{

printf("VIDIOC_STREAMON failed\n");

return -1;

}
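
The sizeimage values passed to VIDIOC_S_FMT in the listing above follow from the frame geometry. The sketch below spells out that arithmetic. The function names are hypothetical, and reading the "1/16 Y data" plane as a luma plane downscaled by 4 in each dimension is an assumption based on its bytesperline of 1280 / 4; the actual layout depends on the device.

```c
#include <assert.h>
#include <stdint.h>

/* NV12 stores a full-resolution Y plane followed by one interleaved UV
 * plane at half resolution in both dimensions: w*h + w*h/2 bytes. */
static uint32_t nv12_sizeimage(uint32_t width, uint32_t height)
{
    assert(width % 2 == 0 && height % 2 == 0);
    return width * height * 3 / 2;
}

/* Assumed layout: a Y-only plane downscaled by 4 horizontally and
 * vertically, i.e. 1/16 of the full-resolution luma plane. */
static uint32_t y_sixteenth_sizeimage(uint32_t width, uint32_t height)
{
    assert(width % 4 == 0 && height % 4 == 0);
    return (width / 4) * (height / 4);
}
```

For 1280x720 these give 1382400 bytes for the NV12 plane and 57600 bytes for the downscaled plane, matching 1280 * 720 * 3 / 2 and 1280 * 720 / 16 in the listing. Drivers may round these values up, so the lengths returned by VIDIOC_QUERYBUF remain the authoritative sizes for mmap.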

2. mplane also supports using only a single plane:

#define BUFFER_NUM 4

unsigned char *buffer[BUFFER_NUM];

struct v4l2_capability cap;

struct v4l2_format fmt;

enum v4l2_buf_type type;

int i, ret; // fd is assumed to be an already opened video node

struct v4l2_buffer buf;

struct v4l2_plane planes[VIDEO_MAX_PLANES];

memset(&fmt,0,sizeof(fmt));

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

fmt.fmt.pix_mp.width = 1280;

fmt.fmt.pix_mp.height = 720;

fmt.fmt.pix_mp.pixelformat = V4L2_PIX_FMT_H264; //data format

fmt.fmt.pix_mp.field = V4L2_FIELD_NONE;

fmt.fmt.pix_mp.num_planes = 1; // planes num

fmt.fmt.pix_mp.plane_fmt[0].bytesperline = 1280;

fmt.fmt.pix_mp.plane_fmt[0].sizeimage = 1280 * 720 * 3 / 2;

ret = ioctl(fd, VIDIOC_S_FMT,&fmt);

if(ret < 0)

{

printf("VIDIOC_S_FMT failed\n");

return -1;

}

struct v4l2_requestbuffers reqbufs;

memset(&reqbufs,0,sizeof(reqbufs));

reqbufs.count = BUFFER_NUM;

reqbufs.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

reqbufs.memory = V4L2_MEMORY_MMAP;

ret = ioctl(fd,VIDIOC_REQBUFS,&reqbufs);

if(ret < 0)

{

printf("VIDIOC_REQBUFS failed\n");

return -1;

}

for(i=0;i<BUFFER_NUM;i++)

{

memset(&buf,0,sizeof(buf));

memset(planes,0,sizeof(planes));

buf.index = i;

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

buf.memory = V4L2_MEMORY_MMAP;

buf.length = VIDEO_MAX_PLANES;

buf.m.planes = planes;

buf.m.planes[0].bytesused = 0;

ret = ioctl(fd,VIDIOC_QUERYBUF,&buf);

if(ret < 0)

{

printf("VIDIOC_QUERYBUF failed\n");

}

buffer[i] = mmap(NULL,buf.m.planes[0].length,PROT_READ | PROT_WRITE,MAP_SHARED,fd,buf.m.planes[0].m.mem_offset);

if(buffer[i] == MAP_FAILED)

{

printf("buffer mmap failed\n");

}

printf("buffer[%d] = %p \n",i,(void *)buffer[i]);

printf("buf.m.planes[0].length = %u, buf.m.planes[0].m.mem_offset = %u\n",buf.m.planes[0].length

,buf.m.planes[0].m.mem_offset);

}

memset(&buf,0,sizeof(buf));

memset(planes,0,sizeof(planes));

for(i=0; i < BUFFER_NUM; i++)

{

buf.m.planes = planes;

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = i;

buf.flags = 0;

buf.length = 1;

buf.m.planes[0].bytesused = 1280*720*3/2;

buf.bytesused = buf.m.planes[0].bytesused;

ret = ioctl(fd,VIDIOC_QBUF,&buf);

if(ret < 0)

{

printf("VIDIOC_QBUF failed\n");

return -1;

}

}

type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

ret = ioctl(fd,VIDIOC_STREAMON,&type);

if(ret < 0)

{

printf("VIDIOC_STREAMON failed\n");

return -1;

}

2. Memory via dmafd (DMABUF)

1. Exporting dmafds on the camera side:

#define BUFFER_NUM 4

#define CAMERA_PLANES 3

#define CAM_VIDEO "/dev/video0"

int buffer_export_mp(int fd, enum v4l2_buf_type bt, int index, int dmafd[], int n_planes)

{

int i;

for(i = 0; i < n_planes; i++)

{

struct v4l2_exportbuffer expbuf;

memset(&expbuf, 0, sizeof(expbuf));

expbuf.type = bt;

expbuf.index = index;

expbuf.plane = i;

if(ioctl(fd, VIDIOC_EXPBUF, &expbuf) == -1) {

printf("VIDIOC_EXPBUF failed\n");

while(i)

close(dmafd[--i]);

return -1;

}

dmafd[i] = expbuf.fd;

printf("dmafd[%d] = %d\n",i,dmafd[i]);

}

return 0;

}

int fd;

int i,j;

int ret;

int dmafd[BUFFER_NUM][CAMERA_PLANES];

unsigned char *buffer[BUFFER_NUM];

fd = open(CAM_VIDEO, O_RDWR);

if (fd < 0)

{

printf("open camera video node failed\n");

return -1;

}

struct v4l2_capability cap;

struct v4l2_format fmt;

enum v4l2_buf_type type;

struct v4l2_buffer buf;

struct v4l2_plane planes[VIDEO_MAX_PLANES];

type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

ret = ioctl(fd,VIDIOC_QUERYCAP,&cap);

if(ret < 0)

{

printf("VIDIOC_QUERYCAP failed\n");

return -1;

}

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

ret = ioctl(fd,VIDIOC_G_FMT,&fmt);

if(ret < 0)

{

printf("VIDIOC_G_FMT failed\n");

return -1;

}

memset(&fmt,0,sizeof(fmt));

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

fmt.fmt.pix_mp.width = 1920;

fmt.fmt.pix_mp.height = 1080;

fmt.fmt.pix_mp.pixelformat = V4L2_PIX_FMT_NV12;

fmt.fmt.pix_mp.field = V4L2_FIELD_NONE;

fmt.fmt.pix_mp.num_planes = CAMERA_PLANES;

ret = ioctl(fd, VIDIOC_S_FMT,&fmt);

if(ret < 0)

{

printf("VIDIOC_S_FMT failed\n");

return -1;

}

struct v4l2_requestbuffers reqbufs;

memset(&reqbufs,0,sizeof(reqbufs));

reqbufs.count = BUFFER_NUM;

reqbufs.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

reqbufs.memory = V4L2_MEMORY_MMAP;

ret = ioctl(fd,VIDIOC_REQBUFS,&reqbufs);

if(ret < 0)

{

printf("VIDIOC_REQBUFS failed\n");

return -1;

}

for(i=0;i<BUFFER_NUM;i++)

{

memset(&buf,0,sizeof(buf));

memset(planes,0,sizeof(planes));

buf.index = i;

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

buf.memory = V4L2_MEMORY_MMAP;

buf.length = 3;

buf.m.planes = planes;

buf.m.planes[0].bytesused = 0;

ret = ioctl(fd,VIDIOC_QUERYBUF,&buf);

if(ret < 0)

{

printf("VIDIOC_QUERYBUF failed\n");

}

ret = buffer_export_mp(fd,buf.type,i,dmafd[i],CAMERA_PLANES); //export dmafd

if(ret < 0)

{

printf("buffer export_mp failed\n");

return -1;

}

}
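
Each descriptor returned by VIDIOC_EXPBUF is an ordinary file descriptor that the application owns and should eventually close. As a sketch of that cleanup step, assuming unused slots are kept at -1 (an assumption of this example, not something the listing above does), a hypothetical helper could look like this:

```c
#include <assert.h>
#include <stddef.h>
#include <unistd.h>

/* Hypothetical helper: close every exported dmabuf fd in a flat array
 * and mark the slot as -1 so it cannot be closed twice. */
static void close_dmafds(int *fds, size_t count)
{
    size_t k;

    assert(fds != NULL);
    for (k = 0; k < count; k++) {
        if (fds[k] >= 0) {
            close(fds[k]);
            fds[k] = -1;
        }
    }
}
```

For the two-dimensional array in the listing, the call would be close_dmafds(&dmafd[0][0], BUFFER_NUM * CAMERA_PLANES), made after the encoder side has finished using the buffers.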

2. Using the dmafds exported by the camera on the encoder side

#define BUFFER_NUM 4

#define CAMERA_PLANES 3

#define ENC_VIDEO "/dev/video1"

int fd;

int i,j;

int ret;

int dmafd[BUFFER_NUM][CAMERA_PLANES];

unsigned char *buffer[BUFFER_NUM];

fd = open(ENC_VIDEO, O_RDWR);

if (fd < 0)

{

printf("open enc video node failed\n");

return -1;

}

struct v4l2_capability cap;

struct v4l2_format fmt;

enum v4l2_buf_type type;

struct v4l2_buffer buf;

struct v4l2_plane planes[VIDEO_MAX_PLANES];

type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

ret = ioctl(fd,VIDIOC_QUERYCAP,&cap);

if(ret < 0)

{

printf("VIDIOC_QUERYCAP failed\n");

return -1;

}

fmt.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

ret = ioctl(fd,VIDIOC_G_FMT,&fmt);

if(ret < 0)

{

printf("VIDIOC_G_FMT failed\n");

return -1;

}

memset(&fmt,0,sizeof(fmt));

fmt.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

fmt.fmt.pix_mp.width = 1920;

fmt.fmt.pix_mp.height = 1080;

fmt.fmt.pix_mp.pixelformat = V4L2_PIX_FMT_NV12;

fmt.fmt.pix_mp.field = V4L2_FIELD_NONE;

fmt.fmt.pix_mp.num_planes = CAMERA_PLANES; //plane num

ret = ioctl(fd, VIDIOC_S_FMT,&fmt);

if(ret < 0)

{

printf("VIDIOC_S_FMT failed\n");

return -1;

}

struct v4l2_requestbuffers reqbufs;

memset(&reqbufs,0,sizeof(reqbufs));

reqbufs.count = BUFFER_NUM;

reqbufs.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

reqbufs.memory = V4L2_MEMORY_DMABUF; //DMABUF

ret = ioctl(fd,VIDIOC_REQBUFS,&reqbufs);

if(ret < 0)

{

printf("Encoder input VIDIOC_REQBUFS failed\n");

return -1;

}

memset(&buf,0,sizeof(buf));

memset(planes,0,sizeof(planes));

for(i=0; i < BUFFER_NUM; i++)

{

buf.m.planes = planes;

buf.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

buf.memory = V4L2_MEMORY_DMABUF;

buf.index = i;

//buf.flags = 0;

buf.length = 3;

//buf.m.planes[0].bytesused = 1920*1080*3/2;

//buf.m.planes[1].bytesused = 1280*720*3/2;

//buf.m.planes[2].bytesused = 1280*720 / 16;

//buf.bytesused = buf.m.planes[0].bytesused + buf.m.planes[1].bytesused + buf.m.planes[2].bytesused;

for(j=0; j < CAMERA_PLANES; j++)

{

buf.m.planes[j].m.fd = dmafd[i][j];

//printf("index = %d, buf.m.planes[%d] = %d\n",i,j,buf.m.planes[j].m.fd);

}

}

Summary

- mplane also supports using only a single plane;

- In essence, an mplane buffer corresponds to multiple memory buffers, one per plane;

References

- https://www.kernel.org/doc/html/v4.14/media/uapi/v4l/buffer.html?highlight=v4l2_buffer#c.v4l2_buffer
