Ingress-nginx


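Labs in this section

| Lab |
|---|
| 💘 Lab: Configure Basic Auth on an Ingress object - 2023.3.12 (tested successfully) |
| 💘 Lab: Use external Basic Auth credentials - 2023.3.12 (tested successfully) |
| 💘 Lab: Ingress-nginx URL Rewrite - 2023.3.13 (tested successfully) |
| 💘 Lab: Ingress-nginx canary release - 2023.3.14 (tested successfully) |
| 💘 Lab: Ingress-nginx, accessing our application over HTTPS (openssl) - 2022.11.27 (tested successfully) |
| 💘 Lab: Ingress-nginx, accessing our application over HTTPS (cfssl) - 2023.1.2 (tested successfully) |
| 💘 Lab: Ingress-nginx TCP - 2023.3.15 (tested successfully) |
| 💘 Lab: Ingress-nginx global configuration - 2023.3.15 (tested successfully) |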

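Preface

We already know that an Ingress resource is only a configuration that describes how requests are routed. To make it take effect, a corresponding Ingress controller is required. There are many Ingress controllers. Here we start with the most widely used one, ingress-nginx, which is an Ingress controller based on Nginx.

The main job of the ingress-nginx controller is to assemble an nginx.conf configuration file. Whenever that file changes, Nginx has to be reloaded for the change to take effect. However, changes that only affect the upstream configuration (the endpoints) do not trigger a reload. The controller handles those internally with lua-nginx-module.

Kubernetes controllers use a control-loop pattern to check whether the desired state has been updated or needs to change. ingress-nginx therefore builds a model from different objects in the cluster, such as Ingress, Service, Endpoints, Secret and ConfigMap, from which a configuration file reflecting the cluster state can be generated.

The controller keeps watching these objects for changes. However, it has no way of knowing whether a particular change affects the final nginx.conf. So whenever it sees any change, it rebuilds a new model from the cluster state and compares it with the current model:

- If the two models are identical, it avoids generating a new Nginx configuration and triggering a reload.
- Otherwise it checks whether the difference concerns only endpoints. If so, it sends the new list of endpoints in an HTTP POST request to a Lua handler running inside Nginx. This again avoids generating a new configuration and reloading.
- If the running and new models differ in more than just endpoints, a new Nginx configuration is generated from the new model.

The biggest benefit of building a model this way is that unnecessary reloads are avoided when the state has not changed, which saves a large number of Nginx reloads.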
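The reload-avoidance decision described above can be illustrated with a tiny decision function. This is only a sketch under strong simplifying assumptions: the real controller is written in Go and its model is far richer. The `Model` class, its two fields and the action names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    """Hypothetical, heavily simplified stand-in for the controller's model."""
    servers: frozenset = frozenset()  # e.g. hosts, paths, TLS settings
    endpoints: tuple = ()             # backend Pod "IP:port" entries


def sync_action(current: Model, new: Model) -> str:
    """Decide how to apply a change seen by the control loop (sketch only)."""
    if new == current:
        return "noop"        # models identical: no new nginx.conf, no reload
    if new.servers == current.servers:
        return "lua-update"  # only endpoints differ: POST them to the Lua handler
    return "reload"          # anything else: render a new nginx.conf and reload


running = Model(frozenset({"auth.example/"}), ("10.244.1.5:80",))
print(sync_action(running, running))                                     # noop
print(sync_action(running, Model(running.servers, ("10.244.2.7:80",))))  # lua-update
print(sync_action(running, Model(frozenset({"auth.example/api"}), running.endpoints)))  # reload
```

Only the last case pays for a full Nginx reload. Endpoint churn, which is by far the most frequent change in a busy cluster (Pods scaling or restarting), takes the cheap Lua path.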

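The following are some of the situations that require a reload:

- A new Ingress resource is created

- TLS is added to an existing Ingress

- A path is added to or removed from an Ingress

- An Ingress, Service or Secret is deleted

- A previously missing object referenced by an Ingress becomes available, such as a Service or Secret

- A Secret is updated

In large clusters, frequent Nginx reloads cost a lot of performance, so situations that trigger reloads should be kept to a minimum.

ingress-nginx is now installed and its Service is exposed through a LoadBalancer. Next, let's look at how to configure and use ingress-nginx in practice. There are several ways to customize it:

- a ConfigMap for global Nginx settings
- annotations on a specific Ingress for rules that apply only to that Ingress
- a custom template

Here we focus on customizing Ingress objects with annotations.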

[root@master1 ~]#kubectl get svc ingress-nginx-controller -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.97.111.207 172.29.9.61 80:30970/TCP,443:32364/TCP 23h

[root@master1 ~]#kubectl get cm -ningress-nginx

NAME DATA AGE

ingress-nginx-controller 1 23h

kube-root-ca.crt 1 23h

[root@master1 ~]#kubectl get cm ingress-nginx-controller -ningress-nginx -oyaml

apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"allow-snippet-annotations":"true"},"kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/component":"controller","app.kubernetes.io/instance":"ingress-nginx","app.kubernetes.io/name":"ingress-nginx","app.kubernetes.io/part-of":"ingress-nginx","app.kubernetes.io/version":"1.5.1"},"name":"ingress-nginx-controller","namespace":"ingress-nginx"}}
  creationTimestamp: "2023-03-06T22:59:36Z"
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.5.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
  resourceVersion: "177720"
  uid: 7692a932-ce9a-40d0-8df2-988e4eb0aa31

[root@master1 ~]#

1、Basic Auth

1. Configuring Basic Auth on an Ingress object

💘 Lab: Configure Basic Auth on an Ingress object - 2023.3.12 (tested successfully)

- Lab environment:

1. Win10 with VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd: v1.5.5

- Lab files

Link: https://pan.baidu.com/s/15d39N-oQEsgcLlqZOCb__w?pwd=pshn

Extraction code: pshn

2023.3.12-Lab: Configure Basic Auth on an Ingress object-2023.3.12 (tested successfully)

- Prerequisites

ingress-nginx is already installed (its Service is of type LoadBalancer).

MetalLB is already deployed. MetalLB is optional: you can also reach the Ingress at domain:NodePort. We use the LoadBalancer here only for convenience.

For deploying ingress-nginx, see:

Local document:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129334116?spm=1001.2014.3001.5501

For deploying MetalLB, see:

Local document:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129343617?spm=1001.2014.3001.5501

⚠️ Note:

ingress-nginx is deployed as a DaemonSet, so once MetalLB is deployed the Ingress can be reached normally on all 3 nodes.

- Create an application for the following tests:

#nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx

Deploy and check:

[root@master1 ingress-nginx]#kubectl apply -f nginx.yaml

deployment.apps/nginx created

service/nginx created

[root@master1 ingress-nginx]#kubectl get po,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-7848d4b86f-ftznq 1/1 Running 0 26s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18d

service/nginx ClusterIP 10.98.22.153 <none> 80/TCP 26s

- We can configure basic authentication, such as Basic Auth, on an Ingress object. Use htpasswd to generate a password file for it.

[root@master1 ingress-nginx]#yum install -y httpd-tools # install httpd-tools first; the htpasswd command comes from this package

[root@master1 ingress-nginx]#htpasswd -c auth foo # the password entered here is foo321

New password:

Re-type new password:

Adding password for user foo

[root@master1 ingress-nginx]#ll

total 8

-rw-r--r-- 1 root root 42 Mar 12 21:47 auth

-rw-r--r-- 1 root root 441 Mar 8 06:29 nginx.yaml

- Then create a Secret object from the auth file above:

[root@master1 ingress-nginx]# kubectl create secret generic basic-auth --from-file=auth

secret/basic-auth created

[root@master1 ingress-nginx]# kubectl get secret basic-auth -o yaml

apiVersion: v1
data:
  auth: Zm9vOiRhcHIxJE9reFhCMTV3JGNZR1NMYnpBWDhTNklkNHo3WTRlWi8K
kind: Secret
metadata:
  creationTimestamp: "2023-03-12T13:48:52Z"
  name: basic-auth
  namespace: default
  resourceVersion: "242528"
  uid: b9c37dd7-3fac-43af-b5bc-3388114d9cc0
type: Opaque

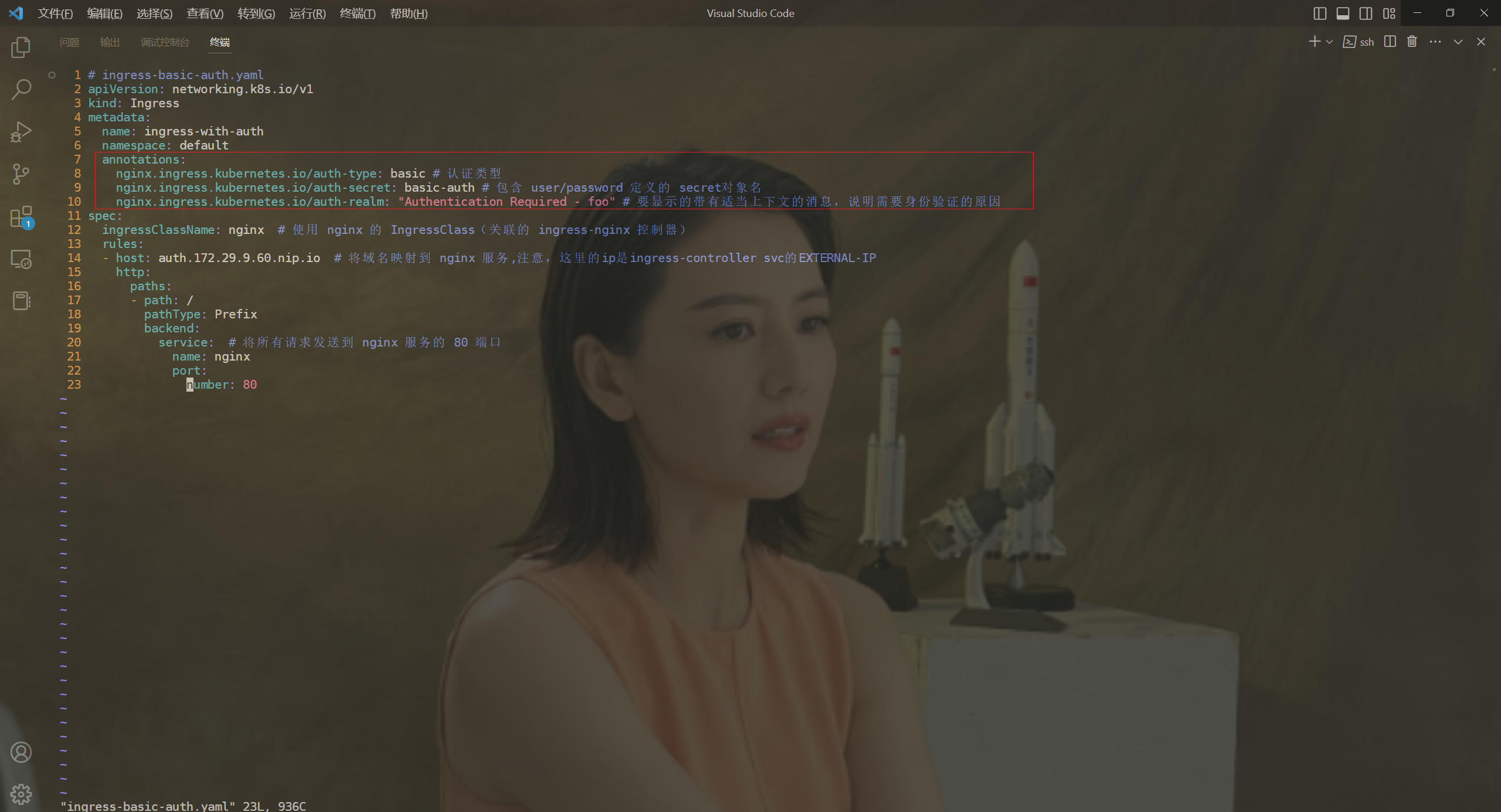
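Secret data is only base64-encoded, so it can be decoded back to the htpasswd line that ingress-nginx will use. The sketch below does this for the `auth` value shown above. The variable names are illustrative.

```python
import base64

# .data.auth of the basic-auth Secret, copied from the kubectl output above
encoded = "Zm9vOiRhcHIxJE9reFhCMTV3JGNZR1NMYnpBWDhTNklkNHo3WTRlWi8K"

decoded = base64.b64decode(encoded).decode("utf-8")       # the htpasswd file content
user, password_hash = decoded.rstrip("\n").split(":", 1)  # one "user:hash" line

print(user)           # foo
print(password_hash)  # an $apr1$ (Apache MD5) hash, not the plain password foo321
```

From the shell, `kubectl get secret basic-auth -o jsonpath='{.data.auth}' | base64 -d` gives the same result. The key inside the Secret must be named `auth`, which is why the file was created with that name.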

- Then create an Ingress object with Basic Auth for the nginx application above:

# ingress-basic-auth.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-with-auth
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic  # authentication type
    nginx.ingress.kubernetes.io/auth-secret: basic-auth  # name of the Secret holding the user/password definitions
    nginx.ingress.kubernetes.io/auth-realm: "Authentication Required - foo"  # message with appropriate context explaining why authentication is required
spec:
  ingressClassName: nginx  # use the nginx IngressClass (bound to the ingress-nginx controller)
  rules:
  - host: auth.172.29.9.60.nip.io  # map this domain to the nginx Service; the IP is the EXTERNAL-IP of the ingress-nginx controller Service
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:  # send all requests to port 80 of the nginx Service
            name: nginx
            port:
              number: 80

- Create the resource above directly:

[root@master1 ingress-nginx]#kubectl apply -f ingress-basic-auth.yaml

ingress.networking.k8s.io/ingress-with-auth created

[root@master1 ingress-nginx]#kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-with-auth nginx auth.172.29.9.60.nip.io 172.29.9.60 80 21s

- Then open the configured domain with the command below, or directly in a browser:

[root@master1 ingress-nginx]#curl -v http://auth.172.29.9.60.nip.io

* About to connect() to auth.172.29.9.60.nip.io port 80 (#0)

* Trying 172.29.9.60...

* Connected to auth.172.29.9.60.nip.io (172.29.9.60) port 80 (#0)

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Host: auth.172.29.9.60.nip.io

> Accept: */*

>

< HTTP/1.1 401 Unauthorized

< Date: Sun, 12 Mar 2023 14:22:31 GMT

< Content-Type: text/html

< Content-Length: 172

< Connection: keep-alive

< WWW-Authenticate: Basic realm="Authentication Required - foo"

<

<html>

<head><title>401 Authorization Required</title></head>

<body>

<center><h1>401 Authorization Required</h1></center>

<hr><center>nginx</center>

</body>

</html>

* Connection #0 to host auth.172.29.9.60.nip.io left intact

We get a 401 authentication error.

- Now authenticate with the user name and password we configured:

[root@master1 ingress-nginx]#curl -v http://auth.172.29.9.60.nip.io -u 'foo:foo321'

* About to connect() to auth.172.29.9.60.nip.io port 80 (#0)

* Trying 172.29.9.60...

* Connected to auth.172.29.9.60.nip.io (172.29.9.60) port 80 (#0)

* Server auth using Basic with user 'foo'

> GET / HTTP/1.1

> Authorization: Basic Zm9vOmZvbzMyMQ==

> User-Agent: curl/7.29.0

> Host: auth.172.29.9.60.nip.io

> Accept: */*

>

< HTTP/1.1 200 OK

< Date: Sun, 12 Mar 2023 14:23:50 GMT

< Content-Type: text/html

< Content-Length: 615

< Connection: keep-alive

< Last-Modified: Tue, 28 Dec 2021 15:28:38 GMT

< ETag: "61cb2d26-267"

< Accept-Ranges: bytes

<

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

* Connection #0 to host auth.172.29.9.60.nip.io left intact

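Authentication now succeeds.

Testing in a browser also works as expected.

⚠️ Note on the nginx.ingress.kubernetes.io/auth-realm: "Authentication Required - foo" parameter: its value is returned in the WWW-Authenticate header of the 401 response (see WWW-Authenticate: Basic realm="Authentication Required - foo" in the first curl output above). It is the context message that accompanies the login prompt, for example after a wrong password is entered.

Test complete. 😘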
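The Authorization header that curl sent above (Basic Zm9vOmZvbzMyMQ==) is simply "user:password" in base64. The sketch below rebuilds it. `basic_auth_header` is a hypothetical helper name.

```python
import base64


def basic_auth_header(user: str, password: str) -> str:
    """Build the Authorization header value that `curl -u user:password` sends."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


header = basic_auth_header("foo", "foo321")
print(header)  # Basic Zm9vOmZvbzMyMQ==

# Base64 is an encoding, not encryption: anyone who sees the header can reverse it
print(base64.b64decode(header.split(" ", 1)[1]).decode("utf-8"))  # foo:foo321
```

Because the credentials are trivially reversible, Basic Auth should in practice be combined with HTTPS.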

2. Using external Basic Auth credentials

💘 Lab: Use external Basic Auth credentials - 2023.3.12 (tested successfully)

- Lab environment:

1. Win10 with VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd: v1.5.5

- Lab files

Link: https://pan.baidu.com/s/12vts7vEP-eYA5cGIHdOyPw?pwd=sgbk

Extraction code: sgbk


2023.3.12-Lab: Use external Basic Auth credentials-2023.3.12 (tested successfully)

- Prerequisites

ingress-nginx is already installed (its Service is of type LoadBalancer).

MetalLB is already deployed. MetalLB is optional: you can also reach the Ingress at domain:NodePort. We use the LoadBalancer here only for convenience.

For deploying ingress-nginx, see:

Local document:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129334116?spm=1001.2014.3001.5501

For deploying MetalLB, see:

Local document:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129343617?spm=1001.2014.3001.5501

⚠️ Note:

ingress-nginx is deployed as a DaemonSet, so once MetalLB is deployed the Ingress can be reached normally on all 3 nodes.

- Create an application for the following tests:

#nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx

Deploy and check:

[root@master1 ingress-nginx]#kubectl apply -f nginx.yaml

deployment.apps/nginx created

service/nginx created

[root@master1 ingress-nginx]#kubectl get po,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-7848d4b86f-ftznq 1/1 Running 0 26s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18d

service/nginx ClusterIP 10.98.22.153 <none> 80/TCP 26s

- Besides auth information created in our own cluster, we can also use external Basic Auth credentials. For example, to use the external Basic Auth endpoint of https://httpbin.org, create the following Ingress object:

# ingress-basic-auth-external.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: external-auth
  namespace: default
  annotations:
    # address of the external authentication service
    nginx.ingress.kubernetes.io/auth-url: https://httpbin.org/basic-auth/user/passwd
spec:
  ingressClassName: nginx  # use the nginx IngressClass (bound to the ingress-nginx controller)
  rules:
  - host: external-auth.172.29.9.60.nip.io  # map this domain to the nginx Service; the IP is the EXTERNAL-IP of the ingress-nginx controller Service
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:  # send all requests to port 80 of the nginx Service
            name: nginx
            port:
              number: 80

- Deploy:

[root@master1 ingress-nginx]#kubectl apply -f ingress-basic-auth-external.yaml

ingress.networking.k8s.io/external-auth created

[root@master1 ingress-nginx]#kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

external-auth nginx external-auth.172.29.9.60.nip.io 172.29.9.60 80 57s

- Test: open the configured domain with the command below, or directly in a browser:

[root@master1 ingress-nginx]#curl -k http://external-auth.172.29.9.60.nip.io

<html>

<head><title>401 Authorization Required</title></head>

<body>

<center><h1>401 Authorization Required</h1></center>

<hr><center>nginx</center>

</body>

</html>

We get a 401 authentication error.

- Now authenticate with the user name and password expected by the external service:

[root@master1 ingress-nginx]#curl -k http://external-auth.172.29.9.60.nip.io -u 'user:passwd'

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Authentication now succeeds.

Testing in a browser also works as expected.

Test complete. 😘
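With auth-url, ingress-nginx relies on Nginx's auth_request mechanism. Each client request first triggers a subrequest, carrying the original headers (including Authorization), to the external URL. A 2xx answer lets the request through. A 401 or 403 is returned to the client. Any other status is treated as an error.

The sketch below models that flow. `httpbin_basic_auth` is a hypothetical stand-in for httpbin's /basic-auth/user/passwd endpoint, not a real client for it.

```python
import base64
from typing import Optional


def httpbin_basic_auth(authorization: Optional[str], user: str = "user", passwd: str = "passwd") -> int:
    """Hypothetical stand-in for https://httpbin.org/basic-auth/user/passwd:
    200 when the forwarded Authorization header carries user:passwd, else 401."""
    expected = "Basic " + base64.b64encode(f"{user}:{passwd}".encode()).decode()
    return 200 if authorization == expected else 401


def gateway_decision(auth_status: int) -> Optional[int]:
    """Apply auth_request rules to the subrequest status.
    None means the original request is proxied to the backend."""
    if 200 <= auth_status < 300:
        return None              # allowed
    if auth_status in (401, 403):
        return auth_status       # denied with the same status
    return 500                   # any other status is treated as an error


creds = "Basic " + base64.b64encode(b"user:passwd").decode()
print(gateway_decision(httpbin_basic_auth(None)))   # 401, like plain curl
print(gateway_decision(httpbin_basic_auth(creds)))  # None, like curl -u 'user:passwd'
```

The credentials never live in the cluster here. Every request depends on the external service being reachable, which is the trade-off of this approach.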

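Besides simple Basic Auth, ingress-nginx also supports more advanced authentication methods. For example, GitHub OAuth can be used to authenticate access to the Kubernetes Dashboard.

2、URL Rewrite

This is probably the most commonly used feature.

Many advanced ingress-nginx features can be configured through annotations on the Ingress object, such as the commonly used URL Rewrite. We often use ingress-nginx as a gateway, for example to put a prefix such as /app in front of the services we expose. In plain Nginx configuration, the proxy_pass directive can do this: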

location /app/ {
    proxy_pass http://127.0.0.1/remote/;
}

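The prefix might be /app, /gateway or /api. In microservice setups in particular, we often want to group the APIs exposed by our services under a sub-path such as /api or /api/v1. This can be done directly at the gateway layer, that is, in the Ingress.

Because proxy_pass is followed by the /remote/ path, the /app/ part of the matched request path is replaced with /remote/, which effectively strips /app from the path. How do we do the same with ingress-nginx in Kubernetes? We can use the rewrite-target annotation.

For example, suppose we want to reach the Nginx service at rewrite.172.29.9.60.nip.io/gateway/. The Ingress path then carries a gateway prefix, and the request URL has to be rewritten so that this prefix is removed before the request reaches the service.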
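The proxy_pass rule above can be expressed as a small prefix substitution. When proxy_pass contains a URI, Nginx replaces the part of the request URI that matched the location with that URI. This is a sketch of that single case only. `proxy_pass_uri` is a hypothetical helper; it ignores URI normalization and regex locations.

```python
def proxy_pass_uri(request_uri: str, location: str = "/app/",
                   target_uri: str = "/remote/") -> str:
    """Mimic `location /app/ { proxy_pass http://127.0.0.1/remote/; }`:
    swap the matched location prefix for the URI given to proxy_pass."""
    if not request_uri.startswith(location):
        raise ValueError(f"{request_uri!r} does not match location {location!r}")
    return target_uri + request_uri[len(location):]


print(proxy_pass_uri("/app/users/1"))  # /remote/users/1
print(proxy_pass_uri("/app/"))         # /remote/
```

If proxy_pass had no URI part (for example `proxy_pass http://127.0.0.1;`), the request URI would be passed through unchanged, and /app would reach the backend.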

The official ingress-nginx documentation also describes the rewrite behavior.

💘 Lab: Ingress-nginx URL Rewrite - 2023.3.13 (tested successfully)

- Lab environment:

1. Win10 with VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd: v1.5.5

- Lab files

Link: https://pan.baidu.com/s/1Dj1Qcmjpri6-IfUri5F5nQ?pwd=vpiu

Extraction code: vpiu

2023.3.13-Lab: Ingress-nginx URL Rewrite-2023.3.13 (tested successfully)

- Prerequisites

ingress-nginx is already installed (its Service is of type LoadBalancer).

MetalLB is already deployed. MetalLB is optional: you can also reach the Ingress at domain:NodePort. We use the LoadBalancer here only for convenience.

For deploying ingress-nginx, see:

Local document:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129334116?spm=1001.2014.3001.5501

For deploying MetalLB, see:

Local document:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129343617?spm=1001.2014.3001.5501

⚠️ Note:

ingress-nginx is deployed as a DaemonSet, so once MetalLB is deployed the Ingress can be reached normally on all 3 nodes.

- Create an application for the following tests:

#nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: nginx

Deploy and check:

[root@master1 ingress-nginx]#kubectl apply -f nginx.yaml

deployment.apps/nginx created

service/nginx created

[root@master1 ingress-nginx]#kubectl get po,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-7848d4b86f-ftznq 1/1 Running 0 26s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18d

service/nginx ClusterIP 10.98.22.153 <none> 80/TCP 26s

The following three small tests are all based on this lab. 😘

1. rewrite-target

- First, let's try our initial idea:

# ingress-nginx-url-rewrite.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-url-rewrite
  namespace: default
spec:
  ingressClassName: nginx  # use the nginx IngressClass (bound to the ingress-nginx controller)
  rules:
  - host: rewrite.172.29.9.60.nip.io  # map this domain to the nginx Service; the IP is the EXTERNAL-IP of the ingress-nginx controller Service. rewrite.172.29.9.60.nip.io/gateway --> nginx
    http:
      paths:
      - path: /gateway
        pathType: Prefix
        backend:
          service:  # send all requests to port 80 of the nginx Service
            name: nginx
            port:
              number: 80

- Deploy and test:

[root@master1 url-rewirte]#kubectl apply -f ingress-nginx-url-rewrite.yaml

ingress.networking.k8s.io/ingress-nginx-url-rewrite created

[root@master1 url-rewirte]#kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-nginx-url-rewrite nginx rewrite.172.29.9.60.nip.io 172.29.9.60 80 19s

# test

[root@master1 url-rewirte]#curl -v rewrite.172.29.9.60.nip.io

* About to connect() to rewrite.172.29.9.60.nip.io port 80 (#0)

* Trying 172.29.9.60...

* Connected to rewrite.172.29.9.60.nip.io (172.29.9.60) port 80 (#0)

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Host: rewrite.172.29.9.60.nip.io

> Accept: */*

>

< HTTP/1.1 404 Not Found

< Date: Sun, 12 Mar 2023 22:16:34 GMT

< Content-Type: text/html

< Content-Length: 146

< Connection: keep-alive

<

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

* Connection #0 to host rewrite.172.29.9.60.nip.io left intact

[root@master1 url-rewirte]#curl -v rewrite.172.29.9.60.nip.io/gateway

* About to connect() to rewrite.172.29.9.60.nip.io port 80 (#0)

* Trying 172.29.9.60...

* Connected to rewrite.172.29.9.60.nip.io (172.29.9.60) port 80 (#0)

> GET /gateway HTTP/1.1

> User-Agent: curl/7.29.0

> Host: rewrite.172.29.9.60.nip.io

> Accept: */*

>

< HTTP/1.1 404 Not Found

< Date: Sun, 12 Mar 2023 22:16:48 GMT

< Content-Type: text/html

< Content-Length: 153

< Connection: keep-alive

<

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.21.5</center>

</body>

</html>

* Connection #0 to host rewrite.172.29.9.60.nip.io left intact

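Neither request works, and for two different reasons:

- The 404 for / comes from the ingress controller itself (note the plain "nginx" server signature), because no rule matches /.
- The 404 for /gateway comes from the backend (signature nginx/1.21.5). The request did reach the nginx Pod, but with the path /gateway unchanged, and the Pod has nothing at that path.

- Rewrite the YAML again:

# ingress-nginx-url-rewrite.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-url-rewrite
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx  # use the nginx IngressClass (bound to the ingress-nginx controller)
  rules:
  - host: rewrite.172.29.9.60.nip.io  # map this domain to the nginx Service; the IP is the EXTERNAL-IP of the ingress-nginx controller Service. rewrite.172.29.9.60.nip.io/gateway --> nginx
    # covers the following cases:
    # rewrite.172.29.9.60.nip.io/gateway/  rewrite.172.29.9.60.nip.io/gateway  rewrite.172.29.9.60.nip.io/gateway/xxx
    http:
      paths:
      - path: /gateway(/|$)(.*)
        pathType: Prefix  # worked in this lab (controller v1.5.1); the official ingress-nginx rewrite example uses ImplementationSpecific for regex paths
        backend:
          service:  # send all requests to port 80 of the nginx Service
            name: nginx
            port:
              number: 80

Pay special attention to the regular expression here.

It covers these three cases:

rewrite.172.29.9.60.nip.io/gateway/ rewrite.172.29.9.60.nip.io/gateway rewrite.172.29.9.60.nip.io/gateway/xxx

$ matches the end of the path, so (/|$) matches either a / or the end of the path. (.*) then captures everything after it, and that capture becomes $2.

- After this update, accessing the domain root directly will no longer work, because no path rule matches /: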

[root@master1 url-rewirte]#curl rewrite.172.29.9.60.nip.io

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

- With the gateway prefix, however, access works:

[root@master1 url-rewirte]#curl rewrite.172.29.9.60.nip.io/gateway

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@master1 url-rewirte]#curl rewrite.172.29.9.60.nip.io/gateway/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@master1 url-rewirte]#

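Both requests now reach the backend. The path regex /gateway(/|$)(.*) captures whatever follows /gateway as $2, and rewrite-target /$2 turns that capture into the path sent to the backend. So visiting rewrite.172.29.9.60.nip.io/gateway actually requests the backend service's / path.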
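The effect of the path regex together with rewrite-target /$2 can be checked locally with the same regular expression. This is a sketch that only mirrors the matching logic. ingress-nginx itself generates a case-insensitive regex location plus an Nginx rewrite, which this example does not reproduce. `rewrite` is a hypothetical helper.

```python
import re
from typing import Optional

# Path regex from the Ingress rule; rewrite-target is /$2
PATH_RE = re.compile(r"^/gateway(/|$)(.*)")


def rewrite(path: str) -> Optional[str]:
    """Return the path forwarded to the backend, or None if the rule does not match."""
    m = PATH_RE.match(path)
    if m is None:
        return None
    return m.expand(r"/\2")  # same as rewrite-target /$2


for p in ("/gateway", "/gateway/", "/gateway/xxx", "/gatewayx", "/"):
    print(p, "->", rewrite(p))
```

Note that /gatewayx does not match: after /gateway the regex requires either a / or the end of the path.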

2. app-root

Fixing the 404 on the root domain.

To fix the 404 we get when accessing the root domain, we can add the app-root annotation. Requests for the root domain are then automatically redirected to the app-root path we specify, as shown below:

- Modify the ingress-nginx-url-rewrite.yaml file as follows:

# ingress-nginx-url-rewrite.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-url-rewrite
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    nginx.ingress.kubernetes.io/app-root: /gateway/  # note: newly added
spec:
  ingressClassName: nginx  # use the nginx IngressClass (bound to the ingress-nginx controller)
  rules:
  - host: rewrite.172.29.9.60.nip.io  # map this domain to the nginx Service; the IP is the EXTERNAL-IP of the ingress-nginx controller Service. rewrite.172.29.9.60.nip.io/gateway --> nginx
    # covers the following cases:
    # rewrite.172.29.9.60.nip.io/gateway/  rewrite.172.29.9.60.nip.io/gateway  rewrite.172.29.9.60.nip.io/gateway/xxx
    http:
      paths:
      - path: /gateway(/|$)(.*)
        pathType: Prefix
        backend:
          service:  # send all requests to port 80 of the nginx Service
            name: nginx
            port:
              number: 80

- After applying the update, accessing the root domain rewrite.172.29.9.60.nip.io automatically redirects to the rewrite.172.29.9.60.nip.io/gateway/ path.

# deploy

[root@master1 url-rewirte]#kubectl apply -f ingress-nginx-url-rewrite.yaml

ingress.networking.k8s.io/ingress-nginx-url-rewrite configured

[root@master1 url-rewirte]#kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-nginx-url-rewrite nginx rewrite.172.29.9.60.nip.io 172.29.9.60 80 37m

# test

[root@master1 url-rewirte]#curl -v rewrite.172.29.9.60.nip.io

* About to connect() to rewrite.172.29.9.60.nip.io port 80 (#0)

* Trying 172.29.9.60...

* Connected to rewrite.172.29.9.60.nip.io (172.29.9.60) port 80 (#0)

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Host: rewrite.172.29.9.60.nip.io

> Accept: */*

>

< HTTP/1.1 302 Moved Temporarily # note the 302 here

< Date: Sun, 12 Mar 2023 22:53:54 GMT

< Content-Type: text/html

< Content-Length: 138

< Connection: keep-alive

< Location: http://rewrite.172.29.9.60.nip.io/gateway/ # redirected to rewrite.172.29.9.60.nip.io/gateway/

<

<html>

<head><title>302 Found</title></head>

<body>

<center><h1>302 Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

* Connection #0 to host rewrite.172.29.9.60.nip.io left intact

[root@master1 url-rewirte]#curl rewrite.172.29.9.60.nip.io

<html>

<head><title>302 Found</title></head>

<body>

<center><h1>302 Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

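⚠️ Note: curl does not follow redirects by default, so on the command line we only see the 302 response itself (curl -L would follow it). Let's test in a web browser instead.

Open a private (incognito) browser window and enter rewrite.172.29.9.60.nip.io:

After the update, visiting the root domain rewrite.172.29.9.60.nip.io automatically redirects to the rewrite.172.29.9.60.nip.io/gateway/ path, as expected.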
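The app-root behavior observed above can be sketched as a single check. Only a request for exactly / is answered with a 302 whose Location points at the app-root path; every other URI goes through the normal rules. This is a simplified model, not ingress-nginx's template. `app_root_redirect` is a hypothetical helper.

```python
from typing import Optional, Tuple


def app_root_redirect(scheme: str, host: str, uri: str,
                      app_root: str = "/gateway/") -> Optional[Tuple[int, str]]:
    """Return (302, Location) for a request to "/", else None (no redirect)."""
    if uri == "/":
        return 302, f"{scheme}://{host}{app_root}"
    return None


print(app_root_redirect("http", "rewrite.172.29.9.60.nip.io", "/"))
# (302, 'http://rewrite.172.29.9.60.nip.io/gateway/')
print(app_root_redirect("http", "rewrite.172.29.9.60.nip.io", "/gateway/"))  # None
```

The Location value matches the one in the curl -v output above. The browser then requests /gateway/, which the rewrite-target rule maps to the backend's /.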

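3. configuration-snippet

(making our application URLs end with a trailing / slash)

- One remaining issue is that our path rule also matches /gateway without a trailing slash. We would rather have our application URLs always end with a / slash, and we can achieve this with a configuration-snippet, as in the following Ingress object:

This mainly matters for search engine SEO.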

# ingress-nginx-url-rewrite.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-url-rewrite
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    nginx.ingress.kubernetes.io/app-root: /gateway/  # note
    # snippet annotations only take effect when the controller ConfigMap has allow-snippet-annotations: "true" (as in this cluster)
    nginx.ingress.kubernetes.io/configuration-snippet: |
      rewrite ^(/gateway)$ $1/ redirect;
spec:
  ingressClassName: nginx  # use the nginx IngressClass (bound to the ingress-nginx controller)
  rules:
  - host: rewrite.172.29.9.60.nip.io  # map this domain to the nginx Service; the IP is the EXTERNAL-IP of the ingress-nginx controller Service. rewrite.172.29.9.60.nip.io/gateway --> nginx
    # covers the following cases:
    # rewrite.172.29.9.60.nip.io/gateway/  rewrite.172.29.9.60.nip.io/gateway  rewrite.172.29.9.60.nip.io/gateway/xxx
    http:
      paths:
      - path: /gateway(/|$)(.*)
        pathType: Prefix
        backend:
          service:  # send all requests to port 80 of the nginx Service
            name: nginx
            port:
              number: 80

- After the update, /gateway is redirected to /gateway/, so our application URLs always end with a / slash and the requirement is met. If you are already familiar with Nginx configuration, this approach should be easy to follow.

# deploy

[root@master1 url-rewirte]#kubectl apply -f ingress-nginx-url-rewrite.yaml

ingress.networking.k8s.io/ingress-nginx-url-rewrite configured

[root@master1 url-rewirte]#kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-nginx-url-rewrite nginx rewrite.172.29.9.60.nip.io 172.29.9.60 80 62m

# test

[root@master1 url-rewirte]#curl -v rewrite.172.29.9.60.nip.io/gateway

* About to connect() to rewrite.172.29.9.60.nip.io port 80 (#0)

* Trying 172.29.9.60...

* Connected to rewrite.172.29.9.60.nip.io (172.29.9.60) port 80 (#0)

> GET /gateway HTTP/1.1

> User-Agent: curl/7.29.0

> Host: rewrite.172.29.9.60.nip.io

> Accept: */*

>

< HTTP/1.1 302 Moved Temporarily

< Date: Sun, 12 Mar 2023 23:19:18 GMT

< Content-Type: text/html

< Content-Length: 138

< Location: http://rewrite.172.29.9.60.nip.io/gateway/ # a redirect happens here

< Connection: keep-alive

<

<html>

<head><title>302 Found</title></head>

<body>

<center><h1>302 Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

* Connection #0 to host rewrite.172.29.9.60.nip.io left intact

[root@master1 url-rewirte]#curl -v rewrite.172.29.9.60.nip.io/gateway/

* About to connect() to rewrite.172.29.9.60.nip.io port 80 (#0)

* Trying 172.29.9.60...

* Connected to rewrite.172.29.9.60.nip.io (172.29.9.60) port 80 (#0)

> GET /gateway/ HTTP/1.1

> User-Agent: curl/7.29.0

> Host: rewrite.172.29.9.60.nip.io

> Accept: */*

>

< HTTP/1.1 200 OK

< Date: Sun, 12 Mar 2023 23:19:22 GMT

< Content-Type: text/html

< Content-Length: 615

< Connection: keep-alive

< Last-Modified: Tue, 28 Dec 2021 15:28:38 GMT

< ETag: "61cb2d26-267"

< Accept-Ranges: bytes

<

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

* Connection #0 to host rewrite.172.29.9.60.nip.io left intact

[root@master1 url-rewirte]#

Works as expected. Test complete. 😘
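The request flow for the final Ingress can be sketched in a few lines. As the curl output above shows, the snippet's redirect for exactly /gateway takes effect first. Any other /gateway... path is then rewritten by rewrite-target. This is an illustrative model using the same two regular expressions; it is not the configuration ingress-nginx actually renders. `handle` is a hypothetical helper.

```python
import re
from typing import Optional, Tuple

SNIPPET_RE = re.compile(r"^(/gateway)$")     # rewrite ^(/gateway)$ $1/ redirect;
PATH_RE = re.compile(r"^/gateway(/|$)(.*)")  # Ingress path, rewrite-target /$2


def handle(uri: str) -> Tuple[str, Optional[str]]:
    """Return ('redirect', location), ('proxy', backend_path) or ('no-match', None)."""
    m = SNIPPET_RE.match(uri)
    if m:
        return "redirect", m.expand(r"\1/")  # "redirect" flag -> 302 to /gateway/
    m = PATH_RE.match(uri)
    if m:
        return "proxy", m.expand(r"/\2")
    return "no-match", None


for uri in ("/gateway", "/gateway/", "/gateway/index.html", "/other"):
    print(uri, handle(uri))
```

Nginx turns the relative redirect target into the absolute Location header seen above (http://rewrite.172.29.9.60.nip.io/gateway/).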

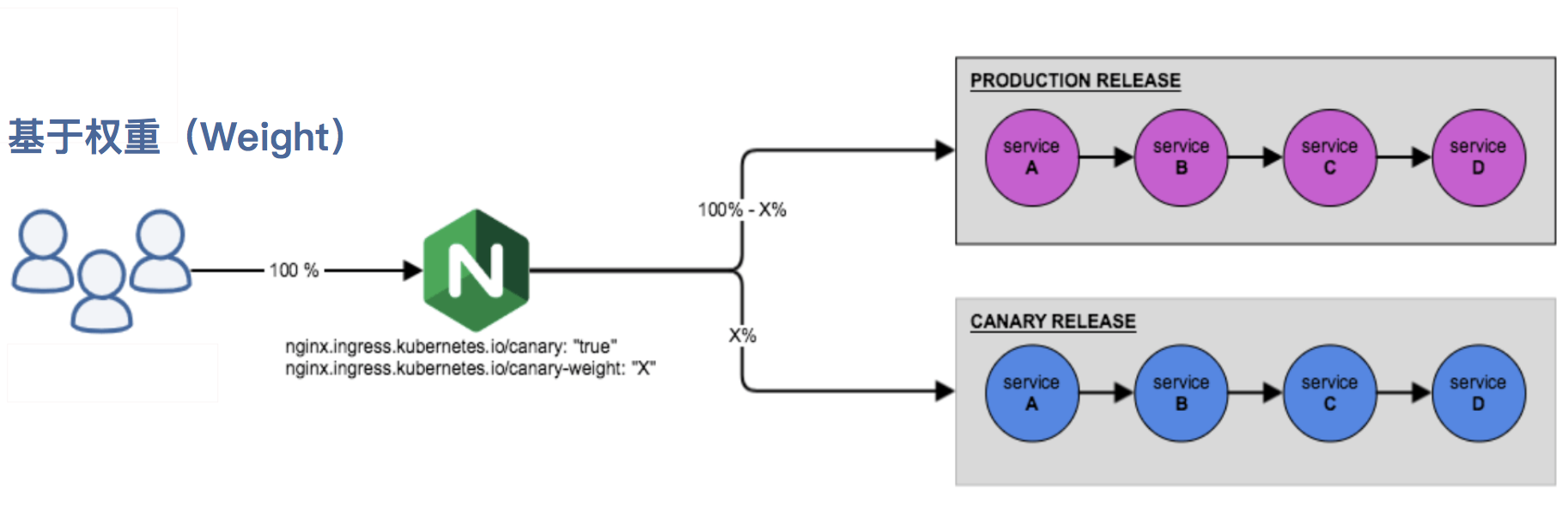

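3、Canary release

In day-to-day work we frequently need to update and upgrade services, so we use different release strategies such as rolling updates, blue-green deployments and canary (gray) releases. ingress-nginx supports canary releases and testing for different scenarios through annotations, covering canary releases, blue-green deployments, A/B testing and similar use cases.

I am not sure whether we would really use ingress-nginx annotations for canary releases in production, since annotations are not a particularly cloud-native way of configuring this. Generally, if the configuration could be expressed as a CRD or something similar, it would be more convenient to write. For now, however, it is configured through annotations.

The ingress-nginx annotations support the following 4 Canary rules:

- nginx.ingress.kubernetes.io/canary-by-header: traffic splitting based on a request header, suitable for canary releases and A/B testing.
  - If the header is set to always, requests are always sent to the Canary version.
  - If it is set to never, requests are never sent to the Canary ingress.
  - For any other header value, the header is ignored and the request is compared against the other canary rules by precedence.

- nginx.ingress.kubernetes.io/canary-by-header-value: the request header value to match. When the request header is set to this value, the request is routed to the service specified in the Canary Ingress. This rule lets users define their own header value and must be used together with the previous annotation (canary-by-header).

- nginx.ingress.kubernetes.io/canary-weight: traffic splitting by service weight, suitable for blue-green deployments. The weight ranges from 0 to 100 and routes that percentage of requests to the service specified in the Canary Ingress. A weight of 0 means this rule sends no requests to the Canary ingress's service; a weight of 100 means all requests are sent to the Canary ingress.

- nginx.ingress.kubernetes.io/canary-by-cookie: traffic splitting based on a cookie, suitable for canary releases and A/B testing. It names the cookie that tells the Ingress to route the request to the service specified in the Canary Ingress.
  - If the cookie value is always, the request is routed to the Canary ingress.
  - If it is never, the request is not sent to the Canary ingress.
  - For any other value, the cookie is ignored and the request is compared against the other canary rules by precedence.

Note that the canary rules are applied in the following order of precedence:

canary-by-header > canary-by-cookie > canary-weight

Overall, the four annotations above fall into two categories:

- Weight-based Canary rules

- Request-based Canary rules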
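The precedence rules above can be summarized as one decision function. This is a simplified sketch. ingress-nginx evaluates these rules in Lua inside Nginx, and details such as header-name normalization and the canary-by-header-pattern annotation are left out. `CanaryPolicy`, `route_to_canary` and the cookie name canary_cookie are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class CanaryPolicy:
    """Hypothetical container for the values of the canary-* annotations."""
    header: Optional[str] = None        # canary-by-header
    header_value: Optional[str] = None  # canary-by-header-value
    cookie: Optional[str] = None        # canary-by-cookie
    weight: int = 0                     # canary-weight, 0-100


def route_to_canary(policy: CanaryPolicy, headers: Mapping[str, str],
                    cookies: Mapping[str, str], rng: random.Random) -> bool:
    """Return True if this request should go to the canary Service (sketch)."""
    # 1. canary-by-header: highest precedence
    if policy.header and policy.header in headers:
        value = headers[policy.header]
        if policy.header_value:
            if value == policy.header_value:
                return True          # custom header value matched
        elif value == "always":
            return True
        elif value == "never":
            return False
        # any other value: ignore the header and fall through
    # 2. canary-by-cookie
    if policy.cookie and policy.cookie in cookies:
        value = cookies[policy.cookie]
        if value == "always":
            return True
        if value == "never":
            return False
    # 3. canary-weight: a random number in 1..100 decides
    return rng.randint(1, 100) <= policy.weight


rng = random.Random(0)
policy = CanaryPolicy(header="canary", cookie="canary_cookie", weight=0)
print(route_to_canary(policy, {"canary": "always"}, {"canary_cookie": "never"}, rng))  # True
print(route_to_canary(policy, {"canary": "never"}, {"canary_cookie": "always"}, rng))  # False
print(route_to_canary(policy, {"canary": "maybe"}, {"canary_cookie": "always"}, rng))  # True
```

The third call shows the fall-through. An unrecognized header value is ignored, so the cookie decides.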

Let's walk through canary releases with an example application.

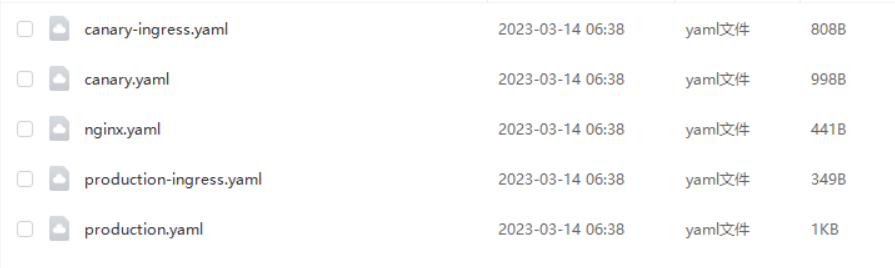

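💘 Lab: Ingress-nginx canary release - 2023.3.14 (tested successfully)

- Steps:

Step 1: Deploy the Production application

Step 2: Create the Canary version

Step 3: Configure the annotation rules

1. Weight-based

2. Based on Request Header

3. Based on Cookie

- Lab environment:

1. Win10 with VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd: v1.5.5

- Lab files

Link: https://pan.baidu.com/s/1JG6uCfjSKVCFJZdZcQgWSg?pwd=rxqt

Extraction code: rxqt

2023.3.14-Lab: Ingress-nginx canary release-2023.3.14 (tested successfully)

- Prerequisites

ingress-nginx is already installed (its Service is of type LoadBalancer).

MetalLB is already deployed. MetalLB is optional: you can also reach the Ingress at domain:NodePort. We use the LoadBalancer here only for convenience.

For deploying ingress-nginx, see:

Local document:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129334116?spm=1001.2014.3001.5501

For deploying MetalLB, see:

Local document:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129343617?spm=1001.2014.3001.5501

⚠️ Note:

ingress-nginx is deployed as a DaemonSet, so once MetalLB is deployed the Ingress can be reached normally on all 3 nodes.

- Note: in the current test environment, the EXTERNAL-IP of ingress-nginx is 172.29.9.60.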

[root@master1 canary]#kubectl get svc -ningress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.108.58.246 172.29.9.60 80:32439/TCP,443:31347/TCP 2d15h

ingress-nginx-controller-admission ClusterIP 10.101.184.28 <none> 443/TCP 2d15h

Step 1: Deploy the Production application

- First, create the resource manifest for the production application:

# production.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: production
  labels:
    app: production
spec:
  selector:
    matchLabels:
      app: production
  template:
    metadata:
      labels:
        app: production
    spec:
      containers:
      - name: production
        image: cnych/echoserver  # test image from the course instructor
        ports:
        - containerPort: 8080
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
---
apiVersion: v1
kind: Service
metadata:
  name: production
  labels:
    app: production
spec:
  ports:
  - port: 80
    targetPort: 8080
    name: http
  selector:
    app: production

⚠️ Note: the image can also be the following official one:

# for arm architectures use: mirrorgooglecontainers/echoserver-arm:1.8
image: mirrorgooglecontainers/echoserver:1.10

This image prints information about the Pod and the incoming request.

- Then create an Ingress object for accessing the production environment:

# production-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: production
spec:
  ingressClassName: nginx
  rules:
  - host: echo.172.29.9.60.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: production
            port:
              number: 80

- Create the resources above directly:

[root@master1 canary]#kubectl apply -f production.yaml

deployment.apps/production created

service/production created

[root@master1 canary]#kubectl apply -f production-ingress.yaml

ingress.networking.k8s.io/production created

[root@master1 canary]#kubectl get po -l app=production

NAME READY STATUS RESTARTS AGE

production-856d5fb99-k6k4h 1/1 Running 0 53s

[root@master1 canary]#kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

production nginx echo.172.29.9.60.nip.io 172.29.9.60 80 20s

[root@master1 canary]#

- Once the application is deployed, it can be accessed normally:

[root@master1 canary]#curl echo.172.29.9.60.nip.io

Hostname: production-856d5fb99-k6k4h

Pod Information:

node name: node2

pod name: production-856d5fb99-k6k4h

pod namespace: default

pod IP: 10.244.2.22

Server values:

server_version=nginx: 1.13.3 - lua: 10008

Request Information:

client_address=10.244.0.2

method=GET

real path=/

query=

request_version=1.1

request_scheme=http

request_uri=http://echo.172.29.9.60.nip.io:8080/

Request Headers:

accept=*/*

host=echo.172.29.9.60.nip.io

user-agent=curl/7.29.0

x-forwarded-for=172.29.9.60

x-forwarded-host=echo.172.29.9.60.nip.io

x-forwarded-port=80

x-forwarded-proto=http

x-forwarded-scheme=http

x-real-ip=172.29.9.60

x-request-id=06d3ab578e605061c732c060c9194992

x-scheme=http

Request Body:

-no body in request-

第2步:创建 Canary 版本

参考将上述 Production 版本的 production.yaml 文件,再创建一个 Canary 版本的应用。

```yaml
# canary.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: canary
  labels:
    app: canary
spec:
  selector:
    matchLabels:
      app: canary
  template:
    metadata:
      labels:
        app: canary
    spec:
      containers:
      - name: canary
        image: cnych/echoserver
        ports:
        - containerPort: 8080
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
---
apiVersion: v1
kind: Service
metadata:
  name: canary
  labels:
    app: canary
spec:
  ports:
  - port: 80
    targetPort: 8080
    name: http
  selector:
    app: canary
```

- Deploy the application and check it:

```bash
[root@master1 canary]#kubectl apply -f canary.yaml
deployment.apps/canary created
service/canary created
[root@master1 canary]#kubectl get po -l app=canary
NAME READY STATUS RESTARTS AGE
canary-66cb497b7f-86mdj 1/1 Running 0 26s
```

Next, we can split traffic by configuring annotation rules.

Step 3: Configure the annotation rules

1. Weight-based

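A typical use case for weight-based traffic splitting is blue-green deployment, which can be achieved by setting the weight to 0 or 100. For example, make the Green version the primary Ingress and configure the Blue version's Ingress as the Canary. Start with a weight of 0 so that no traffic is proxied to Blue. Once the new version has been tested and verified, set the Blue version's weight to 100 so that all traffic moves from Green to Blue.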
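The blue-green switch described above comes down to changing a single annotation on the Canary Ingress. Below is a minimal sketch. It assumes the Canary Ingress is named `canary` in the current namespace, as in the manifests of this section. `set_canary_weight` and the `KUBECTL` override are hypothetical helpers and are not part of ingress-nginx.

```bash
# Hypothetical helper: set the canary-weight annotation on the Ingress named "canary".
# The value must be an integer from 0 to 100 (0 = no traffic to Canary, 100 = all traffic).
# Set KUBECTL=echo to print the kubectl command instead of running it.
set_canary_weight() {
  local weight=$1
  case "$weight" in
    '' | *[!0-9]*)
      echo "weight must be an integer between 0 and 100" >&2
      return 1
      ;;
  esac
  if [ "$weight" -gt 100 ]; then
    echo "weight must be an integer between 0 and 100" >&2
    return 1
  fi
  # --overwrite is needed because the annotation already exists on the object
  ${KUBECTL:-kubectl} annotate ingress canary \
    "nginx.ingress.kubernetes.io/canary-weight=$weight" --overwrite
}
```

For example, run `set_canary_weight 0` while the new version is being verified, then `set_canary_weight 100` to move all traffic over. The controller picks up the annotation change the same way it picks up any other Ingress update.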

- Create a weight-based Ingress object for the Canary version of the application:

```yaml
# canary-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true" # canary must be enabled first to turn on canary releases
    nginx.ingress.kubernetes.io/canary-weight: "30" # send 30% of the traffic to this Canary version
spec:
  ingressClassName: nginx
  rules:
  - host: echo.172.29.9.60.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: canary
            port:
              number: 80
```

- Create the resource above directly:

```bash
[root@master1 canary]#kubectl apply -f canary-ingress.yaml
ingress.networking.k8s.io/canary created
[root@master1 canary]#kubectl get ingress
NAME CLASS HOSTS ADDRESS PORTS AGE
canary nginx echo.172.29.9.60.nip.io 172.29.9.60 80 19s
production nginx echo.172.29.9.60.nip.io 172.29.9.60 80 17m
```

- Once the Canary version has been created, send repeated requests to the application from the terminal and watch how the Hostname changes:

```bash
[root@master1 canary]#for i in $(seq 1 10); do curl -s echo.172.29.9.60.nip.io | grep "Hostname"; done # the canary version appeared 3 times
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
# the test was run several times
[root@master1 canary]#for i in $(seq 1 10); do curl -s echo.172.29.9.60.nip.io | grep "Hostname"; done # the canary version appeared 4 times
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
[root@master1 canary]#for i in $(seq 1 10); do curl -s echo.172.29.9.60.nip.io | grep "Hostname"; done # the canary version appeared 2 times
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
[root@master1 canary]#
```

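We gave the Canary version a weight of roughly 30% of the traffic, so about 3 out of every 10 requests reached it. The exact count varies from run to run (3, 4 and 2 above) because the split is decided randomly per request. This matches our expectation.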
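Ten requests is a very small sample, so a larger run gives a better check of the 30% split. Below is a minimal sketch that tallies the `Hostname:` lines printed by the echoserver. It assumes, as in this section, that Canary pod names start with `canary-`. `canary_share` is a hypothetical helper name.

```bash
# Read echoserver output ("Hostname: <pod-name>" lines) from stdin and report
# how many responses came from the Canary Deployment.
# Assumes Canary pods are named "canary-...". Prints: <total> <canary> <canary-percent>
canary_share() {
  awk '
    /^Hostname:/ {
      total++
      if ($2 ~ /^canary-/) canary++
    }
    END {
      if (total == 0) { print "0 0 0"; exit }
      printf "%d %d %d\n", total, canary, int(canary * 100 / total)
    }'
}
```

Usage: `for i in $(seq 1 200); do curl -s echo.172.29.9.60.nip.io | grep "Hostname"; done | canary_share`. The percentage should get closer to 30 as the number of requests grows.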

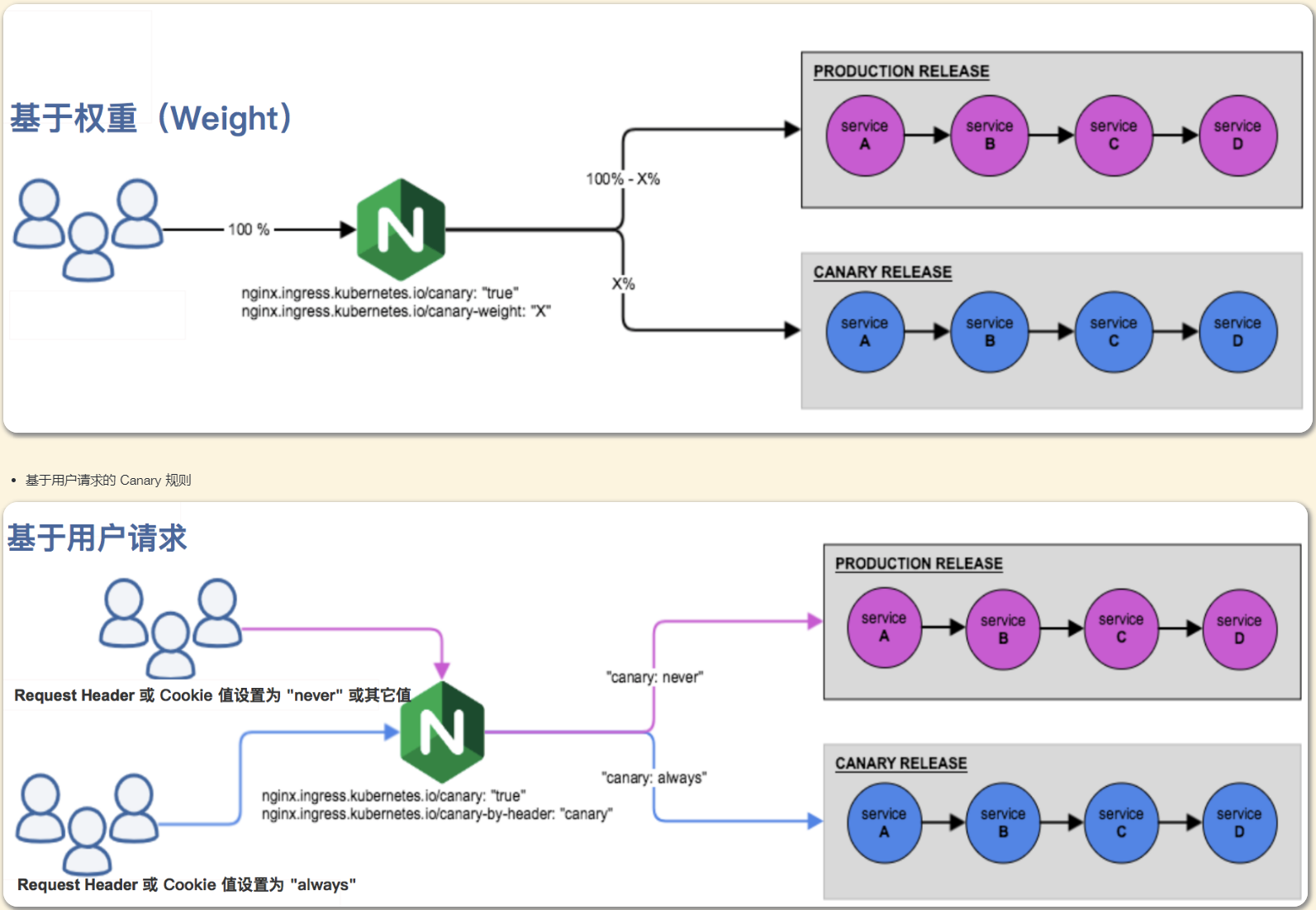

2. Based on Request Header

Typical use cases for splitting traffic by Request Header are canary (grayscale) releases and A/B testing.

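Add the annotation nginx.ingress.kubernetes.io/canary-by-header: canary to the Canary Ingress above. Here `canary` is the name of the request header to inspect, and you can choose any name. With this annotation, the Ingress splits traffic based on the Request Header. canary-by-header takes precedence over canary-weight, so the canary-weight rule is bypassed for requests whose header is set to always or never. All other requests still fall back to canary-weight.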

```yaml
# canary-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true" # canary must be enabled first to turn on canary releases
    nginx.ingress.kubernetes.io/canary-by-header: canary # split traffic based on the "canary" request header
    nginx.ingress.kubernetes.io/canary-weight: "30" # only used when the header is absent or is neither always nor never
spec:
  ingressClassName: nginx
  rules:
  - host: echo.172.29.9.60.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: canary
            port:
              number: 80
```

After updating the Ingress above, access the application's domain again, this time sending different header values with the request.

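Note: when the request header is set to never, the request is never sent to the Canary version. When it is set to always, the request is always sent there. For any other header value, the header is ignored and the request is evaluated against the other Canary rules in order of precedence.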
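The precedence described above can be modeled as a small decision function. This is a simplified sketch based on the rules stated in this section and in the ingress-nginx annotation documentation. It is not the controller's actual Lua code. It leaves out the cookie rule, which is covered later, and it takes the random draw as an argument so the result is deterministic. `canary_route` is a hypothetical name.

```bash
# Simplified, hypothetical model of the header/weight precedence (cookie rule omitted).
# Usage: canary_route <header> <header_value> <weight> <roll>
#   header        value of the request header named by canary-by-header ("" if absent)
#   header_value  canary-by-header-value annotation ("" if not set)
#   weight        canary-weight annotation, 0-100
#   roll          random integer 1-100 standing in for the per-request draw
canary_route() {
  local header=$1 header_value=$2 weight=$3 roll=$4
  if [ -n "$header" ]; then
    if [ -n "$header_value" ]; then
      # With canary-by-header-value set, only an exact match forces the Canary
      if [ "$header" = "$header_value" ]; then
        echo canary
        return
      fi
    elif [ "$header" = always ]; then
      echo canary
      return
    elif [ "$header" = never ]; then
      echo production
      return
    fi
  fi
  # Any other header value (or no header) falls through to canary-weight
  if [ "$roll" -le "$weight" ]; then
    echo canary
  else
    echo production
  fi
}
```

For example, `canary_route other-value "" 30 $((RANDOM % 100 + 1))` returns canary about 30% of the time. That matches the `canary: other-value` runs further down in this section.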

- Deploy and test:

```bash
[root@master1 canary]#kubectl apply -f canary-ingress.yaml
ingress.networking.k8s.io/canary configured
[root@master1 canary]#for i in $(seq 1 10); do curl -s -H "canary: never" echo.172.29.9.60.nip.io | grep "Hostname"; done
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
[root@master1 canary]#for i in $(seq 1 10); do curl -s -H "canary: always" echo.172.29.9.60.nip.io | grep "Hostname"; done
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
```

With the header canary: never, no request was sent to the Canary application.

With the header canary: always, every request was sent to the Canary application.

- What happens with any other value?

```bash
[root@master1 canary]#for i in $(seq 1 10); do curl -s -H "canary: other-value" echo.172.29.9.60.nip.io | grep "Hostname"; done
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
[root@master1 canary]#for i in $(seq 1 10); do curl -s -H "canary: other-value" echo.172.29.9.60.nip.io | grep "Hostname"; done
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
```

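Our request sets the header to canary: other-value, which is neither always nor never. ingress-nginx therefore evaluates the request against the other Canary rules by precedence, and here it falls through to the canary-weight: "30" rule.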

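- Building on the previous annotation (canary-by-header), we can now add the rule nginx.ingress.kubernetes.io/canary-by-header-value: user-value. With it, requests whose header carries exactly this value are routed to the service specified in the Canary Ingress.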

```yaml
# canary-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true" # canary must be enabled first to turn on canary releases
    nginx.ingress.kubernetes.io/canary-by-header-value: user-value # route to Canary when the header equals user-value
    nginx.ingress.kubernetes.io/canary-by-header: canary # split traffic based on the "canary" request header
    nginx.ingress.kubernetes.io/canary-weight: "30" # only used when the header is absent or does not match user-value
spec:
  ingressClassName: nginx
  rules:
  - host: echo.172.29.9.60.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: canary
            port:
              number: 80
```

- Update the Ingress object in the same way and access the application again. When the Request Header is canary: user-value, every request is routed to the Canary version:

```bash
[root@master1 canary]#kubectl apply -f canary-ingress.yaml
ingress.networking.k8s.io/canary configured
[root@master1 canary]#for i in $(seq 1 10); do curl -s -H "canary: user-value" echo.172.29.9.60.nip.io | grep "Hostname"; done
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
```

3. Cookie-based

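This annotation works much like the Request Header one. Take an A/B testing scenario where users located in Beijing should get the Canary version. Set the cookie annotation to nginx.ingress.kubernetes.io/canary-by-cookie: "users_from_Beijing". The backend can then inspect requests from logged-in users and, when a request comes from Beijing, set the cookie users_from_Beijing to always. This ensures that Beijing users only access the Canary version.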
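The backend check described above might look like the sketch below. It assumes the application already knows the user's region; how it determines that is outside the scope of this article. `beijing_canary_cookie` is a hypothetical helper that only builds the Set-Cookie header line. Users outside Beijing get no cookie, so their requests fall through to the remaining Canary rules.

```bash
# Hypothetical backend helper: build the Set-Cookie header for a logged-in user.
# $1 = the user's region as determined by the application.
beijing_canary_cookie() {
  if [ "$1" = "Beijing" ]; then
    # "always" makes ingress-nginx route this user's requests to the Canary version
    echo "Set-Cookie: users_from_Beijing=always; Path=/"
  fi
  # Other regions get no cookie, so the remaining rules (e.g. canary-weight) apply
}
```

To exercise the same path with curl, pass the cookie explicitly, as the tests below do with `curl -b "users_from_Beijing=always"`.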

- Likewise, update the Canary Ingress resource so that it splits traffic based on a cookie:

```yaml
# canary-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true" # canary must be enabled first to turn on canary releases
    #nginx.ingress.kubernetes.io/canary-by-header-value: user-value
    #nginx.ingress.kubernetes.io/canary-by-header: canary # split traffic based on a request header
    nginx.ingress.kubernetes.io/canary-by-cookie: "users_from_Beijing" # split traffic based on a cookie
    nginx.ingress.kubernetes.io/canary-weight: "30" # only used when the cookie is absent or is neither always nor never
spec:
  ingressClassName: nginx
  rules:
  - host: echo.172.29.9.60.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: canary
            port:
              number: 80
```

- After updating the Ingress above, set the cookie users_from_Beijing=always on the request and access the application's domain again.

```bash
[root@master1 canary]#kubectl apply -f canary-ingress.yaml
ingress.networking.k8s.io/canary configured
[root@master1 canary]#for i in $(seq 1 10); do curl -s -b "users_from_Beijing=always" echo.172.29.9.60.nip.io | grep "Hostname"; done
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
```

Every request was routed to the Canary version of the application.

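- If we set this cookie to never, requests are not routed to the Canary application. Note, however, that the commands below actually send the misspelled value `nerver`. Because that value is neither always nor never, ingress-nginx ignores the cookie and falls back to canary-weight: "30", which is why some responses still come from the Canary pod. With `users_from_Beijing=never`, every request would go to production.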

```bash
[root@master1 canary]#for i in $(seq 1 10); do curl -s -b "users_from_Beijing=nerver" echo.172.29.9.60.nip.io | grep "Hostname"; done
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
[root@master1 canary]#for i in $(seq 1 10); do curl -s -b "users_from_Beijing=nerver" echo.172.29.9.60.nip.io | grep "Hostname"; done
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
Hostname: production-856d5fb99-k6k4h
Hostname: canary-66cb497b7f-86mdj
```

Test complete. 😘

4. HTTPS

To access this application over HTTPS, the controller must listen on port 443, and serving HTTPS also requires a certificate.

1. Self-signed certificate with openssl

💘 Hands-on: Ingress-nginx: accessing our application over HTTPS (openssl) - 2022.11.27 (tested successfully)

- Lab environment

1. Win10 with VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd://1.5.5

- Lab software

Link: https://pan.baidu.com/s/1hKpD4bRPtYdaO3BeB7g8gQ?pwd=bi4d

Extraction code: bi4d

2023.3.14-Hands-on: Ingress-nginx HTTPS access to our application (openssl)-2022.11.27 (tested successfully)

1. Create a self-signed certificate with openssl

```bash
[root@master1 ~]#openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=foo.bar.com"
Generating a 2048 bit RSA private key
....................................+++
.............+++
writing new private key to 'tls.key'
-----
```

2. Reference the certificate files through a Secret object

```bash
# The Secret stores the certificate and key under the keys tls.crt and tls.key, whatever the source file names are
[root@master1 ~]#kubectl create secret tls foo-tls --cert=tls.crt --key=tls.key
secret/foo-tls created
[root@master1 ~]#kubectl get secrets foo-tls
NAME TYPE DATA AGE
foo-tls kubernetes.io/tls 2 13s
```

3. Create the application

Remember to create the application beforehand:

```yaml
# my-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  selector:
    matchLabels:
      app: my-nginx
  template:
    metadata:
      labels:
        app: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: my-nginx
  labels:
    app: my-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: my-nginx
```

Deploy:

```bash
[root@k8s-master1 tls]#kubectl apply -f my-nginx.yaml
deployment.apps/my-nginx1 created
service/my-nginx1 created
```

Check the application:

```bash
[root@master1 https-openssl]#kubectl get po,deploy,svc
NAME READY STATUS RESTARTS AGE
pod/my-nginx-7c4ff94949-zqlf8 1/1 Running 0 34s
NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/my-nginx 1/1 1 1 34s
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20d
service/my-nginx ClusterIP 10.104.187.51 <none> 80/TCP 34s
```

4. Write the Ingress

```yaml
# ingress-https.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-https
spec:
  ingressClassName: nginx
  tls: # TLS certificate configuration
  - hosts:
    - foo.bar.com
    secretName: foo-tls # name of the Secret that holds the certificate
  rules:
  - host: foo.bar.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: my-nginx
            port:
              number: 80
```

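Configure name resolution on the local PC:

Remember to add the domain to the hosts file on your own PC, located in C:\WINDOWS\System32\drivers\etc:

```
172.29.9.51 foo.bar.com # this must be the address of the node where ingress-nginx-controller runs
```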

5. Test

Deploy and test:

```bash
[root@master1 https]#kubectl apply -f ingress-https.yaml
ingress.networking.k8s.io/ingress-https created
[root@master1 https]#kubectl get ingress
NAME CLASS HOSTS ADDRESS PORTS AGE
ingress-https nginx foo.bar.com 172.29.9.51 80, 443 6s
```
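You can derive the hosts entry from the `kubectl get ingress` output instead of typing it by hand. Below is a minimal sketch. It assumes the default column layout shown above (NAME, CLASS, HOSTS, ADDRESS, ...) and skips rows with no address or with several addresses. `ingress_hosts_entries` is a hypothetical helper name.

```bash
# Turn `kubectl get ingress` output (read from stdin) into hosts-file lines "<address> <host>".
# Column 3 is HOSTS (comma-separated), column 4 is ADDRESS; header and rows
# without a single plain IPv4 address are skipped.
ingress_hosts_entries() {
  awk 'NR > 1 && $4 ~ /^[0-9.]+$/ {
    n = split($3, hosts, ",")
    for (i = 1; i <= n; i++) print $4, hosts[i]
  }'
}
```

Usage: `kubectl get ingress | ingress_hosts_entries`, then append the result to the hosts file. You can also skip the hosts file entirely and test from the command line with `curl -k --resolve foo.bar.com:443:172.29.9.51 https://foo.bar.com/`. The `-k` flag is needed because the certificate is self-signed.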

Verify in a browser on your own PC:

Test complete. 😘

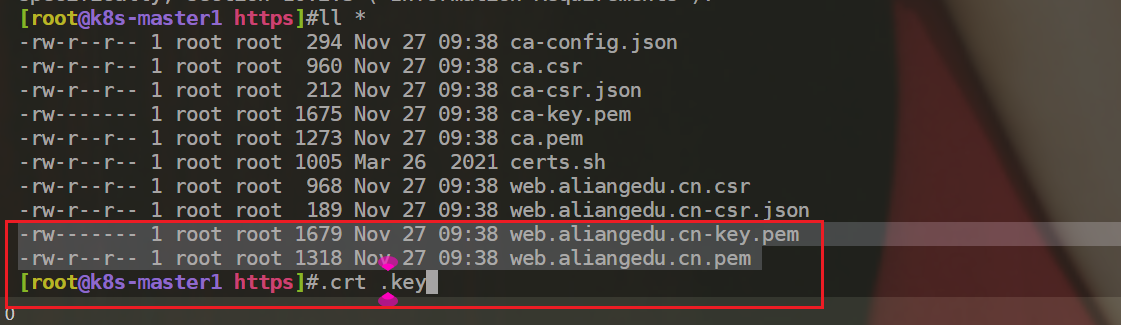

2. Self-signed certificate with cfssl

💘 Hands-on: Ingress-nginx: accessing our application over HTTPS (cfssl) - 2023.1.2 (tested successfully)

- Lab environment

1. Win10 with VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd://1.5.5

- Lab software

Link: https://pan.baidu.com/s/13nee2xk30Y8-z9TdpuZOuA?pwd=9zzp

Extraction code: 9zzp

2023.1.5-cfgssl package

1. Install the cfssl tools

- Upload the cfssl tool package and the script to the server:

```bash
[root@k8s-master1 ~]#ls -lh cfssl.tar.gz
-rw-r--r-- 1 root root 5.6M Nov 25 2019 cfssl.tar.gz
-rw-r--r-- 1 root root 1005 Mar 26 2021 certs.sh
[root@k8s-master1 ~]#tar tvf cfssl.tar.gz
-rwxr-xr-x root/root 10376657 2019-11-25 06:36 cfssl
-rwxr-xr-x root/root 6595195 2019-11-25 06:36 cfssl-certinfo
-rwxr-xr-x root/root 2277873 2019-11-25 06:36 cfssljson
[root@k8s-master1 ~]#tar xf cfssl.tar.gz -C /usr/bin/
```

- Verify:

```bash
[root@k8s-master1 ~]#cfssl --help
Usage:
Available commands:
    bundle
    certinfo
    ocspsign
    selfsign
    scan
    print-defaults
    sign
    gencert
    ocspdump
    version
    genkey
    gencrl
    ocsprefresh
    info
    serve
    ocspserve
    revoke
Top-level flags:
  -allow_verification_with_non_compliant_keys
        Allow a SignatureVerifier to use keys which are technically non-compliant with RFC6962.
  -loglevel int
        Log level (0 = DEBUG, 5 = FATAL) (default 1)
```

2. Generate the certificates

- Create a test directory:

```bash
[root@k8s-master1 ~]#mkdir https
[root@k8s-master1 ~]#cd https/
```

- Move the certificate generation script into the directory you just created:

```bash
[root@k8s-master1 ~]#mv certs.sh https/
[root@k8s-master1 ~]#ls https/
certs.sh
[root@k8s-master1 ~]#cd https/
[root@k8s-master1 https]#cat certs.sh
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

cat > web.aliangedu.cn-csr.json <<EOF
{
  "CN": "web.aliangedu.cn",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes web.aliangedu.cn-csr.json | cfssljson -bare web.aliangedu.cn
```


- Run the script to generate the certificates:

```bash
[root@k8s-master1 https]#sh certs.sh
2022/11/27 09:38:30 [INFO] generating a new CA key and certificate from CSR
2022/11/27 09:38:30 [INFO] generate received request
2022/11/27 09:38:30 [INFO] received CSR
2022/11/27 09:38:30 [INFO] generating key: rsa-2048
2022/11/27 09:38:30 [INFO] encoded CSR
2022/11/27 09:38:30 [INFO] signed certificate with serial number 42920572197673510025121729381310395494775886689
2022/11/27 09:38:30 [INFO] generate received request
2022/11/27 09:38:30 [INFO] received CSR
2022/11/27 09:38:30 [INFO] generating key: rsa-2048
2022/11/27 09:38:30 [INFO] encoded CSR
2022/11/27 09:38:30 [INFO] signed certificate with serial number 265650157446309871110524021899155707215940024732
2022/11/27 09:38:30 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").
[root@k8s-master1 https]#ll *
-rw-r--r-- 1 root root 294 Nov 27 09:38 ca-config.json
-rw-r--r-- 1 root root 960 Nov 27 09:38 ca.csr
-rw-r--r-- 1 root root 212 Nov 27 09:38 ca-csr.json
-rw------- 1 root root 1675 Nov 27 09:38 ca-key.pem
-rw-r--r-- 1 root root 1273 Nov 27 09:38 ca.pem
-rw-r--r-- 1 root root 1005 Mar 26 2021 certs.sh
-rw-r--r-- 1 root root 968 Nov 27 09:38 web.aliangedu.cn.csr
-rw-r--r-- 1 root root 189 Nov 27 09:38 web.aliangedu.cn-csr.json
-rw------- 1 root root 1679 Nov 27 09:38 web.aliangedu.cn-key.pem # certificate private key
-rw-r--r-- 1 root root 1318 Nov 27 09:38 web.aliangedu.cn.pem # certificate
[root@k8s-master1 https]#
```

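- Note: the file extensions here are .pem rather than the .crt and .key used in the openssl example. `kubectl create secret tls` accepts any file names through --cert and --key.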
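The cfssl WARNING above appears because `web.aliangedu.cn-csr.json` has an empty `hosts` list, so the certificate carries no Subject Alternative Name. Current browsers generally check the domain against the SANs rather than the CN. One possible variant of that step in certs.sh lists the domain in `hosts` and leaves everything else unchanged. This is a sketch, not the author's tested script:

```bash
# Variant of the web.aliangedu.cn CSR from certs.sh with the domain listed in "hosts",
# so that cfssl writes it into the certificate's Subject Alternative Name extension.
cat > web.aliangedu.cn-csr.json <<EOF
{
  "CN": "web.aliangedu.cn",
  "hosts": ["web.aliangedu.cn"],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF
```

After that, rerun the same command as in certs.sh: `cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes web.aliangedu.cn-csr.json | cfssljson -bare web.aliangedu.cn`. Then recreate the Secret. The browser will still show a warning unless the self-made CA (ca.pem) is trusted on the client.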

3. Create the Secret

- Create the Secret:

```bash
kubectl create secret tls web-aliangedu-cn --cert=web.aliangedu.cn.pem --key=web.aliangedu.cn-key.pem
```

- Check it:

```bash
[root@k8s-master1 https]#kubectl create secret tls web-aliangedu-cn --cert=web.aliangedu.cn.pem --key=web.aliangedu.cn-key.pem
secret/web-aliangedu-cn created
[root@k8s-master1 https]#kubectl get secrets
NAME TYPE DATA AGE
default-token-xkms9 kubernetes.io/service-account-token 3 72d
web-aliangedu-cn kubernetes.io/tls 2 4s
```

4. Deploy the application

```yaml
# my-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  selector:
    matchLabels:
      app: my-nginx
  template:
    metadata:
      labels:
        app: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: my-nginx
  labels:
    app: my-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    name: http
  selector:
    app: my-nginx
```

- Deploy and check:

```bash
[root@k8s-master1 ~]#kubectl apply -f my-nginx.yaml
[root@k8s-master1 ~]#kubectl get po
NAME READY STATUS RESTARTS AGE
my-nginx-7c4ff94949-zg5zp 1/1 Running 0 82s
[root@k8s-master1 ~]#kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 72d
my-nginx ClusterIP 10.103.251.223 <none> 80/TCP 34d
```

5. Create the Ingress

```yaml
# ingress-https.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-https
spec:
  ingressClassName: nginx
  tls: # TLS certificate configuration
  - hosts:
    - web.aliangedu.cn
    secretName: web-aliangedu-cn # name of the Secret that holds the certificate
  rules:
  - host: web.aliangedu.cn
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: my-nginx
            port:
              number: 80
```

- Create and check:

```bash
[root@k8s-master1 ~]#kubectl apply -f ingress-https.yaml
ingress.networking.k8s.io/ingress-https created
[root@k8s-master1 ~]#kubectl get ingress
NAME CLASS HOSTS ADDRESS PORTS AGE
ingress-https nginx web.aliangedu.cn 80, 443 5s
```

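6. Verify

Open the site in a browser; it is now served over HTTPS:

https://web.aliangedu.cn/

- Note: a certificate only covers the domain name(s) it was issued for, so the certificate and the domain must match.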

Test complete. 😘

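Besides self-signed certificates and certificates bought from a recognized CA, we can also use tools that issue valid certificates automatically. cert-manager is an open-source, cloud-native certificate management project that provides HTTPS certificates in a Kubernetes cluster and renews them automatically. It supports issuers such as Let's Encrypt (free certificates) and HashiCorp Vault. In Kubernetes, combining Ingress with Let's Encrypt gives automated HTTPS for externally exposed services.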

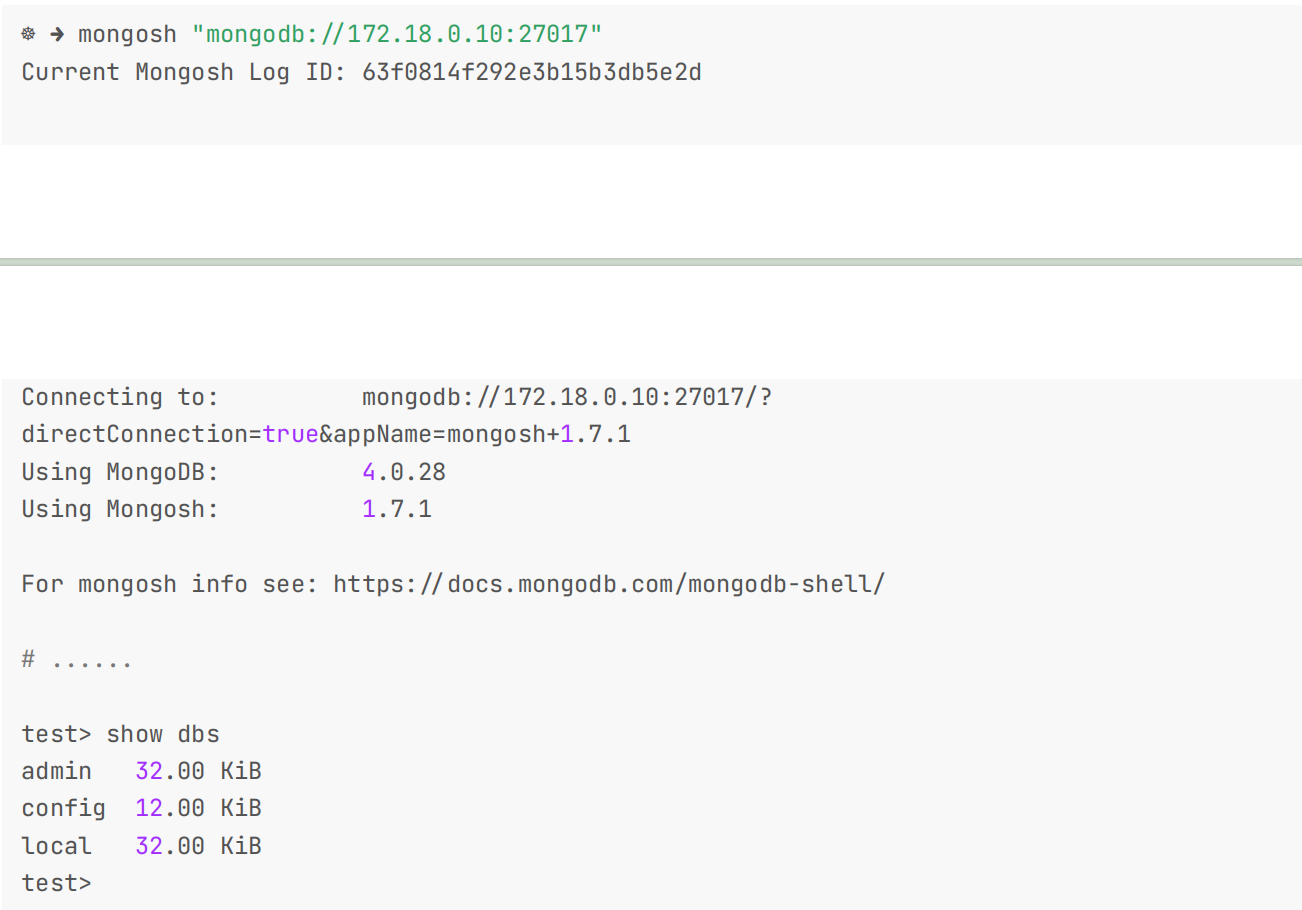

5. TCP and UDP

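The Ingress resource has no direct support for TCP or UDP services. To support them in ingress-nginx, add the --tcp-services-configmap and --udp-services-configmap flags to the controller's startup arguments, each pointing to a ConfigMap. In that ConfigMap, the key is the external port to use and the value is the service to expose, in the format <namespace/service name>:<service port>:[PROXY]:[PROXY]. The port can be a port number or a port name. The last two fields are optional and configure the PROXY protocol: decoding on the listening side and/or encoding toward the upstream, respectively.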
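To make the value format above concrete, here is a hypothetical helper that splits one ConfigMap entry into its parts. It is a sketch based only on the format described in this section (first PROXY field = decode on listen, second = encode toward the upstream). It does not reproduce the controller's own parser and does no validation.

```bash
# Hypothetical helper: split one tcp-services/udp-services ConfigMap entry, format
#   <external port>: <namespace/service name>:<service port>:[PROXY]:[PROXY]
# Usage: parse_tcp_entry <key> <value>
parse_tcp_entry() {
  local key=$1 value=$2
  local target=${value%%:*}   # "<namespace>/<service>"
  local rest=${value#*:}      # "<service port>[:PROXY][:PROXY]"
  local ns=${target%%/*} svc=${target#*/}
  local port=${rest%%:*}
  local decode=no encode=no
  case "$rest" in
    *:PROXY:PROXY) decode=yes encode=yes ;;  # both fields set
    *::PROXY) encode=yes ;;                   # only the second field set
    *:PROXY) decode=yes ;;                    # only the first field set
  esac
  echo "listen=$key namespace=$ns service=$svc port=$port proxy_decode=$decode proxy_encode=$encode"
}
```

For the MongoDB example in this section, `parse_tcp_entry 27017 default/mongo:27017` reports that port 27017 on the controller forwards to port 27017 of the `mongo` Service in `default`, with no PROXY protocol.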

💘 Hands-on: Ingress-nginx TCP - 2023.3.15 (tested successfully)

- Steps

- Lab environment

1. Win10 with VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd: v1.5.5

- Lab software

Link: https://pan.baidu.com/s/12TEiSjUNKVt4or1TNSfEhg?pwd=0w34

Extraction code: 0w34

2023.3.15-Hands-on: Ingress-nginx TCP-2023.3.15 (tested successfully)

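- Prerequisites

The ingress-nginx environment is installed, with the ingress-nginx Service of type LoadBalancer.

MetalLB is deployed. You could skip MetalLB and access services through domain:NodePort instead; here we use the LB for convenience.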

For deploying ingress-nginx, see (CSDN):

https://blog.csdn.net/weixin_39246554/article/details/129334116?spm=1001.2014.3001.5501

For deploying MetalLB, see (CSDN):

https://blog.csdn.net/weixin_39246554/article/details/129343617?spm=1001.2014.3001.5501

⚠️ Note:

ingress-nginx is deployed as a DaemonSet, so once MetalLB is deployed the Ingress can be reached normally through all 3 nodes.

- Note: in the current test environment, the EXTERNAL-IP of ingress-nginx is 172.29.9.60.

```bash
[root@master1 canary]#kubectl get svc -ningress-nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
ingress-nginx-controller LoadBalancer 10.108.58.246 172.29.9.60 80:32439/TCP,443:31347/TCP 2d15h
ingress-nginx-controller-admission ClusterIP 10.101.184.28 <none> 443/TCP 2d15h
```

1. Deploy the MongoDB service

- Suppose we want to expose a MongoDB service through ingress-nginx. First create the following application:

```yaml
# mongo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongo
  labels:
    app: mongo
spec:
  selector:
    matchLabels:
      app: mongo
  template:
    metadata:
      labels:
        app: mongo
    spec:
      volumes:
      - name: data
        emptyDir: {}
      containers:
      - name: mongo
        image: mongo:4.0
        ports:
        - containerPort: 27017
        volumeMounts:
        - name: data
          mountPath: /data/db
---
apiVersion: v1
kind: Service
metadata:
  name: mongo
spec:
  selector:
    app: mongo
  ports:
  - port: 27017
```

- Create the resources above directly:

```bash
[root@master1 TCP]#kubectl apply -f mongo.yaml
deployment.apps/mongo created
service/mongo created
[root@master1 TCP]#kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20d
mongo ClusterIP 10.99.220.83 <none> 27017/TCP 20s
[root@master1 TCP]#kubectl get po -l app=mongo
NAME READY STATUS RESTARTS AGE
mongo-7885fb6bd4-gpbxz 1/1 Running 0 42s
```

2. Create a ConfigMap

- To expose the MongoDB service above through ingress-nginx, create a ConfigMap like the following:

```yaml
# tcp-ingress-ConfigMap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-tcp
  namespace: ingress-nginx
data:
  "27017": default/mongo:27017 # <external port>: <namespace>/<service>:<service port>
```

Deploy:

```bash
[root@master1 TCP]#kubectl apply -f tcp-ingress-ConfigMap.yaml
configmap/ingress-nginx-tcp created
# After applying, the ConfigMap named ingress-nginx-tcp exists, as shown below:
[root@master1 TCP]#kubectl get cm -ningress-nginx
NAME DATA AGE
……
ingress-nginx-tcp 1 15s
```

3. Configure the ingress-nginx startup arguments

- Then add --tcp-services-configmap=$(POD_NAMESPACE)/ingress-nginx-tcp to the ingress-nginx startup arguments:

```bash
[root@master1 ingress-nginx部署]#vim deploy.yaml
……
        - --tcp-services-configmap=$(POD_NAMESPACE)/ingress-nginx-tcp
……
```


4. Expose the TCP port through the Service

The ingress-nginx installed here is exposed through a LoadBalancer Service, so the TCP port also has to be exposed through that Service. Update the ingress-nginx Service object as follows:

```bash
[root@master1 ingress-nginx部署]#vim deploy.yaml
……
  - name: mongo # expose port 27017
    port: 27017
    protocol: TCP
    targetPort: 27017
……
```

- After editing, redeploy:

```bash
[root@master1 ingress-nginx部署]#kubectl apply -f deploy.yaml
namespace/ingress-nginx unchanged
serviceaccount/ingress-nginx unchanged
serviceaccount/ingress-nginx-admission unchanged
role.rbac.authorization.k8s.io/ingress-nginx unchanged
role.rbac.authorization.k8s.io/ingress-nginx-admission unchanged
clusterrole.rbac.authorization.k8s.io/ingress-nginx unchanged
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission unchanged
rolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged
configmap/ingress-nginx-controller unchanged
service/ingress-nginx-controller unchanged
service/ingress-nginx-controller-admission unchanged
daemonset.apps/ingress-nginx-controller configured
job.batch/ingress-nginx-admission-create unchanged
job.batch/ingress-nginx-admission-patch unchanged
ingressclass.networking.k8s.io/nginx unchanged
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission configured
[root@master1 ingress-nginx部署]#kubectl get po -ningress-nginx -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
ingress-nginx-admission-create--1-5h6rr 0/1 Completed 0 3d6h 10.244.1.25 node1 <none> <none>
ingress-nginx-admission-patch--1-jdn2k 0/1 Completed 0 3d6h 10.244.2.18 node2 <none> <none>
ingress-nginx-controller-7hzwd 1/1 Running 0 95s 10.244.2.25 node2 <none> <none>
ingress-nginx-controller-s9psd 1/1 Running 0 30s 10.244.0.3 master1 <none> <none>
ingress-nginx-controller-tf2mp 1/1 Running 0 63s 10.244.1.29 node1 <none> <none>
[root@master1 ingress-nginx部署]#kubectl get svc ingress-nginx-controller -n ingress-nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
ingress-nginx-controller LoadBalancer 10.108.58.246 172.29.9.60 80:32439/TCP,443:31347/TCP,27017:32608/TCP 3d6h
```

5. Verify

- We can now reach the Mongo service through port 27017 exposed by ingress-nginx:

```bash
[root@master1 TCP]#kubectl exec -it mongo-7885fb6bd4-gpbxz -- bash
root@mongo-7885fb6bd4-gpbxz:/# mongo --host 172.29.9.60 --port 27017
MongoDB shell version v4.0.27
connecting to: mongodb://172.29.9.60:27017/?gssapiServiceName=mongodb
Implicit session: session { "id" : UUID("6656a2e9-0ff5-40ba-99d5-68efdad06043") }
MongoDB server version: 4.0.27
Welcome to the MongoDB shell.
For interactive help, type "help".
For more comprehensive documentation, see
        http://docs.mongodb.org/
Questions? Try the support group
        http://groups.google.com/group/mongodb-user
Server has startup warnings:
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten]
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten] ** WARNING: Access control is not enabled for the database.
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten] **          Read and write access to data and configuration is unrestricted.
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten]
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten]
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten] **        We suggest setting it to 'never'
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten]
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten] **        We suggest setting it to 'never'
2023-03-14T13:21:58.361+0000 I CONTROL  [initandlisten]
---
Enable MongoDB's free cloud-based monitoring service, which will then receive and display
metrics about your deployment (disk utilization, CPU, operation statistics, etc).

The monitoring data will be available on a MongoDB website with a unique URL accessible to you
and anyone you share the URL with. MongoDB may use this information to make product
improvements and to suggest MongoDB products and deployment options to you.

To enable free monitoring, run the following command: db.enableFreeMonitoring()
To permanently disable this reminder, run the following command: db.disableFreeMonitoring()
---
> show dbs
admin   0.000GB
config  0.000GB
local   0.000GB
>
```

- We can now reach the Mongo service through the LB address 172.29.9.60 on the exposed port 27017. For example, with the MongoDB client mongosh installed on a node, the command `mongosh "mongodb://172.29.9.60:27017"` connects to our Mongo service:

Note: I did not install the MongoDB client mongosh here, so this step is kept from the original notes only.
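Without a MongoDB client on the node, you can at least confirm that the port exposed by ingress-nginx accepts TCP connections. Below is a minimal sketch using bash's built-in `/dev/tcp` redirection and the coreutils `timeout` command. `port_open` is a hypothetical helper name. It only proves the port is reachable and does not speak the MongoDB protocol.

```bash
# Succeed if a TCP connection to host:port can be opened within 3 seconds.
# Uses bash's /dev/tcp pseudo-device; errors (refused, unreachable) are silenced.
# Usage: port_open <host> <port>
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}
```

Usage: `port_open 172.29.9.60 27017 && echo "27017 is reachable through ingress-nginx"`.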

- Likewise, we can inspect the generated nginx.conf configuration file:

```bash
[root@master1 ~]#kubectl get po -ningress-nginx
NAME READY STATUS RESTARTS AGE
ingress-nginx-admission-create--1-5h6rr 0/1 Completed 0 3d20h
ingress-nginx-admission-patch--1-jdn2k 0/1 Completed 0 3d20h
ingress-nginx-controller-7hzwd 1/1 Running 0 14h
ingress-nginx-controller-s9psd 1/1 Running 0 14h
ingress-nginx-controller-tf2mp 1/1 Running 0 14h
[root@master1 ~]#kubectl exec ingress-nginx-controller-tf2mp -ningress-nginx -- cat /etc/nginx/nginx.conf
......
stream {
    ……
    # TCP services
    server {
        preread_by_lua_block {
            ngx.var.proxy_upstream_name="tcp-default-mongo-27017";
        }
        listen 27017;
        listen [::]:27017;
        proxy_timeout 600s;
        proxy_next_upstream on;
        proxy_next_upstream_timeout 600s;
        proxy_next_upstream_tries 3;
        proxy_pass upstream_balancer;
    }
    # UDP services
    # Stream Snippets
```

The TCP-related configuration lives in the stream block.

Test complete. 😘

Nginx has provided UDP load balancing since version 1.9.13, so ingress-nginx can proxy UDP services too. For example, to expose the kube-dns service, create a ConfigMap like the following:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-udp # must match the name passed to --udp-services-configmap
  namespace: ingress-nginx
data:
  "53": "kube-system/kube-dns:53"
```

Then add --udp-services-configmap=$(POD_NAMESPACE)/ingress-nginx-udp to the ingress-nginx arguments, expose port 53 (protocol UDP) in the Service as well, and re-apply.

The procedure is the same as for TCP, so it is omitted here.

6. Global configuration

💘 Hands-on: Ingress-nginx global configuration - 2023.3.15 (tested successfully)

- Steps

- Lab environment

1. Win10 with VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd: v1.5.5

- Lab software

None.

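- Prerequisites

The ingress-nginx environment is installed, with the ingress-nginx Service of type LoadBalancer.

MetalLB is deployed. You could skip MetalLB and access services through domain:NodePort instead; here we use the LB for convenience.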

For deploying ingress-nginx, see (CSDN):

https://blog.csdn.net/weixin_39246554/article/details/129334116?spm=1001.2014.3001.5501

For deploying MetalLB, see (CSDN):

https://blog.csdn.net/weixin_39246554/article/details/129343617?spm=1001.2014.3001.5501

⚠️ Note:

ingress-nginx is deployed as a DaemonSet, so once MetalLB is deployed the Ingress can be reached normally through all 3 nodes.

- Note: in the current test environment, the EXTERNAL-IP of ingress-nginx is 172.29.9.60.

```bash
[root@master1 canary]#kubectl get svc -ningress-nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
ingress-nginx-controller LoadBalancer 10.108.58.246 172.29.9.60 80:32439/TCP,443:31347/TCP 2d15h
ingress-nginx-controller-admission ClusterIP 10.101.184.28 <none> 443/TCP 2d15h
```

1. View the default ingress-nginx ConfigMap

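Besides customizing a specific Ingress with annotations, we can also configure ingress-nginx globally. The controller's --configmap startup flag points to a global ConfigMap object, and global settings can be defined directly in that object: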

```bash
[root@master1 ~]#kubectl get po -ningress-nginx
NAME READY STATUS RESTARTS AGE
ingress-nginx-admission-create--1-5h6rr 0/1 Completed 0 4d3h
ingress-nginx-admission-patch--1-jdn2k 0/1 Completed 0 4d3h
ingress-nginx-controller-7hzwd 1/1 Running 0 20h
ingress-nginx-controller-s9psd 1/1 Running 0 20h
ingress-nginx-controller-tf2mp 1/1 Running 0 20h
[root@master1 ~]#kubectl edit pod ingress-nginx-controller-7hzwd -ningress-nginx
……
  containers:
  - args:
    - /nginx-ingress-controller
    - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
......
[root@master1 ~]#kubectl get cm ingress-nginx-controller -ningress-nginx
NAME DATA AGE
ingress-nginx-controller 1 4d3h
[root@master1 ~]#kubectl get cm ingress-nginx-controller -ningress-nginx -oyaml
apiVersion: v1
data:
  allow-snippet-annotations: "true" # here
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"allow-snippet-annotations":"true"},"kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/component":"controller","app.kubernetes.io/instance":"ingress-nginx","app.kubernetes.io/name":"ingress-nginx","app.kubernetes.io/part-of":"ingress-nginx","app.kubernetes.io/version":"1.5.1"},"name":"ingress-nginx-controller","namespace":"ingress-nginx"}}
  creationTimestamp: "2023-03-11T07:05:30Z"
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.5.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
  resourceVersion: "228849"
  uid: 43e910ef-4b42-433a-9c1b-bd62214731c2
```

2. Configure the default ingress-nginx ConfigMap

- For example, we can add some commonly used settings like these:

[root@master1 ~]#kubectl edit configmap ingress-nginx-controller -n ingress-nginx

apiVersion: v1
data:
  allow-snippet-annotations: "true"
  client-header-buffer-size: 32k # note: hyphens, not underscores
  proxy-body-size: 5m # the ConfigMap key for nginx's client_max_body_size
  use-gzip: "true"
  gzip-level: "7"
  large-client-header-buffers: 4 32k
  proxy-connect-timeout: "11" # integer seconds, no unit suffix
  proxy-read-timeout: "12" # integer seconds, no unit suffix
  keep-alive: "75" # client keep-alive timeout in seconds; connection reuse raises QPS, generally recommended in production
  keep-alive-requests: "100"
  upstream-keepalive-connections: "10000"
  upstream-keepalive-requests: "100"
  upstream-keepalive-timeout: "60"
  disable-ipv6: "true"
  disable-ipv6-dns: "true"
  max-worker-connections: "65535"
  max-worker-open-files: "10240"
kind: ConfigMap

......
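The same settings can also be applied without an interactive editor through kubectl patch with a JSON merge patch (--type merge). Because ConfigMap data only accepts string values, numbers and booleans must be quoted, which is why the YAML above writes "true" and "7". A minimal sketch: the hypothetical helper cm_patch_json turns key=value arguments into such a patch, emitting every value as a JSON string.

```shell
# cm_patch_json (hypothetical helper): build a merge patch for ConfigMap data
# from key=value arguments; every value is emitted as a JSON string.
# Note: uses global variables (body, kv, key, val) since POSIX sh has no "local".
cm_patch_json() {
  body=""
  for kv in "$@"; do
    key=${kv%%=*}   # text before the first "="
    val=${kv#*=}    # text after the first "=" (may itself contain "=")
    # escape backslashes and double quotes so the value stays valid JSON
    val=$(printf '%s' "$val" | sed 's/\\/\\\\/g; s/"/\\"/g')
    body="${body:+$body,}\"$key\":\"$val\""
  done
  printf '{"data":{%s}}\n' "$body"
}
```

Usage: kubectl patch configmap ingress-nginx-controller -n ingress-nginx --type merge -p "$(cm_patch_json use-gzip=true gzip-level=7)". A merge patch only adds or overwrites the listed keys, so existing entries such as allow-snippet-annotations are left untouched.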

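3. Verify

- After the change is saved, the Nginx configuration is reloaded automatically. We can check the controller logs and the nginx.conf file to verify: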

[root@master1 ~]#kubectl logs ingress-nginx-controller-7hzwd -ningress-nginx

I0315 10:29:19.809867 7 controller.go:168] "Configuration changes detected, backend reload required"

I0315 10:29:20.201208 7 controller.go:185] "Backend successfully reloaded"

I0315 10:29:20.210370 7 event.go:285] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-7hzwd", UID:"eef199ea-1f92-48ab-8ffb-10f252d3b2da", APIVersion:"v1", ResourceVersion:"277964", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration

[root@master1 ~]#

[root@master1 ~]#kubectl exec -it ingress-nginx-controller-7hzwd -n ingress-nginx -- cat /etc/nginx/nginx.conf |grep large_client_header_buffers

large_client_header_buffers 4 32k;

[root@master1 ~]#
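Grepping for one directive works for a single setting; to check several, a small helper avoids false mismatches caused by differences in indentation or spacing in the generated nginx.conf. A minimal sketch, assuming a POSIX shell: the hypothetical has_directive normalizes leading, trailing and repeated whitespace, then looks for an exact "NAME VALUE;" line.

```shell
# has_directive NAME VALUE (hypothetical helper): read nginx.conf on stdin and
# succeed if a line "NAME VALUE;" exists, ignoring indentation and runs of
# spaces or tabs between the directive and its value.
has_directive() {
  want="$1 $2;"
  sed 's/^[[:space:]]*//; s/[[:space:]]*$//; s/[[:space:]][[:space:]]*/ /g' |
    grep -Fqx -- "$want"
}
```

Usage: kubectl exec ingress-nginx-controller-7hzwd -n ingress-nginx -- cat /etc/nginx/nginx.conf | has_directive large_client_header_buffers '4 32k' && echo applied. For other keys the directive name can differ from the ConfigMap key by more than the hyphens (gzip-level, for instance, is rendered as gzip_comp_level), so look up the exact name in the generated file first.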

The ab (ApacheBench) test results were as follows:

- Without the configuration: [figure]

- With the configuration: [figure]
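The post does not record the ab command that produced these results. As a hedged sketch of how such a before/after comparison could be repeated: ab's -k (keep-alive), -n (number of requests), -c (concurrency) and -H (extra header) options drive the test, and the hypothetical rps helper extracts the "Requests per second" figure from ab's report so two runs can be compared. The host name and request counts below are assumptions.

```shell
# rps (hypothetical helper): read ApacheBench (ab) output on stdin and print
# the mean "Requests per second" value (the 4th field of that line).
rps() {
  awk '/^Requests per second:/ { print $4 }'
}
```

Usage: ab -k -n 10000 -c 100 -H 'Host: demo.example.com' http://<EXTERNAL-IP>/ | rps, where demo.example.com stands for an Ingress host served by the controller and <EXTERNAL-IP> for the controller's LoadBalancer address. Run it once before and once after editing the ConfigMap.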

Test complete. 😘

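In addition, we usually need to tune the nodes that run ingress-nginx by adjusting some kernel parameters to suit Nginx's workload. This is normally done by changing the kernel parameters on each node directly; to manage them in one place, we can use initContainers instead:

(For tuning guidance, see the official NGINX blog post https://www.nginx.com/blog/tuning-nginx/.)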

initContainers:
- command:
  - /bin/sh
  - -c
  - |
    mount -o remount rw /proc/sys
    sysctl -w net.core.somaxconn=65535 # adjust the actual values to your environment
    sysctl -w net.ipv4.tcp_tw_reuse=1
    sysctl -w net.ipv4.ip_local_port_range="1024 65535"
    sysctl -w fs.file-max=1048576
    sysctl -w fs.inotify.max_user_instances=16384
    sysctl -w fs.inotify.max_user_watches=524288
    sysctl -w fs.inotify.max_queued_events=16384
  image: busybox
  imagePullPolicy: IfNotPresent
  name: init-sysctl
  securityContext:
    capabilities:
      add:
      - SYS_ADMIN
      drop:
      - ALL

......

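Once deployed, the initContainer applies these kernel parameters before the controller starts. Network parameters such as net.core.somaxconn are namespaced, so they take effect in the Pod's network namespace (or on the node if the Pod uses hostNetwork), while global ones such as fs.file-max change the node itself. For production environments, tuning the node kernel parameters accordingly is recommended.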
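To confirm the values after the Pod starts, the parameters can be read from /proc/sys inside the controller container, since the init container and the controller share the Pod's network namespace. A minimal sketch: the hypothetical sysctl_path helper maps a sysctl key to its /proc/sys file so it can be read with plain cat; it assumes no component of the key itself contains a dot.

```shell
# sysctl_path KEY (hypothetical helper): map a sysctl key such as
# net.core.somaxconn to its file under /proc/sys.
# Assumes no path component contains a dot (e.g. VLAN interface names would break it).
sysctl_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}
```

Usage: for k in net.core.somaxconn net.ipv4.tcp_tw_reuse fs.file-max; do printf '%s = ' "$k"; kubectl exec ingress-nginx-controller-7hzwd -n ingress-nginx -- cat "$(sysctl_path "$k")"; done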

Performance tuning requires considerable experience; for Nginx performance tuning, see https://cloud.tencent.com/developer/article/1026833.

7. gRPC

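The ingress-nginx controller also supports gRPC services. gRPC is a high-performance RPC framework open-sourced by Google. It uses Protocol Buffers as its IDL and works across platforms written in different languages. gRPC is built on HTTP/2, which provides multiplexing, header compression, flow control and other features that greatly improve communication efficiency between client and server. In a gRPC service, a client application can call methods of a server application on a different machine as if they were local methods, which makes it easy to build distributed applications and services. Like other RPC frameworks, gRPC requires defining a service interface that specifies the remotely callable methods and their return types. The server implements this interface and runs a gRPC server to handle client requests.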

💘 Lab: Ingress-nginx gRPC-2023.3.16 (tested successfully)

- Lab steps

- Lab environment:

1. Windows 10, VMware Workstation VMs;

2. k8s cluster: 3 CentOS 7.6 1810 VMs, 1 master node and 2 worker nodes

k8s version: v1.22.2

containerd: v1.5.5

- Lab software

Link: https://pan.baidu.com/s/1eqLLhYrl7_Q1nPGfDLUxGg?pwd=tbdz

Extraction code: tbdz

2023.3.16-Lab: Ingress-nginx gRPC-2023.3.16 (tested successfully)

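- Prerequisites

ingress-nginx is installed (the ingress-nginx Service is of type LoadBalancer);

MetalLB is deployed (you could also skip MetalLB and access the service directly via domain:NodePort; here we use the LoadBalancer for convenience).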

For deploying ingress-nginx, see:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129334116?spm=1001.2014.3001.5501

For deploying MetalLB, see:

CSDN link:

https://blog.csdn.net/weixin_39246554/article/details/129343617?spm=1001.2014.3001.5501

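⚠️ Note:

Ingress-nginx is deployed as a DaemonSet, so once MetalLB is deployed the ingress can be reached normally on all 3 nodes.

- Note: in the current test environment, the EXTERNAL-IP of ingress-nginx is 172.29.9.60.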

[root@master1 canary]#kubectl get svc -ningress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.108.58.246 172.29.9.60 80:32439/TCP,443:31347/TCP 2d15h

ingress-nginx-controller-admission ClusterIP 10.101.184.28 <none> 443/TCP 2d15h
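Without MetalLB, the prerequisites above mention reaching the ingress through domain:NodePort instead. The NodePort for HTTP is the number after "80:" in the PORT(S) column (32439 in this output). A minimal sketch, with the hypothetical helper nodeport_for extracting it from that column:

```shell
# nodeport_for PORT PORTS (hypothetical helper): given a PORT(S) value such as
# "80:32439/TCP,443:31347/TCP", print the NodePort mapped to PORT (empty if none).
nodeport_for() {
  port=$1
  printf '%s\n' "$2" | tr ',' '\n' | sed -n "s#^$port:\([0-9]*\)/.*#\1#p"
}
```

Usage: nodeport_for 80 "$(kubectl get svc ingress-nginx-controller -n ingress-nginx --no-headers | awk '{print $5}')". kubectl can also return the value directly with -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'.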

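1. Build the image

- We use the gRPC example application https://github.com/grpc/grpc-go/blob/master/examples/features/reflection/server/main.go for this demonstration. First, the application needs to be built into a container image, which can be done with the Dockerfile below (here we simply use a ready-made image):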

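# Build stage: download the upstream reflection example server and compile it
FROM golang:buster AS build
WORKDIR /go/src/greeter-server
RUN curl -o main.go https://raw.githubusercontent.com/grpc/grpc-go/master/examples/features/reflection/server/main.go && \
    go mod init greeter-server && \
    go mod tidy && \
    go build -o /greeter-server main.go

# Runtime stage: minimal distroless image containing only the compiled binary
FROM gcr.io/distroless/base-debian10
COPY --from=build /greeter-server /
# the example server listens on port 50051 by default
EXPOSE 50051
CMD ["/greeter-server"]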

2. Deploy the application

- We can then deploy the application with this image; the corresponding manifest is as follows:

# grpc-ingress-app.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: go-grpc-greeter-server

spec:

selector:

matchLabels:

app: go-grpc-greeter-server

template:

metadata:

labels:

app: go-grpc-greeter-server

spec:

containers:

- name: go-grpc-greeter-server

image: cnych/go-grpc-greeter-server:v0.1 # replace with your own image

ports:

- containerPort: 50051