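0. Introduction

A support vector machine (SVM) is a binary classification model.

- Strategy: margin maximization, which is equivalent to minimizing a regularized hinge loss.

- Learning algorithm: sequential minimal optimization (SMO).

- Variants: linearly separable SVM, linear SVM, and nonlinear SVM.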

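1. Linearly separable support vector machine

- Characteristics: the training data are linearly separable; the strategy is hard-margin maximization; the model is a linear classifier.

Model. Classification decision function:

$$f(x) = \operatorname{sign}(w^* \cdot x + b^*)$$

Separating hyperplane:

$$w^* \cdot x + b^* = 0$$

Define the functional margin of the hyperplane $(w, b)$ with respect to a sample point $(x_i, y_i)$:

$$\hat{\gamma}_i = y_i (w \cdot x_i + b)$$

Define the geometric margin of the hyperplane $(w, b)$ with respect to a sample point $(x_i, y_i)$:

$$\gamma_i = y_i \left( \frac{w}{\|w\|} \cdot x_i + \frac{b}{\|w\|} \right)$$

For a correctly classified point, the geometric margin is the actual distance from the point to the hyperplane. Define the minimum of this distance over all sample points:

$$\gamma = \min_{i=1,\dots,N} \gamma_i$$

Margin maximization: find the hyperplane with the largest geometric margin on the training set, that is, classify the training data with sufficiently high confidence.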
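As a minimal sketch of the two margin definitions, the snippet below computes the functional and geometric margins of a hyperplane on a small toy set. The three points and the hyperplane $w = (0.5, 0.5)$, $b = -2$ are assumed values chosen for illustration, not data from this post.

```python
import math


def functional_margin(w, b, x, y):
    """Functional margin y * (w . x + b) of one labelled point."""
    return y * (sum(wk * xk for wk, xk in zip(w, x)) + b)


def geometric_margin(w, b, x, y):
    """Functional margin divided by ||w||."""
    norm_w = math.sqrt(sum(wk * wk for wk in w))
    return functional_margin(w, b, x, y) / norm_w


# Toy data (assumed): two positive points and one negative point
points = [((3.0, 3.0), 1), ((4.0, 3.0), 1), ((1.0, 1.0), -1)]
w, b = (0.5, 0.5), -2.0

margins = [geometric_margin(w, b, x, y) for x, y in points]
gamma = min(margins)  # margin of the hyperplane on the whole set
print(margins, gamma)

# Rescaling (w, b) changes the functional margin but not the geometric one
print(functional_margin((1.0, 1.0), -4.0, (3.0, 3.0), 1),
      geometric_margin((1.0, 1.0), -4.0, (3.0, 3.0), 1))
```

The second print illustrates why the optimization is stated in terms of the geometric margin: doubling $w$ and $b$ doubles the functional margin while the hyperplane and the geometric margin stay the same.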

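The problem is solved below with the maximum-margin method and with the dual method:

Maximum-margin method: 1) Construct the constrained optimization problem

$$\max_{w,b} \ \gamma \quad \text{s.t.} \quad y_i \left( \frac{w}{\|w\|} \cdot x_i + \frac{b}{\|w\|} \right) \ge \gamma, \quad i = 1, \dots, N$$

If the functional margin is fixed to $\hat{\gamma} = 1$ (rescaling $w$ and $b$ does not change the hyperplane), this is equivalent to

$$\min_{w,b} \ \frac{1}{2}\|w\|^2 \quad \text{s.t.} \quad y_i (w \cdot x_i + b) - 1 \ge 0, \quad i = 1, \dots, N$$

2) Solve the constrained problem for $w^*$ and $b^*$, which gives the separating hyperplane.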

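Dual method: the dual algorithm often makes the problem easier to solve, and it introduces kernel functions naturally, which extends the method to nonlinear classification. 1. Define the Lagrangian, with multipliers $\alpha_i \ge 0$:

$$L(w, b, \alpha) = \frac{1}{2}\|w\|^2 - \sum_{i=1}^{N} \alpha_i y_i (w \cdot x_i + b) + \sum_{i=1}^{N} \alpha_i$$

Optimization objective:

$$\max_{\alpha} \min_{w,b} L(w, b, \alpha)$$

2. Compute $\min_{w,b} L(w, b, \alpha)$ by setting the partial derivatives with respect to $w$ and $b$ to zero,

which gives:

$$w = \sum_{i=1}^{N} \alpha_i y_i x_i, \qquad \sum_{i=1}^{N} \alpha_i y_i = 0$$

Substituting back:

$$\min_{w,b} L(w, b, \alpha) = -\frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_i \alpha_j y_i y_j (x_i \cdot x_j) + \sum_{i=1}^{N} \alpha_i$$

3. Maximize $\min_{w,b} L(w, b, \alpha)$ over $\alpha$, which is equivalent to

$$\min_{\alpha} \ \frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_i \alpha_j y_i y_j (x_i \cdot x_j) - \sum_{i=1}^{N} \alpha_i \quad \text{s.t.} \quad \sum_{i=1}^{N} \alpha_i y_i = 0, \quad \alpha_i \ge 0$$

Solving it gives $\alpha^* = (\alpha_1^*, \dots, \alpha_N^*)^T$ and

$$w^* = \sum_{i=1}^{N} \alpha_i^* y_i x_i$$

There exists an index $j$ with $\alpha_j^* > 0$, for which

$$b^* = y_j - \sum_{i=1}^{N} \alpha_i^* y_i (x_i \cdot x_j)$$

Therefore, the classification decision function is:

$$f(x) = \operatorname{sign}\left( \sum_{i=1}^{N} \alpha_i^* y_i (x \cdot x_i) + b^* \right)$$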
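To make the last step concrete, here is a small sketch that recovers $w^*$ and $b^*$ from a dual solution and evaluates the decision function. The toy points and the multipliers `alpha = [0.25, 0.0, 0.25]` are assumed as given for illustration; the code does not solve the dual problem itself.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def recover_hyperplane(alpha, X, Y):
    """w* = sum_i alpha_i y_i x_i and b* = y_j - sum_i alpha_i y_i (x_i . x_j)."""
    n = len(X[0])
    w = [sum(a * y * x[k] for a, x, y in zip(alpha, X, Y)) for k in range(n)]
    # Any index j with alpha_j > 0 (a support vector) can be used for b*
    j = next(i for i, a in enumerate(alpha) if a > 0)
    b = Y[j] - sum(a * y * dot(x, X[j]) for a, x, y in zip(alpha, X, Y))
    return w, b


def decide(w, b, x):
    """Decision function sign(w . x + b)."""
    return 1 if dot(w, x) + b > 0 else -1


X = [(3.0, 3.0), (4.0, 3.0), (1.0, 1.0)]
Y = [1, 1, -1]
alpha = [0.25, 0.0, 0.25]  # assumed dual solution for this toy set

w, b = recover_hyperplane(alpha, X, Y)
print(w, b)                       # [0.5, 0.5] -2.0
print([decide(w, b, x) for x in X])
```

Only the points with $\alpha_i > 0$ contribute to $w^*$ and $b^*$; the second point has $\alpha_2 = 0$ and could be removed without changing the hyperplane.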

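Summary

The perceptron has the same model form as the SVM, but the two use different learning strategies. The perceptron is driven by misclassification and minimizes the total distance from the misclassified points to the hyperplane. The SVM maximizes the smallest geometric distance from the sample points to the hyperplane, so its solution is determined by the points closest to the hyperplane. These points are called support vectors.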

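2. Linear support vector machine and soft-margin maximization

- Characteristics: the training data are approximately linearly separable; the strategy is soft-margin maximization; the model is a linear classifier.

Linearly non-separable means that some sample points cannot satisfy the constraint that the functional margin is at least 1. A slack variable $\xi_i \ge 0$ is introduced for each sample point, so the constraint becomes $y_i (w \cdot x_i + b) \ge 1 - \xi_i$ and the problem becomes

$$\min_{w,b,\xi} \ \frac{1}{2}\|w\|^2 + C \sum_{i=1}^{N} \xi_i \quad \text{s.t.} \quad y_i (w \cdot x_i + b) \ge 1 - \xi_i, \quad \xi_i \ge 0, \quad i = 1, \dots, N$$

The objective makes the margin as large as possible while keeping the number of misclassified points as small as possible; the penalty parameter $C > 0$ balances the two. As $C$ tends to infinity, no misclassified point is tolerated, which tends to overfit. As $C$ tends to 0, only the margin matters: the penalty on violations vanishes and the solution is no longer meaningful, which is underfitting.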
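The role of $C$ can be seen by evaluating the soft-margin objective directly. The sketch below assumes a fixed hyperplane and a toy set with one point on the wrong side (both are illustrative assumptions). For a fixed $(w, b)$ the smallest feasible slack is $\xi_i = \max(0, 1 - y_i (w \cdot x_i + b))$, which is exactly the hinge loss mentioned in the introduction.

```python
def soft_margin_objective(w, b, X, Y, C):
    """Return 0.5 * ||w||^2 + C * sum_i xi_i and the slacks xi_i.

    xi_i = max(0, 1 - y_i * (w . x_i + b)) is the hinge loss of sample i.
    """
    slacks = [
        max(0.0, 1.0 - y * (sum(wk * xk for wk, xk in zip(w, x)) + b))
        for x, y in zip(X, Y)
    ]
    regularizer = 0.5 * sum(wk * wk for wk in w)
    return regularizer + C * sum(slacks), slacks


# Toy data (assumed): the last point is labelled -1 but lies on the positive side
X = [(3.0, 3.0), (4.0, 3.0), (1.0, 1.0), (2.5, 2.5)]
Y = [1, 1, -1, -1]
w, b = (0.5, 0.5), -2.0

for C in (0.01, 1.0, 100.0):
    objective, slacks = soft_margin_objective(w, b, X, Y, C)
    print(C, objective, slacks)
```

With a small `C` the violating point barely affects the objective, while with a large `C` its slack dominates, so the optimizer would be pushed to move the hyperplane to reduce it.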

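Learning algorithm:

1. Construct the convex quadratic programming problem:

$$\min_{\alpha} \ \frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_i \alpha_j y_i y_j (x_i \cdot x_j) - \sum_{i=1}^{N} \alpha_i \quad \text{s.t.} \quad \sum_{i=1}^{N} \alpha_i y_i = 0, \quad 0 \le \alpha_i \le C$$

2. Obtain the optimal solution $\alpha^*$ and compute $w^* = \sum_{i=1}^{N} \alpha_i^* y_i x_i$.

There exists a component with $0 < \alpha_j^* < C$, for which $b^* = y_j - \sum_{i=1}^{N} y_i \alpha_i^* (x_i \cdot x_j)$.

Classification decision function:

$$f(x) = \operatorname{sign}(w^* \cdot x + b^*)$$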

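3. Nonlinear support vector machine and kernel functions

- Characteristics: the training data are not linearly separable; the learning strategy combines the kernel trick with soft-margin maximization; the model is a nonlinear support vector machine.

Idea: apply a nonlinear transformation that maps the input space to a feature space (a Hilbert space), which turns the nonlinear problem into a linear one.

Kernel function: let $\phi$ be a mapping from the input space $\mathcal{X}$ to the feature space $\mathcal{H}$. $K(x, z)$ is called a kernel function if, for all $x, z \in \mathcal{X}$,

$$K(x, z) = \phi(x) \cdot \phi(z)$$

Positive definite kernel: a symmetric function $K(x, z)$ is a positive definite kernel if and only if, for any $x_1, \dots, x_m \in \mathcal{X}$, the Gram matrix $[K(x_i, x_j)]_{m \times m}$ is positive semidefinite.

Common kernel functions. Polynomial kernel:

$$K(x, z) = (x \cdot z + 1)^p$$

Gaussian kernel:

$$K(x, z) = \exp\left( -\frac{\|x - z\|^2}{2\sigma^2} \right)$$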
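The two kernels can be written in a few lines. The sketch below also includes an explicit feature map `phi` for the degree-2 polynomial kernel in two dimensions, a hypothetical helper added only to check that $K(x, z) = \phi(x) \cdot \phi(z)$ holds; in practice the kernel trick avoids computing `phi` at all.

```python
import math


def polynomial_kernel(x, z, p=2):
    """K(x, z) = (x . z + 1)^p"""
    return (sum(a * b for a, b in zip(x, z)) + 1.0) ** p


def gaussian_kernel(x, z, sigma=1.0):
    """K(x, z) = exp(-||x - z||^2 / (2 * sigma^2))"""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def phi(x):
    """Explicit feature map of the degree-2 polynomial kernel for 2-D inputs."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return (x1 * x1, x2 * x2, r2 * x1 * x2, r2 * x1, r2 * x2, 1.0)


x, z = (1.0, 2.0), (3.0, 4.0)
k_direct = polynomial_kernel(x, z)                       # kernel in input space
k_mapped = sum(a * b for a, b in zip(phi(x), phi(z)))    # inner product in feature space
print(k_direct, k_mapped)
print(gaussian_kernel(x, z), gaussian_kernel(x, x))
```

The input has 2 dimensions while `phi` produces 6, yet the kernel value is obtained from a single 2-D inner product.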

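Learning algorithm for the nonlinear support vector classifier: (1) Choose a suitable kernel function $K(x, z)$ and penalty parameter $C$, then construct and solve

$$\min_{\alpha} \ \frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_i \alpha_j y_i y_j K(x_i, x_j) - \sum_{i=1}^{N} \alpha_i \quad \text{s.t.} \quad \sum_{i=1}^{N} \alpha_i y_i = 0, \quad 0 \le \alpha_i \le C$$

to obtain the optimal solution $\alpha^* = (\alpha_1^*, \dots, \alpha_N^*)^T$.

(2) Choose a component with $0 < \alpha_j^* < C$ and compute $b^* = y_j - \sum_{i=1}^{N} \alpha_i^* y_i K(x_i, x_j)$.

(3) Construct the decision function:

$$f(x) = \operatorname{sign}\left( \sum_{i=1}^{N} \alpha_i^* y_i K(x, x_i) + b^* \right)$$

When $K(x, z)$ is a positive definite kernel, the problem above is a convex quadratic program and a solution exists.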

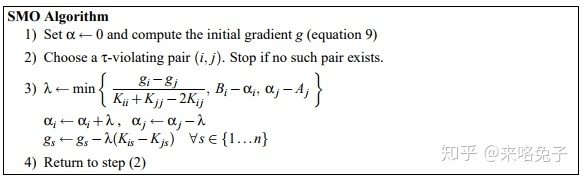

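4. Learning algorithm: sequential minimal optimization (SMO)

4.1 Principle

Sequential minimal optimization (SMO): 1. If every variable satisfies the KKT conditions, the solution has been found. 2. Otherwise, choose two variables, fix all the others, and construct a quadratic programming problem in those two variables. The algorithm has two parts: an analytic method for solving the two-variable quadratic program and a heuristic for choosing the variables.

Optimization objective: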

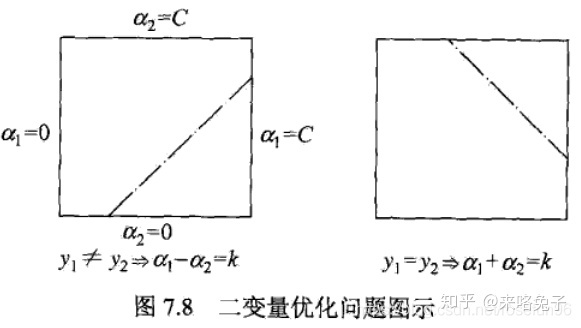

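The variables are the Lagrange multipliers, one variable $\alpha_i$ for each sample point $(x_i, y_i)$.

Solving the two-variable quadratic program

Suppose the chosen variables are $\alpha_1$ and $\alpha_2$ and the others are fixed. The subproblem is

$$\min_{\alpha_1, \alpha_2} \ W(\alpha_1, \alpha_2) = \frac{1}{2} K_{11} \alpha_1^2 + \frac{1}{2} K_{22} \alpha_2^2 + y_1 y_2 K_{12} \alpha_1 \alpha_2 - (\alpha_1 + \alpha_2) + y_1 \alpha_1 \sum_{i=3}^{N} y_i \alpha_i K_{i1} + y_2 \alpha_2 \sum_{i=3}^{N} y_i \alpha_i K_{i2}$$

$$\text{s.t.} \quad \alpha_1 y_1 + \alpha_2 y_2 = -\sum_{i=3}^{N} y_i \alpha_i = \varsigma, \quad 0 \le \alpha_i \le C, \quad i = 1, 2$$

where $K_{ij} = K(x_i, x_j)$ and $\varsigma$ is a constant.

Because of the equality constraint, only one of the two variables is free, so the subproblem is effectively a single-variable problem in $\alpha_2$.

Because of the box constraint $0 \le \alpha_i \le C$, the new value must satisfy $L \le \alpha_2^{new} \le H$, where $L$ and $H$ are the endpoints of the segment that the equality constraint cuts out of the square $[0, C] \times [0, C]$.

If $y_1 \ne y_2$, then $L = \max(0, \alpha_2^{old} - \alpha_1^{old})$ and $H = \min(C, C + \alpha_2^{old} - \alpha_1^{old})$. If $y_1 = y_2$, then $L = \max(0, \alpha_2^{old} + \alpha_1^{old} - C)$ and $H = \min(C, \alpha_2^{old} + \alpha_1^{old})$.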

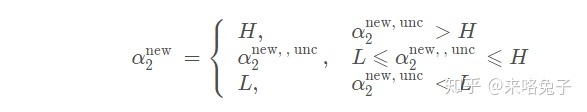

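Let $\alpha_2^{new,unc}$ denote the optimal value of $\alpha_2$ before the constraint $L \le \alpha_2 \le H$ is taken into account.

Then:

$$\alpha_2^{new,unc} = \alpha_2^{old} + \frac{y_2 (E_1 - E_2)}{\eta}$$

where

$$\eta = K_{11} + K_{22} - 2K_{12} = \|\phi(x_1) - \phi(x_2)\|^2, \qquad E_i = g(x_i) - y_i, \qquad g(x) = \sum_{j=1}^{N} \alpha_j y_j K(x_j, x) + b$$

Thus, after clipping:

$$\alpha_2^{new} = \begin{cases} H, & \alpha_2^{new,unc} > H \\ \alpha_2^{new,unc}, & L \le \alpha_2^{new,unc} \le H \\ L, & \alpha_2^{new,unc} < L \end{cases}$$

where $L$ and $H$ are the lower and upper bounds on $\alpha_2^{new}$ imposed by the constraints, and $\alpha_1^{new}$ follows from the equality constraint:

$$\alpha_1^{new} = \alpha_1^{old} + y_1 y_2 (\alpha_2^{old} - \alpha_2^{new})$$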
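The update of one pair can be sketched as a standalone function. The names `clip_bounds` and `update_pair` are hypothetical helpers, and the numbers in the usage lines are an assumed toy case: two points $x_1 = (3, 3)$, $y_1 = +1$ and $x_2 = (1, 1)$, $y_2 = -1$ with a linear kernel, starting from $\alpha = 0$ and $b = 0$, so that $E_1 = -1$ and $E_2 = 1$.

```python
def clip_bounds(a1, a2, y1, y2, C):
    """Bounds L, H on the new alpha_2 given the old pair (a1, a2)."""
    if y1 != y2:
        return max(0.0, a2 - a1), min(C, C + a2 - a1)
    return max(0.0, a2 + a1 - C), min(C, a2 + a1)


def update_pair(a1, a2, y1, y2, E1, E2, K11, K22, K12, C):
    """One analytic SMO step on (alpha_1, alpha_2); returns the new pair."""
    eta = K11 + K22 - 2.0 * K12
    if eta <= 0:
        return a1, a2  # degenerate pair: leave it unchanged in this sketch
    L, H = clip_bounds(a1, a2, y1, y2, C)
    a2_unc = a2 + y2 * (E1 - E2) / eta          # unclipped optimum
    a2_new = min(H, max(L, a2_unc))             # clip to [L, H]
    a1_new = a1 + y1 * y2 * (a2 - a2_new)       # keep y1*a1 + y2*a2 constant
    return a1_new, a2_new


# Assumed toy case: K11 = 18, K22 = 2, K12 = 6 for the linear kernel
a1_new, a2_new = update_pair(0.0, 0.0, 1, -1, -1.0, 1.0, 18.0, 2.0, 6.0, C=1.0)
print(a1_new, a2_new)
```

The last line of `update_pair` is what keeps the equality constraint $\sum_i \alpha_i y_i = 0$ satisfied after every step.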

4.2 Algorithm steps

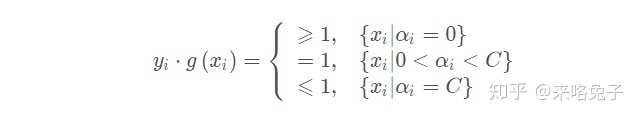

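In each subproblem SMO chooses two variables to optimize, at least one of which violates the KKT conditions. (1) Choosing the first variable: first check the support vectors on the margin boundary ($0 < \alpha_i < C$); if they all satisfy the KKT conditions, check the whole training set. The sample that violates the KKT conditions most severely is chosen.

The KKT conditions are:

$$\alpha_i = 0 \iff y_i g(x_i) \ge 1$$

$$0 < \alpha_i < C \iff y_i g(x_i) = 1$$

$$\alpha_i = C \iff y_i g(x_i) \le 1$$

where $g(x_i) = \sum_{j=1}^{N} \alpha_j y_j K(x_i, x_j) + b$.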
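One way to turn these conditions into a selection rule is to measure how far each sample is from satisfying its condition and pick the largest value. The sketch below is an assumption about how that could be done, not a prescribed rule; the helper names and the tolerance `tol` are hypothetical. The tolerance is needed because $y_i g(x_i)$ is a floating-point value and rarely equals 1 exactly.

```python
def kkt_violation(alpha_i, y_i, g_i, C):
    """Distance of sample i from its KKT condition (0.0 means satisfied)."""
    yg = y_i * g_i
    if alpha_i <= 0.0:                 # alpha_i = 0 requires y*g >= 1
        return max(0.0, 1.0 - yg)
    if alpha_i >= C:                   # alpha_i = C requires y*g <= 1
        return max(0.0, yg - 1.0)
    return abs(yg - 1.0)               # 0 < alpha_i < C requires y*g = 1


def most_violating(alpha, Y, G, C, tol=1e-3):
    """Index of the worst violator, or None if all samples are within tol."""
    scores = [kkt_violation(a, y, g, C) for a, y, g in zip(alpha, Y, G)]
    worst = max(range(len(scores)), key=scores.__getitem__)
    return worst if scores[worst] > tol else None


alpha = [0.0, 0.5, 1.0]
Y = [1, -1, 1]
print(most_violating(alpha, Y, G=[0.2, -1.0, 1.3], C=1.0))   # sample 0 is worst
print(most_violating(alpha, Y, G=[1.5, -1.0, 0.7], C=1.0))   # None: all satisfied
```

A return value of `None` also gives a natural stopping criterion for the outer loop.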

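(2) Choosing the second variable: the second variable is chosen so that $|E_1 - E_2|$ is as large as possible,

where $E_i = g(x_i) - y_i$ is the difference between the prediction $g(x_i)$ and the label $y_i$.

(3) Compute the threshold $b$ and the differences $E_i$:

$$b_1^{new} = -E_1 - y_1 K_{11} (\alpha_1^{new} - \alpha_1^{old}) - y_2 K_{21} (\alpha_2^{new} - \alpha_2^{old}) + b^{old}$$

$$b_2^{new} = -E_2 - y_1 K_{12} (\alpha_1^{new} - \alpha_1^{old}) - y_2 K_{22} (\alpha_2^{new} - \alpha_2^{old}) + b^{old}$$

If $0 < \alpha_1^{new} < C$, take $b^{new} = b_1^{new}$; if $0 < \alpha_2^{new} < C$, take $b^{new} = b_2^{new}$; if both lie on a bound, take the midpoint $b^{new} = (b_1^{new} + b_2^{new}) / 2$. The differences are then updated as

$$E_i^{new} = \sum_{j=1}^{N} y_j \alpha_j K(x_i, x_j) + b^{new} - y_i$$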

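(4) Prediction: from the decision function

$$f(x) = \operatorname{sign}\left( \sum_{i=1}^{N} \alpha_i^* y_i K(x, x_i) + b^* \right)$$

the label of a new sample can be obtained.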

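5. Practice

The implementation below has a flaw: it uses max_iter as the stopping condition. What it should do is find the sample that violates the KKT conditions most, pair it with the remaining sample that maximizes |Ei - Ej|, and keep choosing second samples until the objective function stops decreasing. That procedure has O(n^2) complexity, whereas the code shown here has O(n*max_iter) complexity, which is not reasonable.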

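import numpy as np
from sklearn import datasets


class SVM:
    """Binary SVM trained with a simplified SMO loop; labels must be +1 / -1."""

    def __init__(self, max_iter=100, kernel='linear'):
        self.max_iter = max_iter
        self._kernel = kernel

    def init_args(self, features, labels):
        # m: number of samples, n: feature dimension
        self.m, self.n = features.shape
        self.X = features
        self.Y = labels
        self.b = 0.0
        # Penalty parameter C of the soft margin (upper bound on each alpha_i)
        self.C = 1.0
        # Start from alpha = 0, which satisfies sum_i alpha_i * y_i = 0
        self.alpha = np.zeros(self.m)
        # Cache E_i = g(x_i) - y_i in a list
        self.E = [self._E(i) for i in range(self.m)]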

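    def _KKT(self, i):
        # KKT conditions for sample i, checked within a small tolerance
        # because y_i * g(x_i) is a float and rarely equals 1 exactly
        tol = 1e-3
        y_g = self._g(i) * self.Y[i]
        if self.alpha[i] == 0:
            return y_g >= 1 - tol
        elif 0 < self.alpha[i] < self.C:
            return abs(y_g - 1) <= tol
        else:
            return y_g <= 1 + tol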

    # g(x_i): decision value for the training sample X[i]
    # g(x) = sum_{j=1}^N alpha_j * y_j * K(x_j, x) + b
    def _g(self, i):
        r = self.b
        for j in range(self.m):
            r += self.alpha[j] * self.Y[j] * self.kernel(self.X[i], self.X[j])
        return r

    # Kernel function
    def kernel(self, x1, x2):
        if self._kernel == 'linear':
            return np.dot(x1, x2)
        elif self._kernel == 'poly':
            return (np.dot(x1, x2) + 1)**2
        raise ValueError('unknown kernel: {}'.format(self._kernel))

    # E_i = g(x_i) - y_i: difference between the prediction g(x_i) and the label y_i
    def _E(self, i):
        return self._g(i) - self.Y[i]

    def _init_alpha(self):
        # Outer loop: visit the points with 0 < alpha_i < C first ...
        index_list = [i for i in range(self.m) if 0 < self.alpha[i] < self.C]
        # ... then the rest of the training set
        non_satisfy_list = [i for i in range(self.m) if i not in index_list]
        index_list.extend(non_satisfy_list)

        for i in index_list:
            # Skip samples that already satisfy the KKT conditions
            if self._KKT(i):
                continue
            # Inner loop: choose j so that |E1 - E2| is as large as possible
            E1 = self.E[i]
            # If E1 is positive take the smallest E_j; if negative, the largest
            if E1 >= 0:
                j = min(range(self.m), key=lambda x: self.E[x])
            else:
                j = max(range(self.m), key=lambda x: self.E[x])
            return i, j
        # Every sample satisfies the KKT conditions: nothing left to optimize
        return None

    def _compare(self, _alpha, L, H):
        # Clip _alpha to the interval [L, H]
        if _alpha > H:
            return H
        elif _alpha < L:
            return L
        else:
            return _alpha

    def fit(self, features, labels):
        self.init_args(features, labels)

        for t in range(self.max_iter):
            # Select the pair of multipliers to optimize in this iteration
            pair = self._init_alpha()
            if pair is None:
                # No sample violates the KKT conditions: stop early
                break
            i1, i2 = pair

            # Bounds L and H for the new alpha_2
            if self.Y[i1] == self.Y[i2]:
                # L = max(0, alpha_2 + alpha_1 - C)
                # H = min(C, alpha_2 + alpha_1)
                L = max(0, self.alpha[i1] + self.alpha[i2] - self.C)
                H = min(self.C, self.alpha[i1] + self.alpha[i2])
            else:
                # L = max(0, alpha_2 - alpha_1)
                # H = min(C, C + alpha_2 - alpha_1)
                L = max(0, self.alpha[i2] - self.alpha[i1])
                H = min(self.C, self.C + self.alpha[i2] - self.alpha[i1])

            E1 = self.E[i1]
            E2 = self.E[i2]
            K11 = self.kernel(self.X[i1], self.X[i1])
            K22 = self.kernel(self.X[i2], self.X[i2])
            K12 = self.kernel(self.X[i1], self.X[i2])
            # eta = K11 + K22 - 2*K12 = ||phi(x_1) - phi(x_2)||^2
            eta = K11 + K22 - 2 * K12
            if eta <= 0:
                continue

            # Unclipped optimum of alpha_2 along the constraint line
            # (changed here: per the book, pp. 130-131, this should be E1 - E2)
            alpha2_new_unc = self.alpha[i2] + self.Y[i2] * (E1 - E2) / eta
            alpha2_new = self._compare(alpha2_new_unc, L, H)
            alpha1_new = self.alpha[i1] + self.Y[i1] * self.Y[i2] * (
                self.alpha[i2] - alpha2_new)

            # Threshold candidates b1 and b2
            b1_new = (-E1 - self.Y[i1] * K11 * (alpha1_new - self.alpha[i1])
                      - self.Y[i2] * K12 * (alpha2_new - self.alpha[i2]) + self.b)
            b2_new = (-E2 - self.Y[i1] * K12 * (alpha1_new - self.alpha[i1])
                      - self.Y[i2] * K22 * (alpha2_new - self.alpha[i2]) + self.b)

            if 0 < alpha1_new < self.C:
                b_new = b1_new
            elif 0 < alpha2_new < self.C:
                b_new = b2_new
            else:
                # Both multipliers on a bound: take the midpoint
                b_new = (b1_new + b2_new) / 2

            # Update the parameters and the two cached differences E_i
            self.alpha[i1] = alpha1_new
            self.alpha[i2] = alpha2_new
            self.b = b_new
            self.E[i1] = self._E(i1)
            self.E[i2] = self._E(i2)
        return 'train done!'

    def predict(self, data):
        # sign of g(x) = sum_i alpha_i * y_i * K(x, x_i) + b
        r = self.b
        for i in range(self.m):
            r += self.alpha[i] * self.Y[i] * self.kernel(data, self.X[i])
        return 1 if r > 0 else -1

    def score(self, X_test, y_test):
        # Fraction of test samples predicted correctly
        right_count = 0
        for i in range(len(X_test)):
            result = self.predict(X_test[i])
            if result == y_test[i]:
                right_count += 1
        return right_count / len(X_test)

    def _weight(self):
        # Linear model only: w = sum_i alpha_i * y_i * x_i
        yx = self.Y.reshape(-1, 1) * self.X
        self.w = np.dot(yx.T, self.alpha)
        return self.w

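def normalize(x):
    # Min-max scaling with the global minimum and maximum of the whole array
    return (x - np.min(x)) / (np.max(x) - np.min(x))


def get_datasets():
    # Breast cancer dataset for binary classification (2 classes)
    X, y = datasets.load_breast_cancer(return_X_y=True)
    # The SVM above expects labels in {-1, +1}; this dataset uses {0, 1}
    y = np.where(y == 0, -1, 1)
    # Normalize the features
    X_norm = normalize(X)
    # First 80% of the samples for training, the rest for testing
    split = int(len(X_norm) * 0.8)
    X_train, X_test = X_norm[:split], X_norm[split:]
    y_train, y_test = y[:split], y[split:]
    return X_train, y_train, X_test, y_test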

if __name__ == '__main__':
    X_train, y_train, X_test, y_test = get_datasets()
    svm = SVM(max_iter=200)
    svm.fit(X_train, y_train)
    print("accuracy:{:.4f}".format(svm.score(X_test, y_test)))

Result reported in the original post:

accuracy:0.6491

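Q: Why is the support vector machine not well suited to large-scale data? Here, large-scale data means that both the number of samples and the number of features are large.

A (personal understanding): for an SVM with a linear kernel, the concern is the time complexity of each iteration.

SVM with a nonlinear kernel: a nonlinear feature map sends the low-dimensional features to a high-dimensional space, and the kernel trick computes the inner products between the high-dimensional features.

Because it works with the kernel matrix $K$ of the dataset, whose entries are $K(x_i, x_j)$ for every pair of samples, the matrix has $n \times n$ entries for $n$ samples, so computing and storing it becomes expensive as $n$ grows.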
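A quick back-of-the-envelope calculation shows how fast a dense kernel matrix grows. The sketch assumes 8 bytes per entry (float64) and that the full $n \times n$ matrix is stored; the helper name is hypothetical, and real solvers often cache only part of the matrix.

```python
def kernel_matrix_bytes(n_samples, bytes_per_entry=8):
    """Memory needed to store a dense n x n kernel matrix."""
    return n_samples * n_samples * bytes_per_entry


for n in (1_000, 100_000, 1_000_000):
    gib = kernel_matrix_bytes(n) / 2**30
    print(f"n = {n:>9,d}: {gib:,.2f} GiB")
```

Multiplying the number of samples by 10 multiplies the storage by 100, which is why the quadratic dependence on the number of samples matters more than the cost of any single kernel evaluation.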

References:

- Li Hang, Statistical Learning Methods (统计学习方法);

- sklearn SVM;

- github lihang-code SVM;

- Is the support vector machine (SVM) suitable for large-scale data? - Zhihu;

- Github kernel-svm/svm.py;
