k8s

Author: DevOps旭

Source: DevOps探路者

1. What Is a k8s Resource?

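When operating and managing k8s, administrators habitually call everything in k8s a resource: pods, deployments, services, and so on. Kubernetes manages the whole cluster by maintaining and scheduling these resources.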

2. Getting to Know the Pod

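The pod is the smallest unit Kubernetes manages, and it wraps a group of containers. In the Kubernetes philosophy, individual containers are not maintained directly; pods are what get deployed and operated on. The number of containers in a pod is flexible: it can be one or several.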

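What is the advantage of this design? First, recall the container philosophy: a container runs only one process (not counting its child processes). So if an application needs several processes, should we run them all in one container, or run several containers on the same node?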

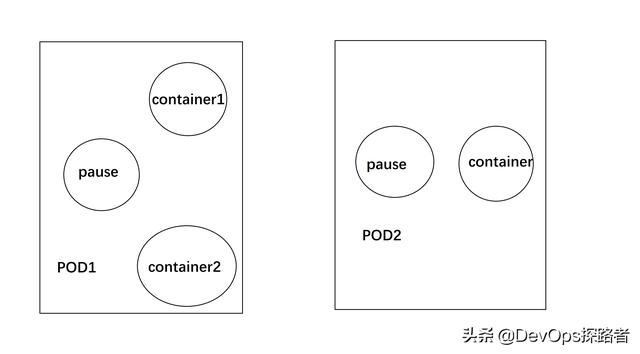

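Running several processes in one container is possible: a script can start them in a given dependency order. But this raises a problem. Whether a container is alive is determined by its first process (PID 1), so if a container runs several processes, keeping all of them running becomes a major challenge. Solving it would make the container heavier and heavier, which contradicts the lightweight nature of containers, and log collection, data persistence and so on would also become much harder. So this is not a good choice. What about running several containers on one node? That is exactly the pod's philosophy. The containers are placed in the same pod and share the pod's network, IPC and UTS namespaces (the PID namespace can also be shared by setting shareProcessNamespace: true in the pod spec, while files are shared through volumes rather than a common mount namespace). The pause container creates and holds these shared namespaces and the other containers join them, so the pod as a whole is anchored to that one container.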

Enough background. How do we actually create a pod? Kubernetes provides a very convenient tool for this: kubectl, a command-line client that talks to the API server.

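The simple command below creates a pod named nginx. (kubectl has no "create pod" subcommand; since v1.18, kubectl run creates a single bare pod.)

```shell
kubectl run nginx --image=nginx
```

Pods can also be created from a YAML file. Below is about the simplest one: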

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: nginx-demo
```

This manifest uses version v1 of the Kubernetes core API group, declares the resource kind as Pod, and names it nginx-demo.
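Because a manifest is just nested data, the indentation in the YAML above is significant: each level is either a map or a list (containers is a list because a pod may hold several containers). As a minimal sketch, the same manifest can be built as a Python dict and printed as JSON, which kubectl apply -f also accepts. The helper name nginx_demo_pod and the script name gen_pod.py are hypothetical, not part of any Kubernetes tool.

```python
import json


def nginx_demo_pod() -> dict:
    """Hypothetical helper: the nginx-demo Pod manifest as nested dicts and lists."""
    return {
        "apiVersion": "v1",                      # core API group, version v1
        "kind": "Pod",                           # resource type
        "metadata": {"name": "nginx-demo"},      # object name
        "spec": {
            "containers": [                      # a list: a pod may hold several containers
                {
                    "image": "nginx",
                    "imagePullPolicy": "Always",  # pull the image each time the container starts
                    "name": "nginx-demo",
                }
            ]
        },
    }


if __name__ == "__main__":
    # Print the manifest as JSON; redirect it to a file for kubectl apply -f.
    print(json.dumps(nginx_demo_pod(), indent=2))
```

Usage: python3 gen_pod.py > nginx-demo.json, then kubectl apply -f nginx-demo.json.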

We can create the pod with the following commands:

```shell
[root@k8s01 yaml]# kubectl apply -f nginx-demo.yml
[root@k8s01 yaml]# kubectl get po -o wide
NAME    READY   STATUS    RESTARTS   AGE     IP           NODE    NOMINATED NODE   READINESS GATES
nginx   1/1     Running   0          4m32s   10.244.1.9   k8s02
```

Containers are short-lived, however. By setting a restart policy (restartPolicy) on the pod, we can have the containers in the pod restarted when they exit.

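restartPolicy takes one of three values and applies to all containers in the pod:

- Always: restart the container whenever it exits, regardless of the exit code. This is the default.
- OnFailure: restart the container only when it exits with a non-zero exit code.
- Never: never restart the container.

The kubelet on the node performs the restart, and it restarts the container in place rather than recreating the pod. Below, we modify the pod's YAML file to set restartPolicy: Always explicitly.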
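Before editing the manifest, here is a minimal sketch of the decision the three policies describe. It is a simplified model, not the kubelet's code: the function name should_restart is hypothetical, and restart back-off delays are ignored.

```python
def should_restart(exit_code: int, policy: str = "Always") -> bool:
    """Sketch: does the kubelet restart a container that has just exited?"""
    if policy == "Always":
        return True                 # restart regardless of the exit code
    if policy == "OnFailure":
        return exit_code != 0       # restart only after a non-zero exit
    if policy == "Never":
        return False                # leave the container stopped
    raise ValueError(f"unknown restartPolicy: {policy!r}")


if __name__ == "__main__":
    # nginx stopped with SIGTERM exits with code 0 ("Completed"),
    # so of the three policies only Always would bring it back.
    for policy in ("Always", "OnFailure", "Never"):
        print(policy, should_restart(0, policy))
```

This is why, in the experiment that follows, the container killed with a plain kill (exit code 0) is restarted only because the policy is Always; under OnFailure it would have stayed stopped.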

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo
spec:
  restartPolicy: Always
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: nginx-demo
```

Then delete the pod and recreate it.

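```shell
[root@k8s01 yaml]# kubectl delete pod nginx
[root@k8s01 yaml]# kubectl apply -f nginx-demo.yml
[root@k8s01 yaml]# kubectl get po -o wide
NAME    READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx   1/1     Running   0          52s   10.244.1.11   k8s02
```

The pod has been recreated and is running on node k8s02. Because the official nginx image lacks many common commands, we cannot exec into the container and kill the nginx process from inside. Instead, we simulate a container failure by killing the process on the node where the pod runs.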

```shell
[root@k8s02 ~]# ps -ef | grep nginx
root      58895  58880  0 02:59 ?        00:00:00 nginx: master process nginx -g daemon off;
101       58947  58895  0 02:59 ?        00:00:00 nginx: worker process
root      59071  49835  0 03:00 pts/0    00:00:00 grep --color=auto nginx
[root@k8s02 ~]# kill 58895
```

Then, on k8s01, we see:

```shell
[root@k8s01 yaml]# kubectl get po -o wide
NAME    READY   STATUS      RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx   0/1     Completed   0          53s   10.244.1.11   k8s02
[root@k8s01 yaml]# kubectl get po -o wide
NAME    READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx   1/1     Running   1          55s   10.244.1.11   k8s02
```

nginx has been restarted successfully. Now let's look at the pod's events:

```shell
[root@k8s01 yaml]# kubectl describe pod nginx
Name:         nginx
Namespace:    default
Priority:     0
Node:         k8s02/192.168.1.32
Start Time:   Sun, 06 Sep 2020 02:59:31 +0800
Labels:
Annotations:
Status:       Running
IP:           10.244.1.11
IPs:
  IP:  10.244.1.11
Containers:
  nginx:
    Container ID:   docker://cf21ee868641ba2da52321e16fe7e43a0aca61b7ebcb0c4a4d62ecb4a3f9787a
    Image:          nginx
    Image ID:       docker-pullable://nginx@sha256:b0ad43f7ee5edbc0effbc14645ae7055e21bc1973aee5150745632a24a752661
    Port:
    Host Port:
    State:          Running
      Started:      Sun, 06 Sep 2020 03:00:24 +0800
    Last State:     Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Sun, 06 Sep 2020 02:59:48 +0800
      Finished:     Sun, 06 Sep 2020 03:00:20 +0800
    Ready:          True
    Restart Count:  1
    Environment:
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-hdhjf (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-hdhjf:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-hdhjf
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason     Age                From            Message
  ----    ------     ----               ----            -------
  Normal  Scheduled  63s                                Successfully assigned default/nginx to k8s02
  Normal  Pulled     47s                kubelet, k8s02  Successfully pulled image "nginx" in 16.098712681s
  Normal  Pulling    14s (x2 over 63s)  kubelet, k8s02  Pulling image "nginx"
  Normal  Created    11s (x2 over 47s)  kubelet, k8s02  Created container nginx
  Normal  Started    11s (x2 over 47s)  kubelet, k8s02  Started container nginx
  Normal  Pulled     11s                kubelet, k8s02  Successfully pulled image "nginx" in 3.162238195s
```

The events clearly show the kubelet restarting the nginx container. Note the Last State: the process we killed (kill sends SIGTERM by default) exited with code 0, and the container was still restarted because the policy is Always.

Although the kubelet can restart the containers in a pod, what happens if the node itself fails? Below, we stop kubelet and then kube-proxy on k8s02 to simulate a failure of node k8s02.

```shell
[root@k8s02 ~]# systemctl stop kubelet
[root@k8s02 ~]# systemctl stop kube-proxy
[root@k8s02 ~]# systemctl status kube-proxy
● kube-proxy.service - Kubernetes Proxy
   Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)
   Active: inactive (dead) since Sun 2020-09-06 03:06:03 CST; 23s ago
  Process: 971 ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS (code=killed, signal=TERM)
 Main PID: 971 (code=killed, signal=TERM)

Sep 05 23:52:04 k8s02 systemd[1]: Ignoring invalid environment assignment '--proxy-mode=ipvs': /opt/kubernetes/cfg/kube-proxy.conf
Sep 05 23:52:04 k8s02 systemd[1]: Started Kubernetes Proxy.
Sep 05 23:52:16 k8s02 kube-proxy[971]: E0905 23:52:16.561493     971 node.go:125] Failed to retrieve node info: Get "https...timeout
Sep 05 23:52:23 k8s02 kube-proxy[971]: E0905 23:52:23.654714     971 node.go:125] Failed to retrieve node info: nodes "k8s...r scope
Sep 06 03:06:03 k8s02 systemd[1]: Stopping Kubernetes Proxy...
Sep 06 03:06:03 k8s02 systemd[1]: Stopped Kubernetes Proxy.
Hint: Some lines were ellipsized, use -l to show in full.
[root@k8s02 ~]# systemctl status kubelet
● kubelet.service - Kubernetes Kubelet
   Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
   Active: inactive (dead) since Sun 2020-09-06 03:05:57 CST; 35s ago
  Process: 1183 ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS (code=exited, status=0/SUCCESS)
 Main PID: 1183 (code=exited, status=0/SUCCESS)

Sep 05 23:52:30 k8s02 kubelet[1183]: E0905 23:52:30.456897    1183 remote_runtime.go:113] RunPodSandbox from runtime service fail...
Sep 05 23:52:30 k8s02 kubelet[1183]: E0905 23:52:30.456938    1183 kuberuntime_sandbox.go:69] CreatePodSandbox for pod "nginx-679...
Sep 05 23:52:30 k8s02 kubelet[1183]: E0905 23:52:30.456951    1183 kuberuntime_manager.go:730] createPodSandbox for pod "nginx-67...
Sep 05 23:52:30 k8s02 kubelet[1183]: E0905 23:52:30.457009    1183 pod_workers.go:191] Error syncing pod ee15155c-faab-424...685b)"
Sep 06 02:44:26 k8s02 kubelet[1183]: E0906 02:44:26.124263    1183 remote_runtime.go:329] ContainerStatus "4413a8d21a2b72b...68fb93c
Sep 06 02:44:26 k8s02 kubelet[1183]: E0906 02:44:26.124934    1183 remote_runtime.go:329] ContainerStatus "35eee7e6a06d70f...91c626b
Sep 06 02:51:40 k8s02 kubelet[1183]: E0906 02:51:40.490991    1183 remote_runtime.go:329] ContainerStatus "6489db11518634b...332343e
Sep 06 02:51:41 k8s02 kubelet[1183]: E0906 02:51:41.660419    1183 kubelet_pods.go:1250] Failed killing the pod "nginx": f...32343e"
Sep 06 03:05:57 k8s02 systemd[1]: Stopping Kubernetes Kubelet...
Sep 06 03:05:57 k8s02 systemd[1]: Stopped Kubernetes Kubelet.
Hint: Some lines were ellipsized, use -l to show in full.
```

Now let's observe the cluster from k8s01:

```shell
[root@k8s01 yaml]# kubectl get node
NAME    STATUS     ROLES   AGE   VERSION
k8s01   Ready              9d    v1.19.0
k8s02   NotReady           9d    v1.19.0
k8s03   Ready              9d    v1.19.0
```

Node k8s02 is now in the failed (NotReady) state. What about the pod?

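```shell
[root@k8s01 yaml]# kubectl get po -o wide
NAME    READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES
nginx   1/1     Running   1          8m18s   10.244.1.11   k8s02
[root@k8s01 yaml]# kubectl exec -it nginx sh
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
Error from server: error dialing backend: dial tcp 192.168.1.32:10250: connect: connection refused
```

The API server still shows the pod as Running, because it only knows the last status the kubelet reported. kubectl exec fails because the API server cannot reach the kubelet on k8s02 (port 10250). What happens if we now kill the pod's process again?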

```shell
[root@k8s02 ~]# ps -ef | grep nginx
root      59156  59141  0 03:00 ?        00:00:00 nginx: master process nginx -g daemon off;
101       59203  59156  0 03:00 ?        00:00:00 nginx: worker process
root      61301  49835  0 03:10 pts/0    00:00:00 grep --color=auto nginx
[root@k8s02 ~]# kill 59156
```

Checking on k8s01:

```shell
[root@k8s01 yaml]# kubectl get po -o wide
NAME    READY   STATUS        RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx   1/1     Terminating   1          12m   10.244.1.11   k8s02
```

The pod is now Terminating, that is, it is being deleted. Let's check the pod's events:

```shell
[root@k8s01 yaml]# kubectl describe pod nginx
Name:                      nginx
Namespace:                 default
Priority:                  0
Node:                      k8s02/192.168.1.32
Start Time:                Sun, 06 Sep 2020 02:59:31 +0800
Labels:
Annotations:
Status:                    Terminating (lasts 48s)
Termination Grace Period:  30s
IP:                        10.244.1.11
IPs:
  IP:  10.244.1.11
Containers:
  nginx:
    Container ID:   docker://cf21ee868641ba2da52321e16fe7e43a0aca61b7ebcb0c4a4d62ecb4a3f9787a
    Image:          nginx
    Image ID:       docker-pullable://nginx@sha256:b0ad43f7ee5edbc0effbc14645ae7055e21bc1973aee5150745632a24a752661
    Port:
    Host Port:
    State:          Running
      Started:      Sun, 06 Sep 2020 03:00:24 +0800
    Last State:     Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Sun, 06 Sep 2020 02:59:48 +0800
      Finished:     Sun, 06 Sep 2020 03:00:20 +0800
    Ready:          True
    Restart Count:  1
    Environment:
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-hdhjf (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-hdhjf:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-hdhjf
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason        Age                From             Message
  ----     ------        ----               ----             -------
  Normal   Scheduled     13m                                 Successfully assigned default/nginx to k8s02
  Normal   Pulled        13m                kubelet, k8s02   Successfully pulled image "nginx" in 16.098712681s
  Normal   Pulling       12m (x2 over 13m)  kubelet, k8s02   Pulling image "nginx"
  Normal   Created       12m (x2 over 13m)  kubelet, k8s02   Created container nginx
  Normal   Started       12m (x2 over 13m)  kubelet, k8s02   Started container nginx
  Normal   Pulled        12m                kubelet, k8s02   Successfully pulled image "nginx" in 3.162238195s
  Warning  NodeNotReady  6m23s              node-controller  Node is not ready
```

Now let's bring the node back:

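```shell
[root@k8s02 ~]# systemctl start kubelet
[root@k8s02 ~]# systemctl start kube-proxy
[root@k8s01 yaml]# kubectl get po -o wide
No resources found in default namespace.
```

So a node failure prevents the pod from recovering automatically: the pod was deleted, and even after the node recovered it did not come back, because nothing recreates a standalone pod. A bare pod's ability to recover from failures is clearly limited, and in practice this would cause many problems. How should we deal with it?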
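Why did the pod disappear? A minimal sketch, assuming the taint-based eviction used by v1.19 clusters: once the node controller marks a node not-ready or unreachable, it puts a NoExecute taint on the node, and a pod on that node is evicted after the tolerationSeconds of its matching toleration. The Tolerations field in the describe output above shows 300s for both taints. Matching here is simplified to key and effect (real tolerations also have an operator and value), and the names Toleration, eviction_delay and is_evicted are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Toleration:
    key: str
    effect: str
    seconds: Optional[int]  # tolerationSeconds; None means "tolerate forever"


# The two tolerations listed under "Tolerations:" in the describe output above.
DEFAULT_TOLERATIONS = [
    Toleration("node.kubernetes.io/not-ready", "NoExecute", 300),
    Toleration("node.kubernetes.io/unreachable", "NoExecute", 300),
]


def eviction_delay(taint_key: str, tolerations: List[Toleration]) -> Optional[int]:
    """Seconds a pod may stay on a node after a NoExecute taint appears.

    0    -> no matching toleration: evict right away
    None -> only unlimited matching tolerations: never evicted
    """
    matching = [t for t in tolerations if t.key == taint_key and t.effect == "NoExecute"]
    if not matching:
        return 0
    limits = [t.seconds for t in matching if t.seconds is not None]
    return min(limits) if limits else None   # the shortest finite limit wins


def is_evicted(seconds_tainted: int, taint_key: str, tolerations: List[Toleration]) -> bool:
    delay = eviction_delay(taint_key, tolerations)
    return delay is not None and seconds_tainted >= delay


if __name__ == "__main__":
    for t in (60, 299, 300):
        print(t, is_evicted(t, "node.kubernetes.io/unreachable", DEFAULT_TOLERATIONS))
```

Eviction only deletes the pod object; recreating it elsewhere is the job of a controller, which a bare pod does not have.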

3. Getting to Know the Deployment

3.1 Automatic Failover with a Deployment

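To handle pod failover, we need another key Kubernetes resource: the Deployment. The Deployment is a very powerful controller provided by Kubernetes for managing stateless applications. With it we can schedule pods, perform rolling updates, scale pods out and in, and more. How do we create a Deployment? Let's start with the simplest possible one: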

```yaml
apiVersion: apps/v1      # API group and version
kind: Deployment         # resource kind is Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1            # one replica
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx     # image is nginx
        name: nginx
```

Create this resource:

```shell
[root@k8s01 yaml]# kubectl apply -f nginx-deployment.yaml
[root@k8s01 yaml]# kubectl get po -o wide
NAME                     READY   STATUS    RESTARTS   AGE    IP            NODE    NOMINATED NODE   READINESS GATES
nginx-6799fc88d8-9lsjl   1/1     Running   0          109s   10.244.0.15   k8s01
```

The pod was automatically scheduled onto node k8s01. What happens if we again simulate a kubelet failure on that node?

```shell
[root@k8s01 yaml]# systemctl stop kubelet
[root@k8s01 yaml]# systemctl stop kube-proxy
[root@k8s01 yaml]# ps -ef | grep nginx
root      70693  70678  0 03:36 ?        00:00:00 nginx: master process nginx -g daemon off;
101       70732  70693  0 03:36 ?        00:00:00 nginx: worker process
root      71641  50125  0 03:39 pts/0    00:00:00 grep --color=auto nginx
[root@k8s01 yaml]# kill 70693
```

Now let's observe node k8s01:

```shell
[root@k8s01 yaml]# kubectl get node
NAME    STATUS     ROLES   AGE   VERSION
k8s01   NotReady           9d    v1.19.0
k8s02   Ready              9d    v1.19.0
k8s03   Ready              9d    v1.19.0
[root@k8s01 yaml]# kubectl describe pod nginx-6799fc88d8-9lsjl
Name:           nginx-6799fc88d8-9lsjl
Namespace:      default
Priority:       0
Node:           k8s01/192.168.1.31
Start Time:     Sun, 06 Sep 2020 03:36:23 +0800
Labels:         app=nginx
                pod-template-hash=6799fc88d8
Annotations:
Status:         Running
IP:             10.244.0.15
IPs:
  IP:           10.244.0.15
Controlled By:  ReplicaSet/nginx-6799fc88d8
Containers:
  nginx:
    Container ID:   docker://f86cb1313c120b7797ac843a17f23a3551de7e868cbfe8fd24ade70de1ede843
    Image:          nginx
    Image ID:       docker-pullable://nginx@sha256:b0ad43f7ee5edbc0effbc14645ae7055e21bc1973aee5150745632a24a752661
    Port:
    Host Port:
    State:          Running
      Started:      Sun, 06 Sep 2020 03:36:26 +0800
    Ready:          True
    Restart Count:  0
    Environment:
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-hdhjf (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-hdhjf:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-hdhjf
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason        Age    From             Message
  ----     ------        ----   ----             -------
  Normal   Scheduled     4m42s                   Successfully assigned default/nginx-6799fc88d8-9lsjl to k8s01
  Normal   Pulling       4m42s  kubelet, k8s01   Pulling image "nginx"
  Normal   Pulled        4m40s  kubelet, k8s01   Successfully pulled image "nginx" in 2.073509979s
  Normal   Created       4m40s  kubelet, k8s01   Created container nginx
  Normal   Started       4m40s  kubelet, k8s01   Started container nginx
  Warning  NodeNotReady  68s    node-controller  Node is not ready
[root@k8s01 yaml]# kubectl get po -o wide
NAME                     READY   STATUS              RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx-6799fc88d8-9lsjl   1/1     Terminating         1          13m   10.244.0.15   k8s01
nginx-6799fc88d8-dvcj7   0/1     ContainerCreating   0          3s                  k8s02
```

To our pleasant surprise, after about 5 minutes the pod on k8s01 was marked for deletion (Terminating), and a new pod was scheduled onto k8s02 and started: the pod failed over. The 5 minutes come from the 300s tolerations for the not-ready/unreachable NoExecute taints listed under Tolerations; on clusters without taint-based eviction, the controller manager's pod-eviction-timeout (default 5m0s) plays this role. The replacement is created by the ReplicaSet the Deployment manages (Controlled By: ReplicaSet/nginx-6799fc88d8), because the number of live pods dropped below the desired replica count. In production, though, a five-minute outage is certainly unacceptable. What other strategies do we have?
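The failover above is the result of a control loop: the ReplicaSet keeps comparing the desired replica count with the pods that are still alive, and creates or deletes pods to close the gap. A minimal sketch of one pass of that loop, assuming a simplified model (the Pod class and reconcile function are hypothetical; placing new pods on nodes is left to the scheduler and not modeled):

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Pod:
    name: str
    node: str
    terminating: bool = False  # marked for deletion, e.g. after eviction


def reconcile(desired: int, pods: List[Pod]) -> Tuple[int, List[str]]:
    """One pass of a simplified ReplicaSet loop: (pods to create, pod names to delete)."""
    active = [p for p in pods if not p.terminating]   # terminating pods no longer count
    if len(active) < desired:
        return desired - len(active), []
    # Too many pods: the real controller ranks pods before choosing which to delete;
    # this sketch simply deletes from the end of the list.
    return 0, [p.name for p in active[desired:]]


if __name__ == "__main__":
    # The scenario above: replicas=1 and the only pod is Terminating on k8s01.
    evicted = Pod("nginx-6799fc88d8-9lsjl", "k8s01", terminating=True)
    print(reconcile(1, [evicted]))   # one replacement pod must be created
```

The same loop explains scaling: raising replicas from 1 to 3 with one live pod yields two pods to create.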

3.2 Multiple Pod Replicas with a Deployment

Let's revisit the Deployment's YAML file. It contains a field for the replica count. What happens if we change it?

```shell
[root@k8s01 yaml]# vim nginx-deployment.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
```

```shell
[root@k8s01 yaml]# kubectl apply -f nginx-deployment.yaml
deployment.apps/nginx configured
[root@k8s01 yaml]# kubectl get pod -o wide
NAME                     READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES
nginx-6799fc88d8-dvcj7   1/1     Running   0          7m55s   10.244.1.13   k8s02
nginx-6799fc88d8-j9l4v   1/1     Running   0          22s     10.244.0.16   k8s01
nginx-6799fc88d8-v48rj   1/1     Running   0          22s     10.244.2.15   k8s03
```

To our pleasant surprise, the number of pod replicas went from 1 to 3. How does this work?

```shell
[root@k8s01 yaml]# kubectl describe deployment nginx
Name:                   nginx
Namespace:              default
CreationTimestamp:      Sun, 06 Sep 2020 03:36:23 +0800
Labels:                 app=nginx
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=nginx
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=nginx
  Containers:
   nginx:
    Image:        nginx
    Port:
    Host Port:
    Environment:
    Mounts:
  Volumes:
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Progressing    True    NewReplicaSetAvailable
  Available      True    MinimumReplicasAvailable
OldReplicaSets:
NewReplicaSet:   nginx-6799fc88d8 (3/3 replicas created)
Events:
  Type    Reason             Age  From                   Message
  ----    ------             ---- ----                   -------
  Normal  ScalingReplicaSet  21m  deployment-controller  Scaled up replica set nginx-6799fc88d8 to 1
  Normal  ScalingReplicaSet  77s  deployment-controller  Scaled up replica set nginx-6799fc88d8 to 3
```

The Deployment's events show that deployment-controller scaled the ReplicaSet nginx-6799fc88d8 up to 3. The ReplicaSet is itself a Kubernetes controller: it creates pods from the pod template until the number of pods matches the desired replica count.
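How does a ReplicaSet know which pods belong to it? Through labels. The pod described in section 3.1 carries app=nginx and pod-template-hash=6799fc88d8. The Deployment's selector is app=nginx, and the ReplicaSets it creates add the pod-template-hash label to their own selectors, so two ReplicaSets of the same Deployment (for example, old and new during an update) do not claim each other's pods. A minimal sketch of matchLabels semantics, ignoring matchExpressions; the function name matches and the second hash value are hypothetical:

```python
from typing import Dict


def matches(match_labels: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """matchLabels semantics: every key/value pair must be present on the pod."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


# Labels taken from the describe output of pod nginx-6799fc88d8-9lsjl.
pod_labels = {"app": "nginx", "pod-template-hash": "6799fc88d8"}

deployment_selector = {"app": "nginx"}
replicaset_selector = {"app": "nginx", "pod-template-hash": "6799fc88d8"}
# Hypothetical selector of a ReplicaSet built from a different pod template.
other_replicaset_selector = {"app": "nginx", "pod-template-hash": "hypothetical-hash"}

if __name__ == "__main__":
    print(matches(deployment_selector, pod_labels))        # selected by the Deployment
    print(matches(replicaset_selector, pod_labels))        # owned by this ReplicaSet
    print(matches(other_replicaset_selector, pod_labels))  # not claimed by the other one
```

Extra labels on the pod never prevent a match; only missing or different values do.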

3.3 Rolling Updates with a Deployment

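Beyond this, the Deployment, as a core resource, can also perform rolling updates and control how fast they proceed, mainly through the following parameters: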

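- maxSurge: the maximum number of pods that may exist above the desired replica count set in the Deployment.
- maxUnavailable: the maximum number of pods that may be unavailable during the rolling update.

Both accept an absolute number or a percentage of the desired replicas, and both default to 25%, as the describe output above shows (25% max unavailable, 25% max surge). Next, let's simulate an upgrade. First, create a YAML file for the upgrade.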
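Before writing that file, it helps to see how the two parameters turn into pod counts. A minimal sketch, based on the documented rounding rules (a percentage maxSurge rounds up, a percentage maxUnavailable rounds down); the fallback of one unavailable pod when both resolve to 0 reflects our understanding of the Deployment controller, and the function names are hypothetical:

```python
from typing import Tuple, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Turn an absolute count or a percentage such as "25%" into a pod count."""
    if isinstance(value, int):
        return value
    scaled = replicas * int(value.rstrip("%"))
    # Integer ceiling or floor of scaled / 100, avoiding float rounding issues.
    return -(-scaled // 100) if round_up else scaled // 100


def rolling_bounds(replicas: int, max_surge: Union[int, str] = "25%",
                   max_unavailable: Union[int, str] = "25%") -> Tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        unavailable = 1  # otherwise the update could never make progress
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_bounds(3))          # defaults with 3 replicas
    print(rolling_bounds(8, 2, 0))    # the settings used in the upgrade below
```

With 8 replicas, maxSurge: 2 and maxUnavailable: 0, at most 10 pods exist and at least 8 stay available, which is why the in-progress listing below shows 8 old pods still Running next to 2 new ones being created.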

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 2
      maxUnavailable: 0
  selector:
    matchLabels:
      app: nginx
  replicas: 8
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:1.12.1
        name: nginx
```

Now start the upgrade:

```shell
# First scale to 8 replicas so that the rolling update is easier to observe
[root@k8s01 yaml]# kubectl scale deployment nginx --replicas=8
[root@k8s01 yaml]# kubectl get pod -o wide
NAME                     READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES
nginx-6799fc88d8-72kkv   1/1     Running   0          4m41s   10.244.1.14   k8s02
nginx-6799fc88d8-7tl5d   1/1     Running   0          4m41s   10.244.1.15   k8s02
nginx-6799fc88d8-dvcj7   1/1     Running   0          29m     10.244.1.13   k8s02
nginx-6799fc88d8-j9l4v   1/1     Running   0          22m     10.244.0.16   k8s01
nginx-6799fc88d8-jhwt6   1/1     Running   0          4m41s   10.244.0.17   k8s01
nginx-6799fc88d8-m4wxm   1/1     Running   0          4m41s   10.244.2.16   k8s03
nginx-6799fc88d8-mg6jl   1/1     Running   0          4m41s   10.244.0.18   k8s01
nginx-6799fc88d8-v48rj   1/1     Running   0          22m     10.244.2.15   k8s03
# Run the upgrade
[root@k8s01 yaml]# kubectl apply -f nginx-deployment-update.yaml
deployment.apps/nginx configured
# Rolling update in progress
[root@k8s01 yaml]# kubectl get pod -o wide
NAME                     READY   STATUS              RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx-599c4c9ccc-4z7nn   0/1     ContainerCreating   0          15s                 k8s02
nginx-599c4c9ccc-kbr6v   0/1     ContainerCreating   0          15s                 k8s01
nginx-6799fc88d8-72kkv   1/1     Running             0          10m   10.244.1.14   k8s02
nginx-6799fc88d8-7tl5d   1/1     Running             0          10m   10.244.1.15   k8s02
nginx-6799fc88d8-dvcj7   1/1     Running             0          35m   10.244.1.13   k8s02
nginx-6799fc88d8-j9l4v   1/1     Running             0          28m   10.244.0.16   k8s01
nginx-6799fc88d8-jhwt6   1/1     Running             0          10m   10.244.0.17   k8s01
nginx-6799fc88d8-m4wxm   1/1     Running             0          10m   10.244.2.16   k8s03
nginx-6799fc88d8-mg6jl   1/1     Running             0          10m   10.244.0.18   k8s01
nginx-6799fc88d8-v48rj   1/1     Running             0          28m   10.244.2.15   k8s03
# Rolling update finished
[root@k8s01 yaml]# kubectl get pod -o wide
NAME                     READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES
nginx-599c4c9ccc-2f4fc   1/1     Running   0          2m15s   10.244.2.17   k8s03
nginx-599c4c9ccc-4cckr   1/1     Running   0          46s     10.244.0.20   k8s01
nginx-599c4c9ccc-4vh5f   1/1     Running   0          32s     10.244.1.18   k8s02
nginx-599c4c9ccc-4z7nn   1/1     Running   0          4m4s    10.244.1.16   k8s02
nginx-599c4c9ccc-87hf7   1/1     Running   0          28s     10.244.0.21   k8s01
nginx-599c4c9ccc-kbr6v   1/1     Running   0          4m4s    10.244.0.19   k8s01
nginx-599c4c9ccc-mk6c2   1/1     Running   0          74s     10.244.1.17   k8s02
nginx-599c4c9ccc-q4wtg   1/1     Running   0          41s     10.244.2.18   k8s03
```

The rolling update of nginx has finished. Next, let's look at the Deployment's events:

```shell
[root@k8s01 yaml]# kubectl describe deployment nginx
Name:                   nginx
Namespace:              default
CreationTimestamp:      Sun, 06 Sep 2020 03:36:23 +0800
Labels:                 app=nginx
Annotations:            deployment.kubernetes.io/revision: 2
Selector:               app=nginx
Replicas:               8 desired | 8 updated | 8 total | 8 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  0 max unavailable, 2 max surge
Pod Template:
  Labels:  app=nginx
  Containers:
   nginx:
    Image:        nginx:1.12.1
    Port:
    Host Port:
    Environment:
    Mounts:
  Volumes:
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:
NewReplicaSet:   nginx-599c4c9ccc (8/8 replicas created)
Events:
  Type    Reason             Age                From                   Message
  ----    ------             ----               ----                   -------
  Normal  ScalingReplicaSet  53m                deployment-controller  Scaled up replica set nginx-6799fc88d8 to 1
  Normal  ScalingReplicaSet  32m                deployment-controller  Scaled up replica set nginx-6799fc88d8 to 3
  Normal  ScalingReplicaSet  15m                deployment-controller  Scaled up replica set nginx-6799fc88d8 to 8
  Normal  ScalingReplicaSet  5m                 deployment-controller  Scaled up replica set nginx-599c4c9ccc to 2
  Normal  ScalingReplicaSet  3m11s              deployment-controller  Scaled down replica set nginx-6799fc88d8 to 7
  Normal  ScalingReplicaSet  3m11s              deployment-controller  Scaled up replica set nginx-599c4c9ccc to 3
  Normal  ScalingReplicaSet  2m10s              deployment-controller  Scaled up replica set nginx-599c4c9ccc to 4
  Normal  ScalingReplicaSet  2m10s              deployment-controller  Scaled down replica set nginx-6799fc88d8 to 6
  Normal  ScalingReplicaSet  102s               deployment-controller  Scaled down replica set nginx-6799fc88d8 to 5
  Normal  ScalingReplicaSet  102s               deployment-controller  Scaled up replica set nginx-599c4c9ccc to 5
  Normal  ScalingReplicaSet  97s                deployment-controller  Scaled down replica set nginx-6799fc88d8 to 4
  Normal  ScalingReplicaSet  97s                deployment-controller  Scaled up replica set nginx-599c4c9ccc to 6
  Normal  ScalingReplicaSet  65s (x6 over 88s)  deployment-controller  (combined from similar events): Scaled down replica set nginx-6799fc88d8 to 0
```

Through deployment-controller, Kubernetes first scaled the new ReplicaSet nginx-599c4c9ccc up to 2. Once those pods were up, it scaled the old ReplicaSet nginx-6799fc88d8 down to 7 (the exact interleaving depends on how fast the pods start), and continued in this pattern until nginx-599c4c9ccc reached 8 and nginx-6799fc88d8 reached 0, completing the rolling update.
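The sequence of events can be approximated with a small simulation. This is a simplified sketch, not the controller's algorithm: it assumes new pods become Ready instantly, so it moves in whole batches, whereas the real events above interleave one pod at a time as pods start. The function name simulate_rollout is hypothetical.

```python
from typing import List, Tuple


def simulate_rollout(replicas: int, max_surge: int,
                     max_unavailable: int) -> List[Tuple[int, int]]:
    """Return (old, new) replica counts step by step, assuming new pods are Ready at once."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    max_total = replicas + max_surge           # never run more pods than this
    min_available = replicas - max_unavailable # never drop below this many available pods
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale the new ReplicaSet up as far as the surge limit allows.
        new = min(replicas, new + max(0, max_total - (old + new)))
        steps.append((old, new))
        # Scale the old ReplicaSet down without dropping below min_available.
        old = max(0, old - max(0, old + new - min_available))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in simulate_rollout(8, 2, 0):
        print(f"old={old} new={new} total={old + new}")
```

With the settings used here, the total never exceeds 10 pods and never falls below 8, and the run ends with 0 old and 8 new pods, matching the final state above.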

In addition, a Deployment can roll back to a previous version:

```shell
[root@k8s01 yaml]# kubectl rollout history deployment nginx
deployment.apps/nginx
REVISION  CHANGE-CAUSE
1
2

[root@k8s01 yaml]# kubectl rollout undo deployment nginx
deployment.apps/nginx rolled back
[root@k8s01 yaml]# kubectl get pod -o wide
NAME                     READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx-6799fc88d8-4wn62   1/1     Running   0          79s   10.244.1.19   k8s02
nginx-6799fc88d8-5rz78   1/1     Running   0          47s   10.244.0.24   k8s01
nginx-6799fc88d8-ckdfx   1/1     Running   0          60s   10.244.2.19   k8s03
nginx-6799fc88d8-f6dr7   1/1     Running   0          51s   10.244.1.21   k8s02
nginx-6799fc88d8-ghhp2   1/1     Running   0          55s   10.244.2.20   k8s03
nginx-6799fc88d8-msl22   1/1     Running   0          55s   10.244.0.23   k8s01
nginx-6799fc88d8-qmcxq   1/1     Running   0          79s   10.244.0.22   k8s01
nginx-6799fc88d8-wvmw9   1/1     Running   0          60s   10.244.1.20   k8s02
```

The Deployment has rolled back to the previous version. Let's look at its events:

```shell
[root@k8s01 yaml]# kubectl describe deployment nginx
Name:                   nginx
Namespace:              default
CreationTimestamp:      Sun, 06 Sep 2020 03:36:23 +0800
Labels:                 app=nginx
Annotations:            deployment.kubernetes.io/revision: 3
Selector:               app=nginx
Replicas:               8 desired | 8 updated | 8 total | 8 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  0 max unavailable, 2 max surge
Pod Template:
  Labels:  app=nginx
  Containers:
   nginx:
    Image:        nginx
    Port:
    Host Port:
    Environment:
    Mounts:
  Volumes:
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:
NewReplicaSet:   nginx-6799fc88d8 (8/8 replicas created)
Events:
  Type    Reason             Age                 From                   Message
  ----    ------             ----                ----                   -------
  Normal  ScalingReplicaSet  41m                 deployment-controller  Scaled up replica set nginx-6799fc88d8 to 8
  Normal  ScalingReplicaSet  31m                 deployment-controller  Scaled up replica set nginx-599c4c9ccc to 2
  Normal  ScalingReplicaSet  29m                 deployment-controller  Scaled down replica set nginx-6799fc88d8 to 7
  Normal  ScalingReplicaSet  29m                 deployment-controller  Scaled up replica set nginx-599c4c9ccc to 3
  Normal  ScalingReplicaSet  28m                 deployment-controller  Scaled up replica set nginx-599c4c9ccc to 4
  Normal  ScalingReplicaSet  28m                 deployment-controller  Scaled down replica set nginx-6799fc88d8 to 6
  Normal  ScalingReplicaSet  27m                 deployment-controller  Scaled down replica set nginx-6799fc88d8 to 5
  Normal  ScalingReplicaSet  27m                 deployment-controller  Scaled up replica set nginx-599c4c9ccc to 5
  Normal  ScalingReplicaSet  27m                 deployment-controller  Scaled down replica set nginx-6799fc88d8 to 4
  Normal  ScalingReplicaSet  27m                 deployment-controller  Scaled up replica set nginx-599c4c9ccc to 6
  Normal  ScalingReplicaSet  118s                deployment-controller  Scaled up replica set nginx-6799fc88d8 to 2
  Normal  ScalingReplicaSet  99s                 deployment-controller  Scaled down replica set nginx-599c4c9ccc to 6
  Normal  ScalingReplicaSet  99s                 deployment-controller  Scaled up replica set nginx-6799fc88d8 to 4
  Normal  ScalingReplicaSet  99s                 deployment-controller  Scaled down replica set nginx-599c4c9ccc to 7
  Normal  ScalingReplicaSet  99s (x2 over 58m)   deployment-controller  Scaled up replica set nginx-6799fc88d8 to 3
  Normal  ScalingReplicaSet  94s                 deployment-controller  Scaled down replica set nginx-599c4c9ccc to 5
  Normal  ScalingReplicaSet  94s                 deployment-controller  Scaled up replica set nginx-6799fc88d8 to 5
  Normal  ScalingReplicaSet  94s                 deployment-controller  Scaled down replica set nginx-599c4c9ccc to 4
  Normal  ScalingReplicaSet  94s                 deployment-controller  Scaled up replica set nginx-6799fc88d8 to 6
  Normal  ScalingReplicaSet  73s (x12 over 27m)  deployment-controller  (combined from similar events): Scaled down replica set nginx-599c4c9ccc to 0
```

Using the same rolling strategy as the update, the Deployment rolled back to the previous version. Note that the revision annotation is now 3: the rollback is recorded as a new revision that reuses the old ReplicaSet nginx-6799fc88d8 and its image nginx.
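The revision bookkeeping behind this can be sketched as follows. This is a simplified model under our understanding of how rollbacks are recorded: no new ReplicaSet is created; the old template becomes current again and is relabeled with the next revision number, so its old number drops out of the history (kubectl rollout history would then list revisions 2 and 3). The function name rollout_undo is hypothetical, and templates are reduced to the image name; kubectl rollout undo also accepts --to-revision to pick a specific revision.

```python
from typing import Dict


def rollout_undo(history: Dict[int, str], to_revision: int = 0) -> Dict[int, str]:
    """Sketch of rollback bookkeeping. history maps revision -> pod template (image only)."""
    if not history:
        raise ValueError("empty history")
    revisions = sorted(history)
    current = revisions[-1]
    if to_revision == 0:
        if len(revisions) < 2:
            raise ValueError("no previous revision to roll back to")
        target = revisions[-2]                 # default: the previous revision
    else:
        target = to_revision
    if target not in history:
        raise ValueError(f"revision {target} not found")
    if target == current:
        return dict(history)                   # already on that template: nothing to do
    updated = {rev: tpl for rev, tpl in history.items() if rev != target}
    updated[current + 1] = history[target]     # the old template becomes the newest revision
    return updated


if __name__ == "__main__":
    # Revisions from this article: 1 = image nginx, 2 = image nginx:1.12.1.
    print(rollout_undo({1: "nginx", 2: "nginx:1.12.1"}))
```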

The Deployment is clearly a very important Kubernetes resource. Later articles will analyze it in more detail and look for best practices for this controller.
