Hadoop无HA环境搭建

标签(空格分隔): hadoop 大数据

准备Hadoop安装包(版本:hadoop-2.7.3)

下载地址 Apache Soft:http://archive.apache.org/dist/

官方文档:https://hadoop.apache.org/docs/r2.7.3/

#解压安装包并指定目录

tar -zxvf hadoop-2.7.3.tar.gz -C /opt/soft/hadoop

解压完成后需要到到hadoop目录下修改配置文件

#进入hadoop配置文件目录

cd /opt/soft/hadoop/etc/hadoop

如果没有其他特殊需求,我们只需要修改的几个文件分别是core-site.xml,hdfs-site.xml,mapred-site.xml.template,yarn-site.xml,slaves也可以根据自己的需求进行配置

由于我们是单机版的hadoop,所以在slaves文件中只需要添加我们当前主机的主机名称

core-site.xml:

<property>

<name>hadoop.tmp.dir</name>

<value>file:/hadoop/data/hdfs/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://192.168.1.101:9000</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

hdfs-site.xml添加以下内容:

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/hadoop/data/hdfs/name</value>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/hadoop/data/hdfs/data</value>

<final>true</final>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>192.168.1.101:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

需要将mapred-site.xml.template模板改名为mapred-site.xml并进行配置

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

yarn-site.xml添加以下内容:

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>standlone</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>standlone:8088</value>

</property>

<!-- 下面这两个参数,是由于当时出现任务提交到yarn上之后,出现内存使用超限的情况,作为可选项配置 -->

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

<description>Whether virtual memory limits will be enforced for containers</description>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>4</value>

<description>Ratio between virtual memory to physical memory when setting memory limits for containers</description>

NOTE : hadoop2.7.3需要JDK1.7以上版本,JDK版本低于1.7就会报错。【java.lang.UnsupportedClassVersionError】版本不一致出错。

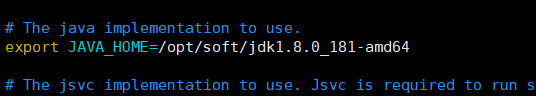

再将hadoop默认的JAVA环境更改成我们服务器的实际jdk的安装目录,在hadoop-env.sh文件中

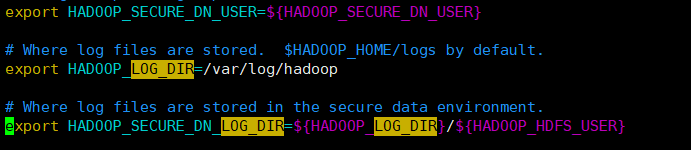

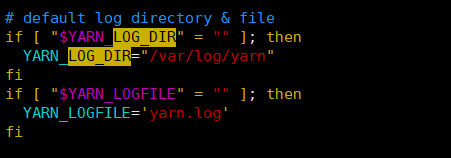

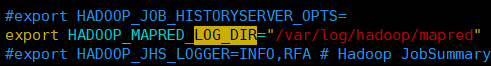

hadoop默认的日志文件会产生在安装目录下的logs文件夹,如果我们想要自定义的日志目录,需要更改服务的env文件,yarn-env.sh,hadoop-env.sh,mapred-env.sh

更改每个文件中的LOG_DIR目录

hadoop-env.sh文件:

yarn-env.sh文件:

mapred-env.sh文件:

配置完成后,将hadoop添加到环境变量中

echo "

export HADOOP_HOME=/opt/soft/hadoop-2.7.3

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

" >> ~/.bashrc

# 生效环境变量

source ~/.bashrc

我们首次启动前需要对hdfs文件系统进行格式化,执行以下命令:

hadoop namenode -format

格式化完成,我们可以启动hdfs服务

[root@standlone bin]# start-dfs.sh

Starting namenodes on [standlone]

standlone: starting namenode, logging to /var/log/hadoop/hadoop-root-namenode-standlone.out

standlone: starting datanode, logging to /var/log/hadoop/hadoop-root-datanode-standlone.out

Starting secondary namenodes [standlone]

standlone: starting secondarynamenode, logging to /var/log/hadoop/hadoop-root-secondarynamenode-standlone.out

### 查看是否启动hdfs进程

[root@standlone bin]# jps

2929 SecondaryNameNode

3128 Jps

2748 DataNode

2367 QuorumPeerMain

2623 NameNode

[root@standlone bin]#

启动完成我们可以到namenode的WebUI上查看hdfs状态

查看datanode

服务器执行hdfs dfs -ls /命令查看目录,(我这里是之前已经创建完成了,所以是存在一些目录)

[root@standlone bin]# hdfs dfs -ls /

Found 4 items

drwxr-xr-x - root supergroup 0 2019-12-03 16:42 /spark

drwxr-xr-x - root supergroup 0 2020-04-21 10:38 /sqoop

drwx-wx-wx - root supergroup 0 2020-04-10 17:50 /tmp

drwxr-xr-x - root supergroup 0 2020-04-04 22:00 /user

[root@standlone bin]#

现在我们的HDFS已经启动完成,我们在启动yarn来主测试M/R的WordCount任务

启动yarn,启动时如果出现异常可以到yarn的日志中进行查看,执行启动命令后,无异常,通过jps查看进程是否正常启动:

[root@standlone bin]# start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /var/log/yarn/yarn-root-resourcemanager-standlone.out

standlone: starting nodemanager, logging to /var/log/yarn/yarn-root-nodemanager-standlone.out

[root@standlone bin]# jps -ml | grep yarn

3372 org.apache.hadoop.yarn.server.nodemanager.NodeManager

3261 org.apache.hadoop.yarn.server.resourcemanager.ResourceManager

访问YARNWebUI界面,可以查看yarn的相关信息:

到目前为止我们的hdfs和yarn服务已经启动完成,可以执行M/R任务来测试是否能够正常执行任务

hadoop安装包中有WordCount的样例jar包,我们可以通过官方提供的examples进行测试,测试的jar包在hadoop安装目录下的 share/hadoop/mapreduce/中,包名为hadoop-mapreduce-examples-2.7.3.jar

在执行任务前,我们需要准备好wordcount所需的文件,从本地上传到hdfs上,任意文本

[root@standlone ~]# hdfs dfs -put anaconda-ks.cfg /tmp/

[root@standlone mapreduce]# hadoop jar hadoop-mapreduce-examples-2.7.3.jar wordcount /tmp/anaconda-ks.cfg /output

20/05/29 14:59:44 INFO client.RMProxy: Connecting to ResourceManager at standlone/192.168.1.101:8032

20/05/29 14:59:46 INFO input.FileInputFormat: Total input paths to process : 1

20/05/29 14:59:46 INFO mapreduce.JobSubmitter: number of splits:1

20/05/29 14:59:46 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1590735186042_0001

20/05/29 14:59:50 INFO impl.YarnClientImpl: Submitted application application_1590735186042_0001

20/05/29 14:59:50 INFO mapreduce.Job: The url to track the job: http://standlone:8088/proxy/application_1590735186042_0001/

20/05/29 14:59:50 INFO mapreduce.Job: Running job: job_1590735186042_0001

20/05/29 15:00:12 INFO mapreduce.Job: Job job_1590735186042_0001 running in uber mode : false

20/05/29 15:00:12 INFO mapreduce.Job: map 0% reduce 0%

20/05/29 15:00:24 INFO mapreduce.Job: map 100% reduce 0%

20/05/29 15:00:29 INFO mapreduce.Job: map 100% reduce 100%

20/05/29 15:00:29 INFO mapreduce.Job: Job job_1590735186042_0001 completed successfully

20/05/29 15:00:29 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=1413

FILE: Number of bytes written=240427

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1193

HDFS: Number of bytes written=1100

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=9260

Total time spent by all reduces in occupied slots (ms)=2220

Total time spent by all map tasks (ms)=9260

Total time spent by all reduce tasks (ms)=2220

Total vcore-milliseconds taken by all map tasks=9260

Total vcore-milliseconds taken by all reduce tasks=2220

Total megabyte-milliseconds taken by all map tasks=9482240

Total megabyte-milliseconds taken by all reduce tasks=2273280

Map-Reduce Framework

Map input records=40

Map output records=101

Map output bytes=1479

Map output materialized bytes=1413

Input split bytes=110

Combine input records=101

Combine output records=77

Reduce input groups=77

Reduce shuffle bytes=1413

Reduce input records=77

Reduce output records=77

Spilled Records=154

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=1087

CPU time spent (ms)=3240

Physical memory (bytes) snapshot=440590336

Virtual memory (bytes) snapshot=4261498880

Total committed heap usage (bytes)=327155712

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1083

File Output Format Counters

Bytes Written=1100

在执行M/R任务期间,我们可以通过Yarn的8088界面进行查看任务状态,也可以在后台执行shell命令进行查看

[root@standlone hadoop]# yarn application --list

20/05/29 15:00:07 INFO client.RMProxy: Connecting to ResourceManager at standlone/192.168.1.101:8032

Total number of applications (application-types: [] and states: [SUBMITTED, ACCEPTED, RUNNING]):1

Application-Id Application-Name Application-Type User Queue State Final-State Progress Tracking-URL

application_1590735186042_0001 word count MAPREDUCE root root.root ACCEPTED UNDEFINED 0% N/A

到这里,简单的hdfs、yarn搭建完成。

文章中如果有不对的地方,欢迎大家在评论区留言指正,有什么自己的技术分享也可以再评论区交流 😃 😃

675

675

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?