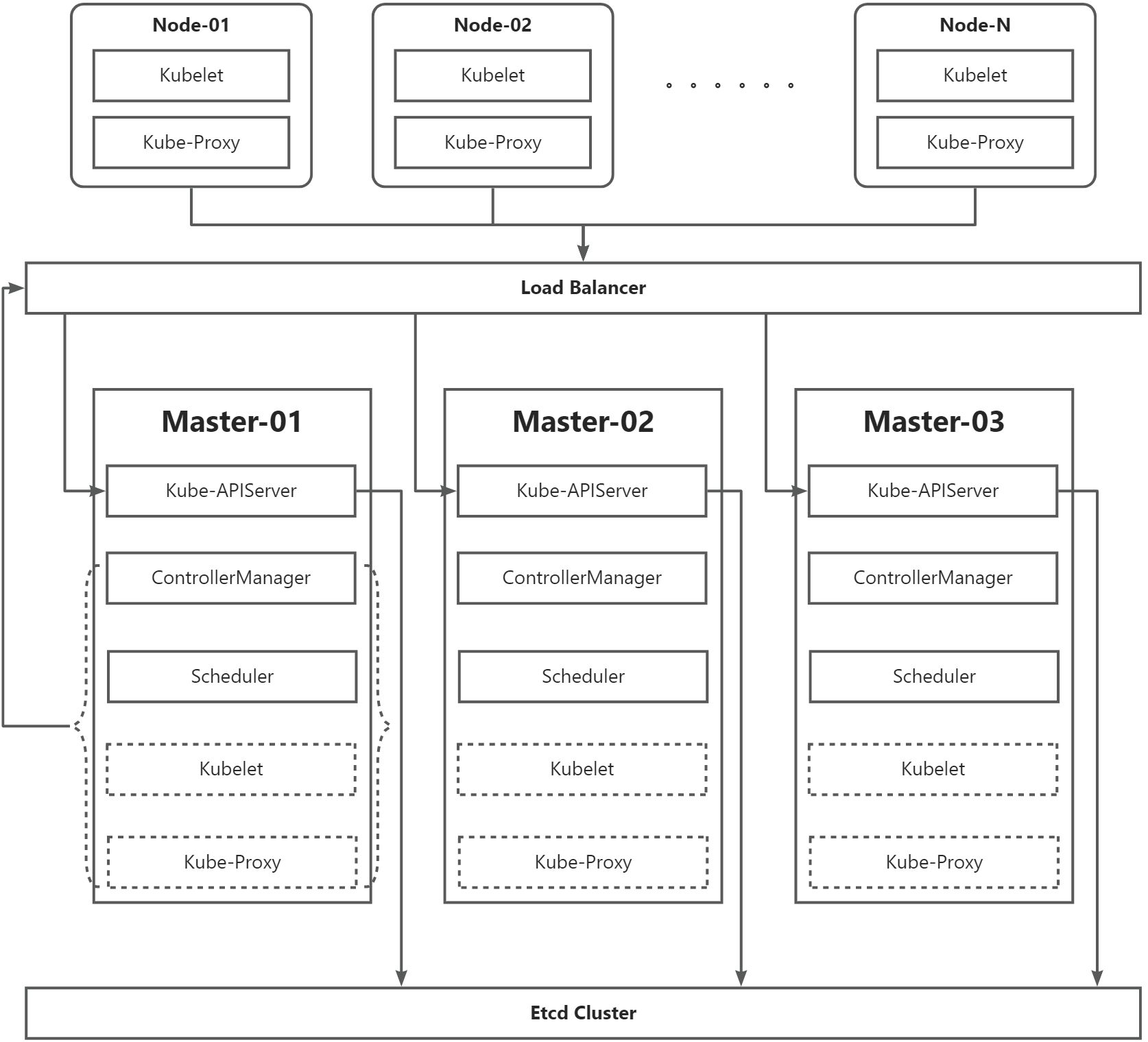

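Architecture overview

High-availability architecture diagram

Component roles

- Etcd Cluster: etcd is a key-value database that stores all Kubernetes data, such as the resources you create and the changes made to them.
- Master: a Kubernetes control-plane node, which manages the cluster.
- Node: a Kubernetes worker node, which runs the Pods deployed to the cluster.
- Kube-APIServer: the control-plane component that serves the Kubernetes API; every request to the cluster (from kubectl, kubelets and the other components) goes through kube-apiserver.
- ControllerManager: the control-plane component that runs the controllers, which watch the cluster state and drive it toward the desired state.
- Scheduler: the scheduling component, which decides which Node each Pod runs on.
- Load Balancer: the load balancer in front of the kube-apiserver instances on the master nodes, usually built from keepalived (virtual IP) plus haproxy (load balancing).
- Kubelet: the agent on every node, which makes sure the Pods assigned to that node keep running as specified.
- Kube-Proxy: the network proxy on every node, which maintains the Service forwarding rules (iptables or IPVS) that route traffic to Pods.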

Deploying the cluster

Host plan

| Hostname | IP address | Resources | Installed components | Role |
|---|---|---|---|---|
| k8s-master-01 | 192.168.23.139 | 2 vCPU, 4 GB RAM, 200 GB disk | haproxy + keepalived + etcd + Kubernetes master | Control-plane node |
| k8s-master-02 | 192.168.23.140 | 2 vCPU, 4 GB RAM, 200 GB disk | haproxy + keepalived + etcd + Kubernetes master | Control-plane node |
| k8s-master-03 | 192.168.23.141 | 2 vCPU, 4 GB RAM, 200 GB disk | haproxy + keepalived + etcd + Kubernetes master | Control-plane node |
| k8s-node-01 | 192.168.23.142 | 1 vCPU, 2 GB RAM, 200 GB disk | Kubernetes node | Worker node |
| k8s-node-02 | 192.168.23.143 | 1 vCPU, 2 GB RAM, 200 GB disk | Kubernetes node | Worker node |
| VIP | 192.168.23.100 | - | - | Cluster virtual IP |

Software plan

| Software | Version | Notes |
|---|---|---|
| Linux | CentOS Linux release 7.9.2009 (Core) | CentOS 8 is recommended |
| Kubernetes | 1.20.9 | |
| Kernel | 6.9.7-1.el7.elrepo.x86_64 | |
| Docker | 26.1.4 | |
| kubectl | 1.20.9 | |
| kubeadm | 1.20.9 | |
| kubelet | 1.20.9 | |
| haproxy | 1.5.18 | |
| keepalived | 1.3.5 | |
| kube-apiserver | 1.20.9 | |
| kube-controller-manager | 1.20.9 | |
| kube-scheduler | 1.20.9 | |
| kube-proxy | 1.20.9 | |
| coredns | 1.7.0 | |
| etcd | 3.4.13-0 | |
| pause | 3.2 | |
| calico/cni | 3.20.6 | |
| calico/pod2daemon-flexvol | 3.20.6 | |
| calico/node | 3.20.6 | |
| calico/kube-controllers | 3.20.6 | |
| metrics-server | 0.6.0 | |
| dashboard | 2.4.0 | |

Base configuration

Configure package repositories

Run on all nodes.

- CentOS yum repositories (Aliyun mirror)

cat > /etc/yum.repos.d/CentOS-7-ali.repo << 'EOF'
# CentOS-Base.repo
#
# The mirror system uses the connecting IP address of the client and the
# update status of each mirror to pick mirrors that are updated to and
# geographically close to the client. You should use this for CentOS updates
# unless you are manually picking other mirrors.
#
# If the mirrorlist= does not work for you, as a fall back you can try the
# remarked out baseurl= line instead.
#
#

[base]
name=CentOS-$releasever - Base - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates
[updates]
name=CentOS-$releasever - Updates - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful
[extras]
name=CentOS-$releasever - Extras - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages
EOF

- Kubernetes repository (Aliyun mirror)

cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name = kubernetes
baseurl = https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled = 1
gpgcheck = 1
gpgkey = https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
EOF

- Verify the repositories (install a few common tools)

yum -y install vim wget telnet

Synchronize time with Aliyun NTP

Run on all nodes.

- Configure time synchronization

# Run on all nodes

yum -y install ntpdate chrony

sed -ri 's/^server/#server/g' /etc/chrony.conf

echo "server ntp.aliyun.com iburst" >> /etc/chrony.conf

systemctl start chronyd

systemctl enable chronyd

- Schedule a periodic sync

cat >> /var/spool/cron/root <<EOF
# sync the clock with ntp.aliyun.com every 10 minutes
*/10 * * * * /usr/sbin/ntpdate ntp.aliyun.com
EOF

- Verify time synchronization

chronyc sourcestats -v

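Check and upgrade the kernel

Run on all nodes.

This guide expects a stable Linux kernel of version 4.18 or later. If a node runs an older kernel, upgrade it to avoid problems.

- Check the kernel version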

uname -r
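The version check above can be scripted. Below is a minimal sketch that assumes bash and GNU `sort -V`, both of which ship with CentOS 7. `version_ge` is a hypothetical helper name and is not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit status 0) when kernel version $1 >= $2.
# Uses GNU `sort -V` (version sort) from coreutils.
version_ge() {
  # Drop the package suffix, e.g. "6.9.7-1.el7.elrepo.x86_64" -> "6.9.7"
  local have=${1%%-*}
  local want=${2%%-*}
  # The smaller version sorts first; if that is $want, then $have >= $want
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n1)" = "$want" ]
}

# Usage on a node:
#   if version_ge "$(uname -r)" 4.18; then echo "kernel OK"; else echo "upgrade needed"; fi
```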

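- Upgrade the kernel

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh https://elrepo.org/linux/elrepo/el7/x86_64/RPMS/elrepo-release-7.0-8.el7.elrepo.noarch.rpm

# Update everything except the kernel, then reboot
yum update -y --exclude='kernel*' && reboot

# After the reboot, install one of the following:
# kernel-ml (mainline; this guide uses 6.9.7)
yum --enablerepo=elrepo-kernel install kernel-ml-devel kernel-ml -y
# or kernel-lt (long-term support)
yum --enablerepo=elrepo-kernel install kernel-lt-devel kernel-lt -y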

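- Change the default boot kernel

# List the available kernels and their index numbers

awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

# Select the default kernel by its index (the newly installed kernel is normally 0)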

grub2-set-default 0

grub2-mkconfig -o /boot/grub2/grub.cfg

reboot

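- Notes:

  - awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg lists the available kernels and their index numbers.
  - grub2-set-default 0 sets the kernel at index 0 (the newly installed one) as the default boot kernel.
  - grub2-mkconfig -o /boot/grub2/grub.cfg regenerates the GRUB configuration file.
  - reboot restarts the server into the new kernel.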

Disable firewalld, dnsmasq, SELinux and swap

Run on all nodes.

- Disable firewalld

systemctl stop firewalld

systemctl disable firewalld

# firewalld is disabled in case its rules miss ports that the cluster needs

- Disable dnsmasq

systemctl stop dnsmasq

systemctl disable dnsmasq

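- Disable SELinux

setenforce 0

# /etc/sysconfig/selinux is a symlink that sed -i would replace with a plain file, so edit the real file
sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config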

- Disable swap

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

# kubelet refuses to start while swap is enabled (its failSwapOn setting defaults to true), so swap is turned off
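You can confirm that swap is really off by reading `/proc/swaps`, whose first line is a header. Below is a small sketch. `active_swap_count` is a hypothetical helper, and its file argument exists only so that it can be tried on a copy of the file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints how many swap areas are active, reading a
# /proc/swaps-style file (line 1 is a header, every further line is one area).
active_swap_count() {
  local swaps_file=${1:-/proc/swaps}
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file"
}

# Usage on a node:
#   [ "$(active_swap_count)" -eq 0 ] && echo "swap is off" || echo "swap is still on"
```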

Cluster tuning

Run on all nodes.

- /etc/security/limits.conf

cat >> /etc/security/limits.conf << EOF

* soft nproc 655350

* hard nproc 655350

* soft nofile 655350

* hard nofile 655350

EOF

# Raises the per-user limits on processes (nproc) and open files (nofile); the values should be greater than 65535

- /etc/modules-load.d/ipvs.conf

# Note: on kernel 4.19 and later, load nf_conntrack; on kernel 4.18 and earlier, load nf_conntrack_ipv4 instead

modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack

# Modules that systemd-modules-load should load at boot
cat > /etc/modules-load.d/ipvs.conf << EOF
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
EOF

systemctl enable --now systemd-modules-load

# Loads the IPVS and related kernel modules listed above at every boot
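The right conntrack module can be derived from the kernel release. Below is a sketch that follows the upstream rule in the note above: kernel 4.19 merged nf_conntrack_ipv4 into nf_conntrack. Distribution kernels may backport that change, so on a real node `modinfo nf_conntrack_ipv4` is the more reliable check. `conntrack_module` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the conntrack module name for a kernel release
# string, using the upstream rule (merged into nf_conntrack in 4.19).
conntrack_module() {
  local release=${1%%-*}        # "6.9.7-1.el7.elrepo.x86_64" -> "6.9.7"
  local major=${release%%.*}    # "6"
  local rest=${release#*.}      # "9.7"
  local minor=${rest%%.*}       # "9"
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Usage on a node:
#   modprobe -- "$(conntrack_module "$(uname -r)")"
```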

- /etc/sysctl.d/k8s.conf

cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.ip_forward = 1

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

# Pass bridged traffic through iptables/ip6tables/arptables (these keys require the br_netfilter module)

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

net.netfilter.nf_conntrack_max = 2310720

fs.inotify.max_user_watches=89100

fs.may_detach_mounts = 1

fs.file-max = 52706963

fs.nr_open = 52706963

vm.overcommit_memory=1

# Do not panic on out-of-memory; let the OOM killer handle it

vm.panic_on_oom=0

# Avoid swapping: swap is used only when the system is close to running out of memory

vm.swappiness=0

# TCP keepalive tuning for IPVS (keeps idle connections alive within the IPVS default TCP timeout of 900 s)

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_intvl = 30

net.ipv4.tcp_keepalive_probes = 10

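EOF

# Kernel parameter tuning. The net.bridge.* keys exist only once br_netfilter is loaded,
# so load it now and at every boot, then apply all sysctl files
modprobe br_netfilter
echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
sysctl --system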
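To check that a parameter from `/etc/sysctl.d/k8s.conf` is actually in effect, compare the configured value with the output of `sysctl -n`. Below is a sketch of a hypothetical `conf_get` helper that reads a sysctl.d-style file. It accepts both `key = value` and `key=value`, skips comments, and, like sysctl, lets the last occurrence win:

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the value set for KEY in a sysctl.d-style FILE.
conf_get() {
  local file=$1 key=$2
  awk -F'=' -v k="$key" '
    /^[ \t]*[#;]/ { next }                  # skip comment lines
    {
      name = $1
      gsub(/[ \t]/, "", name)               # trim spaces around the key
      if (name == k) {
        val = substr($0, index($0, "=") + 1) # text after the first "="
        gsub(/^[ \t]+|[ \t]+$/, "", val)
        hit = 1                             # later matches overwrite earlier ones
      }
    }
    END { if (hit) print val }
  ' "$file"
}

# Usage on a node:
#   [ "$(sysctl -n vm.swappiness)" = "$(conf_get /etc/sysctl.d/k8s.conf vm.swappiness)" ] && echo applied
```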

- /etc/security/limits.d/k8s.conf

cat > /etc/security/limits.d/k8s.conf <<EOF

* soft nproc 1048576

* hard nproc 1048576

* soft nofile 1048576

* hard nofile 1048576

root soft nproc 1048576

root hard nproc 1048576

root soft nofile 1048576

root hard nofile 1048576

EOF

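# Raises the process and open-file limits further; pam_limits reads limits.d after limits.conf, so these values take precedence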

- /etc/rsyslog.conf and /etc/systemd/system.conf

sed -ri 's/^\$ModLoad imjournal/#&/' /etc/rsyslog.conf

sed -ri 's/^\$IMJournalStateFile/#&/' /etc/rsyslog.conf

sed -ri 's/^#(DefaultLimitCORE)=/\1=100000/' /etc/systemd/system.conf

sed -ri 's/^#(DefaultLimitNOFILE)=/\1=100000/' /etc/systemd/system.conf

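# Stop rsyslog from reading the systemd journal (avoids duplicate logging and disk I/O) and raise systemd's default core-dump and open-file limits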

- /etc/ssh/sshd_config

sed -ri 's/^#(UseDNS )yes/\1no/' /etc/ssh/sshd_config

# Skip reverse DNS lookups to speed up SSH logins (takes effect after systemctl restart sshd)

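Configure hostnames and /etc/hosts

Run on all nodes.

- Set the hostname

Run the matching command on each node:

hostnamectl set-hostname k8s-master-01   # on k8s-master-01
hostnamectl set-hostname k8s-master-02   # on k8s-master-02
hostnamectl set-hostname k8s-master-03   # on k8s-master-03
hostnamectl set-hostname k8s-node-01     # on k8s-node-01
hostnamectl set-hostname k8s-node-02     # on k8s-node-02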

- Configure /etc/hosts

cat >> /etc/hosts << EOF

192.168.23.139 k8s-master-01

192.168.23.140 k8s-master-02

192.168.23.141 k8s-master-03

192.168.23.142 k8s-node-01

192.168.23.143 k8s-node-02

EOF
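`cat >> /etc/hosts` appends on every run, so repeating this step duplicates the entries. Below is a minimal idempotent alternative. It uses a hypothetical `add_host_entry` helper that appends an entry only when the host name is not already mapped on a non-comment line:

```shell
#!/usr/bin/env bash
# Hypothetical helper: appends "IP NAME" to a hosts file unless NAME is already mapped.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Look for NAME as a whole field (after the IP) on any non-comment line
  if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on every node:
#   for entry in 192.168.23.139=k8s-master-01 192.168.23.140=k8s-master-02 \
#                192.168.23.141=k8s-master-03 192.168.23.142=k8s-node-01 \
#                192.168.23.143=k8s-node-02; do
#     add_host_entry /etc/hosts "${entry%%=*}" "${entry#*=}"
#   done
```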

- Verify name resolution

ping -c 2 k8s-master-01
ping -c 2 k8s-master-02
ping -c 2 k8s-master-03
ping -c 2 k8s-node-01
ping -c 2 k8s-node-02

Base components

Run on all nodes.

Base component installation: IPVS modules

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack

Base component installation: Docker

- Install dependencies and add the Docker CE repository

yum install -y yum-utils

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

- Install Docker

yum -y install docker-ce docker-ce-cli containerd.io docker-compose-plugin

- Configure Docker

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": [

"https://11777p0c.mirror.aliyuncs.com",

"https://hub-mirror.c.163.com",

"https://mirror.baidubce.com",

"https://docker.m.daocloud.io",

"https://docker.1panel.live"

],

"dns": [

"8.8.8.8",

"114.114.114.114"

],

"log-driver": "json-file",

"log-opts": {

"max-file": "10",

"max-size": "100m"

},

"storage-driver": "overlay2",

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF
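Docker and kubelet must use the same cgroup driver, which is why `exec-opts` sets `native.cgroupdriver=systemd`. Below is a sketch of a hypothetical `uses_systemd_cgroup` check on the file. Once Docker is running, `docker info --format '{{.CgroupDriver}}'` should print `systemd`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when a Docker daemon.json selects the systemd cgroup driver.
uses_systemd_cgroup() {
  local file=${1:-/etc/docker/daemon.json}
  grep -Eq '"native\.cgroupdriver=systemd"' "$file"
}

# Usage on a node:
#   uses_systemd_cgroup && echo "daemon.json uses systemd"
#   docker info --format '{{.CgroupDriver}}'   # check the running daemon
```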

- Start Docker

systemctl enable --now docker

systemctl enable --now containerd

- Check the version

docker version

- Shell completion

yum install -y bash-completion

# Log in again (or reboot) for completion to take effect

- Note

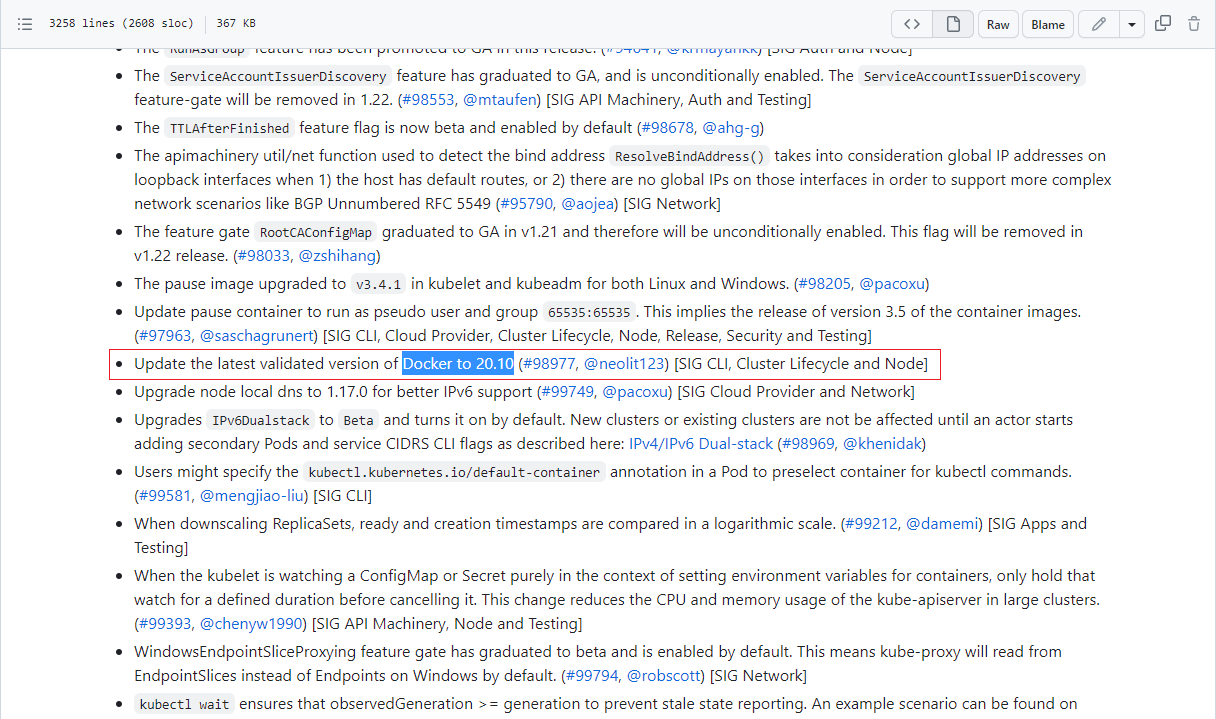

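To choose the Docker version, consult the Kubernetes changelog at https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/. If the changelog for your Kubernetes version lists no Docker version, check the previous version's changelog.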

Base component installation: kubelet, kubeadm, kubectl

- List the available versions

yum list kubeadm.x86_64 --showduplicates | sort -r

- Install the components

yum -y install kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9

- Check the versions

kubeadm version

kubectl version --client

kubelet --version
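kubeadm, kubectl and kubelet print their versions in different formats. Below is a sketch that uses two hypothetical helpers to confirm that all three report v1.20.9: `first_version` extracts the first `vX.Y.Z` from a command's output, and `all_equal` compares the results:

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the first "vX.Y.Z" found on stdin.
first_version() {
  grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n1
}

# Hypothetical helper: succeeds when every argument equals the first one.
all_equal() {
  local first=$1 v
  for v in "$@"; do
    [ "$v" = "$first" ] || return 1
  done
}

# Usage on a node:
#   all_equal "$(kubeadm version -o short | first_version)" \
#             "$(kubectl version --client | first_version)" \
#             "$(kubelet --version | first_version)" && echo "versions match"
```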

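- Start kubelet

systemctl daemon-reload

systemctl enable --now kubelet
# kubelet restarts in a loop until kubeadm init or kubeadm join configures it; this is expected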

- Shell completion

yum -y install bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

High-availability components

Install on the master nodes only.

High-availability component installation: haproxy

- Install haproxy

yum -y install haproxy

- Configure haproxy

cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg_`date +%F`

# Host names and IP addresses of the master nodes

HOST_NAME_1=k8s-master-01

HOST_IP_1=192.168.23.139

HOST_NAME_2=k8s-master-02

HOST_IP_2=192.168.23.140

HOST_NAME_3=k8s-master-03

HOST_IP_3=192.168.23.141

cat > /etc/haproxy/haproxy.cfg << EOF

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

# HAProxy health-check endpoint (http://<host>:33305/monitor)

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

# HAProxy statistics page (http://<host>:8006/stats)

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

# Frontend for the Kubernetes API server on port 16443

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

# Backend: kube-apiserver on port 6443 of each master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server $HOST_NAME_1 $HOST_IP_1:6443 check

server $HOST_NAME_2 $HOST_IP_2:6443 check

server $HOST_NAME_3 $HOST_IP_3:6443 check

EOF

- Start haproxy

systemctl enable --now haproxy
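When a master is added or replaced, the backend `server` lines need editing. Below is a sketch of a hypothetical `haproxy_servers` helper that prints them from `name=ip` pairs, in the same format as the `backend k8s-master` section above. Validating the file with `haproxy -c -f` before restarting catches syntax errors:

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints haproxy "server" lines (kube-apiserver on port 6443)
# from "name=ip" arguments.
haproxy_servers() {
  local pair
  for pair in "$@"; do
    printf '    server %s %s:6443 check\n' "${pair%%=*}" "${pair#*=}"
  done
}

# Usage:
#   haproxy_servers k8s-master-01=192.168.23.139 k8s-master-02=192.168.23.140
# After editing /etc/haproxy/haproxy.cfg, check it before restarting:
#   haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl restart haproxy
```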

High-availability component installation: keepalived

- Install keepalived

yum -y install keepalived

- Create the keepalived health-check script

mkdir -p /etc/keepalived/script

cat > /etc/keepalived/script/check_apiserver.sh << "EOF"

#!/bin/bash

ERR=0

for k in $(seq 1 5)

do

CHECK_CODE=$(pgrep kube-apiserver)

if [[ $CHECK_CODE = "" ]]; then

ERR=$(expr $ERR + 1)

sleep 5

continue

else

ERR=0

break

fi

done

if [[ $ERR != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/script/check_apiserver.sh

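- Configure keepalived (run on k8s-master-01 only)

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_`date +%F`

# Takes this host's DHCP-assigned ("dynamic") address; with a static IP, set HOST_IP manually
HOST_IP=$(ip addr | grep dynamic | awk -F' ' '{print $2}' | awk -F'/' '{print $1}')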

HOST_VIP=192.168.23.100

INTERFACE=ens32

TYPES=MASTER

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/script/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface $INTERFACE

mcast_src_ip $HOST_IP

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

$HOST_VIP

}

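# Uncomment track_script only after kube-apiserver is running: before that, check_apiserver.sh finds no kube-apiserver process and stops keepalived
# track_script {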

# chk_apiserver

# }

}

EOF

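- Configure keepalived (run on k8s-master-02 and k8s-master-03)

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_`date +%F`

# Takes this host's DHCP-assigned ("dynamic") address; with a static IP, set HOST_IP manually
HOST_IP=$(ip addr | grep dynamic | awk -F' ' '{print $2}' | awk -F'/' '{print $1}')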

HOST_VIP=192.168.23.100

INTERFACE=ens32

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/script/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface $INTERFACE

mcast_src_ip $HOST_IP

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

$HOST_VIP

}

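# Uncomment track_script only after kube-apiserver is running: before that, check_apiserver.sh finds no kube-apiserver process and stops keepalived
# track_script {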

# chk_apiserver

# }

}

EOF

- Start keepalived

systemctl enable --now keepalived
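To see which master currently holds the VIP, run the sketch below on each master. The hypothetical `has_vip` helper reads the one-address-per-line output of `ip -4 -o addr show`, where the fourth field is `ADDRESS/PREFIX`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when VIP appears in `ip -4 -o addr show` output on stdin.
has_vip() {
  local vip=$1
  awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}

# Usage on a master:
#   ip -4 -o addr show | has_vip 192.168.23.100 && echo "this node holds the VIP"
```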

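Cluster deployment

Initialization files

Run on the master nodes.

- Default configuration file

- Generate the kubeadm configuration file

kubeadm config print init-defaults --component-configs \
  KubeProxyConfiguration,KubeletConfiguration > kubeadm-config.yaml

# List the required images
kubeadm config images list --config kubeadm-config.yaml

- Write the image pull script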

cat > ./images_kube.sh << 'EOF'
#!/bin/bash
# Pre-pull the control-plane images from the Aliyun mirror.
# The tags must match the --kubernetes-version passed to kubeadm init (v1.20.9).
images=(
  kube-apiserver:v1.20.9
  kube-controller-manager:v1.20.9
  kube-scheduler:v1.20.9
  kube-proxy:v1.20.9
  coredns:1.7.0
  etcd:3.4.13-0
  pause:3.2
)
for imageName in "${images[@]}"; do
  docker pull registry.aliyuncs.com/google_containers/$imageName
done
EOF

- Pull the images

chmod +x ./images_kube.sh && ./images_kube.sh

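Initialize the cluster

Run on k8s-master-01 only.

Initializing k8s-master-01 generates the cluster certificates and configuration under /etc/kubernetes. The other master nodes and the worker nodes then join the cluster.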

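# Use the keepalived VIP and the haproxy port (16443) as the control-plane endpoint,
# so that kubectl, kubelets and joining nodes keep working when k8s-master-01 is down
kubeadm init \
  --apiserver-advertise-address=192.168.23.139 \
  --control-plane-endpoint=192.168.23.100:16443 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.20.9 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=83.12.0.0/16

If initialization fails, reset the node before initializing again:

# Reset a failed initialization
kubeadm reset

Node management

Add master nodes

- Create a token

Run on k8s-master-01 only.

# Print a join command that uses a new token
kubeadm token create --print-join-command

# Upload the control-plane certificates and print the certificate key
kubeadm init phase upload-certs --upload-certs

- Join the nodes

Run on k8s-master-02 and k8s-master-03.

Combine the printed join command and the certificate key into a control-plane join command, then run it on k8s-master-02 and k8s-master-03. The uploaded certificates and their key expire after two hours. An example follows; the token, hash and key differ in every cluster:

kubeadm join 192.168.23.100:16443 --token xpeers.u6ok4v3ju2oaq25t \
  --discovery-token-ca-cert-hash sha256:137e28cb3a7be99914bc8ec2ce83c269dc724768c5cb6e3e06700c4494e6afad \
  --control-plane --certificate-key fc56a938a45bf445b69458b1afb5ac9aaa66fabbd5c3414db6696a8fdb51ff2d

Add worker nodes

- Create a token

Run on k8s-master-01 only.

# Print a join command that uses a new token
kubeadm token create --print-join-command

- Join the nodes

Run on k8s-node-01 and k8s-node-02.

Run the printed join command on k8s-node-01 and k8s-node-02, for example:

kubeadm join 192.168.23.100:16443 --token lx60mi.isnids1u0qlmybv7 \
  --discovery-token-ca-cert-hash sha256:799108e2b9f6fe87aa1af2e4bf3ddc22b379e2ed35dd7b82ddbc3a433d1d976a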
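The control-plane join command for the master nodes is the worker join command plus `--control-plane --certificate-key <key>`. Below is a sketch of a hypothetical `control_plane_join` helper that assembles it. The usage comment assumes that the certificate key is the last line printed by `kubeadm init phase upload-certs --upload-certs`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: turns a worker join command ($1) into a control-plane
# join command by appending the certificate key ($2).
control_plane_join() {
  local worker_join
  # Drop any trailing whitespace from the printed join command
  worker_join=$(printf '%s' "$1" | sed 's/[[:space:]]*$//')
  printf '%s --control-plane --certificate-key %s\n' "$worker_join" "$2"
}

# Usage on k8s-master-01:
#   JOIN=$(kubeadm token create --print-join-command)
#   KEY=$(kubeadm init phase upload-certs --upload-certs | tail -n1)
#   control_plane_join "$JOIN" "$KEY"
```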

Configure the control-plane nodes

Run on all master nodes.

- Set up the admin kubeconfig

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Set the KUBECONFIG environment variable

cat >> /root/.bashrc << EOF

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

source /root/.bashrc

- List all nodes

kubectl get nodes

# NAME STATUS ROLES AGE VERSION

# k8s-master-01 NotReady control-plane,master 13m v1.20.9

# k8s-master-02 NotReady <none> 93s v1.20.9

# k8s-master-03 NotReady control-plane,master 6m1s v1.20.9

# k8s-node-01 NotReady <none> 6m52s v1.20.9

# k8s-node-02 NotReady <none> 6m40s v1.20.9

Every node is NotReady because no network (CNI) plugin is installed yet. Available plugins include flannel, Calico, Canal, kube-router and Weave Net; this guide uses Calico.

Remove a node

- Syntax

kubectl delete nodes <node-name>

- Example

kubectl delete nodes k8s-node-01
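`kubectl delete nodes` does not evict the node's Pods gracefully first. Below is a sketch of a hypothetical `remove_node` helper that drains the node and then deletes it. `--delete-emptydir-data` is the kubectl 1.20 name of the flag; older kubectl versions use `--delete-local-data`. With `DRY_RUN=1` the helper only prints the commands. Afterwards, run `kubeadm reset` on the removed node itself:

```shell
#!/usr/bin/env bash
# Hypothetical helper: drains a node, then deletes it. DRY_RUN=1 prints the commands instead.
remove_node() {
  local node=$1 run=""
  [ "${DRY_RUN:-0}" = "1" ] && run="echo"
  $run kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data &&
    $run kubectl delete node "$node"
}

# Usage on a master:
#   DRY_RUN=1 remove_node k8s-node-01   # preview
#   remove_node k8s-node-01
```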

Core components

Run on k8s-master-01 only.

Core component installation: Calico

Installation takes a while; please be patient.

- Download the Calico manifest

curl -O https://docs.projectcalico.org/v3.20/manifests/calico.yaml

- Default Calico manifest

- Install Calico

kubectl apply -f ./calico.yaml

- Check Calico

kubectl get -n kube-system pod | grep calico
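Calico can take a while to become ready. Below is a sketch of a hypothetical `all_pods_ready` helper that reads `kubectl get pod --no-headers` lines (NAME READY STATUS RESTARTS AGE). It succeeds only when there is at least one Pod and every Pod is `Running` with all of its containers ready:

```shell
#!/usr/bin/env bash
# Hypothetical helper: checks `kubectl get pod --no-headers` lines on stdin.
all_pods_ready() {
  awk '
    NF == 0 { next }
    {
      split($2, r, "/")                       # READY column, e.g. "1/1"
      if ($3 != "Running" || r[1] != r[2]) bad = 1
      n++
    }
    END { exit (n == 0 || bad) }
  '
}

# Usage on k8s-master-01:
#   kubectl get pod -n kube-system --no-headers | grep calico | all_pods_ready && echo "Calico is ready"
```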

Other components

Run on k8s-master-01 only (optional).

Other component installation: metrics-server

- Download the metrics-server manifest

mkdir -p /root/install_k8s
wget -O /root/install_k8s/metrics-server-v0.6.0.yaml \
  https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.0/components.yaml

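- Default metrics-server manifest

- Install metrics-server

If the default image cannot be pulled, change the image: line in the manifest to the mirror image registry.aliyuncs.com/google_containers/metrics-server:v0.6.0.

kubectl apply -f /root/install_k8s/metrics-server-v0.6.0.yaml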

- Check metrics-server

kubectl top nodes
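By default, each kubelet in a kubeadm cluster serves a self-signed certificate, so `kubectl top nodes` may fail with certificate errors from metrics-server. A common lab-only workaround is the metrics-server flag `--kubelet-insecure-tls`. The sketch below uses a hypothetical `add_insecure_tls` helper that inserts the flag after the `--metric-resolution` argument and reuses that line's indentation. It assumes that argument is present, as it is in the v0.6.0 release manifest. Re-apply the manifest afterwards:

```shell
#!/usr/bin/env bash
# Hypothetical helper: adds --kubelet-insecure-tls after the --metric-resolution
# argument of a metrics-server manifest (no-op if the flag is already there).
add_insecure_tls() {
  local manifest=$1 tmp
  grep -q -- '--kubelet-insecure-tls' "$manifest" && return 0
  tmp=$(mktemp)
  awk '{ print }
       /- --metric-resolution=/ {
         match($0, /^ */)                                  # leading indentation
         printf "%s- --kubelet-insecure-tls\n", substr($0, 1, RLENGTH)
       }' "$manifest" > "$tmp" && mv "$tmp" "$manifest"
}

# Usage on k8s-master-01:
#   add_insecure_tls /root/install_k8s/metrics-server-v0.6.0.yaml
#   kubectl apply -f /root/install_k8s/metrics-server-v0.6.0.yaml
```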

Other component installation: dashboard

- Download the dashboard manifest

wget -O dashboard-v2.4.0.yaml https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

- Default configuration file

- Edit the dashboard manifest

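# Option A: expose the dashboard through a NodePort (30443)
sed -i '39a\  type: NodePort' dashboard-v2.4.0.yaml
sed -i '43a\      nodePort: 30443' dashboard-v2.4.0.yaml

# Option B (instead of option A): expose the dashboard through a LoadBalancer
sed -i '39a\  type: LoadBalancer' dashboard-v2.4.0.yaml

# Only if you will create your own certificate: delete the default kubernetes-dashboard-certs Secret
# (these line numbers assume option A has been applied)
sed -i '48,58d' dashboard-v2.4.0.yaml

# Image pull policy: reuse a local image if it is already present
sed -i '/imagePullPolicy/s@Always@IfNotPresent@' dashboard-v2.4.0.yaml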

- Install the dashboard

kubectl apply -f dashboard-v2.4.0.yaml

- Find the dashboard port

kubectl get svc -A |grep kubernetes-dashboard
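The PORT(S) column shows the dashboard Service as `443:<nodePort>/TCP`, and the dashboard is then reachable at `https://<any-node-ip>:<nodePort>`. Below is a sketch of a hypothetical `nodeport_of` helper that extracts the NodePort from that value. Alternatively, `kubectl -n kubernetes-dashboard get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'` returns the NodePort directly:

```shell
#!/usr/bin/env bash
# Hypothetical helper: extracts the NodePort from a PORT(S) value like "443:30443/TCP".
nodeport_of() {
  case $1 in
    *:*/*) ;;          # "PORT:NODEPORT/PROTO" (NodePort or LoadBalancer Service)
    *) return 1 ;;     # "PORT/PROTO" (ClusterIP Service) has no NodePort
  esac
  local rest=${1#*:}   # "30443/TCP"
  echo "${rest%%/*}"   # "30443"
}

# Usage on k8s-master-01 (PORT(S) is column 6 of `kubectl get svc -A`):
#   nodeport_of "$(kubectl get svc -A | awk '$2 == "kubernetes-dashboard" {print $6}')"
```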

- Create a dashboard admin account

mkdir -p /root/install_k8s
cat > /root/install_k8s/dash.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

kubectl apply -f /root/install_k8s/dash.yaml

- Get the access token

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

Verify the cluster

Check all Pods

Check that every Pod in the cluster has started

kubectl get pod --all-namespaces

Check the main Services

- Services in the default namespace

kubectl get service

- The DNS Service (in kube-system)

kubectl get service -n kube-system

Check Pod network connectivity between nodes

- See which node each Pod runs on and its IP

kubectl get pod --all-namespaces -owide

- Ping any Pod IP from a node

ping -c 3 83.12.151.130

Check the status of all nodes

kubectl get nodes
