I. Download the dataset (the Cats vs Dogs dataset)

1. Download the raw dataset

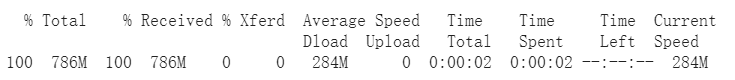

!curl -O https://download.microsoft.com/download/3/E/1/3E1C3F21-ECDB-4869-8368-6DEBA77B919F/kagglecatsanddogs_3367a.zip

2. Unzip

!unzip -q kagglecatsanddogs_3367a.zip

3. List the directory contents after extraction

!ls

!ls PetImages

The dataset has been downloaded.
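If you are not in a notebook where `!ls` works, a plain-Python sketch like the one below can check the extracted layout. It assumes the `PetImages/Cat` and `PetImages/Dog` folders used in the rest of this post; `count_images` is a hypothetical helper name, not part of any library.

```python
import os


def count_images(root="PetImages", classes=("Cat", "Dog")):
    """Hypothetical helper: return {class_name: number of files} under root."""
    counts = {}
    for name in classes:
        folder = os.path.join(root, name)
        # Count only regular files, skipping any subdirectories
        counts[name] = sum(
            1 for f in os.listdir(folder) if os.path.isfile(os.path.join(folder, f))
        )
    return counts


if __name__ == "__main__" and os.path.isdir("PetImages"):
    print(count_images())
```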

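II. Filter the images

Find the images that are not in JFIF format (their header does not contain the string "JFIF") and delete them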

import os

import tensorflow as tf

num_skipped = 0
for folder_name in ("Cat", "Dog"):
    folder_path = os.path.join("PetImages", folder_name)
    for fname in os.listdir(folder_path):
        fpath = os.path.join(folder_path, fname)
        # Look for the "JFIF" marker in the first bytes of the file header;
        # "with" closes the file even if reading fails
        with open(fpath, "rb") as fobj:
            is_jfif = tf.compat.as_bytes("JFIF") in fobj.peek(10)
        if not is_jfif:
            num_skipped += 1
            # Delete corrupted image
            os.remove(fpath)

print("Deleted %d images" % num_skipped)

Result:

Deleted 1590 images

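[Notes]

import os:

· os.path.join(path, *paths): joins one or more path components intelligently; the return value is the concatenation of all the components

· os.listdir(path): returns a list containing the names of the entries in the directory given by path (in arbitrary order)

· os.remove(path): removes the file at path (if path is a directory, an exception is raised; use os.rmdir() for directories)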
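A small demonstration of the three `os` functions on a throwaway directory created with `tempfile`, so nothing under `PetImages` is touched. The file names are made up for illustration.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as root:
    # os.path.join builds e.g. "<root>/Cat" with the platform's separator
    cat_dir = os.path.join(root, "Cat")
    os.mkdir(cat_dir)
    for name in ("1.jpg", "2.jpg"):
        open(os.path.join(cat_dir, name), "wb").close()

    # os.listdir returns entry names (not full paths) in arbitrary order
    before = sorted(os.listdir(cat_dir))

    # os.remove deletes a file...
    os.remove(os.path.join(cat_dir, "1.jpg"))
    after = os.listdir(cat_dir)

    # ...but raises OSError (or a subclass) when given a directory
    try:
        os.remove(cat_dir)
        removed_dir = True
    except OSError:
        removed_dir = False

print(before, after, removed_dir)  # ['1.jpg', '2.jpg'] ['2.jpg'] False
```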

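open(file, mode, buffering)

· file: a string with the name (path) of the file to open

· mode: the mode in which the file is opened:

'rb': open a file for reading only, in binary mode; typically used for non-text files such as images

't': text mode (the default)

'x': exclusive creation; creates a new file for writing and raises an error if the file already exists

'r+': open a file for both reading and writing

'w': open a file for writing only; if the file already exists it is truncated, so editing starts from the beginning (the existing content is erased); if it does not exist, a new file is created

· buffering: an optional integer that sets the buffering policy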
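The mode descriptions above can be checked with a short script on a temporary file (the file name `demo.txt` is arbitrary):

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as root:
    path = os.path.join(root, "demo.txt")

    # 'x' creates a new file and fails if it already exists
    with open(path, "x") as f:
        f.write("hello")
    try:
        open(path, "x")
        x_failed = False
    except FileExistsError:
        x_failed = True

    # 'r+' reads and writes without truncating; writing at position 0 overwrites in place
    with open(path, "r+") as f:
        f.write("J")
    with open(path) as f:  # the default mode 'r' is text mode ('rt')
        after_r_plus = f.read()

    # 'w' truncates the existing content before writing
    with open(path, "w") as f:
        f.write("new")
    with open(path, "rb") as f:  # 'rb' returns bytes instead of str
        after_w = f.read()

print(x_failed, after_r_plus, after_w)  # True Jello b'new'
```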

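peek(size): returns bytes from the buffered stream without advancing the read position, so the bytes are still there for a later read() (unlike read()). At most one read on the underlying raw stream is performed, and the number of bytes returned may be more or fewer than size

tf.compat.as_bytes(bytes_or_text, encoding): converts bytes or a unicode string to bytes, using the given encoding for text

· encoding='utf-8' (the default)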
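To see why `peek(10)` is enough for this check, the sketch below builds two fake files in memory with `io.BufferedReader` over `io.BytesIO`: one whose first bytes mimic a JPEG/JFIF header (`FF D8 FF E0`, a 2-byte segment length, then `JFIF\0`) and one that does not. The literal `b"JFIF"` stands in for `tf.compat.as_bytes("JFIF")`, which produces the same bytes. `looks_like_jfif` is a hypothetical helper name.

```python
import io

# First bytes of a typical JFIF file: SOI marker, APP0 marker, segment length, "JFIF\0"
JFIF_HEADER = b"\xff\xd8\xff\xe0\x00\x10JFIF\x00"


def looks_like_jfif(stream):
    """Hypothetical helper: True if the buffered stream's header contains b"JFIF"."""
    head = stream.peek(10)  # may return more (or fewer) than 10 bytes
    return b"JFIF" in head


good = io.BufferedReader(io.BytesIO(JFIF_HEADER + b"rest of image"))
bad = io.BufferedReader(io.BytesIO(b"not an image at all"))

print(looks_like_jfif(good), looks_like_jfif(bad))  # True False
# peek did not advance the position: read() still starts at the first byte
print(good.read(2))  # b'\xff\xd8'
# UTF-8 encoding of "JFIF", which is what tf.compat.as_bytes("JFIF") returns
print("JFIF".encode("utf-8") == b"JFIF")  # True
```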

III. Generate the datasets

Create two datasets, one for training and one for validation

image_size = (180, 180)
batch_size = 32

# Training set
train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    "PetImages",
    validation_split=0.2,
    subset="training",
    seed=1337,
    image_size=image_size,
    batch_size=batch_size,
)

# Validation set
val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    "PetImages",
    validation_split=0.2,
    subset="validation",
    seed=1337,
    image_size=image_size,
    batch_size=batch_size,
)

Result:

Found 23410 files belonging to 2 classes.

Using 18728 files for training.

Found 23410 files belonging to 2 classes.

Using 4682 files for validation.

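[Notes]

tf.keras.preprocessing.image_dataset_from_directory(): generates a dataset from the image files in a directory and returns a tf.data.Dataset object. Because the subdirectories of 'PetImages' are 'Cat' and 'Dog', it yields batches of images together with labels 0 and 1, assigned in alphanumeric order of the subdirectory names: 0 means 'Cat' and 1 means 'Dog'

· directory: the directory where the data is located

· validation_split: optional float between 0 and 1, the fraction of the data to reserve for validation

· subset: "training" or "validation" (only used when validation_split is set)

· seed: optional random seed for shuffling and transformations; when validation_split is used with shuffling, pass the same seed to both calls so the training and validation subsets do not overlap (shuffle: whether to shuffle the data, default True; if set to False, the data is sorted in alphanumeric order)

· image_size: the size to resize images to after they are read from disk, default (256, 256); because all images in a batch must have the same size, this resizing is always applied

· batch_size: the size of the batches of data, default 32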
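The numbers printed above can be reproduced with a little arithmetic. This sketch assumes the validation subset is the last `int(validation_split * total)` files after seeded shuffling, which matches the 18728/4682 counts in the output; it also shows where the `586/586` steps per epoch in the training log come from and how the alphanumeric label order works.

```python
import math

# Numbers taken from the output above
total_files = 23410
validation_split = 0.2
batch_size = 32

# Assumed split rule: int(split * total) files for validation, the rest for training
num_val = int(validation_split * total_files)
num_train = total_files - num_val

# Batches per epoch; the last batch is smaller when the count is not a multiple of 32
train_steps = math.ceil(num_train / batch_size)
val_steps = math.ceil(num_val / batch_size)

# Labels follow the alphanumeric order of the class subdirectory names
class_names = sorted(["Dog", "Cat"])
label_of = {name: index for index, name in enumerate(class_names)}

print(num_train, num_val, train_steps, val_steps, label_of)
# 18728 4682 586 147 {'Cat': 0, 'Dog': 1}
```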

IV. Visualize the data

Show the first nine images of the training set in a 3x3 grid, with the label above each image: 1 means dog, 0 means cat

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 10))
for images, labels in train_ds.take(1):
    for i in range(9):
        ax = plt.subplot(3, 3, i + 1)
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(int(labels[i]))
        plt.axis("off")
plt.show()  # needed when running as a script; notebooks render the figure inline

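[Notes]

numpy().astype(): .numpy() converts the tensor into a NumPy array, and .astype() casts the array to the given dtype. The images from image_dataset_from_directory are float32 values in [0, 255]; casting them to uint8 lets plt.imshow interpret them on the 0-255 scale (for float RGB data, imshow expects values in [0, 1])

· 'uint8': 8-bit unsigned integer

· 'int32': 32-bit integer

· 'float64': 64-bit floating point

plt.imshow(): draws the array as an image on the current axes; in a plain script you must then call plt.show() to display it (the image is drawn first, then shown)

train_ds.take(count): creates a dataset with at most count elements of train_ds; since each element is a batch, take(1) yields a single batch of image and label tensors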
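The `take(1)` and `subplot(3, 3, i + 1)` logic can be illustrated without TensorFlow. In this sketch a generator of list slices stands in for a batched `tf.data.Dataset`, and `itertools.islice` plays the role of `take`; `batches` and `take` are hypothetical names, not the tf.data API.

```python
from itertools import islice


def batches(items, batch_size):
    """Hypothetical stand-in for a batched dataset: yield consecutive slices."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def take(dataset, count):
    """Stand-in for Dataset.take(count): at most `count` elements (here, batches)."""
    return islice(dataset, count)


first_batch = list(take(batches(list(range(100)), 32), 1))[0]

# plt.subplot(3, 3, k) uses a 1-based index k, filled row by row: k -> (row, column)
grid = [((k - 1) // 3, (k - 1) % 3) for k in range(1, 10)]

print(len(first_batch), grid[0], grid[4], grid[8])  # 32 (0, 0) (1, 1) (2, 2)
```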

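V. Use image data augmentation

When you don't have a large image dataset, it is good practice to artificially introduce sample diversity by applying random yet realistic transformations to the training images. This helps expose the model to different aspects of the training data and slows down overfitting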

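from tensorflow import keras
from tensorflow.keras import layers

# In TensorFlow 2.6 and later these layers are also available as
# layers.RandomFlip and layers.RandomRotation
data_augmentation = keras.Sequential(
    [
        layers.experimental.preprocessing.RandomFlip("horizontal"),
        layers.experimental.preprocessing.RandomRotation(0.1),
    ]
)

Take a look at the augmented samples: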

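plt.figure(figsize=(10, 10))
for images, _ in train_ds.take(1):
    for i in range(9):
        # Each call re-augments the whole batch; only the first image is shown,
        # so the grid displays nine random variants of the same picture
        augmented_images = data_augmentation(images)
        ax = plt.subplot(3, 3, i + 1)
        plt.imshow(augmented_images[0].numpy().astype("uint8"))
        plt.axis("off")
plt.show()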

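[Notes]

keras.Sequential([...]): creates a Sequential model by passing a list of layer instances to the Sequential constructor

· RandomFlip(): randomly flips images; "horizontal" means left-right flips only

· RandomRotation(): randomly rotates images around their center. The factor is a fraction of 2π, so 0.1 means a random rotation in the range [-0.1 × 2π, 0.1 × 2π], i.e. up to ±36 degrees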
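A pure-Python illustration of the two transforms, not the Keras implementation (which works on tensors and decides randomly whether to flip): the flip mirrors each row of an image stored as a list of rows, and the angle sampler shows how a factor of 0.1 (a fraction of 2π) turns into a ±36 degree range.

```python
import math
import random


def horizontal_flip(image):
    """Mirror each row of an image stored as a list of rows (left-right flip)."""
    return [list(reversed(row)) for row in image]


def random_rotation_angle(factor, rng=random):
    """Sample an angle in degrees from [-factor * 360, factor * 360]."""
    limit = factor * 360.0
    return rng.uniform(-limit, limit)


image = [[1, 2, 3],
         [4, 5, 6]]
print(horizontal_flip(image))  # [[3, 2, 1], [6, 5, 4]]

rng = random.Random(0)
angles = [random_rotation_angle(0.1, rng) for _ in range(1000)]
print(max(abs(a) for a in angles) <= 36.0)  # True
# The same bound computed from radians: 0.1 * 2*pi rad is about 36 degrees
print(math.degrees(0.1 * 2 * math.pi))
```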

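VI. Standardize the data

RGB channel values are in the [0, 255] range. Use a Rescaling layer to standardize them to [0, 1]. There are two ways to apply the preprocessing

Option 1, better when training on a GPU: make it part of the model, so augmentation runs on the device, synchronously with the rest of the model, and benefits from GPU acceleration. The augmentation layers are inactive at inference time

# input_shape is the shape of one image, e.g. image_size + (3,)
inputs = keras.Input(shape=input_shape)
x = data_augmentation(inputs)
x = layers.experimental.preprocessing.Rescaling(1./255)(x)

Option 2, better when training on a CPU: apply it to the dataset with map, which yields a dataset of augmented batches. Augmentation then happens on the CPU, asynchronously, and is buffered before going into the model

augmented_train_ds = train_ds.map(
    lambda x, y: (data_augmentation(x, training=True), y))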
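`Rescaling(scale, offset=0.0)` computes `inputs * scale + offset` element-wise. The stdlib sketch below applies the same formula to a few pixel values; the second call, with an offset, maps [0, 255] to [-1, 1] and is shown only to illustrate the formula (this post uses `1./255` with no offset). `rescale` is a hypothetical function name.

```python
def rescale(values, scale=1.0 / 255, offset=0.0):
    """Same formula as the Rescaling layer: value * scale + offset, element-wise."""
    return [v * scale + offset for v in values]


pixels = [0, 51, 255]
print(rescale(pixels))  # approximately [0.0, 0.2, 1.0]
print(rescale(pixels, scale=1.0 / 127.5, offset=-1.0))  # approximately [-1.0, -0.6, 1.0]
```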

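VII. Build the model

Configure the dataset for performance: use buffered prefetching so that data can be yielded from disk without I/O becoming blocking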
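Conceptually, prefetching lets the pipeline prepare upcoming batches in the background while the model consumes the current one, keeping at most `buffer_size` ready elements. The stdlib sketch below imitates that idea with a producer thread and a bounded `queue.Queue`. It is an analogy under those assumptions, not how tf.data is implemented; `prefetch` and `slow_loader` are hypothetical names.

```python
import queue
import threading
import time

_DONE = object()  # sentinel marking the end of the stream


def prefetch(iterable, buffer_size):
    """Hypothetical helper: produce items on a background thread, buffering up to buffer_size."""
    buf = queue.Queue(maxsize=buffer_size)

    def producer():
        for item in iterable:
            buf.put(item)  # blocks while the buffer is full
        buf.put(_DONE)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _DONE:
            return
        yield item


def slow_loader(n, delay=0.01):
    """Stand-in for reading batches from disk."""
    for i in range(n):
        time.sleep(delay)
        yield i


start = time.perf_counter()
total = 0
for batch in prefetch(slow_loader(20), buffer_size=4):
    time.sleep(0.01)  # stand-in for a training step, overlapping with loading
    total += batch
print(total, round(time.perf_counter() - start, 2))  # 190, then the elapsed seconds
```

Because loading and "training" overlap, the loop takes roughly half as long as running the two steps back to back.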

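train_ds = train_ds.prefetch(buffer_size=32)
val_ds = val_ds.prefetch(buffer_size=32)

Build the model: a small version of the Xception network. The model starts with the data_augmentation preprocessor, followed by a Rescaling layer, and a Dropout layer is included before the final classification layer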

def make_model(input_shape, num_classes):
    inputs = keras.Input(shape=input_shape)
    # Image augmentation block
    x = data_augmentation(inputs)

    # Entry block
    x = layers.experimental.preprocessing.Rescaling(1.0 / 255)(x)
    x = layers.Conv2D(32, 3, strides=2, padding="same")(x)
    x = layers.BatchNormalization()(x)
    x = layers.Activation("relu")(x)

    x = layers.Conv2D(64, 3, padding="same")(x)
    x = layers.BatchNormalization()(x)
    x = layers.Activation("relu")(x)

    previous_block_activation = x  # Set aside residual

    for size in [128, 256, 512, 728]:
        x = layers.Activation("relu")(x)
        x = layers.SeparableConv2D(size, 3, padding="same")(x)
        x = layers.BatchNormalization()(x)

        x = layers.Activation("relu")(x)
        x = layers.SeparableConv2D(size, 3, padding="same")(x)
        x = layers.BatchNormalization()(x)

        x = layers.MaxPooling2D(3, strides=2, padding="same")(x)

        # Project residual
        residual = layers.Conv2D(size, 1, strides=2, padding="same")(
            previous_block_activation
        )
        x = layers.add([x, residual])  # Add back residual
        previous_block_activation = x  # Set aside next residual

    x = layers.SeparableConv2D(1024, 3, padding="same")(x)
    x = layers.BatchNormalization()(x)
    x = layers.Activation("relu")(x)

    x = layers.GlobalAveragePooling2D()(x)
    if num_classes == 2:
        activation = "sigmoid"
        units = 1
    else:
        activation = "softmax"
        units = num_classes

    x = layers.Dropout(0.5)(x)
    outputs = layers.Dense(units, activation=activation)(x)
    return keras.Model(inputs, outputs)


model = make_model(input_shape=image_size + (3,), num_classes=2)
keras.utils.plot_model(model, show_shapes=True)  # requires pydot and graphviz
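Two pieces of arithmetic help explain this architecture. With `padding="same"`, a layer with stride 2 outputs `ceil(size / 2)`, so the 180x180 input shrinks to 6x6 before `GlobalAveragePooling2D`; and the 1x1 residual projection uses the same stride, so both branches match for `layers.add`. The second part compares parameter counts, assuming the Keras defaults `depth_multiplier=1` and `use_bias=True`, to show why `SeparableConv2D` is much cheaper than `Conv2D`.

```python
import math


def same_padding_output(size, stride):
    """Output length of a 'same'-padded conv/pool layer: ceil(size / stride)."""
    return math.ceil(size / stride)


# Entry block: Conv2D(32, 3, strides=2) halves 180; Conv2D(64, 3) keeps the size
sizes = [same_padding_output(180, 2)]
# Each residual block ends with MaxPooling2D(3, strides=2, padding="same")
for _ in [128, 256, 512, 728]:
    sizes.append(same_padding_output(sizes[-1], 2))
print(sizes)  # [90, 45, 23, 12, 6]


def conv2d_params(k, c_in, c_out):
    """Weights + biases of a regular k x k Conv2D."""
    return k * k * c_in * c_out + c_out


def separable_conv2d_params(k, c_in, c_out):
    """One k x k depthwise kernel per input channel + 1x1 pointwise kernel + biases."""
    return k * k * c_in + c_in * c_out + c_out


# First SeparableConv2D of the first block: 64 -> 128 channels
print(conv2d_params(3, 64, 128), separable_conv2d_params(3, 64, 128))  # 73856 8896
```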

VIII. Train the model

After 50 epochs, the validation accuracy reaches about 96%

epochs = 50

callbacks = [
    # Saves the model after every epoch: save_at_1.h5, save_at_2.h5, ...
    keras.callbacks.ModelCheckpoint("save_at_{epoch}.h5"),
]
model.compile(
    optimizer=keras.optimizers.Adam(1e-3),
    loss="binary_crossentropy",
    metrics=["accuracy"],
)
model.fit(
    train_ds, epochs=epochs, callbacks=callbacks, validation_data=val_ds,
)

Training log:

Epoch 1/50

586/586 [==============================] - 156s 250ms/step - loss: 0.6621 - accuracy: 0.6285 - val_loss: 0.6194 - val_accuracy: 0.6754

Epoch 2/50

586/586 [==============================] - 151s 257ms/step - loss: 0.4475 - accuracy: 0.7884 - val_loss: 0.4350 - val_accuracy: 0.8084

Epoch 3/50

586/586 [==============================] - 153s 260ms/step - loss: 0.3634 - accuracy: 0.8390 - val_loss: 0.6299 - val_accuracy: 0.7249

Epoch 4/50

586/586 [==============================] - 153s 261ms/step - loss: 0.3167 - accuracy: 0.8681 - val_loss: 0.2636 - val_accuracy: 0.8887

Epoch 5/50

586/586 [==============================] - 152s 259ms/step - loss: 0.2637 - accuracy: 0.8929 - val_loss: 0.2141 - val_accuracy: 0.9144

Epoch 6/50

586/586 [==============================] - 152s 259ms/step - loss: 0.2259 - accuracy: 0.9047 - val_loss: 1.2972 - val_accuracy: 0.6583

Epoch 7/50

586/586 [==============================] - 152s 259ms/step - loss: 0.2053 - accuracy: 0.9143 - val_loss: 0.2884 - val_accuracy: 0.8727

Epoch 8/50

586/586 [==============================] - 152s 259ms/step - loss: 0.1875 - accuracy: 0.9219 - val_loss: 0.1648 - val_accuracy: 0.9376

Epoch 9/50

586/586 [==============================] - 152s 259ms/step - loss: 0.1756 - accuracy: 0.9290 - val_loss: 0.5611 - val_accuracy: 0.8082

Epoch 10/50

586/586 [==============================] - 153s 260ms/step - loss: 0.1681 - accuracy: 0.9304 - val_loss: 0.3434 - val_accuracy: 0.8740

Epoch 11/50

586/586 [==============================] - 152s 259ms/step - loss: 0.1628 - accuracy: 0.9348 - val_loss: 0.1753 - val_accuracy: 0.9293

Epoch 12/50

586/586 [==============================] - 153s 260ms/step - loss: 0.1489 - accuracy: 0.9390 - val_loss: 0.1815 - val_accuracy: 0.9312

Epoch 13/50

586/586 [==============================] - 152s 259ms/step - loss: 0.1466 - accuracy: 0.9401 - val_loss: 0.1415 - val_accuracy: 0.9451

Epoch 14/50

586/586 [==============================] - 152s 259ms/step - loss: 0.1331 - accuracy: 0.9454 - val_loss: 0.2082 - val_accuracy: 0.9186

Epoch 15/50

586/586 [==============================] - 152s 260ms/step - loss: 0.1351 - accuracy: 0.9478 - val_loss: 0.1752 - val_accuracy: 0.9282

Epoch 16/50

586/586 [==============================] - 152s 260ms/step - loss: 0.1188 - accuracy: 0.9494 - val_loss: 0.1920 - val_accuracy: 0.9327

Epoch 17/50

586/586 [==============================] - 153s 260ms/step - loss: 0.1188 - accuracy: 0.9521 - val_loss: 0.1332 - val_accuracy: 0.9490

Epoch 18/50

586/586 [==============================] - 153s 260ms/step - loss: 0.1178 - accuracy: 0.9538 - val_loss: 0.1245 - val_accuracy: 0.9537

Epoch 19/50

586/586 [==============================] - 153s 260ms/step - loss: 0.1143 - accuracy: 0.9525 - val_loss: 0.1151 - val_accuracy: 0.9530

Epoch 20/50

586/586 [==============================] - 152s 260ms/step - loss: 0.1023 - accuracy: 0.9600 - val_loss: 0.1976 - val_accuracy: 0.9158

Epoch 21/50

586/586 [==============================] - 153s 260ms/step - loss: 0.1018 - accuracy: 0.9605 - val_loss: 0.1635 - val_accuracy: 0.9344

Epoch 22/50

586/586 [==============================] - 152s 259ms/step - loss: 0.1042 - accuracy: 0.9573 - val_loss: 0.1374 - val_accuracy: 0.9530

Epoch 23/50

586/586 [==============================] - 153s 260ms/step - loss: 0.1063 - accuracy: 0.9577 - val_loss: 0.1292 - val_accuracy: 0.9547

Epoch 24/50

586/586 [==============================] - 152s 260ms/step - loss: 0.0972 - accuracy: 0.9601 - val_loss: 0.1288 - val_accuracy: 0.9483

Epoch 25/50

586/586 [==============================] - 153s 260ms/step - loss: 0.0904 - accuracy: 0.9618 - val_loss: 0.1178 - val_accuracy: 0.9513

Epoch 26/50

586/586 [==============================] - 153s 260ms/step - loss: 0.0837 - accuracy: 0.9669 - val_loss: 0.0999 - val_accuracy: 0.9609

Epoch 27/50

586/586 [==============================] - 153s 260ms/step - loss: 0.0865 - accuracy: 0.9663 - val_loss: 0.1189 - val_accuracy: 0.9543

Epoch 28/50

586/586 [==============================] - 153s 260ms/step - loss: 0.0845 - accuracy: 0.9660 - val_loss: 0.1050 - val_accuracy: 0.9605

Epoch 29/50

586/586 [==============================] - 153s 260ms/step - loss: 0.0838 - accuracy: 0.9669 - val_loss: 0.1280 - val_accuracy: 0.9477

Epoch 30/50

586/586 [==============================] - 153s 260ms/step - loss: 0.0833 - accuracy: 0.9670 - val_loss: 0.1093 - val_accuracy: 0.9588

Epoch 31/50

586/586 [==============================] - 153s 261ms/step - loss: 0.0738 - accuracy: 0.9720 - val_loss: 0.1918 - val_accuracy: 0.9289

Epoch 32/50

586/586 [==============================] - 153s 261ms/step - loss: 0.0782 - accuracy: 0.9700 - val_loss: 0.1166 - val_accuracy: 0.9560

Epoch 33/50

586/586 [==============================] - 153s 261ms/step - loss: 0.0731 - accuracy: 0.9725 - val_loss: 0.1085 - val_accuracy: 0.9584

Epoch 34/50

586/586 [==============================] - 153s 261ms/step - loss: 0.0728 - accuracy: 0.9707 - val_loss: 0.1067 - val_accuracy: 0.9569

Epoch 35/50

586/586 [==============================] - 153s 260ms/step - loss: 0.0711 - accuracy: 0.9726 - val_loss: 0.1040 - val_accuracy: 0.9652

Epoch 36/50

586/586 [==============================] - 153s 261ms/step - loss: 0.0721 - accuracy: 0.9711 - val_loss: 0.1093 - val_accuracy: 0.9639

Epoch 37/50

586/586 [==============================] - 153s 261ms/step - loss: 0.1013 - accuracy: 0.9589 - val_loss: 0.0962 - val_accuracy: 0.9645

Epoch 38/50

586/586 [==============================] - 153s 260ms/step - loss: 0.0661 - accuracy: 0.9741 - val_loss: 0.1409 - val_accuracy: 0.9492

Epoch 39/50

586/586 [==============================] - 156s 265ms/step - loss: 0.0630 - accuracy: 0.9758 - val_loss: 0.1161 - val_accuracy: 0.9547

Epoch 40/50

586/586 [==============================] - 157s 268ms/step - loss: 0.0639 - accuracy: 0.9768 - val_loss: 0.1058 - val_accuracy: 0.9641

Epoch 41/50

586/586 [==============================] - 157s 268ms/step - loss: 0.0601 - accuracy: 0.9765 - val_loss: 0.1122 - val_accuracy: 0.9586

Epoch 42/50

586/586 [==============================] - 157s 267ms/step - loss: 0.0595 - accuracy: 0.9759 - val_loss: 0.0983 - val_accuracy: 0.9639

Epoch 43/50

586/586 [==============================] - 157s 268ms/step - loss: 0.0592 - accuracy: 0.9763 - val_loss: 0.1067 - val_accuracy: 0.9645

Epoch 44/50

586/586 [==============================] - 157s 268ms/step - loss: 0.0629 - accuracy: 0.9755 - val_loss: 0.0866 - val_accuracy: 0.9718

Epoch 45/50

586/586 [==============================] - 157s 267ms/step - loss: 0.0576 - accuracy: 0.9771 - val_loss: 0.1143 - val_accuracy: 0.9558

Epoch 46/50

586/586 [==============================] - 157s 268ms/step - loss: 0.0675 - accuracy: 0.9744 - val_loss: 0.1133 - val_accuracy: 0.9605

Epoch 47/50

586/586 [==============================] - ETA: 0s - loss: 0.0544 - accuracy: 0.9801

Epoch 48/50

586/586 [==============================] - 158s 268ms/step - loss: 0.0573 - accuracy: 0.9795 - val_loss: 0.1155 - val_accuracy: 0.9607

Epoch 49/50

586/586 [==============================] - 158s 269ms/step - loss: 0.0511 - accuracy: 0.9811 - val_loss: 0.0853 - val_accuracy: 0.9654

Epoch 50/50

586/586 [==============================] - 157s 268ms/step - loss: 0.0510 - accuracy: 0.9816 - val_loss: 0.0942 - val_accuracy: 0.9635
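The `loss` in this log is binary cross-entropy: for a sigmoid output p and a label y (0 = cat, 1 = dog) it is -[y·log(p) + (1-y)·log(1-p)], averaged over the batch. A plain-Python version of the formula follows; the clipping epsilon is an assumption of this sketch to avoid log(0).

```python
import math


def binary_crossentropy(y_true, p_pred, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)]; p is clipped to [eps, 1-eps] to avoid log(0)."""
    losses = []
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)
        losses.append(-(y * math.log(p) + (1 - y) * math.log(1 - p)))
    return sum(losses) / len(losses)


# A confident correct prediction costs little; a confident wrong one costs a lot
print(round(binary_crossentropy([1], [0.9]), 4))  # 0.1054
print(round(binary_crossentropy([1], [0.1]), 4))  # 2.3026
# Predicting 0.5 for every image gives log(2), about 0.693
print(round(binary_crossentropy([0, 1], [0.5, 0.5]), 4))  # 0.6931
```

For comparison, the epoch-1 training loss of 0.6621 is only slightly below that 0.693 baseline, while the final 0.0510 corresponds to confident, mostly correct predictions.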

IX. Run inference on new data

At inference time, data augmentation and dropout are inactive

img = keras.preprocessing.image.load_img(
    "PetImages/Cat/6779.jpg", target_size=image_size
)
img_array = keras.preprocessing.image.img_to_array(img)
img_array = tf.expand_dims(img_array, 0)  # Create batch axis

predictions = model.predict(img_array)
# The single sigmoid output is the predicted probability of class 1 ("dog")
score = float(predictions[0][0])
print(
    "This image is %.2f percent cat and %.2f percent dog."
    % (100 * (1 - score), 100 * score)
)

Prediction:

This image is 32.56 percent cat and 67.44 percent dog.
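Dropout is inactive during prediction because of how it is formulated: during training each unit is zeroed with probability `rate` and the survivors are scaled by 1 / (1 - rate) ("inverted dropout", which is what the Keras `Dropout` layer does), so at inference the layer can pass its inputs through unchanged. The stdlib sketch below illustrates that formulation with a hypothetical `dropout` function, not the Keras layer, and then reproduces the percentage formula with a score of 0.6744 taken from the output above.

```python
import random


def dropout(values, rate, training, rng=random):
    """Inverted dropout: when training, zero each value with probability `rate`, rescale the rest."""
    if not training:
        return list(values)  # inference: identity
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


features = [0.5, 1.0, 2.0, 4.0]
print(dropout(features, rate=0.5, training=False))  # [0.5, 1.0, 2.0, 4.0]
# Training mode: some values become 0.0, the survivors are doubled
print(dropout(features, rate=0.5, training=True, rng=random.Random(42)))

# The sigmoid score is P(dog); the printed percentages are 100*(1-score) and 100*score
score = 0.6744
print("%.2f percent cat and %.2f percent dog" % (100 * (1 - score), 100 * score))
# 32.56 percent cat and 67.44 percent dog
```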

X. Complete code

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

"""
### Filter the images: delete images whose header does not contain the "JFIF" marker
"""

import os

num_skipped = 0
for folder_name in ("Cat", "Dog"):
    folder_path = os.path.join("PetImages", folder_name)
    for fname in os.listdir(folder_path):
        fpath = os.path.join(folder_path, fname)
        with open(fpath, "rb") as fobj:
            is_jfif = tf.compat.as_bytes("JFIF") in fobj.peek(10)
        if not is_jfif:
            num_skipped += 1
            # Delete corrupted image
            os.remove(fpath)

print("Deleted %d images" % num_skipped)

"""

### 形成两个数据集:一个训练集,一个验证集

"""

image_size = (180, 180)

batch_size = 32

train_ds = tf.keras.preprocessing.image_dataset_from_directory(

"PetImages",

validation_split=0.2,

subset="training",

seed=1337,

image_size=image_size,

batch_size=batch_size,

)

val_ds = tf.keras.preprocessing.image_dataset_from_directory(

"PetImages",

validation_split=0.2,

subset="validation",

seed=1337,

image_size=image_size,

batch_size=batch_size,

)

"""

### 可视化数据:显示训练数据集图片,前九个三行三列,显示标签--1代表 dog;0 代表猫

"""

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 10))

for images, labels in train_ds.take(1):

for i in range(9):

ax = plt.subplot(3, 3, i + 1)

plt.imshow(images[i].numpy().astype("uint8"))

plt.title(int(labels[i]))

plt.axis("off")

"""

### 使用图像数据增强:当没有大型图像数据集时,通过对训练图像应用随机但逼真的变化来

人为引入样本多样性,这有助于使模型暴露于训练数据的不同方面,同时减慢过度拟合的速度

"""

data_augmentation = keras.Sequential(

[

layers.experimental.preprocessing.RandomFlip("horizontal"),

layers.experimental.preprocessing.RandomRotation(0.1),

]

)

"""

看看 变换后的样本图片

"""

plt.figure(figsize=(10, 10))

for images, _ in train_ds.take(1):

for i in range(9):

augmented_images = data_augmentation(images)

ax = plt.subplot(3, 3, i + 1)

plt.imshow(augmented_images[0].numpy().astype("uint8"))

plt.axis("off")

"""

### 标准化数据:RGB通道值范围 [0,255],现使用“重新缩放”层将值标准化为[0,1],有两种方法

第一种 如果训练在GPU上更好

inputs = keras.Input(shape=input_shape)

x = data_augmentation(inputs)

x = layers.experimental.preprocessing.Rescaling(1./255)(x)

第二种 如果训练在CPU上更好

augmented_train_ds = train_ds.map(

lambda x, y: (data_augmentation(x, training=True), y))

"""

"""

### 配置数据集以提高性能:使用缓冲的预取,以便我们可以从磁盘产生数据而不会阻塞I/O

"""

"""

### 建立模型:构建Xception网络的小型版本

先使用 data_augmentation预处理器开始模型,然后再进行rescaling层。我们在最终分类层之前包括一个Dropout层

"""

def make_model(input_shape, num_classes):

inputs = keras.Input(shape=input_shape)

# Image augmentation block

x = data_augmentation(inputs)

# Entry block

x = layers.experimental.preprocessing.Rescaling(1.0 / 255)(x)

x = layers.Conv2D(32, 3, strides=2, padding="same")(x)

x = layers.BatchNormalization()(x)

x = layers.Activation("relu")(x)

x = layers.Conv2D(64, 3, padding="same")(x)

x = layers.BatchNormalization()(x)

x = layers.Activation("relu")(x)

previous_block_activation = x # Set aside residual

for size in [128, 256, 512, 728]:

x = layers.Activation("relu")(x)

x = layers.SeparableConv2D(size, 3, padding="same")(x)

x = layers.BatchNormalization()(x)

x = layers.Activation("relu")(x)

x = layers.SeparableConv2D(size, 3, padding="same")(x)

x = layers.BatchNormalization()(x)

x = layers.MaxPooling2D(3, strides=2, padding="same")(x)

# Project residual

residual = layers.Conv2D(size, 1, strides=2, padding="same")(

previous_block_activation

)

x = layers.add([x, residual]) # Add back residual

previous_block_activation = x # Set aside next residual

x = layers.SeparableConv2D(1024, 3, padding="same")(x)

x = layers.BatchNormalization()(x)

x = layers.Activation("relu")(x)

x = layers.GlobalAveragePooling2D()(x)

if num_classes == 2:

activation = "sigmoid"

units = 1

else:

activation = "softmax"

units = num_classes

x = layers.Dropout(0.5)(x)

outputs = layers.Dense(units, activation=activation)(x)

return keras.Model(inputs, outputs)

model = make_model(input_shape=image_size + (3,), num_classes=2)

keras.utils.plot_model(model, show_shapes=True)

"""

### 训练模型:在进行50个epochs后准确率可以达到96%

"""

epochs = 50

callbacks = [

keras.callbacks.ModelCheckpoint("save_at_{epoch}.h5"),

]

model.compile(

optimizer=keras.optimizers.Adam(1e-3),

loss="binary_crossentropy",

metrics=["accuracy"],

)

model.fit(

train_ds, epochs=epochs, callbacks=callbacks, validation_data=val_ds,

)

"""

### 对新数据进行推断:此时 数据增强和丢失是不活动的

"""

img = keras.preprocessing.image.load_img(

"PetImages/Cat/6779.jpg", target_size=image_size

)

img_array = keras.preprocessing.image.img_to_array(img)

img_array = tf.expand_dims(img_array, 0) # Create batch axis

predictions = model.predict(img_array)

score = predictions[0]

print(

"This image is %.2f percent cat and %.2f percent dog."

% (100 * (1 - score), 100 * score)

)

Original link: https://keras.io/examples/vision/image_classification_from_scratch/
