Our production cluster is managed with Ambari. On one machine, HDFS and Kafka suddenly failed to start. Part of the error log is shown below:

stderr:

2019-10-31 14:39:05,949 -

***** WARNING ***** WARNING ***** WARNING ***** WARNING ***** WARNING *****

***** WARNING ***** WARNING ***** WARNING ***** WARNING ***** WARNING *****

***** WARNING ***** WARNING ***** WARNING ***** WARNING ***** WARNING *****

Directory /data/hadoop/hdfs/data became unmounted from / . Current mount point: /data . Please ensure that mounts are healthy. If the mount change was intentional, you can update the contents of /var/lib/ambari-agent/data/datanode/dfs_data_dir_mount.hist.

***** WARNING ***** WARNING ***** WARNING ***** WARNING ***** WARNING *****

***** WARNING ***** WARNING ***** WARNING ***** WARNING ***** WARNING *****

***** WARNING ***** WARNING ***** WARNING ***** WARNING ***** WARNING *****

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/datanode.py", line 161, in <module>

DataNode().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 329, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/datanode.py", line 67, in start

datanode(action="start")

File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/hdfs_datanode.py", line 68, in datanode

create_log_dir=True

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/utils.py", line 275, in service

Execute(daemon_cmd, not_if=process_id_exists_command, environment=hadoop_env_exports)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 166, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 262, in action_run

tries=self.resource.tries, try_sleep=self.resource.try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 72, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 102, in checked_call

tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 150, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 303, in _call

raise ExecutionFailed(err_msg, code, out, err)

resource_management.core.exceptions.ExecutionFailed: Execution of 'ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start datanode'' returned 1. starting datanode, logging to /data/log/hadoop/hdfs/hadoop-hdfs-datanode-siger-slave01.shunfeng.com.out

…

I looked through various fixes suggested online, for example checking this file:

/var/lib/ambari-agent/data/datanode/dfs_data_dir_mount.hist

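This file stores the last known mount point of each HDFS data directory. The usual explanation is that the HDFS directory now appears to be mounted on a different path, so the DataNode refuses to start in order to prevent data loss. The suggested fix is to edit the file so that it points to the new mount point and then start the DataNode.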

However, when I checked, the three files were all identical, so nothing was wrong there.
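The comparison described above, recorded mount point versus current mount point, can be sketched in Python. This is only an illustration: it assumes the history file holds one `dir,mount_point` pair per line with `#` comment lines, and the helper names `parse_hist`, `current_mount_point`, and `mismatches` are hypothetical, not part of Ambari.

```python
import os

# Path taken from the warning message in the log above.
HIST_FILE = "/var/lib/ambari-agent/data/datanode/dfs_data_dir_mount.hist"


def parse_hist(text):
    """Parse 'dir,mount_point' lines (assumed format), skipping blanks and '#' comments."""
    recorded = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "," not in line:
            continue
        data_dir, mount_point = line.split(",", 1)
        recorded[data_dir.strip()] = mount_point.strip()
    return recorded


def current_mount_point(path):
    """Walk up from path until a directory that is a mount point is reached."""
    path = os.path.realpath(path)
    while not os.path.ismount(path):
        path = os.path.dirname(path)
    return path


def mismatches(recorded):
    """Return (dir, recorded_mount, current_mount) for each dir whose mount point changed."""
    result = []
    for data_dir, old_mount in recorded.items():
        now = current_mount_point(data_dir)
        if now != old_mount:
            result.append((data_dir, old_mount, now))
    return result


if __name__ == "__main__":
    if os.path.exists(HIST_FILE):
        with open(HIST_FILE) as f:
            for data_dir, old, now in mismatches(parse_hist(f.read())):
                print(f"{data_dir}: recorded {old}, now {now}")
```

If the script prints nothing, the recorded and current mount points agree, and the cause is probably somewhere else.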

Going back to the log, one line stood out:

/usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh: line 183: echo: write error: No space left on device

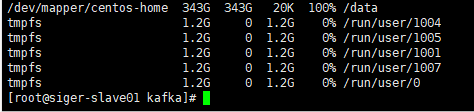

I immediately checked the disk usage.
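The post does not show the command that was used, so here is a minimal sketch of a disk usage check using Python's standard `shutil.disk_usage`. The list of paths is an assumption based on the directories mentioned in the log, and `usage_percent` is a hypothetical helper name.

```python
import shutil


def usage_percent(path):
    """Return the percentage of used space on the filesystem containing path."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


if __name__ == "__main__":
    # Paths chosen for illustration; adjust to the mounts on the affected host.
    for path in ("/", "/data", "/var/log"):
        try:
            print(f"{path}: {usage_percent(path):.1f}% used")
        except FileNotFoundError:
            print(f"{path}: not present on this machine")
```

The figure can differ slightly from what `df` reports, because `df` accounts for blocks reserved for root.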

It turned out that ZooKeeper's .out log was taking up a huge amount of space:

/var/log/zookeeper/zookeeper-zookeeper-server-xxx.out
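To locate an oversized file like this one, a short script can list the largest files under a directory. The sketch below is illustrative only; `largest_files` is a hypothetical helper, and `/var/log` is used as the starting directory because that is where the ZooKeeper log was found.

```python
import heapq
import os


def largest_files(root, n=10):
    """Return the n largest regular files under root as (size_bytes, path), biggest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            try:
                if os.path.islink(full):
                    continue  # skip symlinks so targets are not counted twice
                sizes.append((os.path.getsize(full), full))
            except OSError:
                continue  # file vanished or is unreadable; ignore it
    return heapq.nlargest(n, sizes)


if __name__ == "__main__":
    for size, path in largest_files("/var/log", n=10):
        print(f"{size / 1024 / 1024:10.1f} MiB  {path}")
```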
