Personal blog: pyenv.cc

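I. Sidecar CR

By default, the istio-proxy of every Pod in the mesh is configured with egress listeners for every service in the mesh. This can cause the following problems:

- The configuration held by each Pod's istio-proxy becomes excessively large, and many of the target services may not even be reachable from that Pod; in a microservice architecture, a given service usually needs to talk to only a few others;

- We therefore need a dedicated CR that configures which listeners are available on the istio-proxy of a specific group of Pods;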

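Sidecar CR

- By default, Istio configures every sidecar Envoy so that it can communicate with all workload instances in the same mesh and can accept traffic on all ports associated with the workload it proxies;

- In practice, a workload rarely needs to communicate with every other workload in the mesh, so the Sidecar CR provides a way to fine-tune the set of ports and protocols that the sidecar Envoy supports for workload-to-workload communication;

- In addition, when forwarding outbound traffic from the workload instance it proxies, the Sidecar CR can restrict the set of services that the sidecar Envoy is able to reach;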

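How a Sidecar CR takes effect

- A Sidecar CR uses the workloadSelector field to select one or more workload instances in the same namespace to which its configuration applies;

- A Sidecar resource without a workloadSelector field applies to all workload instances in the same namespace (it acts as the namespace default);

- When a namespace contains both a Sidecar with a workloadSelector field and one without it, a selected workload instance uses the Sidecar that carries the field in preference to the default;

- Each namespace should contain only one Sidecar resource without a workloadSelector field; otherwise the resulting configuration is hard to predict;

- Likewise, each workload should be selected by only one Sidecar resource with a workloadSelector field; otherwise its behavior is equally hard to predict;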
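As a sketch of the namespace-wide case described above, the following Sidecar omits `workloadSelector`, so it would act as the default for every workload in its namespace. The resource name `default`, the `default` namespace, and the `istio-system/*` host entry are assumptions chosen for illustration; they are not taken from this walkthrough.

```yaml
# Namespace-wide default Sidecar: no workloadSelector, so it applies to
# every workload in the namespace that is not selected by another Sidecar.
apiVersion: networking.istio.io/v1beta1
kind: Sidecar
metadata:
  name: default          # assumed name
  namespace: default     # assumed namespace
spec:
  egress:
  - hosts:
    - "./*"              # services in the Sidecar's own namespace
    - "istio-system/*"   # assumed: also allow services in istio-system
```

A workload that is also selected by a Sidecar carrying a `workloadSelector` would use that more specific resource instead of this default.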

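7.1 Sidecar configuration

- outboundTrafficPolicy - mode - (REGISTRY_ONLY|ALLOW_ANY)

- IstioIngressListener (this does not create an ingress listener of its own; it is used to generate filter chain match conditions)

- IstioEgressListener (port and bind define the egress listener, and hosts names the hosts whose traffic the listener handles)

- captureMode: the traffic capture (interception) mechanism, available on both ingress and egress listeners

  - DEFAULT: the default capture mode

  - IPTABLES: capture traffic with iptables

  - NONE: no capture; traffic is sent on directly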
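To make the ingress-side fields more concrete, here is a minimal sketch of a Sidecar that declares one ingress listener with an explicit `captureMode`. It assumes a workload labelled `app: demoapp` that serves HTTP on port 8080; the resource name `demoapp-inbound`, the port name, and the `defaultEndpoint` value are illustrative assumptions and have not been applied in this environment.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Sidecar
metadata:
  name: demoapp-inbound        # hypothetical name
  namespace: default
spec:
  workloadSelector:
    labels:
      app: demoapp             # assumed workload label
  ingress:
  - port:
      number: 8080
      protocol: HTTP
      name: http-web           # assumed port name
    # Where the sidecar forwards accepted inbound traffic (assumed endpoint)
    defaultEndpoint: 127.0.0.1:8080
    # DEFAULT | IPTABLES | NONE
    captureMode: DEFAULT
  egress:
  - hosts:
    - "./*"
```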

root@native:~# kubectl explain sidecar.spec
KIND:     Sidecar
VERSION:  networking.istio.io/v1beta1

RESOURCE: spec <Object>

DESCRIPTION:
     Configuration affecting network reachability of a sidecar. See more details
     at: https://istio.io/docs/reference/config/networking/sidecar.html

FIELDS:
   # Configures the egress (outbound) listeners
   egress   <[]Object>

   # Configures the ingress (inbound) listeners
   ingress   <[]Object>

   # When outbound traffic matches none of the egress listeners, outboundTrafficPolicy defines how the request is handled (ALLOW_ANY | REGISTRY_ONLY)
   outboundTrafficPolicy   <Object>
     Configuration for the outbound traffic policy.

   # Whether workloadSelector is present determines whether this policy applies to every Pod in the namespace or only to the selected Pods
   workloadSelector   <Object>

7.2 Sidecar configuration examples

7.2.1 Environment preparation

1. Deploy demoapp v10

# cat 01-demoapp-v10/deploy-demoapp.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: demoappv10
    version: v1.0
  name: demoappv10
spec:
  progressDeadlineSeconds: 600
  replicas: 3
  selector:
    matchLabels:
      app: demoapp
      version: v1.0
  template:
    metadata:
      labels:
        app: demoapp
        version: v1.0
    spec:
      containers:
      - image: registry.cn-wulanchabu.aliyuncs.com/daizhe/demoapp:v1.0
        imagePullPolicy: IfNotPresent
        name: demoapp
        env:
        - name: "PORT"
          value: "8080"
        ports:
        - containerPort: 8080
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  name: demoappv10
spec:
  ports:
  - name: http
    port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: demoapp
    version: v1.0
  type: ClusterIP
---

2. Deploy demoapp v11

# cat 02-demoapp-v11/deploy-demoapp-v11.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: demoappv11
    version: v1.1
  name: demoappv11
spec:
  progressDeadlineSeconds: 600
  replicas: 2
  selector:
    matchLabels:
      app: demoapp
      version: v1.1
  template:
    metadata:
      labels:
        app: demoapp
        version: v1.1
    spec:
      containers:
      - image: registry.cn-wulanchabu.aliyuncs.com/daizhe/demoapp:v1.1
        imagePullPolicy: IfNotPresent
        name: demoapp
        env:
        - name: "PORT"
          value: "8080"
        ports:
        - containerPort: 8080
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  name: demoappv11
spec:
  ports:
  - name: http-8080
    port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: demoapp
    version: v1.1
  type: ClusterIP
---

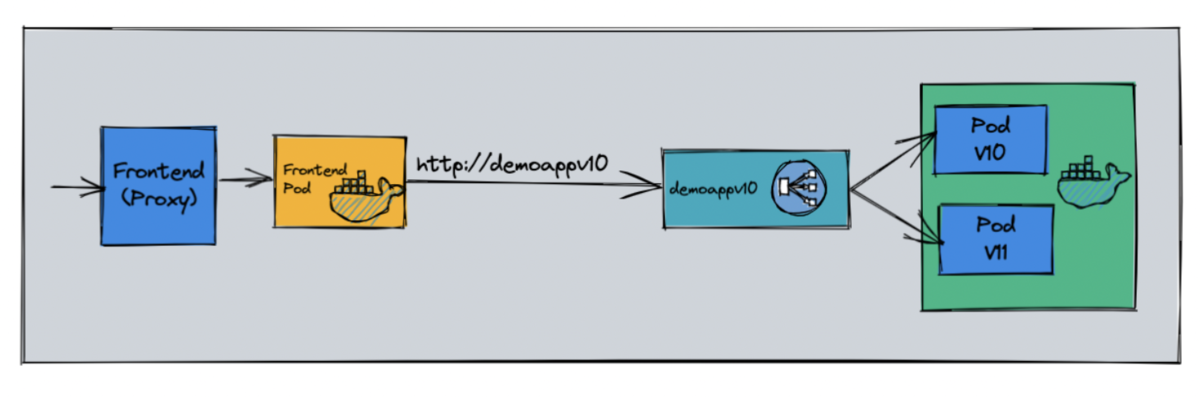

3. Deploy proxy

# cat 02-demoapp-v11/deploy-proxy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: proxy
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  selector:
    matchLabels:
      app: proxy
  template:
    metadata:
      labels:
        app: proxy
    spec:
      containers:
      - env:
        - name: PROXYURL
          value: http://demoapp:8080
        image: registry.cn-wulanchabu.aliyuncs.com/daizhe/proxy:v0.1.1
        imagePullPolicy: IfNotPresent
        name: proxy
        ports:
        - containerPort: 8080
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  name: proxy
spec:
  ports:
  - name: http-80
    port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: proxy
---

4. Define a Service that selects both demoapp v10 and v11 (the separate Services for v10 and v11 are optional)

# cat 02-demoapp-v11/service-demoapp.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: demoapp
spec:
  ports:
  - name: http
    port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: demoapp
  type: ClusterIP
---

5. Create the demoapp subsets

# cat 03-demoapp-subset/virutalservice-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10

# cat 03-demoapp-subset/destinationrule-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: demoapp
spec:
  host: demoapp
  subsets:
  - name: v10
    labels:
      version: v1.0
  - name: v11
    labels:
      version: v1.1

6. Expose the proxy service through a gateway

# cat 04-proxy-gateway/virtualservice-proxy.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy
spec:
  hosts:
  - "fe.toptops.top"               # matches the host declared in gateways/proxy-gateway
  gateways:
  - istio-system/proxy-gateway     # these rules apply only on the ingress gateway
  #- mesh
  http:
  - name: default
    route:
    - destination:
        host: proxy

# cat 04-proxy-gateway/gateway-proxy.yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: proxy-gateway
  namespace: istio-system          # must be the namespace where the ingress gateway Pod runs
spec:
  selector:
    app: istio-ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "fe.toptops.top"

7. Create the demoapp routing policy

# cat 06-weight-based-routing/virtualservice-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: weight-based-routing
    route:
    - destination:
        host: demoapp
        subset: v10
      weight: 90
    - destination:
        host: demoapp
        subset: v11
      weight: 10

# kubectl get gateway -n istio-system
NAME              AGE
grafana-gateway   8d
kiali-gateway     8d
proxy-gateway     29s

# kubectl get vs
NAME      GATEWAYS                         HOSTS                AGE
demoapp                                    ["demoapp"]          110s
proxy     ["istio-system/proxy-gateway"]   ["fe.toptops.top"]   42s

# kubectl get dr
NAME      HOST      AGE
demoapp   demoapp   80s

# kubectl delete svc demoappv10 demoappv11
service "demoappv10" deleted
service "demoappv11" deleted

# Each of the following Services produces a corresponding Listener, VirtualHost, Route, and Destination in the mesh

# kubectl get svc
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
demoapp      ClusterIP   10.100.75.191    <none>        8080/TCP   87m
kubernetes   ClusterIP   10.100.0.1       <none>        443/TCP    8d
proxy        ClusterIP   10.100.154.127   <none>        80/TCP     7d12h

8. Access test (start a client inside the mesh and test from there)

# kubectl run client --image=registry.cn-wulanchabu.aliyuncs.com/daizhe/admin-box -it --rm --restart=Never --command -- /bin/sh
If you don't see a command prompt, try pressing enter.

root@native:~# kubectl get pods
NAME                          READY   STATUS    RESTARTS   AGE
client                        2/2     Running   0          93s
demoappv10-6ff964cbff-84kqr   2/2     Running   0          7d12h
demoappv10-6ff964cbff-ngpr9   2/2     Running   0          7d12h
demoappv10-6ff964cbff-t6mxd   2/2     Running   0          7d12h
demoappv11-7984f579f5-8m7ng   2/2     Running   0          92m
demoappv11-7984f579f5-mx9kf   2/2     Running   0          92m
proxy-6b567ff76f-kf852        2/2     Running   0          92m

# Note: an application running in the mesh is expected to carry two explicit labels, app and version, and every Pod must belong to a Service. The client Pod meets neither condition, so it does not follow the mesh conventions; it is used here only as a test client.

root@client # curl localhost:15000/listeners

6a8a0b6e-c652-4edd-b5b1-3d210fb0a8ae::0.0.0.0:15090

316502d0-d131-4ea6-87f3-e7df91014c00::0.0.0.0:15021

10.100.146.158_15443::10.100.146.158:15443

10.100.0.1_443::10.100.0.1:443

10.100.0.2_53::10.100.0.2:53

10.100.146.158_443::10.100.146.158:443

10.100.146.158_31400::10.100.146.158:31400

10.100.136.102_443::10.100.136.102:443

10.100.136.102_15012::10.100.136.102:15012

10.100.167.188_443::10.100.167.188:443

10.100.166.70_3000::10.100.166.70:3000

0.0.0.0_15010::0.0.0.0:15010

0.0.0.0_15014::0.0.0.0:15014

0.0.0.0_20001::0.0.0.0:20001

10.100.0.2_9153::10.100.0.2:9153

0.0.0.0_9411::0.0.0.0:9411

0.0.0.0_9090::0.0.0.0:9090

0.0.0.0_16685::0.0.0.0:16685

0.0.0.0_8080::0.0.0.0:8080

0.0.0.0_80::0.0.0.0:80

10.100.22.254_14268::10.100.22.254:14268

10.100.22.254_14250::10.100.22.254:14250

10.100.146.158_15021::10.100.146.158:15021

virtualOutbound::0.0.0.0:15001

virtualInbound::0.0.0.0:15006

root@native:~# istioctl proxy-config listeners client

root@native:~# kubectl exec -it client -c istio-proxy -- pilot-agent request GET /listeners

7.2.2 Sidecar configuration example: defining the outbound services that can be reached

7.2.2.1 outboundTrafficPolicy: REGISTRY_ONLY (drop)

1. Create the Sidecar

# kubectl get pods --show-labels
NAME     READY   STATUS    RESTARTS   AGE   LABELS
client   2/2     Running   0          21m   run=client,security.istio.io/tlsMode=istio,service.istio.io/canonical-name=client,service.istio.io/canonical-revision=latest

# cat sidecar-demo.yaml
apiVersion: networking.istio.io/v1beta1
kind: Sidecar
metadata:
  name: client
  namespace: default
spec:
  # Select the workload instances this Sidecar applies to
  workloadSelector:
    # Pods carrying the label run=client
    labels:
      run: client
  # If no listener can accept the outbound traffic, it is dropped
  outboundTrafficPolicy:
    mode: REGISTRY_ONLY
  # Define the egress listeners
  egress:
  # Services the selected instances may reach: client may only reach demoapp over HTTP on port 8080
  - port:
      number: 8080
      protocol: HTTP
      name: demoapp
    # Any service name in the current namespace may be used
    hosts:
    - "./*"

# kubectl apply -f sidecar-demo.yaml

sidecar.networking.istio.io/client created

# kubectl get sidecar

NAME AGE

client 6s
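The `hosts` entries use the `namespace/dnsName` form, so the listener can be narrowed further than `./*`. As a minimal sketch, assuming the demoapp Service lives in the `default` namespace and the cluster domain is `cluster.local` (both assumptions about this environment), the port 8080 listener could be limited to that single service:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Sidecar
metadata:
  name: client
  namespace: default
spec:
  workloadSelector:
    labels:
      run: client
  outboundTrafficPolicy:
    mode: REGISTRY_ONLY
  egress:
  - port:
      number: 8080
      protocol: HTTP
      name: demoapp
    hosts:
    # "." means the Sidecar's own namespace; the FQDN is an assumption
    - "./demoapp.default.svc.cluster.local"
```

With only the demoapp Service on port 8080 in this namespace the effect should match `./*`; the narrower form matters once other services share the same port.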

2. Verify the listeners available on client; the list has shrunk dramatically

root@client # curl localhost:15000/listeners

6a8a0b6e-c652-4edd-b5b1-3d210fb0a8ae::0.0.0.0:15090

316502d0-d131-4ea6-87f3-e7df91014c00::0.0.0.0:15021

0.0.0.0_8080::0.0.0.0:8080

virtualOutbound::0.0.0.0:15001

virtualInbound::0.0.0.0:15006

3. Access test: at this point only port 8080 of the demoapp Service is reachable

root@client # curl demoapp:8080

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-ngpr9, ServerIP: 10.220.158.84!

root@client # curl proxy:80

curl: (56) Recv failure: Connection reset by peer
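The request to proxy fails because the only egress listener is on port 8080, while the proxy Service listens on port 80. If client should reach proxy while staying in REGISTRY_ONLY mode, one option is to declare a second egress listener. The following is a sketch under that assumption; the port name `proxy` is illustrative, and this variant was not applied in the walkthrough.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Sidecar
metadata:
  name: client
  namespace: default
spec:
  workloadSelector:
    labels:
      run: client
  outboundTrafficPolicy:
    mode: REGISTRY_ONLY
  egress:
  # Existing listener: demoapp over HTTP on port 8080
  - port:
      number: 8080
      protocol: HTTP
      name: demoapp
    hosts:
    - "./*"
  # Assumed addition: a listener for services on port 80, such as proxy
  - port:
      number: 80
      protocol: HTTP
      name: proxy
    hosts:
    - "./*"
```

After applying such a resource, the listener list on client would be expected to contain an entry for port 80 in addition to the one for port 8080.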

7.2.2.2 outboundTrafficPolicy: ALLOW_ANY (passthrough)

1. Create the Sidecar

# kubectl get pods --show-labels
NAME     READY   STATUS    RESTARTS   AGE   LABELS
client   2/2     Running   0          21m   run=client,security.istio.io/tlsMode=istio,service.istio.io/canonical-name=client,service.istio.io/canonical-revision=latest

# cat sidecar-demo.yaml
apiVersion: networking.istio.io/v1beta1
kind: Sidecar
metadata:
  name: client
  namespace: default
spec:
  # Select the workload instances this Sidecar applies to
  workloadSelector:
    # Pods carrying the label run=client
    labels:
      run: client
  # If no listener can accept the outbound traffic, it is passed through
  outboundTrafficPolicy:
    # mode: REGISTRY_ONLY
    mode: ALLOW_ANY
  # Define the egress listeners
  egress:
  # Services the selected instances may reach: client may only reach demoapp over HTTP on port 8080
  - port:
      number: 8080
      protocol: HTTP
      name: demoapp
    # Any service name in the current namespace may be used
    hosts:
    - "./*"

# kubectl apply -f sidecar-demo.yaml

sidecar.networking.istio.io/client created

# kubectl get sidecar

NAME AGE

client 6s

2. Verify the listeners available on client; the list has shrunk dramatically

root@client # curl localhost:15000/listeners

6a8a0b6e-c652-4edd-b5b1-3d210fb0a8ae::0.0.0.0:15090

316502d0-d131-4ea6-87f3-e7df91014c00::0.0.0.0:15021

0.0.0.0_8080::0.0.0.0:8080

virtualOutbound::0.0.0.0:15001

virtualInbound::0.0.0.0:15006

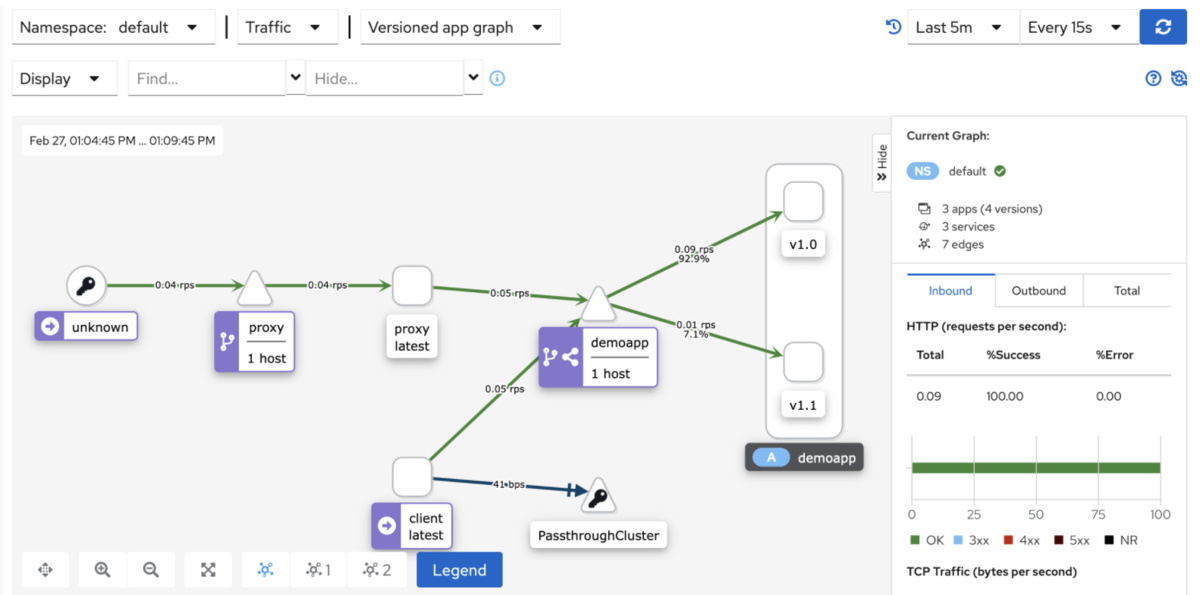

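3. Access test: although the only egress listener defined for client is the one for demoapp, outboundTrafficPolicy is set to ALLOW_ANY, so traffic with no matching listener is passed through and the request to proxy also succeeds;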

root@client # curl demoapp:8080

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-84kqr, ServerIP: 10.220.158.81!

root@client # curl proxy:80

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-84kqr, ServerIP: 10.220.158.81!

- Took 63 milliseconds.

root@client # while true; do curl proxy:80; curl demoapp:8080; sleep 0.$RANDOM; done

...

...

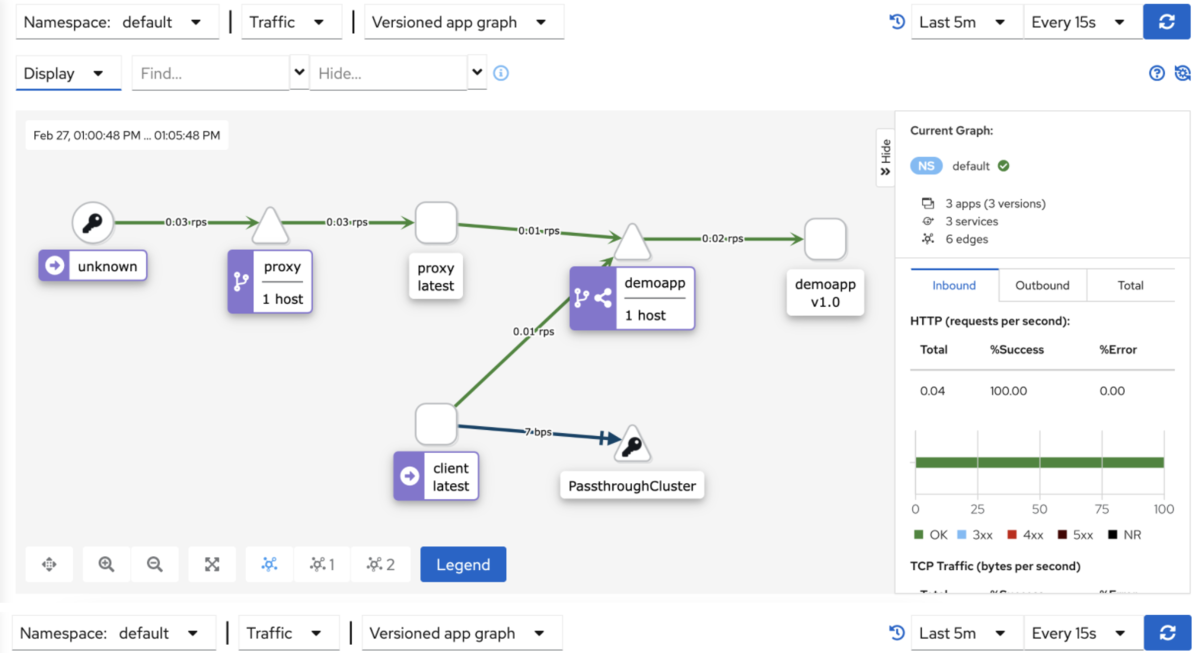

- The Kiali graph is drawn in real time from the observed traffic (access to proxy goes through passthrough)
