P22 Sequential and a small hands-on exercise

- `Sequential` bundles the layers of a network into a single module, which makes the network easier to use:

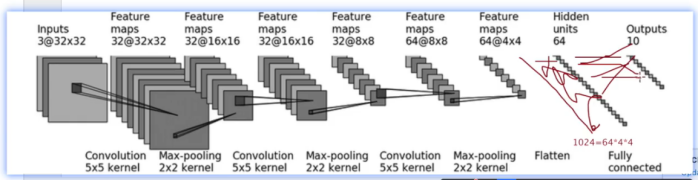

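- Write a classification network for the CIFAR10 dataset. It contains a layer with 1024 features (the flattened 64 × 4 × 4 output), which earlier episodes did not mention: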

- This episode works through one calculation, the padding of the convolution layers:

- With `Sequential` the code is clean and tidy:

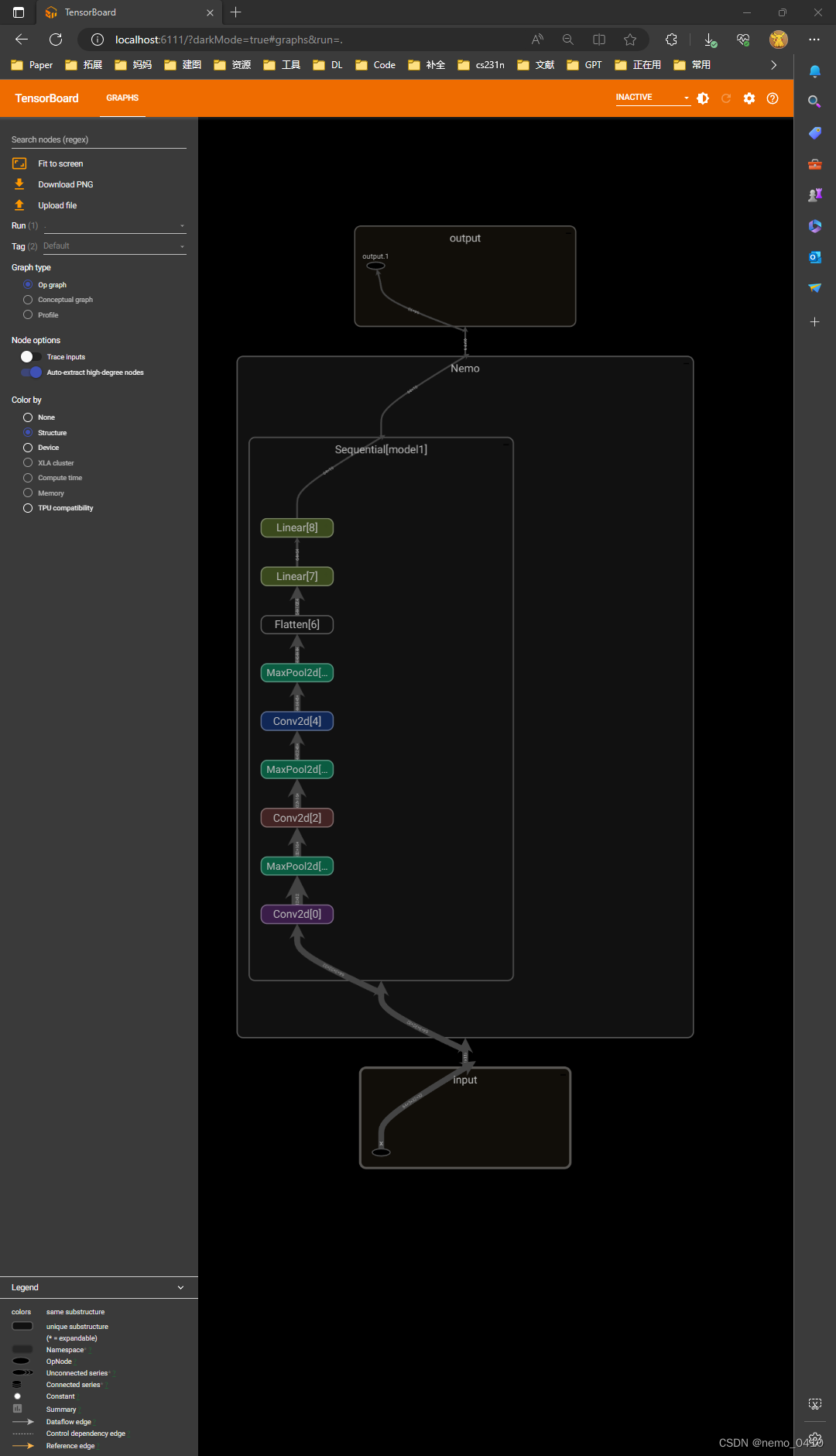

- Visualization:
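The padding calculation mentioned in the list above can be sketched with the output-size formula given in the PyTorch `Conv2d` documentation. The helper name `conv2d_out_size` is hypothetical and used only for illustration; the values (32 × 32 input, 5 × 5 kernel, stride 1) come from the network in these notes.

```python
def conv2d_out_size(size_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width of a Conv2d layer (hypothetical helper, one spatial dimension)."""
    return (size_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# Try several paddings for a 32 x 32 input and a 5 x 5 kernel with stride 1.
# The padding that keeps the output at 32 is the one the network needs.
for padding in range(4):
    print("padding =", padding, "-> output size =", conv2d_out_size(32, 5, padding=padding))

same_size_padding = next(p for p in range(4) if conv2d_out_size(32, 5, padding=p) == 32)
print("padding that preserves 32 x 32:", same_size_padding)
```

With stride 1 and dilation 1 the formula reduces to `size_in + 2 * padding - kernel_size + 1`, so keeping a 32 × 32 feature map with a 5 × 5 kernel requires `padding=2`, which matches the value used in the code in these notes.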

Runnable code

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    """
    author: 24nemo
    date: 2021-07-07
    """

    '''
    import torch
    from torch import nn
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
    from torch.utils.tensorboard import SummaryWriter


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    tudui = Tudui()
    print(tudui)

    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print(output.shape)

    writer = SummaryWriter("../logs_seq")
    writer.add_graph(tudui, input)
    writer.close()
    '''

    import torch
    from torch import nn
    from torch.nn import Conv2d, MaxPool2d, Linear
    from torch.nn.modules.flatten import Flatten

    # from torch.utils.tensorboard import SummaryWriter


    class Tudui(nn.Module):
        def __init__(self):
            super(Tudui, self).__init__()
            # in_channels=3, out_channels=32, 5 x 5 kernel; the padding of 2 is calculated
            self.conv1 = Conv2d(3, 32, 5, padding=2)
            # only kernel_size is passed to max pooling; stride defaults to kernel_size
            self.maxpool1 = MaxPool2d(2)
            self.conv2 = Conv2d(32, 32, 5, padding=2)
            self.maxpool2 = MaxPool2d(2)
            self.conv3 = Conv2d(32, 64, 5, padding=2)
            self.maxpool3 = MaxPool2d(2)
            self.flatten = Flatten()  # flatten everything except the batch dimension
            self.linear1 = Linear(64 * 4 * 4, 64)
            self.linear2 = Linear(64, 10)

        def forward(self, m):
            m = self.conv1(m)
            m = self.maxpool1(m)
            m = self.conv2(m)
            m = self.maxpool2(m)
            m = self.conv3(m)
            m = self.maxpool3(m)
            m = self.flatten(m)
            m = self.linear1(m)
            m = self.linear2(m)
            return m


    tudui = Tudui()
    print("tudui:", tudui)

    # A dummy batch shaped like CIFAR10 input: 64 images, 3 channels, 32 x 32 pixels
    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print("output.shape:", output.shape)

    '''
    writer = SummaryWriter("logs_seq")
    writer.add_graph(tudui, input)
    writer.close()

    Once again, I could not get this visualization to work.
    '''

Addendum (2023-07-18)

-

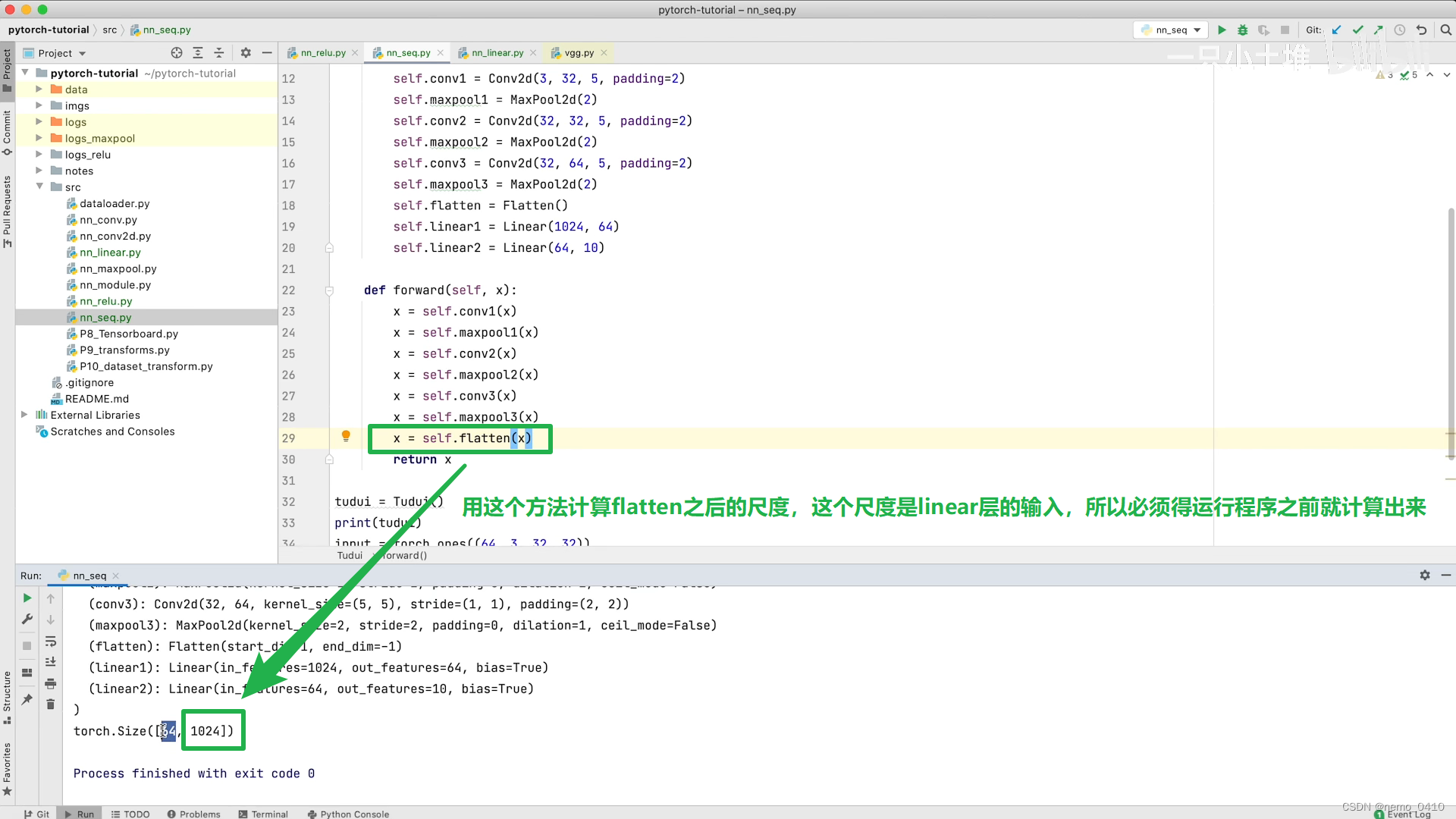

flatten计算年之后的结果,用这个方法获得:

-

如果要使用

Sequential来简化效果,就要在里面直接加上Flatten,不能到forward再去加了。 -

由于网络复杂,多出容易出错,测试网络写的正确与否,可以用一个tensor的方式,打印forward前后的shape,判断是否正确。

    nemo = Nemo()
    input = torch.ones((64, 3, 32, 32))
    output = nemo(input)

    print(nemo)
    print(input.shape)   # shape before forward
    print(output.shape)  # shape after forward
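One possible way to obtain the size after `Flatten`, as the addendum describes, is to run a dummy tensor through only the convolution, pooling, and flatten layers and print the shape after each one. This is a minimal sketch; the names `features`, `dummy`, and `flat_features` are hypothetical, while the layer settings are the ones used in these notes.

```python
import torch
from torch.nn import Conv2d, MaxPool2d, Flatten, Sequential

# Only the layers up to and including Flatten; the Linear layers are left out
# because their input size is what we want to find.
features = Sequential(
    Conv2d(3, 32, 5, padding=2),
    MaxPool2d(2),
    Conv2d(32, 32, 5, padding=2),
    MaxPool2d(2),
    Conv2d(32, 64, 5, padding=2),
    MaxPool2d(2),
    Flatten(),
)

# Dummy batch shaped like CIFAR10 input: 64 images, 3 channels, 32 x 32 pixels.
dummy = torch.ones((64, 3, 32, 32))

x = dummy
for layer in features:
    x = layer(x)
    print(type(layer).__name__, tuple(x.shape))

# The second dimension after Flatten is the in_features value for the first Linear layer.
flat_features = x.shape[1]
print("in_features for the first Linear layer:", flat_features)
```

The last shape printed is `(64, 1024)`, which agrees with `Linear(64 * 4 * 4, 64)` and `Linear(1024, 64)` in the code in these notes.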

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Conv2d, MaxPool2d, Linear, Sequential, Flatten
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    dataset = torchvision.datasets.CIFAR10(root='data', train=False,
                                           transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=64)

    # class Nemo(nn.Module):
    #     def __init__(self):
    #         super().__init__()
    #         self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2)
    #         self.maxPooling1 = MaxPool2d(2)
    #         self.conv2 = Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2)
    #         self.maxPooling2 = MaxPool2d(2)
    #         self.conv3 = Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2)
    #         self.maxPooling3 = MaxPool2d(3)
    #
    #         self.linear1 = Linear(1024, 64)
    #         self.linear2 = Linear(64, 10)
    #
    #     def forward(self, x):
    #         x = self.conv1(x)
    #         x = self.maxPooling1(x)
    #         x = self.conv2(x)
    #         x = self.maxPooling2(x)
    #         x = self.conv3(x)
    #         x = self.maxPooling3(x)
    #         x = torch.flatten(x)
    #         x = self.linear1(x)
    #         x = self.linear2(x)
    #         return x

    class Nemo(nn.Module):
        def __init__(self):
            super().__init__()
            self.model1 = Sequential(
                Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
                MaxPool2d(2),
                Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
                MaxPool2d(2),
                Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x

    # nemo = Nemo()
    # writer = SummaryWriter('xiaoshizhan')
    # step = 0
    #
    # for data in dataloader:
    #     inputs, targets = data
    #     print(inputs.shape)
    #     outputs = nemo(inputs)
    #     print(outputs.shape)
    #
    #     writer.add_images("inputs", inputs, step)
    #     writer.add_images("outputs", outputs, step)
    #
    # writer.close()

    writer = SummaryWriter("xiaoshizhan")

    nemo = Nemo()
    input = torch.ones((64, 3, 32, 32))
    output = nemo(input)

    print(nemo)
    print(input)
    print(output)
    print(output.shape)

    # This time the visualization worked!
    writer.add_graph(nemo, input)
    writer.close()
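The commented-out version of `Nemo` above calls `torch.flatten(x)` in `forward`, while the `Sequential` version uses the `Flatten` module. The two have different defaults, which the sketch below illustrates on a dummy tensor. The tensor shape `(64, 64, 4, 4)` is taken from the 64 × 4 × 4 feature map in these notes; the variable names are hypothetical.

```python
import torch
from torch import nn

# Dummy activation: batch of 64, 64 channels, 4 x 4 spatial size.
x = torch.ones((64, 64, 4, 4))

# torch.flatten defaults to start_dim=0, so the batch dimension is flattened too.
flat_all = torch.flatten(x)

# Starting at dimension 1 keeps one row per sample.
flat_per_sample = torch.flatten(x, start_dim=1)

# nn.Flatten defaults to start_dim=1, so it keeps the batch dimension.
flat_module = nn.Flatten()(x)

print(flat_all.shape)         # torch.Size([65536])
print(flat_per_sample.shape)  # torch.Size([64, 1024])
print(flat_module.shape)      # torch.Size([64, 1024])
```

Only the per-sample forms produce the `(64, 1024)` input that `Linear(1024, 64)` expects, which is one reason placing `Flatten()` inside `Sequential` is the simpler choice here.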
