1. Process creation

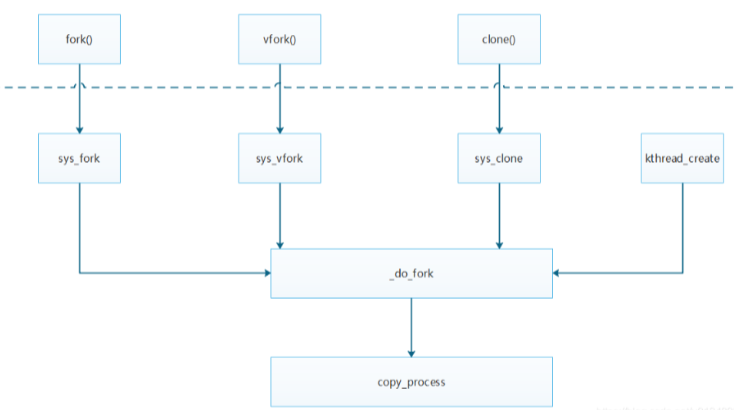

The clone(), fork(), and vfork() system calls: in Linux, lightweight processes are created by a function named clone(). The _do_fork() function handles the clone(), fork(), and vfork() system calls.
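To make the relationship between the three system calls concrete, here is a minimal user-space sketch of the clone_flags value each one hands to _do_fork(). It assumes the kernel generation discussed in this article, where fork() passes only SIGCHLD and vfork() passes CLONE_VFORK | CLONE_VM | SIGCHLD. The EX_ names and the helper functions are made up for illustration and are not kernel APIs.

```c
#include <signal.h>

/* Values as defined in the kernel's uapi sched.h; the EX_ prefix is
 * hypothetical and only avoids clashing with <sched.h>. */
#define EX_CSIGNAL     0x000000ffUL /* low byte: signal sent to the parent on exit */
#define EX_CLONE_VM    0x00000100UL
#define EX_CLONE_VFORK 0x00004000UL

/* Flags that fork() is assumed to pass: only the exit signal. */
unsigned long ex_fork_flags(void)
{
    return SIGCHLD;
}

/* Flags that vfork() is assumed to pass: shared address space,
 * parent blocked until the child execs or exits. */
unsigned long ex_vfork_flags(void)
{
    return EX_CLONE_VFORK | EX_CLONE_VM | SIGCHLD;
}

/* Extract the exit signal from a clone_flags value. */
int ex_exit_signal(unsigned long clone_flags)
{
    return (int)(clone_flags & EX_CSIGNAL);
}
```

clone() differs from the other two only in that the caller chooses the flags, which is why one kernel function can serve all three.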

1.1 The _do_fork() function

_do_fork() takes six parameters. The first, clone_flags, is a bit mask: its low byte holds the signal sent to the parent when the child exits, and the remaining bits are flags with the following meanings:

#define CLONE_VM 0x00000100 /* set if VM shared between processes (parent and child share the address space) */

#define CLONE_FS 0x00000200 /* set if fs info shared between processes */

#define CLONE_FILES 0x00000400 /* set if open files shared between processes */

#define CLONE_SIGHAND 0x00000800 /* set if signal handlers and blocked signals shared */

#define CLONE_PTRACE 0x00002000 /* set if we want to let tracing continue on the child too */

#define CLONE_VFORK 0x00004000 /* set if the parent wants the child to wake it up on mm_release: the kernel's completion mechanism is used, and wait_for_completion puts the parent to sleep until the child calls execve() or exit() and releases the address space */

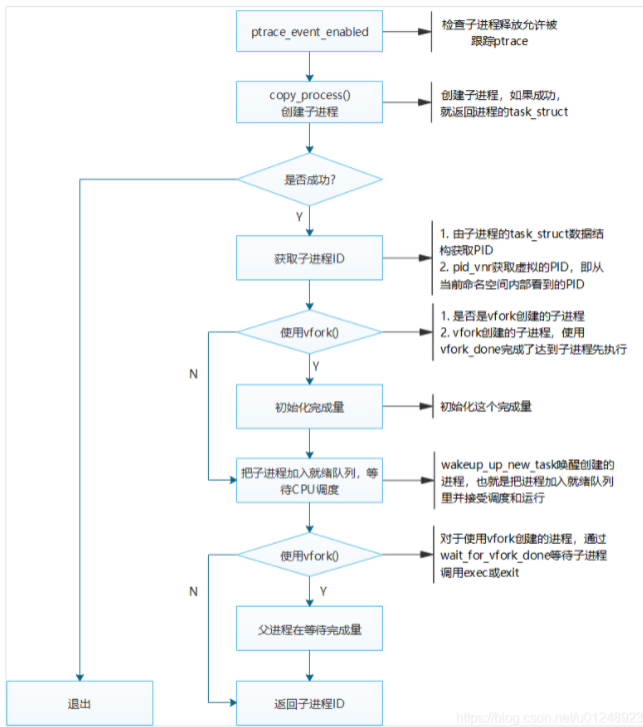

#define CLONE_IO 0x80000000 /* Clone io context */

_do_fork() mainly calls copy_process() to create the child's task_struct and to copy the necessary contents from the parent into it, which completes the creation of the child. Its source is shown below:

long _do_fork(unsigned long clone_flags,

unsigned long stack_start,

unsigned long stack_size,

int __user *parent_tidptr,

int __user *child_tidptr,

unsigned long tls)

{

struct task_struct *p;

int trace = 0;

long nr;

/*

* Determine whether and which event to report to ptracer. When

* called from kernel_thread or CLONE_UNTRACED is explicitly

* requested, no event is reported; otherwise, report if the event

* for the type of forking is enabled.

*/

// Step 1: decide whether a ptrace event should be reported for the child

if (!(clone_flags & CLONE_UNTRACED)) {

if (clone_flags & CLONE_VFORK)

trace = PTRACE_EVENT_VFORK;

else if ((clone_flags & CSIGNAL) != SIGCHLD)

trace = PTRACE_EVENT_CLONE;

else

trace = PTRACE_EVENT_FORK;

if (likely(!ptrace_event_enabled(current, trace)))

trace = 0;

}

// Step 2: copy the process descriptor; copy_process() returns the address of the new descriptor

p = copy_process(clone_flags, stack_start, stack_size,

child_tidptr, NULL, trace, tls);

/*

* Do this prior waking up the new thread - the thread pointer

* might get invalid after that point, if the thread exits quickly.

*/

// Step 3: look up the child's PID and, for vfork(), initialize the completion

if (!IS_ERR(p)) {

struct completion vfork;

struct pid *pid;

trace_sched_process_fork(current, p);

// 1. Get the struct pid from the child's task_struct

pid = get_task_pid(p, PIDTYPE_PID);

// 2. pid_vnr() returns the virtual PID, i.e. the PID as seen from inside the current PID namespace

nr = pid_vnr(pid);

if (clone_flags & CLONE_PARENT_SETTID)

put_user(nr, parent_tidptr);

// 3. init_completion() initializes the completion

if (clone_flags & CLONE_VFORK) {

p->vfork_done = &vfork;

init_completion(&vfork);

get_task_struct(p);

}

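// Step 4: wake up the new process, i.e. put it on the run queue so that it can be scheduled and run

wake_up_new_task(p);

/* forking complete and child started to run, tell ptracer */

// Step 5: report the ptrace event and, for vfork(), wait for the child

if (unlikely(trace))

ptrace_event_pid(trace, pid);

// For vfork(), wait_for_vfork_done() waits until the child calls exec() or exit()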

if (clone_flags & CLONE_VFORK) {

if (!wait_for_vfork_done(p, &vfork))

ptrace_event_pid(PTRACE_EVENT_VFORK_DONE, pid);

}

put_pid(pid);

} else {

nr = PTR_ERR(p);

}

return nr; // Step 6: return the child's PID

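}

When the parent returns to user space, its return value is the child's PID; when the child returns to user space, its return value is 0. Once _do_fork() has run there are two processes, and each of them continues as if returning from the fork call. A program can therefore tell parent and child apart by the return value of fork(): the parent gets the PID of the newly created child, and the child gets 0.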
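The two return values are easy to observe from user space. The following is a minimal sketch using only POSIX calls; the helper name ex_child_exit_status is hypothetical. The child branch (return value 0) exits immediately with a chosen code, and the parent branch (return value is the child's PID) waits for it and reports that code.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Fork a child that exits with `code`; return the exit status the
 * parent observes, or -1 on error. */
int ex_child_exit_status(int code)
{
    int status;
    pid_t pid = fork();

    if (pid < 0)
        return -1;          /* fork failed, no child was created */

    if (pid == 0)
        _exit(code);        /* child: fork() returned 0 */

    /* parent: fork() returned the child's PID */
    if (waitpid(pid, &status, 0) != pid)
        return -1;

    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

Calling ex_child_exit_status(7) in the parent yields 7, because only the process that saw a return value of 0 took the _exit() branch.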

1.2 The copy_process() function
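copy_process() opens by rejecting flag combinations that make no sense, before anything is allocated. As a reading aid for the kernel listing, the flag-only checks can be mirrored in user space as below. This is a sketch, not the kernel's code: the EX_ constants reuse the values from the kernel's uapi sched.h under hypothetical names, and the checks that depend on the state of the calling task (CLONE_PARENT for an unkillable init, and the PID namespace comparison) are left out.

```c
#include <errno.h>

/* Values from the kernel's uapi sched.h; EX_ names are hypothetical. */
#define EX_CLONE_VM      0x00000100UL
#define EX_CLONE_FS      0x00000200UL
#define EX_CLONE_SIGHAND 0x00000800UL
#define EX_CLONE_THREAD  0x00010000UL
#define EX_CLONE_NEWNS   0x00020000UL
#define EX_CLONE_NEWUSER 0x10000000UL
#define EX_CLONE_NEWPID  0x20000000UL

/* Return 0 if the combination passes the flag-only checks, -EINVAL otherwise. */
int ex_check_clone_flags(unsigned long flags)
{
    /* A new mount namespace cannot share filesystem info. */
    if ((flags & (EX_CLONE_NEWNS | EX_CLONE_FS)) == (EX_CLONE_NEWNS | EX_CLONE_FS))
        return -EINVAL;

    /* Neither can a new user namespace. */
    if ((flags & (EX_CLONE_NEWUSER | EX_CLONE_FS)) == (EX_CLONE_NEWUSER | EX_CLONE_FS))
        return -EINVAL;

    /* Threads in one group must share signal handlers. */
    if ((flags & EX_CLONE_THREAD) && !(flags & EX_CLONE_SIGHAND))
        return -EINVAL;

    /* Shared signal handlers require a shared address space. */
    if ((flags & EX_CLONE_SIGHAND) && !(flags & EX_CLONE_VM))
        return -EINVAL;

    /* A thread cannot live in a different user or PID namespace. */
    if ((flags & EX_CLONE_THREAD) && (flags & (EX_CLONE_NEWUSER | EX_CLONE_NEWPID)))
        return -EINVAL;

    return 0;
}
```

The chain THREAD requires SIGHAND requires VM explains why a thread-creating library has to pass all three flags together.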

/*

* This creates a new process as a copy of the old one,

* but does not actually start it yet.

*

* It copies the registers, and all the appropriate

* parts of the process environment (as per the clone

* flags). The actual kick-off is left to the caller.

*/

static struct task_struct *copy_process(unsigned long clone_flags,

unsigned long stack_start,

unsigned long stack_size,

int __user *child_tidptr,

struct pid *pid,

int trace,

unsigned long tls)

{

int retval;

struct task_struct *p;

void *cgrp_ss_priv[CGROUP_CANFORK_COUNT] = {};

// Step 1: check the flags

// 1. CLONE_NEWNS gives the child its own mount namespace, so it cannot be combined with CLONE_FS (shared filesystem information)

if ((clone_flags & (CLONE_NEWNS|CLONE_FS)) == (CLONE_NEWNS|CLONE_FS))

return ERR_PTR(-EINVAL);

// 2. CLONE_NEWUSER makes the child create a new user namespace; user namespaces manage the mapping of user IDs and group IDs and provide isolation

if ((clone_flags & (CLONE_NEWUSER|CLONE_FS)) == (CLONE_NEWUSER|CLONE_FS))

return ERR_PTR(-EINVAL);

/*

* Thread groups must share signals as well, and detached threads

* can only be started up within the thread group.

*/

// 3. CLONE_THREAD puts parent and child in the same thread group. POSIX requires all threads of a process to share one PID, but Linux assigns a PID to every thread and every process

if ((clone_flags & CLONE_THREAD) && !(clone_flags & CLONE_SIGHAND))

return ERR_PTR(-EINVAL);

/*

* Shared signal handlers imply shared VM. By way of the above,

* thread groups also imply shared VM. Blocking this case allows

* for various simplifications in other code.

*/

// 4. CLONE_SIGHAND means parent and child share the same signal handler table; CLONE_VM means they share the address space

if ((clone_flags & CLONE_SIGHAND) && !(clone_flags & CLONE_VM))

return ERR_PTR(-EINVAL);

/*

* Siblings of global init remain as zombies on exit since they are

* not reaped by their parent (swapper). To solve this and to avoid

* multi-rooted process trees, prevent global and container-inits

* from creating siblings.

*/

// 5. CLONE_PARENT makes the new process a sibling of the caller rather than its child: both have the same parent

if ((clone_flags & CLONE_PARENT) &&

current->signal->flags & SIGNAL_UNKILLABLE)

return ERR_PTR(-EINVAL);

/*

* If the new process will be in a different pid or user namespace

* do not allow it to share a thread group with the forking task.

*/

// 6. CLONE_NEWPID creates a new PID namespace

if (clone_flags & CLONE_THREAD) {

if ((clone_flags & (CLONE_NEWUSER | CLONE_NEWPID)) ||

(task_active_pid_ns(current) !=

current->nsproxy->pid_ns_for_children))

return ERR_PTR(-EINVAL);

}

retval = security_task_create(clone_flags);

if (retval)

goto fork_out;

retval = -ENOMEM;

// Step 2: allocate a process descriptor for the child

p = dup_task_struct(current);

if (!p)

goto fork_out;

// Step 3: copy from the parent. The processes member of the user structure counts that user's processes; it is checked here against the RLIMIT_NPROC resource limit

ftrace_graph_init_task(p);

rt_mutex_init_task(p);

#ifdef CONFIG_PROVE_LOCKING

DEBUG_LOCKS_WARN_ON(!p->hardirqs_enabled);

DEBUG_LOCKS_WARN_ON(!p->softirqs_enabled);

#endif

retval = -EAGAIN;

// 1. Check whether the user's process count exceeds the RLIMIT_NPROC limit

if (atomic_read(&p->real_cred->user->processes) >=

task_rlimit(p, RLIMIT_NPROC)) {

if (p->real_cred->user != INIT_USER &&

!capable(CAP_SYS_RESOURCE) && !capable(CAP_SYS_ADMIN))

goto bad_fork_free;

}

current->flags &= ~PF_NPROC_EXCEEDED;

// 2. Copy the parent's credentials

retval = copy_creds(p, clone_flags);

if (retval < 0)

goto bad_fork_free;

/*

* If multiple threads are within copy_process(), then this check

* triggers too late. This doesn't hurt, the check is only there

* to stop root fork bombs.

*/

retval = -EAGAIN;

// Check whether the number of threads exceeds max_threads, which depends on the amount of memory

if (nr_threads >= max_threads)

goto bad_fork_cleanup_count;

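// Step 4: initialize the task_struct

// Initialize the list_head structures and spinlocks in the child's descriptor, and give initial values to several fields related to pending signals, timers, and time accounting.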

delayacct_tsk_init(p); /* Must remain after dup_task_struct() */

p->flags &= ~(PF_SUPERPRIV | PF_WQ_WORKER);

p->flags |= PF_FORKNOEXEC;

INIT_LIST_HEAD(&p->children);

INIT_LIST_HEAD(&p->sibling);

rcu_copy_process(p);

p->vfork_done = NULL;

spin_lock_init(&p->alloc_lock);

init_sigpending(&p->pending);

p->utime = p->stime = p->gtime = 0;

p->utimescaled = p->stimescaled = 0;

prev_cputime_init(&p->prev_cputime);

#ifdef CONFIG_VIRT_CPU_ACCOUNTING_GEN

seqlock_init(&p->vtime_seqlock);

p->vtime_snap = 0;

p->vtime_snap_whence = VTIME_SLEEPING;

#endif

#if defined(SPLIT_RSS_COUNTING)

memset(&p->rss_stat, 0, sizeof(p->rss_stat));

#endif

p->default_timer_slack_ns = current->timer_slack_ns;

task_io_accounting_init(&p->ioac);

acct_clear_integrals(p);

posix_cpu_timers_init(p);

p->start_time = ktime_get_ns();

p->real_start_time = ktime_get_boot_ns();

p->io_context = NULL;

p->audit_context = NULL;

if (clone_flags & CLONE_THREAD)

threadgroup_change_begin(current);

cgroup_fork(p);

#ifdef CONFIG_NUMA

p->mempolicy = mpol_dup(p->mempolicy);

if (IS_ERR(p->mempolicy)) {

retval = PTR_ERR(p->mempolicy);

p->mempolicy = NULL;

goto bad_fork_cleanup_threadgroup_lock;

}

#endif

#ifdef CONFIG_CPUSETS

p->cpuset_mem_spread_rotor = NUMA_NO_NODE;

p->cpuset_slab_spread_rotor = NUMA_NO_NODE;

seqcount_init(&p->mems_allowed_seq);

#endif

#ifdef CONFIG_TRACE_IRQFLAGS

p->irq_events = 0;

p->hardirqs_enabled = 0;

p->hardirq_enable_ip = 0;

p->hardirq_enable_event = 0;

p->hardirq_disable_ip = _THIS_IP_;

p->hardirq_disable_event = 0;

p->softirqs_enabled = 1;

p->softirq_enable_ip = _THIS_IP_;

p->softirq_enable_event = 0;

p->softirq_disable_ip = 0;

p->softirq_disable_event = 0;

p->hardirq_context = 0;

p->softirq_context = 0;

#endif

p->pagefault_disabled = 0;

#ifdef CONFIG_LOCKDEP

p->lockdep_depth = 0; /* no locks held yet */

p->curr_chain_key = 0;

p->lockdep_recursion = 0;

#endif

#ifdef CONFIG_DEBUG_MUTEXES

p->blocked_on = NULL; /* not blocked yet */

#endif

#ifdef CONFIG_BCACHE

p->sequential_io = 0;

p->sequential_io_avg = 0;

#endif

/* Perform scheduler related setup. Assign this task to a CPU. */

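// Step 5: initialize the scheduler-related data structures

// sched_fork() initializes the data structures used for scheduling. A scheduling entity is abstracted by struct sched_entity; every process or thread is a scheduling entity.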

retval = sched_fork(clone_flags, p);

if (retval)

goto bad_fork_cleanup_policy;

// Step 6: initialize the remaining members of task_struct

retval = perf_event_init_task(p);

if (retval)

goto bad_fork_cleanup_policy;

retval = audit_alloc(p);

if (retval)

goto bad_fork_cleanup_perf;

/* copy all the process information */

// Each copy_xxx() helper below either shares or duplicates one resource, depending on clone_flags

shm_init_task(p);

retval = copy_semundo(clone_flags, p);

if (retval)

goto bad_fork_cleanup_audit;

// 1. copy_files() copies the process's open-file information, which is kept in a files_struct; every open file has a file descriptor

retval = copy_files(clone_flags, p);

if (retval)

goto bad_fork_cleanup_semundo;

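// 2. copy_fs() copies the process's directory information, which is kept in an fs_struct: the process's root directory and root filesystem (root), and its current directory and that directory's filesystem (pwd)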

retval = copy_fs(clone_flags, p);

if (retval)

goto bad_fork_cleanup_files;

// 3. copy_sighand() allocates a new sighand_struct, which mainly holds the signal handlers; it calls memcpy to copy sighand->action from the parent to the child

retval = copy_sighand(clone_flags, p);

if (retval)

goto bad_fork_cleanup_fs;

// 4. copy_signal() allocates and initializes a new signal_struct, the structure that tracks the signals sent to this process

retval = copy_signal(clone_flags, p);

if (retval)

goto bad_fork_cleanup_sighand;

// 5. copy_mm() calls dup_mm(), which allocates a new mm_struct and copies the parent's with memcpy; dup_mmap() then copies the memory mappings of the address space

retval = copy_mm(clone_flags, p);

if (retval)

goto bad_fork_cleanup_signal;

// 6. Copy the parent's namespaces

retval = copy_namespaces(clone_flags, p);

if (retval)

goto bad_fork_cleanup_mm;

// 7. Copy the parent's I/O context

retval = copy_io(clone_flags, p);

if (retval)

goto bad_fork_cleanup_namespaces;

// 8. Set up the child's architecture-specific thread state (kernel stack contents, registers, TLS)

retval = copy_thread_tls(clone_flags, stack_start, stack_size, p, tls);

if (retval)

goto bad_fork_cleanup_io;

if (pid != &init_struct_pid) {

pid = alloc_pid(p->nsproxy->pid_ns_for_children);

if (IS_ERR(pid)) {

retval = PTR_ERR(pid);

goto bad_fork_cleanup_io;

}

}

p->set_child_tid = (clone_flags & CLONE_CHILD_SETTID) ? child_tidptr : NULL;

/*

* Clear TID on mm_release()?

*/

p->clear_child_tid = (clone_flags & CLONE_CHILD_CLEARTID) ? child_tidptr : NULL;

#ifdef CONFIG_BLOCK

p->plug = NULL;

#endif

#ifdef CONFIG_FUTEX

p->robust_list = NULL;

#ifdef CONFIG_COMPAT

p->compat_robust_list = NULL;

#endif

INIT_LIST_HEAD(&p->pi_state_list);

p->pi_state_cache = NULL;

#endif

/*

* sigaltstack should be cleared when sharing the same VM

*/

if ((clone_flags & (CLONE_VM|CLONE_VFORK)) == CLONE_VM)

p->sas_ss_sp = p->sas_ss_size = 0;

/*

* Syscall tracing and stepping should be turned off in the

* child regardless of CLONE_PTRACE.

*/

user_disable_single_step(p);

clear_tsk_thread_flag(p, TIF_SYSCALL_TRACE);

#ifdef TIF_SYSCALL_EMU

clear_tsk_thread_flag(p, TIF_SYSCALL_EMU);

#endif

clear_all_latency_tracing(p);

/* ok, now we should be set up.. */

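// Step 7: set the child's PID

p->pid = pid_nr(pid);

// Depending on whether a thread or a process is being created, set the thread group leader, the tgid, and related fields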

if (clone_flags & CLONE_THREAD) {

p->exit_signal = -1;

p->group_leader = current->group_leader;

p->tgid = current->tgid;

} else {

if (clone_flags & CLONE_PARENT)

p->exit_signal = current->group_leader->exit_signal;

else

p->exit_signal = (clone_flags & CSIGNAL);

p->group_leader = p;

p->tgid = p->pid;

}

p->nr_dirtied = 0;

p->nr_dirtied_pause = 128 >> (PAGE_SHIFT - 10);

p->dirty_paused_when = 0;

p->pdeath_signal = 0;

INIT_LIST_HEAD(&p->thread_group);

p->task_works = NULL;

/*

* Ensure that the cgroup subsystem policies allow the new process to be

* forked. It should be noted the the new process's css_set can be changed

* between here and cgroup_post_fork() if an organisation operation is in

* progress.

*/

retval = cgroup_can_fork(p, cgrp_ss_priv);

if (retval)

goto bad_fork_free_pid;

/*

* Make it visible to the rest of the system, but dont wake it up yet.

* Need tasklist lock for parent etc handling!

*/

write_lock_irq(&tasklist_lock);

/* CLONE_PARENT re-uses the old parent */

if (clone_flags & (CLONE_PARENT|CLONE_THREAD)) {

p->real_parent = current->real_parent;

p->parent_exec_id = current->parent_exec_id;

} else {

p->real_parent = current;

p->parent_exec_id = current->self_exec_id;

}

spin_lock(&current->sighand->siglock);

/*

* Copy seccomp details explicitly here, in case they were changed

* before holding sighand lock.

*/

copy_seccomp(p);

/*

* Process group and session signals need to be delivered to just the

* parent before the fork or both the parent and the child after the

* fork. Restart if a signal comes in before we add the new process to

* it's process group.

* A fatal signal pending means that current will exit, so the new

* thread can't slip out of an OOM kill (or normal SIGKILL).

*/

recalc_sigpending();

if (signal_pending(current)) {

spin_unlock(&current->sighand->siglock);

write_unlock_irq(&tasklist_lock);

retval = -ERESTARTNOINTR;

goto bad_fork_cancel_cgroup;

}

// Record the struct pid for each PID type; attach_pid() below links the task to it

if (likely(p->pid)) {

ptrace_init_task(p, (clone_flags & CLONE_PTRACE) || trace);

init_task_pid(p, PIDTYPE_PID, pid);

if (thread_group_leader(p)) {

init_task_pid(p, PIDTYPE_PGID, task_pgrp(current));

init_task_pid(p, PIDTYPE_SID, task_session(current));

if (is_child_reaper(pid)) {

ns_of_pid(pid)->child_reaper = p;

p->signal->flags |= SIGNAL_UNKILLABLE;

}

p->signal->leader_pid = pid;

p->signal->tty = tty_kref_get(current->signal->tty);

list_add_tail(&p->sibling, &p->real_parent->children);

list_add_tail_rcu(&p->tasks, &init_task.tasks);

attach_pid(p, PIDTYPE_PGID);

attach_pid(p, PIDTYPE_SID);

__this_cpu_inc(process_counts);

} else {

current->signal->nr_threads++;

atomic_inc(&current->signal->live);

atomic_inc(&current->signal->sigcnt);

list_add_tail_rcu(&p->thread_group,

&p->group_leader->thread_group);

list_add_tail_rcu(&p->thread_node,

&p->signal->thread_head);

}

// Attach the new task to its struct pid (PIDTYPE_PID) so that PID lookups can find it

attach_pid(p, PIDTYPE_PID);

nr_threads++;

}

total_forks++;

spin_unlock(&current->sighand->siglock);

syscall_tracepoint_update(p);

write_unlock_irq(&tasklist_lock);

proc_fork_connector(p);

cgroup_post_fork(p, cgrp_ss_priv);

if (clone_flags & CLONE_THREAD)

threadgroup_change_end(current);

perf_event_fork(p);

trace_task_newtask(p, clone_flags);

uprobe_copy_process(p, clone_flags);

return p; // Step 8: return the new process descriptor

bad_fork_cancel_cgroup:

cgroup_cancel_fork(p, cgrp_ss_priv);

bad_fork_free_pid:

if (pid != &init_struct_pid)

free_pid(pid);

bad_fork_cleanup_io:

if (p->io_context)

exit_io_context(p);

bad_fork_cleanup_namespaces:

exit_task_namespaces(p);

bad_fork_cleanup_mm:

if (p->mm)

mmput(p->mm);

bad_fork_cleanup_signal:

if (!(clone_flags & CLONE_THREAD))

free_signal_struct(p->signal);

bad_fork_cleanup_sighand:

__cleanup_sighand(p->sighand);

bad_fork_cleanup_fs:

exit_fs(p); /* blocking */

bad_fork_cleanup_files:

exit_files(p); /* blocking */

bad_fork_cleanup_semundo:

exit_sem(p);

bad_fork_cleanup_audit:

audit_free(p);

bad_fork_cleanup_perf:

perf_event_free_task(p);

bad_fork_cleanup_policy:

#ifdef CONFIG_NUMA

mpol_put(p->mempolicy);

bad_fork_cleanup_threadgroup_lock:

#endif

if (clone_flags & CLONE_THREAD)

threadgroup_change_end(current);

delayacct_tsk_free(p);

bad_fork_cleanup_count:

atomic_dec(&p->cred->user->processes);

exit_creds(p);

bad_fork_free:

free_task(p);

fork_out:

return ERR_PTR(retval);

}

2. Kernel threads

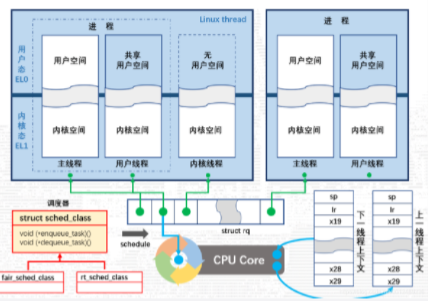

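In Linux, kernel threads differ from ordinary processes in the following ways. Kernel threads run only in kernel mode, whereas ordinary processes can run in either kernel mode or user mode. Because kernel threads run only in kernel mode, they use only linear addresses above PAGE_OFFSET. Ordinary processes, by contrast, can use the full 4 GB linear address space (on a 32-bit system), whether they are in user mode or kernel mode.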
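The address split can be illustrated with a few lines of arithmetic. The sketch below assumes the default 3 GB / 1 GB layout of 32-bit x86, where PAGE_OFFSET is 0xC0000000; other architectures and configurations use different values. The EX_ names are hypothetical and exist only for this example.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumption: default 32-bit x86 split, PAGE_OFFSET = 0xC0000000. */
#define EX_PAGE_OFFSET UINT32_C(0xC0000000)

/* True if the linear address lies in the range a kernel thread uses. */
bool ex_is_kernel_address(uint32_t linear)
{
    return linear >= EX_PAGE_OFFSET;
}

/* Size in bytes of the part of the 4 GB space at or above PAGE_OFFSET. */
uint64_t ex_kernel_region_size(void)
{
    return (UINT64_C(1) << 32) - EX_PAGE_OFFSET;
}
```

Under this assumption the kernel region is 1 GB, and everything below it is the 3 GB that only ordinary processes touch while in user mode.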

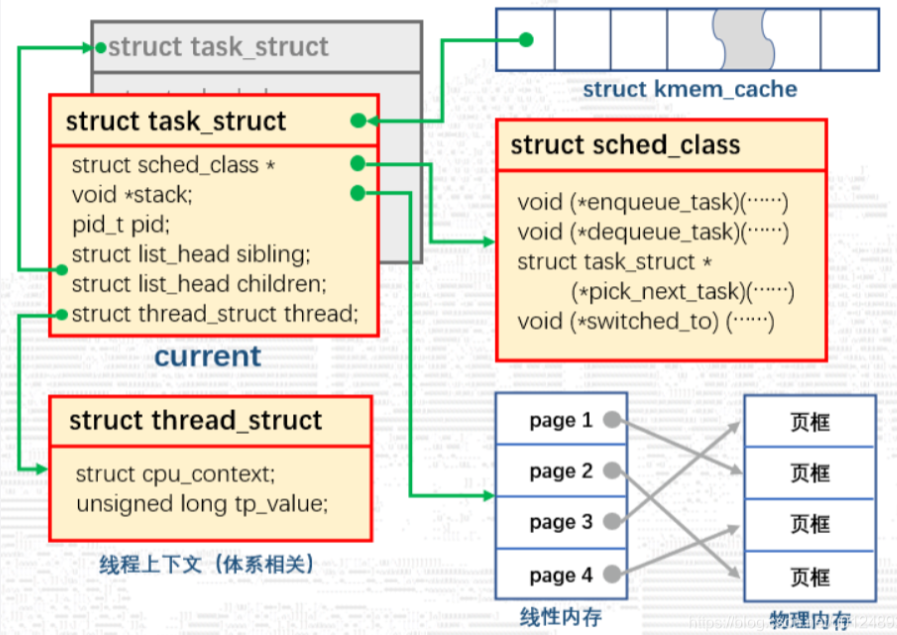

2.1 Main thread data structures

2.2 User threads and kernel threads

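Linux user threads (which belong to user processes) are created in user space. Although a thread is the kernel's basic unit of scheduling, a user thread's stack is not managed by the kernel; it is created and managed by a system library. The resources of user threads are therefore managed by the user-space library and have no necessary connection to kernel thread stacks; the only dependency is on the kernel's thread object for scheduling and execution. For the details, see the implementation of the pthread library, which ultimately calls the underlying clone interface.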
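The point that user space owns the stack can be seen by calling clone() directly, roughly the way a thread library would. This is a minimal sketch, not how pthread is implemented: it assumes Linux with glibc and a downward-growing stack, declares the glibc clone() wrapper by hand so it does not depend on feature-test macros, and uses the hypothetical names EX_CLONE_VM, EX_STACK_SIZE, ex_child, and ex_run_shared_vm_child.

```c
#include <signal.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>

#define EX_CLONE_VM   0x00000100   /* value from the kernel's uapi sched.h */
#define EX_STACK_SIZE (64 * 1024)  /* arbitrary size chosen for the example */

/* glibc's clone() wrapper, declared by hand for this sketch. */
int clone(int (*fn)(void *), void *stack, int flags, void *arg, ...);

/* Runs in the child; writes into memory it shares with the parent. */
static int ex_child(void *arg)
{
    *(int *)arg = 42;
    return 0;
}

/* Create a child that shares the caller's address space, wait for it,
 * and return the value it stored (or -1 on error). */
int ex_run_shared_vm_child(void)
{
    int shared = 0;
    int status;
    pid_t pid;
    char *stack = malloc(EX_STACK_SIZE);  /* the stack is allocated by user space */

    if (!stack)
        return -1;

    /* Pass the top of the block: the stack is assumed to grow downward. */
    pid = clone(ex_child, stack + EX_STACK_SIZE, EX_CLONE_VM | SIGCHLD, &shared);
    if (pid < 0 || waitpid(pid, &status, 0) != pid) {
        free(stack);
        return -1;
    }

    free(stack);
    return shared;
}
```

The kernel never allocates or frees the child's user stack here; it only schedules the task that runs on it, which is the division of labour described above.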

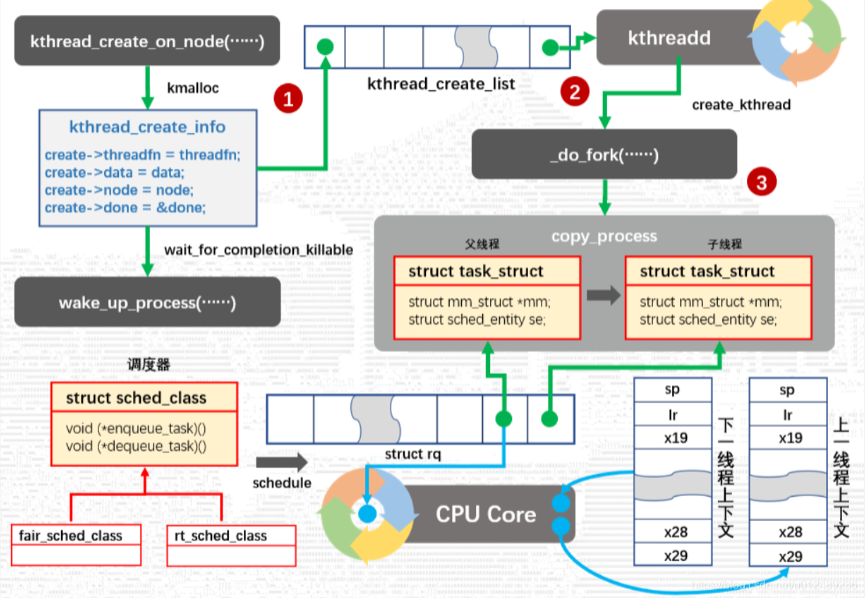

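Creating a kernel thread: kthread_create_on_node()

The function to run in the kernel thread, together with related information, is first packed into a kthread_create_info structure and added to kthreadd's kthread_create_list. kthreadd is then woken up to handle the creation request and does the actual work. The caller waits on a completion object for the result; once creation succeeds, the new thread can be scheduled and run.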
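The hand-off described above (pack a request, queue it, let a daemon drain the queue, then read the result) can be modeled in a few lines of single-threaded user-space C. This is only a sketch of the data flow: all ex_ names are hypothetical, a plain flag stands in for the completion, and "creating" a thread is collapsed into calling the function directly.

```c
#include <stddef.h>

/* Hypothetical stand-in for kthread_create_info. */
struct ex_request {
    int (*threadfn)(void *data);
    void *data;
    int done;                 /* stands in for the completion */
    int result;               /* stands in for create->result */
    struct ex_request *next;
};

/* Hypothetical stand-in for kthread_create_list (FIFO). */
struct ex_queue {
    struct ex_request *head;
    struct ex_request *tail;
};

/* Requester side: fill in the request and append it to the tail. */
void ex_submit(struct ex_queue *q, struct ex_request *r,
               int (*fn)(void *), void *data)
{
    r->threadfn = fn;
    r->data = data;
    r->done = 0;
    r->result = 0;
    r->next = NULL;
    if (q->tail)
        q->tail->next = r;
    else
        q->head = r;
    q->tail = r;
}

/* Daemon side: handle every queued request in order; return how many. */
int ex_drain(struct ex_queue *q)
{
    int handled = 0;

    while (q->head) {
        struct ex_request *r = q->head;

        q->head = r->next;
        if (!q->head)
            q->tail = NULL;
        r->next = NULL;

        r->result = r->threadfn(r->data); /* "create and run", collapsed */
        r->done = 1;                      /* requester may now read result */
        handled++;
    }
    return handled;
}

/* Example thread function: returns its argument plus one. */
int ex_add_one(void *data)
{
    return *(int *)data + 1;
}
```

In the kernel the requester and kthreadd run concurrently, so the list is protected by a spinlock and the done flag is a real completion; the order of operations is the same.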

struct task_struct *kthread_create_on_node(int (*threadfn)(void *data),

void *data, int node,

const char namefmt[],

...)

{

DECLARE_COMPLETION_ONSTACK(done);

struct task_struct *task;

struct kthread_create_info *create = kmalloc(sizeof(*create),

GFP_KERNEL);

if (!create)

return ERR_PTR(-ENOMEM);

create->threadfn = threadfn; // the thread function

create->data = data;

create->node = node;

create->done = &done;

spin_lock(&kthread_create_lock);

list_add_tail(&create->list, &kthread_create_list); // queue the request on kthread_create_list; the kthreadd thread processes it later

spin_unlock(&kthread_create_lock);

wake_up_process(kthreadd_task); // wake the thread that creates threads

/*

* Wait for completion in killable state, for I might be chosen by

* the OOM killer while kthreadd is trying to allocate memory for

* new kernel thread.

*/

if (unlikely(wait_for_completion_killable(&done))) { // wait until the requested kernel thread has been created

/*

* If I was SIGKILLed before kthreadd (or new kernel thread)

* calls complete(), leave the cleanup of this structure to

* that thread.

*/

if (xchg(&create->done, NULL))

return ERR_PTR(-EINTR);

/*

* kthreadd (or new kernel thread) will call complete()

* shortly.

*/

wait_for_completion(&done);

}

task = create->result; // the newly created thread

if (!IS_ERR(task)) {

static const struct sched_param param = { .sched_priority = 0 };

va_list args;

va_start(args, namefmt);

vsnprintf(task->comm, sizeof(task->comm), namefmt, args); // set the thread's name

va_end(args);

/*

* root may have changed our (kthreadd's) priority or CPU mask.

* The kernel thread should not inherit these properties.

*/

sched_setscheduler_nocheck(task, SCHED_NORMAL, &param);

set_cpus_allowed_ptr(task, cpu_all_mask);

}

kfree(create);

return task;

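}

The kernel provides a dedicated thread, kthreadd, for creating kernel threads. kthreadd is created during boot and never exits. When there are no creation requests it gives up the CPU voluntarily by calling schedule(); when a request arrives it is woken up again and carries out the actual thread creation.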

int kthreadd(void *unused)

{

struct task_struct *tsk = current;

/* Setup a clean context for our children to inherit. */

set_task_comm(tsk, "kthreadd");

ignore_signals(tsk);

set_cpus_allowed_ptr(tsk, cpu_all_mask);

set_mems_allowed(node_states[N_MEMORY]);

current->flags |= PF_NOFREEZE;

for (;;) {

set_current_state(TASK_INTERRUPTIBLE); // this thread never exits; with no creation requests it gives up the CPU and lets the scheduler run

if (list_empty(&kthread_create_list))

schedule();

__set_current_state(TASK_RUNNING);

spin_lock(&kthread_create_lock);

while (!list_empty(&kthread_create_list)) { // while kthread_create_list has pending requests

struct kthread_create_info *create;

create = list_entry(kthread_create_list.next,

struct kthread_create_info, list); // take the creation request from the list

list_del_init(&create->list);

spin_unlock(&kthread_create_lock);

create_kthread(create); // create the thread

spin_lock(&kthread_create_lock);

}

spin_unlock(&kthread_create_lock);

}

return 0;

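}

create_kthread() actually calls kernel_thread(), which in turn ends up calling _do_fork() to create the thread. The thread function is not passed in directly at creation time; instead a common proxy function, kthread(), is passed in. Only after the proxy is running and has done some initialization does it call the thread function.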
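The proxy-function idea can be sketched in user space. One detail worth modeling is that the proxy copies the function pointer and its argument into local variables before it signals the creator, because the creator frees the request structure afterwards. The code below is an illustration under that reading, with hypothetical ex_ names; a plain flag replaces the completion, and the free() inside the proxy simulates the creator's kfree(create).

```c
#include <stdlib.h>

/* Hypothetical stand-in for the request handed to the proxy. */
struct ex_start_info {
    int (*threadfn)(void *data);
    void *data;
    int *done;                /* stands in for the completion */
};

/* Proxy: copy what is needed, signal the creator, then run the real function. */
int ex_trampoline(struct ex_start_info *info)
{
    int (*fn)(void *) = info->threadfn;  /* local copies survive the free */
    void *data = info->data;

    *info->done = 1;   /* tell the creator the thread is up */
    free(info);        /* simulates the creator releasing the request */

    return fn(data);   /* only the local copies are used from here on */
}

/* Example thread function: doubles its argument. */
int ex_double(void *data)
{
    return 2 * *(int *)data;
}

/* Creator: build the request and start the proxy, never the function itself. */
int ex_spawn(int (*fn)(void *), void *data, int *done)
{
    struct ex_start_info *info = malloc(sizeof(*info));

    if (!info)
        return -1;
    info->threadfn = fn;
    info->data = data;
    info->done = done;
    return ex_trampoline(info);
}
```

Routing every thread through one proxy gives a single place for common setup and for the exit path, which is the role kthread() plays in the listing that follows.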

static void create_kthread(struct kthread_create_info *create)

{

int pid;

#ifdef CONFIG_NUMA

current->pref_node_fork = create->node;

#endif

/* We want our own signal handler (we take no signals by default). */

pid = kernel_thread(kthread, create, CLONE_FS | CLONE_FILES | SIGCHLD); // create the kernel thread via _do_fork()

if (pid < 0) {

/* If user was SIGKILLed, I release the structure. */

struct completion *done = xchg(&create->done, NULL);

if (!done) {

kfree(create);

return;

}

create->result = ERR_PTR(pid);

complete(done);

}

}

static int kthread(void *_create)

{

/* Copy data: it's on kthread's stack */

struct kthread_create_info *create = _create; // the request passed in by the creator

int (*threadfn)(void *data) = create->threadfn; // the thread function

void *data = create->data;

struct completion *done;

struct kthread self;

int ret;

self.flags = 0;

self.data = data;

init_completion(&self.exited);

init_completion(&self.parked);

current->vfork_done = &self.exited;

/* If user was SIGKILLed, I release the structure. */

done = xchg(&create->done, NULL); // take the done completion

if (!done) {

kfree(create);

do_exit(-EINTR);

}

/* OK, tell user we're spawned, wait for stop or wakeup */

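__set_current_state(TASK_UNINTERRUPTIBLE); // set the kernel thread's state

create->result = current; // hand back the current task_struct

complete(done); // notify the creator that the thread exists, then give up the CPU so the creator can run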

schedule();

ret = -EINTR;

if (!test_bit(KTHREAD_SHOULD_STOP, &self.flags)) {

__kthread_parkme(&self);

ret = threadfn(data); // run the actual thread function

}

/* we can't just return, we must preserve "self" on stack */

do_exit(ret); // the current task exits

}
