Spark client configuration

I. Prerequisites (must already be installed)

Hadoop client

Hive client

JDK 1.8
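A quick way to confirm these prerequisites on the client is sketched below. It assumes the java, hadoop and hive launchers are expected on PATH; check_prereqs and java_major are hypothetical helpers, not part of any of these tools.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which required commands are on PATH.
# Returns non-zero if any of them is missing.
check_prereqs() {
  local cmd missing=0
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "found:   $cmd"
    else
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Hypothetical helper: major Java version from a version string such as
# 1.8.0_181 (legacy "1.x" scheme, gives 8) or 11.0.2 (gives 11).
java_major() {
  local v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;   # drop the legacy "1." prefix
  esac
  echo "${v%%[._]*}"      # keep everything before the first "." or "_"
}

# Usage (assumes the tools are installed):
#   check_prereqs java hadoop hive
#   java_major "$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')"
```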

II. Installation package

spark-2.3.2-bin-hadoop2.6.tgz

III. Configure the client

tar -zxvf spark-2.3.2-bin-hadoop2.6.tgz -C /opt/beh/core
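The tarball unpacks into /opt/beh/core/spark-2.3.2-bin-hadoop2.6, while SPARK_HOME in spark-env.sh is /opt/beh/core/spark. The source does not say how the two are connected; one common approach, assumed here, is a symlink. link_spark_home is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper (assumption: SPARK_HOME is a symlink to the release dir).
# Creates or replaces $base/spark -> $release as a relative link inside $base.
link_spark_home() {
  local base="$1" release="$2"
  if [ ! -d "$base/$release" ]; then
    echo "no such directory: $base/$release" >&2
    return 1
  fi
  # -n: replace an existing "spark" symlink instead of creating a link inside it
  ln -sfn "$release" "$base/spark"
}

# Usage: link_spark_home /opt/beh/core spark-2.3.2-bin-hadoop2.6
```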

Copy the Hadoop configuration files core-site.xml, hdfs-site.xml and yarn-site.xml into spark/conf.

Copy the Hive configuration file hive-site.xml into spark/conf.
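The two copy steps can be done together as sketched below. The Hadoop configuration directory matches HADOOP_CONF_DIR in spark-env.sh; the Hive configuration directory in the usage line (/opt/beh/core/hive/conf) is an assumption, so adjust it to wherever hive-site.xml actually lives. copy_client_confs is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the client configs Spark needs into its conf dir.
# All four files are checked first so a partial copy is not left behind.
copy_client_confs() {
  local hadoop_conf="$1" hive_conf="$2" spark_conf="$3" f
  local files
  files=("$hadoop_conf/core-site.xml" "$hadoop_conf/hdfs-site.xml"
         "$hadoop_conf/yarn-site.xml" "$hive_conf/hive-site.xml")
  for f in "${files[@]}"; do
    if [ ! -f "$f" ]; then
      echo "missing: $f" >&2
      return 1
    fi
  done
  cp "${files[@]}" "$spark_conf/"
}

# Usage (the Hive path is an assumption):
#   copy_client_confs /opt/beh/core/hadoop/etc/hadoop /opt/beh/core/hive/conf /opt/beh/core/spark/conf
```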

IV. Edit spark-env.sh

Add the following lines to spark/conf/spark-env.sh:

export HADOOP_HOME=/opt/beh/core/hadoop

export HADOOP_CONF_DIR=/opt/beh/core/hadoop/etc/hadoop

export YARN_CONF_DIR=/opt/beh/core/hadoop/etc/hadoop

export PATH=/usr/local/openssl/bin:/sbin:/bin:/usr/sbin:/usr/local/bin:$PATH

export JAVA_HOME=/opt/beh/core/jdk1.8.0_181

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

#export SCALA_HOME=/opt/beh/core/scala

export SPARK_HOME=/opt/beh/core/spark

export SPARK_LIBARY_PATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$HADOOP_HOME/lib/native

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$SCALA_HOME/bin:$SPARK_HOME/bin:$PATH

export SPARK_LOG_DIR=/opt/beh/core/spark/logs
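If spark/conf/spark-env.sh does not exist yet, it can be created from the template that ships with Spark (cp conf/spark-env.sh.template conf/spark-env.sh) before adding the lines above. The sketch below is a hypothetical sanity check, not part of Spark: it sources the file in an otherwise empty environment and reports which of the variables set above (JAVA_HOME, HADOOP_CONF_DIR, YARN_CONF_DIR, SPARK_HOME) the file leaves empty. Pass it an absolute path.

```bash
#!/usr/bin/env bash
# Hypothetical check: source spark-env.sh in an empty environment (PATH kept)
# and print every listed variable the file leaves unset or empty.
check_spark_env() {
  local file="$1"
  env -i PATH="$PATH" bash -c '
    . "$1" || exit 1
    status=0
    for var in JAVA_HOME HADOOP_CONF_DIR YARN_CONF_DIR SPARK_HOME; do
      if [ -z "${!var}" ]; then      # indirect expansion: value of $var
        echo "not set: $var"
        status=1
      fi
    done
    exit "$status"
  ' _ "$file"
}

# Usage: check_spark_env /opt/beh/core/spark/conf/spark-env.sh
```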

V. Test spark-sql, spark-shell and spark-submit

1. spark-sql

List the Hive databases:

spark-sql> show databases;

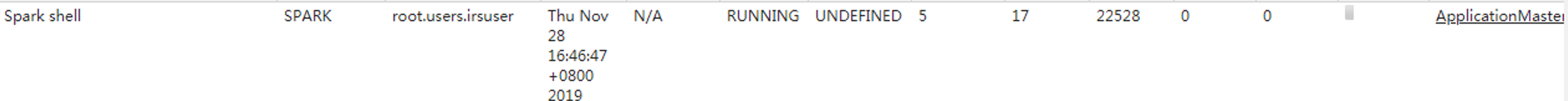
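The same check can be run non-interactively, for example from a deployment script. This sketch uses spark-sql's -e option (run one statement and exit) and relies on Hive's default database always being listed; it assumes query results arrive on stdout while logs go to stderr. SPARK_SQL_BIN is a hypothetical override used only so the function can be exercised without a cluster.

```bash
#!/usr/bin/env bash
# Hypothetical smoke test: succeed if spark-sql lists the Hive "default" database.
# SPARK_SQL_BIN (hypothetical) overrides the spark-sql command, e.g. for testing.
check_spark_sql() {
  local bin="${SPARK_SQL_BIN:-spark-sql}"
  # stderr (logs) is discarded; database names are assumed to be on stdout.
  "$bin" -e 'show databases;' 2>/dev/null | grep -w default >/dev/null
}

# Usage: check_spark_sql && echo "spark-sql can read the Hive metastore"
```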

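2. spark-shell --master yarn-client --executor-cores 4 --num-executors 4 --executor-memory 4g

(The yarn-client master URL is deprecated since Spark 2.0; the equivalent form is --master yarn --deploy-mode client.)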

3. spark-submit --class org.apache.spark.examples.SparkPi --master yarn /opt/beh/core/spark/examples/jars/spark-examples_2.11-2.3.2.jar
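The spark-shell and spark-submit tests can also be scripted, as sketched below. Assumptions: piping Scala statements into spark-shell runs them and exits at :quit; SparkPi prints a line starting with "Pi is roughly" to the driver's stdout, which in client deploy mode (the default for --master yarn) is the local terminal. The function names and the SPARK_SHELL_BIN / SPARK_SUBMIT_BIN overrides are hypothetical. The trailing 10 passed to SparkPi is its optional number of partitions.

```bash
#!/usr/bin/env bash
# Hypothetical smoke test for spark-shell on YARN: sum 1..100 on the cluster.
# Extra arguments (e.g. --num-executors 4) are passed through to spark-shell.
check_spark_shell() {
  local bin="${SPARK_SHELL_BIN:-spark-shell}"
  printf '%s\n' 'println("sum=" + sc.parallelize(1 to 100).sum())' ':quit' \
    | "$bin" --master yarn --deploy-mode client "$@" 2>/dev/null \
    | grep -F 'sum=5050.0' >/dev/null
}

# Hypothetical smoke test for spark-submit: run SparkPi and print its result line.
check_spark_pi() {
  local bin="${SPARK_SUBMIT_BIN:-spark-submit}"
  local jar="${1:-/opt/beh/core/spark/examples/jars/spark-examples_2.11-2.3.2.jar}"
  "$bin" --class org.apache.spark.examples.SparkPi --master yarn "$jar" 10 2>/dev/null \
    | grep 'Pi is roughly'
}

# Usage:
#   check_spark_shell --executor-cores 4 --num-executors 4 --executor-memory 4g
#   check_spark_pi
```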

VI. Errors encountered

1. Failed to connect to driver

2019-11-27 17:21:55 ERROR ApplicationMaster:70 - Failed to connect to driver at r04-client:44696, retrying ...

2019-11-27 17:21:58 ERROR ApplicationMaster:70 - Failed to connect to driver at r04-client:44696, retrying ...

2019-11-27 17:21:59 ERROR ApplicationMaster:91 - Uncaught exception:

org.apache.spark.SparkException: Failed to connect to driver!

at org.apache.spark.deploy.yarn.ApplicationMaster.waitForSparkDriver(ApplicationMaster.scala:672)

at org.apache.spark.deploy.yarn.ApplicationMaster.runExecutorLauncher(ApplicationMaster.scala:532)

at org.apache.spark.deploy.yarn.ApplicationMaster.org$apache$spark$deploy$yarn$ApplicationMaster$$runImpl(ApplicationMaster.scala:347)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$2.apply$mcV$sp(ApplicationMaster.scala:260)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$2.apply(ApplicationMaster.scala:260)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$2.apply(ApplicationMaster.scala:260)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$5.run(ApplicationMaster.scala:815)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1692)

at org.apache.spark.deploy.yarn.ApplicationMaster.doAsUser(ApplicationMaster.scala:814)

at org.apache.spark.deploy.yarn.ApplicationMaster.run(ApplicationMaster.scala:259)

at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:839)

at org.apache.spark.deploy.yarn.ExecutorLauncher$.main(ApplicationMaster.scala:869)

at org.apache.spark.deploy.yarn.ExecutorLauncher.main(ApplicationMaster.scala)

2019-11-27 17:21:59 INFO ApplicationMaster:54 - Final app status: FAILED, exitCode: 13, (reason: Uncaught exception: org.apache.spark.SparkException: Failed to connect to driver!)

2019-11-27 17:21:59 INFO ShutdownHookManager:54 - Shutdown hook called

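Fix: check the hosts file on every host and make sure the client's hostname is listed on every machine. In yarn-client mode the driver runs on the client, and the ApplicationMaster (here ExecutorLauncher, running on a cluster node) connects back to it by hostname, r04-client:44696 in the log above; a node that cannot resolve the client's hostname cannot reach the driver.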
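A sketch for checking this across the cluster: host_in_hosts_file inspects a hosts file directly, and check_cluster_resolves runs getent hosts on each node over ssh, which also covers DNS. Both are hypothetical helpers, and the node names in the usage lines are placeholders, not hosts from this setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if NAME appears as a hostname or alias
# (ignoring comments) in a hosts-format file, default /etc/hosts.
host_in_hosts_file() {
  local name="$1" file="${2:-/etc/hosts}"
  awk -v n="$name" '
    { sub(/#.*/, "") }                                   # strip comments
    { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } # field 1 is the IP
    END { exit !found }
  ' "$file"
}

# Hypothetical helper: ask every node (over ssh) whether it can resolve CLIENT.
check_cluster_resolves() {
  local client="$1" node rc=0
  shift
  for node in "$@"; do
    if ssh -n "$node" getent hosts "$client" >/dev/null; then
      echo "ok:     $node resolves $client"
    else
      echo "FAILED: $node cannot resolve $client"
      rc=1
    fi
  done
  return "$rc"
}

# Usage (node names are placeholders):
#   host_in_hosts_file r04-client
#   check_cluster_resolves r04-client node01 node02 node03
```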

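2. topology.py not found

The job failed with an error saying /etc/hadoop/conf.cloudera.yarn/topology.py could not be found. The copied core-site.xml references a rack-topology script from the Cloudera-managed cluster that does not exist on this client.

Fix: comment out this property in core-site.xml: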

<!--

<property>

<name>net.topology.script.file.name</name>

<value>/etc/hadoop/conf.cloudera.yarn/topology.py</value>

</property>

-->
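To confirm which topology script the client configuration points to, and whether it exists, something like the following can be used. It assumes hdfs getconf -confKey prints nothing on stdout when the key is unset; pointing HADOOP_CONF_DIR at spark/conf makes getconf read the copied core-site.xml rather than the Hadoop client's own. topology_script_status is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: classify the configured topology script path.
#   empty             -> "unset" (no script configured; nothing to check)
#   executable file   -> "ok"
#   anything else     -> "missing: <path>" with exit status 1 (the error above)
topology_script_status() {
  local script="$1"
  if [ -z "$script" ]; then
    echo "unset"
  elif [ -f "$script" ] && [ -x "$script" ]; then
    echo "ok"
  else
    echo "missing: $script"
    return 1
  fi
}

# Usage (assumption: getconf prints nothing on stdout for an unset key):
#   topology_script_status "$(HADOOP_CONF_DIR=/opt/beh/core/spark/conf \
#       hdfs getconf -confKey net.topology.script.file.name 2>/dev/null)"
```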
