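The number of neurons (units) in the cell does not have to equal the number of classes.

When the two differ: in this code the number of classes is len(char2idx), the number of distinct characters in the sample. It is also the depth of each one-hot input vector.

Here I set the number of units in the cell to len(char2idx)*2.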

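Changing the unit count also changes the shape of the RNN output. Its last dimension equals the number of units in the cell, not the number of classes. In that case you need to:

- 1. Reshape the output to 2-D: [-1, hidden_size], i.e. [batch_size * sequence_length, hidden_size].

- 2. Pass it through a fully connected layer so that it becomes [batch_size * sequence_length, num_classes]. Here that is [sequence_length, num_classes], because batch_size = 1.

- 3. Reshape again so that it becomes [batch_size, sequence_length, num_classes].

The result is 3-D data again and can be used as before (here as the logits for sequence_loss). The mismatch between num_units and the number of classes no longer has any effect.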

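```python
# 1. [batch_size, sequence_length, rnn_hidden_size] -> [batch_size * sequence_length, rnn_hidden_size]
X_for_fc = tf.reshape(outputs, [-1, rnn_hidden_size])
# 2. fully connected layer, no activation -> [batch_size * sequence_length, num_classes]
outputs = contrib.layers.fully_connected(
    inputs=X_for_fc, num_outputs=num_classes, activation_fn=None)
# 3. -> [batch_size, sequence_length, num_classes]
outputs = tf.reshape(outputs, [batch_size, sequence_length, num_classes])
```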
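The three steps can also be checked without TensorFlow 1.x. The NumPy sketch below tracks shapes only: random numbers stand in for the LSTM output, and a plain matrix product `X_for_fc @ W + b` stands in for `fully_connected` with `activation_fn=None`. The sizes and the names `W` and `b` are illustrative assumptions, not values from this post.

```python
import numpy as np

# Illustrative sizes (assumed); in the post they are derived from len(char2idx).
batch_size, sequence_length = 1, 5
num_classes = 4
rnn_hidden_size = num_classes * 2  # deliberately different from num_classes

rng = np.random.default_rng(0)
# Stand-in for the dynamic_rnn output: the last axis is the number of LSTM units.
outputs = rng.standard_normal((batch_size, sequence_length, rnn_hidden_size))

# Step 1: merge batch and time into one axis -> [batch*seq, hidden]
X_for_fc = outputs.reshape(-1, rnn_hidden_size)

# Step 2: one dense layer without activation -> [batch*seq, num_classes]
W = rng.standard_normal((rnn_hidden_size, num_classes))  # hypothetical weights
b = np.zeros(num_classes)                                # hypothetical bias
logits = X_for_fc @ W + b

# Step 3: restore the time axis -> [batch, seq, num_classes]
logits = logits.reshape(batch_size, sequence_length, num_classes)

print(X_for_fc.shape)  # (5, 8)
print(logits.shape)    # (1, 5, 4)
# The same W and b are applied at every time step:
print(np.allclose(logits[0, 2], outputs[0, 2] @ W + b))  # True
```

Because the dense layer works on the flattened 2-D tensor, one weight matrix is shared by all time steps, which is why the reshape before it is needed.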

The full code is as follows:

import warnings

warnings.filterwarnings('ignore')

import tensorflow as tf

from tensorflow.contrib import rnn

from tensorflow import contrib

import numpy as np

sample = " 这是一个基于tensorflow的RNN短句子练习 (CSDN_qihao) "

idx2char = list(set(sample))

char2idx = {c: i for i, c in enumerate(idx2char)}

sample_idx = [char2idx[c] for c in sample]

x_data = [sample_idx[:-1]]

y_data = [sample_idx[1:]]

print(x_data)

print(y_data)

# 一些参数

dic_size = len(char2idx)

rnn_hidden_size = len(char2idx) *2 #***********

num_classes = len(char2idx) # 最终输出大小(RNN或softmax等)

batch_size = 1

sequence_length = len(sample) - 1

X = tf.placeholder(tf.int32, [None, sequence_length]) # X data

Y = tf.placeholder(tf.int32, [None, sequence_length]) # Y label

X_one_hot = tf.one_hot(X, num_classes) # one hot: 1 -> 0 1 0 0 0 0 0 0 0 0

cell = tf.contrib.rnn.BasicLSTMCell(num_units=rnn_hidden_size, state_is_tuple=True)

initial_state = cell.zero_state(batch_size, tf.float32)

outputs, _states = tf.nn.dynamic_rnn(cell, X_one_hot , initial_state=initial_state, dtype=tf.float32)

X_for_fc = tf.reshape(outputs,[-1,rnn_hidden_size])

outputs = contrib.layers.fully_connected(

inputs=X_for_fc, num_outputs=num_classes, activation_fn=None)

outputs = tf.reshape(outputs,[batch_size,sequence_length,num_classes])

weights = tf.ones([batch_size, sequence_length])

sequence_loss = tf.contrib.seq2seq.sequence_loss(logits=outputs, targets=Y,weights=weights)

loss = tf.reduce_mean(sequence_loss)

train = tf.train.GradientDescentOptimizer(learning_rate=0.1).minimize(loss)

prediction = tf.argmax(outputs, axis=2)

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

# print(sess.run(X_one_hot,feed_dict={X: x_data}))

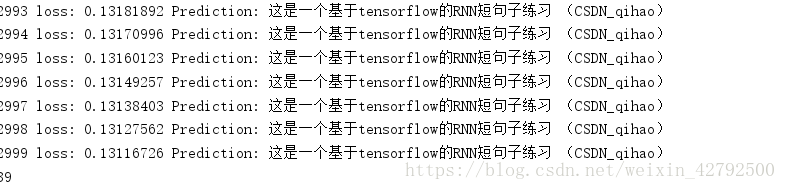

for i in range(3000):

l, _ = sess.run([loss, train], feed_dict={X: x_data, Y: y_data})

result = sess.run(prediction, feed_dict={X: x_data})

# print char using dic

result_str = [idx2char[c] for c in np.squeeze(result)]

print(i, "loss:", l, "Prediction:", ''.join(result_str))

print(len(result_str))输出结果:
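The data preparation and the decoding in the training loop are easiest to follow on a tiny string. The sketch below uses the toy input `"hello"` (an assumption, not the post's sentence) and `sorted(set(...))` instead of `list(set(...))`, only so that the indices are reproducible; the post's version may order characters differently on each run.

```python
# Toy input chosen for illustration; the post trains on a longer sentence.
sample = "hello"

# sorted() is an assumption made here for reproducible indices;
# the post uses list(set(sample)), whose order can change between runs.
idx2char = sorted(set(sample))                      # index -> character
char2idx = {c: i for i, c in enumerate(idx2char)}   # character -> index
sample_idx = [char2idx[c] for c in sample]

x_data = [sample_idx[:-1]]  # inputs:  h e l l
y_data = [sample_idx[1:]]   # targets: e l l o (each input's next character)

sequence_length = len(sample) - 1
num_classes = len(char2idx)

# What tf.one_hot(X, num_classes) produces for the first (and only) batch entry
x_one_hot = [[1 if j == i else 0 for j in range(num_classes)] for i in x_data[0]]

# Decoding: map predicted indices back to characters, as the training loop does.
predicted = y_data[0]  # pretend the model predicted every target correctly
decoded = ''.join(idx2char[i] for i in predicted)

print(x_data, y_data)  # [[1, 0, 2, 2]] [[0, 2, 2, 3]]
print(x_one_hot[0])    # [0, 1, 0, 0]  ('h' has index 1)
print(decoded)         # ello
```

A perfectly trained model therefore prints the sample without its first character, which is what the `Prediction:` column converges to.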

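With its default arguments (`average_across_timesteps=True`, `average_across_batch=True`), `tf.contrib.seq2seq.sequence_loss` returns a single scalar: the per-step softmax cross-entropy, weighted by `weights` and averaged. With `weights` all ones this is the plain mean, so the extra `tf.reduce_mean` in the code above leaves the value unchanged. The function `sequence_loss_np` below is a hypothetical NumPy re-implementation for checking this by hand, not TensorFlow's source; the input values are made up.

```python
import numpy as np

def sequence_loss_np(logits, targets, weights):
    """Weighted mean of per-step softmax cross-entropy: sum(w * ce) / sum(w).

    logits:  [batch, time, num_classes] float array
    targets: [batch, time] int array of class indices
    weights: [batch, time] float array
    """
    # Numerically stable log-softmax over the class axis
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Negative log-probability of the target class at each (batch, time) position
    ce = -np.take_along_axis(log_probs, targets[..., None], axis=-1)[..., 0]
    return (weights * ce).sum() / weights.sum()

# Made-up example: batch_size=1, sequence_length=3, num_classes=2
logits = np.zeros((1, 3, 2))   # uniform predictions at every step
targets = np.array([[0, 1, 1]])
weights = np.ones((1, 3))      # same role as tf.ones([batch_size, sequence_length])

loss = sequence_loss_np(logits, targets, weights)
print(loss)  # approximately log(2) = 0.6931
```

Uniform logits over two classes give a cross-entropy of log(2) at every step, so the average is log(2) as well; setting a weight to 0 would drop that time step from the average.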