1.utils

import jieba

import torch

import re

jieba.load_userdict('./datas/jieba_words.txt')

stop_words=['的','了','啊']

word_mapping={

'好的很':'很好',

'好极':'很好',

'恰到好处':'很好',

'足量':'量足'

}

def acc(pred_score, target):

pred = torch.argmax(pred_score, 1).long()

target = target.long()

return torch.mean((pred == target).float())

def is_punctuation(ch):

punctuation = r"""!"#$%&'()*+,-./:;<=>?@[\]^_`{|},。:?!‘“……;~【】《》{}()"""

return ch in punctuation

def split_text(text):

text=re.sub(r'\W+','E',text)

words=[]

for word in jieba.cut(text):

word=word.strip() #去掉前后空格

if len(word)==0:

continue

# if is_punctuation(word):

# word='EOF'

if word in stop_words:

continue

word=word_mapping.get(word,word)

words.append(word)

return words

def sparse_2_dense_array(arr,n):

result=[0]*n

for t in arr:

result[t[0]]=t[1]

return result

2.classify model and train

import re

import numpy as np

from tqdm import tqdm

import pandas as pd

from gensim.corpora import Dictionary

import torch

import torch.nn as nn

import torch.nn.functional as F

from sklearn.model_selection import train_test_split

from utils import *

jieba.load_userdict('./datas/jieba_words.txt')

stop_words=['的','了','啊']

word_mapping={

'好的很':'很好',

'好极':'很好',

'恰到好处':'很好',

'足量':'量足'

}

class Network(nn.Module):

def __init__(self,features,n_class,hidden_units=None):

super(Network, self).__init__()

in_features=features

if hidden_units is None:

hidden_units=[64,128,256]

layers=[]

for _uint in hidden_units:

fc=nn.Linear(in_features=in_features,out_features=_uint)

in_features=_uint

layers.append(fc)

layers.append(nn.ReLU())

layers.append(nn.Linear(in_features=hidden_units[-1],out_features=n_class))

self.model=nn.Sequential(*layers)

def forward(self,x):

return self.model(x)

def train():

path='./datas/waimai.csv'

n_class=2

batch_size = 16

total_epoch = 100

df=pd.read_csv(path)

y=[]

x0=[]

with open('./output/t0.txt','w',encoding='utf-8') as writer:

for value in tqdm(df.values):

y.append(int(value[0]))

_words=split_text(str(value[1]))

x0.append(split_text(str(value[1])))

writer.writelines(f'{value[0]},{value[1]}。{"|".join(_words)}\n')

word_dict=Dictionary(x0)

print(f'单词数目:{len(word_dict)}')

word_dict.filter_extremes(no_below=3,no_above=0.8)#出现次数少于三次或者出现文档超过0.8数目的单词过滤掉

# [word_dict[k] for k, v in word_dict.dfs.items() if v <= 3]

word_dict.compactify() #将去除单词产生的空白位置进行重新计算

print(f'单词数目:{len(word_dict)}')

# print(len(x0))

# print(len(y))

word_dict.save('./output/word_dict.pkl')

n = len(word_dict)

x1 = []

for doc in x0:

doc = word_dict.doc2bow(doc)

x1.append(sparse_2_dense_array(doc, n))

# x2,_=ldamodel.inference(x1) #x2的shape为(11987, 20)

x=np.asarray(x1)

y=np.asarray(y)

train_x, test_x, train_y, test_y = train_test_split(x, y)

train_samples, n = train_x.shape #总样本数,维度大小,为20

test_samples, _ = test_x.shape

print(f"训练数据特征矩阵形状:{train_x.shape}, 目标属性形状:{train_y.shape}")

print(f"验证数据特征矩阵形状:{test_x.shape}, 目标属性形状:{test_y.shape}")

device=torch.device('cuda' if torch.cuda.is_available() else 'cpu')

_net = Network(features=n, n_class=n_class)

_loss_fn = nn.CrossEntropyLoss()

_optimizer = torch.optim.SGD(_net.parameters(), lr=0.5)

train_total_batch = train_samples // batch_size

test_total_batch = test_samples // batch_size

for epoch in range(total_epoch):

_net.train().to(device) # 将模型设置为训练阶段

# permutation(n): 产生(0,1,2,3,4,...n-1)n个数字,并随机打乱顺序

random_indexes = np.random.permutation(train_samples)

for batch in range(train_total_batch):

si = batch * batch_size

ei = si + batch_size

_indexes = random_indexes[si:ei]

# 得到当前批次的数据

_x = torch.from_numpy(train_x[_indexes]).float().to(device)

_y = torch.from_numpy(train_y[_indexes]).long().to(device)

# 前向过程

pred_y = _net(_x)

loss = _loss_fn(pred_y, _y) # 顺序不能错,第一个是预测值,第二个是实际值

# 反向传播,在每次反向传播之前将临时保存的梯度值重置为0

_optimizer.zero_grad()

loss.backward()

# 参数更新

_optimizer.step()

if batch == train_total_batch - 1:

print(f"TRAIN {epoch}/{total_epoch} {batch}/{train_total_batch} loss:{loss:.4f} acc:{acc(pred_y, _y)}")

# 当一个epoch数据训练完后,进行测试数据的评估

_net.eval().to(device) # 将模型设置为校验阶段

test_loss = []

test_acc = []

random_indexes = np.random.permutation(test_samples)

for batch in range(test_total_batch):

si = batch * batch_size

ei = si + batch_size

_indexes = random_indexes[si:ei]

# 得到当前批次的数据

_x = torch.from_numpy(test_x[_indexes]).float().to(device)

_y = torch.from_numpy(test_y[_indexes]).long().to(device)

# 前向过程

pred_y = _net(_x)

loss = _loss_fn(pred_y, _y) # 顺序不能错,第一个是预测值,第二个是实际值

# 添加评估指标

test_loss.append(loss.item()) # item是当tensor对象里面是一个单独的数字的时候,直接将其转换为普通的python数值

test_acc.append(acc(pred_y, _y).item())

print(f"TEST {epoch}/{total_epoch} loss:{np.mean(test_loss):.4f} acc:{np.mean(test_acc)}")

torch.save(_net, './output/model.pt')

torch.save(_net.state_dict(), './output/params.pt')

if __name__ == '__main__':

train()

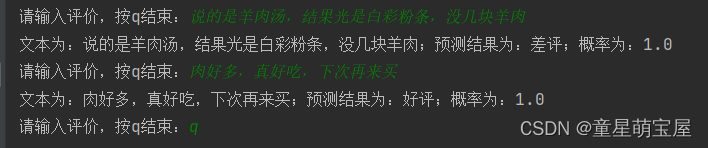

3.test

import torch

from classify import Network

from gensim.corpora import Dictionary

from utils import *

import numpy as np

if __name__ == '__main__':

classes=['差评','好评']

device=torch.device('cuda' if torch.cuda.is_available() else 'cpu')

word_dict=Dictionary.load('./output/word_dict.pkl')

# net=torch.load('./output/model.pt')

net=Network(len(word_dict),2)

state_dict=torch.load('./output/params.pt')

net.load_state_dict(state_dict)

net.eval().to(device)

while True:

text = input('请输入评价,按q结束:')

if text=='q':

break

words=split_text(text)

words=word_dict.doc2bow(words)

words=sparse_2_dense_array(words,len(word_dict))

with torch.no_grad():

y_=net(torch.Tensor([words]).to(device)).to(torch.float32) #tensor([[ 19.8573, -18.0917]], device='cuda:0')

p_=torch.softmax(y_,dim=1).cpu().numpy()[0]

y_=y_.cpu().numpy()[0] #[ 19.857328 -18.091734]

y_=np.argmax(y_)

print(f'文本为:{text};预测结果为:{classes[y_]};概率为:{p_[y_]}')

3820

3820

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?