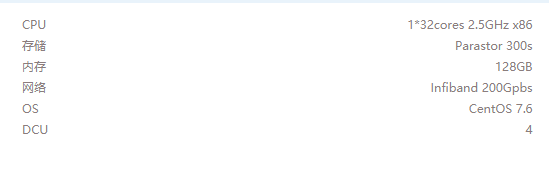

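Supercomputing center CentOS environment

Installing new software

As a regular user without root privileges, I need to install packages that the system lacks, as well as newer versions of some existing ones. The system is CentOS 7.6 and the package manager is yum. The gtest package serves as the example.

Main steps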

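- Search the repositories. This lists every gtest-related RPM package in the configured repositories:

  ```bash
  yum search all gtest
  ```

- Download the RPM package:

  ```bash
  yumdownloader gtest-devel.x86_64
  ```

- Install under a local path. `rpm2cpio` unpacks the package's own directory tree, so the files land under `~/local/usr/...`:

  ```bash
  mkdir -p ~/local
  cd ~/local
  rpm2cpio <path-to-rpms>/gtest-devel.x86_64.rpm | cpio -idv
  ```

- Set the environment variables PATH, LD_LIBRARY_PATH and MANPATH:

  ```bash
  echo 'export PATH=$HOME/local/usr/bin:$PATH' >> ~/.bashrc
  echo 'export LD_LIBRARY_PATH=$HOME/local/usr/lib:$HOME/local/usr/lib64:$LD_LIBRARY_PATH' >> ~/.bashrc
  source ~/.bashrc
  ```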

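That completes the gtest installation. When installing cmake3, running cmake3 failed with errors about missing libuv, libzstd, Modules and so on, so cmake3-data, libuv and libzstd had to be installed as well. When installing devtoolset-10-g++, linking the compiled sources failed until the matching devtoolset-10-libstdc++-devel was installed. Search for whatever is missing, install it, and resolve the problems one by one. Remember to pass `all` to `yum search`. Reference: how-to-install-packages-in-linux-centos-without-root-user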
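When several packages are unpacked this way, `~/.bashrc` tends to collect duplicate path entries. The following is a minimal sketch of an idempotent alternative, assuming the RPMs were unpacked into `$HOME/local` as above. The helper name `prepend_dir` and the `LOCAL_PREFIX` variable are hypothetical and chosen only for illustration. MANPATH is left out on purpose, because setting it can hide the system manual pages depending on the `man` implementation.

```bash
#!/bin/bash
# prepend_dir VAR DIR: put DIR at the front of the colon-separated variable
# VAR unless it is already listed there. (Hypothetical helper name.)
prepend_dir() {
    local var="$1" dir="$2"
    local current="${!var}"          # indirect expansion: value of $VAR
    case ":${current}:" in
        *":${dir}:"*) ;;             # already present, nothing to do
        *) export "${var}=${dir}${current:+:${current}}" ;;
    esac
}

# Assumed layout: packages unpacked with rpm2cpio into $HOME/local.
prefix="${LOCAL_PREFIX:-$HOME/local}"
prepend_dir PATH            "${prefix}/usr/bin"
prepend_dir LD_LIBRARY_PATH "${prefix}/usr/lib64"
prepend_dir LD_LIBRARY_PATH "${prefix}/usr/lib"
```

Sourcing this from `~/.bashrc` instead of appending `export` lines keeps the variables stable even if the file is sourced repeatedly.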

Running MPI and OpenMP parallel programs with Slurm

Online resources

The MPI environment is OpenMPI 4.0.

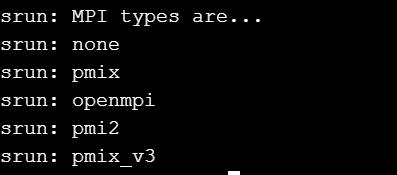

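Output of `srun --mpi=list`

Submit an MPI program:

```bash
srun -N 2 --mpi=pmix ./hello_mpi.out
```

Submit a hybrid MPI+OpenMP program on two nodes, with two MPI processes and 32 cores per node available to OpenMP:

```bash
srun -N 2 -c 32 --mpi=pmix ./hello_mpi_openmp.out
```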
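The same hybrid launch can also be written as a batch script. This is a sketch only, assuming one MPI rank per node and the executable name used above; partition, account and time limits are site-specific and omitted here.

```bash
#!/bin/bash
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=1
#SBATCH --cpus-per-task=32

# Give each MPI rank as many OpenMP threads as cores Slurm assigned to it;
# fall back to 1 when the script runs outside a Slurm allocation.
cpus="${SLURM_CPUS_PER_TASK:-1}"
export OMP_NUM_THREADS="${cpus}"

if command -v srun >/dev/null 2>&1; then
    # Pass -c again: some Slurm releases do not hand --cpus-per-task
    # from sbatch down to srun automatically.
    srun -c "${cpus}" --mpi=pmix ./hello_mpi_openmp.out
else
    echo "srun not found; would run with OMP_NUM_THREADS=${OMP_NUM_THREADS}"
fi
```

Setting `OMP_NUM_THREADS` explicitly avoids relying on the OpenMP runtime's default, which may differ from the number of cores Slurm actually bound to the task.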

hello.cpp

```cpp
#include <mpi.h>
#include <omp.h>
#include <cstdio>

int main(int argc, char **argv)
{
    MPI_Init(&argc, &argv);

    int rank, nnode;
    MPI_Comm_size(MPI_COMM_WORLD, &nnode);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    char processor_name[MPI_MAX_PROCESSOR_NAME];
    int name_len;
    MPI_Get_processor_name(processor_name, &name_len);

    int nprocs = omp_get_num_procs();
    // Called outside a parallel region, so this always reports 1.
    int nthreads = omp_get_num_threads();
    fprintf(stderr, "Hello %s, %d/%d, nprocs=%d, nthreads=%d\n",
            processor_name, rank, nnode, nprocs, nthreads);

    #pragma omp parallel
    {
        int tid = omp_get_thread_num();
        int nthread = omp_get_num_threads();
        // Only the master thread prints, so tid is always 0 here.
        #pragma omp master
        {
            fprintf(stderr, "tid/nthread=%d/%d\n", tid, nthread);
        }
    }

    MPI_Finalize();
}
```

Compile

```bash
mpicxx -fopenmp hello.cpp -o hello.out -lgomp
```

hello.f90

```fortran
!
! mpiifort -fopenmp hello.f90 -o hello.out
!
! srun -N 2 ./hello.out
! srun -N 2 -c 2 ./hello.out
!
! https://hpc-wiki.info/hpc/Hybrid_Slurm_Job
!
program hello
    use mpi
    use omp_lib
    implicit none
    integer :: rank, size, ierror, tag, status(MPI_STATUS_SIZE)
    integer :: threadid
    integer :: len
    character(len=MPI_MAX_PROCESSOR_NAME) :: name

    call MPI_INIT(ierror)
    call MPI_COMM_SIZE(MPI_COMM_WORLD, size, ierror)
    call MPI_COMM_RANK(MPI_COMM_WORLD, rank, ierror)
    call MPI_GET_PROCESSOR_NAME(name, len, ierror)

    !$omp parallel private(threadid)
    threadid = omp_get_thread_num()
    print *, 'node:', trim(name), ' rank:', rank, ', thread_id:', threadid
    !$omp end parallel

    call MPI_FINALIZE(ierror)
end program
```

Measuring Allgather performance with IMB-MPI1

```bash
#!/bin/bash
CMD=${HOME}/mpi.download/mpi-benchmarks-intel/IMB-MPI1

# Sweep the node count and the Intel MPI Allgather algorithm selector (0..5).
for nnode in 64 128 256; do
    for ADJUST in {0..5}; do
        I_MPI_ADJUST_ALLGATHER=${ADJUST} srun -N ${nnode} \
            -o ${nnode}.a${ADJUST}.stdout -e ${nnode}.a${ADJUST}.stderr \
            ${CMD} -npmin ${nnode} Allgather
    done
done
```
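Once the sweep has finished, the per-run output files can be condensed into one line per run. The sketch below assumes that each IMB data row starts with the message size in bytes and ends with the average time in microseconds, and that files follow the `<nodes>.a<adjust>.stdout` naming used above. The helper name `imb_tavg` and the 1 MiB message size are hypothetical choices for illustration; check the column layout against your own IMB output before trusting the numbers.

```bash
#!/bin/bash
# imb_tavg FILE BYTES: print the last column of the first data row whose
# first column equals BYTES. The last column is ASSUMED to be t_avg[usec].
imb_tavg() {
    awk -v bytes="$2" \
        '$1 ~ /^[0-9]+$/ && $1 == bytes && NF >= 5 { print $NF; exit }' "$1"
}

# Summarize every result file in the current directory, if there are any.
for f in *.a*.stdout; do
    [ -e "$f" ] || continue          # glob matched nothing
    printf '%s\t%s\n' "$f" "$(imb_tavg "$f" 1048576)"
done
```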

Command cheat sheet

```bash
CC=mpiicc CXX=mpiicpc CXXFLAGS="-I $HOME/local/usr/include" LDFLAGS="-L $HOME/local/usr/lib64" cmake3

od -i x.bin
od -f --endian=big x.bin

rpm2cpio x.rpm | cpio -idv

# List the PMI libraries Slurm supports
srun --mpi=list

# Submit MPI programs with Slurm
# OpenMPI
srun -N 2 -c 2 --mpi=pmix ./test_openmpi.out
# MPICH
srun -N 2 -c 2 --mpi=pmi2 ./test_mpich.out
# Intel MPI
I_MPI_PMI_LIBRARY=/opt/gridview/slurm/lib/libpmi.so srun -N 2 -c 2 ./test_intel_mpi.out

# MVAPICH2-X
# compile
~/local/mvapich2-x/bin/mpicxx -fopenmp hello.cpp -o z.out
# run
LD_LIBRARY_PATH=~/local/mvapich2-x/lib64/:$LD_LIBRARY_PATH OMP_NUM_THREADS=2 srun -N 3 -c 2 --mpi=pmi2 ./z.out

# Frequently used commands
find -name 'xxx*' -print0 | xargs -0 rm
wget -r -c <.....>
```

Small test program

```cpp
#include <bitset>
#include <cassert>
#include <cstdint>
#include <iostream>
#include <limits>

using namespace std;

void test()
{
    const int N = 256;
    // N bits packed into N/8 bytes.
    uint8_t *spikes = new uint8_t[N / 8];
    for (int i = 0; i < N / 8; i++)
        spikes[i] = 0xff;
    for (int i = 0; i < N / 8; i++)
    {
        assert(spikes[i] == std::numeric_limits<uint8_t>::max());
        // Cast first: streaming a uint8_t directly would print a character.
        cout << hex << (unsigned short)spikes[i] << endl;
        cout << bitset<8>(spikes[i]) << endl;
    }
    delete[] spikes;
}

int main()
{
    test();
}
```

AMD ROCm HIP environment

MPI and MPI+OpenMP parallel job experiments

mpi_hello.cpp

```cpp
#include <cstdio>
#include <mpi.h>

/*
compile:
    mpicxx mpi_hello.cpp -o hello.exe
submit:
    srun -N 2 --mpi=pmix ./hello.exe
*/

int main(int argc, char **argv)
{
    MPI_Init(&argc, &argv);

    int size, rank;
    MPI_Comm_size(MPI_COMM_WORLD, &size);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    char processor_name[MPI_MAX_PROCESSOR_NAME];
    int name_len;
    MPI_Get_processor_name(processor_name, &name_len);

    printf("Hello from processor %s, rank %d out of %d\n",
           processor_name, rank, size);

    MPI_Finalize();
}
```

mpi_openmp_hello.cpp

```cpp
#include <cstdio>
#include <mpi.h>
#include <omp.h>

/*
compile:
    mpicxx -fopenmp mpi_openmp_hello.cpp -o hello.exe
submit:
    OMP_NUM_THREADS=2 srun -N 2 -c 2 --mpi=pmix ./hello.exe
*/

int main(int argc, char **argv)
{
    int provided;
    MPI_Init_thread(&argc, &argv, MPI_THREAD_MULTIPLE, &provided);

    int size, rank;
    MPI_Comm_size(MPI_COMM_WORLD, &size);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    char processor_name[MPI_MAX_PROCESSOR_NAME];
    int name_len;
    MPI_Get_processor_name(processor_name, &name_len);

    #pragma omp parallel
    {
        int threads = omp_get_num_threads();
        int tid = omp_get_thread_num();
        printf("Hello from processor %s, rank %d out of %d, thread %d out of %d\n",
               processor_name, rank, size, tid, threads);
    }

    MPI_Finalize();
}
```

Makefile

```makefile
CXX = mpicxx
CXXFLAGS =

SRC := $(wildcard *.cpp)
EXE := $(patsubst %.cpp,%.exe,${SRC})

.PHONY: all run clean

all: ${EXE}

# Only the hybrid example needs OpenMP.
mpi_openmp_hello.exe: CXXFLAGS = -fopenmp

%.exe: %.cpp
	${CXX} ${CXXFLAGS} $< -o $@

run:
	srun -N 2 --mpi=pmix ./mpi_hello.exe
	OMP_NUM_THREADS=2 srun -N 2 -c 2 --mpi=pmix ./mpi_openmp_hello.exe

clean:
	rm -f *.exe
```

| MPI implementation | PMI setting | Example |
|---|---|---|
| OpenMPI | --mpi=pmix | srun -N 2 -c 2 --mem=32GB --mpi=pmix ./a.out |
| MPICH2 | --mpi=pmi2 | srun -N 2 -c 2 --mem=32GB --mpi=pmi2 ./a.out |
| Intel MPI | export I_MPI_PMI_LIBRARY=<path-to-slurm>/lib/libpmi.so | srun -N 2 -c 2 --mem=32GB ./a.out |
| MVAPICH2 | --mpi=pmi2 | srun -N 2 -c 2 --mem=32GB --mpi=pmi2 ./a.out |
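The table can be turned into a small lookup so that launch scripts do not hard-code the flag. This is a sketch under the assumption that the mapping above holds on your cluster. The function name `pmi_flag` and the implementation keywords are hypothetical, not part of Slurm.

```bash
#!/bin/bash
# pmi_flag IMPL: print the srun --mpi option for a given MPI implementation.
# Prints an empty line for Intel MPI, which is configured through the
# I_MPI_PMI_LIBRARY environment variable instead of an srun flag.
pmi_flag() {
    case "$1" in
        openmpi)         echo "--mpi=pmix" ;;
        mpich|mvapich2)  echo "--mpi=pmi2" ;;
        intelmpi)        echo "" ;;
        *) echo "unknown MPI implementation: $1" >&2; return 1 ;;
    esac
}

# Example: build the command line without running it.
echo "srun -N 2 -c 2 $(pmi_flag openmpi) ./a.out"
```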
