1. 基础配置及说明

用kafka自带的zookeeper做集群

三台机器

放置目录在 opt

系统:centos7

kafka版本:kafka_2.12-2.2.0.tgz

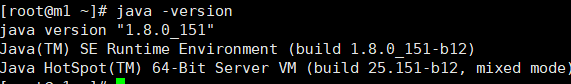

jdk版本:jdk-8u151-linux-x64.tar

192.168.1.41 m1

192.168.1.42 m2

192.168.1.43 m3
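The configuration files later in this article refer to the machines as m1, m2 and m3, so every machine has to be able to resolve those names. The original does not say how; a minimal sketch, assuming the mappings simply go into /etc/hosts (the function name `cluster_hosts` is made up for illustration):

```shell
#!/usr/bin/env bash
# Print the three name mappings listed above in /etc/hosts format.
# Assumption: name resolution is done through /etc/hosts rather than DNS.
cluster_hosts() {
  printf '%s\n' \
    '192.168.1.41 m1' \
    '192.168.1.42 m2' \
    '192.168.1.43 m3'
}

# Usage (as root, on every machine): cluster_hosts >> /etc/hosts
```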

Configure the JDK

Extract the archive, then rename the extracted directory to jdk:

mv <extracted directory> jdk

mkdir /usr/java

mv jdk /usr/java

vi ~/.bashrc

###

JAVA_HOME=/usr/java/jdk

PATH=$JAVA_HOME/bin:$PATH

export JAVA_HOME PATH

###

source ~/.bashrc

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

echo "* soft nofile 65536" >> /etc/security/limits.conf

echo "* hard nofile 65536" >> /etc/security/limits.conf

echo "* soft nproc 65536" >> /etc/security/limits.conf

echo "* hard nproc 65536" >> /etc/security/limits.conf

echo "* soft memlock unlimited" >> /etc/security/limits.conf

echo "* hard memlock unlimited" >> /etc/security/limits.conf
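Each `echo ... >>` above appends a new line every time it runs, so repeating the setup leaves duplicate entries in limits.conf. A small sketch of an idempotent alternative (the helper name `append_once` is hypothetical, not part of any tool):

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present.
append_once() {
  local line=$1 file=$2
  # -x: match the whole line, -F: treat the pattern as a literal string (so "*" is not a wildcard)
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root): append_once '* soft nofile 65536' /etc/security/limits.conf
```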

yum -y install ntpdate

systemctl enable ntpdate.service

echo '*/30 * * * * /usr/sbin/ntpdate time7.aliyun.com >/dev/null 2>&1' > /tmp/crontab2.tmp

crontab /tmp/crontab2.tmp

systemctl start ntpdate.service

2. Configuring the ZooKeeper bundled with Kafka

tar -xvf kafka_2.12-2.2.0.tgz -C /opt

mv /opt/kafka_2.12-2.2.0 /opt/kafka

The configuration is the same on all three machines.

mkdir -p /opt/kafka/data/zookeeper

mkdir -p /opt/kafka/logs/zookeeper

cd /opt/kafka/

vi config/zookeeper.properties

# Add the following

# The myid file (written below) must sit directly in dataDir

dataDir=/opt/kafka/data

dataLogDir=/opt/kafka/logs/zookeeper

clientPort=2181

tickTime=2000

initLimit=10

syncLimit=5

server.0=m1:2888:3888

server.1=m2:2888:3888

server.2=m3:2888:3888

echo '0' > /opt/kafka/data/myid

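m1, m2 and m3 use the ids 0, 1 and 2 respectively. Write the matching myid on each machine; it must agree with the configuration file. For example, server.0=m1:2888:3888 means that m1's myid contains 0, and so on.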
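The host-to-id mapping described above (m1 is 0, m2 is 1, m3 is 2) can be derived from the host name instead of being typed by hand on each machine. A sketch, assuming host names of the form m<N>; the function name `myid_for_host` is made up for illustration:

```shell
#!/usr/bin/env bash
# Map a host name of the form m<N> to its ZooKeeper id: m1 -> 0, m2 -> 1, m3 -> 2.
myid_for_host() {
  local host=$1
  local n=${host#m}                 # strip the leading "m"
  case $n in
    ''|*[!0-9]*)                    # empty or not purely numeric
      echo "unexpected host name: $host" >&2
      return 1
      ;;
  esac
  echo $(( 10#$n - 1 ))             # 10# forces base 10 even with leading zeros
}

# Usage: myid_for_host "$(hostname -s)" > /opt/kafka/data/myid
```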

Start in the background (daemon mode)

cd /opt/kafka

bin/zookeeper-server-start.sh -daemon config/zookeeper.properties

Start in the foreground

bin/zookeeper-server-start.sh config/zookeeper.properties

Stop

bin/zookeeper-server-stop.sh

Check the status

echo stat | nc localhost 2181
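The `stat` output contains a `Mode:` line that tells whether the node is the leader or a follower. In a healthy three-node ensemble one node should report leader and the other two follower. A sketch that extracts just that value (the function name `zk_mode` is hypothetical):

```shell
#!/usr/bin/env bash
# Read the output of `echo stat | nc <host> 2181` on stdin and print the Mode value.
# Exits non-zero when no "Mode:" line is present (for example, the node is not serving).
zk_mode() {
  awk -F': ' '$1 == "Mode" { print $2; found = 1 } END { exit !found }'
}

# Usage: echo stat | nc localhost 2181 | zk_mode
```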

Create a systemd unit

# vi /lib/systemd/system/zookeeper.service

[Unit]

Description=Apache Zookeeper server

Documentation=http://zookeeper.apache.org

Requires=network.target remote-fs.target

After=network.target remote-fs.target

[Service]

Type=simple

Environment=JAVA_HOME=/usr/java/jdk

ExecStart=/opt/kafka/bin/zookeeper-server-start.sh /opt/kafka/config/zookeeper.properties

ExecStop=/opt/kafka/bin/zookeeper-server-stop.sh

Restart=on-abnormal

User=root

Group=root

[Install]

WantedBy=multi-user.target
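The article does not show how the unit is registered. A sketch of the usual steps, assuming the unit file was saved under the path given above; the `SYSTEMCTL` variable and the `register_unit` helper are made up here so that the commands can be previewed with `SYSTEMCTL=echo`:

```shell
#!/usr/bin/env bash
# SYSTEMCTL can be overridden (e.g. SYSTEMCTL=echo) to preview the commands.
SYSTEMCTL=${SYSTEMCTL:-systemctl}

register_unit() {
  local unit=$1
  # Make systemd re-read unit files from disk.
  "$SYSTEMCTL" daemon-reload || return 1
  # Start the unit automatically at boot.
  "$SYSTEMCTL" enable "$unit" || return 1
  # Start the unit now.
  "$SYSTEMCTL" start "$unit"
}

# Usage (as root): register_unit zookeeper.service
```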

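3. Kafka configuration

For clients outside the cluster to connect, advertised.listeners must be set to the broker's IP address.

Configuration file

broker.id is 0, 1 and 2 on m1, m2 and m3 respectively.

In the remaining settings, replace the IP address with each machine's actual IP.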

mkdir -p /opt/kafka/logs/kafka

vi config/server.properties

broker.id=1

listeners=PLAINTEXT://192.168.1.42:8092

# Must be the IP address so that external clients can connect

advertised.listeners=PLAINTEXT://192.168.1.42:8092

num.network.threads=32

num.io.threads=96

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

zookeeper.connect=m1:2181,m2:2181,m3:2181

log.dirs=/opt/kafka/logs/kafka

num.partitions=3

offsets.topic.replication.factor=3

transaction.state.log.replication.factor=3

transaction.state.log.min.isr=3

num.recovery.threads.per.data.dir=1

log.retention.hours=168

delete.topic.enable=true
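Only three lines of the file above differ between machines: broker.id, listeners and advertised.listeners. A sketch that prints them for a given broker id, assuming the 192.168.1.41 to 192.168.1.43 addressing and port 8092 used in this article (the function name `broker_lines` is hypothetical):

```shell
#!/usr/bin/env bash
# Print the host-specific server.properties lines for broker id 0, 1 or 2.
# Assumption: broker id N runs on 192.168.1.(41+N) and listens on port 8092.
broker_lines() {
  local id=$1
  local ip="192.168.1.$(( 41 + id ))"
  printf 'broker.id=%s\n' "$id"
  printf 'listeners=PLAINTEXT://%s:8092\n' "$ip"
  printf 'advertised.listeners=PLAINTEXT://%s:8092\n' "$ip"
}

# Usage: broker_lines 1
```

For broker id 1 this prints the same three values as the example file for 192.168.1.42.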

Start in the foreground

bin/kafka-server-start.sh config/server.properties

Start in the background

bin/kafka-server-start.sh -daemon config/server.properties

Verify

jps

ss -anlp|grep 8092

Create a systemd unit

# vi /lib/systemd/system/kafka.service

[Unit]

Description=Apache Kafka Server

Documentation=http://kafka.apache.org/documentation.html

Requires=zookeeper.service

[Service]

Type=simple

Environment=JAVA_HOME=/usr/java/jdk

ExecStart=/opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

ExecStop=/opt/kafka/bin/kafka-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

Kafka can only start once ZooKeeper is running, so Kafka fails to come up when both are started automatically at boot and has to be started by hand: systemctl start kafka
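One possible remedy, not tested against this setup: in systemd, Requires= pulls a dependency in but does not order it, so kafka.service and zookeeper.service may be started in parallel. Adding After=zookeeper.service through a drop-in makes systemd start Kafka only after the ZooKeeper unit has been started. The helper name `write_kafka_dropin` and the file name order.conf are made up for illustration; Kafka may still give up if ZooKeeper is slow to form a quorum.

```shell
#!/usr/bin/env bash
# Write a drop-in that orders kafka.service after zookeeper.service.
# Assumption: the default target is systemd's standard drop-in directory for the unit.
write_kafka_dropin() {
  local dir=${1:-/etc/systemd/system/kafka.service.d}
  mkdir -p "$dir" || return 1
  printf '[Unit]\nAfter=zookeeper.service\n' > "$dir/order.conf"
}

# Usage (as root): write_kafka_dropin && systemctl daemon-reload
```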

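4. Configuring SASL/SCRAM authentication

Once SASL/SCRAM authentication is enabled, every operation has to authenticate, so it is best to add the following line to each script you use, for example bin/kafka-acls.sh:

export KAFKA_OPTS=" -Djava.security.auth.login.config=/opt/kafka/config/jaas/kafka_server_jaas.conf"

Kafka setup

#### ZooKeeper (essentially the same on all three machines)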

vi config/zookeeper.properties

dataDir=/opt/kafka/data

dataLogDir=/opt/kafka/logs/zookeeper

clientPort=2181

tickTime=2000

initLimit=10

syncLimit=5

server.0=m1:2888:3888

server.1=m2:2888:3888

server.2=m3:2888:3888

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

requireClientAuthScheme=sasl

jaasLoginRenew=3600000

###

mkdir -p config/jaas

vi config/jaas/kafka_zookeeper_jaas.conf

Server {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="admin"

user_admin="admin"

user_kafka="kafka";

};

vi bin/zookeeper-server-start.sh

export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/jaas/kafka_zookeeper_jaas.conf"

##### Kafka configuration

# Create the admin credentials; the password must match the one in kafka_server_jaas.conf

bin/kafka-configs.sh --zookeeper 192.168.1.41:2181 --alter --add-config 'SCRAM-SHA-256=[password=admin],SCRAM-SHA-512=[password=admin]' --entity-type users --entity-name admin

# Show the stored credentials

bin/kafka-configs.sh --zookeeper 192.168.1.41:2181 --describe --entity-type users --entity-name admin

vi config/server.properties

broker.id=0

listeners=SASL_PLAINTEXT://192.168.1.41:8092

advertised.listeners=SASL_PLAINTEXT://192.168.1.41:8092

num.network.threads=32

num.io.threads=96

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

zookeeper.connect=m1:2181,m2:2181,m3:2181

log.dirs=/opt/kafka/logs/kafka

num.partitions=3

offsets.topic.replication.factor=3

transaction.state.log.replication.factor=3

transaction.state.log.min.isr=3

num.recovery.threads.per.data.dir=1

log.retention.hours=168

delete.topic.enable=true

sasl.enabled.mechanisms=SCRAM-SHA-512

sasl.mechanism.inter.broker.protocol=SCRAM-SHA-512

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

allow.everyone.if.no.acl.found=true

security.inter.broker.protocol=SASL_PLAINTEXT

zookeeper.set.acl=true

super.users=User:admin

vi config/jaas/kafka_server_jaas.conf

KafkaServer {

org.apache.kafka.common.security.scram.ScramLoginModule required

username="admin"

password="admin";

};

Client {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="kafka"

password="kafka";

};

vi bin/kafka-server-start.sh

export KAFKA_OPTS=" -Djava.security.auth.login.config=/opt/kafka/config/jaas/kafka_server_jaas.conf"

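Test

Preferably run the tests from a separate machine or two rather than from inside the cluster.

vi config/admin.conf

Create the admin client credentials file: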

security.protocol=SASL_PLAINTEXT

sasl.mechanism=SCRAM-SHA-512

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="admin" password="admin";

vi config/mytest.conf

Create the mytest client credentials file:

security.protocol=SASL_PLAINTEXT

sasl.mechanism=SCRAM-SHA-512

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="mytest" password="mytest";
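The two client files above differ only in the user name and password. A sketch that writes such a file for any user, using the same three properties; the helper name `write_client_conf` is made up for illustration:

```shell
#!/usr/bin/env bash
# Write a SCRAM-SHA-512 client properties file for the given user.
write_client_conf() {
  local user=$1 pass=$2 file=$3
  cat > "$file" <<EOF
security.protocol=SASL_PLAINTEXT
sasl.mechanism=SCRAM-SHA-512
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="$user" password="$pass";
EOF
  # The file holds a plaintext password, so restrict it to the owner.
  chmod 600 "$file"
}

# Usage: write_client_conf mytest mytest config/mytest.conf
```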

bin/kafka-topics.sh --list --bootstrap-server 192.168.1.41:8092 --command-config config/admin.conf

bin/kafka-configs.sh --zookeeper 192.168.1.41:2181 --alter --add-config 'SCRAM-SHA-512=[password=123456]' --entity-type users --entity-name kafka

# Create the mytest user referenced in config/mytest.conf

bin/kafka-configs.sh --zookeeper 192.168.1.41:2181 --alter --add-config 'SCRAM-SHA-512=[password=mytest]' --entity-type users --entity-name mytest

# Read permission: grant the mytest user consumer rights

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=192.168.1.41:2181 --add --allow-principal User:"mytest" --consumer --topic 'mytest' --group '*'

# Write permission: grant the mytest user producer rights

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=192.168.1.41:2181 --add --allow-principal User:"mytest" --producer --topic 'mytest'

# List the ACLs

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=192.168.1.41:2181 --list

# Producer

bin/kafka-console-producer.sh --broker-list 192.168.1.41:8092 --topic mytest --producer.config config/mytest.conf

# Consumer

bin/kafka-console-consumer.sh --bootstrap-server 192.168.1.41:8092 --topic mytest --consumer.config config/mytest.conf

# List all consumer group ids

bin/kafka-consumer-groups.sh --bootstrap-server 192.168.1.41:8092 --list --command-config config/admin.conf

# Show the offsets of a group

bin/kafka-consumer-groups.sh --bootstrap-server 192.168.1.41:8092 --describe --group test-consumer-group --command-config config/admin.conf

# Dump the contents of a log segment

bin/kafka-run-class.sh kafka.tools.DumpLogSegments --files /opt/kafka/logs/kafka/mytest-0/00000000000000000000.log --print-data-log
