Building the ZooKeeper image

The Dockerfile is as follows:

    FROM harbor-server.linux.io/base-images/ubuntu-jdk-base:8u131

    ENV ZK_VERSION 3.4.14

    ADD zookeeper-3.4.14.tar.gz /apps/

    RUN mv /apps/zookeeper-3.4.14 /apps/zookeeper \
        && mkdir -p /apps/zookeeper/data /apps/zookeeper/wal /apps/zookeeper/log

    COPY conf /apps/zookeeper/conf/
    COPY bin/zkReady.sh /apps/zookeeper/bin/
    COPY entrypoint.sh /

    ENV PATH=/apps/zookeeper/bin:${PATH} \
        ZOO_LOG_DIR=/apps/zookeeper/log \
        ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \
        JMXPORT=9010

    ENTRYPOINT [ "/entrypoint.sh" ]

    CMD [ "zkServer.sh", "start-foreground" ]

    EXPOSE 2181 2888 3888 9010

The conf directory contains two files: zoo.cfg (the ZooKeeper configuration file) and log4j.properties (the ZooKeeper logging configuration). The contents of zoo.cfg are:

    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/apps/zookeeper/data
    dataLogDir=/apps/zookeeper/wal
    #snapCount=100000
    autopurge.purgeInterval=1
    clientPort=2181
    quorumListenOnAllIPs=true
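
The timing settings in zoo.cfg are counted in ticks rather than in seconds, so the effective timeouts follow from multiplying by tickTime. The sketch below only redoes that arithmetic with the values shown above; the helper name `limit_ms` is made up for illustration.

```shell
# Values copied from the zoo.cfg shown above.
tick_time_ms=2000
init_limit=10
sync_limit=5

# limit_ms TICKS: convert a limit expressed in ticks to milliseconds.
limit_ms() {
    echo $(( $1 * tick_time_ms ))
}

echo "initLimit: $(limit_ms "$init_limit") ms"   # prints "initLimit: 20000 ms"
echo "syncLimit: $(limit_ms "$sync_limit") ms"   # prints "syncLimit: 10000 ms"
```

With these values a follower gets 20 seconds to connect to and sync with the leader, and may lag at most 10 seconds behind it afterwards.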

The contents of log4j.properties are:

    # Define some default values that can be overridden by system properties
    zookeeper.root.logger=INFO, CONSOLE, ROLLINGFILE
    zookeeper.console.threshold=INFO
    zookeeper.log.dir=/apps/zookeeper/log
    zookeeper.log.file=zookeeper.log
    zookeeper.log.threshold=INFO
    zookeeper.tracelog.dir=/apps/zookeeper/log
    zookeeper.tracelog.file=zookeeper_trace.log

    #
    # ZooKeeper Logging Configuration
    #

    # Format is "<default threshold> (, <appender>)+

    # DEFAULT: console appender only
    log4j.rootLogger=${zookeeper.root.logger}

    # Example with rolling log file
    #log4j.rootLogger=DEBUG, CONSOLE, ROLLINGFILE

    # Example with rolling log file and tracing
    #log4j.rootLogger=TRACE, CONSOLE, ROLLINGFILE, TRACEFILE

    #
    # Log INFO level and above messages to the console
    #
    log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender
    log4j.appender.CONSOLE.Threshold=${zookeeper.console.threshold}
    log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout
    log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

    #
    # Add ROLLINGFILE to rootLogger to get log file output
    # Log messages at ${zookeeper.log.threshold} and above to a log file
    log4j.appender.ROLLINGFILE=org.apache.log4j.RollingFileAppender
    log4j.appender.ROLLINGFILE.Threshold=${zookeeper.log.threshold}
    log4j.appender.ROLLINGFILE.File=${zookeeper.log.dir}/${zookeeper.log.file}

    # Max log file size of 10MB
    log4j.appender.ROLLINGFILE.MaxFileSize=10MB
    # Keep at most 5 rotated backup files
    log4j.appender.ROLLINGFILE.MaxBackupIndex=5

    log4j.appender.ROLLINGFILE.layout=org.apache.log4j.PatternLayout
    log4j.appender.ROLLINGFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

    #
    # Add TRACEFILE to rootLogger to get log file output
    # Log TRACE level and above messages to a log file
    log4j.appender.TRACEFILE=org.apache.log4j.FileAppender
    log4j.appender.TRACEFILE.Threshold=TRACE
    log4j.appender.TRACEFILE.File=${zookeeper.tracelog.dir}/${zookeeper.tracelog.file}

    log4j.appender.TRACEFILE.layout=org.apache.log4j.PatternLayout
    ### Notice we are including log4j's NDC here (%x)
    log4j.appender.TRACEFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L][%x] - %m%n

entrypoint.sh is the script executed when the container starts. Its contents are:

    #!/bin/bash

    # Write this server's ID to the myid file
    echo "${MYID:-1}" > /apps/zookeeper/data/myid

    # Generate the ensemble member list in zoo.cfg dynamically
    if [ -n "$SERVERS" ]; then
        IFS=',' read -r -a servers <<<"$SERVERS"
        for i in "${!servers[@]}"; do
            printf "\nserver.%i=%s:2888:3888" "$((1 + i))" "${servers[$i]}" >> /apps/zookeeper/conf/zoo.cfg
        done
    fi

    cd /apps/zookeeper

    exec "$@"
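
To make the effect of the SERVERS loop concrete, here is a small sketch that prints the lines the loop would append to zoo.cfg instead of writing them to the file. It is written in POSIX sh rather than with bash arrays, and the function name `render_servers` is an illustrative assumption, not part of the image.

```shell
# render_servers "host1,host2,...": print one server.N line per host,
# numbering from 1 in list order, as the loop in entrypoint.sh does.
render_servers() {
    index=1
    old_ifs=$IFS
    IFS=,
    for host in $1; do
        printf 'server.%d=%s:2888:3888\n' "$index" "$host"
        index=$((index + 1))
    done
    IFS=$old_ifs
}

render_servers "zookeeper1,zookeeper2,zookeeper3"
# prints:
# server.1=zookeeper1:2888:3888
# server.2=zookeeper2:2888:3888
# server.3=zookeeper3:2888:3888
```

Because the server number is derived from the position in the list, each instance's MYID has to match the position of its own hostname in SERVERS.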

bin/zkReady.sh is a script that checks the ZooKeeper server's status. Its contents are:

    #!/bin/bash

    # ZooKeeper is installed under /apps/zookeeper in this image
    /apps/zookeeper/bin/zkServer.sh status | grep -E 'Mode: (standalone|leader|follower|observer)'
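
The useful part of this script is its exit status: it succeeds only when the status output contains a `Mode:` line, which is what a caller such as a health check would test. The sketch below isolates that logic so it can be tried on sample text without a running server. The function name `mode_from_status` and the sample input lines are assumptions for illustration.

```shell
# mode_from_status: read `zkServer.sh status` output on stdin, print the
# value of the "Mode:" line, and return non-zero when there is none.
mode_from_status() {
    sed -n 's/^Mode: //p' | grep .
}

# Illustrative input only; real output comes from zkServer.sh status.
printf 'Using config: /apps/zookeeper/bin/../conf/zoo.cfg\nMode: follower\n' | mode_from_status
# prints "follower"
```

In the container this would be fed with `zkServer.sh status 2>/dev/null | mode_from_status`.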

All of the build files are shown in the figure below:

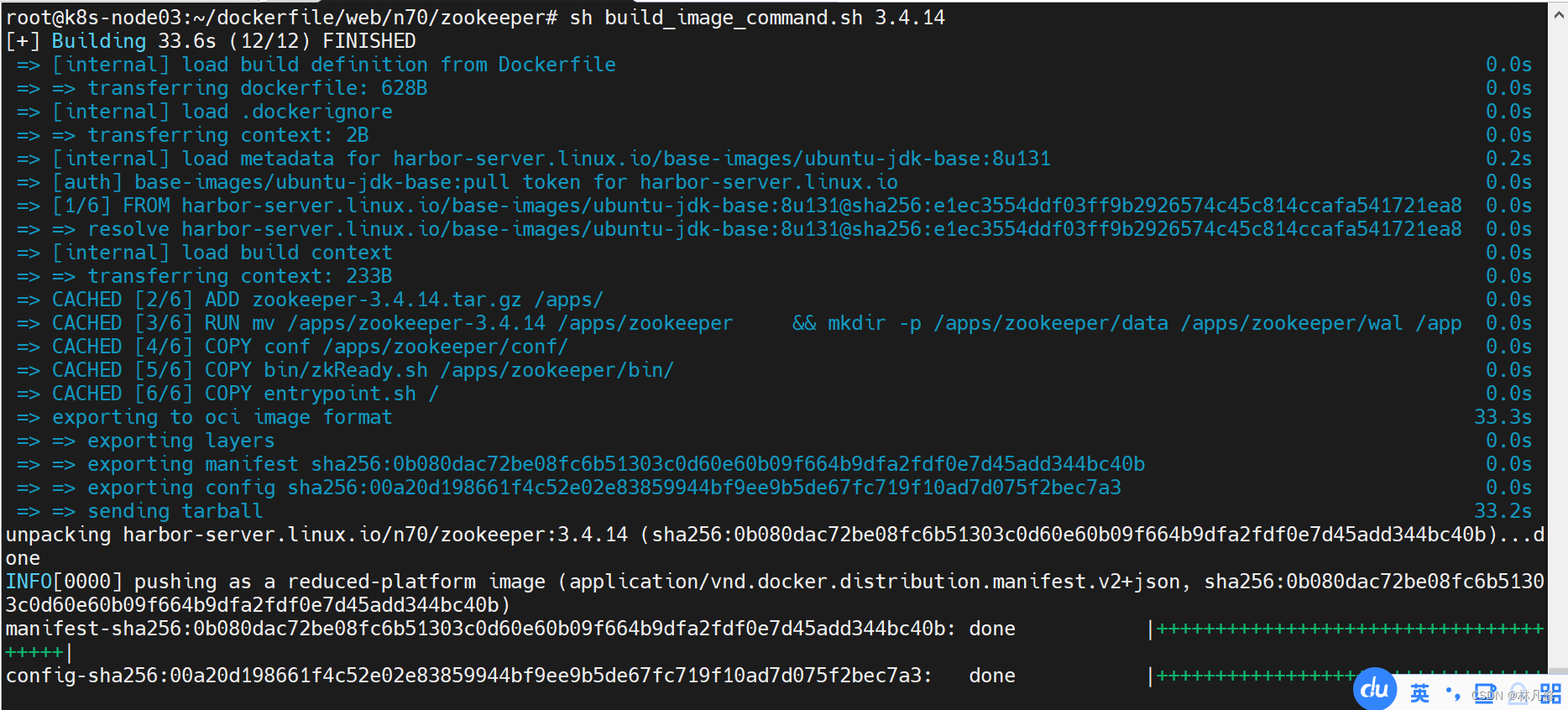

Build and push the image with a script:

    #!/bin/bash

    TAG=$1

    nerdctl build -t "harbor-server.linux.io/n70/zookeeper:${TAG}" .
    sleep 3
    nerdctl push "harbor-server.linux.io/n70/zookeeper:${TAG}"
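
The script takes the tag from its first argument and would build an image reference ending in a bare colon if the argument were missing. One possible guard, sketched here under the assumption that a helper named `image_ref` (hypothetical) is acceptable in the build script, is to compose and validate the reference in one place:

```shell
# Repository path taken from the build script above.
REGISTRY_REPO="harbor-server.linux.io/n70/zookeeper"

# image_ref TAG: print the full image reference, or fail when TAG is empty.
image_ref() {
    if [ -z "${1:-}" ]; then
        echo "usage: image_ref TAG" >&2
        return 1
    fi
    echo "${REGISTRY_REPO}:$1"
}

image_ref 3.4.14   # prints "harbor-server.linux.io/n70/zookeeper:3.4.14"
```

The build script could then run `ref=$(image_ref "$1") || exit 1` and pass `"$ref"` to both `nerdctl build -t` and `nerdctl push`.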

Creating the PVs and PVCs

Create the PVs first. The manifest is as follows:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: zookeeper-data-volume1
    spec:
      nfs:
        server: 192.168.122.1
        path: /data/k8s/n70/zookeeper/zookeeper-data1
      accessModes: ["ReadWriteOnce"]
      capacity:
        storage: 5Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: zookeeper-data-volume2
    spec:
      nfs:
        server: 192.168.122.1
        path: /data/k8s/n70/zookeeper/zookeeper-data2
      accessModes: ["ReadWriteOnce"]
      capacity:
        storage: 5Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: zookeeper-data-volume3
    spec:
      nfs:
        server: 192.168.122.1
        path: /data/k8s/n70/zookeeper/zookeeper-data3
      accessModes: ["ReadWriteOnce"]
      capacity:
        storage: 5Gi

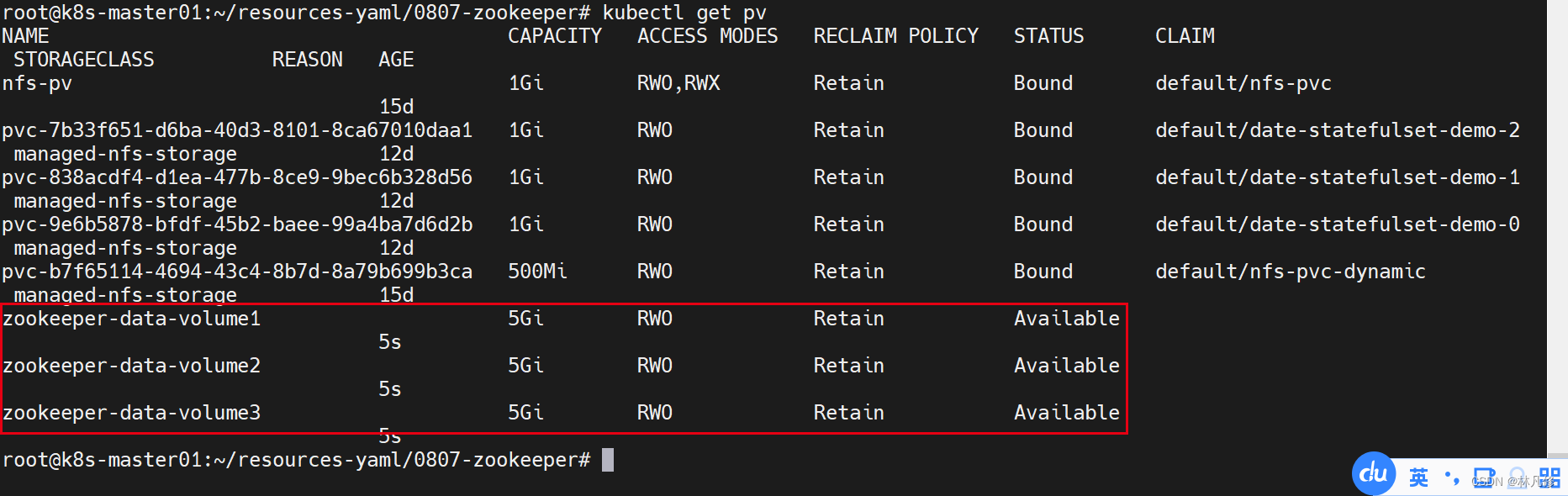

Check the PV status.
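
The three PV definitions differ only in the index used in the name and the NFS path, so they could also be generated rather than written out by hand. The following is a sketch of that idea, not the method used in this post; the function name `render_pv` is hypothetical.

```shell
# render_pv INDEX: print the PersistentVolume manifest for one instance,
# using the same NFS server, path prefix, and size as the manifest above.
render_pv() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-data-volume$1
spec:
  nfs:
    server: 192.168.122.1
    path: /data/k8s/n70/zookeeper/zookeeper-data$1
  accessModes: ["ReadWriteOnce"]
  capacity:
    storage: 5Gi
EOF
}

# Emit all three documents, separated by YAML document markers.
for i in 1 2 3; do
    echo "---"
    render_pv "$i"
done
```

The output of the loop can be piped to `kubectl apply -f -`. The exported directories still have to exist on the NFS server beforehand.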

Then create the PVCs. The manifest is as follows:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: zookeeper-data-volume1
    spec:
      accessModes: ["ReadWriteOnce"]
      volumeName: zookeeper-data-volume1
      resources:
        requests:
          storage: 5Gi
        limits:
          storage: 5Gi
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: zookeeper-data-volume2
    spec:
      accessModes: ["ReadWriteOnce"]
      volumeName: zookeeper-data-volume2
      resources:
        requests:
          storage: 5Gi
        limits:
          storage: 5Gi
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: zookeeper-data-volume3
    spec:
      accessModes: ["ReadWriteOnce"]
      volumeName: zookeeper-data-volume3
      resources:
        requests:
          storage: 5Gi
        limits:
          storage: 5Gi

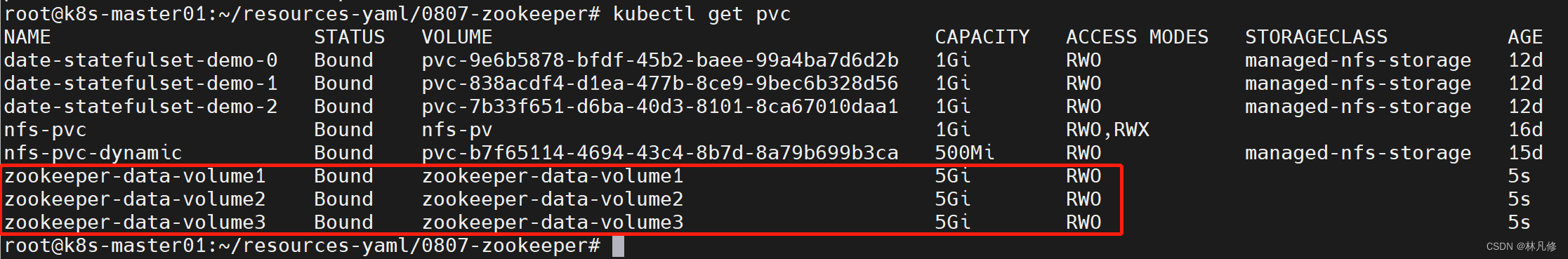

Check the PVC status.
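
Before starting the ZooKeeper pods it is worth confirming that every claim is actually Bound, since a Pending claim keeps its pod from starting. The sketch below checks this from the text of `kubectl get pvc --no-headers`, assuming the STATUS value is the second column of that output. The function name `all_bound` and the sample rows are illustrative.

```shell
# all_bound: read `kubectl get pvc --no-headers` output on stdin and succeed
# only if at least one claim is listed and every STATUS (column 2) is Bound.
all_bound() {
    awk '$2 != "Bound" { bad = 1 } END { exit ((NR == 0 || bad) ? 1 : 0) }'
}

# Illustrative rows only; real input comes from kubectl.
if printf 'zookeeper-data-volume1 Bound\nzookeeper-data-volume2 Bound\n' | all_bound; then
    echo "all claims bound"
fi
```

Against a live cluster this would be used as `kubectl get pvc --no-headers | all_bound`.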

Running the ZooKeeper cluster

Create the ZooKeeper cluster. The manifest is as follows:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: zookeeper-instance1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper
          server-id: "1"
      template:
        metadata:
          labels:
            app: zookeeper
            server-id: "1"
        spec:
          containers:
          - name: zookeeper
            image: harbor-server.linux.io/n70/zookeeper:3.4.14
            imagePullPolicy: Always
            ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
            env:
            - name: MYID
              value: "1"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
            volumeMounts:
            - name: zookeeper-data-volume1
              mountPath: /apps/zookeeper/data
          volumes:
          - name: zookeeper-data-volume1
            persistentVolumeClaim:
              claimName: zookeeper-data-volume1
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: zookeeper-instance2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper
          server-id: "2"
      template:
        metadata:
          labels:
            app: zookeeper
            server-id: "2"
        spec:
          containers:
          - name: zookeeper
            image: harbor-server.linux.io/n70/zookeeper:3.4.14
            imagePullPolicy: Always
            ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
            env:
            - name: MYID
              value: "2"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
            volumeMounts:
            - name: zookeeper-data-volume2
              mountPath: /apps/zookeeper/data
          volumes:
          - name: zookeeper-data-volume2
            persistentVolumeClaim:
              claimName: zookeeper-data-volume2
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: zookeeper-instance3
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: zookeeper
          server-id: "3"
      template:
        metadata:
          labels:
            app: zookeeper
            server-id: "3"
        spec:
          containers:
          - name: zookeeper
            image: harbor-server.linux.io/n70/zookeeper:3.4.14
            imagePullPolicy: Always
            ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
            env:
            - name: MYID
              value: "3"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
            volumeMounts:
            - name: zookeeper-data-volume3
              mountPath: /apps/zookeeper/data
          volumes:
          - name: zookeeper-data-volume3
            persistentVolumeClaim:
              claimName: zookeeper-data-volume3

    ---
    # This Service exposes zookeeper1 to clients and lets it communicate with the other two ZooKeeper instances
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper1
    spec:
      type: NodePort
      selector:
        app: zookeeper
        server-id: "1"
      ports:
      - name: client
        port: 2181
        targetPort: 2181
        nodePort: 32181
      - name: followers
        port: 2888
        targetPort: 2888
      - name: election
        port: 3888
        targetPort: 3888
    ---
    # This Service exposes zookeeper2 to clients and lets it communicate with the other two ZooKeeper instances
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper2
    spec:
      type: NodePort
      selector:
        app: zookeeper
        server-id: "2"
      ports:
      - name: client
        port: 2181
        targetPort: 2181
        nodePort: 32182
      - name: followers
        port: 2888
        targetPort: 2888
      - name: election
        port: 3888
        targetPort: 3888
    ---
    # This Service exposes zookeeper3 to clients and lets it communicate with the other two ZooKeeper instances
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper3
    spec:
      type: NodePort
      selector:
        app: zookeeper
        server-id: "3"
      ports:
      - name: client
        port: 2181
        targetPort: 2181
        nodePort: 32183
      - name: followers
        port: 2888
        targetPort: 2888
      - name: election
        port: 3888
        targetPort: 3888
    ---
    # This Service is used by clients inside the Kubernetes cluster to reach ZooKeeper
    apiVersion: v1
    kind: Service
    metadata:
      name: zookeeper
    spec:
      selector:
        app: zookeeper
      ports:
      - name: client
        port: 2181
        targetPort: 2181

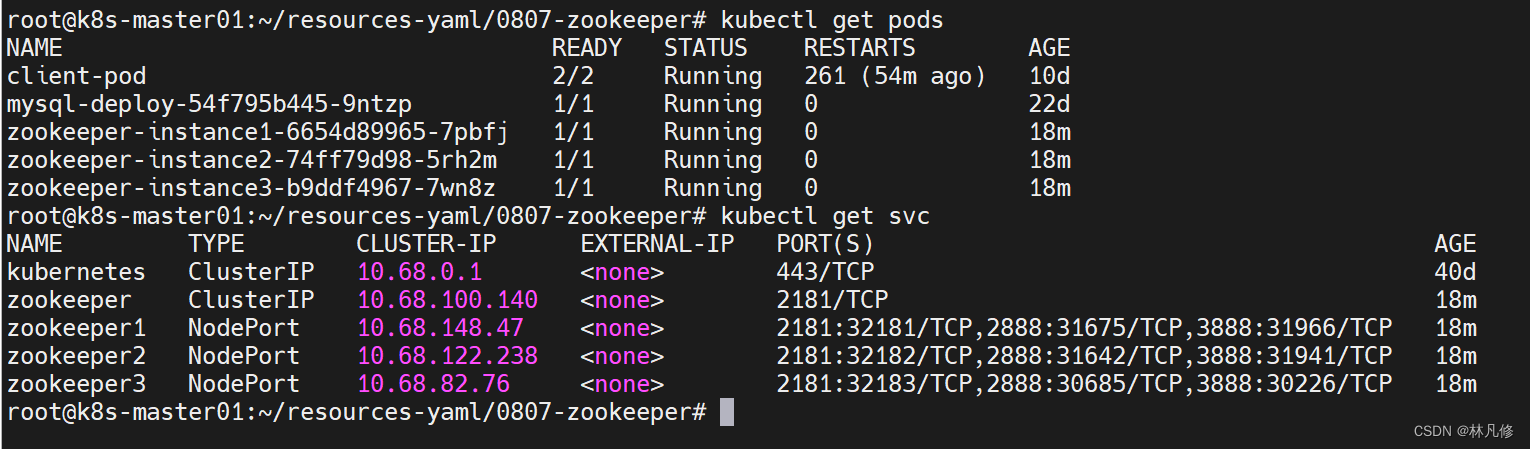

After creation, check the pods and services.
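
With these Services in place, clients have two ways in: the shared `zookeeper` Service from inside the cluster, or the three NodePorts from outside. The sketch below assembles the corresponding connection strings. The function name and the node address 192.0.2.10 are placeholders, and the short Service name assumes the client runs in the same namespace.

```shell
# In-cluster clients can use the shared Service name and the client port.
IN_CLUSTER_CONNECT="zookeeper:2181"

# nodeport_connect_string NODE_IP: join the three client NodePorts declared
# by the zookeeper1..3 Services into a ZooKeeper connection string.
nodeport_connect_string() {
    echo "$1:32181,$1:32182,$1:32183"
}

echo "$IN_CLUSTER_CONNECT"          # prints "zookeeper:2181"
nodeport_connect_string 192.0.2.10  # prints "192.0.2.10:32181,192.0.2.10:32182,192.0.2.10:32183"
```

Either string can be passed to a client, for example `zkCli.sh -server "$(nodeport_connect_string 192.0.2.10)"`.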

Verification

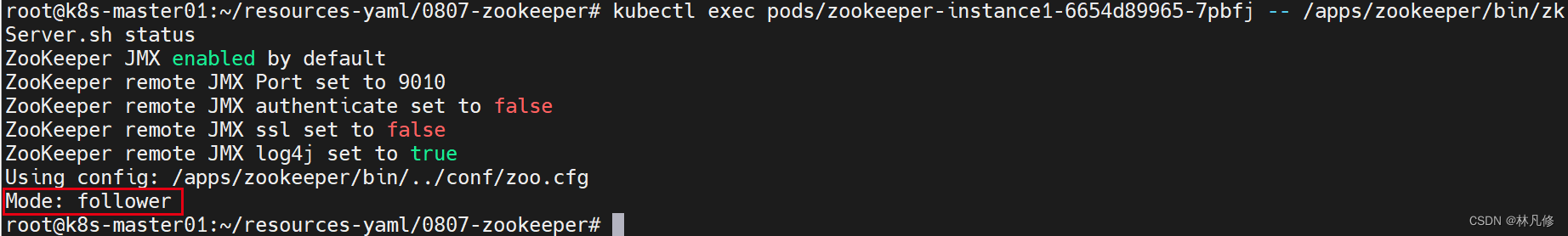

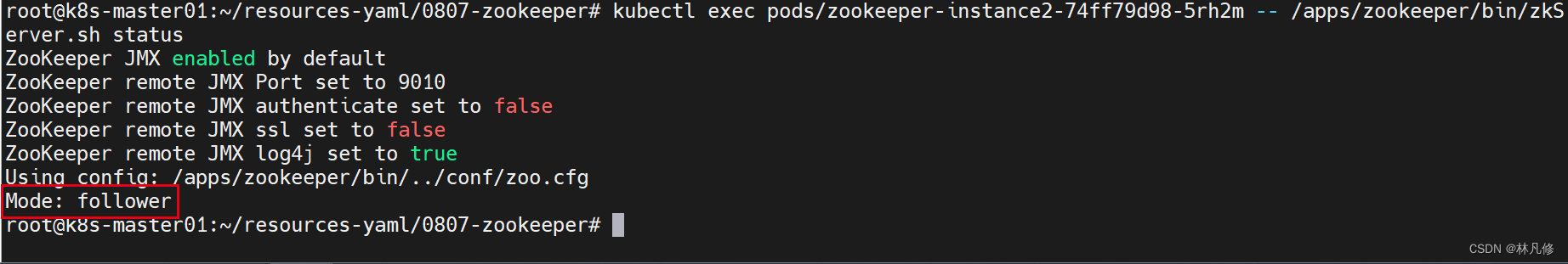

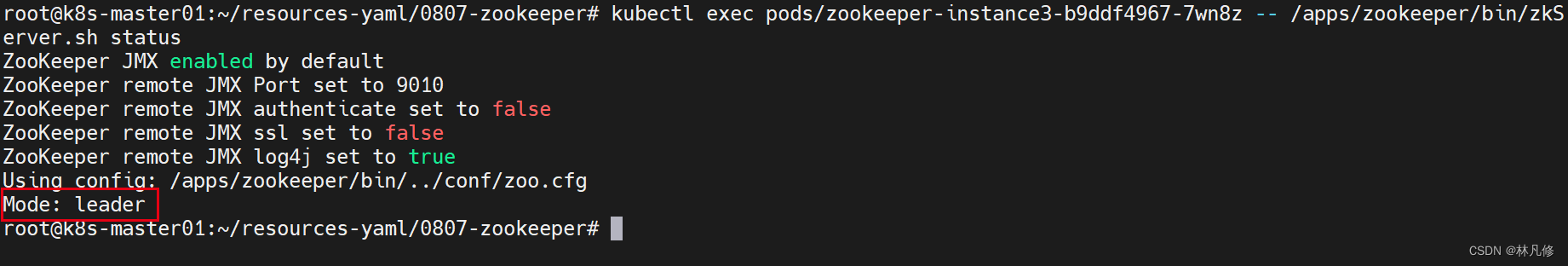

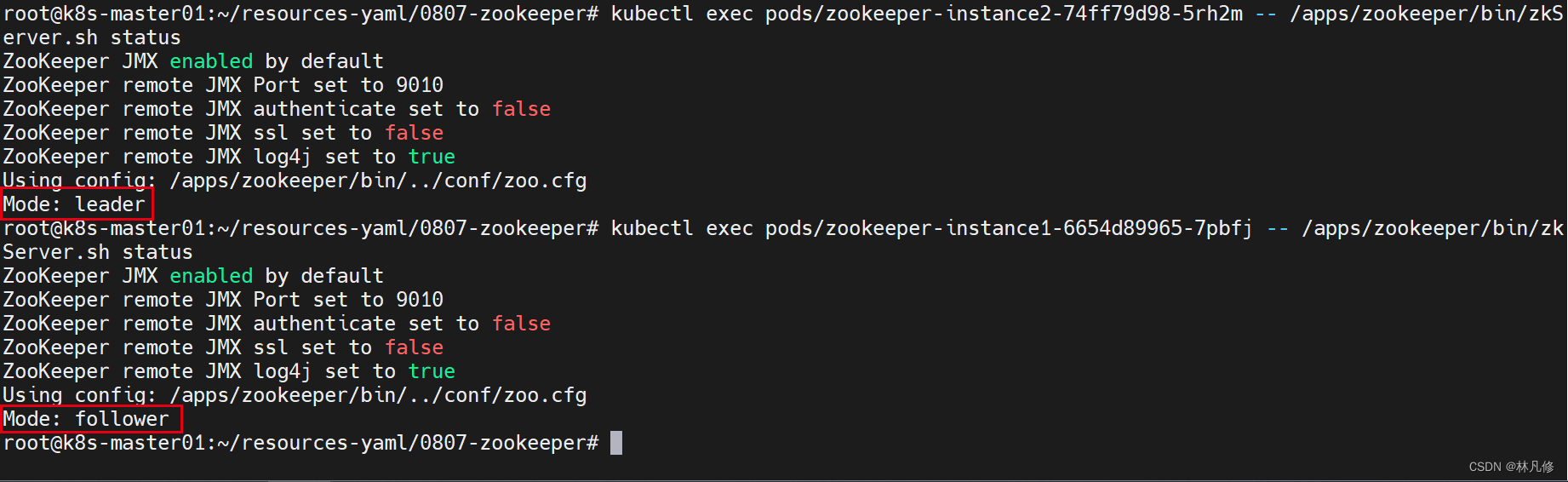

Check the status of the three ZooKeeper instances: zookeeper3 is the leader and the other nodes are followers.

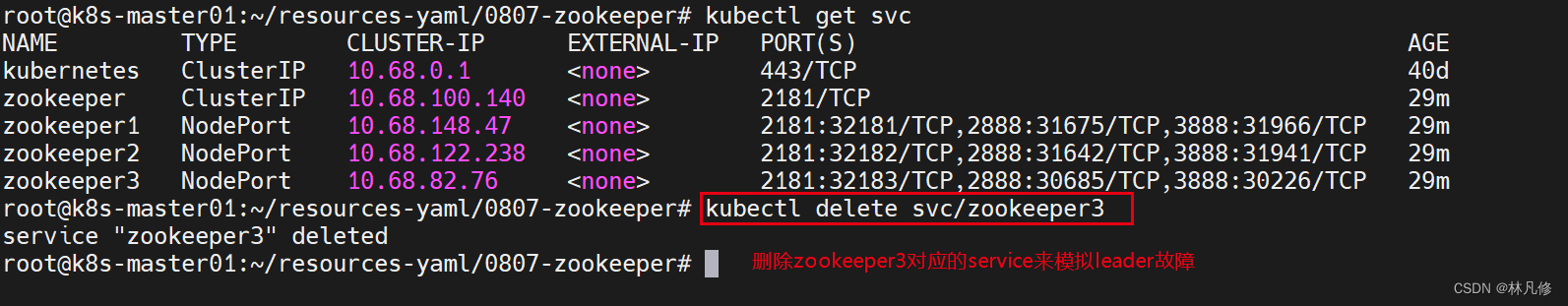

Stop the current leader, then verify that a new leader is elected.

Checking the ZooKeeper status again shows that zookeeper2 has become the new leader.
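
The re-election succeeds because two of the three servers are still running, and ZooKeeper needs a strict majority of the ensemble to elect a leader. The arithmetic can be sketched as follows; the function names are made up for illustration.

```shell
# quorum_size N: smallest strict majority of an N-server ensemble.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# can_elect N ALIVE: succeed when ALIVE servers out of N form a quorum.
can_elect() {
    [ "$2" -ge "$(quorum_size "$1")" ]
}

quorum_size 3                                 # prints "2"
can_elect 3 2 && echo "2 of 3: leader can be elected"
can_elect 3 1 || echo "1 of 3: no quorum"
```

Stopping a second instance would therefore leave the remaining server unable to serve requests until another one comes back.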
