**train.py**

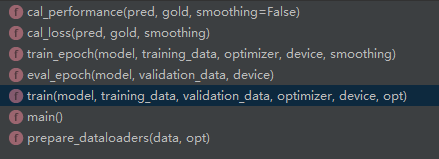

This time, I started by using PyCharm's Structure view to get an overview of train.py.

```python
import argparse
import math
import time

from tqdm import tqdm
import torch
import torch.nn.functional as F
import torch.optim as optim
import torch.utils.data

import transformer.Constants as Constants
from dataset import TranslationDataset, paired_collate_fn
from transformer.Models import Transformer
from transformer.Optim import ScheduledOptim

# transformer.xxx refers to the .py files inside the transformer folder
```

Command-line input code in main():

```python
# 1. Parse the command-line arguments
parser = argparse.ArgumentParser()

parser.add_argument('-data', required=True)

parser.add_argument('-epoch', type=int, default=10)
parser.add_argument('-batch_size', type=int, default=64)

#parser.add_argument('-d_word_vec', type=int, default=512)
parser.add_argument('-d_model', type=int, default=512)
parser.add_argument('-d_inner_hid', type=int, default=2048)
parser.add_argument('-d_k', type=int, default=64)
parser.add_argument('-d_v', type=int, default=64)

parser.add_argument('-n_head', type=int, default=8)
parser.add_argument('-n_layers', type=int, default=6)
parser.add_argument('-n_warmup_steps', type=int, default=4000)

parser.add_argument('-dropout', type=float, default=0.1)
parser.add_argument('-embs_share_weight', action='store_true')
parser.add_argument('-proj_share_weight', action='store_true')

parser.add_argument('-log', default=None)
parser.add_argument('-save_model', default=None)
parser.add_argument('-save_mode', type=str, choices=['all', 'best'], default='best')

parser.add_argument('-no_cuda', action='store_true')
parser.add_argument('-label_smoothing', action='store_true')

opt = parser.parse_args()
opt.cuda = not opt.no_cuda
opt.d_word_vec = opt.d_model  # the word-embedding size is tied to d_model
```
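To see what the parser produces, here is a minimal sketch that reuses a few of the options above and passes `parse_args()` an explicit argument list instead of reading the real command line (the data path is simply the one used later in this post). It shows that a flag declared with `action='store_true'` defaults to `False` and becomes `True` when present, and how the derived fields `opt.cuda` and `opt.d_word_vec` are filled in afterwards.

```python
import argparse

# A trimmed-down copy of the parser above, keeping only a few options.
parser = argparse.ArgumentParser()
parser.add_argument('-data', required=True)
parser.add_argument('-epoch', type=int, default=10)
parser.add_argument('-batch_size', type=int, default=64)
parser.add_argument('-d_model', type=int, default=512)
parser.add_argument('-no_cuda', action='store_true')
parser.add_argument('-label_smoothing', action='store_true')

# An explicit list instead of sys.argv makes the parse reproducible.
opt = parser.parse_args(['-data', 'data/multi30k.atok.low.pt',
                         '-epoch', '30', '-label_smoothing'])
opt.cuda = not opt.no_cuda      # derived flag, as in train.py
opt.d_word_vec = opt.d_model    # embedding size tied to d_model, as in train.py

print(opt.epoch, opt.batch_size, opt.label_smoothing, opt.cuda, opt.d_word_vec)
```

Options that are not given (here `-batch_size` and `-d_model`) keep their defaults, while `type=int` converts the string `'30'` into an integer.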

Loading the dataset (training + validation) in main():

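```python
#========= Loading Dataset =========#
# 2. Load the dataset
# (On PyTorch >= 2.6, torch.load defaults to weights_only=True, which may refuse
#  this pickled dict; passing weights_only=False may then be needed.)
data = torch.load(opt.data)
opt.max_token_seq_len = data['settings'].max_token_seq_len

# prepare_dataloaders() builds the training and validation sets.
# Both are returned as torch DataLoader objects, which makes it easy to feed the data batch by batch.
training_data, validation_data = prepare_dataloaders(data, opt)

opt.src_vocab_size = training_data.dataset.src_vocab_size
opt.tgt_vocab_size = training_data.dataset.tgt_vocab_size
```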

The code of prepare_dataloaders() is as follows:

```python
def prepare_dataloaders(data, opt):
    # ========= Preparing DataLoader =========#
    train_loader = torch.utils.data.DataLoader(
        TranslationDataset(
            src_word2idx=data['dict']['src'],
            tgt_word2idx=data['dict']['tgt'],
            src_insts=data['train']['src'],
            tgt_insts=data['train']['tgt']),
        num_workers=2,
        batch_size=opt.batch_size,
        collate_fn=paired_collate_fn,
        shuffle=True)

    valid_loader = torch.utils.data.DataLoader(
        TranslationDataset(
            src_word2idx=data['dict']['src'],
            tgt_word2idx=data['dict']['tgt'],
            src_insts=data['valid']['src'],
            tgt_insts=data['valid']['tgt']),
        num_workers=2,
        batch_size=opt.batch_size,
        collate_fn=paired_collate_fn)

    return train_loader, valid_loader
```
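`paired_collate_fn` lives in dataset.py and is not shown in this post. As a rough idea of what such a collate function has to do, here is a hypothetical sketch: `collate_insts` and `paired_collate_sketch` are my own names, and PAD = 0 with positions counted from 1 are assumptions, not taken from dataset.py. It pads every sequence in a batch to the same length and builds the matching position tensors, so each batch comes out as the four tensors `src_seq, src_pos, tgt_seq, tgt_pos` that `train_epoch()` unpacks.

```python
import torch
from torch.utils.data import DataLoader

PAD = 0  # assumed padding index

def collate_insts(insts):
    """Pad token-id lists to a common length; positions start at 1, padding gets PAD."""
    max_len = max(len(inst) for inst in insts)
    seq = [list(inst) + [PAD] * (max_len - len(inst)) for inst in insts]
    pos = [list(range(1, len(inst) + 1)) + [PAD] * (max_len - len(inst)) for inst in insts]
    return torch.tensor(seq, dtype=torch.long), torch.tensor(pos, dtype=torch.long)

def paired_collate_sketch(batch):
    """batch is a list of (src_inst, tgt_inst) pairs coming from the dataset."""
    src_insts, tgt_insts = zip(*batch)
    src_seq, src_pos = collate_insts(src_insts)
    tgt_seq, tgt_pos = collate_insts(tgt_insts)
    return src_seq, src_pos, tgt_seq, tgt_pos

# Toy dataset: a plain list of (source ids, target ids) pairs with made-up ids.
pairs = [([2, 5, 6, 3], [2, 7, 3]),
         ([2, 8, 3], [2, 9, 10, 11, 3])]
loader = DataLoader(pairs, batch_size=2, collate_fn=paired_collate_sketch)
src_seq, src_pos, tgt_seq, tgt_pos = next(iter(loader))
print(src_seq.tolist(), tgt_seq.tolist())
```

Padding inside `collate_fn` (rather than in the dataset) means each batch is only padded to its own longest sentence.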

Preparing the model in main(), step by step:

```python
# 3. Handle an optional argument: sharing word embeddings between source and target
#    only makes sense if both sides use the same word2idx table
if opt.embs_share_weight:
    assert training_data.dataset.src_word2idx == training_data.dataset.tgt_word2idx, \
        'The src/tgt word2idx table are different but asked to share word embedding.'

print(opt)

# 4. Run on the GPU ('cuda') unless -no_cuda was passed, otherwise on the CPU.
#    Note: opt.cuda does not check torch.cuda.is_available(), so pass -no_cuda on a machine without a GPU.
device = torch.device('cuda' if opt.cuda else 'cpu')

# 5. Build the Transformer model
transformer = Transformer(
    opt.src_vocab_size,       # source vocabulary size
    opt.tgt_vocab_size,       # target vocabulary size
    opt.max_token_seq_len,    # maximum sentence length (in tokens)
    tgt_emb_prj_weight_sharing=opt.proj_share_weight,
    emb_src_tgt_weight_sharing=opt.embs_share_weight,
    d_k=opt.d_k,
    d_v=opt.d_v,
    d_model=opt.d_model,
    d_word_vec=opt.d_word_vec,
    d_inner=opt.d_inner_hid,
    n_layers=opt.n_layers,
    n_head=opt.n_head,        # number of attention heads
    dropout=opt.dropout).to(device)  # .to(device) picks where the model runs: 'cpu' = CPU, 'cuda' = GPU

# 6. Define the optimizer
optimizer = ScheduledOptim(
    optim.Adam(
        filter(lambda x: x.requires_grad, transformer.parameters()),
        betas=(0.9, 0.98), eps=1e-09),
    opt.d_model, opt.n_warmup_steps)
```
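`ScheduledOptim` wraps Adam and adjusts the learning rate at every step. Assuming it follows the warm-up schedule from the "Attention Is All You Need" paper (check transformer/Optim.py to confirm), the learning rate is d_model^-0.5 · min(step^-0.5, step · n_warmup_steps^-1.5): it grows linearly for the first `n_warmup_steps` steps and then decays with the inverse square root of the step number. A minimal sketch of that formula, where `noam_lr` is a hypothetical name:

```python
def noam_lr(step, d_model=512, n_warmup_steps=4000):
    """Learning rate at a 1-based step under the warm-up / inverse-sqrt-decay schedule."""
    return d_model ** -0.5 * min(step ** -0.5, step * n_warmup_steps ** -1.5)

# The peak is reached exactly at step == n_warmup_steps.
for step in (1, 1000, 4000, 8000, 16000):
    print(step, f"{noam_lr(step):.2e}")
```

With the defaults used in train.py (d_model = 512, n_warmup_steps = 4000), the peak learning rate is (512 · 4000)^-0.5, roughly 7e-4.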

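Regarding the Adam arguments in step 6:

- betas (Tuple[float, float], optional): coefficients used for computing running averages of the gradient and of its square; here (0.9, 0.98)

- eps (float, optional): term added to the denominator to improve numerical stability (default: 1e-8); here 1e-9

Reference: https://www.01hai.com/note/av114805#class_torchoptimAdamparams_lr0001_betas09_0999_eps1e-08_weight_decay0source

- filter(lambda x: x.requires_grad, transformer.parameters()) filters the parameters by requires_grad: it keeps only those whose requires_grad is True, so any frozen parameter (requires_grad=False) is filtered out and never handed to the optimizer. Reference: https://blog.csdn.net/guotong1988/article/details/79739775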

```python
# 7. The train function
train(transformer, training_data, validation_data, optimizer, device, opt)
```

Its code is as follows:

```python
def train(model, training_data, validation_data, optimizer, device, opt):
    ''' Start training '''

    log_train_file = None
    log_valid_file = None

    if opt.log:  # if -log was passed on the command line, create the log files
        log_train_file = opt.log + '.train.log'
        log_valid_file = opt.log + '.valid.log'

        print('[Info] Training performance will be written to file: {} and {}'.format(
            log_train_file, log_valid_file))

        with open(log_train_file, 'w') as log_tf, open(log_valid_file, 'w') as log_vf:
            log_tf.write('epoch,loss,ppl,accuracy\n')
            log_vf.write('epoch,loss,ppl,accuracy\n')

    valid_accus = []  # validation accuracy of every epoch
    for epoch_i in range(opt.epoch):  # opt.epoch comes from the command line; one pass over the data per epoch
        print('[ Epoch', epoch_i, ']')

        # train_epoch() (shown below) trains for one epoch and returns the training loss and accuracy
        start = time.time()
        train_loss, train_accu = train_epoch(
            model, training_data, optimizer, device, smoothing=opt.label_smoothing)
        print(' - (Training) ppl: {ppl: 8.5f}, accuracy: {accu:3.3f} %, '\
              'elapse: {elapse:3.3f} min'.format(
                  ppl=math.exp(min(train_loss, 100)), accu=100*train_accu,
                  elapse=(time.time()-start)/60))

        # eval_epoch() evaluates on the validation set and returns the validation loss and accuracy
        start = time.time()
        valid_loss, valid_accu = eval_epoch(model, validation_data, device)
        print(' - (Validation) ppl: {ppl: 8.5f}, accuracy: {accu:3.3f} %, '\
              'elapse: {elapse:3.3f} min'.format(
                  ppl=math.exp(min(valid_loss, 100)), accu=100*valid_accu,
                  elapse=(time.time()-start)/60))

        valid_accus += [valid_accu]  # record this epoch's validation accuracy

        model_state_dict = model.state_dict()  # the model parameters
        checkpoint = {
            'model': model_state_dict,
            'settings': opt,
            'epoch': epoch_i}

        if opt.save_model:  # if -save_model was passed, save the model to disk
            if opt.save_mode == 'all':
                model_name = opt.save_model + '_accu_{accu:3.3f}.chkpt'.format(accu=100*valid_accu)
                torch.save(checkpoint, model_name)
            elif opt.save_mode == 'best':
                model_name = opt.save_model + '.chkpt'
                if valid_accu >= max(valid_accus):
                    torch.save(checkpoint, model_name)
                    print(' - [Info] The checkpoint file has been updated.')

        if log_train_file and log_valid_file:  # append this epoch's results to the log files
            with open(log_train_file, 'a') as log_tf, open(log_valid_file, 'a') as log_vf:
                log_tf.write('{epoch},{loss: 8.5f},{ppl: 8.5f},{accu:3.3f}\n'.format(
                    epoch=epoch_i, loss=train_loss,
                    ppl=math.exp(min(train_loss, 100)), accu=100*train_accu))
                log_vf.write('{epoch},{loss: 8.5f},{ppl: 8.5f},{accu:3.3f}\n'.format(
                    epoch=epoch_i, loss=valid_loss,
                    ppl=math.exp(min(valid_loss, 100)), accu=100*valid_accu))
```
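Two details of train() are easy to check in isolation: perplexity is exp(loss per word), with the exponent capped at 100 so `math.exp` cannot overflow, and in `best` mode the checkpoint is overwritten whenever the new validation accuracy is at least the best so far (ties included, because of `>=`). A small sketch of both, where `perplexity` and `should_save_best` are hypothetical helper names and the accuracy values are made up:

```python
import math

def perplexity(loss_per_word):
    # Cap the exponent at 100, as train.py does, so math.exp cannot overflow.
    return math.exp(min(loss_per_word, 100))

def should_save_best(valid_accus, valid_accu):
    # valid_accu has already been appended, so this asks whether it ties or beats the best.
    return valid_accu >= max(valid_accus)

valid_accus = []
saved_epochs = []
for epoch_i, accu in enumerate([0.40, 0.55, 0.50, 0.55, 0.60]):  # made-up accuracies
    valid_accus += [accu]
    if should_save_best(valid_accus, accu):
        saved_epochs.append(epoch_i)

print(saved_epochs, perplexity(0.0))
```

Epoch 3 triggers a save even though it only ties epoch 1, so with this rule the saved file always holds the latest of the equally best models.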

One level deeper, the code of train_epoch() is as follows:

```python
def train_epoch(model, training_data, optimizer, device, smoothing):
    ''' Epoch operation in training phase'''

    model.train()

    total_loss = 0
    n_word_total = 0
    n_word_correct = 0

    for batch in tqdm(
            training_data, mininterval=2,
            desc=' - (Training) ', leave=False):

        # prepare data
        src_seq, src_pos, tgt_seq, tgt_pos = map(lambda x: x.to(device), batch)
        gold = tgt_seq[:, 1:]  # targets shifted by one: the token to predict at each position

        # forward
        optimizer.zero_grad()
        pred = model(src_seq, src_pos, tgt_seq, tgt_pos)

        # backward
        loss, n_correct = cal_performance(pred, gold, smoothing=smoothing)
        loss.backward()

        # update parameters
        optimizer.step_and_update_lr()

        # note keeping
        total_loss += loss.item()

        non_pad_mask = gold.ne(Constants.PAD)
        n_word = non_pad_mask.sum().item()
        n_word_total += n_word
        n_word_correct += n_correct

    loss_per_word = total_loss/n_word_total
    accuracy = n_word_correct/n_word_total
    return loss_per_word, accuracy
```
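The line `gold = tgt_seq[:, 1:]` is the teacher-forcing shift. If I read the repository's `Transformer.forward` correctly, it feeds the decoder `tgt_seq[:, :-1]`, so the prediction at position t is compared with the next token. The sketch below shows the shift on a toy batch; the special-token ids (PAD = 0, BOS = 2, EOS = 3) are assumptions for illustration.

```python
import torch

PAD, BOS, EOS = 0, 2, 3  # assumed special-token ids

tgt_seq = torch.tensor([[BOS, 11, 12, 13, EOS],
                        [BOS, 21, 22, EOS, PAD]])

dec_input = tgt_seq[:, :-1]  # what the decoder reads
gold = tgt_seq[:, 1:]        # what it must predict at each position

# Only non-padding targets count towards the per-word loss and accuracy.
non_pad_mask = gold.ne(PAD)
n_word = non_pad_mask.sum().item()
print(dec_input.tolist(), gold.tolist(), n_word)
```

Because the loss is summed over tokens and then divided by `n_word_total`, the reported loss is per word rather than per batch, which is what makes `exp(loss)` a perplexity.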

The code of eval_epoch() is as follows:

```python
def eval_epoch(model, validation_data, device):
    ''' Epoch operation in evaluation phase '''

    model.eval()

    total_loss = 0
    n_word_total = 0
    n_word_correct = 0

    with torch.no_grad():
        for batch in tqdm(
                validation_data, mininterval=2,
                desc=' - (Validation) ', leave=False):

            # prepare data
            src_seq, src_pos, tgt_seq, tgt_pos = map(lambda x: x.to(device), batch)
            gold = tgt_seq[:, 1:]

            # forward
            pred = model(src_seq, src_pos, tgt_seq, tgt_pos)
            loss, n_correct = cal_performance(pred, gold, smoothing=False)

            # note keeping
            total_loss += loss.item()

            non_pad_mask = gold.ne(Constants.PAD)
            n_word = non_pad_mask.sum().item()
            n_word_total += n_word
            n_word_correct += n_correct

    loss_per_word = total_loss/n_word_total
    accuracy = n_word_correct/n_word_total
    return loss_per_word, accuracy
```
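eval_epoch() differs from train_epoch() mainly in `model.eval()` (dropout switched off) and `torch.no_grad()` (no autograd graph, so no backward pass is possible and memory is saved). A toy model with a dropout layer, not the Transformer, shows both effects:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(4, 4), nn.Dropout(p=0.5))
x = torch.randn(2, 4)

model.eval()            # dropout becomes the identity
with torch.no_grad():   # no autograd graph is recorded
    out1 = model(x)
    out2 = model(x)
print(torch.equal(out1, out2), out1.requires_grad)

model.train()           # dropout is active again and gradients are tracked
out3 = model(x)
print(out3.requires_grad)
```

In eval mode two forward passes give identical outputs, which is what makes the validation numbers reproducible from epoch to epoch.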

One level deeper still, the code of cal_performance() is as follows:

```python
def cal_performance(pred, gold, smoothing=False):
    ''' Apply label smoothing if needed '''

    loss = cal_loss(pred, gold, smoothing)

    pred = pred.max(1)[1]              # predicted token id for each position
    gold = gold.contiguous().view(-1)  # flatten to match pred
    non_pad_mask = gold.ne(Constants.PAD)
    n_correct = pred.eq(gold)
    n_correct = n_correct.masked_select(non_pad_mask).sum().item()  # correct predictions, padding ignored

    return loss, n_correct
```
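The accuracy count can be traced by hand on a tiny example. Here `pred` plays the role of flattened logits of shape (batch · seq_len, vocab), and PAD = 0 is an assumed padding index; note how the last position "matches" PAD but is excluded by the mask:

```python
import torch

PAD = 0  # assumed padding index

# Flattened logits for batch=1, seq_len=4, vocab=5.
pred = torch.tensor([[0.1, 2.0, 0.0, 0.0, 0.0],   # argmax 1
                     [0.0, 0.0, 3.0, 0.0, 0.0],   # argmax 2
                     [0.0, 0.0, 0.0, 0.0, 1.0],   # argmax 4
                     [5.0, 0.0, 0.0, 0.0, 0.0]])  # argmax 0
gold = torch.tensor([[1, 2, 3, PAD]])

pred_ids = pred.max(1)[1]               # index of the highest logit in each row
gold_flat = gold.contiguous().view(-1)  # shape (batch * seq_len,)
non_pad_mask = gold_flat.ne(PAD)
n_correct = pred_ids.eq(gold_flat).masked_select(non_pad_mask).sum().item()
n_word = non_pad_mask.sum().item()
print(n_correct, n_word)
```

Two of the three real tokens are predicted correctly; without the mask, the padded slot would wrongly add a third "correct" prediction.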

And at the innermost level, the code of cal_loss() is as follows:

```python
def cal_loss(pred, gold, smoothing):
    ''' Calculate cross entropy loss, apply label smoothing if needed. '''

    gold = gold.contiguous().view(-1)

    if smoothing:
        eps = 0.1
        n_class = pred.size(1)

        # Smoothed targets: 1 - eps on the gold class, eps / (n_class - 1) on every other class
        one_hot = torch.zeros_like(pred).scatter(1, gold.view(-1, 1), 1)
        one_hot = one_hot * (1 - eps) + (1 - one_hot) * eps / (n_class - 1)
        log_prb = F.log_softmax(pred, dim=1)

        non_pad_mask = gold.ne(Constants.PAD)
        loss = -(one_hot * log_prb).sum(dim=1)
        loss = loss.masked_select(non_pad_mask).sum()  # average later
    else:
        loss = F.cross_entropy(pred, gold, ignore_index=Constants.PAD, reduction='sum')

    return loss
```
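A quick sanity check of the smoothing branch: with eps = 0 the smoothed targets collapse to plain one-hot vectors, so the result should equal `F.cross_entropy(..., ignore_index=PAD, reduction='sum')`. The sketch copies the branch into a function `smoothed_loss` (my own name) with eps as a parameter, on random logits and an assumed PAD = 0:

```python
import torch
import torch.nn.functional as F

PAD = 0  # assumed padding index

def smoothed_loss(pred, gold, eps):
    """Same computation as the smoothing branch of cal_loss, with eps as a parameter."""
    gold = gold.contiguous().view(-1)
    n_class = pred.size(1)
    one_hot = torch.zeros_like(pred).scatter(1, gold.view(-1, 1), 1)
    one_hot = one_hot * (1 - eps) + (1 - one_hot) * eps / (n_class - 1)
    log_prb = F.log_softmax(pred, dim=1)
    non_pad_mask = gold.ne(PAD)
    loss = -(one_hot * log_prb).sum(dim=1)
    return loss.masked_select(non_pad_mask).sum()

torch.manual_seed(0)
pred = torch.randn(6, 7)                        # 6 flattened positions, vocabulary of 7
gold = torch.tensor([[3, 1, 4], [2, 5, PAD]])   # the last position is padding

plain = F.cross_entropy(pred, gold.view(-1), ignore_index=PAD, reduction='sum')
print(torch.allclose(smoothed_loss(pred, gold, 0.0), plain))
print(smoothed_loss(pred, gold, 0.1).item(), plain.item())
```

This spreads eps over the n_class - 1 wrong classes only, which is not the same as the `label_smoothing` argument of `F.cross_entropy` in recent PyTorch versions (that one mixes in eps / n_class for every class, gold included), so the two are not drop-in replacements for each other.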

Finally, don't forget this one, hehe~

```python
if __name__ == '__main__':
    main()
```

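To get these results, make sure the download-data and preprocess-data commands from the previous step have finished before running training.

The train.py command depends on the output of that first step. Compared with the command on GitHub, I additionally set epoch and batch_size:

```
python train.py -data data/multi30k.atok.low.pt -save_model trained -save_mode best -proj_share_weight -label_smoothing -epoch 30 -batch_size 128
```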

The results are as follows:

Results reported on GitHub:

Reference: https://github.com/jadore801120/attention-is-all-you-need-pytorch
