I. Background

What is a NAS service?

Storage is commonly divided into three types: DAS (Direct Attached Storage), NAS (Network Attached Storage) and SAN (Storage Area Network). This article focuses on NAS.

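NAS stands for Network Attached Storage: storage delivered as a service over the network, usually Ethernet. Because the storage is exposed over the network, multiple applications on the LAN can mount it at the same time. Mainstream NAS services support both the CIFS and NFS protocols.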

What is Manila?

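Manila is OpenStack's Shared File Systems service, i.e. file shares as a service. It provides shared file systems in the cloud and supports GlusterFS, CephFS, CIFS, NFS and many commercial storage backends.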

Community

Development of Manila is currently driven largely by vendors such as NetApp, Mirantis, EMC and IBM, and its releases ship together with each OpenStack release.

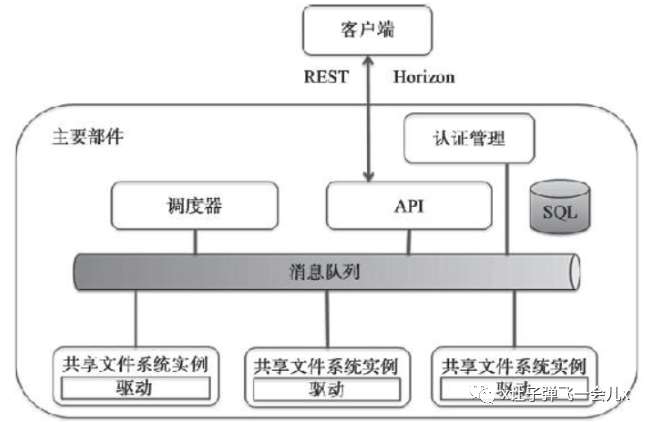

Logical architecture

II. Deployment

1. Environment planning

1.1 Host planning

| No. | Host | Components | Hostname | Role |
|---|---|---|---|---|
| 1 | 192.168.1.11 | glusterd, nfs-ganesha, keepalived | Node1 | GlusterFS node |
| 2 | 192.168.1.12 | glusterd, nfs-ganesha, keepalived | Node2 | GlusterFS node |
| 3 | 192.168.1.200 | VIP | | |
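The later steps address the nodes by name (for example `gluster peer probe node2`), so both nodes must be able to resolve each other's hostnames. A minimal sketch, assuming there are no DNS records for the nodes and that the lowercase names `node1`/`node2` map to the IPs in the table; the helper `add_host_entry` is hypothetical:

```shell
#!/bin/sh
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME already appears as a hostname or
# alias on a non-comment line (exact, case-sensitive match).
add_host_entry() {
    if awk -v n="$3" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$1"; then
        return 0    # already mapped, nothing to do
    fi
    printf '%s %s\n' "$2" "$3" >> "$1"
}
```

On each node, run `add_host_entry /etc/hosts 192.168.1.11 node1` and `add_host_entry /etc/hosts 192.168.1.12 node2`; re-running them does not add duplicate lines.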

2.1 Install packages (all nodes)

    # yum install nfs-ganesha-gluster nfs-ganesha nfs-ganesha-vfs -y
    # yum install glusterfs glusterfs-server -y
    # yum install keepalived -y
    # systemctl start nfs-ganesha
    # systemctl enable nfs-ganesha
    # systemctl start glusterd
    # systemctl enable glusterd
    # systemctl start keepalived
    # systemctl enable keepalived
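To confirm the three services actually came up, `systemctl is-active` accepts several units at once and prints one state per unit, in the same order. A small sketch that pairs those lines with the unit names and reports anything not `active`; the helper name `report_inactive` is hypothetical:

```shell
#!/bin/sh
# report_inactive UNIT... < states  (hypothetical helper)
# Reads one state line per UNIT from stdin and prints "UNIT: STATE" for every
# unit whose state is not "active". A missing line is reported as "unknown".
report_inactive() {
    for unit in "$@"; do
        IFS= read -r state || [ -n "$state" ] || state=unknown
        if [ "$state" != active ]; then
            printf '%s: %s\n' "$unit" "$state"
        fi
    done
}
```

Usage on each node: `systemctl is-active nfs-ganesha glusterd keepalived | report_inactive nfs-ganesha glusterd keepalived` prints nothing when all three are running.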

2.2 Initialize the disk (all nodes)

    # fdisk /dev/sda
    ...... (partitioning steps omitted; this creates /dev/sda1)
    # partprobe
    # mkfs.xfs /dev/sda1
    # mkdir -p /rds/data1/
    # echo "/dev/sda1 /rds/data1 xfs defaults 0 0" >> /etc/fstab
    # mount -a
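Appending to /etc/fstab with `>>` adds a duplicate line every time this step is re-run. A sketch of an idempotent version, assuming the brick partition is /dev/sda1 formatted as XFS as above; `ensure_fstab_entry` is a hypothetical helper name:

```shell
#!/bin/sh
# ensure_fstab_entry FSTAB DEVICE MOUNTPOINT FSTYPE  (hypothetical helper)
# Adds "DEVICE MOUNTPOINT FSTYPE defaults 0 0" only if no non-comment line
# already uses MOUNTPOINT (fstab column 2).
ensure_fstab_entry() {
    if awk -v mp="$3" '
        $1 !~ /^#/ && $2 == mp { found = 1 }
        END { exit !found }' "$1"; then
        return 0    # mount point already configured
    fi
    printf '%s %s %s defaults 0 0\n' "$2" "$3" "$4" >> "$1"
}
```

Usage: `ensure_fstab_entry /etc/fstab /dev/sda1 /rds/data1 xfs && mount -a`.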

2.3 GlusterFS configuration (on node1)

    # gluster peer probe node2
    # gluster peer status
    Number of Peers: 1

    Hostname: node2
    Uuid: 0892341f-691d-411b-ae8d-c68e3dbd6665
    State: Peer in Cluster (Connected)

    # Create a replicated volume
    # gluster volume create rds-volume replica 2 192.168.1.11:/rds/data1/ 192.168.1.12:/rds/data1/ force
    # gluster volume start rds-volume
    # gluster volume info

    Volume Name: gluster_shared_storage
    Type: Replicate
    Volume ID: ef89840a-3e79-42a6-b40f-ea3919fc307b
    Status: Started
    Snapshot Count: 0
    Number of Bricks: 1 x 2 = 2
    Transport-type: tcp
    Bricks:
    Brick1: node2:/var/lib/glusterd/ss_brick
    Brick2: node1:/var/lib/glusterd/ss_brick
    Options Reconfigured:
    nfs.export-volumes: OFF
    transport.address-family: inet
    nfs.disable: on
    performance.client-io-threads: off
    cluster.enable-shared-storage: enable

    Volume Name: rds-volume
    Type: Replicate
    Volume ID: 0b05e778-4182-4fe1-a7fb-4165fc44a84e
    Status: Started
    Snapshot Count: 0
    Number of Bricks: 1 x 2 = 2
    Transport-type: tcp
    Bricks:
    Brick1: 192.168.1.11:/rds/data1
    Brick2: 192.168.1.12:/rds/data1
    Options Reconfigured:
    nfs.export-volumes: OFF
    features.quota-deem-statfs: on
    features.inode-quota: on
    features.quota: on
    transport.address-family: inet
    nfs.disable: on
    performance.client-io-threads: off
    cluster.enable-shared-storage: enable
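`gluster volume create` only succeeds once the probed peer is in the cluster and connected. A sketch that counts connected peers in the `gluster peer status` output shown above and polls until the expected number is reached; the function names and the 2-second retry interval are assumptions:

```shell
#!/bin/sh
# connected_peers < "gluster peer status" output  (hypothetical helper)
# Prints how many peers report "Peer in Cluster (Connected)"; prints 0 if none.
connected_peers() {
    grep -c 'State: Peer in Cluster (Connected)' || true
}

# wait_for_peers WANT [TRIES]  (hypothetical helper)
# Polls `gluster peer status` up to TRIES times (default 10), 2 s apart,
# and returns 0 as soon as at least WANT peers are connected.
wait_for_peers() {
    want=$1
    tries=${2:-10}
    while [ "$tries" -gt 0 ]; do
        if [ "$(gluster peer status | connected_peers)" -ge "$want" ]; then
            return 0
        fi
        tries=$((tries - 1))
        [ "$tries" -gt 0 ] && sleep 2
    done
    return 1
}
```

Usage on node1: run `wait_for_peers 1` after `gluster peer probe node2` and before `gluster volume create`.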

2.4 NFS-Ganesha gateway configuration (all nodes)

    # mkdir /etc/ganesha/export.d/
    # cat /etc/ganesha/ganesha.conf
    %include /etc/ganesha/export.d/INDEX.conf

    NFS_CORE_PARAM {
        # let NFSv3 clients mount using the NFSv4 pseudo path
        mount_path_pseudo = true;
        # NFS protocol versions
        Protocols = 3,4;
    }

    EXPORT_DEFAULTS {
        # default access mode
        Access_Type = RW;
    }

    EXPORT {
        # unique export ID
        Export_Id = 101;
        # path of the Gluster volume to export
        Path = "/rds-volume";
        FSAL {
            # FSAL module to use; must be GLUSTER for Gluster volumes
            name = GLUSTER;
            # hostname or IP address of this node
            hostname="192.168.1.1,192.168.1.2";
            # Gluster volume name
            volume="rds-volume";
        }
        # disable root squashing
        Squash="No_root_squash";
        # NFSv4 pseudo path
        Pseudo="/rds/data1";
        # allowed security flavors
        SecType = "sys";
    }

    LOG {
        # default log level
        Default_Log_Level = WARN;
    }
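Because ganesha.conf pulls in `/etc/ganesha/export.d/INDEX.conf` via `%include`, further EXPORT blocks can live in separate files under export.d. A sketch that renders a block with the same options as the inline export above; the function name, argument order and file names are hypothetical, and every export needs its own unique `Export_Id`:

```shell
#!/bin/sh
# render_gluster_export EXPORT_ID VOLUME PSEUDO_PATH GLUSTER_HOST  (hypothetical)
# Prints an EXPORT block mirroring the inline one in ganesha.conf.
render_gluster_export() {
    cat <<EOF
EXPORT {
    Export_Id = $1;
    Path = "/$2";
    Pseudo = "$3";
    Squash = "No_root_squash";
    SecType = "sys";
    FSAL {
        name = GLUSTER;
        hostname = "$4";
        volume = "$2";
    }
}
EOF
}
```

For a hypothetical second volume `vol2`: `render_gluster_export 102 vol2 /vol2 192.168.1.11 > /etc/ganesha/export.d/vol2.conf`, then add `%include /etc/ganesha/export.d/vol2.conf` to INDEX.conf.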

2.5 Keepalived configuration (all nodes)

Health-check script (both nodes):

    # cat /etc/keepalived/check_ports_ganesha
    #!/bin/sh
    # If nfs-ganesha is down, try to restart it; if the restart fails,
    # stop keepalived so that the VIP moves to the other node.
    systemctl status nfs-ganesha >/dev/null
    if [ $? -ne 0 ]; then
        systemctl restart nfs-ganesha
        systemctl status nfs-ganesha >/dev/null
        if [ $? -eq 0 ]; then
            echo 0
        else
            systemctl stop keepalived
        fi
    fi
    # chmod +x /etc/keepalived/check_ports_ganesha

Master (node1):

    # cat /etc/keepalived/keepalived.conf
    global_defs {
        script_user root root
    }

    vrrp_script chk_port_ganesha {
        script "/etc/keepalived/check_ports_ganesha"
        interval 3
        weight -50
    }

    vrrp_instance VI_2 {
        state MASTER
        interface bond0.323
        nopreempt
        virtual_router_id 12
        priority 200
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass vLKKIb310LIvN6gj
        }
        virtual_ipaddress {
            192.168.1.200/24 dev bond0.323 label bond0.323:g
        }
        track_script {
            chk_port_ganesha
        }
        notify_master "systemctl reload nfs-ganesha"
        notify_backup "systemctl reload nfs-ganesha"
        notify_fault "systemctl stop nfs-ganesha"
    }

Slave

    # cat /etc/keepalived/keepalived.conf
    global_defs {
        script_user root root
    }

    vrrp_script chk_port_ganesha {
        script "/etc/keepalived/check_ports_ganesha"
        interval 3
        weight -50
    }

    vrrp_instance VI_2 {
        state BACKUP
        nopreempt
        interface bond0.323
        virtual_router_id 12
        priority 150
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass vLKKIb310LIvN6gj
        }
        virtual_ipaddress {
            192.168.1.200/24 dev bond0.323 label bond0.323:g
        }
        track_script {
            chk_port_ganesha
        }
        notify_master "systemctl reload nfs-ganesha"
        notify_backup "systemctl reload nfs-ganesha"
        notify_fault "systemctl stop nfs-ganesha"
    }
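The `weight -50` in `vrrp_script` changes the instance priority based on the check result: per keepalived's documentation, a negative weight is applied while the script fails and a positive weight while it succeeds. A small calculator sketch of that rule, to reason about the two configs above (the function is hypothetical and not part of keepalived):

```shell
#!/bin/sh
# effective_priority BASE WEIGHT CHECK_OK  (hypothetical calculator)
# CHECK_OK is "yes" or "no". Negative weights apply on failure,
# positive weights apply on success; otherwise BASE is unchanged.
effective_priority() {
    if [ "$2" -lt 0 ]; then
        [ "$3" = "no" ] && { echo $(( $1 + $2 )); return; }
    else
        [ "$3" = "yes" ] && { echo $(( $1 + $2 )); return; }
    fi
    echo "$1"
}
```

With the values above, `effective_priority 200 -50 no` gives 150, which equals node2's priority of 150 rather than falling below it, so failover behavior is worth testing on the actual pair. Also, as written, check_ports_ganesha exits 0 when ganesha is running or restarts successfully and stops keepalived otherwise, so the weight only comes into play if the script is changed to report failure through its exit status.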

2.6 Enable the GlusterFS cluster-wide shared storage (node1)

    # gluster volume set all cluster.enable-shared-storage enable

2.7 Synchronize the ganesha configuration (all nodes)
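The package install in 2.1 already created /etc/ganesha, so `ln -s /run/gluster/shared_storage/ganesha/ /etc/` fails with "File exists" until that directory is moved away, and the shared copy must first be seeded with the configuration written in 2.4. A sketch of that preparation, assuming it runs on node1 first and then on node2, after the shared storage from 2.6 is mounted; the function name and the `.bak` suffix are hypothetical:

```shell
#!/bin/sh
# prepare_ganesha_link SHARED_DIR ETC_DIR  (hypothetical helper)
#   SHARED_DIR e.g. /run/gluster/shared_storage, ETC_DIR e.g. /etc
prepare_ganesha_link() {
    shared=$1
    etc=$2
    # First node only: copy the existing config (ganesha.conf, export.d/)
    # into shared storage if no shared copy exists yet.
    if [ ! -d "$shared/ganesha" ]; then
        cp -a "$etc/ganesha" "$shared/ganesha" || return 1
    fi
    # Every node: move a real (non-symlink) local directory aside so the
    # symlink can be created in its place.
    if [ -d "$etc/ganesha" ] && [ ! -L "$etc/ganesha" ]; then
        mv "$etc/ganesha" "$etc/ganesha.bak" || return 1
    fi
}
```

Usage on each node: `prepare_ganesha_link /run/gluster/shared_storage /etc`, then the `ln -s` command of this step. Running it again after the link exists changes nothing.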

    # ln -s /run/gluster/shared_storage/ganesha/ /etc/

3. OpenStack Manila

3.1 Install Manila (controller1)

    # vi /etc/kolla/globals.yml
    enable_manila: "yes"
    enable_manila_backend_generic: "yes"

    # ansible-playbook -i /etc/ansible/hosts/ deploy -t manila
    # docker ps | grep manila
    e2385bae63d8   192.168.2.11:4000/kolla/centos-source-manila-share:iaas-8.1.2       "dumb-init --single-…"   4 days ago   Up 3 days    manila_share
    33d4e054bfd8   192.168.2.11:4000/kolla/centos-source-manila-scheduler:iaas-8.1.2   "dumb-init --single-…"   4 days ago   Up 4 days    manila_scheduler
    f47072da5e83   192.168.2.11:4000/kolla/centos-source-manila-data:iaas-8.1.2        "dumb-init --single-…"   4 days ago   Up 4 days    manila_data
    87545f632e49   192.168.2.11:4000/kolla/centos-source-manila-api:iaas-8.1.2         "dumb-init --single-…"   4 days ago   Up 23 hours  manila_api
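A quick check that all four Manila containers listed above are running. The sketch reads container names on stdin, one per line, as printed by `docker ps --format '{{.Names}}'`; the helper name is hypothetical:

```shell
#!/bin/sh
# missing_manila_containers < names  (hypothetical helper)
# Prints each expected Manila container name that is absent from stdin.
missing_manila_containers() {
    names=$(cat)
    for c in manila_api manila_scheduler manila_share manila_data; do
        printf '%s\n' "$names" | grep -qx "$c" || echo "$c"
    done
}
```

Usage on controller1: `docker ps --format '{{.Names}}' | missing_manila_containers` prints nothing when all four containers are up.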

3.2 Configure Manila (all Manila nodes)

    # cat /etc/kolla/manila-share/manila.conf
    [DEFAULT]
    enabled_share_protocols = NFS,CIFS,GLUSTERFS
    enabled_share_backends = ganesha-nfs

    [ganesha-nfs]
    share_backend_name = ganesha-nfs
    glusterfs_nfs_server_type = Ganesha
    glusterfs_target = root@192.168.1.200:/rds-volume
    glusterfs_server_password = xxxxxxxx
    share_driver = manila.share.drivers.glusterfs.GlusterfsShareDriver
    ganesha_service_name = nfs-ganesha
    driver_handles_share_servers = False
    glusterfs_ganesha_server_remote = True
    glusterfs_ganesha_server_ip = 192.168.1.200
    glusterfs_ganesha_server_username = root
    glusterfs_ganesha_server_password = xxxxxxxxx

    # docker restart manila_share
    $ source /etc/admin-openrc.sh
    $ manila service-list
    +----+------------------+--------------------------------+------+---------+-------+----------------------------+
    | Id | Binary           | Host                           | Zone | Status  | State | Updated_at                 |
    +----+------------------+--------------------------------+------+---------+-------+----------------------------+
    | 1  | manila-data      | SCDA0052.uat.local             | nova | enabled | up    | 2022-11-01T05:53:16.000000 |
    | 4  | manila-data      | SCDA0072.uat.local             | nova | enabled | up    | 2022-11-01T05:53:20.000000 |
    | 7  | manila-data      | SCDA0062.uat.local             | nova | enabled | up    | 2022-11-01T05:53:12.000000 |
    | 10 | manila-scheduler | SCDA0062.uat.local             | nova | enabled | up    | 2022-11-01T05:53:21.000000 |
    | 13 | manila-scheduler | SCDA0052.uat.local             | nova | enabled | up    | 2022-11-01T05:53:20.000000 |
    | 16 | manila-scheduler | SCDA0072.uat.local             | nova | enabled | up    | 2022-11-01T05:53:14.000000 |
    | 19 | manila-share     | SCDA0052.uat.local@generic     | nova | enabled | down  | 2022-10-28T06:13:23.000000 |
    | 22 | manila-share     | SCDA0072.uat.local@generic     | nova | enabled | down  | 2022-10-28T09:19:48.000000 |
    | 25 | manila-share     | SCDA0062.uat.local@generic     | nova | enabled | down  | 2022-10-28T09:19:15.000000 |
    | 28 | manila-share     | SCDA0052.uat.local@ganesha-nfs | nova | enabled | up    | 2022-11-01T05:53:16.000000 |
    | 31 | manila-share     | SCDA0062.uat.local@ganesha-nfs | nova | enabled | up    | 2022-11-01T05:53:18.000000 |
    | 34 | manila-share     | SCDA0072.uat.local@ganesha-nfs | nova | enabled | up    | 2022-11-01T05:53:18.000000 |
    +----+------------------+--------------------------------+------+---------+-------+----------------------------+
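In the service list above, the three `@generic` manila-share services are down while the `@ganesha-nfs` ones are up. A sketch that extracts the down services from the `manila service-list` table, assuming the column order shown (Id, Binary, Host, Zone, Status, State, Updated_at); the helper name is hypothetical:

```shell
#!/bin/sh
# down_services < "manila service-list" output  (hypothetical helper)
# Splits table rows on "|" and prints "BINARY HOST" for rows whose State is
# "down". Border lines (+----+) contain no "|" and are skipped.
down_services() {
    awk -F'|' 'NF >= 8 {
        binary = $3; host = $4; state = $7
        gsub(/ /, "", binary); gsub(/ /, "", host); gsub(/ /, "", state)
        if (state == "down") print binary " " host
    }'
}
```

Usage: `manila service-list | down_services`.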

3.3 Create the share type (controller1)

    $ manila type-create ganesha-nfs False
    $ manila type-key ganesha-nfs set share_backend_name=ganesha-nfs
    $ manila type-key ganesha-nfs set snapshot_support=False
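The `share_backend_name` extra spec only selects a backend if it equals the `share_backend_name` configured in that backend's section of manila.conf. A sketch that reads the value from the config so the two cannot drift apart; the helper name is hypothetical, and the parser only handles simple `key = value` lines:

```shell
#!/bin/sh
# backend_name CONF SECTION  (hypothetical helper)
# Prints share_backend_name from [SECTION] of an INI-style CONF, or nothing.
backend_name() {
    awk -v sec="[$2]" '
        /^[ \t]*\[/ {
            h = $0
            sub(/^[ \t]+/, "", h); sub(/[ \t]+$/, "", h)
            insec = (h == sec)    # are we inside the requested section?
            next
        }
        insec && /^[ \t]*share_backend_name[ \t]*=/ {
            v = $0
            sub(/^[^=]*=[ \t]*/, "", v); sub(/[ \t]+$/, "", v)
            print v
            exit
        }
    ' "$1"
}
```

Usage: `manila type-key ganesha-nfs set share_backend_name="$(backend_name /etc/kolla/manila-share/manila.conf ganesha-nfs)"`.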

3.4 Set share quotas (controller1)

    $ manila quota-update c663dc26bbcd4d0c8921c929a74e9320 --shares 1000 --force
    $ manila absolute-limits
    +----------------------------+-------+
    | Name                       | Value |
    +----------------------------+-------+
    | maxTotalShareGigabytes     | 1000  |
    | maxTotalShareNetworks      | 10    |
    | maxTotalShareSnapshots     | 50    |
    | maxTotalShares             | 1000  |
    | maxTotalSnapshotGigabytes  | 1000  |
    | totalShareGigabytesUsed    | 50    |
    | totalShareNetworksUsed     | 0     |
    | totalShareSnapshotsUsed    | 0     |
    | totalSharesUsed            | 50    |
    | totalSnapshotGigabytesUsed | 0     |
    +----------------------------+-------+
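Remaining headroom is the maximum minus current usage; with the values shown that is 1000 - 50 = 950 shares and 1000 - 50 = 950 GB. A sketch that computes this from the `manila absolute-limits` output (helper name hypothetical):

```shell
#!/bin/sh
# quota_headroom < "manila absolute-limits" output  (hypothetical helper)
# Collects Name/Value pairs from the table, then prints remaining shares
# and remaining share gigabytes.
quota_headroom() {
    awk -F'|' 'NF >= 3 {
        name = $2; val = $3
        gsub(/ /, "", name); gsub(/ /, "", val)
        v[name] = val
    }
    END {
        print "shares", v["maxTotalShares"] - v["totalSharesUsed"]
        print "gigabytes", v["maxTotalShareGigabytes"] - v["totalShareGigabytesUsed"]
    }'
}
```

Usage: `manila absolute-limits | quota_headroom`.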

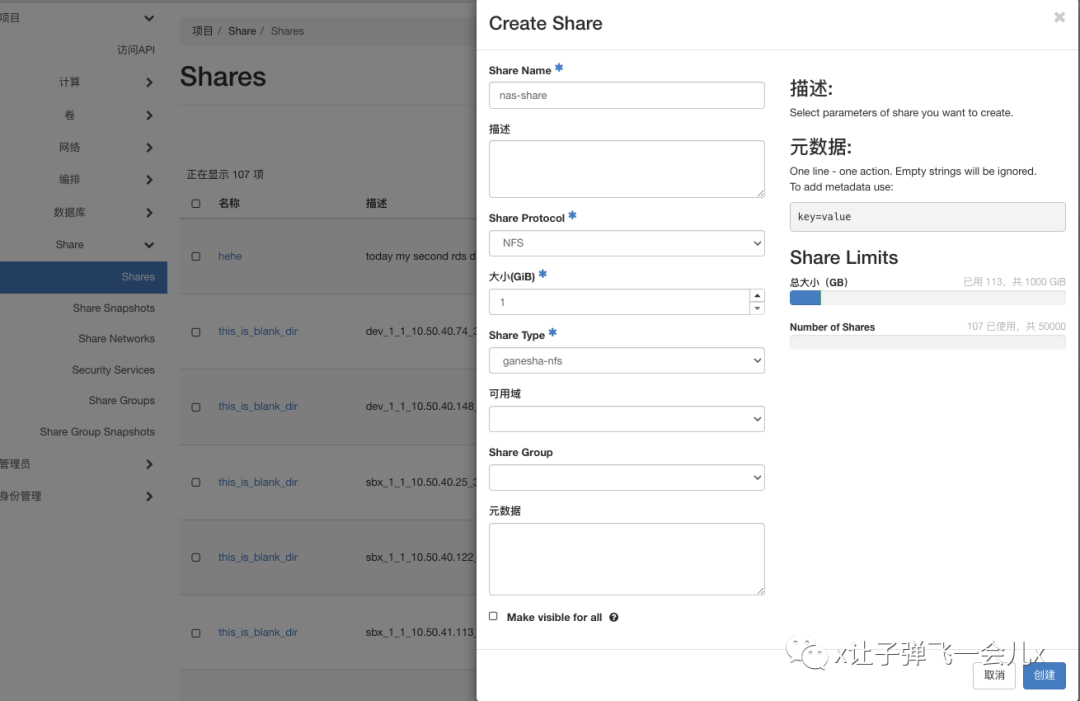

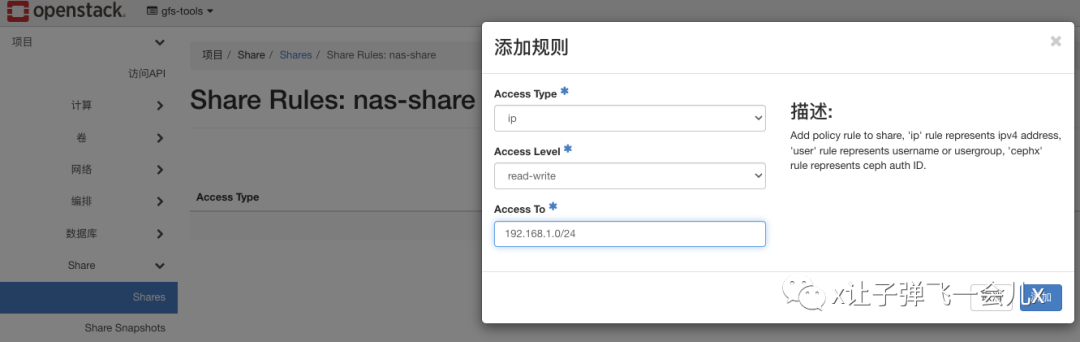

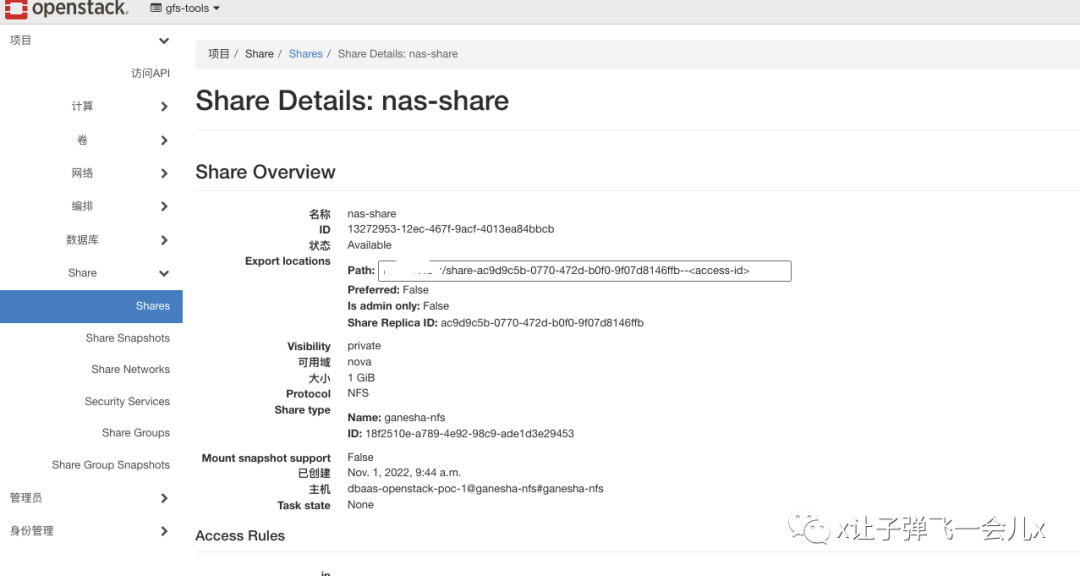

3.5 Test: create a share
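A minimal smoke test could create a small NFS share of the `ganesha-nfs` type, allow a client network, and print the export location to mount. The share name, size and client CIDR are hypothetical, and setting `DRY_RUN=1` only prints the commands instead of running them:

```shell
#!/bin/sh
# run CMD...: execute CMD, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# smoke_test_share NAME CLIENT_CIDR  (hypothetical helper)
smoke_test_share() {
    name=$1
    client_cidr=$2
    # 1 GB NFS share placed by the ganesha-nfs share type
    run manila create NFS 1 --name "$name" --share-type ganesha-nfs
    # in practice, wait until `manila show NAME` reports "available" first
    run manila access-allow "$name" ip "$client_cidr"
    # export path to use with: mount -t nfs <export location> /mnt
    run manila share-export-location-list "$name"
}
```

For example, `DRY_RUN=1 smoke_test_share test-share 192.168.1.0/24` prints the three commands; without `DRY_RUN`, mount the printed export location from a client in the allowed network.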
