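Let's start with the run results.

Here is the complete code; it runs as-is once copied. If anything is unclear or you hit an error, leave a comment.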

# -*- coding: utf-8 -*-
import requests
import re
import os
import json
from fake_useragent import UserAgent
import openpyxl
from openpyxl.drawing.image import Image
from lxml import etree
from datetime import datetime
import time
from hashlib import md5
import random

s = requests.Session()
file_name = time.strftime("%Y%m%d")  # today's date (YYYYMMDD), also used as the sheet name

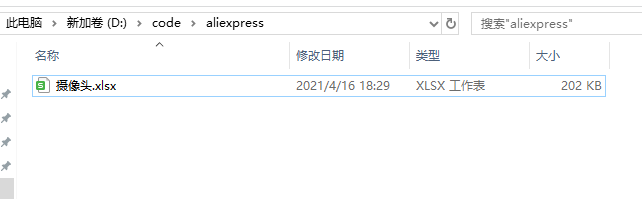

file_path = r"D:\code\aliexpress\\"  # output directory on disk

class SMT(object):
    def __init__(self):
        self.url = "https://feedback.aliexpress.com/display/productEvaluation.htm"
        self.excel_key = 2  # data rows start at row 2 of the sheet

    def get_all_url(self):
        headers = {'User-Agent': (UserAgent()).random}
        response = requests.get("https://pt.aliexpress.com/", headers=headers, timeout=60)
        cookies = response.cookies
        # Pull cookie values out of the cookie jar's string form.
        # Each capture starts right after the cookie name, so it keeps the leading "=".
        x_csrf = "".join(re.findall(r'x_csrf(.*?) for', str(cookies)))  # 1
        aep_usuc_f = "".join(re.findall(r'aep_usuc_f(.*?) for', str(cookies)))  # 3
        ali_apache_id = "".join(re.findall(r'ali_apache_id(.*?) for', str(cookies)))  # 8
        intl_common_forever = "".join(re.findall(r'intl_common_forever(.*?) for', str(cookies)))  # 5
        xman_f = "".join(re.findall(r'xman_f(.*?) for', str(cookies)))  # 4
        xman_t = "".join(re.findall(r'xman_t(.*?) for', str(cookies)))  # 2
        xman_us_f = "".join(re.findall(r'xman_us_f(.*?) for', str(cookies)))  # 6
        JSESSIONID = "".join(re.findall(r'JSESSIONID(.*?) for', str(cookies)))  # 7
        cookie = 'ali_apache_id{}; acs_usuc_t=x_csrf{}; xman_t{}; cna=Xm07GGWoHQICAcuoBRT/yumY; xlly_s=1; ali_apache_track=; ali_apache_tracktmp=; _ga=GA1.2.483784494.1608608422; _gid=GA1.2.1166178232.1608608422; _m_h5_tk=6cb16d31c474598dcd1e384e6629188d_1608701585009; _m_h5_tk_enc=66889bb7420b1a6111fc780530175b23; aep_usuc_f{}; intl_locale=pt_BR; xman_f{}; aep_history=keywords%5E%0Akeywords%09%0A%0Aproduct_selloffer%5E%0Aproduct_selloffer%0932895092229%0932892877417%0933013642928%0932892877417%0933059387980%094001204825476%0933026833048%094001204825476; intl_common_forever{}; xman_us_f{}; JSESSIONID{}; tfstk=cufhBRVgh9JIxtJGhWOCo52GUpwOaheeA_5N_1w4-OQNoP568sfiQxrTwPxdx_H5.; l=eB_t73GIO9wbNRqyBOfwhurza77tHIRfIuPzaNbMiOCPO-Cp5DfPWZ-4FWL9CnhVHsFWR3uKcXmQB3qw2ynVcbYo942h2UBs3dC..; isg=BGhoxoiuo-npJI9uuC1pfUnYOVZ6kcyb7-hMFSKZwuPWfQnnyqGEKul_dBWN7YRz'.format(
            ali_apache_id, x_csrf, xman_t, aep_usuc_f, xman_f, intl_common_forever, xman_us_f, JSESSIONID)
        keywords = 'webcam'
        perma_url = "https://pt.aliexpress.com/wholesale?trafficChannel=main&d=y&CatId=0&SearchText={}&ltype=wholesale&SortType=default&page=1".format(
            keywords)  # keyword search endpoint
        for page in range(1, 21):  # first 20 result pages
            url = re.sub(r"page=\d+", "page=" + str(page), perma_url)
            self.get_all_data(url, cookie)

    def get_all_data(self, url, cookie):
        headers = {'User-Agent': UserAgent().firefox}
        response = requests.get(url, headers=headers, timeout=60)
        # Extract the JSON assigned to window.runParams in the page source
        dataa = "".join(re.findall(r'window.runParams = (.*?)}]};', str(response.text))) + "}]}"
        if dataa == "}]}":  # the first pattern found nothing; fall back to a looser one
            dataa = "{" + "".join(re.findall(r'window.runParams = {(.*?)};', str(response.text))) + "}"
        user_dicts = json.loads(dataa)['items']
        for items in user_dicts:
            productDetailUrl = "https:" + items.get("productDetailUrl")  # prepend the scheme
            imageUrl = "https:" + items.get("imageUrl")
            productId = items.get("productId")
            ownerMemberId = items.get("store").get("aliMemberId")
            self.get_detailed(productId, ownerMemberId, productDetailUrl, imageUrl, cookie)

    def get_detailed(self, productId, ownerMemberId, productDetailUrl, imageUrl, cookie):
        # Strip tuple punctuation in case the cookie arrives as the string form of a 1-tuple
        cookie = str(cookie).replace("('", "").replace("',)", "")
        headers = {'cookie': cookie, 'User-Agent': UserAgent().chrome}
        response = requests.get(productDetailUrl, headers=headers, timeout=60)
        dataa = "".join(re.findall(r'data: (.*)}},', str(response.text))) + '}}'
        detail = json.loads(dataa)  # parse the embedded JSON once
        title = detail['titleModule'].get('subject')
        tradeCount = detail['titleModule'].get('tradeCount')
        starRating = detail['titleModule'].get('feedbackRating').get('averageStar')
        openTime = detail['storeModule'].get('openTime')
        price = detail['priceModule'].get('formatedActivityPrice')
        if price is None:
            price = detail['priceModule'].get('formatedPrice')
        # Drop the query string and keep only the part up to ".html"
        productDetailUrl = re.sub(r"\.html.*", "", productDetailUrl) + ".html"
        # openTime is assumed to look like "Jan 5, 2019"; normalise it to YYYYMMDD
        d2 = datetime.strptime(openTime, '%b %d, %Y')
        openTime = d2.strftime('%Y%m%d')
        d1 = datetime.strptime(file_name, '%Y%m%d')
        delta = (d1 - d2).days  # days between the open date and today

        if delta < 730:  # only keep entries less than two years old
            url = "https://feedback.aliexpress.com/display/productEvaluation.htm?v=2&productId={}&ownerMemberId={}&memberType=seller&startValidDate=&i18n=true".format(
                productId, ownerMemberId)
            print(productDetailUrl)
            response = requests.get(url, headers=headers, timeout=60).text
            soup = etree.HTML(response)
            # Total review count shown in the star selector
            comments = "".join(soup.xpath('//span[@class="fb-star-selector"]//em//text()'))
            if comments != "":
                headers = {
                    'Cookie': 'ali_apache_id=11.134.216.25.1608873600753.215555.6; acs_usuc_t=x_csrf=13uzwzhu51z73&acs_rt=af675e16aefe4db2b6534a3b61d484d3; intl_locale=pt_BR; xman_t=C9ECiQ9quS524bp7fbPLtgIPbNsg/M1+F40u+aVgru+iLuGw6v0VKHTjHOXmR0WR; cna=oYZQGOffZ0kCAbcLJgUlVOBF; ali_apache_track=; ali_apache_tracktmp=; _ga=GA1.2.1856276488.1608873603; aep_usuc_f=site=bra&c_tp=BRL&region=BR&b_locale=pt_BR; xman_f=GtGjF88jxOgkI8RA5QNzZU5BFj492kprczcCL6xibHR5enlSmWUMNcgUT3K+05IiMqFG4KX5wPjRT7kPRJ9RnKqKhqZWTwQYxRQXrcHb2/6TcZW6lqOPgg==; _m_h5_c=ebd64953fcaed77d5b16d863739ffd80_1610509104274%3B8c960303c49a1df1e0f0061a1368f8b8; _m_h5_tk=ec7650e9debcdd193a671225c65f90d2_1610941776057; _m_h5_tk_enc=879b9775882d223f209cba62f5fe7e8d; xlly_s=1; _gid=GA1.2.1055555956.1610934119; _gat=1; intl_common_forever=4SaCN83L2210K0Xnii309X91XA/Xycpn2KJdVdYOEtpDtRA1hoOgng==; aep_history=keywords%5E%0Akeywords%09%0A%0Aproduct_selloffer%5E%0Aproduct_selloffer%094001025844089%0932783608340%091005001614833526%091005001436445641%091005001686102250%0932255881055%091005001686102250%0932255881055; xman_us_f=x_locale=pt_BR&x_l=0&x_c_chg=0&x_as_i=%7B%22cookieCacheEffectTime%22%3A1610932361184%2C%22isCookieCache%22%3A%22Y%22%2C%22ms%22%3A%220%22%7D&acs_rt=af675e16aefe4db2b6534a3b61d484d3; JSESSIONID=37C800047FE1B28110966641E468F619; l=eBx_t_v4O5cQphuaBO5CFurza77T0IRb8sPzaNbMiInca6N19eergNCIwnYWWdtjgtfxbetzLAUwVRK8X3UK0iGkrX3uKgLRJxJ6-; isg=BI-P0zFfM4KKQAif50_jIn2OHiOZtOPWXk6-GKGcsP4FcK5yqYRoJ5imcqBOCLtO; tfstk=cLYRBwZBKxDlfAfv_3n0dTXP4BGcZ_Hdh763JfxcvwbmhNzdiWLMWhYtV6wRkjC..',
                    'User-Agent': (UserAgent()).random}
                data = {
                    "ownerMemberId": ownerMemberId,
                    "memberType": "seller",
                    "productId": productId,
                    "evaStarFilterValue": "all Stars",
                    "evaSortValue": "sortdefault@feedback",
                    "page": 1,
                    "i18n": "true",
                    "withPictures": "false",
                    "withPersonalInfo": "false",
                    "withAdditionalFeedback": "false",
                    "onlyFromMyCountry": "true",
                    "isOpened": "true",
                    "translate": "Y ",
                    "jumpToTop": "false",
                    "v": "2"
                }
                response = s.post(self.url, headers=headers, timeout=60, data=data).text
                time.sleep(0.3)
                soup = etree.HTML(response)
                # Review count for the buyer's own country (Brazil here, per onlyFromMyCountry)
                page = int(soup.xpath('//*[@id="transction-feedback"]/div[3]/div[1]/span/em//text()')[0])
                self.save_date(title, price, productId, starRating, openTime, delta, page, comments, tradeCount,
                               productDetailUrl, imageUrl)

    def save_date(self, title, price, productId, starRating, openTime, delta, page, comments, tradeCount,
                  productDetailUrl, imageUrl):
        list_data = [title, price, productId, starRating, openTime, delta, page, comments, tradeCount, "",
                     productDetailUrl]
        name = ["Title", "Price", "Product ID", "Rating", "Listing date", "Days since listing",
                "Brazil reviews", "Total reviews", "Total sales", "Image", "Product URL"]
        values = "webcam"  # workbook file name (without extension)
        # Write the row to the Excel workbook
        try:
            wb = openpyxl.load_workbook(file_path + values + ".xlsx")
        except Exception:
            # No usable workbook yet. Workbook() takes no file name; the path is given to save().
            wb = openpyxl.Workbook()
        try:
            ws = wb[file_name]
        except KeyError:
            # First row of the day: create the sheet and write the header row
            ws = wb.create_sheet(title=file_name)
            ws.append(name)
        ws.append(list_data)
        wb.save(file_path + values + ".xlsx")
        self.excel_key += 1
        wb = openpyxl.load_workbook(file_path + values + ".xlsx")
        headers = {'referer': productDetailUrl, 'User-Agent': (UserAgent()).random}
        try:
            # Download the product image to a temporary file named after the URL's MD5
            res = requests.get(imageUrl, headers=headers, timeout=30)
            file = md5(imageUrl.encode()).hexdigest()
            with open(file_path + file + ".jpg", 'wb') as f:
                for data in res.iter_content(64):
                    f.write(data)
            time.sleep(0.5)
            # Embed the image in column J of the row that was just written
            sh = wb[file_name]
            sh.column_dimensions["J"].width = 20
            sh.row_dimensions[self.excel_key - 1].height = 80
            img = Image(file_path + file + ".jpg")
            img.width, img.height = 100, 80
            sh.add_image(img, "J" + str(self.excel_key - 1))
            wb.save(file_path + values + ".xlsx")
            print("Saved")
            os.remove(file_path + file + ".jpg")  # delete the temporary image file
        except Exception as e:
            print("Image error:", e)

    def run(self):
        self.get_all_url()


if __name__ == '__main__':
    bd = SMT()
    bd.run()
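
The two regular expressions in `get_all_data` depend on the exact characters that close the `window.runParams` object, so they may break when the page layout changes. As a hedged alternative, the sketch below lets the standard library's JSON decoder find where the object ends. The helper name `extract_run_params` and the sample HTML are hypothetical and not part of the original script; the real page may differ.

```python
import json
import re


def extract_run_params(html, marker="window.runParams = "):
    """Return the first non-empty JSON object assigned to `marker`, or None.

    `extract_run_params` is a hypothetical helper, not part of the original script.
    """
    decoder = json.JSONDecoder()
    for match in re.finditer(re.escape(marker), html):
        try:
            # raw_decode parses one JSON value starting at the given index
            # and ignores whatever follows it (here, the trailing ";").
            obj, _end = decoder.raw_decode(html, match.end())
        except json.JSONDecodeError:
            continue  # the marker was not followed by valid JSON
        if obj:  # skip empty placeholders such as "window.runParams = {};"
            return obj
    return None


# Made-up sample page: an empty placeholder followed by the real payload
sample = (
    '<script>window.runParams = {};\n'
    'window.runParams = {"items": [{"productId": 1, "store": {"aliMemberId": 2}}]};'
    '</script>'
)

params = extract_run_params(sample)
print(params["items"][0]["productId"])
```

Inside `get_all_data`, the result could replace the `dataa` string handling, for example `user_dicts = extract_run_params(response.text)['items']`, after first checking that the helper did not return `None`.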
