
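In practice, most applications that currently work well are built on top of OpenAI's models, so it is well worth taking a closer look at the details of the API that OpenAI provides for ChatGPT.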

1. Key OpenAI Concepts ⭐️

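| Concept | Description |
|---|---|
| Models | The OpenAI API offers different pre-trained models for different scenarios, such as natural language processing, image processing, and recommendation systems. Users choose a model according to their needs, for example GPT or DALL-E. |
| Prompt | The prompt is the opening message or context supplied by the user. It guides the model in generating a reply and determines the reply's style, topic, and coherence. |
| Tokens | A token is the smallest unit of text that the model processes, such as a word, part of a word, a punctuation mark, or a number. |
| API key | The secret key used to access the OpenAI API. It identifies and authenticates the developer and can be obtained from the official website. |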

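2. Introduction to the OpenAI SDKs

OpenAI officially provides SDKs for Python and Node.js, so they are not covered in detail here. For other languages such as Go, community-maintained SDK wrappers are available. The following demo uses Go: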

package main

import (
	"context"
	"fmt"

	openai "github.com/sashabaranov/go-openai"
)

func main() {
	client := openai.NewClient("your token")
	resp, err := client.CreateChatCompletion(
		context.Background(),
		openai.ChatCompletionRequest{
			Model: openai.GPT3Dot5Turbo,
			Messages: []openai.ChatCompletionMessage{
				{
					Role:    openai.ChatMessageRoleUser,
					Content: "Hello!",
				},
			},
		},
	)
	if err != nil {
		fmt.Printf("ChatCompletion error: %v\n", err)
		return
	}

	fmt.Println(resp.Choices[0].Message.Content)
}

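3. OpenAI API Key & API Authentication

If you have an account, you can create an API key yourself. If you do not, keys are also sold by third-party resellers on e-commerce platforms such as Taobao, which lets you skip account registration. Even so, registering your own account and generating the key yourself is still the recommended route.

3.1 REST API Authentication

The OpenAI API uses API keys for authentication. You only need to set the API key mentioned above in an HTTP request header.

- HTTP request header

Authorization: Bearer OPENAI_API_KEY

- Authentication example

curl https://api.openai.com/v1/models -H "Authorization: Bearer $OPENAI_API_KEY"

Probably because my hosts file is not configured properly, I could not get curl to work at all, so here we use Python's requests library to send the HTTP request instead.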

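import os

import requests

# Custom request headers: the API key is read from the OPENAI_API_KEY environment variable
headers = {
    "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
}

# Send a GET request with the custom headers
response = requests.get("https://api.openai.com/v1/models", headers=headers)

# Check the response status code
if response.status_code == 200:
    # Print the response body
    print(response.text)
else:
    print("Failed to retrieve data:", response.status_code)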

The output is as follows:

{

"object": "list",

"data": [

{

"id": "gpt-3.5-turbo-16k",

"object": "model",

"created": 1683758102,

"owned_by": "openai-internal"

},

{

"id": "gpt-3.5-turbo-1106",

"object": "model",

"created": 1698959748,

"owned_by": "system"

},

{

"id": "dall-e-3",

"object": "model",

"created": 1698785189,

"owned_by": "system"

},

{

"id": "gpt-3.5-turbo-16k-0613",

"object": "model",

"created": 1685474247,

"owned_by": "openai"

},

{

"id": "dall-e-2",

"object": "model",

"created": 1698798177,

"owned_by": "system"

},

{

"id": "text-embedding-3-large",

"object": "model",

"created": 1705953180,

"owned_by": "system"

},

{

"id": "whisper-1",

"object": "model",

"created": 1677532384,

"owned_by": "openai-internal"

},

{

"id": "tts-1-hd-1106",

"object": "model",

"created": 1699053533,

"owned_by": "system"

},

{

"id": "tts-1-hd",

"object": "model",

"created": 1699046015,

"owned_by": "system"

},

{

"id": "gpt-3.5-turbo",

"object": "model",

"created": 1677610602,

"owned_by": "openai"

},

{

"id": "gpt-3.5-turbo-0125",

"object": "model",

"created": 1706048358,

"owned_by": "system"

},

{

"id": "gpt-3.5-turbo-0301",

"object": "model",

"created": 1677649963,

"owned_by": "openai"

},

{

"id": "gpt-3.5-turbo-0613",

"object": "model",

"created": 1686587434,

"owned_by": "openai"

},

{

"id": "gpt-3.5-turbo-instruct-0914",

"object": "model",

"created": 1694122472,

"owned_by": "system"

},

{

"id": "tts-1",

"object": "model",

"created": 1681940951,

"owned_by": "openai-internal"

},

{

"id": "davinci-002",

"object": "model",

"created": 1692634301,

"owned_by": "system"

},

{

"id": "gpt-3.5-turbo-instruct",

"object": "model",

"created": 1692901427,

"owned_by": "system"

},

{

"id": "babbage-002",

"object": "model",

"created": 1692634615,

"owned_by": "system"

},

{

"id": "tts-1-1106",

"object": "model",

"created": 1699053241,

"owned_by": "system"

},

{

"id": "text-embedding-ada-002",

"object": "model",

"created": 1671217299,

"owned_by": "openai-internal"

},

{

"id": "text-embedding-3-small",

"object": "model",

"created": 1705948997,

"owned_by": "system"

}

]

}

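4. OpenAI Models ⭐️

4.1 Model Categories

| Model | Description |
|---|---|
| GPT-4 | A set of models that improve on GPT-3.5 and can understand and generate natural language or code |
| GPT-3.5 | A set of models that improve on GPT-3 and can understand and generate natural language or code |
| DALL·E | A model that can generate and edit images from a natural language prompt |
| Whisper | A set of models that convert audio into text |
| TTS | A set of models that convert text into audio |
| Embeddings | A set of models that convert text into numerical vectors |
| Moderation | A model that detects whether text is sensitive or unsafe |
| GPT-3 | A set of models that can understand and generate natural language |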

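4.2 GPT-4

GPT-4 is a large multimodal model (at the time of writing it accepts text input and produces text output, with image input planned for the future). Thanks to its broad general knowledge and advanced reasoning, it can solve difficult problems more accurately than any of OpenAI's previous models.

| Model | Description | Max tokens |
|---|---|---|
| gpt-4 | More capable than any GPT-3.5 model, able to perform more complex tasks, and optimized for chat | 8,192 tokens |
| gpt-4-0314 | Snapshot of gpt-4 from March 14, 2023. This model will not receive further updates | 8,192 tokens |
| gpt-4-32k | Same capabilities as the base gpt-4 model but with 4 times the context length. Will be updated with the latest model iteration | 32,768 tokens |
| gpt-4-32k-0314 | Snapshot of gpt-4-32k from March 14, 2023. Unlike gpt-4-32k, this model will not receive updates | 32,768 tokens |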

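Tips:

- The 0314 suffix on a model name is the snapshot date.

- 32k is the size of the context window, not of the vocabulary: the prompt and the generated completion together can contain at most 32,768 tokens.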
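The token limits in the table apply to the prompt and the completion combined, so a request can be checked before it is sent. The following is a minimal sketch under stated assumptions: the `CONTEXT_LIMITS` dictionary and the `fits_context` helper are hypothetical names introduced here, and the token counts are supplied by the caller rather than measured with a tokenizer.

```python
# Context limits copied from the table above (prompt + completion tokens).
# CONTEXT_LIMITS and fits_context are illustrative names, not part of any SDK.
CONTEXT_LIMITS = {
    "gpt-4": 8192,
    "gpt-4-32k": 32768,
}


def fits_context(model: str, prompt_tokens: int, max_completion_tokens: int) -> bool:
    """Return True if the prompt plus the requested completion fits the model's window."""
    return prompt_tokens + max_completion_tokens <= CONTEXT_LIMITS[model]


# A 7,000-token prompt with room for a 2,000-token reply needs 9,000 tokens in total.
print(fits_context("gpt-4", 7000, 2000))      # False: 9,000 > 8,192
print(fits_context("gpt-4-32k", 7000, 2000))  # True: 9,000 <= 32,768
```

In real code the prompt length would come from a tokenizer that matches the chosen model.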

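4.3 GPT-3.5

GPT-3.5 models can understand and generate natural language or code. Their inference is currently faster than GPT-4's.

| Model | Description | Max tokens |
|---|---|---|
| gpt-3.5-turbo | The most capable GPT-3.5 model, optimized for chat, at 1/10 the cost of text-davinci-003 | 4,096 tokens |
| gpt-3.5-turbo-0301 | Snapshot of gpt-3.5-turbo from March 1, 2023. Unlike gpt-3.5-turbo, this model will not receive updates | 4,096 tokens |
| text-davinci-003 | Better quality and longer text output than the curie, babbage, and ada models | 4,097 tokens |
| text-davinci-002 | Similar capabilities to text-davinci-003, but trained with supervised fine-tuning instead of reinforcement learning | 4,097 tokens |
| code-davinci-002 | A model optimized for code generation tasks | 8,001 tokens |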

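OpenAI also provides four base language models: Ada, Babbage, Curie, and Davinci. They differ mainly in model size and in the amount of training data, and therefore in the kinds of tasks they can handle and in accuracy. (Put simply, they differ in the scale of their training data and in their parameter counts.)

4.4 Embeddings

Embedding models are mainly used to generate text vectors. An embedding is a numerical representation of text (comparable techniques include TF-IDF and Word2Vec) that can be used to measure how related two pieces of text are. Embeddings are commonly used for search, clustering, recommendation, anomaly detection, and classification.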

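5. OpenAI Quick Start

- Introduction

  One of OpenAI's core API endpoints is https://api.openai.com/v1/completions, which calls the models directly to complete downstream tasks.

- API authentication

  Calling the API requires setting the token in the HTTP request header: Authorization: Bearer OPENAI_API_KEY

- Calling the model to complete a task

  - Prompt: 今天天气怎么样? (What is the weather like today?)

  - POST request parameters: "model":"davinci-002", "prompt":"今天天气怎么样?", "temperature":0

  - Sending the request: use curl as shown earlier, or send the request from Python as in the previous example.

- About the temperature hyperparameter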

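In the OpenAI API, temperature is a hyperparameter that controls the diversity of the text generated by models such as the GPT series. Its value usually ranges from 0 to 2.

Specifically, when the temperature is low, the generated text tends to be more deterministic and repetitive; when it is high, the text becomes more random and diverse. For example, a temperature of 0.5 yields diversity between that of 0.1 and that of 0.9. (In practice, when testing how well a prompt works, use a low temperature so that randomness distorts the results as little as possible.)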
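The effect of temperature can be illustrated with the usual temperature-scaled softmax. The sketch below is only an illustration, not OpenAI's actual sampling code: the logits are made-up scores for three candidate tokens, and `softmax_with_temperature` is a hypothetical helper that requires a positive temperature.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("this sketch only handles positive temperatures")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.1, 0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability mass sits on the highest-scoring token, while a high temperature flattens the distribution, which is why low values give repeatable output and high values give varied output.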

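6. Function Calling ⭐️⭐️⭐️

With GPT models, function calling means describing functions to the model through the API so that the model can intelligently choose to output a JSON object containing the arguments for calling one or more of them. Note that the Chat Completions API does not execute the function call itself; it generates JSON that your code can use to execute the function.

Put simply, we provide a set of function definitions (each with a description of what the function does and of its parameters) and pass them to the GPT model. The model decides, based on the user's question, which function to call. Because the model cannot execute our external functions, it can only state in its response which function it wants to call and with which arguments. After receiving that response, our program executes the function locally, appends the result to the conversation, and sends it back to the model for further processing. Finally, the result is returned to the user.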

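6.1 Use Cases

Some examples of how it is used in practice:

- Building assistants that answer questions by calling external APIs, for example by defining send_email(to: string, body: string) or get_current_weather(location: string, unit: 'celsius' | 'fahrenheit').

- Converting natural language into API calls, for example turning "Who are my top customers?" into get_customers(min_revenue: int, created_before: string, limit: int) and then calling an internal API.

- Extracting structured data from text, for example by defining extract_data(name: string, birthday: string) or sql_query(query: string).

With function calling we can build AI agents that interact with our local systems and databases, for example to look up the latest weather, check stock prices, order takeout, or book flights. (The model itself has no network access, so without this feature it cannot handle real-time tasks; function calling solves this problem well.)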

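6.2 Models That Support Function Calling

Not every model version has been trained on function calling data. The models that currently support function calling are: gpt-4, gpt-4-turbo-preview, gpt-4-0125-preview, gpt-4-1106-preview, gpt-4-0613, gpt-3.5-turbo, gpt-3.5-turbo-1106, and gpt-3.5-turbo-0613.

In addition, gpt-4-turbo-preview, gpt-4-0125-preview, gpt-4-1106-preview, and gpt-3.5-turbo-1106 support parallel function calling: they can request several function calls in a single response, so the calls can be executed and their results resolved in parallel.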

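6.3 A Function Calling Example

Using a weather lookup as the example, the whole process is implemented in Python.

- Step 1: Prepare the external function (it can be your own code or a third-party API)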

import json


def get_current_weather(location, unit="fahrenheit"):
    # In a real application this would call a weather API
    return json.dumps({
        "location": location,
        "weather": "rain",
        "unit": unit
    })

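- Step 2: Call the model with the question and the tools parameter

We call the GPT model through the Chat Completions API, passing the user's query (such as "What is the current weather?") together with the tools parameter, which contains the description of the get_current_weather function we just defined.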

from openai import OpenAI

client = OpenAI()

# Define the tools parameter, which contains the description of get_current_weather
function_description = [{
    "type": "function",
    "function": {
        "name": "get_current_weather",
        # "Get the current weather for a given location"
        "description": "获取给定地点的当前天气",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    # "City name, for example: San Francisco, CA"
                    "description": "城市名称,例如:“旧金山,CA”"
                },
                "unit": {
                    "type": "string",
                    "enum": ["celsius", "fahrenheit"]
                }
            },
            "required": ["location"]
        }
    }
}]

# Make the call, passing in the user's question
# ("What is the current weather in San Francisco?")
response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "旧金山当前的天气如何?"}],
    tools=function_description
)

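- Step 3: Execute the function locally

The model's response contains the function it wants to call. This information is normally found in the tool_calls field of the response, and we can use it to execute the corresponding function locally.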

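# Parse tool_calls from the model response
tool_calls = response.choices[0].message.tool_calls

# If the model decided to call a function
if tool_calls:
    # Get the arguments generated by the model
    # Note: this assumes the model calls only one function; in practice it may request several
    arguments = json.loads(tool_calls[0].function.arguments)

    # Execute the function we defined earlier
    # Note: the local function is hard-coded here for demonstration; in real code you can
    # choose the local function dynamically based on tool_calls
    weather_info = get_current_weather(**arguments)
    print(weather_info)  # The weather information returned by the function call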

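- Step 4: Call the model again with the function result

We send the function's return value to the model as a new message, so that the model can process the result and generate a user-facing response.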

# Continue the conversation with the model using the function's return value
follow_up_response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "user", "content": "旧金山当前的天气如何?"},
        {"role": "function", "name": "get_current_weather", "content": weather_info}
    ],
    tools=function_description  # the tool list defined in step 2
)

- Step 5: Get the model's final response

# Extract the final model response
final_output = follow_up_response.choices[0].message.content

print(final_output)  # This is the weather information to show to the user
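Step 3 hard-codes a single call to get_current_weather. As a rough sketch of choosing the local function dynamically and handling several requested calls, the example below uses a lookup table keyed by function name. The names `AVAILABLE_FUNCTIONS`, `run_tool_calls`, and the fake `tool_calls` objects are assumptions made for an offline demonstration; they only mimic the shape of `response.choices[0].message.tool_calls`. The results are wrapped as `tool` messages carrying a `tool_call_id`, which is the form the tools-based Chat Completions API expects instead of the older `function` role.

```python
import json
from types import SimpleNamespace


def get_current_weather(location, unit="fahrenheit"):
    # Stand-in for a real weather API, as in step 1
    return json.dumps({"location": location, "weather": "rain", "unit": unit})


# Hypothetical registry: maps function names declared in `tools` to local callables
AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}


def run_tool_calls(tool_calls):
    """Execute every requested call and return one `tool` message per call."""
    messages = []
    for call in tool_calls:
        func = AVAILABLE_FUNCTIONS.get(call.function.name)
        if func is None:
            # Report unknown functions back to the model instead of crashing
            result = json.dumps({"error": f"unknown function {call.function.name}"})
        else:
            result = func(**json.loads(call.function.arguments))
        messages.append({
            "role": "tool",
            "tool_call_id": call.id,
            "content": result,
        })
    return messages


# Fake objects shaped like the SDK's tool calls, so the sketch runs without network access
fake_calls = [
    SimpleNamespace(id="call_1", function=SimpleNamespace(
        name="get_current_weather",
        arguments='{"location": "San Francisco, CA", "unit": "celsius"}')),
    SimpleNamespace(id="call_2", function=SimpleNamespace(
        name="get_current_weather",
        arguments='{"location": "Tokyo"}')),
]

tool_messages = run_tool_calls(fake_calls)
for message in tool_messages:
    print(message)
```

In a real conversation, the assistant message that contains the tool calls is appended to `messages` first, followed by these `tool` messages, before the model is called again.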

The complete runnable example is as follows:

import json

from openai import OpenAI


def get_current_weather(location, unit="fahrenheit"):
    # In a real application this would call a weather API
    return json.dumps({
        "location": location,
        "weather": "rain",
        "unit": unit,
    })


if __name__ == '__main__':
    # Create the client (replace the placeholder with your own key; never publish a real key)
    client = OpenAI(api_key="YOUR API KEY")

    # Define the function description
    function_description = [{
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "获取给定地点的当前天气",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "城市名称,例如:“旧金山,CA”"
                    },
                    "unit": {
                        "type": "string",
                        "enum": ["celsius", "fahrenheit"]
                    }
                },
                "required": ["location"]
            }
        }
    }]

    # Make the call
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": "旧金山当前的天气如何?"}],
        tools=function_description,
        temperature=0
    )

    tool_calls = response.choices[0].message.tool_calls

    # If the model decided to call a function
    if tool_calls:
        # Get the arguments generated by the model
        arguments = json.loads(tool_calls[0].function.arguments)

        # Call the local function
        weather_info = get_current_weather(**arguments)
        print(weather_info)  # The weather information returned by the function call

Output:

{"location": "San Francisco, CA", "weather": "rain", "unit": "fahrenheit"}

Then feed this result back into the model:

from openai import OpenAI

# Replace the placeholder with your own key; never publish a real key
client = OpenAI(api_key="YOUR API KEY")

# Define the function description
function_description = [{
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "获取给定地点的当前天气",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "城市名称,例如:“旧金山,CA”"
                },
                "unit": {
                    "type": "string",
                    "enum": ["celsius", "fahrenheit"]
                }
            },
            "required": ["location"]
        }
    }
}]

# The result printed by the previous script
weather_info = '{"location": "San Francisco, CA", "weather": "rain", "unit": "fahrenheit"}'

r = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "user", "content": "旧金山当前的天气如何?"},
        {"role": "function", "name": "get_current_weather", "content": weather_info}
    ],
    tools=function_description,
    temperature=0
)

# Print the model's reply
print(r.choices[0].message.content)

Output (the reply means "The current weather in San Francisco is rainy."):

旧金山当前的天气是雨天。

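7. Embeddings

In machine learning, and especially in natural language processing (NLP), embedding is a technique for converting text into numerical vectors. In human language, the meaning of a word or phrase is determined by its context and usage. The goal of an embedding is to capture the semantics of these language units so that computers can process and work with them.

The core idea is to map words with similar meanings to nearby points in a mathematical space. Words are represented as points in a high-dimensional space, so that semantically close words (such as "king" and "queen") end up close together. An embedding is a vector of floating-point numbers, and even text fragments with very different wording can have similar embeddings when their meanings are close.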

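Typical use cases for embeddings:

- Search: results can be ranked by their relevance to the query text.

- Clustering: embeddings help identify semantically similar text fragments and group them.

- Recommendations: relevance-based recommendation helps find and suggest items similar to known ones.

- Anomaly detection: embeddings can be used to identify data points that differ significantly from the bulk of a dataset.

- Diversity measurement: embeddings can be used to analyze the distribution of similarities between texts.

- Classification: a text can be assigned to the most similar category by comparing its embedding with the embeddings of a set of known labels.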
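Most of the use cases above reduce to comparing vectors, and cosine similarity is a common way to do that. The following minimal sketch ranks a few items against a query. The three-dimensional vectors are invented purely for illustration (real embeddings have hundreds or thousands of dimensions), and `cosine_similarity` is a helper written here rather than a library call.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors made up for this example; they are not real model output
documents = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "banana": [0.1, 0.2, 0.95],
}
query = [0.88, 0.79, 0.12]

# Search: sort items from most to least similar to the query vector
ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(query, documents[name]),
    reverse=True,
)
print(ranked)
```

The same comparison supports classification (pick the closest label embedding) and anomaly detection (flag items whose best similarity is low).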

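7.1 Introduction to OpenAI Embeddings

OpenAI currently provides several embedding models, including text-embedding-3-large, text-embedding-ada-002, and text-embedding-3-small. Each has its own characteristics. For example, text-embedding-3-small produces 1536-dimensional embedding vectors, while text-embedding-3-large produces 3072-dimensional vectors that capture more complex text features. The number of dimensions can be adjusted through a request parameter (the dimensions parameter of the text-embedding-3 models) to suit a particular application. The following guidelines help when choosing between them: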

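- When quality matters most: if you need to capture fine-grained semantic information, for example in a finely tuned recommendation system or in high-accuracy text classification, text-embedding-3-large is usually recommended. It costs more than the small model but provides a richer representation of the text.

- In cost-sensitive applications: for workloads that process large volumes of data without strict accuracy requirements, such as initial data exploration or rapid prototyping, text-embedding-3-small is the more economical choice, because it keeps relatively high quality while significantly reducing cost.

- In multilingual settings: these embedding models perform well across languages, which makes them especially useful for cross-lingual or multilingual scenarios and a good fit for global applications.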
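When storage or speed matters more than the last bit of accuracy, an embedding can also be shortened on the client side. The sketch below keeps the first components of a vector and rescales the result to unit length. It is an illustration under assumptions: `shorten_embedding` is a hypothetical helper, the input vector is made up, and how much quality survives truncation depends on the model, so measure it on your own data.

```python
import math


def shorten_embedding(vector, dim):
    """Keep the first `dim` components and rescale the result to unit length."""
    truncated = list(vector[:dim])
    norm = math.sqrt(sum(x * x for x in truncated))
    if norm == 0:
        return truncated  # nothing to rescale for an all-zero vector
    return [x / norm for x in truncated]


full = [0.5, -0.5, 0.5, -0.5, 0.1, 0.2]  # made-up vector standing in for a real embedding
short = shorten_embedding(full, 4)
print(len(short), short)
```

Renormalizing matters because similarity measures such as cosine similarity or a plain dot product assume comparable vector lengths.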

7.2 How to Use Embeddings?

Note: make sure your API key has permission to use the chosen model.

from openai import OpenAI

client = OpenAI(api_key="YOUR API KEY")

r = client.embeddings.create(
    model="text-embedding-ada-002",
    input="人工智能真好玩儿!"
)

print(r.data)

The output is as follows:

[Embedding(embedding=[-0.017013590782880783, -0.015710927546024323, 0.012013464234769344, -0.023171642795205116, -0.011500293388962746, 0.006352135445922613, -0.010191049426794052, 0.00646398076787591, -0.015158280730247498, -0.032816626131534576, 0.003542853519320488, 0.021829504519701004, 0.00014299295435193926, 0.00412510521709919, -0.008868646807968616, 0.008888384327292442, 0.0206452626734972, 0.0024096008855849504, 0.02011893317103386, -0.01157266367226839, -0.003950758837163448, 0.02831651084125042, 0.00043956711306236684, -0.032527144998311996, -0.032921891659498215, 0.013763508759438992, 0.013789825141429901, -0.025290118530392647, 0.011473977006971836, -0.014408262446522713, 0.036106184124946594, -0.009066020138561726, -0.018724162131547928, -0.029500752687454224, -0.010302893817424774, 0.004585643298923969, -0.009566033259034157, 0.0042830039747059345, 0.01717149093747139, 0.006019890308380127, 0.013006910681724548, 0.01343455258756876, 0.00297211529687047, 0.024948004633188248, -0.019855769351124763, 0.026658574119210243, -0.00536526832729578, -0.014052989892661572, 0.004253397695720196, 0.010533163323998451, -0.0011373645393177867, 0.01102001778781414, -0.0064804283902049065, -0.004032997414469719, -0.006299502681940794, 0.0028865868225693703, -0.004868545103818178, 0.017632028087973595, 0.018145199865102768, -0.01693464256823063, 0.014750376343727112, -8.028576121432707e-05, -0.03360611945390701, 0.024474307894706726, -0.0016702727880328894, -0.014052989892661572, -0.011013438925147057, 0.006065944209694862, 0.04581695795059204, -0.009566033259034157, 0.012842432595789433, -0.004312609788030386, 0.0009029835346154869, 0.014487211592495441, 0.036974627524614334, -0.003973785322159529, -0.012500318698585033, 0.015987249091267586, 0.012223996222019196, 0.0019539971835911274, 0.006039627827703953, -0.010684482753276825, 0.023408491164445877, 0.010658166371285915, 0.010197628289461136, 0.017145173624157906, -0.01867152936756611, 0.020105775445699692, 
-0.0019441285403445363, -0.0058455439284443855, 0.00817126128822565, 0.0011998660629615188, 0.01425036322325468, 0.018474156036973, -0.004592222161591053, 0.04165895655751228, -0.006796225905418396, 0.009592349641025066, 0.00470406748354435, -0.013177967630326748, 0.01415825542062521, -0.011513451114296913, -0.028527043759822845, -0.0016727399779483676, -0.005914624314755201, 0.0010904882801696658, 0.019553130492568016, -0.010500268079340458, 0.029790233820676804, 0.007368608843535185, -0.02667173184454441, 0.02093474380671978, -0.005736988503485918, -0.01722412370145321, -0.024948004633188248, -0.013191125355660915, 0.02082947827875614, 0.008480479009449482, -0.04771174117922783, -0.004806043580174446, 0.015947775915265083, 0.024342725053429604, 0.008355475962162018, 0.009723932482302189, 0.028974423184990883, 0.03534300625324249, -0.02001366764307022, 0.010381843894720078, 0.0143029959872365, -0.014803009107708931, 0.025171693414449692, -0.013171388767659664, 0.039579957723617554, 0.016947800293564796, -0.03160607069730759, 0.009901568293571472, -0.0018882059957832098, -0.004592222161591053, -0.017421497032046318, -0.006595562677830458, 0.0132174426689744, 0.016381995752453804, -0.0013446066295728087, -0.022829528898000717, -0.00853969156742096, 0.024237459525465965, 0.0020378809422254562, 0.010500268079340458, -0.0018914955435320735, 0.02073737047612667, -0.003595486283302307, -0.018460996448993683, 0.013974040746688843, 0.037211474031209946, 0.004819201771169901, 0.007973887957632542, 0.01697411760687828, -0.00671727629378438, -0.008750222623348236, -0.010191049426794052, -0.00346061447635293, 0.007131760939955711, 0.0018734029727056623, -0.02238214947283268, 0.02585592307150364, 0.03423771634697914, -0.004032997414469719, 0.01476353406906128, 0.02151370607316494, -0.010921331122517586, 0.016329362988471985, 0.021053168922662735, -0.016763584688305855, -0.0016875430010259151, -0.012079255655407906, 0.008875226601958275, 0.020763687789440155, 
0.0036711462307721376, -0.005079076625406742, -0.007335713133215904, -0.01249373983591795, 0.0002911258488893509, 0.01511880662292242, 0.016289889812469482, -0.0009745314018800855, -0.0020362362265586853, 0.00825021043419838, -0.006052786018699408, -0.005519877653568983, -0.007710722740739584, 0.01707938313484192, 0.012013464234769344, 0.0008248565718531609, -0.013112176209688187, -0.6105418801307678, -0.02807966247200966, -0.007467295508831739, -0.0015205979580059648, 0.010026571340858936, 0.011934515088796616, 0.01934259757399559, -0.004519851878285408, 0.009987096302211285, -0.028158612549304962, 0.0033092948142439127, 0.016526736319065094, 0.001632442930713296, 0.004358663689345121, -0.00909233745187521, -0.019697871059179306, 0.016039881855249405, -0.0036843044217675924, 0.003710620803758502, 0.01813204027712345, -0.026540150865912437, 0.03215871378779411, -0.016658319160342216, -0.002756649162620306, 0.005013285670429468, -0.012020043097436428, 0.008388372138142586, -0.006036337930709124, -0.01261216402053833, 0.004246818833053112, -0.001620929455384612, 0.01582935079932213, -0.009631824679672718, 0.002021433087065816, 0.0623173788189888, -0.011868723668158054, -0.02069789543747902, 0.03676409646868706, 0.0038290449883788824, 0.03647461533546448, -0.029053371399641037, -0.008401529863476753, 0.0033504143357276917, -0.006220553535968065, -0.0024803264532238245, 0.015697767958045006, 0.00571396155282855, 0.00029873294988647103, -0.0015205979580059648, -0.008112048730254173, 0.00017352416762150824, -0.004977100528776646, 0.0036941731814295053, 0.001378324581310153, 0.009967359714210033, 0.006036337930709124, 0.032342929393053055, -0.050764452666044235, 0.014066147617995739, 0.0012097348226234317, -0.005720540415495634, 0.0008750223205424845, -0.023276908323168755, -0.026540150865912437, -0.01116475835442543, 0.0376325398683548, -0.0014992158394306898, -0.00369088351726532, -0.004194186069071293, -0.02779018133878708, 0.0035921968519687653, -0.01037526410073042, 
0.01280953735113144, -0.01780308596789837, 0.00579949002712965, 0.010434476658701897, 0.029263904318213463, -0.0039244419895112514, -0.001581454765982926, 0.00807915348559618, 0.007342292461544275, -0.01415825542062521, 0.013309549540281296, 0.002008274896070361, 0.010526584461331367, -0.00848705880343914, -0.024724313989281654, -0.011763458140194416, 0.012987173162400723, 0.0029573121573776007, 0.02739543467760086, 0.030027082189917564, 0.011079229414463043, -0.016895167529582977, -0.005503429565578699, 0.015592502430081367, -0.01179635338485241, -0.025987504050135612, 0.00559553736820817, -0.024921687319874763, 0.005434349179267883, -0.015013541094958782, 0.012434527277946472, 0.018697844818234444, -0.0036612774711102247, 0.01678990200161934, 0.001290328917093575, -0.009546295739710331, 0.03613249957561493, -0.029263904318213463, -0.002184266224503517, -0.013270075432956219, -0.0029392195865511894, 0.00542448041960597, -0.006394899915903807, -0.017250439152121544, -0.01295427791774273, 0.01113844197243452, 0.01755307987332344, -0.0010707509936764836, 0.031027106568217278, -0.008677853271365166, 0.019066276028752327, 0.003325742669403553, 0.012921381741762161, 0.023671656847000122, -0.0011982213472947478, -0.02889547310769558, -0.004651434253901243, -0.010013413615524769, -0.02475063130259514, -0.008309422060847282, -0.0013857261510565877, -0.0282638780772686, -0.0009630179847590625, -0.01261216402053833, 0.0035921968519687653, -0.015276704914867878, 0.0069672828540205956, 0.011388448067009449, -0.0163425225764513, -0.002384929219260812, 0.009342343546450138, -0.012763483449816704, 0.004621828440576792, -0.01136213168501854, 0.005730409175157547, 0.0027813208289444447, -0.01003315020352602, -0.006572536192834377, -0.004029707983136177, 0.00959892850369215, 0.011434501968324184, 0.016026724129915237, -0.00836863461881876, 0.007019915618002415, -0.008862067945301533, -0.006506744772195816, -0.012822695076465607, -0.004134973976761103, -0.014289838261902332, 
-0.008756802417337894, -0.0016735624521970749, -0.0009218985214829445, -0.014553003013134003, -0.01876363717019558, -0.024040086194872856, 0.01972418650984764, -0.04226423427462578, -0.0420537032186985, 0.017789926379919052, 0.0014046410797163844, -0.010355527512729168, 0.012743745930492878, -0.017816243693232536, 0.017618870362639427, -0.0170267503708601, -0.008269947953522205, -0.010835802182555199, -0.026803314685821533, -0.02198740281164646, -0.005029733292758465, -0.010868698358535767, -0.022250566631555557, 0.00945418793708086, 0.015500395558774471, 0.014947749674320221, 0.005115261767059565, -0.027316486462950706, -0.0052139488980174065, 0.009388397447764874, 0.0486065037548542, 0.020092617720365524, -0.012342419475317001, 0.02257952280342579, 0.014803009107708931, -0.012645059265196323, 0.003263241145759821, 0.033790335059165955, 0.030500777065753937, 0.013125334866344929, 0.01461879350244999, -0.011868723668158054, 0.011243707500398159, 0.017197806388139725, -0.011802932247519493, -0.006450822576880455, -0.015947775915265083, 0.013881932944059372, 0.0011455884668976068, -0.012585846707224846, -0.0014523396966978908, -0.0049178884364664555, -0.003179357387125492, 0.0004190073814243078, 0.01735570654273033, -0.01514512300491333, -0.0049244677647948265, -0.010454214178025723, -0.0033915338572114706, 0.004259977024048567, -0.011085809208452702, 0.0010658166138455272, 0.006348846014589071, -0.014776692725718021, 0.020039984956383705, 0.012533213943243027, 0.017158331349492073, 0.009230498224496841, -0.011697666719555855, -0.020184725522994995, 0.00969761610031128, 0.00913839042186737, 0.002661252161487937, 0.008750222623348236, -0.029106006026268005, 0.026132244616746902, -0.01275690458714962, 0.025724340230226517, 0.043290577828884125, -0.005618564318865538, -0.011710824444890022, 0.015131964348256588, -0.033500853925943375, 0.008112048730254173, 0.01220425870269537, 0.038474664092063904, 0.0037369374185800552, -0.009579191915690899, 0.04336952790617943, 
-0.038316767662763596, 0.0034474562853574753, -0.03450087830424309, 0.004375111311674118, 0.01972418650984764, -0.019855769351124763, 0.0159214586019516, 0.008434425108134747, 0.020145250484347343, 0.032816626131534576, 0.0009769985917955637, 0.03194818273186684, -0.009151549078524113, 0.005325793754309416, -0.0059837051667273045, 0.010809485800564289, 0.008572586812078953, -0.03526405617594719, -0.015697767958045006, 0.01386877428740263, -0.014368787407875061, -0.004802754148840904, 0.011513451114296913, -0.003179357387125492, 0.025316433981060982, -0.01003315020352602, 0.00565474946051836, -0.000800596084445715, -0.00041098910151049495, 0.01677674427628517, -0.01731623150408268, -0.03326400741934776, 0.012013464234769344, 0.02450062520802021, 0.01263848040252924, -0.03152712062001228, -0.04465903341770172, -0.0019819585140794516, -0.019513655453920364, -0.016263572499155998, 0.004029707983136177, 0.006960703991353512, -0.007434400264173746, 0.0059738364070653915, -0.003812597133219242, 0.008355475962162018, 0.04476429894566536, -0.003106987103819847, -0.027421751990914345, -0.001646423595957458, 0.00715149799361825, 0.013684559613466263, -0.01726359874010086, -0.05726461857557297, 0.006960703991353512, -0.010092362761497498, -0.02744806744158268, 0.009171286597847939, -0.008289685472846031, -0.029553385451436043, -0.007973887957632542, -0.012539793737232685, -0.004651434253901243, -0.02604013681411743, -0.01326349563896656, 0.02648751810193062, -0.01252005621790886, -0.02618487738072872, 0.036737777292728424, 0.001663693692535162, -0.012020043097436428, -0.010506846942007542, -0.017421497032046318, 0.004664592444896698, 0.05176447704434395, 0.010283157229423523, -0.0021612392738461494, 0.0038389137480407953, -0.025790130719542503, -0.011092388071119785, -0.04452745243906975, -0.013776667416095734, 0.0030708019621670246, -0.00631924020126462, -0.00773046026006341, 0.002539538312703371, 0.010743695311248302, -0.009441030211746693, 0.017237281426787376, 
0.01765834540128708, 0.006562667433172464, -0.01581619307398796, 0.00640476867556572, 0.00412510521709919, 0.009783144108951092, 0.02256636507809162, 0.02339533343911171, 0.0016332652885466814, -0.010447634384036064, 0.0013075991300866008, 0.0006801160052418709, 0.03294820711016655, -0.01517143938690424, -0.018750477582216263, 0.006848858669400215, -4.0502676711184904e-05, -0.019026800990104675, -0.007901517674326897, -0.006210684776306152, 0.012026621960103512, 0.0013232245109975338, 0.022987429052591324, 0.0013832589611411095, -0.0013075991300866008, 0.03765885531902313, 0.04505378007888794, 0.005072497762739658, -0.01369771733880043, 0.006098839920014143, 0.00033491809153929353, 0.02267163060605526, 0.018460996448993683, -0.017500445246696472, -0.024145351722836494, -0.0032056737691164017, 0.01355297677218914, -0.029842866584658623, -0.007829147391021252, -0.010388422757387161, 0.027027005329728127, -0.03971153870224953, -0.022737421095371246, 0.012191100046038628, -0.018829427659511566, -0.014381945133209229, -0.024105878546833992, 0.0012771707260981202, 0.0005806069239042699, -0.018145199865102768, -0.02261899784207344, 0.023500598967075348, -0.011177916079759598, 0.0033208082895725965, 0.009796302765607834, 0.020329466089606285, -0.0271585863083601, -0.014210888184607029, -0.03926415741443634, -0.010421318002045155, 0.004325767979025841, 0.030211295932531357, -0.007750197779387236, -0.007862042635679245, 0.029158638790249825, 0.002547762356698513, -0.019500497728586197, -0.004026418551802635, -0.043764274567365646, -0.007769934833049774, -0.0031744230072945356, 0.006306082010269165, -0.00715149799361825, -0.00073233776492998, -0.009039703756570816, 0.024382200092077255, 0.016553053632378578, 0.04373795539140701, 0.03326400741934776, 0.012796378694474697, -0.002651383401826024, 0.0018322835676372051, 0.030158663168549538, -0.0031333034858107567, -0.0066942498087882996, 0.0119410939514637, -0.03536932170391083, -0.030079714953899384, -0.007079127710312605, 
0.017382021993398666, -0.004200764931738377, 0.015066173858940601, -0.0006706585409119725, 0.01519775576889515, -0.020421573892235756, 0.005924493074417114, 0.0015534935519099236, 0.019697871059179306, -0.023645339533686638, 0.02947443537414074, 0.012079255655407906, 0.011388448067009449, 0.019592605531215668, -0.026500675827264786, -0.02947443537414074, -0.02290847897529602, -0.025882238522171974, 0.01673726923763752, 0.004049445502460003, -0.0203031487762928, -0.009941043332219124, -0.00092683284310624, -0.009737090207636356, 0.002018143655732274, 0.017395179718732834, -0.0013750350335612893, 0.0001632442872505635, 0.019987352192401886, -0.018579421564936638, -0.019316282123327255, -0.023421648889780045, 0.004289582837373018, 0.0037764119915664196, -0.013513502664864063, 0.0013454289874061942, -0.02455325797200203, -0.0026661863084882498, 0.025395384058356285, -0.004651434253901243, 0.02415851131081581, -0.03000076487660408, -0.004286293406039476, 0.012875327840447426, 0.01482932548969984, 0.016987275332212448, -0.006720566190779209, 0.014895116910338402, -0.03068499267101288, -0.001097067492082715, -0.011691087856888771, -0.020342623814940453, -0.015474078245460987, -0.010237103328108788, 0.02089526876807213, 0.024395359680056572, 0.04592222347855568, -0.02276373840868473, 0.0049902587197721004, 0.0017993879737332463, -0.01555302832275629, -0.015539869666099548, -0.014066147617995739, -0.012829274870455265, -0.026263827458024025, -0.004042866174131632, 0.009631824679672718, 0.00781598873436451, 0.004332347307354212, -0.02001366764307022, -0.00735545065253973, 0.00301487953402102, 0.003947468940168619, -0.008454162627458572, -0.014434578828513622, -0.05473823845386505, -0.0017845849506556988, -0.01490827463567257, -0.0016595817869529128, -0.003161264816299081, 0.0015830995980650187, -0.0017681372119113803, 0.01957944594323635, -0.00011194775288458914, 0.02575065568089485, 0.019039958715438843, 0.007631773594766855, -0.025263801217079163, -0.020711055025458336, 
0.008414688520133495, 0.02580329030752182, -0.0035066683776676655, 0.014842483215034008, -0.019092591479420662, 0.009210760705173016, 0.031185004860162735, 0.04489587992429733, 0.031079739332199097, 0.034553512930870056, 0.0016217518132179976, -0.006720566190779209, 0.0078094094060361385, -0.023382175713777542, -0.014224046841263771, -0.02325059287250042, -0.017645185813307762, -0.005519877653568983, -0.01130949892103672, 0.0017681372119113803, -0.03757990524172783, 0.009493662975728512, -0.01119107473641634, -0.022171618416905403, -0.00741466274484992, 0.0011373645393177867, -0.0008651536190882325, -0.008862067945301533, 0.017710978165268898, 0.041553691029548645, -0.013079280965030193, 0.017776768654584885, 0.04750121012330055, 0.005023154430091381, 0.012737167067825794, -0.06989651918411255, -0.008789697661995888, -0.007677827496081591, 0.02305321954190731, 0.04402743652462959, -0.013789825141429901, -0.03034287877380848, 0.008375213481485844, -0.007263342849910259, -0.020289991050958633, -0.03144817054271698, -0.004447481594979763, 0.01087527722120285, 0.021382123231887817, -0.0017878744984045625, -0.022066352888941765, -0.001937549444846809, 0.023224275559186935, -0.017000433057546616, -0.02739543467760086, -0.0332903228700161, 0.006164630874991417, 0.009967359714210033, -0.006822542287409306, -0.0191978570073843, -0.006868596188724041, -0.009980517439544201, 0.008302843198180199, 0.007631773594766855, -0.011355552822351456, -0.005312635563313961, 0.015105647966265678, 0.0035527220461517572, 0.022895321249961853, -0.001975379418581724, 0.002653028117492795, 0.019618920981884003, -0.0037435165140777826, 0.006868596188724041, -0.01606619916856289, -0.012427948415279388, 0.04558010771870613, -0.01896101050078869, -0.014816166833043098, -0.000489732890855521, 0.02744806744158268, 0.0094081349670887, -0.019566288217902184, -0.005289608612656593, 0.01482932548969984, -0.005496850702911615, 0.0025543414521962404, -7.756159902783111e-05, 0.003190870862454176, 
0.012210837565362453, 0.0029770496767014265, -0.020092617720365524, -0.015539869666099548, 0.004970521666109562, -0.006667932961136103, 0.00853969156742096, -0.028632309287786484, -0.01432931236922741, -0.0011694376589730382, -0.0018635343294590712, 0.008717327378690243, 0.02417166903614998, -0.01534249633550644, 0.006737013813108206, 0.035764068365097046, -0.021184749901294708, 0.017092540860176086, 0.005144868046045303, 0.012941119261085987, -0.010434476658701897, -0.012533213943243027, 0.02600066363811493, -0.005898176692426205, 0.018697844818234444, -0.022605840116739273, 0.009079178795218468, -0.01217794232070446, -0.009072599932551384, 0.013829300180077553, 0.005835675168782473, 0.01596093364059925, 0.03781675174832344, -0.010427897796034813, -0.0013429619139060378, -0.010223944671452045, -0.02021104097366333, -0.02088211104273796, 0.01148055586963892, 0.008664694614708424, 0.0066810911521315575, -0.026461200788617134, 0.013184546492993832, -0.019316282123327255, -0.013710875995457172, -0.00755940331146121, -0.05158026143908501, -0.03994838520884514, -0.0019046538509428501, -0.003677725326269865, -0.002631646115332842, -0.023711130023002625, -0.025119060650467873, -0.0009728866280056536, -0.015421445481479168, 0.0046349866315722466, -0.018487313762307167, 0.013309549540281296, 0.007217289414256811, 0.010276577435433865, 0.007625194266438484, -0.005957388784736395, 0.01386877428740263, 0.005717250984162092, -0.006184368394315243, -0.01769782043993473, -0.03097447380423546, 0.008441004902124405, -0.0035066683776676655, 0.03410613164305687, -0.0013421394396573305, -0.017737293615937233, -0.02850072644650936, -0.008204156532883644, -0.04094841331243515, -0.02426377683877945, -0.015987249091267586, 0.027369119226932526, 0.03594828397035599, 0.017855718731880188, -0.00553632527589798, -0.0032681755255907774, -0.004056024365127087, -0.0020477494690567255, -0.014263521879911423, -0.006848858669400215, 0.0007298705750145018, -0.010697641409933567, 
-0.015092490240931511, 0.02690858021378517, -0.03734305873513222, 0.0010098941856995225, 0.010118679143488407, 0.0016225742874667048, 1.4584561540686991e-05, 0.022737421095371246, 0.011105546727776527, -0.0030428406316787004, -0.006039627827703953, -0.015789875760674477, -0.0019030090188607574, -0.005401453468948603, -0.010131836868822575, -0.028342828154563904, -0.0002804347896017134, 0.010342368856072426, -0.008408108726143837, -0.0036382507532835007, 0.005707382224500179, -0.0004148954467382282, -0.002342164982110262, -0.005661328323185444, -0.0024918399285525084, -0.01939523220062256, -0.004450771491974592, -0.023355858400464058, -0.005098814144730568, -0.022658472880721092, -0.025553282350301743, 0.03218502923846245, -0.002756649162620306, -0.004977100528776646, -0.02223740890622139, 0.010592374950647354, 0.005263292230665684, -0.012967435643076897, 0.017382021993398666, -0.0188557431101799, 0.02518485300242901, -0.012421369552612305, 0.016671476885676384, -0.007901517674326897, -0.017763610929250717, -0.017197806388139725, 0.0012327616568654776, -0.035132475197315216, 0.0007088996353559196, -0.009816039353609085, 0.002985273487865925, -0.0006653130403719842, -0.005664618220180273, -0.027579650282859802, -0.027237536385655403, -0.01476353406906128, 0.01963207870721817, -0.011987147852778435, 0.009276552125811577, 0.03197449818253517, -0.0005234508425928652, -0.019908402115106583, 0.029106006026268005, 0.024290092289447784, 0.0007890826091170311, 0.011210812255740166, 0.29053372144699097, -0.026421725749969482, 0.003366862190887332, 0.01586882583796978, -0.0019309702329337597, 0.0376325398683548, 0.01432931236922741, -0.0034770623315125704, 0.009644982405006886, -0.005809358786791563, 0.015066173858940601, -0.0007697564433328807, -0.027711233124136925, -0.011013438925147057, 0.0013437842717394233, -0.031237637624144554, -0.023605864495038986, -0.02344796620309353, -0.0016974116442725062, -0.029290219768881798, 0.030606042593717575, -0.005115261767059565, 
-0.03000076487660408, -0.0275007002055645, 0.005121841095387936, -0.011052913032472134, -0.03318505734205246, -0.010973963886499405, 0.019408389925956726, 0.013947724364697933, -0.0318429172039032, -0.007684406358748674, 0.0022434783168137074, 0.010658166371285915, -0.01297401450574398, -0.012987173162400723, 0.013737192377448082, 0.014803009107708931, 0.03215871378779411, -0.004391559399664402, -0.0007454959559254348, -0.016697794198989868, 0.009664719924330711, 0.0058290958404541016, 0.010191049426794052, 0.015131964348256588, -0.02560591511428356, -0.018224148079752922, -0.001988537609577179, 0.0300533976405859, -0.012842432595789433, -0.009500241838395596, 0.030658677220344543, 0.032290298491716385, -0.011677929200232029, 0.010605533607304096, -0.0007339825388044119, 0.007875200361013412, 0.02831651084125042, 0.0061284457333385944, 0.022737421095371246, 0.02227688394486904, 0.004565905779600143, -0.0038389137480407953, -0.0413694754242897, -0.00028824748005717993, -0.02227688394486904, 0.026158561930060387, 0.016579370945692062, 0.005986994598060846, -0.004154711030423641, -0.020000509917736053, -0.025526966899633408, -0.009605508297681808, -0.03497457504272461, -0.024513782933354378, 0.053711894899606705, 0.008704169653356075, 0.016579370945692062, 0.02296111173927784, -0.0030625781510025263, -0.008756802417337894, -0.008059415966272354, -0.029606018215417862, -0.009723932482302189, -0.027421751990914345, 0.027000688016414642, 0.0012286497512832284, -0.008283105678856373, 0.002672765403985977, -0.0026448043063282967, -0.004914599005132914, 0.00215137074701488, -0.005835675168782473, -0.002271439414471388, 0.03342190384864807, 0.01693464256823063, 0.01568461023271084, 0.0031036974396556616, 0.015789875760674477, -0.029500752687454224, -0.019355757161974907, 0.0007339825388044119, 0.032342929393053055, -0.011960831470787525, 0.004141552839428186, 0.0022928216494619846, 0.0009334119386039674, -0.0005082366405986249, -0.008434425108134747, -0.012302945367991924, 
-0.030658677220344543, -0.0010666390880942345, 0.002633290830999613, 0.012888486497104168, 0.0071778143756091595, 0.00822389405220747, -0.01735570654273033, 0.0025543414521962404, -0.006789646577090025, 0.031237637624144554, -0.025592757388949394, -0.00706596951931715, 0.026369092985987663, -0.03086920827627182, -0.03573775291442871, -0.018632054328918457, 0.008138365112245083, -0.0053323726169764996, -0.041843172162771225, 0.027605967596173286, -0.03800096735358238, 0.01722412370145321, 0.017579395323991776, 0.004799464251846075, 0.014579319395124912, 0.018197832629084587, -0.0023536784574389458, 0.0006250159349292517, 0.008085732348263264, -0.007046232465654612, -0.01968471147119999, 0.01606619916856289, 0.004684329964220524, 0.015789875760674477, 0.01531617995351553, 0.019961034879088402, 0.012401632033288479, 0.015500395558774471, -0.01957944594323635, -0.049896009266376495, -0.016474103555083275, -0.0014021738898009062, -0.005796200595796108, 0.02155318111181259, -0.005914624314755201, -0.05042233690619469, -0.007362029980868101, -0.013342445716261864, 0.015250388532876968, -0.04094841331243515, -0.0016752071678638458, 0.03189555183053017, -0.03684304282069206, -0.012862170115113258, -0.019092591479420662, -0.16674108803272247, 0.010592374950647354, 0.02648751810193062, -0.04221160337328911, 0.019592605531215668, 0.003993522841483355, -0.009467346593737602, -0.009941043332219124, 0.009401555173099041, 0.003077381057664752, 0.0319218672811985, -0.002983628772199154, -0.013059543445706367, 0.0013240468688309193, 0.014631952159106731, 0.015658294782042503, -0.026592783629894257, 0.006385031156241894, 0.03681672737002373, -0.0075462451204657555, 0.0173688642680645, -0.030553409829735756, -0.018513629212975502, 0.0163425225764513, 0.0282638780772686, -0.004733673296868801, 0.003411271143704653, 0.01890837587416172, -0.0016431340482085943, -0.02913232147693634, 0.004582353867590427, -0.0166846364736557, 0.0289481058716774, 0.01295427791774273, 0.03221134841442108, 
-0.0018207700923085213, 0.03352716937661171, -0.004546168725937605, -0.015947775915265083, 0.0057731736451387405, 0.017882034182548523, 0.04615906998515129, 0.024000611156225204, 0.012270049192011356, 0.0022056482266634703, 0.014224046841263771, 0.02589539624750614, -0.03623776510357857, 0.002508287550881505, -0.003914573695510626, 0.004197475500404835, -0.027869131416082382, 0.027658600360155106, -0.017158331349492073, 0.035764068365097046, 0.007283080369234085, -0.009289710782468319, 0.021329490467905998, 5.229882663115859e-05, 0.010776590555906296, 0.0049968380481004715, -0.02136896550655365, -0.005684355273842812, 0.013467448763549328, -0.011302920058369637, -0.025263801217079163, -0.006595562677830458, 0.0059014661237597466, -0.001958931563422084, 0.01468458492308855, -0.017645185813307762, -0.009658141061663628, 0.023526916280388832, 0.0009926239727064967, 0.0034935101866722107, 0.02180318720638752, -0.003914573695510626, 0.015039857476949692, 0.00853969156742096, -0.01162529643625021, -0.008717327378690243, 0.025698022916913033, -0.018487313762307167, 0.009302868507802486, 0.01867152936756611, -0.008059415966272354, 0.02513222023844719, -0.008381792344152927, -0.023132167756557465, -0.016513578593730927, 0.029027055948972702, -0.025790130719542503, -0.014605635777115822, -0.007342292461544275, -0.0037566747050732374, 0.01381614152342081, 0.0036514089442789555, -0.007750197779387236, -0.004253397695720196, -0.028184929862618446, -0.013763508759438992, -0.00611528754234314, 0.0028504016809165478, 0.004921177867799997, 0.030763942748308182, -0.003937600180506706, 0.0054211909882724285, 0.013349024578928947, 0.04002733528614044, -0.01261216402053833, -0.012914802879095078, 0.007098865229636431, 0.022250566631555557, 0.030158663168549538, 0.017487287521362305, 0.02605329640209675, -0.03505352512001991, -0.03136922046542168, 0.0034935101866722107, 0.0007265810272656381, -0.01432931236922741, -0.007519928738474846, -0.004855386912822723, 0.03155343607068062, 
-0.011388448067009449, -0.019789978861808777, -0.06310687214136124, -0.04297478124499321, 0.02160581387579441, 0.0361851342022419, -0.03265872597694397, 0.026540150865912437, 0.00360535504296422, 0.01514512300491333, -0.03331663832068443, 0.018579421564936638, 0.006743593141436577, -0.030737625434994698, -0.02111895941197872, 0.011243707500398159, 0.010664745233952999, -0.023553231731057167, -0.0010395002318546176, 0.008283105678856373, 0.0007783915498293936, 0.030369196087121964, 0.014671426266431808, -0.0015321114333346486, 0.026395410299301147, -0.010250261053442955, -0.005658038891851902, 0.015368812717497349, -0.03105342388153076, 0.039816804230213165, 0.01605304144322872, -0.0007413840503431857, 0.029053371399641037, -0.03823781758546829, 0.004579063970595598, -0.011750299483537674, -0.005401453468948603, -0.002475392073392868, -0.008125207386910915, -0.02238214947283268, 0.009625245817005634, -0.03665883094072342, -0.0022204513661563396, -0.017276756465435028, 0.03068499267101288, -0.01606619916856289, -0.019618920981884003, -0.004384980071336031, 0.017934666946530342, 0.025434859097003937, 0.006075812969356775, -0.01968471147119999, -0.049948640167713165, -0.011954251676797867, -0.027527017518877983, 0.00913839042186737, 0.026895422488451004, -0.0035593013744801283, 0.034842994064092636, -0.0119410939514637, -0.0017368863336741924, -0.0006361181731335819, 0.001032098662108183, -0.018013617023825645, -0.035579852759838104, 0.015803035348653793, 0.015039857476949692, -0.0031859364826232195, -0.009447609074413776, -0.00942787155508995, -0.004319189116358757, -0.016553053632378578, -0.007769934833049774, 0.019645238295197487, -0.0029786943923681974, 0.011059492826461792, -0.027079638093709946, -0.019513655453920364, -0.010954226367175579, -0.0031415275298058987, 0.02075052820146084, 0.013658243231475353, -0.024869054555892944, -0.011263445019721985, 0.005556062795221806, -0.0188162699341774, 0.0053422413766384125, -0.003378375666216016, -0.030474461615085602, 
-0.0139608820900321, -0.012335840612649918, -0.027579650282859802, -0.004809333011507988, 0.008204156532883644, 0.006496876012533903, -0.02097421884536743, 0.010329211130738258, 0.001341317081823945, -0.004009970463812351, -0.00219413498416543, 0.024000611156225204, 0.009243656881153584, -0.007079127710312605, -0.020000509917736053, -0.07468611747026443, 0.01563197746872902, 0.005033023189753294, 0.011026596650481224, 0.022658472880721092, -0.012454264797270298, 0.024776946753263474, -0.0072567639872431755, 0.0016085936222225428, -0.012474002316594124, -0.020026827231049538, -0.010710799135267735, -0.010171311907470226, -0.0039014152716845274, -0.01208583451807499, -0.025263801217079163, 0.02831651084125042, -0.003542853519320488, 0.010112100280821323, 0.0027730970177799463, 0.023750605061650276, -0.00021998916054144502, 0.010046308860182762, 0.0006073345430195332, -0.006292923353612423, 0.006934387143701315, -0.030790258198976517, 0.020184725522994995, 0.0024507204070687294, -0.0023158486001193523, -0.001128318253904581, -0.01630304753780365, -0.013125334866344929, -0.007421241607517004, -0.02044788934290409, -0.02310585230588913, 0.020961061120033264, 0.019592605531215668, -0.00894759688526392, 0.03202713280916214, -0.007769934833049774, -0.03010603040456772, 0.01261216402053833, 0.0026892132591456175, -0.020329466089606285, 0.005233685951679945, -0.0289481058716774, 0.010329211130738258, 0.026303302496671677, -0.0015773428604006767, -0.0048520974814891815, 0.0191978570073843, -0.028527043759822845, -0.03110605664551258, -0.021355807781219482, -0.019763661548495293, 0.02272426337003708, -0.0014177992707118392, 0.003759964369237423, -0.00017958928947336972, 0.006437663920223713, -0.0026069744490087032, 0.017776768654584885, -0.00542448041960597, -0.020526839420199394, 0.01717149093747139, -0.006325819063931704, 0.012210837565362453, 0.028184929862618446, 0.005059339571744204, -0.012868748977780342, -0.004477087873965502, 0.011388448067009449, 0.025198010727763176, 
0.013802983798086643, 0.01789519377052784, 0.004822491202503443, 0.005315924994647503, -0.020711055025458336, 0.002240188652649522, 0.005065918434411287, -0.00738176703453064, -0.03173765167593956, -0.003075736341997981, 0.01639515534043312, 0.0398431196808815, -0.01324375905096531, -0.006500165909528732, -0.015303021296858788, 0.014645109884440899, 0.005181053187698126, 0.0030083004385232925, 0.0044935354962944984, 0.012092413380742073, -0.004825781099498272, 0.03386928513646126, -0.010276577435433865, 0.011125283315777779, 0.01442142017185688, 0.022934794425964355, 0.020526839420199394, -0.01502669882029295, 0.00461853900924325, -0.019158383831381798, -0.02357954904437065, 0.005809358786791563, -0.03723779320716858, -0.024974320083856583, 0.008566007949411869, 0.016908325254917145, 0.027237536385655403, 0.00600344268605113, -0.006315950304269791, 0.013302970677614212, -0.0034441668540239334, 0.003907994367182255, 0.0030642228666692972, -0.01309243869036436, -0.03647461533546448, 0.02734280191361904, 0.010737115517258644, 0.01890837587416172, 0.009316027164459229, -0.0071120234206318855, 0.029053371399641037, 0.020724212750792503, 0.008743643760681152, -0.019553130492568016, 0.011256866157054901, -0.004477087873965502, -0.01442142017185688, -0.020632104948163033, -0.022947954013943672, -0.009756827726960182, -0.009493662975728512, 0.01986892707645893, -0.02522432804107666, 0.03342190384864807, -0.04250108450651169, 0.028763890266418457, 0.005921203643083572, 0.003990233410149813, 0.027369119226932526, 0.011638454161584377, -0.009026546031236649, 0.006457401439547539, 0.0015469144564121962, -0.03242187947034836, -0.003251727670431137, 0.016724109649658203, -0.023540074005723, -0.004332347307354212, -0.011908198706805706, -0.021776871755719185, -0.009191024117171764, -0.01078316941857338, 0.00986209325492382, -0.015500395558774471, 0.005806068889796734, 0.039869438856840134, 0.004457350354641676, 0.015131964348256588, 0.019461022689938545, -0.023184802383184433, 
-0.007664669305086136, 0.048790719360113144, 0.0029803391080349684, -0.006727145053446293, -0.00617449963465333, 0.0009490373777225614, -0.017053065821528435, -0.027421751990914345, -0.01084896083921194, -0.003944179508835077, 0.005092235282063484, 0.0007163011468946934, -0.0020740660838782787, 0.0032714649569243193, 0.013947724364697933, 0.0032467932906001806, 0.04031681641936302, -0.03597460314631462, -0.011526609770953655, 0.040106285363435745, -0.0002915370278060436, -0.012263470329344273, -0.0027813208289444447, -0.02102685160934925], index=0, object='embedding')]

In practice, these vectors are later stored in a dedicated vector database so that they can be reused.
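To make the idea of reusing stored vectors concrete, here is a minimal sketch of the similarity lookup a vector database performs. It keeps the vectors in an in-memory dict rather than a real database, and the three-dimensional vectors and document IDs are made up for illustration; real embeddings have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, store, k=1):
    """Return the k (doc_id, score) pairs most similar to the query vector."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in store.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy vectors standing in for real embeddings (hypothetical data)
store = {
    "doc-go": [0.9, 0.1, 0.0],
    "doc-python": [0.1, 0.9, 0.0],
    "doc-cooking": [0.0, 0.1, 0.9],
}

best = top_k([0.8, 0.2, 0.0], store, k=1)
print(best[0][0])
```

A real vector database adds persistence and approximate nearest-neighbor indexes on top of this basic comparison.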

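8、Fine-tuning (GPT model fine-tuning) ⭐️⭐️⭐️

8.1 What is fine-tuning?

Fine-tuning is a deep learning technique: you take a pre-trained model and continue training it so that it adapts to a specific task or dataset. The pre-trained model has already been trained on massive amounts of data and has learned rich feature representations; fine-tuning builds on that foundation to improve performance on the target task. Compared with training a model from scratch, its main advantages are:

- Saves time and resources: starting from a pre-trained model avoids the time and compute needed to train from zero, which matters most for large models and complex tasks.

- Data efficiency: fine-tuning usually needs only a relatively small amount of labeled data to achieve good results, which is especially valuable in domains where data is scarce.

- Transfer learning: the pre-trained model has learned from diverse data, and fine-tuning transfers that knowledge to the specific task, improving generalization.

- Better performance: fine-tuning fits the model more closely to the needs of the task, which helps improve output quality and reduce the error rate.

For example, with the OpenAI API, users can fine-tune a GPT model to get higher-quality results while also saving the cost of long prompts and reducing latency.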

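8.2 Fine-tuning vs. prompt engineering

Fine-tuning and prompt engineering are two different strategies for improving model performance. Prompt engineering means guiding the model toward the desired response with carefully designed prompts, without changing the model itself. It is usually the first step when trying to improve results, because its feedback loop is fast and it requires no training data.

In some cases, however, the model still falls short even with a carefully designed prompt. Fine-tuning is then the natural next step. By letting the model learn from a large number of examples, fine-tuning can achieve better results on a range of tasks than prompt engineering alone.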

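8.3 Models that support fine-tuning

OpenAI offers a set of models that support fine-tuning, including gpt-3.5-turbo-1106 (recommended), gpt-3.5-turbo-0613, babbage-002, davinci-002, and gpt-4-0613 (experimental access). These models can be trained further through fine-tuning to fit a user's specific needs.

Fine-tuning is not limited to new datasets: you can also continue fine-tuning a model that has already been fine-tuned. This is useful when you have collected more data and want to improve the model further without repeating the earlier training steps.

For most users, gpt-3.5-turbo is the first choice because of its good results and ease of use. OpenAI may keep updating and extending the list of models that support fine-tuning as the models improve and user needs change.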

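8.4 Fine-tuning in practice

Note: fine-tuning is not available on the Explore plan; it requires a paid plan, so the content below is for demonstration only.

8.4.1 Preparing the training data

To fine-tune a model, you need to prepare a dataset in the required format. The dataset usually consists of a series of inputs and their expected outputs. OpenAI's fine-tuning API supports two main data formats: the conversational (chat) format and simple prompt-completion pairs.

- Conversational format: typically used for the gpt-3.5-turbo models. Each example is organized as a conversation, in which every message has a role, content, and an optional name. An example of the data structure:

{
  "messages": [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What's the weather like today?"},
    {"role": "assistant", "content": "It's sunny today, a good day to go out."}
  ]
}

The examples must be stored in a JSON Lines (.jsonl) file, with one training sample per line. For example: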

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]}

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"}, {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}]}

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "How far is the Moon from Earth?"}, {"role": "assistant", "content": "Around 384,400 kilometers. Give or take a few, like that really matters."}]}

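- Simple prompt-completion pairs: this format applies to models such as babbage-002 and davinci-002. It is simpler and consists of a prompt paired with a completion. For example:

{
  "prompt": "What's the weather like today?",
  "completion": "It's sunny today, a good day to go out."
}

As before, each training sample takes up one line. For example: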

{"prompt": "<prompt text>", "completion": "<ideal generated text>"}

{"prompt": "<prompt text>", "completion": "<ideal generated text>"}

{"prompt": "<prompt text>", "completion": "<ideal generated text>"}
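Before uploading, it can help to check locally that every line of the file matches one of the two formats described above. The following is a minimal sketch of such a check, not OpenAI's own validator: the function names are hypothetical, and the rules are simplified (for instance, only the system, user, and assistant roles are accepted).

```python
import json

CHAT_ROLES = {"system", "user", "assistant"}


def validate_line(line):
    """Return 'chat' or 'completion' for one JSONL line, or raise ValueError."""
    record = json.loads(line)  # invalid JSON raises a ValueError subclass
    if not isinstance(record, dict):
        raise ValueError("each line must be a JSON object")
    if "messages" in record:
        for msg in record["messages"]:
            if msg.get("role") not in CHAT_ROLES:
                raise ValueError(f"unknown role: {msg.get('role')!r}")
            if not isinstance(msg.get("content"), str):
                raise ValueError("message content must be a string")
        return "chat"
    if isinstance(record.get("prompt"), str) and isinstance(record.get("completion"), str):
        return "completion"
    raise ValueError("line matches neither training format")


def validate_jsonl(text):
    """Validate every non-empty line; all lines must share one format."""
    kinds = {validate_line(line) for line in text.splitlines() if line.strip()}
    if len(kinds) > 1:
        raise ValueError("mixed formats in one file")
    return kinds.pop() if kinds else None


sample = '{"prompt": "<prompt text>", "completion": "<ideal generated text>"}'
print(validate_jsonl(sample))
```

Running a check like this first surfaces formatting mistakes before any upload or training cost is incurred.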

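8.4.2 Splitting training and test data

After creating the fine-tuning dataset, it is important to split it sensibly into a training set and a test set. The dataset is usually divided into two parts: most of it (typically 70% to 90%) is used to train the model, and the remaining 10% to 30% is used for testing. This split lets you check how the model performs on unseen data and evaluate it rigorously. (This is the same practice as in ordinary deep learning, although the evaluation metrics here may differ from the usual accuracy or F1 score.)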

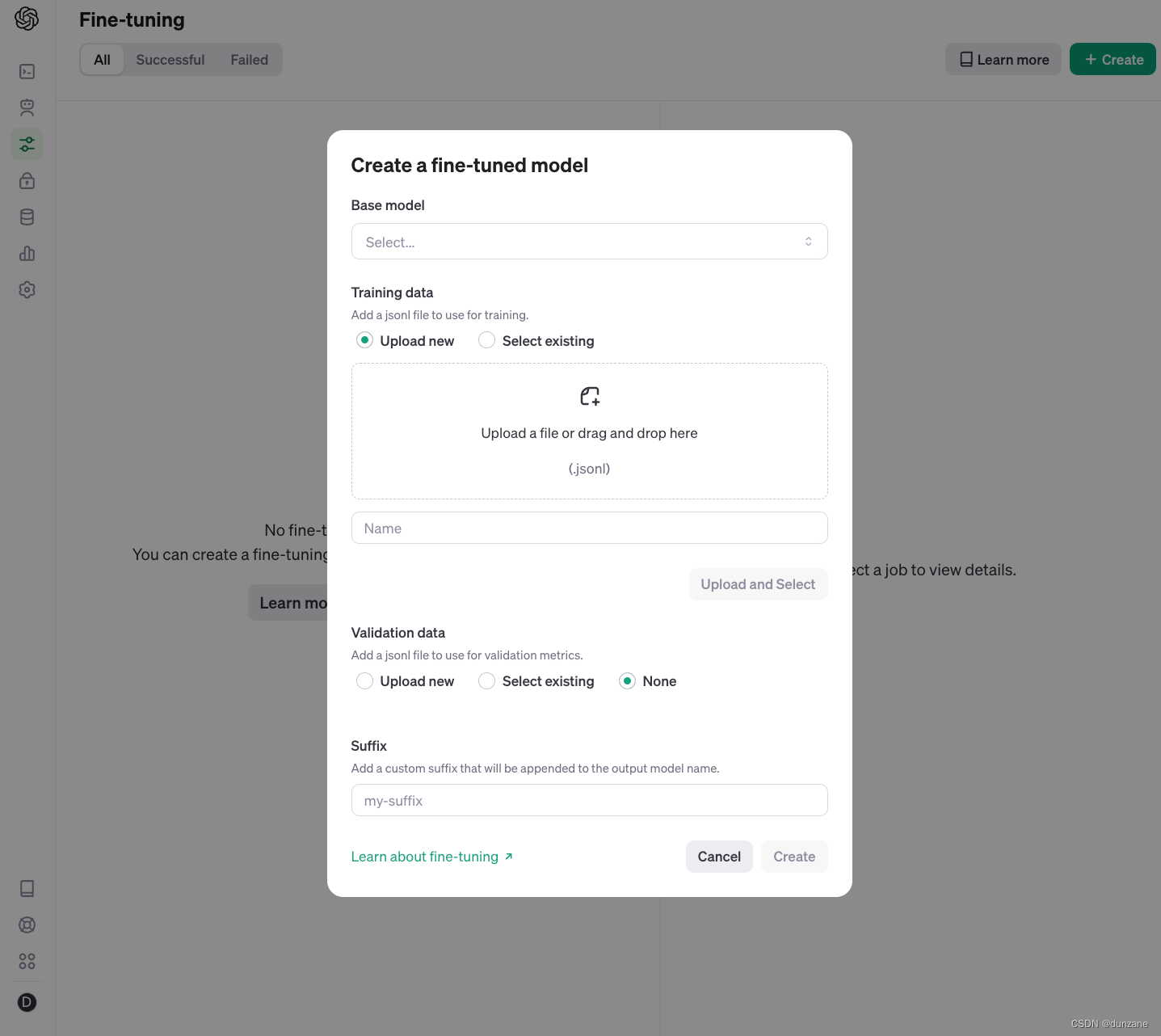
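The split described above can be sketched in a few lines. This is a minimal example assuming the samples fit in memory; the function name, the 80/20 default, and the fixed seed are illustrative choices, not requirements of the fine-tuning API.

```python
import random


def split_dataset(samples, train_ratio=0.8, seed=42):
    """Shuffle a copy of the samples and split it into (train, test) lists."""
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be between 0 and 1")
    shuffled = list(samples)
    # A seeded generator makes the split reproducible between runs
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


samples = [f"example-{i}" for i in range(10)]
train, test = split_dataset(samples, train_ratio=0.8)
print(len(train), len(test))
```

Shuffling before cutting avoids a biased test set when the source file is ordered, for example by topic or date.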

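8.4.3 Creating the fine-tuned model

- Choose a suitable model

Choosing the right pre-trained model is key to the task's success. The choice generally depends on factors such as the complexity of the task and the amount of data available.

- Upload the data

import os
from openai import OpenAI

# Read the key from the environment instead of hard-coding it in source
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])

# Upload the training data
with open("3_data.jsonl", "rb") as fh:
    f = client.files.create(file=fh, purpose="fine-tune")

print(f.id)

Output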

file-ejqf9r8fw2niCJhZC8hje3xC

- Create the training job

t = client.fine_tuning.jobs.create(
    training_file="file-ejqf9r8fw2niCJhZC8hje3xC",
    model="gpt-3.5-turbo"
)

print(t.id)

- Monitor the job

from openai import OpenAI

# The api_key argument is omitted here
client = OpenAI()

# List 10 fine-tuning jobs
client.fine_tuning.jobs.list(limit=10)

# Retrieve the details of a job by ID; if training succeeded, the fine_tuned_model field of the job holds the name of the fine-tuned model
client.fine_tuning.jobs.retrieve("ftjob-abc123")

# Cancel a job by ID
client.fine_tuning.jobs.cancel("ftjob-abc123")

# List the events (log) of a job by ID
client.fine_tuning.jobs.list_events(fine_tuning_job_id="ftjob-abc123", limit=10)

# Delete a fine-tuned model
client.models.delete("ft:gpt-3.5-turbo:acemeco:suffix:abc123")
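Training takes a while, so in practice the retrieve call above is usually wrapped in a polling loop. The sketch below assumes the job object exposes a `status` attribute that ends up as "succeeded", "failed", or "cancelled". The `wait_for_job` helper and its parameters are hypothetical; the retrieve function is passed in so the loop can be tried without network access.

```python
import time

# Statuses after which the job will not change any more (assumed set)
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_job(retrieve, job_id, poll_seconds=30.0, max_polls=120, sleep=time.sleep):
    """Poll retrieve(job_id) until the job reaches a terminal status.

    `retrieve` is any callable returning an object with a `.status`
    attribute, for example client.fine_tuning.jobs.retrieve.
    """
    for _ in range(max_polls):
        job = retrieve(job_id)
        if job.status in TERMINAL_STATUSES:
            return job
        sleep(poll_seconds)
    raise TimeoutError(f"job {job_id} still not finished after {max_polls} polls")
```

With a real client this could be called as `wait_for_job(client.fine_tuning.jobs.retrieve, t.id)`, after which `fine_tuned_model` can be read from the returned job if the status is "succeeded".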

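8.4.4 Adjusting parameters during fine-tuning

Hyperparameters are set before training starts and generally cannot be learned from the data. The most important ones are:

- Number of epochs (n_epochs): how many times the model passes over the whole dataset. Too many epochs can cause overfitting; too few can leave the model undertrained.

- Learning rate (learning_rate_multiplier): a scaling factor for the learning rate, which controls how much the model updates its weights at each iteration. A learning rate that is too high can make training unstable, while one that is too low can make learning slow.

- Batch size (batch_size): how many training examples are considered in each model update. Larger batches help stabilize training but may increase memory pressure.

Example:

from openai import OpenAI

client = OpenAI()

client.fine_tuning.jobs.create(
    training_file="file-abc123",
    model="gpt-3.5-turbo",
    hyperparameters={
        "n_epochs": 2
    }
)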
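To see how n_epochs and batch_size interact, it helps to work out how many weight updates a job performs. The sketch below uses generic mini-batch arithmetic; it is an assumption that OpenAI's service counts steps the same way, and the function name is hypothetical.

```python
import math


def training_steps(n_examples, n_epochs, batch_size):
    """Number of weight updates: batches per epoch times number of epochs."""
    steps_per_epoch = math.ceil(n_examples / batch_size)
    return steps_per_epoch * n_epochs


# 100 examples with batch size 8 give 13 batches per epoch (the last one partial)
print(training_steps(n_examples=100, n_epochs=2, batch_size=8))  # 13 * 2 = 26
```

Doubling the batch size roughly halves the number of updates, which is one reason batch size and learning rate are usually tuned together.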

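8.4.5 Evaluating and using the fine-tuned model

Once fine-tuning is complete, it is essential to evaluate how the fine-tuned model performs. Some standard evaluation methods:

- Sample comparison: run the test samples prepared earlier through both the base model and the fine-tuned model, then compare the outputs to see how much the fine-tuned model improves.

- Statistical metrics: track metrics such as loss and accuracy during fine-tuning. Loss should decrease over the course of training, while accuracy should increase.

- A/B testing: design an experiment that splits traffic between the base model and the fine-tuned model, and observe how they differ in a real environment.

- User feedback: collect feedback from people who use the model. Especially in natural language processing tasks, user satisfaction is a key measure of model performance.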
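The sample-comparison method can be sketched as a small harness that runs the same test set through both models. This is a minimal illustration: exact string match is only one possible metric, the helper names are hypothetical, and the two predictors below are offline stand-ins that would be replaced by functions calling the base and fine-tuned models.

```python
def exact_match_rate(predict, test_set):
    """Fraction of (question, expected) pairs that the predictor answers exactly."""
    if not test_set:
        raise ValueError("test_set must not be empty")
    hits = sum(
        1 for question, expected in test_set
        if predict(question).strip() == expected.strip()
    )
    return hits / len(test_set)


def compare_models(base_predict, tuned_predict, test_set):
    """Score both predictors on the same held-out samples."""
    return {
        "base": exact_match_rate(base_predict, test_set),
        "fine_tuned": exact_match_rate(tuned_predict, test_set),
    }


# Stand-in predictors so the sketch runs offline (made-up answers)
test_set = [("2+2", "4"), ("capital of France", "Paris")]
base = lambda q: {"2+2": "4"}.get(q, "unknown")
tuned = lambda q: {"2+2": "4", "capital of France": "Paris"}.get(q, "unknown")

scores = compare_models(base, tuned, test_set)
print(scores)
```

For free-form answers, exact match is often too strict; a similarity score or human review can be swapped in behind the same interface.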

Using a fine-tuned model is straightforward: pass the name of your fine-tuned model as the model parameter of the API call.
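As a sketch of what such a call might look like with the Python SDK used earlier: the request is built in a separate function so it can be inspected without network access. The model name is the placeholder from the job-monitoring example above, and the helper names `build_request` and `ask` are hypothetical.

```python
def build_request(fine_tuned_model, question):
    """Keyword arguments for client.chat.completions.create()."""
    return {
        "model": fine_tuned_model,
        "messages": [{"role": "user", "content": question}],
    }


def ask(question, fine_tuned_model):
    """Send the question to the fine-tuned model and return its reply text."""
    # Imported lazily so build_request works even without the SDK installed
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**build_request(fine_tuned_model, question))
    return response.choices[0].message.content


# Placeholder name; use the fine_tuned_model value reported by your own job
request = build_request("ft:gpt-3.5-turbo:acemeco:suffix:abc123", "What's the capital of France?")
print(request["model"])
```

Apart from the model name, the call is the same as one made against a base model.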

The official website also provides a web UI for fine-tuning.

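9、OpenAI Assistants development tutorial

Note: using the Assistants API in practice requires payment.

9.1 What the Assistants API is and what it does

The Assistants API lets developers build AI assistants into their own applications. By defining custom instructions and choosing a model, an assistant can draw on models, tools, and knowledge to respond to user queries. The Assistants API currently supports three types of tools: Code Interpreter, Retrieval, and Function calling.

9.2 Use cases for the Assistants API

The Assistants API suits any scenario that needs interactive AI support. For example:

- Customer support: automatically answer common questions and reduce the workload of human agents.

- Online teaching: answer students' questions and provide tailored learning support.

- Data analysis: analyze data files uploaded by users and generate reports and visualizations.

- Personalized recommendations: offer personalized suggestions and services based on a user's interaction history.

9.3 Assistants API development workflow

9.3.1 Creating an Assistant

curl "https://api.openai.com/v1/assistants" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer YOUR_OPENAI_API_KEY" \
  -H "OpenAI-Beta: assistants=v1" \
  -d '{
    "instructions": "You are a personal math tutor. Write and run code to answer math questions.",
    "name": "Math Tutor",
    "tools": [{"type": "code_interpreter"}],
    "model": "gpt-4"
  }'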

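API parameters:

- instructions - the system instructions that tell the assistant what to do.

- name - the assistant's name.

- tools - the tools the assistant may use. Each assistant can have at most 128 tools. The tool type can currently be code_interpreter, retrieval, or function.

- model - the model the assistant uses.

After the Assistant is created successfully, you receive an Assistant ID.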
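For readers who cannot use curl, the same request can be prepared from Python. The sketch below only builds the request with the standard library and does not send it. The `assistants_request` helper is hypothetical, and the URL, headers, and payload simply mirror the curl example above.

```python
import json
import urllib.request

BASE_URL = "https://api.openai.com/v1"


def assistants_request(path, payload, api_key):
    """Build (but do not send) a POST request for an Assistants API endpoint."""
    return urllib.request.Request(
        BASE_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
            "OpenAI-Beta": "assistants=v1",
        },
    )


req = assistants_request(
    "/assistants",
    {
        "instructions": "You are a personal math tutor. Write and run code to answer math questions.",
        "name": "Math Tutor",
        "tools": [{"type": "code_interpreter"}],
        "model": "gpt-4",
    },
    api_key="YOUR_OPENAI_API_KEY",  # placeholder, as in the curl example
)
print(req.get_method(), req.full_url)
```

Sending it with `urllib.request.urlopen(req)` and reading the `id` field of the JSON response would give the Assistant ID mentioned above. The same helper could be reused for the Thread, message, and Run endpoints by changing the path and payload.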

9.3.2 Creating a Thread

A Thread represents a conversation. We recommend creating one Thread per user when the user starts a conversation. You can create a Thread as follows:

curl https://api.openai.com/v1/threads \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -H "OpenAI-Beta: assistants=v1" \
  -d ''

9.3.3 Adding messages to a Thread

You can add messages to a specific Thread. A message contains text and can optionally include files uploaded by the user. For example:

curl https://api.openai.com/v1/threads/{thread_id}/messages \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer YOUR_OPENAI_API_KEY" \
  -H "OpenAI-Beta: assistants=v1" \
  -d '{
    "role": "user",
    "content": "I need to solve the equation `3x + 11 = 14`. Can you help me?"
  }'

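9.3.4 Running the Assistant to produce a response

To have the assistant respond to the user's message, you need to create a Run. The Run makes the assistant read the Thread and decide whether to call tools (if any are enabled) or simply use the model to answer the query as well as it can.

curl https://api.openai.com/v1/threads/{thread_id}/runs \
  -H "Authorization: Bearer YOUR_OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -H "OpenAI-Beta: assistants=v1" \
  -d '{
    "assistant_id": "assistant_id",
    "instructions": "Please address the user as Jane Doe. The user has a premium account."
  }'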

9.3.5 Retrieving the Assistant's response

Once the Run has completed, you can read the assistant's response from the messages added to the Thread. A conversation with an assistant works like a chat between two people: when the assistant finishes handling the user's question, it appends its reply to the Thread, so querying the latest messages in the Thread is enough to get the response. The curl request below shows how:

curl https://api.openai.com/v1/threads/thread_abc123/messages \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -H "OpenAI-Beta: assistants=v1"
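The response to this request is a JSON list of messages, and the assistant's reply has to be picked out of it. The sketch below assumes the shape returned by the v1 beta API: a `data` array ordered newest first, with each message's `content` being a list of parts such as `{"type": "text", "text": {"value": ...}}`. The sample payload and the helper name are made up for illustration, not captured output.

```python
def latest_assistant_text(messages_response):
    """Return the text of the newest assistant message, or None if there is none."""
    for message in messages_response.get("data", []):
        if message.get("role") != "assistant":
            continue
        # A message may hold several content parts; keep only the text ones
        parts = [
            part["text"]["value"]
            for part in message.get("content", [])
            if part.get("type") == "text"
        ]
        return "\n".join(parts)
    return None


# Hypothetical response, trimmed to the fields this sketch reads
sample_response = {
    "object": "list",
    "data": [
        {"id": "msg_2", "role": "assistant",
         "content": [{"type": "text", "text": {"value": "x = 1", "annotations": []}}]},
        {"id": "msg_1", "role": "user",
         "content": [{"type": "text", "text": {"value": "I need to solve 3x + 11 = 14.", "annotations": []}}]},
    ],
}

print(latest_assistant_text(sample_response))
```

If the list were requested in ascending order instead, the loop would need to walk the array in reverse.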

10、OpenAI development example

import os
from openai import OpenAI

# Read the key from the environment instead of hard-coding it in source
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])

r = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a Go expert"},
        {"role": "user", "content": "How do I use RPC in Go?"},
    ],
)

print(r.choices[0].message.content)

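Chat roles:

- system: the system role. A system message usually describes what role the assistant should play.

- assistant: the AI. Every message returned by the model has the assistant role.

- user: the user, that is, the messages we send.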
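These three roles come together when a conversation spans several turns, since the full history is sent with each request. Below is a minimal sketch of a history container; the `Conversation` class is hypothetical and not part of the OpenAI SDK, and the assistant reply is a made-up string.

```python
class Conversation:
    """Keeps the ordered message list expected by the chat completions API."""

    def __init__(self, system_prompt):
        # The system message goes first and sets the assistant's role
        self.messages = [{"role": "system", "content": system_prompt}]

    def add_user(self, text):
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, text):
        # Store the model's reply so later turns can refer back to it
        self.messages.append({"role": "assistant", "content": text})


chat = Conversation("You are a Go expert")
chat.add_user("How do I use RPC in Go?")
chat.add_assistant("You can start with the net/rpc package.")  # made-up reply
chat.add_user("Can you show a client example?")
print([m["role"] for m in chat.messages])
```

Passing `chat.messages` as the `messages` argument on every call is what lets the model see the earlier turns.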
