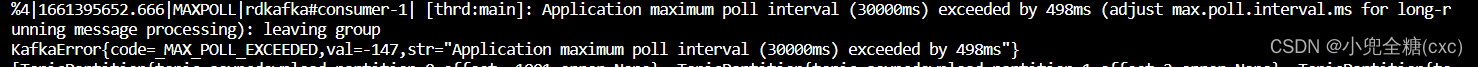

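The problem is shown above: when the consumer pulls data and then takes too long to process it, the consumer ends up leaving the group (leave group).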

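The six parameters below form three pairs. Informally:

1 and 2 work together to tell the Kafka cluster how fast this consumer can process: it can get through at least max.poll.records records within each max.poll.interval.ms.

3 and 4 work together to tell the Kafka cluster how often to attempt a fetch when the consumer has nothing to do, even if no data is available at that moment (so the consumer must handle empty results).

5 and 6 work together to tell the Kafka cluster under what conditions it may consider the whole consumer dead and trigger a rebalance.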

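| Parameter | Meaning | Default | Notes |
|---|---|---|---|
| max.poll.interval.ms | Maximum interval between polls | 300s | The records returned by each poll must be fully processed within this time |
| max.poll.records | Maximum records per poll | 500 | Always set this deliberately, based on the business workload |
| fetch.max.wait.ms | Maximum wait time per fetch | 500ms | The fetch returns as soon as either the wait time or the size threshold is met, whichever comes first; if the time elapses with no messages, an empty response is returned |
| fetch.min.bytes | Minimum bytes per fetch | 1 byte | The fetch returns as soon as either the wait time or the size threshold is met, whichever comes first |
| heartbeat.interval.ms | Interval between heartbeats sent to the coordinator | 3s | Recommended to be at most 1/3 of session.timeout.ms |
| session.timeout.ms | Heartbeat timeout | 30s (varies by client version) | Too large a value makes detection of a consumer that is really dead too slow |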
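The rules of thumb in the table can be checked mechanically before deploying. The following is a minimal sketch, not part of any Kafka client: the function name `check_consumer_timing` and the `per_record_ms` estimate (how long one record takes to process, which you would have to measure yourself) are assumptions made for illustration.

```python
def check_consumer_timing(max_poll_interval_ms, max_poll_records,
                          per_record_ms, heartbeat_interval_ms,
                          session_timeout_ms):
    """Return a list of warnings for a set of consumer timing settings.

    per_record_ms is a hypothetical, self-measured estimate of how long
    the application needs to process a single record.
    """
    warnings = []

    # Worst case: a full batch has to be processed before the next poll().
    worst_case_ms = max_poll_records * per_record_ms
    if worst_case_ms >= max_poll_interval_ms:
        warnings.append(
            f"a full batch may take {worst_case_ms} ms, which is not below "
            f"max.poll.interval.ms ({max_poll_interval_ms} ms)"
        )

    # Rule of thumb from the table: heartbeat <= 1/3 of the session timeout.
    if heartbeat_interval_ms * 3 > session_timeout_ms:
        warnings.append(
            "heartbeat.interval.ms is more than 1/3 of session.timeout.ms"
        )

    return warnings


if __name__ == "__main__":
    # 500 records at an assumed 100 ms each against a 30 s poll interval.
    for line in check_consumer_timing(30000, 500, 100, 3000, 30000):
        print("WARNING:", line)
```

If the first warning fires, the usual options are to lower the batch size, raise the poll interval, or speed up per-record processing.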

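Meaning of the auto.offset.reset values

earliest

If a partition has a committed offset, consumption starts from that offset; if there is no committed offset, consumption starts from the beginning.

latest

If a partition has a committed offset, consumption starts from that offset; if there is no committed offset, only data newly produced to that partition is consumed.

none

If every partition of the topic has a committed offset, consumption starts after those offsets; if even one partition has no committed offset, an exception is raised.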
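The three values can be summarised as a small decision function. This is a simplified per-partition model written only to illustrate the rules above, not how any client implements them; the name `resolve_start_offset` and its arguments are hypothetical.

```python
def resolve_start_offset(policy, committed, earliest, latest):
    """Pick the offset a consumer would start from on one partition.

    committed: the committed offset for the group, or None if there is none.
    earliest / latest: the first available offset and the end of the log.
    """
    # A committed offset always wins, whatever the policy is.
    if committed is not None:
        return committed

    if policy == "earliest":
        return earliest  # replay the partition from the beginning
    if policy == "latest":
        return latest    # only see records produced from now on
    if policy == "none":
        raise LookupError("no committed offset for this partition")
    raise ValueError(f"unknown auto.offset.reset value: {policy!r}")


if __name__ == "__main__":
    print(resolve_start_offset("earliest", None, 0, 42))
    print(resolve_start_offset("latest", None, 0, 42))
    print(resolve_start_offset("latest", 17, 0, 42))
```

Note that the policy only matters for a group that has no committed offset on the partition, which is why changing it on an existing group often appears to have no effect.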

```python
import traceback
from confluent_kafka import Consumer
from models.batchdownload import BatchDownload
from e_document import settings as config
from functools import wraps
from datetime import datetime
from multiprocessing import Pool
import time
import json
import os
from asyncdownload.asyncdownload import makePackage
from utils.logger import elogger


class consumer:

    def __init__(self, voucher_type_dict):
        conf = {
            # bootstrap.servers must point at the Kafka brokers
            # (this project stores the address under config.Zookeeper)
            'bootstrap.servers': f'{config.Zookeeper["Host"]}:{config.Zookeeper["Port"]}',
            'group.id': config.TopicGroup,
            'enable.auto.commit': False,
            'heartbeat.interval.ms': 3000,
            'session.timeout.ms': 30000,
            'max.poll.interval.ms': 30000,
            # 'auto.offset.reset': 'latest',
            'auto.offset.reset': 'earliest',
            'compression.type': 'gzip',  # enable compression
            # message.max.bytes works around messages larger than 1 MB failing to send
            'message.max.bytes': 10485760,
        }
        self.cons = Consumer(conf)
        self.cons.subscribe([config.TopicName])
        self.voucher_type_dict = voucher_type_dict

    # consume messages from kafka and generate xml files
    def consumerMessage(self):
        while True:
            msg = self.cons.poll(1)
            if msg is None:
                # nothing arrived within the 1 s timeout
                continue
            if msg.error() is not None:
                print(msg.error())
                continue
            try:
                value = json.loads(msg.value())
                # The offset is committed before processing, so a failure in
                # makePackage does not cause the message to be redelivered.
                print(self.cons.commit(message=msg, asynchronous=False))
                makePackage(value, self.voucher_type_dict)
            except Exception:
                # log the traceback of the exception that was actually raised
                elogger(log_name='asyncdownload').get_logger().warning(traceback.format_exc())

    def close(self):
        try:
            self.cons.close()
        except Exception:
            pass
```
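The loop above commits the offset before calling `makePackage`, so a message whose processing fails is never retried. Where reprocessing a message is acceptable, one alternative is to process first and commit afterwards. The sketch below assumes exactly that; `consume_at_least_once`, `handle`, and `max_messages` are hypothetical names, and the consumer object only needs the `poll()` and `commit(message=..., asynchronous=False)` calls already used above.

```python
import json


def consume_at_least_once(consumer, handle, poll_timeout=1.0, max_messages=None):
    """Poll, process, then commit each message synchronously.

    consumer: an object with poll() and commit(), such as the
              confluent_kafka Consumer created above.
    handle:   hypothetical callback that processes one decoded message.
    max_messages: stop after this many handled messages (None = run forever).
    """
    handled = 0
    while max_messages is None or handled < max_messages:
        msg = consumer.poll(poll_timeout)
        if msg is None:
            continue  # nothing arrived within the timeout
        if msg.error() is not None:
            print(msg.error())
            continue

        # If handle() raises, the commit below is skipped, so the message
        # can be delivered again after a restart or rebalance.
        handle(json.loads(msg.value()))
        consumer.commit(message=msg, asynchronous=False)
        handled += 1
    return handled
```

With this ordering the handler still has to return within max.poll.interval.ms, otherwise the consumer leaves the group exactly as described at the top of this post, and the handler should tolerate seeing the same message twice.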

https://github.com/confluentinc/confluent-kafka-python/issues/552
