ST-GCN: Installation and Test Run

Reference: "Running the ST-GCN Source Code: Complete Guide (Including Building and Installing OpenPose) and Common Problems"

Environment setup:

1. GitHub: https://github.com/fendou201398/st-gcn

2. Create a conda environment

conda create -n stgcn python=3.5.6

activate stgcn

3. cd into the directory that holds the downloaded wheel files, then install PyTorch and torchvision

pip install torch-1.2.0-cp35-cp35m-win_amd64.whl -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install torchvision-0.4.0-cp35-cp35m-win_amd64.whl -i https://pypi.tuna.tsinghua.edu.cn/simple

4. Install the remaining dependencies

Change into the st-gcn source directory:

conda install ffmpeg

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

5. Build OpenPose with CMake

Reference: https://blog.csdn.net/xuelanlingying/article/details/102793110
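Before moving on to the demos, it can help to confirm that the packages from steps 3 and 4 are visible inside the stgcn environment. The following is a minimal sketch, not part of the st-gcn repository; the helper names `installed_version` and `environment_report` are made up for illustration.

```python
import importlib
import sys


def installed_version(module_name):
    """Return the module's __version__ string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown")


def environment_report(module_names=("torch", "torchvision")):
    """Map 'python' and each module name to the version found (None = missing)."""
    report = {"python": "{}.{}.{}".format(*sys.version_info[:3])}
    for name in module_names:
        report[name] = installed_version(name)
    return report
```

Running `print(environment_report())` inside the activated environment should show Python 3.5.6 and versions matching the wheels installed above; a `None` entry means that package did not install into this environment.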

Test run:

python main.py demo_old --video D:/workSpace/deepLeanning/st-gcn-master/resource/media/clean_and_jerk.mp4 --openpose D:/workSpace/deepLeanning/openpose-master/build/x64/Release

python main.py demo_offline --video D:/workSpace/deepLeanning/st-gcn-master/resource/media/clean_and_jerk.mp4 --openpose D:/workSpace/deepLeanning/openpose-master
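The commands in this post mix forward slashes and backslashes in their paths. As a small sketch (the helper names are hypothetical and not part of st-gcn), the argument list can be built in Python with every local path normalised to forward slashes, using only the `--video` and `--openpose` flags shown above:

```python
from pathlib import PureWindowsPath


def to_forward_slashes(path):
    """Normalise a Windows path to forward slashes; leave URLs such as rtsp:// alone."""
    if "://" in path:
        return path
    return PureWindowsPath(path).as_posix()


def demo_command(mode, video, openpose_root):
    """Build the argument list for one of the main.py demo modes."""
    return [
        "python", "main.py", mode,
        "--video", to_forward_slashes(video),
        "--openpose", to_forward_slashes(openpose_root),
    ]
```

The resulting list can be handed to `subprocess.run(...)` from the st-gcn source directory, which also avoids quoting problems when a path contains spaces.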

Error 1:

File "main.py", line 33, in <module>

p.start()

File "D:\workSpace\deepLeanning\st-gcn-master\processor\demo_offline.py", line 31, in start

video, data_numpy = self.pose_estimation()

TypeError: 'NoneType' object is not iterable

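Fix:

The import of the OpenPose Python API fails, so pose_estimation() returns None and unpacking its result raises the TypeError. Change the start of pose_estimation in demo_offline.py so that the compiled pyopenpose module and the OpenPose DLLs can be found: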

    def pose_estimation(self):
        # load openpose python api
        if self.arg.openpose is not None:
            # directory that holds the compiled pyopenpose module
            sys.path.append('{}/build/python/openpose/Release'.format(self.arg.openpose))
            # make the OpenPose DLLs discoverable (adjust these paths to your own build)
            os.environ['PATH'] = os.environ['PATH'] + ';' + 'D:/workSpace/deepLeanning/openpose-master/build/x64/Release/;' + 'D:/workSpace/deepLeanning/openpose-master/build/bin;'
            # sys.path.append('{}/python'.format(self.arg.openpose))
            # sys.path.append('{}/build/python'.format(self.arg.openpose))
        try:
            # from openpose import pyopenpose as op
            import pyopenpose as op
        except ImportError:
            print('Can not find Openpose Python API.')
            return
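The fix above hardcodes the OpenPose build directories. A possible alternative, sketched here under the assumption that your build tree has the same `build/python/openpose/Release`, `build/x64/Release` and `build/bin` layout, is to derive all three from the `--openpose` root. The helper names are hypothetical.

```python
def openpose_search_paths(openpose_root):
    """Return (python_module_dir, dll_dirs) for a Windows OpenPose build tree.

    The sub-directories mirror the ones used in the fix above; they are an
    assumption about the build layout, so adjust them to your own build.
    """
    root = openpose_root.replace("\\", "/").rstrip("/")
    python_dir = root + "/build/python/openpose/Release"
    dll_dirs = [root + "/build/x64/Release", root + "/build/bin"]
    return python_dir, dll_dirs


def extend_path(environ, dll_dirs, sep=";"):
    """Append dll_dirs to environ['PATH'] in place, skipping entries already present.

    sep defaults to ';' because this guide targets Windows.
    """
    entries = [p for p in environ.get("PATH", "").split(sep) if p]
    for directory in dll_dirs:
        if directory not in entries:
            entries.append(directory)
    environ["PATH"] = sep.join(entries)
    return environ["PATH"]
```

Inside pose_estimation this would be used as `python_dir, dll_dirs = openpose_search_paths(self.arg.openpose)`, followed by `sys.path.append(python_dir)` and `extend_path(os.environ, dll_dirs)`.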

Error 2:

Fix: rebuild OpenPose against Python 3.5.6 so that it matches the Python version of this project's environment.

Run: python main.py demo_offline --video D:/workSpace/deepLeanning/st-gcn-master/resource/media/clean_and_jerk.mp4 --openpose D:\workSpace\deepLeanning\st-gcn-master\openpose-master
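One way to spot a Python version mismatch before importing is to look at the name of the compiled module. The sketch below assumes the build produces a file named along the lines of `pyopenpose.cp35-win_amd64.pyd`; that naming is an assumption about the build output, and the helper names are hypothetical.

```python
import re
import sys


def build_python_version(extension_filename):
    """Extract (major, minor) from a CPython tag such as 'cp35' in a file name.

    Returns None when the name carries no recognisable tag.
    """
    match = re.search(r"\.cp(\d)(\d+)-", extension_filename)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def matches_interpreter(extension_filename, version_info=sys.version_info):
    """True if the module was built for the given interpreter, None if unknown."""
    built_for = build_python_version(extension_filename)
    if built_for is None:
        return None
    return built_for == (version_info[0], version_info[1])
```

If `matches_interpreter(...)` returns False for the file in `build/python/openpose/Release`, OpenPose was built against a different Python and needs to be rebuilt as described above.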

Error 3:

opWrapper.emplaceAndPop([datum])

TypeError: emplaceAndPop(): incompatible function arguments. The following argument types are supported:

- (self: pyopenpose.WrapperPython, arg0: std::vector<std::shared_ptr<op::Datum>,std::allocator<std::shared_ptr<op::Datum> > >) -> bool

Invoked with: <pyopenpose.WrapperPython object at 0x00000263AFEEA8F0>, [<pyopenpose.Datum object at 0x00000263AFF05F10>]

Fix:

This build of pyopenpose no longer accepts a plain Python list. Change opWrapper.emplaceAndPop([datum]) to:

opWrapper.emplaceAndPop(op.VectorDatum([datum]))
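If the same script has to run against both older and newer OpenPose builds, the wrapping can be made conditional. This is a sketch only: `as_datum_argument` is a hypothetical helper, and the two `SimpleNamespace` objects merely stand in for the real `pyopenpose` module so the idea can be shown without OpenPose installed.

```python
from types import SimpleNamespace


def as_datum_argument(op_module, datums):
    """Wrap datums in op_module.VectorDatum when the binding provides it."""
    vector_type = getattr(op_module, "VectorDatum", None)
    if vector_type is None:
        return list(datums)  # bindings without VectorDatum take a plain list
    return vector_type(datums)


# Stand-ins for the two binding generations (hypothetical, for illustration only).
old_binding = SimpleNamespace()
new_binding = SimpleNamespace(VectorDatum=tuple)
```

With the real module the call would read `opWrapper.emplaceAndPop(as_datum_argument(op, [datum]))`.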

Error 4:

Starting OpenPose Python Wrapper…

Auto-detecting all available GPUs… Detected 1 GPU(s), using 1 of them starting at GPU 0.

F0525 13:01:52.934809 102492 syncedmem.cpp:71] Check failed: error == cudaSuccess (2 vs. 0) out of memory

*** Check failure stack trace: ***

Fix:

The GPU runs out of memory, so lower the network input resolution. Change params = dict(model_folder='./models', model_pose='COCO') to:

params = dict(model_folder='./models', model_pose='COCO', net_resolution='320x176')
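OpenPose expects both net_resolution dimensions to be multiples of 16, which 320x176 satisfies. As a rough sketch (the helper names are hypothetical), a resolution of a chosen height that roughly preserves the video's aspect ratio can be computed like this:

```python
def round_to_multiple(value, base=16):
    """Round value to the nearest multiple of base, never below base itself."""
    return max(base, int(round(value / base)) * base)


def net_resolution_for(frame_width, frame_height, net_height=176):
    """Return a 'WIDTHxHEIGHT' string whose sides are multiples of 16."""
    net_height = round_to_multiple(net_height)
    net_width = round_to_multiple(net_height * frame_width / frame_height)
    return "{}x{}".format(net_width, net_height)
```

For a 1920x1080 video this gives '320x176', the value used in the fix above. A smaller net_height saves more GPU memory at the cost of pose accuracy.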

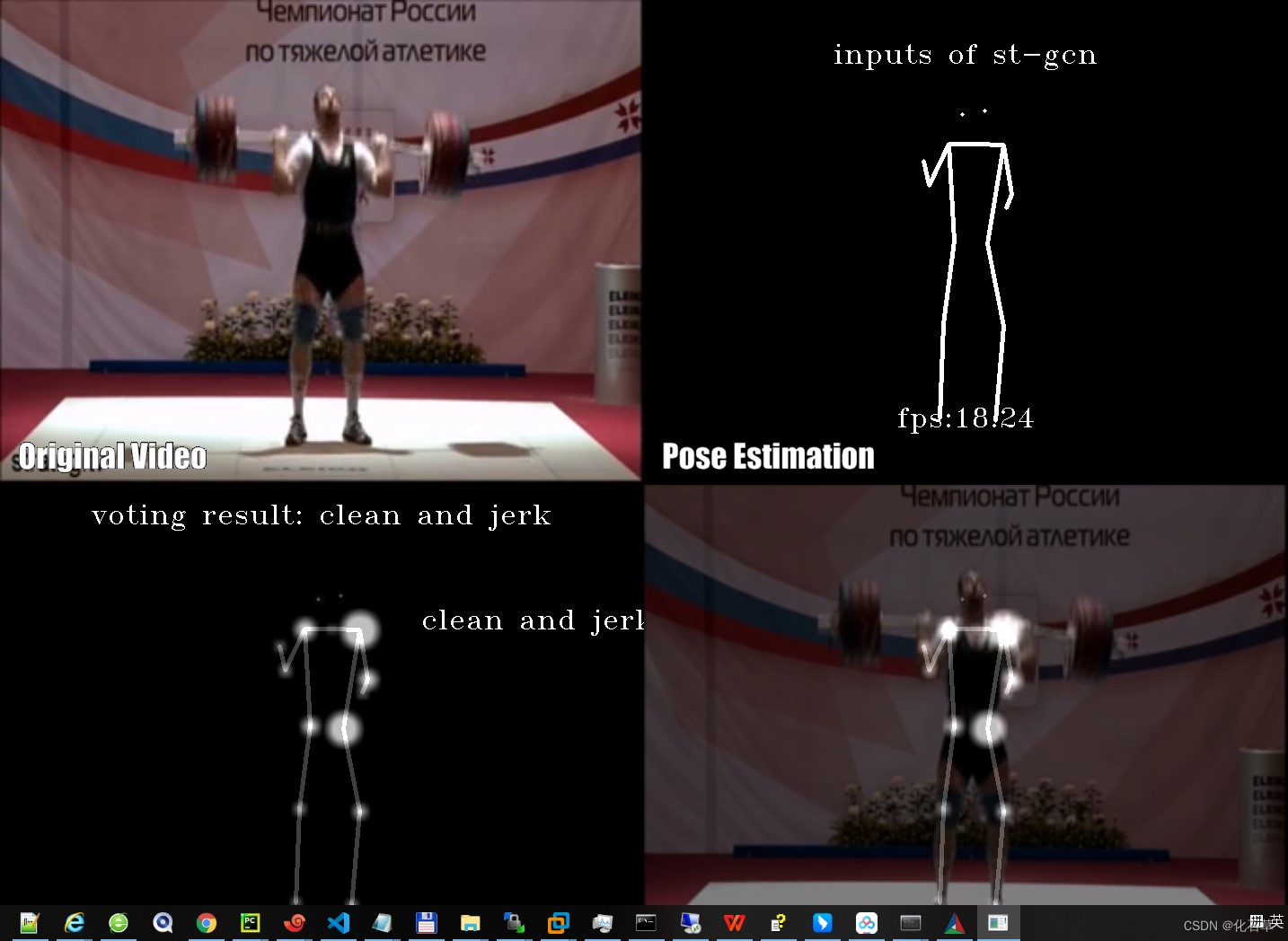

Test screenshot:

Adding Chinese label support:

1. Translate the labels into a new label file, then point the code at it:

Change label_name_path = './resource/kinetics_skeleton/label_name.txt'

to label_name_path = './resource/kinetics_skeleton/label_name_ch.txt'
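On Windows, opening a text file without an explicit encoding uses the system code page, so a UTF-8 label file may come out garbled. The sketch below assumes the translated file is saved as UTF-8 (with or without a BOM); `load_label_names` is a hypothetical helper and the two labels in the demonstration are made up.

```python
import os
import tempfile


def load_label_names(path, encoding="utf-8-sig"):
    """Read one label per line; 'utf-8-sig' also tolerates a leading BOM.

    Blank lines are kept so that line numbers stay aligned with class indices.
    """
    with open(path, encoding=encoding) as f:
        return [line.rstrip() for line in f]


# Round-trip demonstration with a throwaway file.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "label_name_ch.txt")
    with open(demo_path, "w", encoding="utf-8") as f:
        f.write("举重\n跳舞\n")
    demo_labels = load_label_names(demo_path)
```

The translated file must keep exactly one label per line in the original order, since the predicted class index is used to look the name up.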

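2. Draw the label text with PIL instead of OpenCV (the built-in fonts of cv2.putText cannot render Chinese characters)

Change:

    cv2.putText(text, body_label, pos_track[m],
                cv2.FONT_HERSHEY_TRIPLEX, 0.5 * scale_factor,
                (255, 255, 255))

to: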

    # requires at the top of the file: import numpy as np; from PIL import Image, ImageDraw, ImageFont
    img_pil = Image.fromarray(cv2.cvtColor(text, cv2.COLOR_BGR2RGB))
    font = ImageFont.truetype(font='C:/Windows/Fonts/simsun.ttc', size=24)
    draw = ImageDraw.Draw(img_pil)
    # PIL colors are RGB: (255, 0, 0) is red, so (255, 255, 0) is yellow
    draw.text(pos_track[m], body_label, font=font, fill=(255, 255, 0))
    text = cv2.cvtColor(np.asarray(img_pil), cv2.COLOR_RGB2BGR)

3. Testing with an RTSP video stream:

python main.py demo --video rtsp://admin:HuaWei123@192.168.1.121/LiveMedia/ch1/Media1 --openpose D:\workSpace\deepLeanning\st-gcn-master\openpose-master
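If the camera password contains characters such as '@' or ':', pasting it straight into the URL makes the address ambiguous. Under the generic URI syntax, such characters in the credentials are percent-encoded. The following is a sketch with a hypothetical helper `rtsp_url`; whether a particular camera or client accepts encoded credentials is worth verifying.

```python
from urllib.parse import quote


def rtsp_url(user, password, host, path, port=None):
    """Assemble an rtsp:// URL with percent-encoded credentials."""
    credentials = "{}:{}".format(quote(user, safe=""), quote(password, safe=""))
    netloc = host if port is None else "{}:{}".format(host, port)
    return "rtsp://{}@{}/{}".format(credentials, netloc, path.lstrip("/"))
```

The returned string can be passed as the `--video` argument in place of a handwritten URL.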
