Facial Expression Recognition on the Fer2013 Dataset: Detailed Code

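This article gives a complete walkthrough of a facial expression recognition project based on the Fer2013 dataset, covering the dataset, model training, and practical application (deploying the AI model in a real-world scenario). What the finished project does:

It evaluates how well students are following a lesson by combining body posture (from the Baidu AI body posture recognition API) with an expression recognition model.

For the theory involved, see my graduation thesis, "Facial Expression Recognition Based on Convolutional Networks and Its Applications".

GitHub link: https://github.com/cucohhh/Expression-recognition/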


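1. Building and Training the Convolutional Network

Loading the dataset

fer2013 dataset download link: Fer2013 dataset

The dataset already comes split into a training set (more than 20,000 images) and a test set (about 3,000 images).

The data is stored as CSV files (which also open in Excel), so it is very easy to read with the pandas library.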

# data_Reader.py
import keras
import numpy as np
import pandas as pd

# Number of expression classes in fer2013
num_classes = 7


# path: path of the dataset CSV file
# x_data, y_data: lists that the parsed images and one-hot labels are appended to
# data_size_begin, data_size_end: range of rows to load
def dataRead(path, x_data, y_data, data_size_begin, data_size_end):
    # Load the CSV file
    data = pd.read_csv(path)
    min_data = data.iloc[data_size_begin:data_size_end]
    pixels = min_data['pixels']
    emotions = min_data['emotion']
    print("Dataset loaded, number of samples:", len(pixels))

    for emotion, img in zip(emotions, pixels):
        try:
            one_hot = keras.utils.to_categorical(emotion, num_classes)  # one-hot encoding
            pixel_values = np.array(img.split(" "), 'float32')  # 48 * 48 = 2304 grey values
            x_data.append(pixel_values)
            y_data.append(one_hot)
        except Exception as e:
            # Skip a malformed row instead of aborting the whole load
            print("Skipping malformed row:", e)

    print("Expression labels encoded, number of samples:", len(x_data))

    x_data = np.array(x_data)
    y_data = np.array(y_data)
    x_data = x_data.reshape(-1, 48, 48, 1)  # (samples, height, width, channels)
    print("Dataset reshaped, number of samples:", len(x_data))

    return [x_data, y_data]
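The core of dataRead is turning one CSV row (an integer label plus a space-separated string of 2,304 grey values) into a 48×48 image and a one-hot label. Below is a minimal NumPy-only sketch of that single step. The helper name `parse_row` and the sample row are hypothetical, and `np.eye(...)[label]` stands in for `keras.utils.to_categorical`, which produces the same one-hot vector for an integer label.

```python
import numpy as np

NUM_CLASSES = 7  # fer2013 uses labels 0-6


def parse_row(emotion, pixels):
    """Turn one fer2013 row into a (48, 48, 1) float32 image and a one-hot label."""
    values = np.array(pixels.split(" "), dtype="float32")  # 2304 grey values
    image = values.reshape(48, 48, 1)
    one_hot = np.eye(NUM_CLASSES, dtype="float32")[emotion]  # row `emotion` of the identity matrix
    return image, one_hot


# A fake row: label 3 ("happy") and a uniformly grey image
sample_pixels = " ".join(["128"] * (48 * 48))
image, label = parse_row(3, sample_pixels)
print(image.shape, label)
```

Stacking such images along a new first axis gives exactly the `(N, 48, 48, 1)` array that dataRead returns.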

Model training and evaluation

Training environment: Keras + TensorFlow 1.15

TensorFlow has been updated since then, so some of the Keras imports may need adjusting for your version.

from keras import regularizers  # only needed if the L2 regularizer below is re-enabled
from keras.layers import (Activation, AveragePooling2D, BatchNormalization, Conv2D,
                          Dense, Dropout, Flatten, MaxPool2D)
from keras.models import Sequential
from keras.optimizers import Adam

from data_Reader import dataRead
from paint_Process import paint_process

# Load the training set
path_train = "C:\\Users\\Administrator\\PycharmProjects\\pythonProject\\fer2013原-csv\\train.csv"
train_data_x = []
train_data_y = []
train_size_begin = 0
train_size_end = 30000
train = dataRead(path_train, train_data_x, train_data_y, train_size_begin, train_size_end)

# Load the test set (used as validation data)
path_test = "C:\\Users\\Administrator\\PycharmProjects\\pythonProject\\fer2013原-csv\\test.csv"
test_data_x = []
test_data_y = []
test_size_begin = 0
test_size_end = 10000
test = dataRead(path_test, test_data_x, test_data_y, test_size_begin, test_size_end)

batch_size = 256
epochs = 40

model = Sequential()

# Convolutional block 1
model.add(Conv2D(input_shape=(48, 48, 1), filters=32, kernel_size=3, strides=1, padding='same'))
# Optional L2 regularization: add kernel_regularizer=regularizers.l2(0.01) to the Conv2D call above
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(Conv2D(filters=32, kernel_size=3, strides=1))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(MaxPool2D(pool_size=2, strides=2))
model.add(Dropout(0.5))

# Convolutional block 2
model.add(Conv2D(filters=64, kernel_size=3, strides=1))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(Conv2D(filters=64, kernel_size=3, strides=1))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(MaxPool2D(pool_size=2, strides=2))
model.add(Dropout(0.5))

# Convolutional block 3
model.add(Conv2D(filters=128, kernel_size=3, strides=1, padding='same'))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(Conv2D(filters=128, kernel_size=3, strides=1, padding='same'))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(AveragePooling2D(pool_size=2, strides=2))  # average pooling before the fully connected layers
model.add(Dropout(0.5))

# Fully connected layers
model.add(Flatten())
model.add(Dense(1024, activation='relu'))
model.add(Dropout(0.2))
model.add(Dense(1024, activation='relu'))
model.add(Dropout(0.2))
model.add(Dense(512, activation='relu'))
model.add(Dropout(0.2))

# Softmax output layer (7 expression classes)
model.add(Dense(7, activation='softmax'))

# Train
model.compile(loss='categorical_crossentropy', optimizer=Adam(), metrics=['accuracy'])
history = model.fit(train[0], train[1], batch_size=batch_size, epochs=epochs,
                    validation_data=(test[0], test[1]), shuffle=True)

train_score = model.evaluate(train[0], train[1], verbose=0)
print('Train loss:', train_score[0])
print('Train accuracy:', 100 * train_score[1])

model.save('my_base_model_relu2_Dropout_Normalization.h5')

name = "my_base_model_relu2_Dropout_Normalization"
paint_process(history, name)
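Because the model mixes `padding='same'` with the default `'valid'` padding (block 1 uses `'same'` only for its first convolution, block 2 uses `'valid'` for both, block 3 uses `'same'` for both), it helps to track the feature-map size by hand. This plain-Python sketch (not Keras itself) applies the standard output-size formulas to the layer sequence above; it should show that Flatten receives 4 × 4 × 128 = 2048 values.

```python
def conv_out(size, kernel=3, stride=1, padding="valid"):
    """Spatial size after a Conv2D layer."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1


def pool_out(size, pool=2, stride=2):
    """Spatial size after MaxPool2D / AveragePooling2D with the default 'valid' padding."""
    return (size - pool) // stride + 1


size = 48
size = conv_out(size, padding="same")  # block 1, conv 1 -> 48
size = conv_out(size)                  # block 1, conv 2 -> 46
size = pool_out(size)                  # max pooling    -> 23
size = conv_out(size)                  # block 2, conv 1 -> 21
size = conv_out(size)                  # block 2, conv 2 -> 19
size = pool_out(size)                  # max pooling    -> 9
size = conv_out(size, padding="same")  # block 3, conv 1 -> 9
size = conv_out(size, padding="same")  # block 3, conv 2 -> 9
size = pool_out(size)                  # average pooling -> 4
flatten_units = size * size * 128      # 128 filters in the last block
print(size, flatten_units)
```

The first Dense(1024) layer therefore has 2048 × 1024 weights plus 1024 biases, which is where most of the model's parameters sit.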

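Resuming training from a partially trained model

Retraining from scratch every time is tedious and wastes time, so here is code that loads a saved model and continues training it.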

from keras.models import load_model
from keras.optimizers import Adam

from data_Reader import dataRead
from paint_Process import paint_process

# Load the training set
path_train = "C:\\Users\\Administrator\\PycharmProjects\\pythonProject\\fer2013原-csv\\train.csv"
train_data_x = []
train_data_y = []
train_size_begin = 0
train_size_end = 30000
train = dataRead(path_train, train_data_x, train_data_y, train_size_begin, train_size_end)

# Load the test set (used as validation data)
path_test = "C:\\Users\\Administrator\\PycharmProjects\\pythonProject\\fer2013原-csv\\test.csv"
test_data_x = []
test_data_y = []
test_size_begin = 0
test_size_end = 10000
test = dataRead(path_test, test_data_x, test_data_y, test_size_begin, test_size_end)

batch_size = 256
epochs = 30

# Load the previously saved model
model = load_model('C:\\Users\\Administrator\\PycharmProjects\\pythonProject\\trian_or_Test\\my_base_model_relu2_Dropout_Normalization.h5')

# Continue training.
# load_model already returns a compiled model including its optimizer state;
# compiling again starts a fresh Adam optimizer. Drop this line to keep the saved state.
model.compile(loss='categorical_crossentropy', optimizer=Adam(), metrics=['accuracy'])
history = model.fit(train[0], train[1], batch_size=batch_size, epochs=epochs,
                    validation_data=(test[0], test[1]), shuffle=True)

train_score = model.evaluate(train[0], train[1], verbose=0)
print('Train loss:', train_score[0])
print('Train accuracy:', 100 * train_score[1])

model.save('my_base_model_relu2_L2_2twice.h5')

name = "my_base_model_relu2_L2_2twice"
paint_process(history, name)

Plotting the training curves

# paint_Process.py
import matplotlib.pyplot as plt


def paint_process(history, name):
    epoch = len(history.history['loss'])  # number of epochs
    plt.plot(range(epoch), history.history['loss'], label='loss')
    # Older Keras versions record this metric as 'acc' / 'val_acc' instead
    plt.plot(range(epoch), history.history['accuracy'], label='train_acc')
    plt.plot(range(epoch), history.history['val_accuracy'], label='val_acc')
    plt.plot(range(epoch), history.history['val_loss'], label='val_loss')
    plt.legend()
    path = "C:\\Users\\Administrator\\PycharmProjects\\pythonProject\\trian_image\\"
    plt.savefig(path + name)  # no extension given, so matplotlib's default format (PNG) is used
    plt.show()
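paint_process reads `history.history['accuracy']` and `['val_accuracy']`. Depending on the Keras or TensorFlow version, the same metric may be recorded as `'acc'` and `'val_acc'` (standalone Keras before 2.3 used these names), and the plot then fails with a KeyError. The hypothetical helper `accuracy_keys` below is a small sketch that picks whichever names are present; inside paint_process its result would replace the hard-coded keys.

```python
def accuracy_keys(history_dict):
    """Return the (train, validation) accuracy keys present in a Keras History.history dict."""
    if "accuracy" in history_dict:
        return "accuracy", "val_accuracy"
    return "acc", "val_acc"


# A made-up history in the older naming style
old_style = {"loss": [1.2, 0.9], "acc": [0.40, 0.50], "val_loss": [1.3, 1.1], "val_acc": [0.38, 0.45]}
train_key, val_key = accuracy_keys(old_style)
print(train_key, val_key, old_style[val_key])
```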

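Putting facial expression recognition into practice

OpenCV (version 4.2, together with its extension modules) is used to open the laptop camera and obtain real-time video frames.

Implementation steps:

- Capture the video frames
- Load the convolutional network model and analyze each frame in real time
- Draw the analysis results onto the frame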

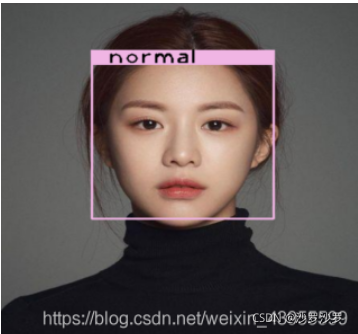

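Final result:

The screenshot shows recognition on a single image (I did not get around to adding a GIF). The code itself analyzes live video by running recognition on successive frames in a loop.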

# face_check.py: face detection + expression recognition (the FerCheck module)
import cv2
import numpy as np
from tensorflow import keras

# Label order of the fer2013 'emotion' column (0-6)
EMOTIONS = ['anger', 'disgust', 'fear', 'happy', 'sad', 'surprised', 'normal']


def resize_image(image):
    """Convert a BGR face crop into a (1, 48, 48, 1) model input; return None for an empty crop."""
    if image is None or image.size == 0:
        return None
    image = cv2.resize(image, (48, 48))
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    x = np.array(gray, dtype='float32').reshape(48, 48, 1)
    return np.expand_dims(x, axis=0)  # add the batch dimension


def displayText(img, result):
    """Debug helper: write all seven class scores in the top-left corner of the frame."""
    for i in range(7):
        cv2.putText(img, "W:" + str(result[0][i]), (40, 50 + 30 * i),
                    cv2.FONT_HERSHEY_PLAIN, 2.0, (0, 0, 255), 2)


def findMax(result):
    """Return the name of the most likely expression in a (1, 7) prediction."""
    return EMOTIONS[int(np.argmax(result[0]))]


def displayEmotion(img, result, x, y, w, h):
    """Draw the predicted expression label on a filled bar above the face box."""
    text = findMax(result) + ":"
    cv2.rectangle(img, (x - 5, y), (x + w + 5, y - 20), (228, 181, 240), thickness=-1)
    cv2.putText(img, text, (x + 20, y), cv2.FONT_HERSHEY_PLAIN, 2.0, (0, 0, 0), 2)


# Haar cascade shipped with opencv-python (the same file as site-packages\cv2\data\haarcascade_frontalface_alt.xml)
face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_alt.xml')

model2 = keras.models.load_model("../Model/my_VGG_11_model_10000_256_100.h5")


def facial_expression_check(cap):
    """Read one frame, classify every detected face and return the last label (or 'Noface')."""
    ret, img = cap.read()
    if not ret:
        return 'Noface'
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(gray, 1.1, 5)
    result = []

    for (x, y, w, h) in faces:
        # Crop the face with a 10 px margin; clamp so the slice never starts at a negative index
        image = img[max(0, y - 10): y + h + 10, max(0, x - 10): x + w + 10]
        image = resize_image(image)
        if image is None:
            continue
        result = model2.predict(image)
        cv2.rectangle(img, (x - 5, y - 5), (x + w + 5, y + h + 5), (228, 181, 240), 2)
        displayEmotion(img, result, x, y, w, h)

    cv2.namedWindow("facial_expression", 0)
    cv2.resizeWindow("facial_expression", 300, 200)
    cv2.imshow("facial_expression", img)

    if len(result) != 0:
        return findMax(result)
    return 'Noface'


def main():
    cap = cv2.VideoCapture(0)  # default camera
    while True:
        facial_expression_check(cap)
        if cv2.waitKey(10) & 0xFF == ord('q'):
            break
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
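For a single frame, the analysis reduces to two pieces of array logic: crop each detected face with a margin, and turn the model's seven softmax scores into a label. The NumPy-only sketch below isolates them so they can be checked without a camera or a trained model. The frame and the scores are synthetic and the helper names are hypothetical; the 10-pixel margin matches the crop in facial_expression_check. Clamping matters because a slice such as `img[y - 10:...]` with `y < 10` starts at a negative index, which NumPy counts from the end of the axis, so the crop comes back empty.

```python
import numpy as np

# Same label order as the fer2013 'emotion' column (0-6)
EMOTIONS = ["anger", "disgust", "fear", "happy", "sad", "surprised", "normal"]


def crop_face(frame, x, y, w, h, margin=10):
    """Crop a detected face box plus a margin, clamped to the frame borders."""
    height, width = frame.shape[:2]
    top, bottom = max(0, y - margin), min(height, y + h + margin)
    left, right = max(0, x - margin), min(width, x + w + margin)
    return frame[top:bottom, left:right]


def label_from_scores(scores):
    """Map a (1, 7) softmax output to the name of the most likely expression."""
    return EMOTIONS[int(np.argmax(scores[0]))]


frame = np.zeros((480, 640, 3), dtype=np.uint8)  # stand-in for a camera frame
face = crop_face(frame, x=5, y=5, w=100, h=100)  # box close to the top-left corner
scores = np.array([[0.05, 0.01, 0.04, 0.70, 0.05, 0.05, 0.10]])  # fake model output
print(face.shape, label_from_scores(scores))
```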

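2. PostureCheck (Baidu AI API)

Steps for calling the Baidu AI API:

- Register an account on the Baidu AI platform
- Select the body keypoint (posture) recognition feature, create an application, and download the official API library
- Record your credentials:
  APP_ID = ''
  API_KEY = ''
  SECRET_KEY = ''
- Call the API from code (OpenCV is again used to capture the image data)
- Draw the important body keypoints that were detected
- See the official website for more details on how to use the API

This code mainly detects folded arms and uneven shoulders, two postures that have a large effect on a student's state (see the blog post "Facial Expression Recognition Based on Convolutional Networks" for details). Readers can modify it to detect other postures as needed.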
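Both posture rules compare keypoint coordinates from the `body_parts` dictionary returned by the API (`result['person_info'][0]['body_parts']`). The pure-Python sketch below restates the same two rules so they can be tested on hand-made keypoints. The function names and keypoint values are hypothetical; as in the original rule, it assumes the student faces the camera, so in a relaxed pose the left wrist appears at a larger image x than the right wrist. The 20-pixel shoulder threshold is the value used in the code.

```python
def is_cross_arms(body_parts):
    """Arms count as crossed when the left wrist is not to the right of the right wrist (image x)."""
    return body_parts["left_wrist"]["x"] <= body_parts["right_wrist"]["x"]


def is_shoulder_drop(body_parts, threshold=20):
    """Shoulders count as uneven when their y coordinates differ by more than `threshold` pixels."""
    diff = abs(body_parts["left_shoulder"]["y"] - body_parts["right_shoulder"]["y"])
    return diff > threshold


# Hand-made keypoints for a student facing the camera
relaxed = {
    "left_wrist": {"x": 420, "y": 400}, "right_wrist": {"x": 220, "y": 400},
    "left_shoulder": {"x": 400, "y": 200}, "right_shoulder": {"x": 240, "y": 205},
}
crossed = dict(relaxed, left_wrist={"x": 250, "y": 380}, right_wrist={"x": 390, "y": 380})
print(is_cross_arms(relaxed), is_cross_arms(crossed), is_shoulder_drop(relaxed))
```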

The full posture-detection code for this step is listed as PostureCheck.py in section 3 below.

3. Deploying the Model to Evaluate Students' Learning State in Class

FerCheck + PostureCheck

FerCheck.py contains face detection and expression recognition. Its code is identical to the face-check script listed under "Putting facial expression recognition into practice" in section 1, so it is not repeated here.

PostureCheck.py: posture detection (calls some of the functions in FerCheck)

import math
import time
from datetime import datetime

import cv2
from aip import AipBodyAnalysis

from DocumentGeneration import generateDocument
from SMTP_send import SMTPSend
from Test import draw_line  # the author's helper that draws the keypoints (not listed in this article)
from face_check import facial_expression_check  # the FerCheck module

# Counters used for the report
expression = {'anger': 0, 'disgust': 0, 'fear': 0, 'happy': 0, 'sad': 0, 'surprised': 0, 'normal': 0, 'Noface': 0}
posture = {'cross_arms': 0, 'shoulder_drop': 0}


# Encode an OpenCV image as JPEG bytes for the API
def ChangeToString(image):
    print("Encoding image to bytes...")
    a = datetime.now()
    image_str = cv2.imencode('.jpg', image)[1].tobytes()
    b = datetime.now()
    print("Encoding time (s):", (b - a).total_seconds())
    return image_str


def BodyAnalysis(img_str):
    print("Analyzing...")
    # Fill in your own APP_ID, API_KEY and SECRET_KEY
    APP_ID = 'your APP_ID'
    API_KEY = 'your API_KEY'
    SECRET_KEY = 'your SECRET_KEY'
    client = AipBodyAnalysis(APP_ID, API_KEY, SECRET_KEY)

    # Call body keypoint recognition
    a = datetime.now()
    result = client.bodyAnalysis(img_str)
    b = datetime.now()
    print("API call time (s):", (b - a).total_seconds())
    return result


# Crossed arms: compare the x coordinates of the wrist keypoints
def cross_arms(left_wrist, right_wrist):
    if left_wrist <= right_wrist:
        posture['cross_arms'] += 1
        return True
    return False


# Uneven shoulders: the y coordinates of the shoulder keypoints must not differ by more than 20 px
def shoulder_drop(left_shoulder, right_shoulder):
    if math.fabs(left_shoulder - right_shoulder) > 20:
        posture['shoulder_drop'] += 1
        return True
    return False


def Body_posture_check(frame):
    img_str = ChangeToString(frame)
    my_result = BodyAnalysis(img_str)
    # Skip this frame if the API found no person or returned an error
    if not my_result.get('person_info'):
        return
    person = my_result['person_info'][0]['body_parts']
    draw_line(person, frame)
    cv2.namedWindow("body_posture", 0)
    cv2.resizeWindow("body_posture", 300, 200)
    cv2.imshow("body_posture", frame)

    if cross_arms(int(person['left_wrist']['x']), int(person['right_wrist']['x'])):
        print("Crossed arms detected")
    if shoulder_drop(int(person['left_shoulder']['y']), int(person['right_shoulder']['y'])):
        print("Shoulder height difference too large")


def facial_expression_statistics(result):
    expression[result] += 1


# Camera capture
cap = cv2.VideoCapture(0)
flag = True


def GetView():
    print("GetView started")
    var = 30  # the posture check runs every 30 loop iterations (roughly once per second)
    total = 0
    while flag:
        var += 1
        ret, frame = cap.read()
        if not ret:
            break
        result = facial_expression_check(cap)
        facial_expression_statistics(result)
        total += 1
        if var % 30 == 0:
            Body_posture_check(frame)
        if cv2.waitKey(10) & 0xff == ord('q'):
            print(expression)
            print(posture)
            print(var)
            cv2.destroyAllWindows()
            generateDocument(expression, posture, total)
            time.sleep(2)
            SMTPSend()
            break


def stopLearning():
    global flag  # without this, flag = False would only create a local variable
    print("stop learning")
    flag = False
    cap.release()
    cv2.destroyAllWindows()
    time.sleep(10)
    SMTPSend()


def mytest():
    img = cv2.imread('C:\\Users\\Administrator\\Pictures\\Camera Roll\\test3.jpeg')
    img_str = ChangeToString(img)
    result = BodyAnalysis(img_str)
    body_parts = result['person_info'][0]['body_parts']
    draw_line(body_parts, img)
    cv2.imshow('result', img)
    cv2.waitKey(0)
    print(body_parts['nose']['x'])

Generating the learning-state report

A Word (.docx) report is generated from the analysis results.

# DocumentGeneration.py
from docx import Document

# Sample input data for testing
expression = {'anger': 0, 'disgust': 0, 'fear': 0, 'happy': 10, 'sad': 0, 'surprised': 0, 'normal': 0, 'Noface': 0}
posture = {'cross_arms': 0, 'shoulder_drop': 0}

EXPRESSION_NAMES = ['anger', 'disgust', 'fear', 'happy', 'sad', 'surprised', 'normal']


def getMaxExpression(expression):
    """Return the key with the highest count ('' if every count is 0)."""
    maxnum = 0
    maxkey = ''
    for key, value in expression.items():
        if value > maxnum:
            maxnum = value
            maxkey = key
    return maxkey


def generateDocument(expression, posture, total):
    document = Document()

    # Table of non-relaxed postures
    document.add_heading('Learning State Assessment', level=2)
    document.add_heading('Counts of Non-Relaxed Postures', level=2)
    posture_table = document.add_table(rows=2, cols=2)
    posture_table.rows[0].cells[0].text = 'Folded arms'
    posture_table.rows[0].cells[1].text = str(posture['cross_arms'])
    posture_table.rows[1].cells[0].text = 'Uneven shoulders'
    posture_table.rows[1].cells[1].text = str(posture['shoulder_drop'])

    # Table of facial expression counts
    document.add_heading('Facial Expression Statistics', level=2)
    table = document.add_table(rows=len(EXPRESSION_NAMES), cols=2)
    for row, name in zip(table.rows, EXPRESSION_NAMES):
        row.cells[0].text = name
        row.cells[1].text = str(expression[name])

    document.add_heading('Assessment Result', level=2)

    total = max(total, 1)  # avoid division by zero if nothing was recorded

    # Most frequent expression and its share of all checks
    maxExpression = getMaxExpression(expression)
    max_share = expression.get(maxExpression, 0) * 100 / total

    # Share of 'normal' and 'happy' results, and of frames without a face
    normal_happy = (expression['normal'] + expression['happy']) * 100 / total
    Noface = expression['Noface'] * 100 / total

    # Reminders for abnormal postures; each one lowers the score by 10 points
    cross_arms_text = ''
    shoulder_drop_text = ''
    Noface_text = ''
    if posture['cross_arms'] > 0:
        cross_arms_text = ('Folded arms were detected. This posture tends to go with tension and discomfort '
                           'and hurts concentration; relax your arms and keep a relaxed sitting posture.')
        normal_happy -= 10
    if posture['shoulder_drop'] > 0:
        shoulder_drop_text = 'The shoulders were too uneven; keep both shoulders relaxed and level.'
        normal_happy -= 10

    if normal_happy >= 80:
        study_state_text = 'very good'
    elif normal_happy > 70:
        study_state_text = 'good'
    elif normal_happy > 50:
        study_state_text = 'average'
    else:
        study_state_text = 'poor'

    if Noface >= 30:
        study_state_text = 'poor'
        Noface_text = 'At times no face was detected; the student may have left the class.'

    document.add_paragraph("Student learning state: " + study_state_text)
    document.add_paragraph("Most frequent expression: " + maxExpression
                           + ", share of the whole session: " + str(round(max_share, 1)) + "%")
    document.add_paragraph(cross_arms_text)
    document.add_paragraph(shoulder_drop_text)
    document.add_paragraph(Noface_text)

    document.save('a.docx')
    print(total)


# generateDocument(expression, posture, 100)
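The grading rule inside generateDocument can be pulled out into a pure function, which makes the thresholds easy to check without producing a .docx file. This is a sketch under two assumptions: the 10-point posture penalty is meant to be subtracted from the score, and an if/elif chain is used so that every score (including exactly 70) receives a rating. The name `grade_session` and the English rating labels are hypothetical.

```python
def grade_session(expression, posture, total):
    """Return (rating, score) where score is the share of 'normal' + 'happy' checks minus penalties."""
    if total == 0:
        return "poor", 0.0
    score = (expression["normal"] + expression["happy"]) * 100 / total
    # Each kind of tense posture that was observed costs 10 points
    if posture["cross_arms"] > 0:
        score -= 10
    if posture["shoulder_drop"] > 0:
        score -= 10
    if score >= 80:
        rating = "very good"
    elif score > 70:
        rating = "good"
    elif score > 50:
        rating = "average"
    else:
        rating = "poor"
    # Missing faces in 30 % or more of the checks override the rating
    if expression["Noface"] * 100 / total >= 30:
        rating = "poor"
    return rating, score


counts = {"anger": 0, "disgust": 0, "fear": 0, "happy": 30, "sad": 5, "surprised": 5, "normal": 50, "Noface": 10}
print(grade_session(counts, {"cross_arms": 0, "shoulder_drop": 0}, 100))
print(grade_session(counts, {"cross_arms": 2, "shoulder_drop": 0}, 100))
```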

Emailing the report

Sends the analysis report to a specified email address.

# SMTP_send.py
import smtplib
from email.mime.application import MIMEApplication
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def SMTPSend():
    # Parameters for smtplib; sender and receiver are used for delivery
    smtpserver = 'smtp.qq.com'
    username = 'xxxx@qq.com'
    password = 'xxxxxx'  # for QQ Mail this is usually the SMTP authorization code, not the login password
    sender = 'xxxxxx@qq.com'
    # One or more receivers
    receiver = ['xxxxxx@qq.com']
    subject = 'Learning State Report'

    # Build the MIMEMultipart message; subject, sender and receivers are shown in the mail client
    msg = MIMEMultipart('mixed')
    msg['Subject'] = subject
    msg['From'] = 'xxxx@qq.com <xxxx@qq.com>'
    # Several receivers are joined into one comma-separated header value
    msg['To'] = ", ".join(receiver)

    msg.attach(MIMEText("The learning state assessment report is attached. Please check it!", 'plain', 'utf-8'))

    # Build the attachment (application/octet-stream, base64 encoded)
    with open(r'C:\Users\Administrator\PycharmProjects\pythonProject\aip-python-sdk-4.15.1\a.docx', 'rb') as f:
        sendfile = f.read()
    attachment = MIMEApplication(sendfile)
    attachment.add_header('Content-Disposition', 'attachment', filename='Learning_State_Report.docx')
    msg.attach(attachment)

    # Send the email over SSL (port 465)
    smtp = smtplib.SMTP_SSL(smtpserver, 465)
    # smtp.set_debuglevel(1) prints the full dialogue with the SMTP server
    smtp.login(username, password)
    smtp.sendmail(sender, receiver, msg.as_string())
    smtp.quit()
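Separating message construction from sending makes the email easy to check without a mail server. The sketch below uses only the standard library: `MIMEApplication` for the binary attachment and `smtplib.SMTP_SSL` for delivery. Addresses, subject and filename are placeholders, the function names are hypothetical, and port 465 is an assumption about the mail provider; the example only builds the message and never calls `send_report`.

```python
import smtplib
from email.mime.application import MIMEApplication
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_report_email(sender, receivers, report_bytes, filename="report.docx"):
    """Build a multipart message: a short text body plus the report as an attachment."""
    msg = MIMEMultipart("mixed")
    msg["Subject"] = "Learning state report"
    msg["From"] = sender
    msg["To"] = ", ".join(receivers)  # address lists are comma-separated
    msg.attach(MIMEText("The learning state report is attached.", "plain", "utf-8"))
    attachment = MIMEApplication(report_bytes)  # application/octet-stream, base64 encoded
    attachment.add_header("Content-Disposition", "attachment", filename=filename)
    msg.attach(attachment)
    return msg


def send_report(msg, host, username, password, port=465):
    """Send an already built message over SMTP with SSL."""
    with smtplib.SMTP_SSL(host, port) as smtp:
        smtp.login(username, password)
        smtp.send_message(msg)


# Build (but do not send) a message with placeholder addresses and fake file content
msg = build_report_email("sender@example.com", ["teacher@example.com"], b"fake docx bytes")
print(msg["To"], msg.get_payload()[1].get_filename())
```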

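UI code

Since only a simple standalone version of the learning-state assessment was implemented, the interface is written with the Tk library (tkinter). Registration and login do not use a database; the user data is simply serialized to a file.

(Interface screenshot)

This code was written a long time ago and may have some problems, so please bear with it! I recommend writing your own version to fit your needs.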

import pickle
import tkinter as tk
import tkinter.messagebox

from PIL import Image, ImageTk

from DocumentGeneration import generateDocument
from Expression_posture import GetView, cap, stopLearning

usr_name = ''
usr_pwd = ''
usr_info = ''
flag = False


def usr_login_win():
    # Load the saved users, or create the file with a default admin account
    try:
        with open('usr_info.pickle', 'rb') as usr_file:
            usrs_info = pickle.load(usr_file)
    except (FileNotFoundError, EOFError, pickle.UnpicklingError):
        with open('usr_info.pickle', 'wb') as usr_file:
            usrs_info = {'admin': 'admin'}
            pickle.dump(usrs_info, usr_file)

    def loginCheck():
        usr_name = var_usr_name.get()
        usr_pwd = var_usr_pwd.get()
        # Username and password must not be empty
        if usr_name == '' or usr_pwd == '':
            tk.messagebox.showerror(message='Username and password cannot be empty!')
        elif usr_name in usrs_info:
            if usr_pwd == usrs_info[usr_name]:
                tk.messagebox.showinfo(title='Welcome', message='Welcome, ' + usr_name + '!')
                btn2 = tk.Button(win, width=265, image=startLearning_photo, compound=tk.CENTER,
                                 bg='#FFFFFF', relief='flat', command=GetView)
                btn2.place(x=210, y=330)
                stopLabel = tk.Label(win, text='Note: after starting, press q to end the session',
                                     font=('Bold', 13), fg='#5B0261', bg='#FFFFFF')
                stopLabel.place(x=210, y=380)
                # stop_learning = tk.Button(win, width=265, image=startLearning_photo, compound=tk.CENTER,
                #                           bg='#FFFFFF', relief='flat', command=stopLearning)
                # stop_learning.place(x=210, y=300)
                btn3.place_forget()
                btn4.place_forget()
                window_login.destroy()
            else:
                tk.messagebox.showerror(message='Wrong password!')
        else:
            tk.messagebox.showerror(message='User does not exist!')

    window_login = tk.Toplevel(win)
    window_login.title('login')
    window_login.geometry('400x300')
    background = tk.Label(window_login, image=login_bg_photo, compound=tk.CENTER, relief='flat')
    background.pack()
    tk.Label(window_login, text='User:', font=("Bold", 11), fg='#707070', bg='#FFFFFF').place(x=100, y=100)
    tk.Label(window_login, text='Password:', font=("Bold", 11), fg='#707070', bg='#FFFFFF').place(x=100, y=140)
    var_usr_name = tk.StringVar()
    var_usr_pwd = tk.StringVar()
    enter_usr_name = tk.Entry(window_login, textvariable=var_usr_name, font=('Arial', 15), width=20,
                              relief='groove', bd=2)
    enter_usr_name.place(x=160, y=100)
    enter_usr_pwd = tk.Entry(window_login, textvariable=var_usr_pwd, show='*', font=('Arial', 15), width=20,
                             relief='groove', bd=2)
    enter_usr_pwd.place(x=160, y=140)
    btn_login = tk.Button(window_login, image=login_photo, compound=tk.CENTER, bg='#FFFFFF', relief='flat',
                          command=loginCheck)
    btn_login.place(x=100, y=200)


def usr_sign_window():
    def signtowcg():
        NewName = new_name.get()
        NewPwd = new_pwd.get()
        ConfirPwd = pwd_comfirm.get()
        receiveEmail = receive_email.get()  # read here but not stored yet
        try:
            with open('usr_info.pickle', 'rb') as usr_file:
                exist_usr_info = pickle.load(usr_file)
        except FileNotFoundError:
            exist_usr_info = {}
        if NewName in exist_usr_info:
            tk.messagebox.showerror(message='Username already exists!')
        elif NewName == '' or NewPwd == '':
            tk.messagebox.showerror(message='Username and password cannot be empty!')
        elif NewPwd != ConfirPwd:
            tk.messagebox.showerror(message='Passwords do not match!')
        else:
            exist_usr_info[NewName] = NewPwd
            with open('usr_info.pickle', 'wb') as usr_file:
                pickle.dump(exist_usr_info, usr_file)
            tk.messagebox.showinfo(message='Registration successful!')
            window_sign_up.destroy()

    # Closes the registration window
    def close_regWin():
        window_sign_up.destroy()

    # Create the registration window, centered on the screen
    window_sign_up = tk.Toplevel(win)
    sw = window_sign_up.winfo_screenwidth()  # screen width
    sh = window_sign_up.winfo_screenheight()  # screen height
    ww = 500
    wh = 400
    x = (sw - ww) // 2
    y = (sh - wh) // 2
    window_sign_up.geometry("%dx%d+%d+%d" % (ww, wh, x, y))

    # Registration window background
    window_sign_up.overrideredirect(False)
    register_background = tk.Label(window_sign_up, image=register_bg_photo, compound=tk.CENTER, relief='flat')
    register_background.pack()

    # Close-window button
    # reg_close = tk.Button(window_sign_up, image=reg_close_photo, relief='flat', command=close_regWin,
    #                       compound=tk.CENTER, bd=0)
    # reg_close.place(x=475, y=1)

    # Registration input fields
    new_name = tk.StringVar()
    new_pwd = tk.StringVar()
    pwd_comfirm = tk.StringVar()
    receive_email = tk.StringVar()

    tk.Label(window_sign_up, text='Username:', font=('Bold', 11), fg='#707070', bg='#FFFFFF').place(x=110, y=50)
    enter_new_name = tk.Entry(window_sign_up, textvariable=new_name, font=('Arial', 15), width=20,
                              relief='groove', bd=2)
    enter_new_name.place(x=190, y=50)
    tk.Label(window_sign_up, text='Password:', font=('Bold', 11), fg='#707070', bg='#FFFFFF').place(x=110, y=100)
    enter_new_pwd = tk.Entry(window_sign_up, textvariable=new_pwd, show='*', font=('Arial', 15), width=20,
                             relief='groove', bd=2)
    enter_new_pwd.place(x=190, y=100)
    tk.Label(window_sign_up, text='Confirm:', font=('Bold', 11), fg='#707070', bg='#FFFFFF').place(x=110, y=150)
    enter_pwd_comfirm = tk.Entry(window_sign_up, textvariable=pwd_comfirm, show='*', font=('Arial', 15),
                                 width=20, relief='groove', bd=2)
    enter_pwd_comfirm.place(x=190, y=150)
    tk.Label(window_sign_up, text='Email:', font=('Bold', 11), fg='#707070', bg='#FFFFFF').place(x=110, y=200)
    enter_new_email = tk.Entry(window_sign_up, font=('Arial', 15), width=20, relief='groove', bd=2)
    enter_new_email.place(x=190, y=200)
    tk.Label(window_sign_up, text='Send to:', font=('Bold', 11), fg='#707070', bg='#FFFFFF').place(x=110, y=250)
    enter_new_email2 = tk.Entry(window_sign_up, textvariable=receive_email, font=('Arial', 15), width=20,
                                relief='groove', bd=2)
    enter_new_email2.place(x=190, y=250)
    alert_lable1 = tk.Label(window_sign_up, text='Note: the learning state report is sent to this address after each session',
                            font=('Bold', 8), fg='#5B0261', bg='#FFFFFF')
    alert_lable1.place(x=190, y=280)

    # Confirm registration
    bt_confirm = tk.Button(window_sign_up, image=reg_reg_photo, compound=tk.CENTER, relief='flat',
                           bg='#FFFFFF', bd=0, command=signtowcg)
    bt_confirm.place(x=270, y=310)


# Helper functions
def StopLearning():
    print("stoplearning")
    cap.release()


# Main window settings
win = tk.Tk()
win.configure(bg='#FFFFFF')
sw = win.winfo_screenwidth()  # screen width
sh = win.winfo_screenheight()  # screen height
ww = 700
wh = 465
# Center the window on the screen
x = (sw - ww) // 2
y = (sh - wh) // 2
win.geometry("%dx%d+%d+%d" % (ww, wh, x, y))
win.title("Study Supervise")


def changeBtn2State():
    print("change")
    # btn2['state'] = tk.NORMAL


def CloseWin():
    win.destroy()


# Images for the login window
login_bg_img = Image.open('UI_image/login_bg.png')
login_bg_photo = ImageTk.PhotoImage(login_bg_img)

# Images for the registration window
register_bg_img = Image.open('UI_image/Register_bg.png')
register_bg_photo = ImageTk.PhotoImage(register_bg_img)
reg_close_img = Image.open('UI_image/close.png')
reg_close_photo = ImageTk.PhotoImage(reg_close_img)
reg_reg_img = Image.open('UI_image/register.png')
reg_reg_photo = ImageTk.PhotoImage(reg_reg_img)

# Main window style
bg_img = Image.open('UI_image/bg_img3.png')
background_img = ImageTk.PhotoImage(bg_img)
win.overrideredirect(False)
background = tk.Label(win, image=background_img, compound=tk.CENTER, relief='flat')
background.pack()

startLearning_img = Image.open('UI_image/StartLearning.png')
startLearning_photo = ImageTk.PhotoImage(startLearning_img)
login_img = Image.open('UI_image/login.png')
login_photo = ImageTk.PhotoImage(login_img)

btn3 = tk.Button(win, image=login_photo, compound=tk.CENTER, bg='#FFFFFF', relief='flat',
                 command=lambda: [usr_login_win(), changeBtn2State()])
btn3.place(x=165, y=330)

register_img = Image.open('UI_image/register.png')
register_photo = ImageTk.PhotoImage(register_img)
btn4 = tk.Button(win, image=register_photo, compound=tk.CENTER, bg='#FFFFFF', relief='flat',
                 command=usr_sign_window)
btn4.place(x=400, y=330)

win.mainloop()
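The login and registration logic above keeps all users in a single pickled `{username: password}` dict. Below is a GUI-free sketch of that storage logic, so the validation rules can be tested without tkinter. The helper names are hypothetical, and the file lives in a temporary directory for the demo. Like the UI code, it stores passwords in plain text, which is only acceptable for a single-machine demo; also note that a pickle file should never be loaded from an untrusted source.

```python
import os
import pickle
import tempfile


def save_users(path, users):
    with open(path, "wb") as f:
        pickle.dump(users, f)


def load_users(path):
    """Load the {username: password} dict, creating it with a default admin account if missing."""
    try:
        with open(path, "rb") as f:
            return pickle.load(f)
    except FileNotFoundError:
        users = {"admin": "admin"}
        save_users(path, users)
        return users


def register(path, name, pwd, confirm):
    """Return an error message, or None if the user was registered."""
    users = load_users(path)
    if not name or not pwd:
        return "Username and password cannot be empty!"
    if name in users:
        return "Username already exists!"
    if pwd != confirm:
        return "Passwords do not match!"
    users[name] = pwd
    save_users(path, users)
    return None


path = os.path.join(tempfile.mkdtemp(), "usr_info.pickle")
print(register(path, "alice", "pw1", "pw1"), load_users(path))
```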
