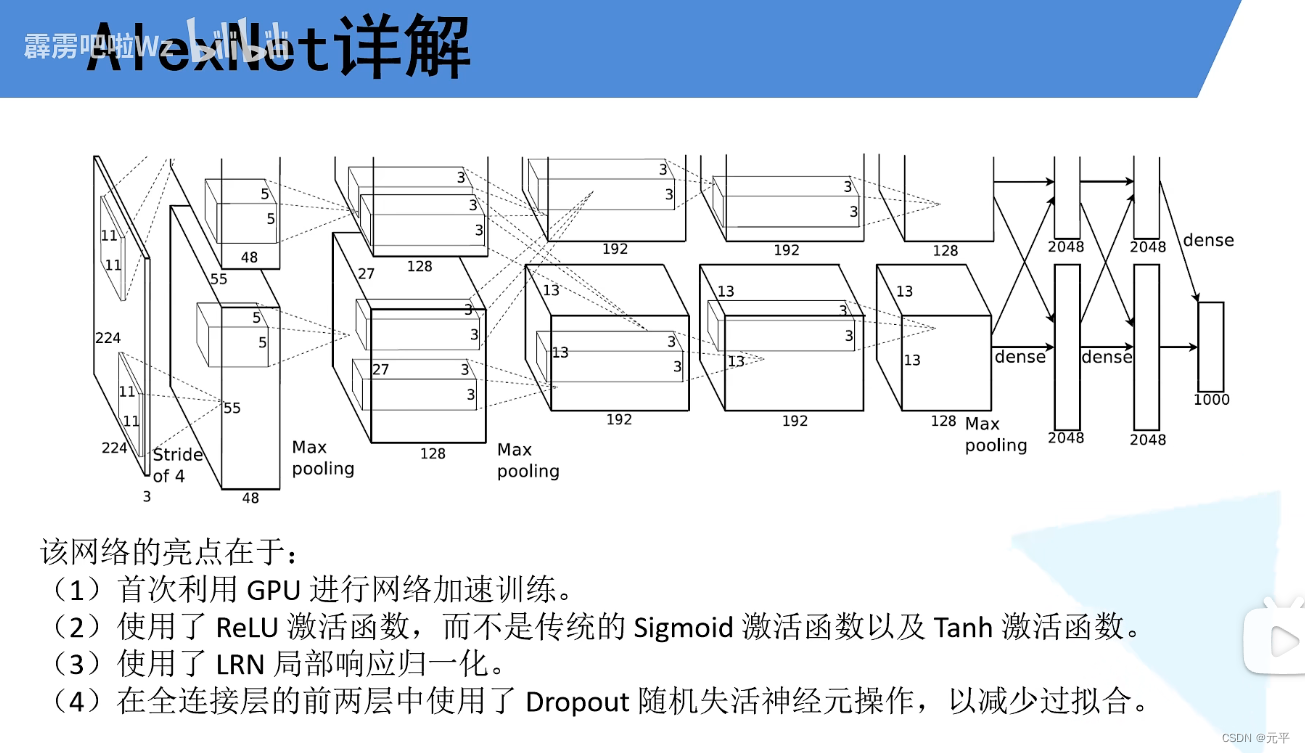

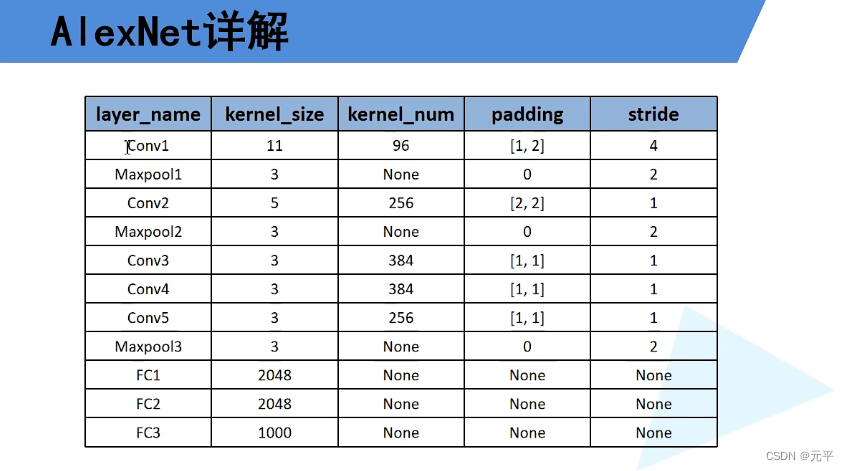

·1.AlexNet网络架构及其配置参数

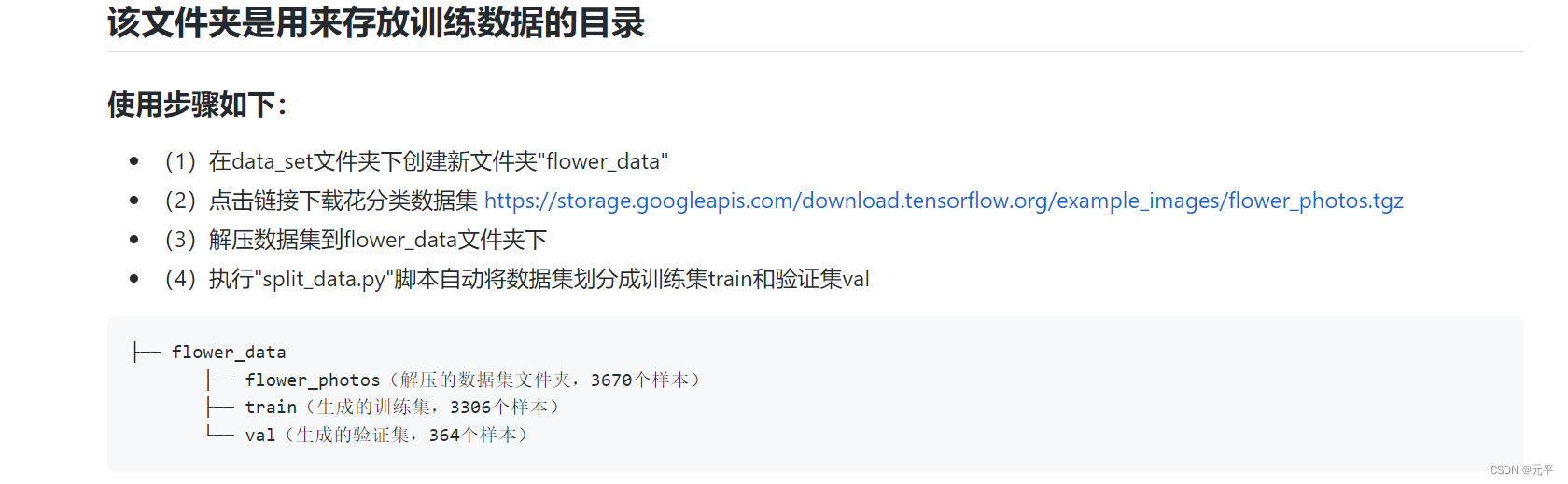

2.数据集准备

数据集下载链接:http://download.tensorflow.org/example_images/flower_photos.tgz

包含五种类型的花朵数据 每种类型有600-900张不等

3.数据集划分:

打开 PowerShell ,执行 “split_data.py” 分类脚本自动将数据集划分成 训练集train 和 验证集val

split_data.py代码如下:

import os

from shutil import copy

import random

def mkfile(file):

if not os.path.exists(file):

os.makedirs(file)

# 获取 flower_photos 文件夹下除 .txt 文件以外所有文件夹名(即5种花的类名)

file_path = 'flower_data/flower_photos'

flower_class = [cla for cla in os.listdir(file_path) if ".txt" not in cla]

# 创建 训练集train 文件夹,并由5种类名在其目录下创建5个子目录

mkfile('flower_data/train')

for cla in flower_class:

mkfile('flower_data/train/'+cla)

# 创建 验证集val 文件夹,并由5种类名在其目录下创建5个子目录

mkfile('flower_data/val')

for cla in flower_class:

mkfile('flower_data/val/'+cla)

# 划分比例,训练集 : 验证集 = 9 : 1

split_rate = 0.1

# 遍历5种花的全部图像并按比例分成训练集和验证集

for cla in flower_class:

cla_path = file_path + '/' + cla + '/' # 某一类别花的子目录

images = os.listdir(cla_path) # iamges 列表存储了该目录下所有图像的名称

num = len(images)

eval_index = random.sample(images, k=int(num*split_rate)) # 从images列表中随机抽取 k 个图像名称

for index, image in enumerate(images):

# eval_index 中保存验证集val的图像名称

if image in eval_index:

image_path = cla_path + image

new_path = 'flower_data/val/' + cla

copy(image_path, new_path) # 将选中的图像复制到新路径

# 其余的图像保存在训练集train中

else:

image_path = cla_path + image

new_path = 'flower_data/train/' + cla

copy(image_path, new_path)

print("\r[{}] processing [{}/{}]".format(cla, index+1, num), end="") # processing bar

print()

print("processing done!")

4.搭建模型:

import torch.nn as nn

import torch

class AlexNet(nn.Module):

def __init__(self, num_classes=1000, init_weights=False):

super(AlexNet, self).__init__()

self.features = nn.Sequential(

nn.Conv2d(3, 48,kernel_size=11,stride=4,padding=2), # input[3,224,224] output[48,55,55]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3,stride=2),

nn.Conv2d(48, 128,kernel_size=5,padding=2),

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3,stride=2),

nn.Conv2d(128, 192, kernel_size=3, padding=1),

nn.ReLU(inplace=True),

nn.Conv2d(192, 192, kernel_size=3, padding=1),

nn.ReLU(inplace=True),

nn.Conv2d(192, 128, kernel_size=3, padding=1),

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2),

)

self.classifier = nn.Sequential(

nn.Dropout(p=0.5),

nn.Linear(128*6*6, 2048),

nn.ReLU(inplace=True),

nn.Dropout(p=0.5),

nn.Linear(2048, 2048),

nn.ReLU(inplace=True),

nn.Linear(2048, num_classes),

)

if init_weights:

self._initialize_weights()

def forward(self, x): #正向传播

x = self.features(x)

x = torch.flatten(x, start_dim=1)

x = self.classifier(x)

return x

def _initialize_weights(self):

for m in self.modules(): #遍历模型的所有层

if isinstance(m, nn.Conv2d): #判断层结构是否为给定层结构

nn.init.kaiming_normal_(m.weight, mode='fan_out'),

if m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01) #正态分布初始化

nn.init.constant_(m.bias, 0)

5.使用数据集训练模型(pytorch-gpu):

# 导入包

import torch

import torch.nn as nn

from torchvision import transforms, datasets, utils

import matplotlib.pyplot as plt

import numpy as np

import torch.optim as optim

from model import AlexNet

import os

import json

import time

# 使用GPU训练

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print(device)

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224), # 随机裁剪,再缩放成 224×224

transforms.RandomHorizontalFlip(p=0.5), # 水平方向随机翻转,概率为 0.5, 即一半的概率翻转, 一半的概率不翻转

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),

"val": transforms.Compose([transforms.Resize((224, 224)), # cannot 224, must (224, 224)

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

# 获取图像数据集的路径

# data_root = os.path.abspath(os.path.join(os.getcwd(), "../..")) # get data root path 返回上上层目录

# 'D:\Downloads-chrome\deep-learning-for-image-processing-master\deep-learning-for-image-processing-master\data_set'

# image_path = data_root + "/data_set/flower_data/" # flower data_set path

image_path = r'C:\Users\18312\PycharmProjects\AlexNet_pytorch\data_set\flower_data' #数据文件夹

#print(image_path)

# # 导入训练集并进行预处理

train_dataset = datasets.ImageFolder(root=image_path + "/train",

transform=data_transform["train"])

train_num = len(train_dataset)

#

# # 按batch_size分批次加载训练集

train_loader = torch.utils.data.DataLoader(train_dataset, # 导入的训练集

batch_size=32, # 每批训练的样本数

shuffle=True, # 是否打乱训练集

num_workers=0) # 使用线程数,在windows下设置为0

# 导入验证集并进行预处理

validate_dataset = datasets.ImageFolder(root=image_path + "/val",

transform=data_transform["val"])

val_num = len(validate_dataset)

print(val_num)

# 加载验证集

validate_loader = torch.utils.data.DataLoader(validate_dataset, # 导入的验证集

batch_size=32,

shuffle=True,

num_workers=0)

# 字典,类别:索引 {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}

flower_list = train_dataset.class_to_idx

# 将 flower_list 中的 key 和 val 调换位置

cla_dict = dict((val, key) for key, val in flower_list.items())

# 将 cla_dict 写入 json 文件中

json_str = json.dumps(cla_dict, indent=4)

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)

net = AlexNet(num_classes=5, init_weights=True) # 实例化网络(输出类型为5,初始化权重)

net.to(device) # 分配网络到指定的设备(GPU/CPU)训练 这里有问题

loss_function = nn.CrossEntropyLoss() # 交叉熵损失

optimizer = optim.Adam(net.parameters(), lr=0.0002) # 优化器(训练参数,学习率)

save_path = './AlexNet.pth'

best_acc = 0.0

for epoch in range(10):

net.train() # 训练过程中开启 Dropout

running_loss = 0.0 # 每个 epoch 都会对 running_loss 清零

time_start = time.perf_counter() # 对训练一个 epoch 计时

for step, data in enumerate(train_loader, start=0): # 遍历训练集,step从0开始计算

images, labels = data # 获取训练集的图像和标签

optimizer.zero_grad() # 清除历史梯度

outputs = net(images.to(device)) # 正向传播

loss = loss_function(outputs, labels.to(device)) # 计算损失

loss.backward() # 反向传播

optimizer.step() # 优化器更新参数

running_loss += loss.item()

# 打印训练进度(使训练过程可视化)

rate = (step + 1) / len(train_loader) # 当前进度 = 当前step / 训练一轮epoch所需总step

a = "*" * int(rate * 50)

b = "." * int((1 - rate) * 50)

print("\rtrain loss: {:^3.0f}%[{}->{}]{:.3f}".format(int(rate * 100), a, b, loss), end="")

print()

print('%f s' % (time.perf_counter() - time_start))

########################################### validate ###########################################

net.eval() # 验证过程中关闭 Dropout

acc = 0.0

with torch.no_grad():

for val_data in validate_loader:

val_images, val_labels = val_data

outputs = net(val_images.to(device))

predict_y = torch.max(outputs, dim=1)[1] # 以output中值最大位置对应的索引(标签)作为预测输出

acc += (predict_y == val_labels.to(device)).sum().item()

val_accurate = acc / val_num

# 保存准确率最高的那次网络参数

if val_accurate > best_acc:

best_acc = val_accurate

torch.save(net.state_dict(), save_path)

print('[epoch %d] train_loss: %.3f test_accuracy: %.3f \n' %

(epoch + 1, running_loss / step, val_accurate))

print('Finished Training')

训练过程如下(华硕天选3 rtx3070显卡 8g显存 性能模式下大概13s一个epoch):

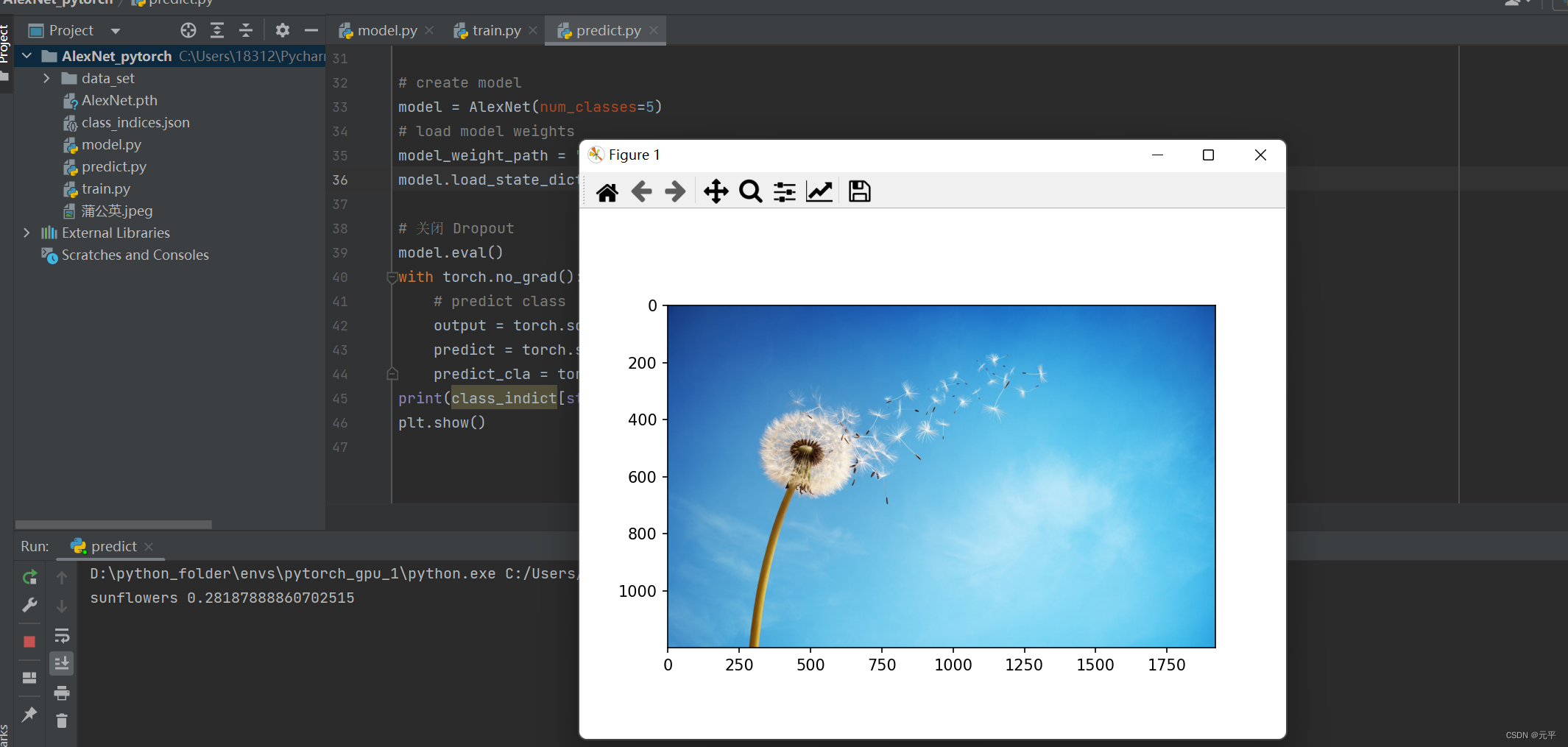

6.使用模型进行预测,网上下载一张蒲公英图片,并保存在当前项目文件夹下,预测代码如下:

import os

os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

import torch

from model import AlexNet

from PIL import Image

from torchvision import transforms

import matplotlib.pyplot as plt

import json

# 预处理

data_transform = transforms.Compose(

[transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# load image

img = Image.open("蒲公英.jpeg")

plt.imshow(img)

# [N, C, H, W]

img = data_transform(img)

# expand batch dimension

img = torch.unsqueeze(img, dim=0)

# read class_indict

try:

json_file = open('./class_indices.json', 'r')

class_indict = json.load(json_file)

except Exception as e:

print(e)

exit(-1)

# create model

model = AlexNet(num_classes=5)

# load model weights

model_weight_path = "./AlexNet.pth"

model.load_state_dict(torch.load(model_weight_path))

# 关闭 Dropout

model.eval()

with torch.no_grad():

# predict class

output = torch.squeeze(model(img)) # 将输出压缩,即压缩掉 batch 这个维度

predict = torch.softmax(output, dim=0)

predict_cla = torch.argmax(predict).numpy()

print(class_indict[str(predict_cla)], predict[predict_cla].item())

plt.show()

代码运行结果如下:

这里我故意选了一张预测错误的图片 模型只训练了几轮 准确度也只有0.7左右 错误也是正常的 可以多训练几轮 再用模型预测。

仅供学习交流 如有侵权 联系删除 QQ:1831255835

致谢:

https://www.bilibili.com/video/BV1W7411T7qc/?spm_id_from=333.788&vd_source=e93113e88e71956b3d18f1eb599bf012

https://blog.csdn.net/m0_37867091/article/details/107150142

189

189

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?