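Log collection uses the lightweight Filebeat rather than the heavyweight Logstash. This project's filtering needs are simple, so Filebeat's own processors handle filtering and Logstash is left out entirely. Suggestions for improvement are welcome.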

Prerequisite: a running Kubernetes (k8s) cluster.

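During testing, image pulls sometimes failed. To avoid this, the images are first pulled locally, re-tagged, and pushed to a Harbor registry. You can set up Harbor yourself.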

# Pull
docker pull docker.elastic.co/elasticsearch/elasticsearch:7.17.2
docker pull docker.elastic.co/kibana/kibana:7.17.2
docker pull docker.elastic.co/beats/filebeat:7.17.2

# Tag: replace ${harbor_url} and ${harbor_project} with your own registry address and project
docker tag docker.elastic.co/elasticsearch/elasticsearch:7.17.2 ${harbor_url}/${harbor_project}/elasticsearch:7.17.2
docker tag docker.elastic.co/kibana/kibana:7.17.2 ${harbor_url}/${harbor_project}/kibana:7.17.2
docker tag docker.elastic.co/beats/filebeat:7.17.2 ${harbor_url}/${harbor_project}/filebeat:7.17.2

# Log in to your Harbor server
docker login -u admin -p ${password} ${harbor_url}

# Push to Harbor
docker push ${harbor_url}/${harbor_project}/elasticsearch:7.17.2
docker push ${harbor_url}/${harbor_project}/kibana:7.17.2
docker push ${harbor_url}/${harbor_project}/filebeat:7.17.2
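Every tag command above applies the same rule: keep the image's `name:tag` and replace the registry/namespace prefix with `${harbor_url}/${harbor_project}`. The Python sketch below expresses that rule and prints the pull/tag/push commands without running them. `harbor_ref`, `mirror_commands` and the sample registry `harbor.example.com:8443` / project `efk` are hypothetical, and the `docker login` step is left out.

```python
# Sketch: derive the Harbor reference for each upstream Elastic image.
# harbor_ref(), mirror_commands() and the sample registry/project are hypothetical.
UPSTREAM_IMAGES = [
    "docker.elastic.co/elasticsearch/elasticsearch:7.17.2",
    "docker.elastic.co/kibana/kibana:7.17.2",
    "docker.elastic.co/beats/filebeat:7.17.2",
]


def harbor_ref(upstream: str, harbor_url: str, harbor_project: str) -> str:
    """Keep the last path component (name:tag) and swap the registry/namespace prefix."""
    name_and_tag = upstream.rsplit("/", 1)[-1]
    return f"{harbor_url}/{harbor_project}/{name_and_tag}"


def mirror_commands(harbor_url: str, harbor_project: str) -> list[str]:
    """Return the pull, tag and push command lines in the order used above."""
    cmds = [f"docker pull {img}" for img in UPSTREAM_IMAGES]
    cmds += [f"docker tag {img} {harbor_ref(img, harbor_url, harbor_project)}"
             for img in UPSTREAM_IMAGES]
    cmds += [f"docker push {harbor_ref(img, harbor_url, harbor_project)}"
             for img in UPSTREAM_IMAGES]
    return cmds


if __name__ == "__main__":
    for line in mirror_commands("harbor.example.com:8443", "efk"):
        print(line)
```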

Create a Docker registry secret in the k8s cluster for pulling images from Harbor:

# -n sets the namespace; change it to suit your setup. Here EFK is installed in the kube-system namespace.
kubectl create secret docker-registry harbor-pull-secret-188 --docker-server=${harbor_url} --docker-username=admin --docker-password=${password} -n kube-system
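`kubectl create secret docker-registry` stores the credentials as a `kubernetes.io/dockerconfigjson` Secret. Its `.dockerconfigjson` key holds a Docker `config.json`-style document with an `auths` map. The Python sketch below rebuilds that payload so you can see what the secret contains. The function names and sample credentials are hypothetical, and kubectl may include additional fields (for example an email, if one is given).

```python
import base64
import json


def docker_config_json(server: str, username: str, password: str) -> str:
    """Build a .dockerconfigjson document for a single registry (sketch)."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    payload = {"auths": {server: {"username": username,
                                  "password": password,
                                  "auth": auth}}}
    return json.dumps(payload)


def secret_data_value(server: str, username: str, password: str) -> str:
    """Values under a Secret's .data are themselves base64-encoded."""
    raw = docker_config_json(server, username, password).encode()
    return base64.b64encode(raw).decode()


if __name__ == "__main__":
    print(docker_config_json("harbor.example.com:8443", "admin", "changeme"))
```

To compare this against the real secret, decode it with `kubectl get secret harbor-pull-secret-188 -n kube-system -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d`.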

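Set up Elasticsearch + Kibana

On the master node, create working directories for the configuration files (adjust the location to your environment). The later steps use /mnt/filebeat and /mnt/elasticsearch-kibana:

cd /mnt
mkdir -p filebeat elasticsearch-kibana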

In the elasticsearch-kibana directory, create the configuration file elasticsearch.yml:

cluster.name: my-es
node.name: "node-1"
path.data: /usr/share/elasticsearch/data
#path.logs: /var/log/elasticsearch
bootstrap.memory_lock: false
network.host: 0.0.0.0
http.port: 9200
discovery.seed_hosts: ["127.0.0.1", "[::1]"]
cluster.initial_master_nodes: ["node-1"]
# Added so that the elasticsearch-head plugin can access ES
http.cors.enabled: true
http.cors.allow-origin: "*"
http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type

In the elasticsearch-kibana directory, create the configuration file kibana.yml:

server.port: 5601
server.host: "0.0.0.0"
elasticsearch.hosts: "http://es-kibana-0.es-kibana.kube-system:9200"
kibana.index: ".kibana"
i18n.locale: "zh-CN"

Create ConfigMaps in k8s from the Elasticsearch and Kibana configuration files:

cd /mnt/elasticsearch-kibana
kubectl create configmap es-config -n kube-system --from-file=elasticsearch.yml
kubectl create configmap kibana-config -n kube-system --from-file=kibana.yml
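`--from-file=elasticsearch.yml` uses the file's base name as the ConfigMap key. That is why the StatefulSet later mounts it with `subPath: elasticsearch.yml`. The Python sketch below reproduces that data layout as a plain manifest object (kubectl also accepts JSON manifests). `configmap_from_files` is a hypothetical helper, not part of kubectl, and it only handles UTF-8 text files.

```python
import json
import os
import tempfile


def configmap_from_files(name: str, namespace: str, paths: list[str]) -> dict:
    """Mimic the data layout of `kubectl create configmap --from-file=<file>`:
    each key is the file's base name, each value is its text content."""
    data = {}
    for path in paths:
        with open(path, encoding="utf-8") as fh:
            data[os.path.basename(path)] = fh.read()
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": data,
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cfg = os.path.join(tmp, "kibana.yml")
        with open(cfg, "w", encoding="utf-8") as fh:
            fh.write("server.port: 5601\n")
        manifest = configmap_from_files("kibana-config", "kube-system", [cfg])
        print(json.dumps(manifest, indent=2))
```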

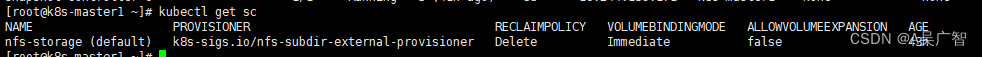

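Check whether a StorageClass already exists:

kubectl get sc

If one exists, skip this step and use its name as storageClassName in es-pvc.yaml below. Otherwise, follow the steps below.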
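To script this check, you can inspect the JSON from `kubectl get sc -o json` for the `storageclass.kubernetes.io/is-default-class` annotation (the same annotation set in storageclass-nfs.yaml below). The sketch below does this. `default_storage_classes` is a hypothetical helper, and the sample input in the test is made up.

```python
import json
import subprocess

DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_storage_classes(sc_list_json: str) -> list[str]:
    """Return the names of StorageClasses annotated as the cluster default."""
    items = json.loads(sc_list_json).get("items", [])
    return [
        sc["metadata"]["name"]
        for sc in items
        if sc.get("metadata", {}).get("annotations", {}).get(DEFAULT_ANNOTATION) == "true"
    ]


if __name__ == "__main__":
    out = subprocess.run(["kubectl", "get", "sc", "-o", "json"],
                         capture_output=True, text=True, check=True).stdout
    print(default_storage_classes(out) or "no default StorageClass")
```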

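Configure the NFS server (every k8s node that mounts the share also needs nfs-utils installed)

# Install
yum -y install nfs-utils

# Create a directory (or reuse an existing one) as the NFS export, then edit /etc/exports
vi /etc/exports

(add the following line)

/mnt *(rw,no_root_squash,sync)

# Apply the configuration and check that it took effect
exportfs -r
exportfs

(expected output:)

/mnt          <world>

# Start rpcbind and nfs, and enable them at boot
systemctl restart rpcbind && systemctl enable rpcbind
systemctl restart nfs && systemctl enable nfs

# Check the RPC service registrations
rpcinfo -p localhost

# Output similar to:
program vers proto   port  service
100000    4   tcp    111  portmapper
100000    3   tcp    111  portmapper
100000    2   tcp    111  portmapper
100000    4   udp    111  portmapper
100000    3   udp    111  portmapper
100000    2   udp    111  portmapper

# Test with showmount
showmount -e 192.168.0.145

# Expected output:
Export list for 192.168.0.145:
/mnt *

# Troubleshooting: if showmount -e 192.168.0.145 fails, restart the services as follows
# Stop them first
systemctl stop rpcbind
systemctl stop nfs
# Then start them again
systemctl start rpcbind
systemctl start nfs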
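When `showmount -e` fails from a client, first check whether the server's rpcbind port (TCP 111) and NFS port (TCP 2049) are reachable at all, for example through a firewall. The Python sketch below does this with plain TCP connects. It does not speak the RPC protocol. It also skips mountd (which showmount also needs), because mountd's port differs between distributions. `port_open` and `check_ports` are hypothetical helpers.

```python
import socket

NFS_PORTS = {"rpcbind": 111, "nfs": 2049}


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_ports(host: str, ports: dict[str, int] = NFS_PORTS) -> dict[str, bool]:
    """Reachability of each named port on host."""
    return {name: port_open(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    print(check_ports("192.168.0.145"))  # NFS server address used in this guide
```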

Create storageclass-nfs.yaml. Change the IP address and directory to your own. The directory must already exist on the NFS server; create it manually if needed, otherwise provisioning fails.

## Creates a StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass  # resource type: storage class
metadata:
  name: nfs-storage  # name of the storage class; choose your own
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"  # whether this is the default storage class. Note: KubeSphere requires a default storage class, so it is set to "true" here
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner  # provisioner name; may be customized, but must match PROVISIONER_NAME in the Deployment below
parameters:
  archiveOnDelete: "true"  ## whether to keep (archive) the PV's data when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1  # run a single replica
  strategy:  # how existing Pods are replaced by new ones
    type: Recreate  # Recreate: delete the old Pod before creating the new one
  selector:  # selects the backing Pods
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner  # the ServiceAccount created below
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2  # NFS provisioner image
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root  # volume defined under volumes below
              mountPath: /persistentvolumes  # mount path inside the container
          env:
            - name: PROVISIONER_NAME  # provisioner name
              value: k8s-sigs.io/nfs-subdir-external-provisioner  # must match the StorageClass provisioner above
            - name: NFS_SERVER  # NFS server address; change it to your NFS server's IP
              value: 192.168.0.145
            - name: NFS_PATH  # directory exported by the NFS server
              value: /mnt/nfs
      volumes:
        - name: nfs-client-root  # must match the volumeMounts name above
          nfs:
            server: 192.168.0.145  # NFS server address; same as above, change it to your IP
            path: /mnt/nfs  # exported NFS directory; same as above
---
apiVersion: v1
kind: ServiceAccount  # ServiceAccount for the provisioner
metadata:
  name: nfs-client-provisioner  # must match serviceAccountName above
  # replace with namespace where provisioner is deployed
  namespace: default
---
# The ClusterRole, ClusterRoleBinding, Role and RoleBinding below are RBAC permission bindings; copy them as-is.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Create the StorageClass:

kubectl apply -f storageclass-nfs.yaml
# Check that it exists
kubectl get sc

Create the PVC for Elasticsearch storage, es-pvc.yaml. The StorageClass provisions the matching PV automatically:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-pv-claim
  namespace: kube-system
  labels:
    app: es
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: "nfs-storage"
  resources:
    requests:
      storage: 200Gi

Create the PVC:

kubectl apply -f es-pvc.yaml
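With nfs-subdir-external-provisioner, each provisioned volume becomes a subdirectory of NFS_PATH on the server, named `<namespace>-<pvc name>-<pv name>` by default. With `archiveOnDelete: "true"`, the directory is kept under an `archived-` prefix instead of being deleted. The exact archived name is our reading of the provisioner's behavior, so treat it as an assumption. The sketch below computes where the es-pv-claim data should appear. The PV name is generated by Kubernetes (`pvc-<uid>`), so the UID in the sketch is a placeholder.

```python
import posixpath

NFS_PATH = "/mnt/nfs"  # NFS_PATH from the provisioner Deployment


def provisioned_dir(namespace: str, pvc_name: str, pv_name: str,
                    base: str = NFS_PATH) -> str:
    """Default directory layout: <base>/<namespace>-<pvc>-<pv>."""
    return posixpath.join(base, f"{namespace}-{pvc_name}-{pv_name}")


def archived_dir(active_dir: str) -> str:
    """Assumed name after deletion with archiveOnDelete "true": 'archived-' prefix."""
    parent, leaf = posixpath.split(active_dir)
    return posixpath.join(parent, f"archived-{leaf}")


if __name__ == "__main__":
    # Placeholder PV name; read the real one from `kubectl get pvc -n kube-system`.
    pv = "pvc-00000000-0000-0000-0000-000000000000"
    active = provisioned_dir("kube-system", "es-pv-claim", pv)
    print(active)
    print(archived_dir(active))
```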

Create the manifest for Elasticsearch and Kibana, es-statefulset.yaml. Both containers run in the same Pod:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: es-kibana
  name: es-kibana
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: es-kibana
  serviceName: "es-kibana"
  template:
    metadata:
      labels:
        app: es-kibana
    spec:
      imagePullSecrets:
        - name: harbor-pull-secret-188
      containers:
        - image: harbor.wuliuhub.com:8443/deerchain/elasticsearch:7.17.2
          imagePullPolicy: IfNotPresent
          name: elasticsearch
          lifecycle:
            # Note: sysctl -w only succeeds in a privileged container; otherwise set vm.max_map_count=262144 on the node itself
            postStart:
              exec:
                command: [ "/bin/bash", "-c", "sysctl -w vm.max_map_count=262144; ulimit -l unlimited;chown -R elasticsearch:elasticsearch /usr/share/elasticsearch/data;" ]
          resources:
            requests:
              memory: "800Mi"
              cpu: "800m"
            limits:
              memory: "1Gi"
              cpu: "1000m"
          volumeMounts:
            - name: es-config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              subPath: elasticsearch.yml
            - name: es-persistent-storage
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: TZ
              value: Asia/Shanghai
        - image: harbor.wuliuhub.com:8443/deerchain/kibana:7.17.2
          imagePullPolicy: IfNotPresent
          name: kibana
          env:
            - name: TZ
              value: Asia/Shanghai
          volumeMounts:
            - name: kibana-config
              mountPath: /usr/share/kibana/config/kibana.yml
              subPath: kibana.yml
      volumes:
        - name: es-config
          configMap:
            name: es-config
        - name: kibana-config
          configMap:
            name: kibana-config
        - name: es-persistent-storage
          persistentVolumeClaim:
            claimName: es-pv-claim
      #hostNetwork: true
      #dnsPolicy: ClusterFirstWithHostNet
      # Control-plane nodes are usually tainted NoSchedule; remove the taint or add a toleration if the Pod stays Pending
      nodeSelector:
        kubernetes.io/hostname: k8s-master1
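Because `network.host` is 0.0.0.0, Elasticsearch enforces its bootstrap checks. One of them requires `vm.max_map_count` of at least 262144 on the node running the Pod, and the postStart `sysctl -w` only works in a privileged container. The Python sketch below verifies the value on the node (here k8s-master1) before you create the StatefulSet. The function names are hypothetical.

```python
from pathlib import Path

ES_MIN_MAX_MAP_COUNT = 262144  # minimum required by Elasticsearch's bootstrap check


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> int:
    """Read the kernel setting (run this on the node, not inside the Pod)."""
    return int(Path(path).read_text().strip())


def max_map_count_ok(value: int) -> bool:
    return value >= ES_MIN_MAX_MAP_COUNT


if __name__ == "__main__":
    current = read_max_map_count()
    if max_map_count_ok(current):
        print(f"vm.max_map_count={current}: OK")
    else:
        print(f"vm.max_map_count={current}: too low, run "
              f"`sysctl -w vm.max_map_count={ES_MIN_MAX_MAP_COUNT}` on the node")
```

To keep the value across reboots, also add `vm.max_map_count=262144` to /etc/sysctl.conf on that node.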

Create the es-kibana application:

kubectl create -f es-statefulset.yaml
# Check the Pod
kubectl get pod -o wide -n kube-system|grep es
# Use curl to test whether Elasticsearch is working
[root@k8s-master1 ~]# kubectl get pod -o wide -n kube-system|grep es
es-kibana-0   2/2   Running   2 (42h ago)   43h   10.244.159.134   k8s-master1   <none>   <none>
[root@k8s-master1 ~]# curl 10.244.159.134:9200
{
  "name" : "node-1",
  "cluster_name" : "my-es",
  "cluster_uuid" : "0ZLWYBf_QY-iR7YwnnoQIA",
  "version" : {
    "number" : "7.17.2",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "de7261de50d90919ae53b0eff9413fd7e5307301",
    "build_date" : "2022-03-28T15:12:21.446567561Z",
    "build_snapshot" : false,
    "lucene_version" : "8.11.1",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
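The root endpoint only shows that Elasticsearch answers. `GET /_cluster/health` also reports the cluster status. On this single-node cluster the status can be `yellow`, because indices created with the default of one replica per shard cannot place that replica on the same node. The Python sketch below queries the status using only the standard library. The Pod IP comes from the output above and will differ in your cluster, and the helper names are hypothetical.

```python
import json
import urllib.request

ACCEPTABLE = {"green", "yellow"}  # yellow: replicas unassigned, expected on one node


def parse_status(body: str) -> str:
    """Extract the 'status' field from a _cluster/health response body."""
    return json.loads(body)["status"]


def cluster_status(base_url: str, timeout: float = 5.0) -> str:
    with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=timeout) as resp:
        return parse_status(resp.read().decode("utf-8"))


if __name__ == "__main__":
    status = cluster_status("http://10.244.159.134:9200")
    print(status, "OK" if status in ACCEPTABLE else "needs attention")
```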

Create a headless ClusterIP Service for es-kibana, es-cluster-none-svc.yaml. The StatefulSet's serviceName refers to it, and it provides the DNS name es-kibana-0.es-kibana.kube-system used in kibana.yml:

apiVersion: v1
kind: Service
metadata:
  labels:
    app: es-kibana
  name: es-kibana
  namespace: kube-system
spec:
  ports:
    - name: es9200
      port: 9200
      protocol: TCP
      targetPort: 9200
    - name: es9300
      port: 9300
      protocol: TCP
      targetPort: 9300
  clusterIP: None
  selector:
    app: es-kibana
  type: ClusterIP

Create it:

kubectl apply -f es-cluster-none-svc.yaml
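The headless Service is what makes the hostname `es-kibana-0.es-kibana.kube-system` resolvable. A StatefulSet Pod named `<statefulset>-<ordinal>` gets the DNS record `<pod>.<service>.<namespace>.svc.<cluster domain>`, and a Pod's DNS search path fills in the `.svc.<cluster domain>` suffix when you use the short form. The Python sketch below builds both names. `cluster.local` is the common default cluster domain and is an assumption here.

```python
def statefulset_pod_fqdn(sts: str, ordinal: int, service: str, namespace: str,
                         cluster_domain: str = "cluster.local") -> str:
    """Full DNS name of a StatefulSet Pod behind a headless Service."""
    return f"{sts}-{ordinal}.{service}.{namespace}.svc.{cluster_domain}"


def short_name(fqdn: str) -> str:
    """The form used in this guide: <pod>.<service>.<namespace>."""
    pod, service, namespace = fqdn.split(".")[:3]
    return f"{pod}.{service}.{namespace}"


if __name__ == "__main__":
    fqdn = statefulset_pod_fqdn("es-kibana", 0, "es-kibana", "kube-system")
    print(fqdn)
    print(short_name(fqdn))
```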

For convenient access from outside the cluster, create a NodePort Service, es-nodeport-svc.yaml:

apiVersion: v1
kind: Service
metadata:
  labels:
    app: es-kibana
  name: es-kibana-nodeport-svc
  namespace: kube-system
spec:
  ports:
    - name: 9200-9200
      port: 9200
      protocol: TCP
      targetPort: 9200
      # nodePort must lie in the node port range (30000-32767 by default); left unset so Kubernetes assigns one
      #nodePort: 9200
    - name: 5601-5601
      port: 5601
      protocol: TCP
      targetPort: 5601
      #nodePort: 5601
  selector:
    app: es-kibana
  type: NodePort

Create it:

kubectl apply -f es-nodeport-svc.yaml
# Check
[root@k8s-master1 ~]# kubectl get svc -n kube-system|grep es-kibana
es-kibana                ClusterIP   None            <none>        9200/TCP,9300/TCP               7d19h
es-kibana-nodeport-svc   NodePort    192.168.0.122   <none>        9200:30148/TCP,5601:32337/TCP   7d19h

Access Kibana at <node IP>:<node port>. In this example Kibana's node port is 32337 (Elasticsearch's is 30148).

If the Kibana page loads normally, the setup is working.
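You can read the node port for each Service port off the `PORT(S)` column, which has the form `<port>:<nodePort>/<protocol>`. The Python sketch below extracts the ports from that column text. `node_ports` and `kibana_url` are hypothetical helpers, and `192.0.2.10` is a placeholder node IP. Alternatively, `kubectl get svc es-kibana-nodeport-svc -n kube-system -o jsonpath='{.spec.ports[?(@.port==5601)].nodePort}'` prints Kibana's node port directly.

```python
def node_ports(ports_column: str) -> dict[int, int]:
    """Map service port -> node port from a PORT(S) cell such as
    '9200:30148/TCP,5601:32337/TCP'. Entries without a node port are skipped."""
    mapping = {}
    for entry in ports_column.split(","):
        port_part = entry.split("/", 1)[0]  # drop the '/TCP' suffix
        if ":" in port_part:
            port, node_port = port_part.split(":", 1)
            mapping[int(port)] = int(node_port)
    return mapping


def kibana_url(node_ip: str, ports_column: str) -> str:
    return f"http://{node_ip}:{node_ports(ports_column)[5601]}"


if __name__ == "__main__":
    cell = "9200:30148/TCP,5601:32337/TCP"  # from the kubectl output above
    print(kibana_url("192.0.2.10", cell))  # placeholder node IP
```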

Create the Filebeat service

In the filebeat directory, create filebeat.yml:

max_procs: 1  # limit Filebeat to one CPU core so it does not take resources from the business workloads
queue.mem.events: 2048  # number of events buffered in the in-memory queue before sending (default 4096)
queue.mem.flush.min_events: 1536  # keep it below queue.mem.events; larger values increase throughput (default 2048)

filebeat.inputs:
  - type: log
    ignore_older: 48h  # ignore files not modified within this period (based on modification time)
    max_bytes: 20480  # maximum size of a single log event; setting a limit is recommended (default 10MB; queue.mem.events * max_bytes accounts for part of the memory usage)
    recursive_glob.enabled: true  # expand ** in paths across up to 8 directory levels: /A/**/*.log => /A/*.log ~ /A/**/**/**/**/**/**/**/**/*.log
    fields_under_root: true  # put added fields at the top level of the JSON document
    tail_files: false  # if true, new files are read from the end; with log rotation this can lose lines, so keep it false and rely on ignore_older
    scan_frequency: 10s
    backoff: 1s
    max_backoff: 10s
    backoff_factor: 2
    enabled: true
    paths:
      - /mnt/log/*/log_error.log
    multiline:  # multiline handling: lines that do not begin with a timestamp are merged into the previous event, which keeps Java stack traces together
      pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'  # matches the timestamp at the start of a Java log line
      negate: true  # invert the match: lines that do NOT match the pattern are continuation lines (default false)
      match: after  # append continuation lines after the preceding matching line
      max_lines: 200  # maximum number of lines per multiline event; extra lines are discarded (default 500)
      timeout: 1s  # after this time, flush the pending event even if the next one has not started (default 5s)

processors:
  - drop_fields:
      fields: ["input", "host", "agent", "ecs"]

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 3  # only applies when Filebeat loads its index template (disabled below)

setup.kibana:

output.elasticsearch:
  hosts: ["http://es-kibana-0.es-kibana.kube-system:9200"]
  index: "deerchain-all-error-log-%{+yyyy.MM.dd}"  # used when no rule below matches
  indices:
    - index: "deerchain-allinpay-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-allinpay/log_error.log"
    - index: "deerchain-app-member-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-app-member/log_error.log"
    - index: "deerchain-attachment-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-attachment/log_error.log"
    - index: "deerchain-change-data-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-change-data/log_error.log"
    - index: "deerchain-contract-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-contract/log_error.log"
    - index: "deerchain-enterprise-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-enterprise/log_error.log"
    - index: "deerchain-goods-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-goods/log_error.log"
    - index: "deerchain-invoice-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-invoice/log_error.log"
    - index: "deerchain-manager-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-manager/log_error.log"
    - index: "deerchain-member-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-member/log_error.log"
    - index: "deerchain-message-push-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-message-push/log_error.log"
    - index: "deerchain-orders-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-orders/log_error.log"
    - index: "deerchain-panel-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-panel/log_error.log"
    - index: "deerchain-platform-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-platform/log_error.log"
    - index: "deerchain-settlement-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-settlement/log_error.log"
    - index: "deerchain-system-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-system/log_error.log"
    - index: "deerchain-taxation-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-taxation/log_error.log"
    - index: "deerchain-web-enterprise-error-log-%{+yyyy.MM.dd}"
      when.contains:
        log.file.path: "/mnt/log/deerchain-web-enterprise/log_error.log"

setup.template.enabled: false
setup.template.overwrite: true
setup.ilm.enabled: false
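The multiline settings combine as follows. With `negate: true` and `match: after`, every line that does not start with a timestamp is appended to the most recent line that does, so a Java stack trace stays in a single event. The Python sketch below reproduces that grouping with the same regular expression (Python's `re` treats `\d` and `\s` the same way as Filebeat's Go regexp for these inputs). It models only `pattern`, `negate`, `match: after` and `max_lines`, not the `timeout` flush. `group_multiline` is a hypothetical name, and the sample log lines are made up.

```python
import re

PATTERN = re.compile(r"^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}")


def group_multiline(lines, pattern=PATTERN, max_lines=200):
    """Group lines the way multiline with negate: true, match: after does.

    A line matching `pattern` starts a new event; any other line is appended
    to the current event. Lines beyond max_lines in one event are dropped."""
    events, current = [], []
    for line in lines:
        if pattern.match(line) and current:
            events.append("\n".join(current))  # a new timestamp closes the previous event
            current = []
        if len(current) < max_lines:
            current.append(line)
    if current:
        events.append("\n".join(current))
    return events


if __name__ == "__main__":
    sample = [
        "2022-04-20 10:15:01.123 ERROR [orders] Failed to create order",
        "java.lang.NullPointerException: null",
        "\tat com.example.OrderService.create(OrderService.java:42)",
        "2022-04-20 10:15:02.456 ERROR [orders] Retry failed",
    ]
    for event in group_multiline(sample):
        print("---")
        print(event)
```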

Create the Filebeat ConfigMap from the filebeat directory:

kubectl create configmap filebeat-config -n kube-system --from-file=filebeat.yml
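In `output.elasticsearch`, the `indices` rules are checked in order and the first matching rule picks the index. Events that match no rule go to the `index` setting. Because `when.contains` is a substring test, a shorter value could match more paths than intended. The Python sketch below mirrors that selection with only two of the rules. `pick_index` is a hypothetical name, and formatting the date in UTC for `%{+yyyy.MM.dd}` is an assumption based on Filebeat using the event's `@timestamp`.

```python
from datetime import datetime, timezone

DEFAULT_INDEX = "deerchain-all-error-log-{date}"
# (substring looked for in log.file.path, index template), in configuration order
RULES = [
    ("/mnt/log/deerchain-allinpay/log_error.log", "deerchain-allinpay-error-log-{date}"),
    ("/mnt/log/deerchain-app-member/log_error.log", "deerchain-app-member-error-log-{date}"),
]


def pick_index(file_path: str, ts: datetime, rules=RULES, default=DEFAULT_INDEX) -> str:
    """The first rule whose substring occurs in the path wins; otherwise use the default."""
    date = ts.astimezone(timezone.utc).strftime("%Y.%m.%d")  # %{+yyyy.MM.dd}, assumed UTC
    for needle, template in rules:
        if needle in file_path:  # when.contains is a substring match
            return template.format(date=date)
    return default.format(date=date)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    print(pick_index("/mnt/log/deerchain-allinpay/log_error.log", now))
    print(pick_index("/mnt/log/deerchain-unknown/log_error.log", now))
```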

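Next, create the Filebeat workload. Two variants are described. In the first, all logs are written (through NFS) to a directory on a single node, so Filebeat only needs to run on that node. In the second, Kubernetes logs are collected from every node, so Filebeat must run on every node.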

Variant 1: filebeat-daemonset-node1.yaml (despite its name, it is a single-replica StatefulSet pinned to one node)

apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: filebeat
  name: filebeat
  namespace: kube-system
spec:
  replicas: 1
  serviceName: "filebeat"
  selector:
    matchLabels:
      app: filebeat
  template:
    metadata:
      labels:
        app: filebeat
    spec:
      imagePullSecrets:
        - name: harbor-pull-secret-188
      containers:
        - image: harbor.wuliuhub.com:8443/deerchain/filebeat:7.17.2
          imagePullPolicy: IfNotPresent
          name: filebeat
          volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: k8s-system-logs
              mountPath: /var/log/containers/
            - name: varlogpods
              mountPath: /var/log/pods/
            - name: dockercontainers
              mountPath: /mnt/run/docker/containers/
            - name: mntlog
              mountPath: /mnt/log/
          # Start Filebeat with the mounted configuration file
          args: ["-c", "/etc/filebeat.yml", "-e"]
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 500Mi
          # Run the container as UID 0, i.e. root.
          # If unset, the container runs as the image's default user (filebeat), which lacks permission to read the log files.
          # This also makes `kubectl exec` sessions in the container run as root.
          securityContext:
            runAsUser: 0
          env:
            - name: TZ
              value: "CST-8"
      volumes:
        - name: filebeat-config
          configMap:
            name: filebeat-config
        # Mount the host's log directories into the container
        - name: k8s-system-logs
          hostPath:
            path: /var/log/containers/
            type: ""
        - name: varlogpods
          hostPath:
            path: /var/log/pods/
            type: ""
        - name: dockercontainers
          hostPath:
            path: /mnt/run/docker/containers/
            type: ""
        - name: mntlog
          hostPath:
            path: /mnt/log/
            type: ""
      nodeSelector:
        kubernetes.io/hostname: k8s-node1  # run on this node only

Apply it:

kubectl apply -f filebeat-daemonset-node1.yaml
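A rough sanity check of the 500Mi memory limit in the manifest above against the queue settings in filebeat.yml: the in-memory queue holds at most `queue.mem.events` events, and `max_bytes` caps each event's message, so their product bounds the queued message payload. This covers only one component of memory use (it ignores per-event field overhead, harvester buffers and the Go runtime), so treat it as an estimate, not a measurement.

```python
MiB = 1024 * 1024

QUEUE_MEM_EVENTS = 2048      # queue.mem.events
MAX_BYTES = 20480            # max_bytes per event (20 KiB)
MEMORY_LIMIT = 500 * MiB     # resources.limits.memory: 500Mi


def queue_payload_bound(events: int, max_bytes: int) -> int:
    """Upper bound on message bytes held in the memory queue."""
    return events * max_bytes


if __name__ == "__main__":
    bound = queue_payload_bound(QUEUE_MEM_EVENTS, MAX_BYTES)
    print(f"queue payload bound: {bound / MiB:.0f} MiB "
          f"({bound / MEMORY_LIMIT:.0%} of the 500Mi limit)")
    # With the defaults (4096 events, 10 MiB max_bytes) the bound would be 40 GiB.
    print(f"defaults: {queue_payload_bound(4096, 10 * MiB) / (1024 * MiB):.0f} GiB")
```

With the values used here the bound is 40 MiB, well under the limit. With Filebeat's defaults the same product would be 40 GiB, which is why lowering `max_bytes` matters.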

Variant 2: to collect the Kubernetes logs on every node, Filebeat has to run on every node, so create a DaemonSet, filebeat-daemonset.yaml. Note that the filebeat.yml above only reads /mnt/log/*/log_error.log. To actually ship container logs from /var/log/containers/, you must also add a matching input to filebeat.yml.

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: filebeat
  name: filebeat
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: filebeat
  template:
    metadata:
      labels:
        app: filebeat
    spec:
      imagePullSecrets:
        - name: harbor-pull-secret-188
      containers:
        - image: harbor.wuliuhub.com:8443/deerchain/filebeat:7.17.2
          imagePullPolicy: IfNotPresent
          name: filebeat
          volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: k8s-system-logs
              mountPath: /var/log/containers/
            - name: varlogpods
              mountPath: /var/log/pods/
            - name: dockercontainers
              mountPath: /mnt/run/docker/containers/
            - name: mntlog
              mountPath: /mnt/log/
          # Start Filebeat with the mounted configuration file
          args: ["-c", "/etc/filebeat.yml", "-e"]
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 500Mi
          # Run the container as UID 0, i.e. root.
          # If unset, the container runs as the image's default user (filebeat), which lacks permission to read the log files.
          # This also makes `kubectl exec` sessions in the container run as root.
          securityContext:
            runAsUser: 0
          env:
            - name: TZ
              value: "CST-8"
      volumes:
        - name: filebeat-config
          configMap:
            name: filebeat-config
        # Mount the host's log directories into the container
        - name: k8s-system-logs
          hostPath:
            path: /var/log/containers/
            type: ""
        - name: varlogpods
          hostPath:
            path: /var/log/pods/
            type: ""
        - name: dockercontainers
          hostPath:
            path: /mnt/run/docker/containers/
            type: ""
        - name: mntlog
          hostPath:
            path: /mnt/log/
            type: ""

Apply it:

kubectl apply -f filebeat-daemonset.yaml
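Both Filebeat manifests set `TZ` to the POSIX string `CST-8`, while the Elasticsearch and Kibana containers use `Asia/Shanghai`. In a POSIX TZ string the sign is inverted (`CST-8` means local time is UTC+8), so both settings give the same +08:00 offset, although `CST-8` carries no zone history. The Python sketch below checks the offset a TZ value produces. It is Unix-only because it relies on `time.tzset`, and `utc_offset_seconds` is a hypothetical name.

```python
import os
import time


def utc_offset_seconds(tz: str) -> int:
    """UTC offset (seconds east of UTC) that the C library derives from a TZ value."""
    old = os.environ.get("TZ")
    try:
        os.environ["TZ"] = tz
        time.tzset()
        return time.localtime().tm_gmtoff
    finally:
        # Restore the previous TZ so the rest of the process is unaffected.
        if old is None:
            os.environ.pop("TZ", None)
        else:
            os.environ["TZ"] = old
        time.tzset()


if __name__ == "__main__":
    for tz in ("CST-8", "Asia/Shanghai"):  # Asia/Shanghai needs tzdata installed
        print(tz, utc_offset_seconds(tz) / 3600)
```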

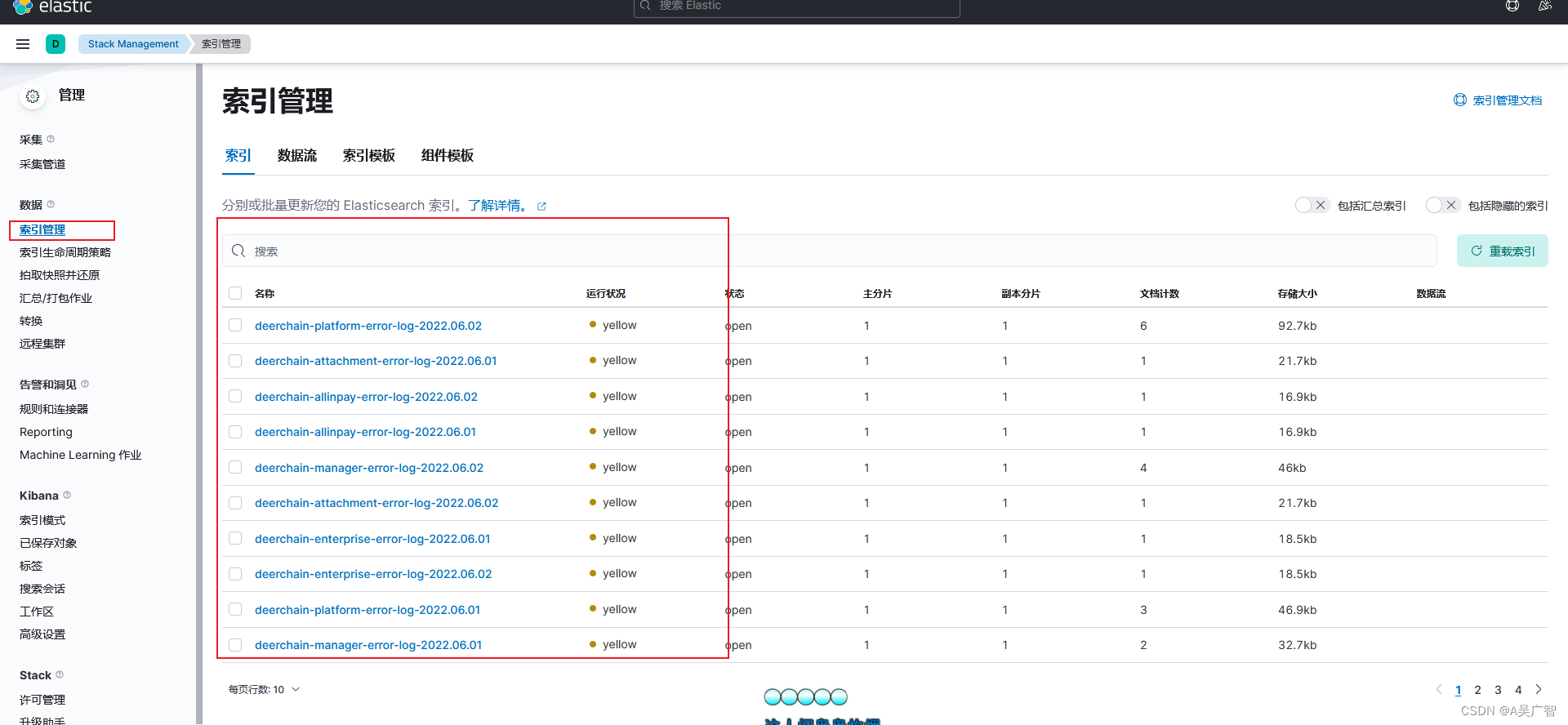

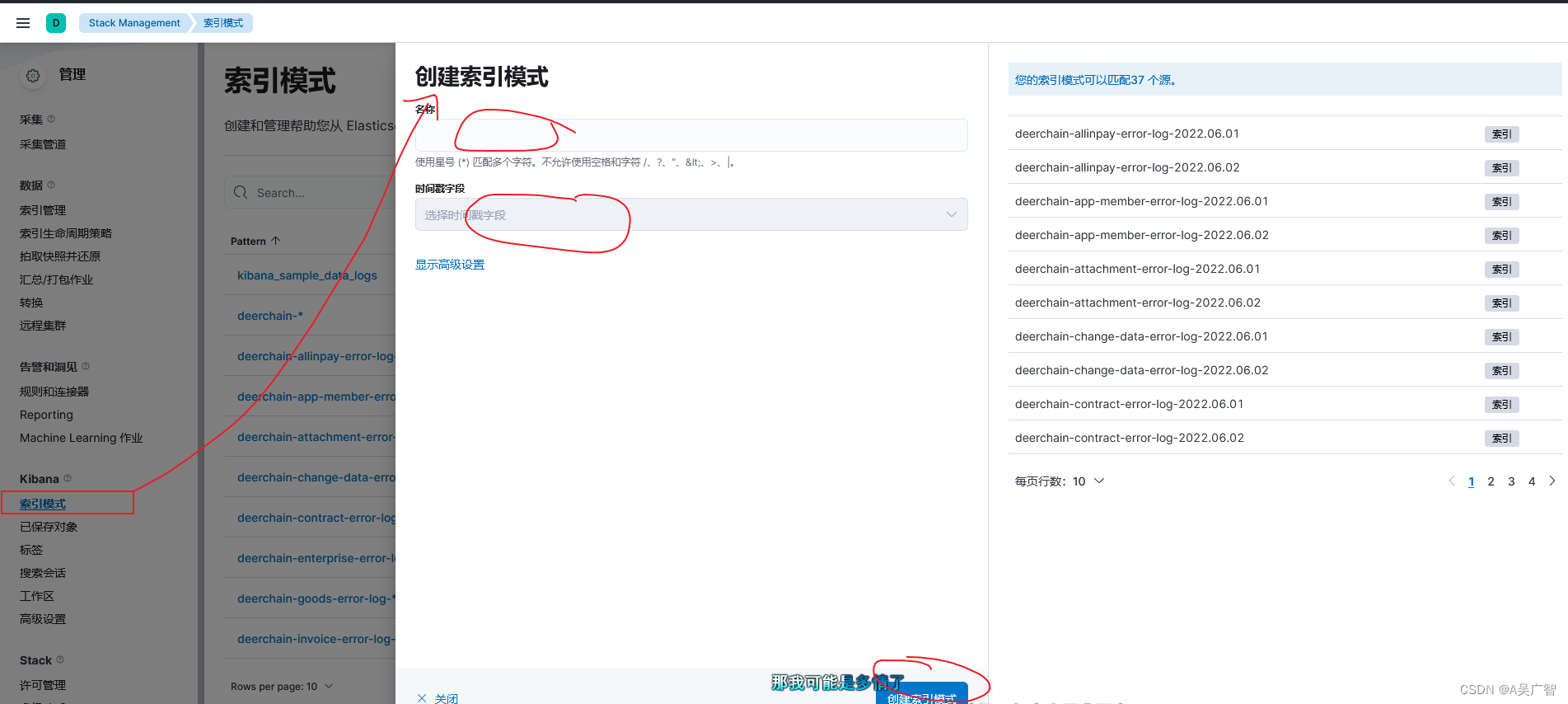

Add an index pattern in Kibana.

Check that the indices are listed correctly.

Create the index pattern.
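You can also create the index pattern through Kibana's HTTP API instead of the UI. Kibana 7.x provides `POST /api/index_patterns/index_pattern`, which requires a `kbn-xsrf` header on write requests. The Python sketch below builds such a request. Port 32337 is the Kibana node port from the Service output above. The node IP `192.0.2.10` and the `deerchain-*` title are assumptions, and the request is sent only when you run the script.

```python
import json
import urllib.request


def index_pattern_request(kibana_url: str, title: str,
                          time_field: str = "@timestamp") -> urllib.request.Request:
    """Build a POST request that creates an index pattern (not sent here)."""
    body = {"index_pattern": {"title": title, "timeFieldName": time_field}}
    return urllib.request.Request(
        f"{kibana_url}/api/index_patterns/index_pattern",
        data=json.dumps(body).encode("utf-8"),
        headers={"kbn-xsrf": "true", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = index_pattern_request("http://192.0.2.10:32337", "deerchain-*")  # hypothetical node IP
    with urllib.request.urlopen(req, timeout=10) as resp:
        print(resp.status, resp.read().decode("utf-8")[:200])
```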

The result looks like this:
