I. Data Overview

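I recently came across AutoML and learned about two frameworks, so I picked a dataset from Kaggle to try things out. (Why Kaggle? Because the compute resources it gives every user are just too good to pass up.)

The link is right here, but I suspect many people will not click it, so I have also put the key information about the dataset below: www.kaggle.com/datasets/rsrishav/patient-survival-after-one-year-of-treatment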

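1. Data source: a hospital in the province of Greenland has been trying to improve its care conditions by looking at the historical survival of its patients. They tried looking at their data but could not identify the main factors leading to high survival.

2. Goal: the dataset contains patient records collected from that hospital. The Survived_1_year column is the target variable with binary entries (0 or 1). Survived_1_year == 0 means the patient did not survive one year after treatment; Survived_1_year == 1 means the patient survived one year after treatment.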

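3. Data description:

ID_Patient_Care_Situation: the patient's care situation during treatment

Diagnosed_Condition: the patient's diagnosed condition

ID_Patient: patient identification number

Treated_with_drugs: the class of drugs used during treatment

Survived_1_year: whether the patient survived after one year (0 means did not survive; 1 means survived)

Patient_Age: the patient's age

Patient_Body_Mass_Index: a value calculated from the patient's weight, height, etc.

Patient_Smoker: whether the patient smokes

Patient_Rural_Urban: whether the patient lives in a rural or an urban area of the country

Previous_Condition: the patient's condition before treatment started. This variable is split into 8 columns: A, B, C, D, E, F, Z and Number_of_prev_cond. A, B, C, D, E, F and Z are the patient's previous conditions. If the entry in column A is 1, the patient previously had condition A; if the patient did not have it, the entry is 0, and likewise for the other conditions. If a patient's previous conditions are A and C, columns A and C both contain 1, while columns B, D, E, F and Z all contain 0. Number_of_prev_cond is then 2, i.e. 1 + 0 + 1 + 0 + 0 + 0 + 0 = 2.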
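To make the relationship between the seven flag columns and Number_of_prev_cond concrete, here is a minimal sketch. The rows are made up for illustration and are not taken from the dataset; the only thing it assumes is that the count is the row-wise sum of the flags, as the description above states.

```python
import pandas as pd

# Toy rows (made up for illustration, not taken from the dataset).
toy = pd.DataFrame(
    {
        "A": [1, 0, 1],
        "B": [0, 0, 1],
        "C": [1, 0, 0],
        "D": [0, 0, 0],
        "E": [0, 1, 0],
        "F": [0, 0, 0],
        "Z": [0, 0, 0],
    }
)
flag_cols = ["A", "B", "C", "D", "E", "F", "Z"]

# The row-wise sum of the seven flags gives the number of previous conditions.
toy["Number_of_prev_cond"] = toy[flag_cols].sum(axis=1)
print(toy)
```

The first toy row is the "A and C" patient from the description, so its count comes out as 2.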

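II. Feature Engineering

1. Inspecting the data

# This is Kaggle's boilerplate for listing the input directory; if you downloaded the data locally, you can skip this step.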

import numpy as np

import pandas as pd

import warnings

warnings.filterwarnings('ignore')

# Why import warnings and silence them? There were so many warnings that they hurt my eyes.

import os

for dirname, _, filenames in os.walk('/kaggle/input'):
    for filename in filenames:
        print(os.path.join(dirname, filename))

# Read the data

train = pd.read_csv("/kaggle/input/patient-survival-after-one-year-of-treatment/Training_set_intermediate.csv")

test = pd.read_csv("/kaggle/input/patient-survival-after-one-year-of-treatment/Testing_set_intermediate.csv")

advance_train_df = pd.read_csv("/kaggle/input/patient-survival-after-one-year-of-treatment/Training_set_advance.csv")

advance_test_df = pd.read_csv("/kaggle/input/patient-survival-after-one-year-of-treatment/Testing_set_advance.csv")

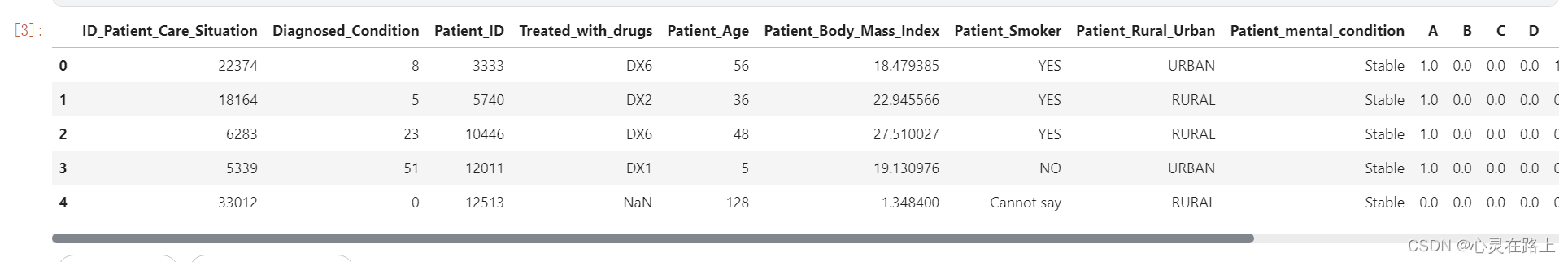

Nothing important here, just a quick look at what the data looks like and how it is structured.

train.head()

test.head()

train.isnull().sum()

train.shape, test.shape

test.isnull().sum()
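The raw output of isnull().sum() gets long on wide tables, so a compact summary can be easier to read. The sketch below is one way to do it on a made-up frame; the column values and the names n_missing and pct_missing are my own choices for the demo.

```python
import numpy as np
import pandas as pd

# Made-up frame with a few missing entries (not the real data).
toy = pd.DataFrame(
    {
        "A": [1.0, np.nan, 0.0, 1.0],
        "Patient_Smoker": ["YES", "NO", None, "NO"],
        "Patient_Age": [30, 41, 56, 62],
    }
)

missing = toy.isnull().sum()
summary = pd.DataFrame(
    {
        "n_missing": missing,
        "pct_missing": (missing / len(toy) * 100).round(1),
    }
)
# Keep only the columns that actually have missing values.
summary = summary[summary["n_missing"] > 0].sort_values("n_missing", ascending=False)
print(summary)
```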

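2. Processing the data

Don't ask why the code is written out longhand instead of with a loop: copy and paste was simply convenient…

# Create one flag column per drug code (DX1 to DX5) marking whether Treated_with_drugs contains it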

train['DX1']=train.Treated_with_drugs.str.contains('DX1')

train['DX2']=train.Treated_with_drugs.str.contains('DX2')

train['DX3']=train.Treated_with_drugs.str.contains('DX3')

train['DX4']=train.Treated_with_drugs.str.contains('DX4')

train['DX5']=train.Treated_with_drugs.str.contains('DX5')

test['DX1']=test.Treated_with_drugs.str.contains('DX1')

test['DX2']=test.Treated_with_drugs.str.contains('DX2')

test['DX3']=test.Treated_with_drugs.str.contains('DX3')

test['DX4']=test.Treated_with_drugs.str.contains('DX4')

test['DX5']=test.Treated_with_drugs.str.contains('DX5')
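For anyone who prefers the loop version, here is a small sketch of the same idea on toy data. The drug strings are made up and assume the column holds space-separated codes; na=False is my addition, so that a missing drug entry becomes 0 instead of NaN.

```python
import numpy as np
import pandas as pd

# Made-up values in the style of Treated_with_drugs (assumed: space-separated drug codes).
toy = pd.DataFrame({"Treated_with_drugs": ["DX1 DX2", "DX6", "DX3 DX4 DX5", np.nan]})

drug_codes = ["DX1", "DX2", "DX3", "DX4", "DX5"]
for code in drug_codes:
    # na=False turns a missing drug entry into False instead of NaN.
    toy[code] = toy["Treated_with_drugs"].str.contains(code, na=False).astype(int)

print(toy)
```

With flags built this way, the later fillna(0) on the DX columns is no longer needed, because no NaN is produced in the first place.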

# Fill missing values in the previous-condition flags with each column's mode.
# Assigning the result back (instead of a chained inplace=True) also works on newer pandas versions.
train['A'] = train['A'].fillna(train['A'].mode()[0])
train['B'] = train['B'].fillna(train['B'].mode()[0])
train['C'] = train['C'].fillna(train['C'].mode()[0])
train['D'] = train['D'].fillna(train['D'].mode()[0])
train['E'] = train['E'].fillna(train['E'].mode()[0])
train['F'] = train['F'].fillna(train['F'].mode()[0])
train['Z'] = train['Z'].fillna(train['Z'].mode()[0])
test['A'] = test['A'].fillna(test['A'].mode()[0])
test['B'] = test['B'].fillna(test['B'].mode()[0])
test['C'] = test['C'].fillna(test['C'].mode()[0])
test['D'] = test['D'].fillna(test['D'].mode()[0])
test['E'] = test['E'].fillna(test['E'].mode()[0])
test['F'] = test['F'].fillna(test['F'].mode()[0])
test['Z'] = test['Z'].fillna(test['Z'].mode()[0])
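A common variation on the fill above is to learn the mode from the training data only and reuse it for the test data, so both sets are imputed with the same values. This is not what the code in this post does (it uses each set's own mode); the sketch below just shows the alternative on made-up frames.

```python
import numpy as np
import pandas as pd

# Made-up frames standing in for the flag columns of train and test.
toy_train = pd.DataFrame({"A": [1.0, 1.0, 0.0, np.nan], "B": [0.0, np.nan, 0.0, 1.0]})
toy_test = pd.DataFrame({"A": [np.nan, 0.0], "B": [1.0, np.nan]})

# Learn the most frequent value of each column from the training data only.
modes = toy_train.mode().iloc[0]

# fillna with a Series fills each column using the entry with the matching name.
toy_train = toy_train.fillna(modes)
toy_test = toy_test.fillna(modes)

print(modes.to_dict())
print(toy_test)
```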

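Next, process the two datasets and keep only the features we want; useless features can be dropped.

- Same story here: I originally picked the columns one by one, and only afterwards realized how many there were. I did not feel like changing it, so if you want something more compact, you can simply drop the unused columns instead.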

df = train[['Diagnosed_Condition', 'Patient_Age', 'Patient_Body_Mass_Index', 'Patient_Smoker',
            'Patient_Rural_Urban', 'Patient_mental_condition',
            'A', 'B', 'C', 'D', 'E', 'F', 'Z',
            'Number_of_prev_cond', 'Survived_1_year',
            'DX1', 'DX2', 'DX3', 'DX4', 'DX5']]

df_test = test[['Diagnosed_Condition', 'Patient_Age', 'Patient_Body_Mass_Index', 'Patient_Smoker',
                'Patient_Rural_Urban', 'Patient_mental_condition',
                'A', 'B', 'C', 'D', 'E', 'F', 'Z',
                'Number_of_prev_cond',
                'DX1', 'DX2', 'DX3', 'DX4', 'DX5']]

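# Encode the categorical columns; get_dummies performs one-hot encoding (this is not normalization)
cat_cols = ["Patient_Smoker", "Patient_Rural_Urban", "Patient_mental_condition"]
df = pd.get_dummies(df, columns=cat_cols)
# The test set needs the same encoding, otherwise its columns will not match the training features
df_test = pd.get_dummies(df_test, columns=cat_cols)

# Align the test set with the training features
df_test = df_test.drop('Number_of_prev_cond', axis=1)
df_test[['DX1', 'DX2', 'DX3', 'DX4', 'DX5']] = df_test[['DX1', 'DX2', 'DX3', 'DX4', 'DX5']].fillna(0).astype(float)
# Dummy columns missing from the test set (such as 'Patient_Smoker_Cannot say') are added as 0,
# and the columns are put in the same order as the training features
feature_cols = df.columns.drop(['Survived_1_year', 'Number_of_prev_cond'])
df_test = df_test.reindex(columns=feature_cols, fill_value=0)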
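One-hot encoding train and test separately can leave them with different columns when a category shows up in only one of them, which is the reason the 'Patient_Smoker_Cannot say' column has to be added to the test set by hand. A minimal sketch of a general way to handle this, on made-up frames, is to reindex the test frame against the training columns:

```python
import pandas as pd

# Made-up frames: "Cannot say" only appears in the training data.
toy_train = pd.DataFrame(
    {"Patient_Age": [30, 45, 60], "Patient_Smoker": ["YES", "NO", "Cannot say"]}
)
toy_test = pd.DataFrame({"Patient_Age": [52, 38], "Patient_Smoker": ["NO", "YES"]})

train_enc = pd.get_dummies(toy_train, columns=["Patient_Smoker"])
test_enc = pd.get_dummies(toy_test, columns=["Patient_Smoker"])

# Give the test frame exactly the training columns, in the same order;
# categories never seen in the test data become all-zero columns.
test_enc = test_enc.reindex(columns=train_enc.columns, fill_value=0)

print(list(test_enc.columns))
```

Matching the column order matters as well as the names, since the model expects the features in the order it was trained on.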

III. Running the Models

1. XGBoost first

# The usual three steps: split the data, fit the model, predict.

import xgboost as xgb
from sklearn.model_selection import train_test_split

df[['DX1', 'DX2', 'DX3', 'DX4', 'DX5']] = df[['DX1', 'DX2', 'DX3', 'DX4', 'DX5']].fillna(0).astype(float)
X = df.drop(['Survived_1_year', 'Number_of_prev_cond'], axis=1)
y = df[['Survived_1_year']]
X = X.fillna(0)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y)

# early_stopping_rounds and eval_metric are constructor arguments since XGBoost 1.6;
# newer releases no longer accept them in fit()
clf_xgb = xgb.XGBClassifier(objective='binary:logistic', random_state=42,
                            early_stopping_rounds=10, eval_metric='aucpr')
clf_xgb.fit(X_train, y_train, verbose=True, eval_set=[(X_test, y_test)])

preds=clf_xgb.predict(df_test)

preds=pd.DataFrame(preds)

preds.to_csv('patient_survival_preds.csv')
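The split above passes stratify=y, which keeps the share of survivors the same in the training part and the hold-out part. Here is a small sketch of that effect on synthetic labels; the 80/20 class ratio and the random_state are made up for the demo and do not come from the dataset.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic labels: 80 ones and 20 zeros (made-up proportions for illustration).
y_demo = np.array([1] * 80 + [0] * 20)
X_demo = np.arange(len(y_demo)).reshape(-1, 1)

# Default test_size is 0.25; random_state only makes the demo reproducible.
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, stratify=y_demo, random_state=0)

print(len(y_tr), y_tr.mean())
print(len(y_te), y_te.mean())
```

Both parts end up with the same fraction of positive labels as the full data, which matters when one class is much rarer than the other.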

2. XGBoost with Hyperopt

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import KFold, TimeSeriesSplit

from sklearn.model_selection import StratifiedKFold

from sklearn.metrics import roc_auc_score

from xgboost import plot_importance

from sklearn.metrics import make_scorer

import time

# See the documentation for details on how to use hyperopt

# Recommended: https://github.com/FontTian/hyperopt-doc-zh/tree/master/tutorials/zh

from hyperopt import fmin, hp, tpe, Trials, space_eval, STATUS_OK, STATUS_RUNNING

from functools import partial

# Define the objective function that hyperopt minimizes, so the tuning is automated.
def hyper_opt(params):
    time1 = time.time()
    # Cast and round the sampled values; only parameters that XGBoost actually uses are kept
    params = {
        'max_depth': int(params['max_depth']),
        'gamma': round(params['gamma'], 3),
        'subsample': round(params['subsample'], 2),
        'reg_alpha': round(params['reg_alpha'], 3),
        'reg_lambda': round(params['reg_lambda'], 3),
        'learning_rate': round(params['learning_rate'], 3),
        'colsample_bytree': round(params['colsample_bytree'], 3)
    }
    print("\n############## New Run ################")
    print(f"params = {params}")

    # Stratified K-fold (the rows are not a time series, so TimeSeriesSplit is not used)
    FOLDS = 4
    count = 1
    skf = StratifiedKFold(n_splits=FOLDS, shuffle=True, random_state=42)
    score_mean = 0
    for tr_idx, val_idx in skf.split(X_train, y_train):
        clf = xgb.XGBClassifier(
            n_estimators=600, random_state=4,
            tree_method='hist', device='cuda',  # XGBoost >= 2.0; older versions use tree_method='gpu_hist'
            **params
        )
        X_tr, X_vl = X_train.iloc[tr_idx, :], X_train.iloc[val_idx, :]
        y_tr, y_vl = y_train.iloc[tr_idx], y_train.iloc[val_idx]
        clf.fit(X_tr, y_tr)
        # ROC AUC on the validation fold, from the predicted probability of class 1
        score = roc_auc_score(np.ravel(y_vl), clf.predict_proba(X_vl)[:, 1])
        score_mean += score
        print(f'{count} CV - score: {round(score, 4)}')
        count += 1
    time2 = time.time() - time1
    print(f"Total Time Run: {round(time2 / 60,2)}")
    print(f'Mean ROC_AUC: {score_mean / FOLDS}')
    # hyperopt minimizes, so return the negative mean AUC
    return -(score_mean / FOLDS)

# Choose the search space for the XGBoost parameters
# (num_leaves, min_child_samples, feature_fraction and bagging_fraction were dropped: they are LightGBM parameters)
parameters = {
    'max_depth': hp.quniform('max_depth', 7, 23, 1),
    'reg_alpha': hp.uniform('reg_alpha', 0.01, 0.4),
    'reg_lambda': hp.uniform('reg_lambda', 0.01, .4),
    'learning_rate': hp.uniform('learning_rate', 0.01, 0.2),
    'colsample_bytree': hp.uniform('colsample_bytree', 0.3, .9),
    'gamma': hp.uniform('gamma', 0.01, .7),
    'subsample': hp.choice('subsample', [0.2, 0.4, 0.5, 0.6, 0.7, .8, .9])
}

%%time

hyperOpt_best = fmin(fn = hyper_opt, space = parameters, algo = tpe.suggest, max_evals = 10) #check max_evals

best_parameters = space_eval(parameters, hyperOpt_best)

print("BEST HyperOpt PARAMS: ", best_parameters)

best_parameters['max_depth'] = int(best_parameters['max_depth'])

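# Train the final model with the best parameters (the GPU Kaggle provides is really handy)

xgb_best = xgb.XGBClassifier(n_estimators=300, **best_parameters, tree_method='hist', device='cuda')  # XGBoost >= 2.0; older versions use tree_method='gpu_hist'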

#xgb_best = xgboost.XGBClassifier(n_estimators=300, param_grid = best_parameters, tree_method='gpu_hist')

xgb_best.fit(X_train, y_train)

# The last step of a Kaggle competition: write the predictions to a file in the required format and upload it.

preds=xgb_best.predict(df_test)

preds=pd.DataFrame(preds)

preds.to_csv('patient_survival_preds.csv')

# Visualize the feature importances

feature_important = xgb_best.get_booster().get_score(importance_type="weight")

keys = list(feature_important.keys())

values = list(feature_important.values())

plot_df = pd.DataFrame(data=values, index=keys, columns=["score"]).sort_values(by = "score", ascending=False)

plot_df.reset_index(inplace=True)

plot_df.columns = ['Feature Name', 'Score']

plot_df.sort_values('Score', ascending = False, inplace = True)

plot_df.set_index('Feature Name', inplace = True)

plt.figure(figsize = (15,8))

plot_df.iloc[:25].plot(kind = 'bar', legend = False, figsize = (16,7), color="g", align="center")

plt.title("Feature Importance\n", fontsize=20)

plt.xticks(fontsize = 10)

plt.xlabel('Top 25 Feature Names', fontsize=18)

plt.ylabel('Score\n', fontsize =18)

plt.show()
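One thing to keep in mind when reading the chart: as far as I know, get_score only returns features that were used in at least one split, so features the model never used are simply absent from the dict. A small sketch of how to put them back with a score of 0 follows; the importance values and the feature list here are made up for the demo.

```python
import pandas as pd

# Hypothetical output of get_booster().get_score(importance_type="weight"):
# a dict mapping feature name -> number of splits that use the feature.
feature_important = {"Patient_Age": 120.0, "Patient_Body_Mass_Index": 95.0, "DX3": 12.0}

# Feature list the model was trained on (made up for this demo).
all_features = ["Patient_Age", "Patient_Body_Mass_Index", "DX1", "DX3", "Z"]

importance = (
    pd.Series(feature_important, name="Score")
    .reindex(all_features, fill_value=0.0)  # add features that never appear in a split
    .sort_values(ascending=False)
)
print(importance)
```

On the real model, the full feature list could be taken from the columns of the training frame.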

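PS: If you want to try LightGBM or CatBoost, just adapt the code above. The code for the three models is nearly identical, so a few tweaks give you a new model.

Readers who are interested can look into other AutoML frameworks such as TPOT. After all, that young genius with the 2-million-yuan annual salary at Huawei works on AutoML.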

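This blog post describes the author's machine learning practice on Kaggle using patient survival data, mainly covering building an XGBoost model and hyperparameter optimization with the Hyperopt library. First, the data is briefly introduced and preprocessed, including missing-value handling and feature engineering. Next, an initial model is built with XGBoost, and Hyperopt is used to tune it automatically to improve performance. Finally, the model's feature importance and predictions are shown.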
