The full source code is at the bottom. The very bottom. The VERY bottom!

Preface

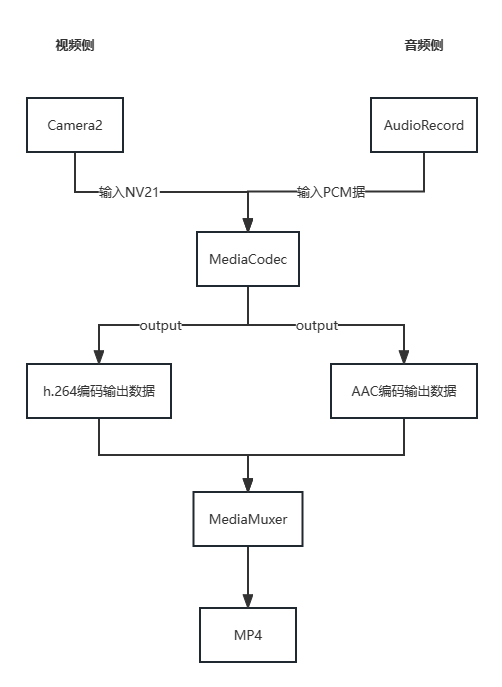

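I've recently been learning audio/video development, which involves MediaCodec. Along the way I learned that MediaCodec + MediaMuxer can encode a video stream and an audio stream and then mux them into an MP4.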

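Most of the posts floating around online capture the video stream with the legacy Camera API. Very few use Camera2 or CameraX. As it happens, my day job is in camera imaging, and Camera2 is what I know best.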

So, without further ado, here goes!

Implementing the preview

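As usual, we'll first get a basic Camera2 preview running, which also makes later debugging easier. I won't go into much detail here. If you're not familiar with it, see my earlier post. After all, if you found this post by searching for "Camera2", you've probably already studied the relevant APIs to some extent: Implementing preview with Camera2 (使用Camera2实现预览功能), JS-s's blog on CSDN.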

Here I'll just run quickly through the core code.

First, the layout file:

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity"
    android:orientation="vertical"
    android:keepScreenOn="true">

    <TextureView
        android:id="@+id/previewtexture"
        android:layout_weight="1"
        android:layout_width="720dp"
        android:layout_height="720dp"/>

    <Button
        android:id="@+id/shutterclick"
        android:layout_margin="20dp"
        android:layout_width="match_parent"
        android:layout_height="50dp"
        android:text="Tap to record"/>

</LinearLayout>
```

It's very simple: a TextureView and a record button. The TextureView displays the preview stream, and the button starts and stops recording.

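Once the TextureView is available, we configure the camera, open it, and create the preview stream in one go. Some of the core code is shown below. (Don't worry if it doesn't all make sense yet. The complete code is attached at the end so you can take your time with it.)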

```java
previewTextureView.setSurfaceTextureListener(new TextureView.SurfaceTextureListener() {
    @Override
    public void onSurfaceTextureAvailable(@NonNull SurfaceTexture surfaceTexture, int width, int height) {
        Log.d(TAG, "onSurfaceTextureAvailable");
        setupCamera(width, height);
    }

    @Override
    public void onSurfaceTextureSizeChanged(@NonNull SurfaceTexture surfaceTexture, int width, int height) {
    }

    @Override
    public boolean onSurfaceTextureDestroyed(@NonNull SurfaceTexture surfaceTexture) {
        return false;
    }

    @Override
    public void onSurfaceTextureUpdated(@NonNull SurfaceTexture surfaceTexture) {
    }
});

private void setupCamera(int width, int height) {
    CameraManager cameraManager = (CameraManager) getSystemService(Context.CAMERA_SERVICE);
    try {
        for (String cameraId : cameraManager.getCameraIdList()) {
            CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(cameraId);
            // For this demo we simply record with the back camera
            Integer facing = characteristics.get(CameraCharacteristics.LENS_FACING);
            if (facing == null || facing != CameraCharacteristics.LENS_FACING_BACK) {
                // Not the back camera (e.g. the front camera): skip it
                continue;
            }
            StreamConfigurationMap map = characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);
            // Supported preview sizes; unused here, but real code should pick one of these
            Size[] outputSizes = map.getOutputSizes(SurfaceTexture.class);
            // The focus is not the preview, so we hard-code a size instead of choosing the best one.
            // Make sure the device actually supports 1440x1440.
            previewSize = new Size(1440, 1440);
            mWidth = previewSize.getWidth();
            mHeight = previewSize.getHeight();
            mCameraId = cameraId;
            // Use the first back camera found
            break;
        }
    } catch (CameraAccessException e) {
        throw new RuntimeException(e);
    }
    openCamera();
}

private void openCamera() {
    Log.d(TAG, "openCamera");
    CameraManager cameraManager = (CameraManager) getSystemService(Context.CAMERA_SERVICE);
    try {
        if (ActivityCompat.checkSelfPermission(this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED) {
            // CAMERA must already be granted here, e.g. requested via
            // ActivityCompat#requestPermissions and handled in onRequestPermissionsResult
            return;
        }
        cameraManager.openCamera(mCameraId, stateCallback, cameraHandler);
    } catch (CameraAccessException e) {
        e.printStackTrace();
    }
}

private CameraDevice.StateCallback stateCallback = new CameraDevice.StateCallback() {
    @Override
    public void onOpened(@NonNull CameraDevice cameraDevice) {
        Log.d(TAG, "onOpened");
        mCameraDevice = cameraDevice;
        startPreview(mCameraDevice);
    }

    @Override
    public void onDisconnected(@NonNull CameraDevice cameraDevice) {
        Log.d(TAG, "onDisconnected");
        // Release the device so that other clients can use it
        cameraDevice.close();
        mCameraDevice = null;
    }

    @Override
    public void onError(@NonNull CameraDevice cameraDevice, int error) {
        Log.d(TAG, "onError: " + error);
        cameraDevice.close();
        mCameraDevice = null;
    }
};

private void startPreview(CameraDevice mCameraDevice) {
    try {
        Log.d(TAG, "startPreview");
        // Create the ImageReader that will deliver YUV frames while recording
        setPreviewImageReader();
        previewCaptureRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
        SurfaceTexture previewSurfaceTexture = previewTextureView.getSurfaceTexture();
        previewSurfaceTexture.setDefaultBufferSize(mWidth, mHeight);
        Surface previewSurface = new Surface(previewSurfaceTexture);
        Surface previewImageReaderSurface = previewImageReader.getSurface();
        // During plain preview only the TextureView is a target
        previewCaptureRequestBuilder.addTarget(previewSurface);
        // Both surfaces are registered with the session so it can be reused for recording
        mCameraDevice.createCaptureSession(Arrays.asList(previewSurface, previewImageReaderSurface), new CameraCaptureSession.StateCallback() {
            @Override
            public void onConfigured(@NonNull CameraCaptureSession cameraCaptureSession) {
                Log.d(TAG, "onConfigured");
                previewCaptureSession = cameraCaptureSession;
                try {
                    cameraCaptureSession.setRepeatingRequest(previewCaptureRequestBuilder.build(), cameraPreviewCallback, cameraHandler);
                } catch (CameraAccessException e) {
                    throw new RuntimeException(e);
                }
            }

            @Override
            public void onConfigureFailed(@NonNull CameraCaptureSession cameraCaptureSession) {
                Log.e(TAG, "onConfigureFailed");
            }
        }, cameraHandler);
    } catch (CameraAccessException e) {
        throw new RuntimeException(e);
    }
}
```

That's all there is to the basic preview flow. I'm not going to explain what each line does here. If you're stuck, check out the post linked above.

There is one method I do need to point out: setPreviewImageReader(). It matters a lot for the recording flow later, so it gets its own section.

Filling in some background before recording

AudioRecord

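AudioRecord is the class Android provides for recording audio. It is very simple to use, but it produces raw PCM data, which common players cannot play directly. When recording video with MediaCodec, though, AudioRecord is a great fit for capturing the audio stream: MediaCodec can encode the PCM data into AAC, which is well suited for the audio track of an MP4.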

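1. Construct an AudioRecord object. The minimum recording buffer size can be obtained with getMinBufferSize(). If the buffer is too small, construction fails.
2. Allocate a buffer at least as large as the buffer AudioRecord uses for writing audio data.
3. Start recording.
4. Create a data stream, read audio data from AudioRecord into the buffer, and write the buffer contents into the stream.
5. Close the data stream.
6. Stop recording.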

The audio we record with AudioRecord is PCM, so we need to feed the recorded buffers into a MediaCodec that encodes them as AAC.

MediaCodec

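MediaCodec is the audio/video codec API introduced in API 16 (Android 4.1). At the application layer, all audio/video encoding and decoding goes through MediaCodec. The configuration determines which codec is used, whether hardware acceleration is used, and so on. Because it relies on hardware codecs, compatibility problems are common, and MediaCodec is known for having many pitfalls. I certainly ran into plenty of them while using it, and it was a real struggle.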

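MediaCodec processes the streams mainly through these methods:

- getInputBuffers: returns the codec's input buffers as a ByteBuffer array
- queueInputBuffer: submits a filled input buffer to the codec for processing
- dequeueInputBuffer: returns the index of an empty input buffer that you can fill
- getOutputBuffers: returns the codec's output buffers as a ByteBuffer array
- dequeueOutputBuffer: returns the index of an output buffer that holds processed (encoded) data
- releaseOutputBuffer: returns an output buffer to the codec once you are done with it

Since API 21, getInputBuffers()/getOutputBuffers() are deprecated in favor of getInputBuffer(int)/getOutputBuffer(int).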

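```
// This is roughly what the code flow looks like

// Create the MediaCodec
createEncoderByType / createDecoderByType

// Configure it; this step is mandatory in the MediaCodec lifecycle
configure

// Start the MediaCodec
start

// Keep processing the incoming data stream
while (true) {
    // Get the index of a free input buffer. Think of MediaCodec's buffers as an array:
    // each element can hold a buffer, and this call returns the index of one element,
    // so that the next steps can put input data into it
    dequeueInputBuffer

    // Get the ByteBuffer at that index and copy the captured data into it
    getInputBuffer

    // Hand the filled buffer to MediaCodec for processing
    queueInputBuffer

    // Get the index of an output buffer; processed input ends up here
    dequeueOutputBuffer

    // Use that index to get the processed buffer
    getOutputBuffer

    // Release the processed buffer back to the codec
    releaseOutputBuffer
}

stop

release
```

MediaMuxer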

MediaMuxer writes audio or video files, and it can also mux audio and video into a single audio/video file.

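Relevant APIs:

- MediaMuxer(String path, int format): path is the output file name and format is the output container format. This post uses MP4; newer API levels also support formats such as WebM and 3GPP.
- addTrack(MediaFormat format): adds a track
- start(): starts muxing
- writeSampleData(int trackIndex, ByteBuffer byteBuf, MediaCodec.BufferInfo bufferInfo): writes the data in the ByteBuffer to the file given in the constructor
- stop(): stops muxing
- release(): releases resources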

Implementing the recording flow

Flow chart summary

Let's first use the flow chart to see what data we need and which problems we have to solve.

From the flow we can summarize:

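- We need a way to get the preview data from Camera2 in real time while recording, and to convert it to NV21 for further processing.

- We use AudioRecord to record audio in real time and obtain the PCM audio data.

- Because the video and audio streams are encoded into two completely different formats (H.264 and AAC), we need two separate MediaCodec instances.

- The output of the two MediaCodecs is passed to MediaMuxer, which muxes it into an MP4.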

Next, let's work through these steps one by one.

Getting YUV data from Camera2 and converting it to NV21

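First, the idea. Camera2 is quite different from the old Camera API. Camera provides a callback that hands you the preview data directly, but Camera2 doesn't. Hold on, hold on, don't start cursing yet. There's always a way. Recall how we take photos with Camera2: after session.capture() completes and the ImageReader has an image available, we take the Image and save it. With a little configuration, that same Image gives us YUV data. Following this idea, when recording (i.e. when calling session.setRepeatingRequest()) we can attach a videoImageReader as an extra target. After each capture, the videoImageReader calls back with an Image, from which we get the YUV data for the recording.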

With the idea in place, let's implement it.

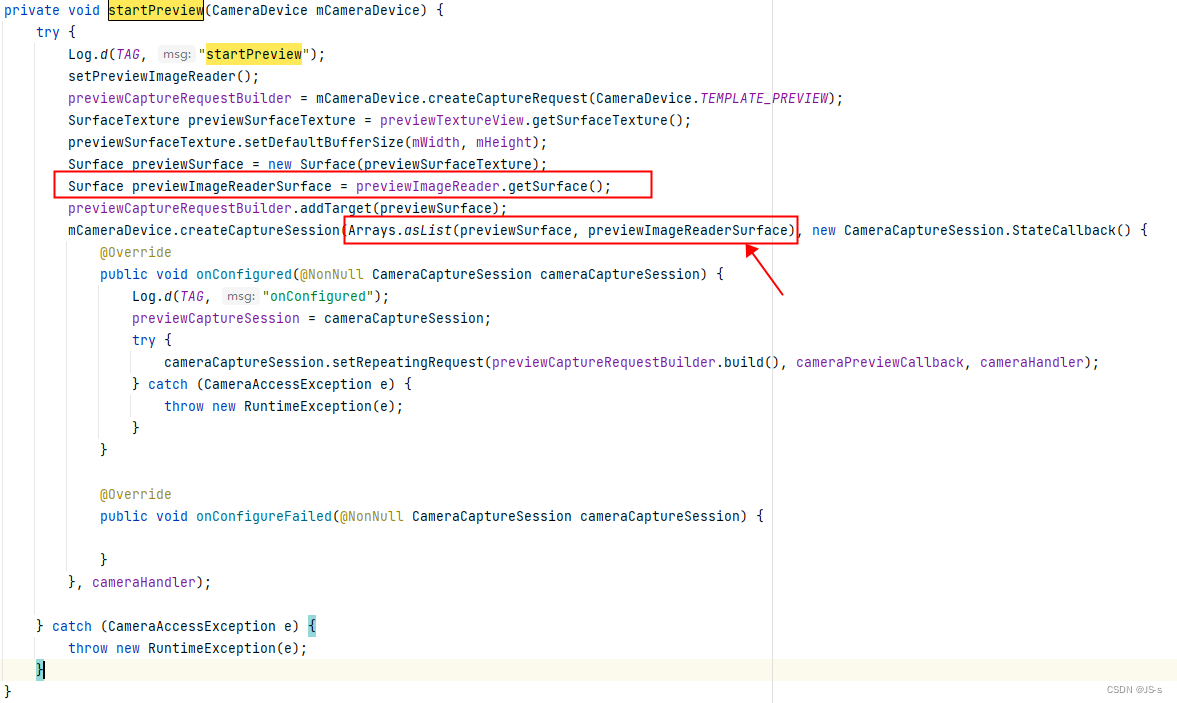

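The code above is the session setup performed after the camera properties are configured, right before starting the preview. It is almost the same as a normal Camera2 preview, but note the part outlined in red: I created a previewImageReader. This is the ImageReader I mentioned above for capturing YUV data while recording. Because I use the same session for preview and recording, I pass previewImageReader's Surface to createCaptureSession when creating the session. However, look carefully at previewCaptureRequestBuilder.addTarget in the code above: only the TextureView's Surface is added. Don't get carried away and add previewImageReaderSurface as a target as well, or previewImageReader will receive callbacks during plain preview too. When the session starts recording, we build a new CaptureRequest.Builder and add previewImageReaderSurface to it, as shown in the following code.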

```java
private void startVideoSession() {
    Log.d(TAG, "startVideoSession");
    if (previewCaptureSession != null) {
        try {
            // Stop the preview-only repeating request first
            previewCaptureSession.stopRepeating();
        } catch (CameraAccessException e) {
            throw new RuntimeException(e);
        }
    }
    SurfaceTexture previewSurfaceTexture = previewTextureView.getSurfaceTexture();
    Surface previewSurface = new Surface(previewSurfaceTexture);
    Surface previewImageReaderSurface = previewImageReader.getSurface();
    try {
        videoRequest = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_RECORD);
        // CONTINUOUS_VIDEO is the autofocus mode intended for video recording
        videoRequest.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_VIDEO);
        videoRequest.addTarget(previewSurface);
        // Only now is the ImageReader added as a target, so YUV frames arrive only while recording
        videoRequest.addTarget(previewImageReaderSurface);
        previewCaptureSession.setRepeatingRequest(videoRequest.build(), videosessioncallback, cameraHandler);
    } catch (CameraAccessException e) {
        throw new RuntimeException(e);
    }
}
```

See? Only here do we add the ImageReader as a target and then start a new repeating request for recording.

Next, let's look at how previewImageReader is created:

```java
private void setPreviewImageReader() {
    // maxImages = 1: only one Image can be held at a time, so each one must be closed promptly
    previewImageReader = ImageReader.newInstance(previewSize.getWidth(), previewSize.getHeight(), ImageFormat.YUV_420_888, 1);
    previewImageReader.setOnImageAvailableListener(new ImageReader.OnImageAvailableListener() {
        @Override
        public void onImageAvailable(ImageReader imageReader) {
            Log.d(TAG, "onImageAvailable");
            Image image = imageReader.acquireNextImage();
            if (image == null) {
                return;
            }
            int width = image.getWidth();
            int height = image.getHeight();
            // An NV21 frame is w*h bytes of Y followed by w*h/2 bytes of interleaved VU
            int nv21Size = width * height * 3 / 2;
            byte[] nv21 = new byte[nv21Size];
            YUVToNV21_NV12(image, nv21, width, height, "NV21");
            encodeVideo(nv21);
            // Always close the Image, otherwise the reader stops delivering frames
            image.close();
        }
    }, previewCaptureHandler);
}
```

As you can see, this ImageReader uses the YUV_420_888 format. I considered using NV21 directly, since that constant exists, but it doesn't work (the ImageReader documentation notes that NV21 is not supported), so we have to convert YUV_420_888 to NV21 ourselves.

```java
// Assumes rowStride == width for every plane (no row padding); padded rows need stride-aware copying
private static void YUVToNV21_NV12(Image image, byte[] nv21, int w, int h, String type) {
    Image.Plane[] planes = image.getPlanes();
    // Copy the Y, U and V planes into three separate arrays
    byte[] yRawSrcBytes = new byte[planes[0].getBuffer().remaining()];
    byte[] uRawSrcBytes = new byte[planes[1].getBuffer().remaining()];
    byte[] vRawSrcBytes = new byte[planes[2].getBuffer().remaining()];
    planes[0].getBuffer().get(yRawSrcBytes);
    planes[1].getBuffer().get(uRawSrcBytes);
    planes[2].getBuffer().get(vRawSrcBytes);
    int j = 0, k = 0;
    boolean flag = type.equals("NV21");
    for (int i = 0; i < nv21.length; i++) {
        if (i < w * h) {
            // First fill the w*h Y samples
            nv21[i] = yRawSrcBytes[i];
        } else {
            if (flag) {
                // For NV21, the first byte after the Y plane is a V sample
                nv21[i] = vRawSrcBytes[j];
                // pixelStride: 1 = samples are packed, 2 = every other byte is a sample
                j += planes[2].getPixelStride();
            } else {
                // For NV12, the first byte after the Y plane is a U sample
                nv21[i] = uRawSrcBytes[k];
                k += planes[1].getPixelStride();
            }
            // Toggle the flag to interleave VU (or UV)
            flag = !flag;
        }
    }
}
```

For the details behind this conversion, see this blog post:

[Android Camera2] Thoroughly understanding YUV420_888 to NV21 conversion (【Android Camera2】彻底弄清图像数据YUV420_888转NV21问题), 奔跑的鲁班七号's blog on CSDN
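YUVToNV21_NV12 above assumes each plane's rowStride equals the image width, and padded rows are one common cause of green or skewed frames. As a hedged sketch, the helper below does the same NV21 interleaving but honors each plane's rowStride and pixelStride. To keep it testable off-device, it takes plain byte arrays and stride values (what you would read from Image.Plane#getBuffer(), getRowStride() and getPixelStride()) instead of an Image. The class and method names are hypothetical.

```java
/** Hypothetical helper: packs YUV_420_888 planes into NV21, honoring row and pixel strides. */
final class Yuv420ToNv21 {
    private Yuv420ToNv21() {}

    // y, u, v: plane contents as copied from each Image.Plane buffer
    // yRowStride: row stride of the Y plane
    // uvRowStride / uvPixelStride: strides of the chroma planes (assumed equal for U and V)
    static byte[] convert(byte[] y, byte[] u, byte[] v, int width, int height,
                          int yRowStride, int uvRowStride, int uvPixelStride) {
        byte[] nv21 = new byte[width * height * 3 / 2];
        int out = 0;
        // Copy Y row by row, skipping any padding at the end of each row
        for (int row = 0; row < height; row++) {
            System.arraycopy(y, row * yRowStride, nv21, out, width);
            out += width;
        }
        // Chroma is subsampled 2x2: height/2 rows of width/2 samples, written as V,U pairs
        for (int row = 0; row < height / 2; row++) {
            for (int col = 0; col < width / 2; col++) {
                int src = row * uvRowStride + col * uvPixelStride;
                nv21[out++] = v[src];
                nv21[out++] = u[src];
            }
        }
        return nv21;
    }
}
```

On a device you would copy each plane's ByteBuffer into an array (as YUVToNV21_NV12 already does) and pass the plane's getRowStride() and getPixelStride(). The same function covers both planar (pixelStride 1) and semi-planar (pixelStride 2) layouts.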

Once we have NV21 data, we pass it into MediaCodec, which is what the encodeVideo method does. We'll come back to it later together with the rest of MediaCodec.

Recording audio with AudioRecord

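There is plenty of material on this online. It's the same routine every time: create and initialize the AudioRecord, set the parameters, and start it.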

```java
private void initAudioRecord() {
    // minBufferSize is the smallest buffer size the device accepts for these parameters.
    // SAMPLE_RATE is 44100, the most common value.
    AudioiMinBufferSize = AudioRecord.getMinBufferSize(SAMPLE_RATE, AudioFormat.CHANNEL_IN_STEREO, AudioFormat.ENCODING_PCM_16BIT);
    if (ActivityCompat.checkSelfPermission(this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED) {
        // RECORD_AUDIO must already be granted here (see ActivityCompat#requestPermissions)
        return;
    }
    // Parameters such as 16-bit PCM are worth reading up on; for now a rough idea is enough.
    // The channel mask must match the getMinBufferSize() call above and CHANNEL_COUNT (2) of the AAC encoder.
    audioRecord = new AudioRecord.Builder()
            .setAudioFormat(new AudioFormat.Builder()
                    .setEncoding(AudioFormat.ENCODING_PCM_16BIT)
                    .setSampleRate(SAMPLE_RATE)
                    .setChannelMask(AudioFormat.CHANNEL_IN_STEREO)
                    .build())
            .setBufferSizeInBytes(AudioiMinBufferSize)
            .setAudioSource(MediaRecorder.AudioSource.MIC)
            .build();
}
```

After initialization, we start it when recording begins:

```java
private void startAudioRecord() {
    audioRecordThread = new Thread(new Runnable() {
        @Override
        public void run() {
            mIsAudioRecording = true;
            audioRecord.startRecording();
            while (mIsAudioRecording) {
                byte[] inputAudioData = new byte[AudioiMinBufferSize];
                // Blocking read of PCM data; res is the number of bytes actually read
                int res = audioRecord.read(inputAudioData, 0, inputAudioData.length);
                if (res > 0 && AudioCodec != null && captureListener != null) {
                    // The listener is only set once the muxer has started (see setPCMListener)
                    captureListener.onCaptureListener(inputAudioData, res);
                }
            }
        }
    });
    audioRecordThread.start();
}
```

inputAudioData holds the bytes returned by each read. They are PCM-encoded, and our job is to feed them into a dedicated MediaCodec. captureListener.onCaptureListener(inputAudioData, res) is what passes the PCM data on to MediaCodec.

MediaCodec: receiving and processing data

Setting up MediaCodec for the video stream

1. Initialize the video MediaCodec and configure it:

```java
private void initMediaCodec(int width, int height) {
    Log.d(TAG, "width:" + width);
    Log.d(TAG, "height:" + height);
    try {
        // Create the format container.
        // The width and height passed to MediaFormat.createVideoFormat must not be odd;
        // values that are too small or larger than the screen size can also make configure() fail.
        // They should match the width and height of the Images delivered by the ImageReader.
        MediaFormat videoFormat = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC, 1440, 1440);
        // Set the input color format
        videoFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Flexible);
        // Bit rate: the number of bits transmitted per second
        videoFormat.setInteger(MediaFormat.KEY_BIT_RATE, 500_000);
        // Frame rate
        videoFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 20);
        // Key (I) frame interval, in seconds
        videoFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);
        // Max input buffer size; should hold one 1440x1440 NV21 frame (3,110,400 bytes)
        videoFormat.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, 4000000);
        // Create the encoder; H.264 (AVC) is the usual MIME type for video
        videoMediaCodec = MediaCodec.createEncoderByType(MediaFormat.MIMETYPE_VIDEO_AVC);
        videoMediaCodec.configure(videoFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
    } catch (IOException e) {
        throw new RuntimeException(e);
    }
}
```

2. Feed the NV21 data into the input buffer:

private void encodeVideo(byte[] nv21) {

//输入

int index = videoMediaCodec.dequeueInputBuffer(WAIT_TIME);

//Log.d(TAG,"video encord video index:"+index);

if (index >= 0) {

ByteBuffer inputBuffer = videoMediaCodec.getInputBuffer(index);

inputBuffer.clear();

int remaining = inputBuffer.remaining();

inputBuffer.put(nv21, 0, nv21.length);

videoMediaCodec.queueInputBuffer(index, 0, nv21.length, (System.nanoTime()-nanoTime) / 1000, 0);

}

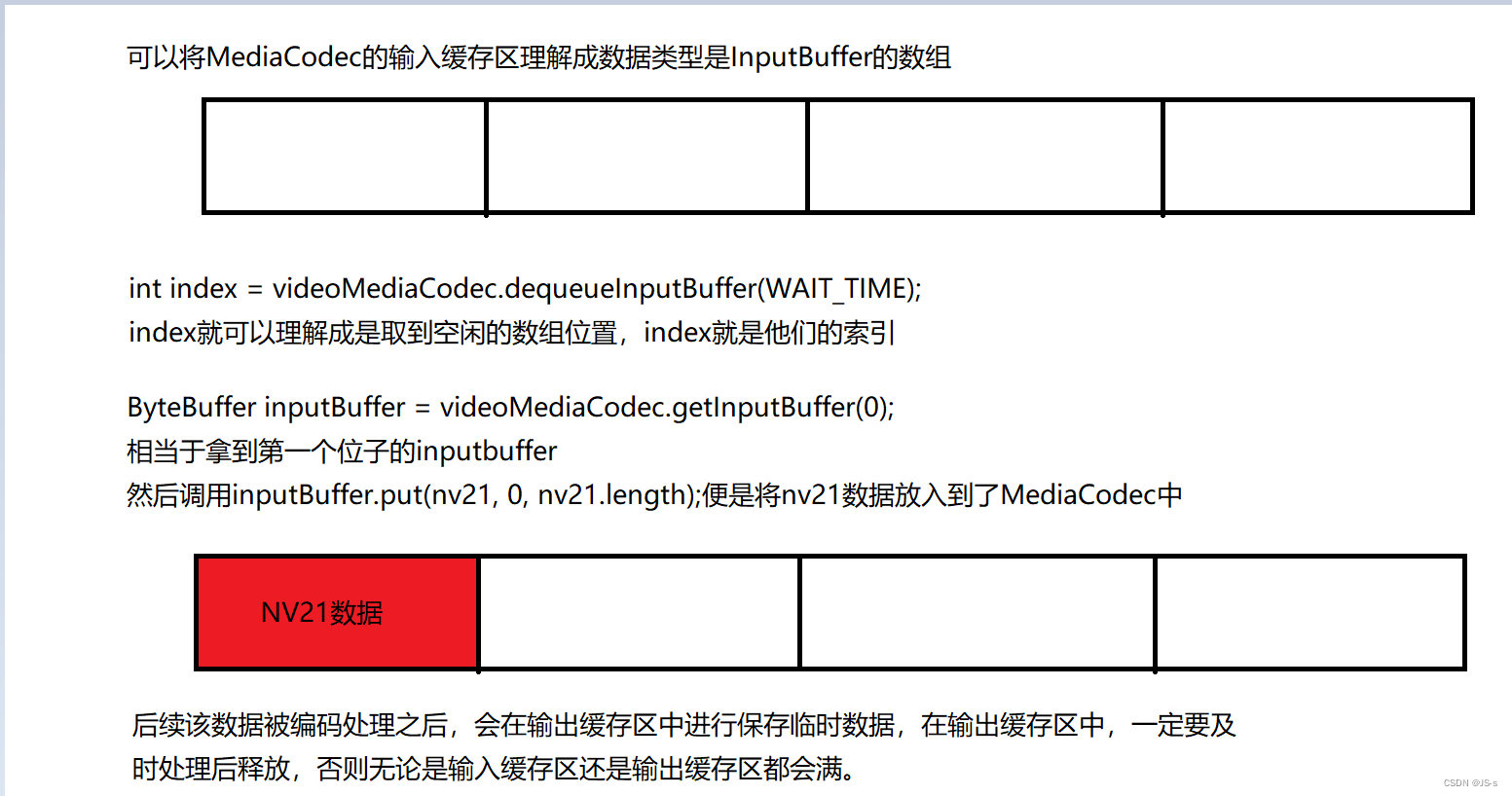

}图解如下图

3. Take the processed data out of the output buffer:

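```java
private void encodeVideoH264() {
    MediaCodec.BufferInfo videoBufferInfo = new MediaCodec.BufferInfo();
    // Get the index of an output buffer (timeout 0: return immediately)
    int videobufferindex = videoMediaCodec.dequeueOutputBuffer(videoBufferInfo, 0);
    Log.d(TAG, "videobufferindex:" + videobufferindex);
    // This status typically arrives once, before the first encoded buffer.
    // It is the place for MediaMuxer work such as adding the track.
    if (videobufferindex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
        // Add the video track
        mVideoTrackIndex = mediaMuxer.addTrack(videoMediaCodec.getOutputFormat());
        Log.d(TAG, "mVideoTrackIndex:" + mVideoTrackIndex);
        if (mAudioTrackIndex != -1) {
            Log.d(TAG, "encodeVideoH264: mediaMuxer is started");
            mediaMuxer.start();
            isMediaMuxerStarted += 1;
            setPCMListener();
        }
    } else if (isMediaMuxerStarted >= 0) {
        while (videobufferindex >= 0) {
            // Got encoded output
            ByteBuffer videoOutputBuffer = videoMediaCodec.getOutputBuffer(videobufferindex);
            // Codec config data (SPS/PPS) is already in the track's MediaFormat, so don't write it as a sample
            if ((videoBufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) == 0 && videoBufferInfo.size > 0) {
                mediaMuxer.writeSampleData(mVideoTrackIndex, videoOutputBuffer, videoBufferInfo);
            }
            videoMediaCodec.releaseOutputBuffer(videobufferindex, false);
            videobufferindex = videoMediaCodec.dequeueOutputBuffer(videoBufferInfo, 0);
        }
    } else if (videobufferindex >= 0) {
        // The muxer has not started yet: drop the buffer so the encoder does not run out of output buffers
        videoMediaCodec.releaseOutputBuffer(videobufferindex, false);
    }
}
```

Setting up MediaCodec for the audio stream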

This is pretty much the same as the video MediaCodec, so I'll go into less detail here.

1. Initialize the audio MediaCodec and configure it:

```java
private void initAudioCodec() {
    MediaFormat format = MediaFormat.createAudioFormat(MIMETYPE_AUDIO_AAC, SAMPLE_RATE, CHANNEL_COUNT);
    // AAC-LC, the most widely supported AAC profile
    format.setInteger(MediaFormat.KEY_AAC_PROFILE, MediaCodecInfo.CodecProfileLevel.AACObjectLC);
    format.setInteger(MediaFormat.KEY_BIT_RATE, BIT_RATE);
    // Should be at least as large as the biggest PCM chunk passed in (the AudioRecord buffer size)
    format.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, 8192);
    try {
        // Encoder for the AAC format
        AudioCodec = MediaCodec.createEncoderByType(MIMETYPE_AUDIO_AAC);
        AudioCodec.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
    } catch (IOException e) {
        throw new RuntimeException(e);
    }
}
```

2. Feed the PCM data into the input buffer:

```java
private void callbackData(byte[] inputAudioData, int length) {
    // We already have the PCM bytes from AudioRecord; now put them into MediaCodec.
    // A timeout of -1 waits until an input buffer is free.
    int index = AudioCodec.dequeueInputBuffer(-1);
    if (index < 0) {
        return;
    }
    Log.d(TAG, "AudioCodec.dequeueInputBuffer:" + index);
    // getInputBuffer(int) replaces the deprecated getInputBuffers() array
    ByteBuffer audioInputBuffer = AudioCodec.getInputBuffer(index);
    audioInputBuffer.clear();
    Log.d(TAG, "callbackData length:" + length);
    Log.d(TAG, "callbackData audioInputBuffer remaining:" + audioInputBuffer.remaining());
    // Copy only the bytes that were actually read
    audioInputBuffer.put(inputAudioData, 0, length);
    // Alternative PTS from the amount of PCM read: bytes / (sampleRate * channels * bytesPerSample).
    // Computed here but not used below.
    presentationTimeUs += (long) (1.0 * length / (SAMPLE_RATE * CHANNEL_COUNT * 2) * 1000000.0);
    AudioCodec.queueInputBuffer(index, 0, length, (System.nanoTime() - nanoTime) / 1000, 0);
}
```

3. Take the processed data out of the output buffer:

```java
private void encodePCMToAC() {
    MediaCodec.BufferInfo audioBufferInfo = new MediaCodec.BufferInfo();
    // Get the output
    int audioBufferFlag = AudioCodec.dequeueOutputBuffer(audioBufferInfo, 0);
    Log.d(TAG, "CALL BACK DATA FLAG:" + audioBufferFlag);
    if (audioBufferFlag == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
        // Add the audio track now
        mAudioTrackIndex = mediaMuxer.addTrack(AudioCodec.getOutputFormat());
        Log.d(TAG, "mAudioTrackIndex:" + mAudioTrackIndex);
        if (mVideoTrackIndex != -1) {
            Log.d(TAG, "encodePCMToAC: mediaMuxer is started");
            mediaMuxer.start();
            isMediaMuxerStarted += 1;
            // Only register the PCM callback once the muxer has started
            setPCMListener();
        }
    } else if (isMediaMuxerStarted >= 0) {
        while (audioBufferFlag >= 0) {
            // Got encoded output
            ByteBuffer outputBuffer = AudioCodec.getOutputBuffer(audioBufferFlag);
            // Skip the codec config (AudioSpecificConfig); it is already in the track's MediaFormat
            if ((audioBufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) == 0 && audioBufferInfo.size > 0) {
                mediaMuxer.writeSampleData(mAudioTrackIndex, outputBuffer, audioBufferInfo);
            }
            AudioCodec.releaseOutputBuffer(audioBufferFlag, false);
            audioBufferFlag = AudioCodec.dequeueOutputBuffer(audioBufferInfo, 0);
        }
    } else if (audioBufferFlag >= 0) {
        // The muxer has not started yet: release the buffer so the encoder is not starved
        AudioCodec.releaseOutputBuffer(audioBufferFlag, false);
    }
}
```

With that, the input and output sides of MediaCodec are basically in place.

We now have H.264 video data and AAC audio data, so we can use MediaMuxer to combine them and export an MP4.

Muxing the MP4 with MediaMuxer

Let's first go over the overall muxing flow:

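- First, initialize the muxer and set its parameters

- An MP4 contains two tracks, a video track and an audio track, which must be added at the right moment

- Once both tracks have been added, start the muxer

- Write the encoded data into it

- Stop it, producing the MP4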

Because MediaMuxer is usually embedded in the logic that drains MediaCodec's output, I'll only show the core code here.

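All the code so far has been excerpts. The main goal is to understand the process and the important methods. To follow the whole flow end to end, read the complete code at the end.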

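```java
private void initMediaMuxer() {
    // Environment.getExternalStorageDirectory() is deprecated since API 29 and needs storage access;
    // it is kept here for simplicity
    String filename = Environment.getExternalStorageDirectory().getAbsolutePath() + "/DCIM/Camera/" + getCurrentTime() + ".mp4";
    try {
        mediaMuxer = new MediaMuxer(filename, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
        // Rotation metadata for players; must be set before start().
        // 90 suits a back camera whose sensor is mounted in landscape, which is the usual case.
        mediaMuxer.setOrientationHint(90);
    } catch (IOException e) {
        throw new RuntimeException(e);
    }
}
```

Adding the tracks and starting: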

```java
mAudioTrackIndex = mediaMuxer.addTrack(AudioCodec.getOutputFormat());
mVideoTrackIndex = mediaMuxer.addTrack(videoMediaCodec.getOutputFormat());
// start() may only be called after all tracks have been added
mediaMuxer.start();
```

Writing data:

```java
mediaMuxer.writeSampleData(mVideoTrackIndex, videoOutputBuffer, videoBufferInfo);
mediaMuxer.writeSampleData(mAudioTrackIndex, outputBuffer, audioBufferInfo);
```

Stopping and releasing the MediaMuxer:

```java
private void stopMediaMuxer() {
    isMediaMuxerStarted = -1;
    mediaMuxer.stop();
    mediaMuxer.release();
}
```

Once the muxer has been stopped and released, the MP4 is essentially complete.

That's about it for the overall recording flow.

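I've been there myself and know that explaining it piece by piece can be confusing. You're better off with all the source code to work through at your own pace, so this time I'm just putting all of it out there.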

Before that, let me summarize some of the problems I ran into.

Common problems

dequeueInputBuffer or dequeueOutputBuffer returns -1

First, you need to know what it means when these two methods return -1.

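When dequeueInputBuffer returns -1 (MediaCodec.INFO_TRY_AGAIN_LATER), no input buffer is currently free for us to fill. With a timeout of 0 (WAIT_TIME in this demo), the call returns immediately instead of waiting. From what I found, the buffer pool is small, roughly like an array of length 4 (the actual count depends on the codec), so if buffers are never released, you may be able to feed a few frames and then get -1 forever. How to troubleshoot depends on when it happens. If it returns -1 right from the start, that's trickier. I haven't run into it and don't know a sure fix, but check whether some MediaCodec parameter is misconfigured.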

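But if it works for a few frames and then keeps returning -1, there is a clear direction: check whether the data in the output buffers is being taken out and released. If the output buffers are never drained and released, the codec cannot free up input buffers either, because the two sides are linked. This is exactly what had me stuck for so long.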
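To see why an undrained output side eventually starves the input side, here is a toy model. It is not MediaCodec, just a hypothetical FakeCodec with a fixed pool of four slots (mirroring the "array of about four" observation above; real pool sizes vary). Every queued input occupies a slot until the matching output is released, and dequeueInputBuffer returns -1 when no slot is free.

```java
import java.util.ArrayDeque;

/** Toy model (not MediaCodec): a fixed pool of slots shared by pending input and unreleased output. */
final class FakeCodec {
    static final int INFO_TRY_AGAIN_LATER = -1; // same value as MediaCodec.INFO_TRY_AGAIN_LATER

    private final ArrayDeque<Integer> freeSlots = new ArrayDeque<>();
    private final ArrayDeque<Integer> readyOutputs = new ArrayDeque<>();

    FakeCodec(int slots) {
        for (int i = 0; i < slots; i++) {
            freeSlots.add(i);
        }
    }

    /** Returns a free slot index, or -1 if every slot is still in use. */
    int dequeueInputBuffer() {
        Integer slot = freeSlots.poll();
        return slot == null ? INFO_TRY_AGAIN_LATER : slot;
    }

    /** "Encodes" instantly: the slot now holds output waiting to be drained. */
    void queueInputBuffer(int slot) {
        readyOutputs.add(slot);
    }

    /** Returns a slot holding output, or -1 if nothing is ready. */
    int dequeueOutputBuffer() {
        Integer slot = readyOutputs.poll();
        return slot == null ? INFO_TRY_AGAIN_LATER : slot;
    }

    /** Releasing the output is what frees the slot for new input. */
    void releaseOutputBuffer(int slot) {
        freeSlots.add(slot);
    }
}
```

Feeding frames without ever calling dequeueOutputBuffer/releaseOutputBuffer stalls after four inputs. Adding the drain step to the loop keeps it running indefinitely. In the real code, the drain step is encodeVideoH264() / encodePCMToAC(), which therefore has to run regularly.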

If dequeueOutputBuffer keeps returning -1, no output data is available. In that case, first check whether the input data is actually being queued successfully.
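These negative return values come up constantly, so it helps to decode them explicitly in logs instead of printing raw numbers. The constants below mirror the values of MediaCodec.INFO_TRY_AGAIN_LATER (-1), INFO_OUTPUT_FORMAT_CHANGED (-2), the deprecated INFO_OUTPUT_BUFFERS_CHANGED (-3), and the BufferInfo flag bits, so the sketch runs without Android. On a device you would reference the MediaCodec constants directly. The class name is hypothetical.

```java
/** Hypothetical logging helper for MediaCodec.dequeueOutputBuffer() results. */
final class DequeueResult {
    // Values mirror android.media.MediaCodec constants
    static final int INFO_TRY_AGAIN_LATER = -1;
    static final int INFO_OUTPUT_FORMAT_CHANGED = -2;
    static final int INFO_OUTPUT_BUFFERS_CHANGED = -3;
    // BufferInfo.flags bits, also mirroring MediaCodec
    static final int BUFFER_FLAG_KEY_FRAME = 1;
    static final int BUFFER_FLAG_CODEC_CONFIG = 2;
    static final int BUFFER_FLAG_END_OF_STREAM = 4;

    private DequeueResult() {}

    static String describe(int index, int flags) {
        switch (index) {
            case INFO_TRY_AGAIN_LATER:
                return "try again later (no output yet: check that input is being queued)";
            case INFO_OUTPUT_FORMAT_CHANGED:
                return "output format changed (add the muxer track now)";
            case INFO_OUTPUT_BUFFERS_CHANGED:
                return "output buffers changed (deprecated; ignorable when using getOutputBuffer(int))";
            default:
                if (index < 0) {
                    return "unknown status " + index;
                }
                StringBuilder sb = new StringBuilder("buffer " + index);
                if ((flags & BUFFER_FLAG_CODEC_CONFIG) != 0) sb.append(" [codec config]");
                if ((flags & BUFFER_FLAG_KEY_FRAME) != 0) sb.append(" [key frame]");
                if ((flags & BUFFER_FLAG_END_OF_STREAM) != 0) sb.append(" [end of stream]");
                return sb.toString();
        }
    }
}
```

For example, Log.d(TAG, DequeueResult.describe(videobufferindex, videoBufferInfo.flags)) makes it obvious whether the encoder is idle, reporting its format, or emitting key frames.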

The recorded video shows a green screen

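This is mostly about width and height. They must be consistent all the way from the ImageReader to MediaCodec, otherwise a green screen is very likely. A bug in the YUV-to-NV21 conversion can also cause it, so focus on these two places when troubleshooting.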
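A related symptom is a picture whose colors look wrong (reds and blues swapped) rather than green. That usually points to chroma order. NV21 stores V before U, while COLOR_FormatYUV420SemiPlanar, for instance, denotes NV12 (U before V), and on many devices the encoder's ByteBuffer input ends up being interpreted as NV12. YUVToNV21_NV12 can already produce NV12 if you pass "NV12". A more robust option is MediaCodec#getInputImage(int), which lets you write the planes through an Image without guessing the layout. The hypothetical in-place swap below converts an existing NV21 buffer to NV12 (or back).

```java
/** Hypothetical helper: swaps the chroma order of a semi-planar YUV420 frame (NV21 <-> NV12) in place. */
final class ChromaSwap {
    private ChromaSwap() {}

    static void swapUV(byte[] frame, int width, int height) {
        int ySize = width * height;
        int end = ySize * 3 / 2;
        // After the Y plane, chroma bytes come in pairs: VU for NV21, UV for NV12
        for (int i = ySize; i + 1 < end; i += 2) {
            byte tmp = frame[i];
            frame[i] = frame[i + 1];
            frame[i + 1] = tmp;
        }
    }
}
```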

Source code

Layout file

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity"

android:orientation="vertical"

android:keepScreenOn="true">

<TextureView

android:id="@+id/previewtexture"

android:layout_weight="1"

android:layout_width="720dp"

android:layout_height="720dp"/>

<Button

android:id="@+id/shutterclick"

android:layout_margin="20dp"

android:layout_width="match_parent"

android:layout_height="50dp"

android:text="Tap to record"/>

</LinearLayout>

Logic file (MainActivity)

package com.example.newwaytorecordcameraapplication;

import static android.media.MediaFormat.MIMETYPE_AUDIO_AAC;

import androidx.annotation.NonNull;

import androidx.appcompat.app.AppCompatActivity;

import androidx.core.app.ActivityCompat;

import android.Manifest;

import android.content.Context;

import android.content.pm.PackageManager;

import android.graphics.ImageFormat;

import android.graphics.SurfaceTexture;

import android.hardware.camera2.CameraAccessException;

import android.hardware.camera2.CameraCaptureSession;

import android.hardware.camera2.CameraCharacteristics;

import android.hardware.camera2.CameraDevice;

import android.hardware.camera2.CameraManager;

import android.hardware.camera2.CaptureRequest;

import android.hardware.camera2.TotalCaptureResult;

import android.hardware.camera2.params.StreamConfigurationMap;

import android.media.AudioFormat;

import android.media.AudioRecord;

import android.media.Image;

import android.media.ImageReader;

import android.media.MediaCodec;

import android.media.MediaCodecInfo;

import android.media.MediaFormat;

import android.media.MediaMuxer;

import android.media.MediaRecorder;

import android.os.Bundle;

import android.os.Environment;

import android.os.Handler;

import android.os.HandlerThread;

import android.os.Message;

import android.os.SystemClock;

import android.provider.MediaStore;

import android.util.Log;

import android.util.Size;

import android.view.Surface;

import android.view.TextureView;

import android.view.View;

import android.widget.Button;

import android.widget.Toast;

import java.io.IOException;

import java.nio.ByteBuffer;

import java.text.DateFormat;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collections;

import java.util.Date;

import java.util.List;

import java.util.concurrent.atomic.AtomicBoolean;

public class MainActivity extends AppCompatActivity implements View.OnClickListener {

private TextureView previewTextureView;

private Button shutterClick;

private Size previewSize;

private static final String TAG = "VideoRecord";

private String mCameraId;

private Handler cameraHandler;

private CameraCaptureSession previewCaptureSession;

private MediaCodec videoMediaCodec;

private AudioRecord audioRecord;

private boolean isRecordingVideo = false;

private int mWidth;

private int mHeight;

private ImageReader previewImageReader;

private Handler previewCaptureHandler;

private CaptureRequest.Builder videoRequest;

private static final int WAIT_TIME = 0;

private static final int SAMPLE_RATE = 44100;

private long nanoTime;

private int AudioiMinBufferSize;

private boolean mIsAudioRecording = false;

private static final int CHANNEL_COUNT = 2;

private MediaCodec AudioCodec;

private static final int BIT_RATE = 96000;

private MediaMuxer mediaMuxer;

private volatile int mAudioTrackIndex = -1;

private volatile int mVideoTrackIndex = -1;

private volatile int isMediaMuxerStarted = -1;

private Thread audioRecordThread;

private Thread VideoCodecThread;

private Thread AudioCodecThread;

private long presentationTimeUs;

private AudioCaptureListener captureListener;

private volatile int isStop=0;

CaptureRequest.Builder previewCaptureRequestBuilder;

private volatile int videoMediaCodecIsStoped=0;

private volatile int AudioCodecIsStoped=0;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

initView();

}

// 1. Initialize the MediaCodec video encoder

// Build the MediaFormat that is then passed to MediaCodec.configure()

private void initMediaCodec(int width, int height) {

Log.d(TAG, "width:" + width);

Log.d(TAG, "Height:" + height);

try {

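// First create the format container

/*

The width and height passed to MediaFormat.createVideoFormat must not be odd;

values that are too small or larger than the screen size can also make configure() fail

*/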

MediaFormat videoFormat = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC, 1440, 1440);

// Set the input color format

videoFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Flexible);

// Bit rate: the number of bits transmitted per second

videoFormat.setInteger(MediaFormat.KEY_BIT_RATE, 500_000);

// Frame rate

videoFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 20);

// Key (I) frame interval, in seconds

videoFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);

videoFormat.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE,4000000);

// Create the MediaCodec encoder

videoMediaCodec = MediaCodec.createEncoderByType(MediaFormat.MIMETYPE_VIDEO_AVC);

videoMediaCodec.configure(videoFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);

} catch (IOException e) {

throw new RuntimeException(e);

}

}

private void initView() {

previewTextureView = findViewById(R.id.previewtexture);

shutterClick = findViewById(R.id.shutterclick);

shutterClick.setOnClickListener(this);

}

@Override

protected void onResume() {

super.onResume();

previewTextureView.setSurfaceTextureListener(new TextureView.SurfaceTextureListener() {

@Override

public void onSurfaceTextureAvailable(@NonNull SurfaceTexture surfaceTexture, int width, int height) {

Log.d(TAG, "onSurfaceTextureAvailable");

setupCamera(width, height);

}

@Override

public void onSurfaceTextureSizeChanged(@NonNull SurfaceTexture surfaceTexture, int i, int i1) {

}

@Override

public boolean onSurfaceTextureDestroyed(@NonNull SurfaceTexture surfaceTexture) {

return false;

}

@Override

public void onSurfaceTextureUpdated(@NonNull SurfaceTexture surfaceTexture) {

}

});

HandlerThread videoRecordThread = new HandlerThread("VideoRecordThread");

videoRecordThread.start();

cameraHandler = new Handler(videoRecordThread.getLooper()) {

@Override

public void handleMessage(@NonNull Message msg) {

super.handleMessage(msg);

}

};

HandlerThread preivewImageReaderThread = new HandlerThread("preivewImageReaderThread");

preivewImageReaderThread.start();

previewCaptureHandler = new Handler(preivewImageReaderThread.getLooper()) {

@Override

public void handleMessage(@NonNull Message msg) {

super.handleMessage(msg);

}

};

}

// Set up the preview ImageReader (delivers YUV frames while recording)

private void setPreviewImageReader() {

previewImageReader = ImageReader.newInstance(previewSize.getWidth(), previewSize.getHeight(), ImageFormat.YUV_420_888, 1);

previewImageReader.setOnImageAvailableListener(new ImageReader.OnImageAvailableListener() {

@Override

public void onImageAvailable(ImageReader imageReader) {

Log.d(TAG,"onImageAvailable");

Image image = imageReader.acquireNextImage();

int width = image.getWidth();

int height = image.getHeight();

int I420size = width * height*3/2;

Log.d(TAG,"I420size:"+I420size);

byte[] nv21 = new byte[I420size];

YUVToNV21_NV12(image,nv21,image.getWidth(),image.getHeight(),"NV21");

encodeVideo(nv21);

image.close();

}

}, previewCaptureHandler);

}

private static byte[] YUV_420_888toNV21(Image image) {

int width = image.getWidth();

int height = image.getHeight();

Log.d(TAG,"image.getWidth():"+image.getWidth());

Log.d(TAG,"image.getHeight()"+image.getHeight());

ByteBuffer yBuffer = getBufferWithoutPadding(image.getPlanes()[0].getBuffer(), image.getWidth(), image.getPlanes()[0].getRowStride(),image.getHeight(),false);

ByteBuffer vBuffer;

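//part1: get Y and V buffers with the row padding removed. The planar (P) and semi-planar (SP) formats need different handling; the P format has to be processed pixel by pixel, which is slower.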

if(image.getPlanes()[2].getPixelStride()==1){ // true means the planar (P) format

vBuffer = getuvBufferWithoutPaddingP(image.getPlanes()[1].getBuffer(), image.getPlanes()[2].getBuffer(),

width,height,image.getPlanes()[1].getRowStride(),image.getPlanes()[1].getPixelStride());

}else{

vBuffer = getBufferWithoutPadding(image.getPlanes()[2].getBuffer(), image.getWidth(), image.getPlanes()[2].getRowStride(),image.getHeight()/2,true);

}

//part2: copy the Y data and the interleaved VU data (which lacks the final U value) into nv21

int ySize = yBuffer.remaining();

int vSize = vBuffer.remaining();

byte[] nv21;

int byteSize = width*height*3/2;

nv21 = new byte[byteSize];

yBuffer.get(nv21, 0, ySize);

vBuffer.get(nv21, ySize, vSize);

//part3: the U value of the last pixel is missing, so take it from the U plane

ByteBuffer uPlane = image.getPlanes()[1].getBuffer();

byte lastValue = uPlane.get(uPlane.capacity() - 1);

nv21[byteSize - 1] = lastValue;

return nv21;

}

// Handling for the semi-planar (SP) format and for the Y plane

private static ByteBuffer getBufferWithoutPadding(ByteBuffer buffer, int width, int rowStride, int times,boolean isVbuffer){

if(width == rowStride) return buffer; // no row padding, nothing to strip

int bufferPos = buffer.position();

int cap = buffer.capacity();

byte []byteArray = new byte[times*width];

int pos = 0;

// For the Y plane, copy height rows; for the interleaved UV plane, copy height/2 rows

for (int i=0;i<times;i++) {

buffer.position(bufferPos);

//part 1.1: the interleaved U/V plane is missing its last U or V value, so the last row is one byte shorter; without this, get() would crash

if(isVbuffer && i==times-1){

width = width -1;

}

buffer.get(byteArray, pos, width);

bufferPos+= rowStride;

pos = pos+width;

}

// Wrap the array in a ByteBuffer and return it

ByteBuffer bufferWithoutPaddings=ByteBuffer.allocate(byteArray.length);

// Put the array into the buffer

bufferWithoutPaddings.put(byteArray);

// Reset limit and position (flip), otherwise reads from the buffer return the wrong data

bufferWithoutPaddings.flip();

return bufferWithoutPaddings;

}

// Handling for the planar (P) format

private static ByteBuffer getuvBufferWithoutPaddingP(ByteBuffer uBuffer,ByteBuffer vBuffer, int width, int height, int rowStride, int pixelStride){

int pos = 0;

byte []byteArray = new byte[height*width/2];

for (int row=0; row<height/2; row++) {

for (int col=0; col<width/2; col++) {

int vuPos = col*pixelStride + row*rowStride;

byteArray[pos++] = vBuffer.get(vuPos);

byteArray[pos++] = uBuffer.get(vuPos);

}

}

ByteBuffer bufferWithoutPaddings=ByteBuffer.allocate(byteArray.length);

// Put the array into the buffer

bufferWithoutPaddings.put(byteArray);

// Reset limit and position (flip), otherwise reads from the buffer return the wrong data

bufferWithoutPaddings.flip();

return bufferWithoutPaddings;

}

private void encodeVideo(byte[] nv21) {

// Input side

int index = videoMediaCodec.dequeueInputBuffer(WAIT_TIME);

//Log.d(TAG,"video encord video index:"+index);

if (index >= 0) {

ByteBuffer inputBuffer = videoMediaCodec.getInputBuffer(index);

inputBuffer.clear();

int remaining = inputBuffer.remaining();

inputBuffer.put(nv21, 0, nv21.length);

videoMediaCodec.queueInputBuffer(index, 0, nv21.length, (System.nanoTime()-nanoTime) / 1000, 0);

}

}

private void encodeVideoH264() {

MediaCodec.BufferInfo videoBufferInfo = new MediaCodec.BufferInfo();

int videobufferindex = videoMediaCodec.dequeueOutputBuffer(videoBufferInfo, 0);

Log.d(TAG,"videobufferindex:"+videobufferindex);

if (videobufferindex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {

// Add the video track

mVideoTrackIndex = mediaMuxer.addTrack(videoMediaCodec.getOutputFormat());

Log.d(TAG,"mVideoTrackIndex:"+mVideoTrackIndex);

if (mAudioTrackIndex != -1) {

Log.d(TAG,"encodeVideoH264:mediaMuxer is Start");

mediaMuxer.start();

isMediaMuxerStarted+=1;

setPCMListener();

}

} else {

if (isMediaMuxerStarted>=0) {

while (videobufferindex >= 0) {

// Output data retrieved successfully

ByteBuffer videoOutputBuffer = videoMediaCodec.getOutputBuffer(videobufferindex);

Log.d(TAG,"Video mediaMuxer writeSampleData");

mediaMuxer.writeSampleData(mVideoTrackIndex, videoOutputBuffer, videoBufferInfo);

videoMediaCodec.releaseOutputBuffer(videobufferindex, false);

videobufferindex=videoMediaCodec.dequeueOutputBuffer(videoBufferInfo,0);

}

}

}

}

private void encodePCMToAC() {

MediaCodec.BufferInfo audioBufferInfo = new MediaCodec.BufferInfo();

// Fetch encoder output

int audioBufferFlag = AudioCodec.dequeueOutputBuffer(audioBufferInfo, 0);

Log.d(TAG, "CALL BACK DATA FLAG:" + audioBufferFlag);

if (audioBufferFlag==MediaCodec.INFO_OUTPUT_FORMAT_CHANGED){

// Add the audio track now

mAudioTrackIndex=mediaMuxer.addTrack(AudioCodec.getOutputFormat());

Log.d(TAG,"mAudioTrackIndex:"+mAudioTrackIndex);

if (mVideoTrackIndex!=-1){

Log.d(TAG,"encodecPCMToACC:mediaMuxer is Start");

mediaMuxer.start();

isMediaMuxerStarted+=1;

// Register the recording callback only once the muxer has started

setPCMListener();

}

}else {

Log.d(TAG,"isMediaMuxerStarted:"+isMediaMuxerStarted);

if (isMediaMuxerStarted>=0){

while (audioBufferFlag>=0){

ByteBuffer outputBuffer = AudioCodec.getOutputBuffer(audioBufferFlag);

mediaMuxer.writeSampleData(mAudioTrackIndex,outputBuffer,audioBufferInfo);

AudioCodec.releaseOutputBuffer(audioBufferFlag,false);

audioBufferFlag = AudioCodec.dequeueOutputBuffer(audioBufferInfo, 0);

}

}

}

}

private void setPCMListener() {

setCaptureListener(new AudioCaptureListener() {

@Override

public void onCaptureListener(byte[] audioSource, int audioReadSize) {

callbackData(audioSource,audioReadSize);

}

});

}

private static void YUVToNV21_NV12(Image image, byte[] nv21, int w, int h, String type) {

Image.Plane[] planes = image.getPlanes();

int remaining0 = planes[0].getBuffer().remaining();

int remaining1 = planes[1].getBuffer().remaining();

int remaining2 = planes[2].getBuffer().remaining();

// Prepare three arrays to receive the Y, U and V components

byte[] yRawSrcBytes = new byte[remaining0];

byte[] uRawSrcBytes = new byte[remaining1];

byte[] vRawSrcBytes = new byte[remaining2];

planes[0].getBuffer().get(yRawSrcBytes);

planes[1].getBuffer().get(uRawSrcBytes);

planes[2].getBuffer().get(vRawSrcBytes);

int j = 0, k = 0;

boolean flag = type.equals("NV21");

for (int i = 0; i < nv21.length; i++) {

if (i < w * h) {

// First fill in the w*h Y samples

nv21[i] = yRawSrcBytes[i];

} else {

if (flag) {

// For NV21, the first sample after the Y plane is V

nv21[i] = vRawSrcBytes[j];

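// PixelStride is the distance between valid samples: 1 = tightly packed, 2 = every other byte

// V comes from planes[2], so step by that plane's pixel stride

j += planes[2].getPixelStride();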

} else {

// For NV12, the first sample after the Y plane is U

nv21[i] = uRawSrcBytes[k];

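// PixelStride is the distance between valid samples: 1 = tightly packed, 2 = every other byte

// U comes from planes[1], so step by that plane's pixel stride

k += planes[1].getPixelStride();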

}

// Toggle the flag after each chroma sample so U and V alternate (UV or VU order)

flag = !flag;

}

}

}

private void setupCamera(int width, int height) {

CameraManager cameraManage = (CameraManager) getSystemService(Context.CAMERA_SERVICE);

try {

String[] cameraIdList = cameraManage.getCameraIdList();

for (String cameraId : cameraIdList) {

CameraCharacteristics cameraCharacteristics = cameraManage.getCameraCharacteristics(cameraId);

// For this demo, simply record with the back camera

if (cameraCharacteristics.get(CameraCharacteristics.LENS_FACING) != CameraCharacteristics.LENS_FACING_BACK) {

// Not the back camera (e.g. the front camera): skip this iteration

continue;

}

StreamConfigurationMap map = cameraCharacteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

Size[] outputSizes = map.getOutputSizes(SurfaceTexture.class);

Size size = new Size(1440, 1440);

previewSize = size;

mWidth = previewSize.getWidth();

mHeight = previewSize.getHeight();

mCameraId = cameraId;

}

} catch (CameraAccessException e) {

throw new RuntimeException(e);

}

openCamera();

}

private void initAudioRecord() {

// Use the same channel mask (mono) as the AudioRecord built below

AudioiMinBufferSize = AudioRecord.getMinBufferSize(SAMPLE_RATE, AudioFormat.CHANNEL_IN_MONO, AudioFormat.ENCODING_PCM_16BIT);

if (ActivityCompat.checkSelfPermission(this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED) {

// TODO: Consider calling

// ActivityCompat#requestPermissions

// here to request the missing permissions, and then overriding

// public void onRequestPermissionsResult(int requestCode, String[] permissions,

// int[] grantResults)

// to handle the case where the user grants the permission. See the documentation

// for ActivityCompat#requestPermissions for more details.

return;

}

audioRecord = new AudioRecord.Builder()

.setAudioFormat(new AudioFormat.Builder()

.setEncoding(AudioFormat.ENCODING_PCM_16BIT)

.setSampleRate(SAMPLE_RATE)

.setChannelMask(AudioFormat.CHANNEL_IN_MONO).build())

.setBufferSizeInBytes(AudioiMinBufferSize)

.setAudioSource(MediaRecorder.AudioSource.MIC)

.build();

}

private void openCamera() {

Log.d(TAG, "openCamera: success");

CameraManager cameraManager = (CameraManager) getSystemService(Context.CAMERA_SERVICE);

try {

if (ActivityCompat.checkSelfPermission(this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED) {

// TODO: Consider calling

// ActivityCompat#requestPermissions

// here to request the missing permissions, and then overriding

// public void onRequestPermissionsResult(int requestCode, String[] permissions,

// int[] grantResults)

// to handle the case where the user grants the permission. See the documentation

// for ActivityCompat#requestPermissions for more details.

return;

}

cameraManager.openCamera(mCameraId, stateCallback, cameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

private CameraDevice mCameraDevice;

private CameraDevice.StateCallback stateCallback = new CameraDevice.StateCallback() {

@Override

public void onOpened(@NonNull CameraDevice cameraDevice) {

Log.d(TAG, "onOpen");

mCameraDevice = cameraDevice;

startPreview(mCameraDevice);

}

@Override

public void onDisconnected(@NonNull CameraDevice cameraDevice) {

Log.d(TAG, "onDisconnected");

}

@Override

public void onError(@NonNull CameraDevice cameraDevice, int i) {

Log.d(TAG, "onError");

}

};

private void startPreview(CameraDevice mCameraDevice) {

try {

Log.d(TAG, "startPreview");

setPreviewImageReader();

previewCaptureRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

SurfaceTexture previewSurfaceTexture = previewTextureView.getSurfaceTexture();

previewSurfaceTexture.setDefaultBufferSize(mWidth, mHeight);

Surface previewSurface = new Surface(previewSurfaceTexture);

Surface previewImageReaderSurface = previewImageReader.getSurface();

previewCaptureRequestBuilder.addTarget(previewSurface);

mCameraDevice.createCaptureSession(Arrays.asList(previewSurface, previewImageReaderSurface), new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(@NonNull CameraCaptureSession cameraCaptureSession) {

Log.d(TAG, "onConfigured");

previewCaptureSession = cameraCaptureSession;

try {

cameraCaptureSession.setRepeatingRequest(previewCaptureRequestBuilder.build(), cameraPreviewCallback, cameraHandler);

} catch (CameraAccessException e) {

throw new RuntimeException(e);

}

}

@Override

public void onConfigureFailed(@NonNull CameraCaptureSession cameraCaptureSession) {

}

}, cameraHandler);

} catch (CameraAccessException e) {

throw new RuntimeException(e);

}

}

private volatile boolean videoIsReadyToStop=false;

private CameraCaptureSession.CaptureCallback cameraPreviewCallback=new CameraCaptureSession.CaptureCallback() {

@Override

public void onCaptureCompleted(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull TotalCaptureResult result) {

super.onCaptureCompleted(session, request, result);

if(videoIsReadyToStop){

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

throw new RuntimeException(e);

}

videoIsReadyToStop=false;

stopMediaCodecThread();

}

}

};

private Size getOptimalSize(Size[] sizes, int width, int height) {

Size tempSize = new Size(width, height);

List<Size> adaptSize = new ArrayList<>();

for (Size size : sizes) {

if (width > height) {

// Landscape orientation (or a tablet)

if (size.getHeight() > height && size.getWidth() > width) {

adaptSize.add(size);

}

} else {

// Portrait orientation

if (size.getWidth() > height && size.getHeight() > width) {

adaptSize.add(size);

}

}

}

if (adaptSize.size() > 0) {

tempSize = adaptSize.get(0);

int minnum = Integer.MAX_VALUE;

for (Size size : adaptSize) {

// Compare areas (width * height), not height squared

int num = size.getWidth() * size.getHeight() - width * height;

if (num < minnum) {

minnum = num;

tempSize = size;

}

}

}

return tempSize;

}

@Override

public void onClick(View view) {

switch (view.getId()) {

case R.id.shutterclick:

if (isRecordingVideo) {

//stop recording video

isRecordingVideo = false;

// Stop recording

Log.d(TAG,"Stop recording video");

stopRecordingVideo();

} else {

isRecordingVideo = true;

// Start recording

startRecording();

}

break;

}

}

private void stopRecordingVideo() {

stopVideoSession();

stopAudioRecord();

}

private void stopMediaMuxer() {

isMediaMuxerStarted=-1;

mediaMuxer.stop();

mediaMuxer.release();

}

private void stopMediaCodecThread() {

isStop=2;

AudioCodecThread=null;

VideoCodecThread=null;

}

private void stopMediaCodec() {

AudioCodec.stop();

AudioCodec.release();

videoMediaCodec.stop();

videoMediaCodec.release();

}

private void stopVideoSession() {

if (previewCaptureSession!=null){

try {

previewCaptureSession.stopRepeating();

videoIsReadyToStop=true;

// Switch back to the plain preview request; cameraPreviewCallback then stops the codec threads

previewCaptureSession.setRepeatingRequest(previewCaptureRequestBuilder.build(), cameraPreviewCallback, cameraHandler);

} catch (CameraAccessException e) {

throw new RuntimeException(e);

}

}

}

private void stopAudioRecord() {

mIsAudioRecording=false;

audioRecord.stop();

audioRecord.release();

audioRecord=null;

audioRecordThread=null;

}

private void startRecording() {

isStop=1;

nanoTime = System.nanoTime();

initMediaMuxer();

initAudioRecord();

initMediaCodec(mWidth, mHeight);

initAudioCodec();

// Run each MediaCodec on its own thread

initMediaCodecThread();

// Start the MediaCodecs and their threads

startMediaCodec();

// Start the recording session

startVideoSession();

// Start audio recording

startAudioRecord();

}

private void startMediaCodec() {

if (AudioCodec!=null){

AudioCodecIsStoped=0;

AudioCodec.start();

}

if (videoMediaCodec!=null){

videoMediaCodecIsStoped=0;

videoMediaCodec.start();

}

if (VideoCodecThread!=null){

VideoCodecThread.start();

}

if (AudioCodecThread!=null){

AudioCodecThread.start();

}

}

private void initMediaCodecThread() {

VideoCodecThread=new Thread(new Runnable() {

@Override

public void run() {

// Output is H.264

while (true){

if (isStop==2){

Log.d(TAG,"videoMediaCodec is stopping");

break;

}

encodeVideoH264();

}

videoMediaCodec.stop();

videoMediaCodec.release();

videoMediaCodecIsStoped=1;

if (AudioCodecIsStoped==1){

stopMediaMuxer();

}

}

});

AudioCodecThread=new Thread(new Runnable() {

@Override

public void run() {

while (true){

if (isStop==2){

Log.d(TAG,"AudioCodec is stopping");

break;

}

encodePCMToAC();

}

AudioCodec.stop();

AudioCodec.release();

AudioCodecIsStoped=1;

if (videoMediaCodecIsStoped==1){

stopMediaMuxer();

}

}

});

}

private void startAudioRecord() {

audioRecordThread = new Thread(new Runnable() {

@Override

public void run() {

mIsAudioRecording = true;

audioRecord.startRecording();

while (mIsAudioRecording) {

byte[] inputAudioData = new byte[AudioiMinBufferSize];

int res = audioRecord.read(inputAudioData, 0, inputAudioData.length);

if (res > 0) {

//Log.d(TAG,res+"");

if (AudioCodec!=null){

if (captureListener!=null){

captureListener.onCaptureListener(inputAudioData,res);

}

//callbackData(inputAudioData,inputAudioData.length);

}

}

}

}

});

audioRecordThread.start();

}

private void initMediaMuxer() {

String filename = Environment.getExternalStorageDirectory().getAbsolutePath() + "/DCIM/Camera/" + getCurrentTime() + ".mp4";

try {

mediaMuxer = new MediaMuxer(filename, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);

mediaMuxer.setOrientationHint(90);

} catch (IOException e) {

throw new RuntimeException(e);

}

}

public String getCurrentTime() {

Date date = new Date(System.currentTimeMillis());

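// HH (24-hour clock) rather than hh, so morning and afternoon recordings don't get the same file name

DateFormat dateFormat = new SimpleDateFormat("yyyyMMddHHmmss");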

return dateFormat.format(date);

}

// Initialize the audio MediaCodec

private void initAudioCodec() {

MediaFormat format = MediaFormat.createAudioFormat(MIMETYPE_AUDIO_AAC, SAMPLE_RATE, CHANNEL_COUNT);

format.setInteger(MediaFormat.KEY_AAC_PROFILE, MediaCodecInfo.CodecProfileLevel.AACObjectLC);

format.setInteger(MediaFormat.KEY_BIT_RATE, BIT_RATE);

format.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, 8192);

try {

AudioCodec = MediaCodec.createEncoderByType(MIMETYPE_AUDIO_AAC);

AudioCodec.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);

} catch (IOException e) {

throw new RuntimeException(e);

}

}

private void callbackData(byte[] inputAudioData, int length) {

// We already have the raw PCM bytes from AudioRecord

// Next, feed them into the MediaCodec

int index = AudioCodec.dequeueInputBuffer(-1);

if (index<0){

return;

}

Log.d(TAG,"AudioCodec.dequeueInputBuffer:"+index);

// getInputBuffers() is deprecated since API 21; fetch the single buffer by index instead

ByteBuffer audioInputBuffer = AudioCodec.getInputBuffer(index);

audioInputBuffer.clear();

Log.d(TAG, "call back Data length:" + length);

Log.d(TAG, "call back Data audioInputBuffer remain:" + audioInputBuffer.remaining());

// Only the first `length` bytes were filled by AudioRecord.read(); don't queue the stale tail of the array

audioInputBuffer.put(inputAudioData, 0, length);

// Note: not passed to queueInputBuffer() below; assumes 44.1 kHz, 2 channels, 16-bit PCM

presentationTimeUs += (long) (1.0 * length / (44100 * 2 * (16 / 8)) * 1000000.0);

AudioCodec.queueInputBuffer(index, 0, length, (System.nanoTime()-nanoTime)/1000, 0);

}

/*private void getEncordData() {

MediaCodec.BufferInfo outputBufferInfo = new MediaCodec.BufferInfo();

// Fetch encoder output

int flag = AudioCodec.dequeueOutputBuffer(outputBufferInfo, 0);

Log.d(TAG, "CALL BACK DATA FLAG:" + flag);

if (flag == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {

// This branch always runs first

// The track can be added to MediaMuxer now, but don't start the muxer until both tracks have been added

mAudioTrackIndex = mediaMuxer.addTrack(AudioCodec.getOutputFormat());

if (mAudioTrackIndex != -1 && mVideoTrackIndex != -1) {

mediaMuxer.start();

Log.d(TAG, "AudioMediaCodec start mediaMuxer");

isMediaMuxerStarted = true;

}

} else {

if (isMediaMuxerStarted) {

if (flag >= 0) {

if (mAudioTrackIndex != -1) {

Log.d(TAG, "AudioCodec.getOutputBuffer:");

ByteBuffer outputBuffer = AudioCodec.getOutputBuffer(flag);

mediaMuxer.writeSampleData(mAudioTrackIndex, outputBuffer, outputBufferInfo);

AudioCodec.releaseOutputBuffer(flag, false);

}

}

}

}

}*/

private void startVideoSession() {

Log.d(TAG, "startVideoSession");

if (previewCaptureSession!=null){

try {

previewCaptureSession.stopRepeating();

} catch (CameraAccessException e) {

throw new RuntimeException(e);

}

}

SurfaceTexture previewSurfaceTexture = previewTextureView.getSurfaceTexture();

Surface previewSurface = new Surface(previewSurfaceTexture);

Surface previewImageReaderSurface = previewImageReader.getSurface();

try {

videoRequest = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_RECORD);

videoRequest.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

videoRequest.addTarget(previewSurface);

videoRequest.addTarget(previewImageReaderSurface);

previewCaptureSession.setRepeatingRequest(videoRequest.build(), videosessioncallback, cameraHandler);

} catch (CameraAccessException e) {

throw new RuntimeException(e);

}

}

private CameraCaptureSession.CaptureCallback videosessioncallback = new CameraCaptureSession.CaptureCallback() {

@Override

public void onCaptureCompleted(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull TotalCaptureResult result) {

super.onCaptureCompleted(session, request, result);

}

};

public interface AudioCaptureListener {

/**

* Callback delivering captured audio data

*

* @param audioSource   the captured audio data

* @param audioReadSize number of bytes read in this call

*/

void onCaptureListener(byte[] audioSource,int audioReadSize);

}

public AudioCaptureListener getCaptureListener() {

return captureListener;

}

public void setCaptureListener(AudioCaptureListener captureListener) {

this.captureListener = captureListener;

}

}

With that, the basic functionality is complete.

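That said, I've left a few issues unresolved, since they aren't the focus of this demo. First, tapping record a second time after a recording has finished crashes the app.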
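One likely contributor to the crash, judging from the code above (not verified on a device): startRecording() never resets mVideoTrackIndex and mAudioTrackIndex to -1. On the second recording, whichever codec reports INFO_OUTPUT_FORMAT_CHANGED first still sees the other track index left over from the previous session, calls mediaMuxer.start() with only one track added, and the following addTrack() then fails because MediaMuxer does not accept new tracks after start(). Below is a minimal sketch of per-recording start gating, in plain Java so it runs without a device. The MuxerStartGate class is hypothetical and not part of the original demo; in the app, reset() would be called from startRecording() next to creating the new MediaMuxer, and each encode thread would call onTrackAdded() right after its mediaMuxer.addTrack().

```java
/**
 * Hypothetical helper (not part of the original demo): counts the tracks added
 * to the muxer for the current recording and reports, exactly once, when it is
 * safe to call MediaMuxer.start().
 */
final class MuxerStartGate {
    private final int expectedTracks;
    private int addedTracks = 0;
    private boolean started = false;

    MuxerStartGate(int expectedTracks) {
        this.expectedTracks = expectedTracks;
    }

    /** Call right after each addTrack(); returns true only when the last expected track arrives. */
    synchronized boolean onTrackAdded() {
        addedTracks++;
        if (!started && addedTracks == expectedTracks) {
            started = true;
            return true;
        }
        return false;
    }

    synchronized boolean isStarted() {
        return started;
    }

    /** Call at the beginning of every new recording so no state leaks from the last one. */
    synchronized void reset() {
        addedTracks = 0;
        started = false;
    }

    public static void main(String[] args) {
        MuxerStartGate gate = new MuxerStartGate(2);
        System.out.println(gate.onTrackAdded()); // false: only one track so far
        System.out.println(gate.onTrackAdded()); // true: both tracks added, start the muxer now
        gate.reset();                            // second recording
        System.out.println(gate.onTrackAdded()); // false again, instead of starting with one track
    }
}
```

The methods are synchronized because the video and audio codec threads both add tracks, and without that the two threads could both see "one track missing" or both decide to start.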

Second, the audio timestamps don't quite line up, which makes the voice sound slightly distorted.
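On the timestamps: callbackData() stamps each audio buffer with (System.nanoTime()-nanoTime)/1000 at the moment it is queued, so any scheduling jitter on the recording thread ends up in the audio PTS. A common alternative is to derive the PTS from the number of PCM bytes fed to the encoder so far. The sketch below assumes interleaved 16-bit PCM, and the PcmPtsCounter class is hypothetical. Also worth checking (an assumption, since CHANNEL_COUNT is defined outside this listing): the AudioRecord is built with CHANNEL_IN_MONO, so the AAC format should be created with a channel count of 1. Feeding mono PCM to an encoder configured for two channels would likely distort both the playback speed and the pitch.

```java
/**
 * Hypothetical helper: derives encoder input timestamps from the amount of PCM
 * actually captured rather than from the wall clock.
 * Assumes interleaved 16-bit PCM (AudioFormat.ENCODING_PCM_16BIT).
 */
final class PcmPtsCounter {
    private static final int BYTES_PER_SAMPLE = 2; // 16-bit PCM
    private final int sampleRate;
    private final int channelCount;
    private long totalBytes = 0;

    PcmPtsCounter(int sampleRate, int channelCount) {
        this.sampleRate = sampleRate;
        this.channelCount = channelCount;
    }

    /** Returns the PTS in microseconds for a buffer of `length` bytes, then advances the counter. */
    long nextPtsUs(int length) {
        long frames = totalBytes / (BYTES_PER_SAMPLE * channelCount);
        long ptsUs = frames * 1_000_000L / sampleRate;
        totalBytes += length;
        return ptsUs;
    }

    /** Call when a new recording starts. */
    void reset() {
        totalBytes = 0;
    }

    public static void main(String[] args) {
        PcmPtsCounter counter = new PcmPtsCounter(44100, 1);
        System.out.println(counter.nextPtsUs(4410)); // 0
        System.out.println(counter.nextPtsUs(4410)); // 50000: 4410 bytes = 2205 mono frames = 50 ms
        System.out.println(counter.nextPtsUs(4410)); // 100000
    }
}
```

In callbackData(), the last argument of queueInputBuffer() would then be counter.nextPtsUs(length) instead of the nanoTime difference. The trade-off is that dropped audio reads are no longer reflected in the timeline, which is usually preferable to jitter for a simple demo.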

Feel free to dig into these yourselves.
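Another spot worth checking: YUVToNV21_NV12() copies the Y plane with nv21[i] = yRawSrcBytes[i] and steps through the chroma planes by pixelStride alone. That is only correct when each plane's rowStride equals the image width. On devices that pad rows, the padding bytes end up in the frame. The sketch below mirrors the rowStride/pixelStride indexing already used in getuvBufferWithoutPaddingP() and applies the same idea to the Y plane. It is plain Java working on byte arrays, and the Nv21Packer name is hypothetical. In the app, the arrays and strides would come from Image.Plane.getBuffer(), getRowStride() and getPixelStride(). It relies on the YUV_420_888 guarantee that the U and V planes share the same row stride and pixel stride.

```java
import java.util.Arrays;

/**
 * Hypothetical helper: packs YUV_420_888-style planes into an NV21 byte array
 * (full Y plane, then interleaved V/U), skipping row padding via rowStride and
 * picking chroma samples via pixelStride.
 */
final class Nv21Packer {

    static byte[] toNv21(byte[] y, int yRowStride,
                         byte[] u, byte[] v, int uvRowStride, int uvPixelStride,
                         int width, int height) {
        byte[] out = new byte[width * height * 3 / 2];
        int pos = 0;
        // Y plane: copy exactly `width` bytes from the start of each row, skipping padding
        for (int row = 0; row < height; row++) {
            System.arraycopy(y, row * yRowStride, out, pos, width);
            pos += width;
        }
        // Chroma is subsampled 2x in both directions; NV21 stores V before U
        for (int row = 0; row < height / 2; row++) {
            for (int col = 0; col < width / 2; col++) {
                int idx = row * uvRowStride + col * uvPixelStride;
                out[pos++] = v[idx];
                out[pos++] = u[idx];
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // 4x2 image, Y rows padded to 6 bytes, semi-planar chroma (pixelStride 2)
        byte[] y = {1, 2, 3, 4, 0, 0, 5, 6, 7, 8, 0, 0};
        byte[] u = {10, 99, 11};
        byte[] v = {20, 99, 21};
        byte[] nv21 = toNv21(y, 6, u, v, 6, 2, 4, 2);
        System.out.println(Arrays.toString(nv21)); // [1, 2, 3, 4, 5, 6, 7, 8, 20, 10, 21, 11]
    }
}
```

For NV12, the only change is swapping the two writes in the chroma loop so U comes before V.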
