ClickHouse(HA)

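Introduction

This document uses three servers as an example and deploys a ClickHouse cluster with 3 shards and 2 replicas per shard.

The layout is as follows: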

| Host | Port | Role |
|---|---|---|
| cn-01 | 9000 | Shard 1, replica 1 |
| cn-01 | 9001 | Shard 3, replica 2 |
| cn-02 | 9000 | Shard 2, replica 1 |
| cn-02 | 9001 | Shard 1, replica 2 |
| cn-03 | 9000 | Shard 3, replica 1 |
| cn-03 | 9001 | Shard 2, replica 2 |

Note: if ClickHouse uses ZooKeeper to keep replicated data consistent, install ZooKeeper first.
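The layout above places replica 1 of shard N on host N (port 9000) and replica 2 on the next host in the ring (port 9001). The following is a minimal sketch of that rule; the function name `replica_location` is an assumption made for illustration and is not part of ClickHouse.

```shell
#!/bin/sh
# Print the host:port that holds a given replica of a given shard,
# assuming the circular layout from the table (hosts cn-01..cn-03).
replica_location() {
    shard=$1      # shard number: 1, 2 or 3
    replica=$2    # replica number: 1 or 2
    hosts=3
    if [ "$replica" -eq 1 ]; then
        host=$shard                      # replica 1 lives on the host with the same number
        port=9000
    else
        host=$(( shard % hosts + 1 ))    # replica 2 lives on the next host, wrapping around
        port=9001
    fi
    printf 'cn-%02d:%d\n' "$host" "$port"
}

# List all six placements
for s in 1 2 3; do
    for r in 1 2; do
        echo "shard $s replica $r -> $(replica_location "$s" "$r")"
    done
done
```

With this placement, stopping any single server still leaves one replica of every shard available.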

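Download the packages

Alibaba Cloud mirror: https://mirrors.aliyun.com/clickhouse/rpm/

Official site: https://packages.clickhouse.com/rpm/

Taking the ARM (aarch64) build of version 22.3.10.22 as an example, download the following four RPM packages: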

clickhouse-client-22.3.10.22.aarch64.rpm

clickhouse-common-static-22.3.10.22.aarch64.rpm

clickhouse-common-static-dbg-22.3.10.22.aarch64.rpm

clickhouse-server-22.3.10.22.aarch64.rpm

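Note: for other package formats, refer to the official documentation.

Installation

Upload the packages to the servers

On all three servers, create a clickhouse directory under /opt to hold the packages: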

mkdir /opt/clickhouse

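Install the packages

Run the following on all three servers:

# Install all packages

# -i (install): install the package

# -v (verbose): print detailed output

# -h (hash): print hash marks to show installation progress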

rpm -ivh /opt/clickhouse/*.rpm

# File locations after installation

# bin --> /usr/bin

# conf --> /etc/clickhouse-server

# lib --> /var/lib/clickhouse

# log --> /var/log/clickhouse-server

Note: the installer prompts for a password during installation; set one as needed.

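Edit the configuration files

On all three servers, create the directories and files:

# Data directories (-p also creates /data if it does not exist yet)

mkdir -p /data/clickhouse

mkdir -p /data/clickhouse01

# Log directory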

mkdir /var/log/clickhouse-server01

# Configuration files

touch /etc/clickhouse-server/metrika.xml

touch /etc/clickhouse-server/metrika01.xml

Run the following on all three servers:

# Copy config.xml for the second instance on each server, which serves replica 2 of a shard

cp /etc/clickhouse-server/config.xml /etc/clickhouse-server/config01.xml

Edit config.xml (identical on all three servers):

<!-- Modify -->

<path>/data/clickhouse/</path>

<tmp_path>/data/clickhouse/tmp/</tmp_path>

<user_files_path>/data/clickhouse/user_files/</user_files_path>

<user_directories>

<local_directory>

<path>/data/clickhouse/access/</path>

</local_directory>

</user_directories>

<remote_servers incl="remote_servers">

<!-- Add incl="remote_servers" and clear the element's contents -->

</remote_servers>

<format_schema_path>/data/clickhouse/format_schemas/</format_schema_path>

<!-- Uncomment -->

<listen_host>::</listen_host>

<!-- Add -->

<zookeeper incl="zookeeper-servers" optional="true"></zookeeper>

<include_from>/etc/clickhouse-server/metrika.xml</include_from>

<macros incl="macros" optional="true"></macros>

Edit config01.xml (identical on all three servers):

<!-- Modify -->

<logger>

<log>/var/log/clickhouse-server01/clickhouse-server.log</log>

<errorlog>/var/log/clickhouse-server01/clickhouse-server.err.log</errorlog>

</logger>

<http_port>8124</http_port>

<tcp_port>9001</tcp_port>

<mysql_port>9003</mysql_port>

<postgresql_port>9006</postgresql_port>

<interserver_http_port>9010</interserver_http_port>

<path>/data/clickhouse01/</path>

<tmp_path>/data/clickhouse01/tmp/</tmp_path>

<user_files_path>/data/clickhouse01/user_files/</user_files_path>

<user_directories>

<local_directory>

<path>/data/clickhouse01/access/</path>

</local_directory>

</user_directories>

<remote_servers incl="remote_servers">

<!-- Add incl="remote_servers" and clear the element's contents -->

</remote_servers>

<format_schema_path>/data/clickhouse01/format_schemas/</format_schema_path>

<!-- Uncomment -->

<listen_host>::</listen_host>

<!-- Add -->

<zookeeper incl="zookeeper-servers" optional="true"></zookeeper>

<include_from>/etc/clickhouse-server/metrika01.xml</include_from>

<macros incl="macros" optional="true"></macros>
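The differences between config.xml and config01.xml listed above are mechanical (ports, log directory, data paths, include file), so they could also be applied with `sed` rather than by hand. This is only a sketch: it assumes config.xml has already been edited as shown earlier, so that its paths start with /data/clickhouse/, and the function name `make_second_instance_config` is made up for this example.

```shell
#!/bin/sh
# Read a config.xml-style file on stdin and write a copy adjusted for the
# second instance to stdout. Port values follow the config01.xml listing above.
make_second_instance_config() {
    sed \
        -e 's#<http_port>[0-9]*</http_port>#<http_port>8124</http_port>#' \
        -e 's#<tcp_port>[0-9]*</tcp_port>#<tcp_port>9001</tcp_port>#' \
        -e 's#<mysql_port>[0-9]*</mysql_port>#<mysql_port>9003</mysql_port>#' \
        -e 's#<postgresql_port>[0-9]*</postgresql_port>#<postgresql_port>9006</postgresql_port>#' \
        -e 's#<interserver_http_port>[0-9]*</interserver_http_port>#<interserver_http_port>9010</interserver_http_port>#' \
        -e 's#/var/log/clickhouse-server/#/var/log/clickhouse-server01/#g' \
        -e 's#/data/clickhouse/#/data/clickhouse01/#g' \
        -e 's#/etc/clickhouse-server/metrika\.xml#/etc/clickhouse-server/metrika01.xml#'
}

# Possible usage (not run here):
#   make_second_instance_config < /etc/clickhouse-server/config.xml \
#       > /etc/clickhouse-server/config01.xml
```

Reviewing the generated file with `diff config.xml config01.xml` before starting the second instance is a cheap way to confirm that only the intended lines changed.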

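Edit metrika.xml. The file differs between the three servers; see the comments for details.

<yandex>

<!-- Cluster shard and replica configuration: 3 shards, 2 replicas -->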

<remote_servers>

<ck_3shards_2replicas>

<!-- Shard 1 -->

<shard>

<!-- Whether to write to only one replica and let replication sync the data to the others -->

<internal_replication>true</internal_replication>

<!-- Shard 1, replica 1 -->

<replica>

<host>cn-01</host>

<port>9000</port>

</replica>

<!-- Shard 1, replica 2 -->

<replica>

<host>cn-02</host>

<port>9001</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>cn-02</host>

<port>9000</port>

</replica>

<replica>

<host>cn-03</host>

<port>9001</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>cn-03</host>

<port>9000</port>

</replica>

<replica>

<host>cn-01</host>

<port>9001</port>

</replica>

</shard>

</ck_3shards_2replicas>

</remote_servers>

<!-- ZooKeeper configuration -->

<zookeeper-servers>

<node index="1">

<host>cn-01</host>

<port>2181</port>

</node>

<node index="2">

<host>cn-02</host>

<port>2181</port>

</node>

<node index="3">

<host>cn-03</host>

<port>2181</port>

</node>

</zookeeper-servers>

<!-- Compression policy -->

<clickhouse_compression>

<case>

<min_part_size>10000000000</min_part_size>

<min_part_size_ratio>0.01</min_part_size_ratio>

<method>lz4</method>

</case>

</clickhouse_compression>

<!-- Network addresses (::/0 matches any address) -->

<networks>

<ip>::/0</ip>

</networks>

<!-- Note: the macros block differs on each server; keep only the block for the local host -->

<!-- cn-01 -->

<macros>

<layer>01</layer>

<shard>01</shard>

<replica>cluster01-01-1</replica>

</macros>

<!-- cn-02 -->

<macros>

<layer>01</layer>

<shard>02</shard>

<replica>cluster01-02-1</replica>

</macros>

<!-- cn-03 -->

<macros>

<layer>01</layer>

<shard>03</shard>

<replica>cluster01-03-1</replica>

</macros>

</yandex>

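Edit metrika01.xml. The file differs between the three servers; see the comments for details.

<yandex>

<!-- Cluster shard and replica configuration: 3 shards, 2 replicas -->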

<remote_servers>

<ck_3shards_2replicas>

<!-- Shard 1 -->

<shard>

<!-- Whether to write to only one replica and let replication sync the data to the others -->

<internal_replication>true</internal_replication>

<!-- Shard 1, replica 1 -->

<replica>

<host>cn-01</host>

<port>9000</port>

</replica>

<!-- Shard 1, replica 2 -->

<replica>

<host>cn-02</host>

<port>9001</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>cn-02</host>

<port>9000</port>

</replica>

<replica>

<host>cn-03</host>

<port>9001</port>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>cn-03</host>

<port>9000</port>

</replica>

<replica>

<host>cn-01</host>

<port>9001</port>

</replica>

</shard>

</ck_3shards_2replicas>

</remote_servers>

<!-- ZooKeeper configuration -->

<zookeeper-servers>

<node index="1">

<host>cn-01</host>

<port>2181</port>

</node>

<node index="2">

<host>cn-02</host>

<port>2181</port>

</node>

<node index="3">

<host>cn-03</host>

<port>2181</port>

</node>

</zookeeper-servers>

<!-- Compression policy -->

<clickhouse_compression>

<case>

<min_part_size>10000000000</min_part_size>

<min_part_size_ratio>0.01</min_part_size_ratio>

<method>lz4</method>

</case>

</clickhouse_compression>

<!-- Network addresses (::/0 matches any address) -->

<networks>

<ip>::/0</ip>

</networks>

<!-- Note: the macros block differs on each server; keep only the block for the local host -->

<!-- cn-01 -->

<macros>

<layer>01</layer>

<shard>03</shard>

<replica>cluster01-03-2</replica>

</macros>

<!-- cn-02 -->

<macros>

<layer>01</layer>

<shard>01</shard>

<replica>cluster01-01-2</replica>

</macros>

<!-- cn-03 -->

<macros>

<layer>01</layer>

<shard>02</shard>

<replica>cluster01-02-2</replica>

</macros>

</yandex>

Note: for more configuration options, see the official documentation.
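Because each of the six instances needs its own `<macros>` block, it is easy to paste the wrong one into a file. As a rough sketch, the block for a given host and instance can be derived from the layout used in this document. The helper name `macros_for` and its argument convention are assumptions for illustration only.

```shell
#!/bin/sh
# Print the <macros> block for one ClickHouse instance.
#   $1: host number (1..3, meaning cn-01..cn-03)
#   $2: instance number (1 = port 9000 / metrika.xml, 2 = port 9001 / metrika01.xml)
macros_for() {
    host=$1
    instance=$2
    if [ "$instance" -eq 1 ]; then
        shard=$host                         # first instance: shard number equals host number
    else
        shard=$(( (host + 1) % 3 + 1 ))     # second instance: replica 2 of the previous shard
    fi
    printf '<macros>\n'
    printf '    <layer>01</layer>\n'
    printf '    <shard>%02d</shard>\n' "$shard"
    printf '    <replica>cluster01-%02d-%d</replica>\n' "$shard" "$instance"
    printf '</macros>\n'
}

# Block for metrika01.xml on cn-01 (shard 03, replica cluster01-03-2)
macros_for 1 2
```

The `{shard}` and `{replica}` values produced here are the ones substituted into the ReplicatedMergeTree path when tables are created, so two replicas of the same shard must share the shard value and differ in the replica value.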

On all three servers, change the owner and group of the files and directories:

chown -R clickhouse:clickhouse /data/clickhouse/

chown -R clickhouse:clickhouse /data/clickhouse01/

chown -R clickhouse:clickhouse /var/log/clickhouse-server01

chown -R clickhouse:clickhouse /etc/clickhouse-server/metrika.xml

chown -R clickhouse:clickhouse /etc/clickhouse-server/metrika01.xml

chown -R clickhouse:clickhouse /etc/clickhouse-server/config.xml

chown -R clickhouse:clickhouse /etc/clickhouse-server/config01.xml

Startup

On all three servers, start the first instance, which uses the default configuration file config.xml:

clickhouse start

On all three servers, start the second instance, which uses config01.xml:

# Copy the systemd unit file

cp /usr/lib/systemd/system/clickhouse-server.service /usr/lib/systemd/system/clickhouse-server01.service

# Edit clickhouse-server01.service so that ExecStart reads

ExecStart=/usr/bin/clickhouse-server --config=/etc/clickhouse-server/config01.xml --pid-file=/run/clickhouse-server/clickhouse-server01.pid

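# Reload systemd so it picks up the new unit file, then start the second instance

systemctl daemon-reload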

systemctl start clickhouse-server01.service
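The ExecStart change described above can also be scripted. The sketch below rewrites whatever ExecStart line the packaged unit file contains with the one given in this document; the function name `set_second_instance_execstart` is hypothetical, and other lines of the unit file pass through unchanged.

```shell
#!/bin/sh
# Read a systemd unit file on stdin and write it to stdout with the
# ExecStart line replaced by the one for the second instance.
set_second_instance_execstart() {
    sed 's#^ExecStart=.*#ExecStart=/usr/bin/clickhouse-server --config=/etc/clickhouse-server/config01.xml --pid-file=/run/clickhouse-server/clickhouse-server01.pid#'
}

# Possible usage (not run here):
#   set_second_instance_execstart < /usr/lib/systemd/system/clickhouse-server.service \
#       > /usr/lib/systemd/system/clickhouse-server01.service
```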

Verify the startup

On each of the three servers, connect with the client and view the cluster information:

clickhouse-client --port 9000 -m

clickhouse-client --port 9001 -m

-- Once connected, view the cluster information

SELECT * from system.clusters;

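# Columns of system.clusters

cluster: cluster name

shard_num: shard number

shard_weight: shard weight

replica_num: replica number

host_name: host name of the server

host_address: IP address of the server

port: port used to connect to the ClickHouse server

is_local: whether the host is the local server running the query

user: name of the user used to connect to the server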
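To check both instances on a server without opening an interactive session, the same query can be run non-interactively with `clickhouse-client --query`. This is a sketch only: the `CLICKHOUSE_CLIENT` variable and the `cluster_overview` function are assumptions of this example, and a `--password` option would be needed if a password was set during installation.

```shell
#!/bin/sh
# The client binary can be overridden through CLICKHOUSE_CLIENT (an assumption of this sketch).
CLICKHOUSE_CLIENT=${CLICKHOUSE_CLIENT:-clickhouse-client}

# Query system.clusters on both local instances (ports 9000 and 9001).
cluster_overview() {
    for port in 9000 9001; do
        echo "== instance on port $port =="
        "$CLICKHOUSE_CLIENT" --port "$port" --query \
            "SELECT cluster, shard_num, replica_num, host_name, port, is_local FROM system.clusters WHERE cluster = 'ck_3shards_2replicas' ORDER BY shard_num, replica_num"
    done
}

# Possible usage (not run here):
#   cluster_overview
```

If the configuration was picked up, each instance should list six rows for ck_3shards_2replicas, with is_local set to 1 only on the row that describes the instance being queried.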

ZooKeeper(HA)

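Introduction

This document uses three servers as an example.

Note: ZooKeeper depends on the JDK; install the JDK first.

Download the package

Alibaba Cloud mirror: https://mirrors.aliyun.com/apache/zookeeper/

Official site: https://zookeeper.apache.org/releases.html

Taking version 3.7.1 as an example, download the following package: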

apache-zookeeper-3.7.1-bin.tar.gz

Installation

Upload the package to the servers

On all three servers, create a zookeeper directory under /opt to hold the package:

mkdir /opt/zookeeper

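Upload the file to the /opt/zookeeper directory.

Extract and install

Run the following on all three servers:

tar -zxvf /opt/zookeeper/apache-zookeeper-3.7.1-bin.tar.gz -C /opt/zookeeper

# Move the extracted directory to /etc and rename it

mv /opt/zookeeper/apache-zookeeper-3.7.1-bin /etc/zookeeper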

Edit the configuration file

Run the following on all three servers:

# Create the ZooKeeper data directory

mkdir /data/zookeeper

# Copy the sample configuration file

cp /etc/zookeeper/conf/zoo_sample.cfg /etc/zookeeper/conf/zoo.cfg

Edit zoo.cfg (identical on all three servers):

# Change the data directory

dataDir=/data/zookeeper

# server.<node id>=<host name or IP address>:<quorum communication port>:<leader election port>

server.1=cn-01:2888:3888

server.2=cn-02:2888:3888

server.3=cn-03:2888:3888

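# Common settings explained

# Client port

# clientPort=2181

# Basic time unit in milliseconds, used as the heartbeat interval between ZooKeeper servers and between clients and servers

# tickTime=2000

# Maximum number of ticks a follower may take to connect to and sync with the leader at startup

# initLimit=10

# Maximum number of ticks allowed between a request and its acknowledgement between the leader and a follower

# syncLimit=5

# Transaction log directory

# dataLogDir=/data/log/zookeeper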

Create the myid file

On cn-01, create a myid file in /data/zookeeper containing 1

On cn-02, create a myid file in /data/zookeeper containing 2

On cn-03, create a myid file in /data/zookeeper containing 3

# On cn-01

echo 1 > /data/zookeeper/myid

# On cn-02

echo 2 > /data/zookeeper/myid

# On cn-03

echo 3 > /data/zookeeper/myid
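Since the myid value must match the number in the corresponding `server.N` line of zoo.cfg, one way to avoid typing a different command on each host is to derive it from the host name. This sketch assumes the hosts are really named cn-01, cn-02 and cn-03; the function name `myid_from_hostname` is made up for this example.

```shell
#!/bin/sh
# Derive the ZooKeeper myid from a host name of the form cn-NN.
myid_from_hostname() {
    # Keep the part after the last "-" and strip leading zeros: "cn-02" -> "2"
    printf '%s\n' "${1##*-}" | sed 's/^0*//'
}

myid_from_hostname cn-02

# Possible usage on each server (not run here):
#   myid_from_hostname "$(hostname)" > /data/zookeeper/myid
```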

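Configure the ZooKeeper environment variables

Run the following on all three servers.

Edit /etc/profile and add the following, then run source /etc/profile to apply it: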

# Zookeeper Config

export ZOOKEEPER_HOME=/etc/zookeeper

export PATH=$PATH:$ZOOKEEPER_HOME/bin

Startup

Before starting, check whether the firewall on the three servers is turned off. Common operations:

# Stop the firewall

systemctl stop firewalld

# Prevent the firewall from starting at boot

systemctl disable firewalld

# Check the firewall status

systemctl status firewalld

# Start the firewall

systemctl start firewalld

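Note: if the firewall stays on and blocks the ZooKeeper ports (2181, 2888, 3888), the nodes cannot reach each other and ZooKeeper fails to start as a cluster.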
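Where stopping firewalld entirely is not acceptable, an alternative is to open only the ports ZooKeeper uses in this setup. The sketch below just prints the `firewall-cmd` commands so they can be reviewed first; it is not part of the original procedure, and the function name `zk_firewall_commands` is an assumption for illustration.

```shell
#!/bin/sh
# Print the firewalld commands that open the ZooKeeper ports used in this
# document (2181 client, 2888 quorum, 3888 election). Nothing is executed here.
zk_firewall_commands() {
    for port in 2181 2888 3888; do
        echo "firewall-cmd --permanent --add-port=${port}/tcp"
    done
    echo "firewall-cmd --reload"
}

zk_firewall_commands
```

After reviewing the output, the commands can be run as root on each server. The ClickHouse ports from the earlier sections would need the same treatment.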

Common ZooKeeper commands

# Start ZooKeeper

zkServer.sh start

# Check the ZooKeeper status; one node reports leader and the others report follower

zkServer.sh status

# Stop ZooKeeper

zkServer.sh stop
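When checking several nodes, it can help to reduce the status output to just the role. The sketch below filters the `Mode:` line that `zkServer.sh status` prints; the helper name `zk_mode` and the sample text fed to it are illustrative assumptions.

```shell
#!/bin/sh
# Extract the role (for example "leader" or "follower") from
# `zkServer.sh status` output read on stdin.
zk_mode() {
    sed -n 's/^Mode: //p'
}

# Example with captured output (the sample text is illustrative)
printf 'Using config: /etc/zookeeper/bin/../conf/zoo.cfg\nMode: follower\n' | zk_mode

# Possible usage on a server (not run here):
#   zkServer.sh status 2>/dev/null | zk_mode
```

An empty result usually means the node is not running or has not joined the quorum yet.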

ClickHouse Keeper

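Introduction

ClickHouse uses the ZooKeeper coordination system for data replication and distributed DDL query execution. ClickHouse Keeper is compatible with ZooKeeper and can be used in its place.

Note: at the time of writing, it was still in a pre-production stage, used internally only in some production environments and in CI tests, and external integrations were not supported.

Installation

No separate installation is needed: it ships with ClickHouse and only needs to be configured.

Note: running six nodes on three machines causes a problem with the clickhouse-keeper configuration, so the deployment is demonstrated with 3 shards and 1 replica instead.

# raft_configuration does not allow the same hostname to be listed twice; the error is: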

Local address specified more than once (2 times) in raft_configuration. Such configuration is not allowed because single host can vote multiple times.

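Configuration

Change the 3-shard, 2-replica setup to 3 shards with 1 replica for this test; the details are not shown again.

Create the directory

Run the following on all three servers:

# Create the clickhouse-keeper data directory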

mkdir /data/clickhouse-keeper

# Set the ownership

chown -R clickhouse:clickhouse /data/clickhouse-keeper

Edit the configuration file

Edit config.xml on cn-01:

<!-- Add -->

<keeper_server>

<!-- Must match the port in zookeeper-servers in metrika.xml -->

<tcp_port>2181</tcp_port>

<server_id>1</server_id>

<log_storage_path>/data/clickhouse-keeper/coordination/log</log_storage_path>

<snapshot_storage_path>/data/clickhouse-keeper/coordination/snapshots</snapshot_storage_path>

<coordination_settings>

<operation_timeout_ms>10000</operation_timeout_ms>

<session_timeout_ms>30000</session_timeout_ms>

<raft_logs_level>trace</raft_logs_level>

</coordination_settings>

<raft_configuration>

<server>

<id>1</id>

<hostname>cn-01</hostname>

<port>9444</port>

</server>

<server>

<id>2</id>

<hostname>cn-02</hostname>

<port>9444</port>

</server>

<server>

<id>3</id>

<hostname>cn-03</hostname>

<port>9444</port>

</server>

</raft_configuration>

</keeper_server>

Configuration for cn-02 and cn-03

<!-- cn-02: same as cn-01 except for this setting -->

<server_id>2</server_id>

<!-- cn-03: same as cn-01 except for this setting -->

<server_id>3</server_id>

Restart ClickHouse as usual and it will use clickhouse-keeper.

ClickHouse cluster test

Create the database

-- cluster_test: database name

-- ck_3shards_2replicas: cluster name

create database cluster_test on cluster ck_3shards_2replicas;

Create the tables

-- Create the local table

CREATE TABLE cluster_test.test_table ON CLUSTER ck_3shards_2replicas(

id UInt64 COMMENT 'UInt64',

protocol UInt16 COMMENT 'UInt16',

appProtocol UInt16 COMMENT 'UInt16',

srcMAC UInt64 COMMENT 'UInt64',

destMAC UInt64 COMMENT 'UInt64',

IPType UInt8 COMMENT 'UInt8',

srcIPv4 IPv4 COMMENT 'IPv4',

destIPv4 IPv4 COMMENT 'IPv4',

srcIPv6 IPv6 COMMENT 'IPv6',

destIPv6 IPv6 COMMENT 'IPv6',

srcPort UInt16 COMMENT 'UInt16',

destPort UInt16 COMMENT 'UInt16',

regionType UInt8 COMMENT 'UInt8',

srcCountry UInt16 COMMENT 'UInt16',

destCountry UInt16 COMMENT 'UInt16',

srcLoc UInt32 COMMENT 'UInt32',

destLoc UInt32 COMMENT 'UInt32',

srcTTL Array(UInt32) COMMENT 'Array(UInt32)',

srcTTLCount Array(UInt32) COMMENT 'Array(UInt32)',

destTTL Array(UInt32) COMMENT 'Array(UInt32)',

destTTLCount Array(UInt32) COMMENT 'Array(UInt32)',

outPackets UInt64 COMMENT 'UInt64',

outBytes UInt64 COMMENT 'UInt64',

validOutPackets UInt64 COMMENT 'UInt64',

validOutBytes UInt64 COMMENT 'UInt64',

inPackets UInt64 COMMENT 'UInt64',

inBytes UInt64 COMMENT 'UInt64',

validInPackets UInt64 COMMENT 'UInt64',

validInBytes UInt64 COMMENT 'UInt64',

totalPackets UInt64 COMMENT 'UInt64',

totalBytes UInt64 COMMENT 'UInt64',

sessionTime UInt32 COMMENT 'UInt32',

interactionCount UInt32 COMMENT 'UInt32',

closeType UInt8 COMMENT 'UInt8',

beginTime DateTime64(3) COMMENT 'DateTime64',

requestTime DateTime64(3) COMMENT 'DateTime64',

speed Float64 COMMENT 'Float64',

pcapFiles Array(UInt64) COMMENT 'Array(UInt64)',

pcapLocs Array(UInt64) COMMENT 'Array(UInt64)',

entropy Float64 COMMENT 'Float64',

synCount UInt8 COMMENT 'UInt8',

finCount UInt8 COMMENT 'UInt8',

rstCount UInt8 COMMENT 'UInt8',

alarm UInt8 COMMENT 'UInt8',

extensionType UInt8 COMMENT 'UInt8',

extensionString1 String COMMENT 'String',

extensionString2 String COMMENT 'String',

extensionString3 String COMMENT 'String',

extensionString4 String COMMENT 'String',

extensionString5 String COMMENT 'String',

extensionInt1 Int64 COMMENT 'Int64',

extensionInt2 Int64 COMMENT 'Int64',

extensionInt3 Int64 COMMENT 'Int64',

extensionInt4 Int64 COMMENT 'Int64',

extensionInt5 Int64 COMMENT 'Int64',

INDEX protocol_index protocol TYPE set(100) GRANULARITY 2,

INDEX appProtocol_index appProtocol TYPE set(65536) GRANULARITY 2,

INDEX srcMAC_index srcMAC TYPE bloom_filter GRANULARITY 4,

INDEX destMAC_index destMAC TYPE bloom_filter GRANULARITY 4,

INDEX srcIPv4_index srcIPv4 TYPE bloom_filter GRANULARITY 4,

INDEX destIPv4_index destIPv4 TYPE bloom_filter GRANULARITY 4,

INDEX srcPort_index srcPort TYPE set(65536) GRANULARITY 2,

INDEX destPort_index destPort TYPE set(65536) GRANULARITY 2,

INDEX srcCountry_index srcCountry TYPE set(65536) GRANULARITY 2,

INDEX destCountry_index destCountry TYPE set(65536) GRANULARITY 2,

INDEX outPackets_index outPackets TYPE minmax GRANULARITY 2,

INDEX outBytes_index outBytes TYPE minmax GRANULARITY 2,

INDEX inPackets_index inPackets TYPE minmax GRANULARITY 2,

INDEX inBytes_index inBytes TYPE minmax GRANULARITY 2,

INDEX sessionTime_index sessionTime TYPE minmax GRANULARITY 2,

INDEX beginTime_index beginTime TYPE minmax GRANULARITY 16,

INDEX requestTime_index requestTime TYPE minmax GRANULARITY 16,

INDEX speed_index speed TYPE minmax GRANULARITY 2,

INDEX alarm_index alarm TYPE set(2) GRANULARITY 16

)ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard}/test_table','{replica}')

PARTITION BY (regionType, extensionType)

PRIMARY KEY id

ORDER BY (id, requestTime, totalPackets, totalBytes, sessionTime, interactionCount)

SETTINGS index_granularity = 8192;

-- Create the distributed table

CREATE TABLE cluster_test.test_table_all ON CLUSTER ck_3shards_2replicas as cluster_test.test_table ENGINE=Distributed(ck_3shards_2replicas,cluster_test,test_table, intHash64(id));

Test

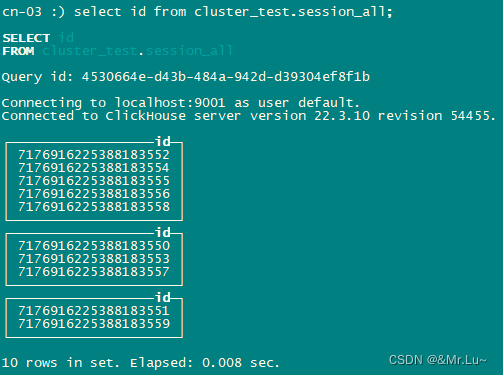

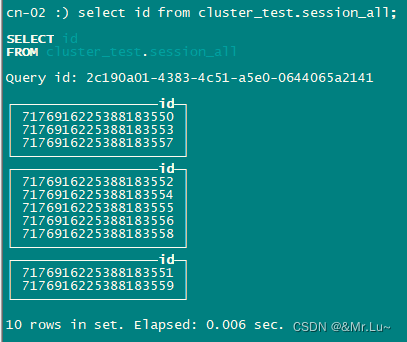

After inserting ten rows on any server, querying all data returns the following:

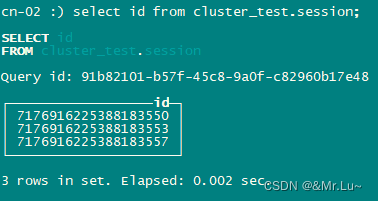

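cn-01:9000 and cn-02:9001, cn-02:9000 and cn-03:9001, and cn-03:9000 and cn-01:9001 are replicas of each other.

Taking cn-01:9000 and cn-02:9001 as an example, querying the local data returns the following:

After manually stopping the ClickHouse service on cn-01, querying all data returns the following:

In summary, both sharding and replication work as expected.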
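The replica pairs described above can also be checked from the command line by counting the rows of the local table on every instance: the two members of a pair should report the same number. This is a sketch under assumptions: `CLICKHOUSE_CLIENT` and `replica_row_counts` are names introduced here, the hosts must be reachable by name, and a `--password` option would be needed if one was set.

```shell
#!/bin/sh
# The client binary can be overridden through CLICKHOUSE_CLIENT (an assumption of this sketch).
CLICKHOUSE_CLIENT=${CLICKHOUSE_CLIENT:-clickhouse-client}

# Print the row count of the local table on each of the six instances,
# listed pair by pair (shard 1, shard 2, shard 3).
replica_row_counts() {
    for target in cn-01:9000 cn-02:9001 cn-02:9000 cn-03:9001 cn-03:9000 cn-01:9001; do
        host=${target%:*}    # part before the colon
        port=${target#*:}    # part after the colon
        printf '%s ' "$target"
        "$CLICKHOUSE_CLIENT" --host "$host" --port "$port" \
            --query "SELECT count() FROM cluster_test.test_table"
    done
}

# Possible usage (not run here):
#   replica_row_counts
```

The three per-shard counts should add up to the total returned by querying cluster_test.test_table_all.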
