1. Configuring SASL_SSL authentication in Kafka

SSL was configured earlier; SASL_SSL is configured here on top of that setup.

Create a sasl_ssl directory under config, copy server.properties into it as server_sasl_ssl.properties, and change the following settings in the copy:

listeners=PLAINTEXT://0.0.0.0:9092,SASL_SSL://:9093

advertised.listeners=PLAINTEXT://172.29.128.71:9092,SASL_SSL://172.29.128.71:9093

ssl.truststore.location=/usr/local/soft/kafka_2.12-3.2.3/config/ssl2/server.truststore.jks

ssl.truststore.password=123456

ssl.keystore.location=/usr/local/soft/kafka_2.12-3.2.3/config/ssl2/server.keystore.jks

ssl.keystore.password=123456

ssl.key.password=123456

security.inter.broker.protocol=SASL_SSL

sasl.mechanism.inter.broker.protocol=PLAIN

ssl.endpoint.identification.algorithm=

sasl.enabled.mechanisms=PLAIN

sasl.mechanism=PLAIN

listener.name.sasl_ssl.ssl.client.auth=required
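Before starting the broker, it can help to confirm that none of the settings above was dropped while editing. The following is a minimal sketch, not a Kafka tool: `missing_props` is a hypothetical helper name, and it only checks that a key is present and uncommented, not that its value is valid.

```shell
# Sketch: print every requested key that is absent from a .properties file.
# missing_props is a hypothetical helper, not part of Kafka.
missing_props() {
  local file="$1" key
  shift
  for key in "$@"; do
    # Split each line on '=', trim the key, and compare it literally.
    # Commented lines such as "#listeners=..." do not match.
    if ! awk -F '=' -v k="$key" '
        { name = $1; gsub(/^[ \t]+|[ \t]+$/, "", name) }
        name == k { found = 1 }
        END { exit !found }' "$file"; then
      printf '%s\n' "$key"
    fi
  done
}

# Example (path as used in this article); prints nothing when all keys exist:
# missing_props ./config/sasl_ssl/server_sasl_ssl.properties \
#   listeners security.inter.broker.protocol \
#   sasl.mechanism.inter.broker.protocol sasl.enabled.mechanisms
```

An empty result only means the keys exist; the broker log remains the place to look for wrong values.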

Add client.properties:

security.protocol=SASL_SSL

sasl.mechanism=PLAIN

ssl.truststore.location=/usr/local/soft/kafka_2.12-3.2.3/config/ssl2/server.truststore.jks

ssl.truststore.password=123456

ssl.keystore.location=/usr/local/soft/kafka_2.12-3.2.3/config/ssl2/server.keystore.jks

ssl.keystore.password=123456

ssl.key.password=123456

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="admin-secret";

ssl.endpoint.identification.algorithm=
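The client file above logs in as admin, while the broker-side JAAS file in this article also defines a user alice. A minimal sketch for deriving a per-user client file from the one above follows; `with_credentials` is a hypothetical helper name, and the output file name in the usage comment is an assumption.

```shell
# Sketch: print a copy of a client .properties file with different PLAIN credentials.
# with_credentials is a hypothetical helper, not part of Kafka.
with_credentials() {
  local file="$1" user="$2" pass="$3"
  # Keep every line except the existing sasl.jaas.config entry.
  # "|| true" because grep -v exits non-zero when nothing is left to print.
  grep -v '^sasl\.jaas\.config=' "$file" || true
  # Append the login module line with the new credentials.
  # Note: quotes or backslashes inside the password are not escaped here.
  printf 'sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="%s" password="%s";\n' "$user" "$pass"
}

# Example (client-alice.properties is an assumed file name):
# with_credentials ./config/sasl_ssl/client.properties alice alice-secret \
#   > ./config/sasl_ssl/client-alice.properties
```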

Add the password authentication file kafka_server_jaas.conf:

KafkaServer{

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="admin-secret"

user_admin="admin-secret"

user_alice="alice-secret";};
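In this file, username and password are the credentials the broker itself uses for inter-broker connections, and each user_NAME="PASSWORD" entry defines an account that clients may log in with. A minimal sketch that generates the same structure from a list of accounts; `render_jaas` is a hypothetical helper name, and passwords containing quotes or colons are not handled.

```shell
# Sketch: print a KafkaServer JAAS section for the PLAIN mechanism.
# Usage: render_jaas <broker_user> <broker_password> [user:password ...]
# render_jaas is a hypothetical helper, not part of Kafka.
render_jaas() {
  local broker_user="$1" broker_pass="$2" pair
  shift 2
  printf 'KafkaServer {\n'
  printf '  org.apache.kafka.common.security.plain.PlainLoginModule required\n'
  printf '  username="%s"\n' "$broker_user"
  printf '  password="%s"' "$broker_pass"
  for pair in "$@"; do
    # Text before the first ':' is the user name, the rest is the password.
    printf '\n  user_%s="%s"' "${pair%%:*}" "${pair#*:}"
  done
  # The single semicolon terminates the login module entry.
  printf ';\n};\n'
}

# Example reproducing the accounts used in this article:
# render_jaas admin admin-secret admin:admin-secret alice:alice-secret \
#   > ./config/sasl_ssl/kafka_server_jaas.conf
```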

Copy the scripts (in the bin directory):

cp kafka-topics.sh kafka-topics-sasl.sh

cp kafka-console-producer.sh kafka-console-producer-sasl.sh

cp kafka-server-start.sh kafka-server-start-sasl.sh

cp kafka-console-consumer.sh kafka-console-consumer-sasl.sh

In each of the copied -sasl scripts, add the following as the second-to-last line:

export KAFKA_OPTS="-Djava.security.auth.login.config=/usr/local/soft/kafka_2.12-3.2.3/config/sasl_ssl/kafka_server_jaas.conf"
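Editing four scripts by hand is easy to get wrong, so the insertion could be scripted. This is a minimal sketch, assuming the last line of each copied script is the one that launches Kafka, so a line placed just before it ends up second to last. `insert_before_last_line` is a hypothetical helper name.

```shell
# Sketch: insert one line before the last line of a file,
# so that it becomes the second-to-last line.
# insert_before_last_line is a hypothetical helper, not part of Kafka.
insert_before_last_line() {
  local file="$1" line="$2" tmp
  # Do nothing if the exact line is already present (safe to re-run).
  if grep -qF -- "$line" "$file"; then
    return 0
  fi
  tmp="$(mktemp)"
  # awk prints each line one step late, so the new line can be
  # emitted right before the final one. ENVIRON avoids escape processing.
  NEW_LINE="$line" awk '
    NR > 1 { print prev }
    { prev = $0 }
    END { if (NR > 0) { print ENVIRON["NEW_LINE"]; print prev } }
  ' "$file" > "$tmp"
  # Overwrite the content in place so the file keeps its executable bit.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example, run from the bin directory (script names as copied above):
# OPT='export KAFKA_OPTS="-Djava.security.auth.login.config=/usr/local/soft/kafka_2.12-3.2.3/config/sasl_ssl/kafka_server_jaas.conf"'
# for s in kafka-topics-sasl.sh kafka-console-producer-sasl.sh \
#          kafka-server-start-sasl.sh kafka-console-consumer-sasl.sh; do
#   insert_before_last_line "$s" "$OPT"
# done
```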

Start the services:

# Start ZooKeeper

./bin/zookeeper-server-start.sh ./config/zookeeper.properties

# Start Kafka

./bin/kafka-server-start-sasl.sh ./config/sasl_ssl/server_sasl_ssl.properties

Create the topic:

./bin/kafka-topics-sasl.sh --create --topic sasl_ssl --partitions 1 --replication-factor 1 --bootstrap-server localhost:9093 --command-config ./config/sasl

Start the producer and the consumer:

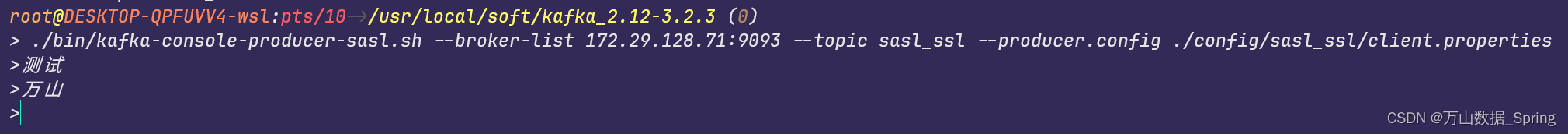

./bin/kafka-console-producer-sasl.sh --broker-list 172.29.128.71:9093 --topic sasl_ssl --producer.config ./config/sasl_ssl/client.properties

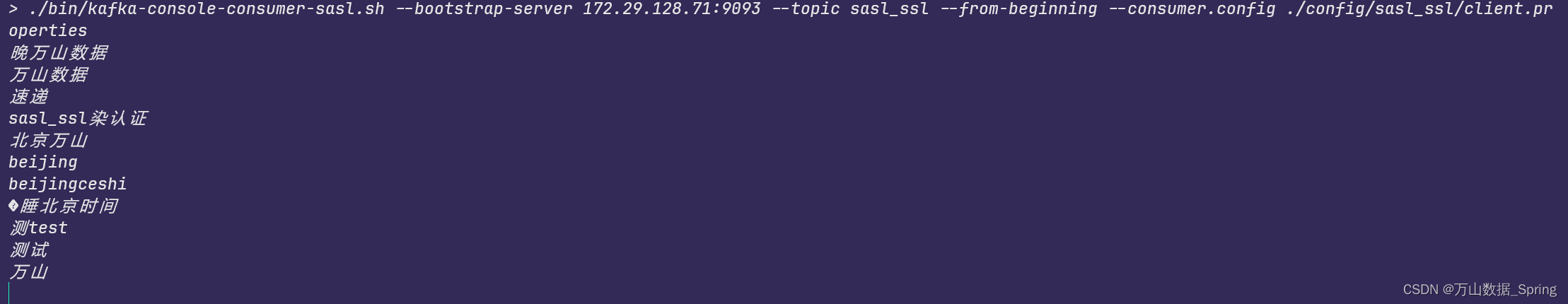

./bin/kafka-console-consumer-sasl.sh --bootstrap-server 172.29.128.71:9093 --topic sasl_ssl --from-beginning --consumer.config ./config/sasl_ssl/client.properties

2. Configuring ClickHouse to connect to Kafka

As before, edit metrika.xml under /etc/clickhouse-server/config.d.

Open it with vi metrika.xml and change its content to:

<yandex>

<kafka>

<max_poll_interval_ms>60000</max_poll_interval_ms>

<session_timeout_ms>60000</session_timeout_ms>

<heartbeat_interval_ms>10000</heartbeat_interval_ms>

<reconnect_backoff_ms>5000</reconnect_backoff_ms>

<reconnect_backoff_max_ms>60000</reconnect_backoff_max_ms>

<request_timeout_ms>20000</request_timeout_ms>

<retry_backoff_ms>500</retry_backoff_ms>

<message_max_bytes>20971520</message_max_bytes>

<debug>all</debug><!-- only to get the errors -->

<security_protocol>SASL_SSL</security_protocol>

<sasl_mechanism>PLAIN</sasl_mechanism>

<sasl_username>admin</sasl_username>

<sasl_password>admin-secret</sasl_password>

<ssl_ca_location>/etc/clickhouse-server/ssl2/server.crt</ssl_ca_location>

<ssl_certificate_location>/etc/clickhouse-server/ssl2/server.pem</ssl_certificate_location>

<ssl_key_location>/etc/clickhouse-server/ssl2/server.key</ssl_key_location>

<ssl_key_password>123456</ssl_key_password>

</kafka>

</yandex>
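A wrong or unreadable certificate path is a common reason for the Kafka engine failing to connect, so it can be worth checking the ssl_*_location entries before restarting. The following is a minimal sketch with hypothetical helper names (`ssl_paths`, `unreadable_ssl_paths`); it assumes each element sits on a single line, as in the file above, and it tests readability for the user running the script, which may differ from the account the ClickHouse server runs under.

```shell
# Sketch: list the ssl_*_location paths found in a ClickHouse Kafka config file.
# ssl_paths and unreadable_ssl_paths are hypothetical helpers.
ssl_paths() {
  # Print only the text between <ssl_..._location> and its closing tag.
  sed -n 's:.*<ssl_[a-z_]*_location>\(.*\)</ssl_[a-z_]*_location>.*:\1:p' "$1"
}

# Print every referenced path that the current user cannot read.
unreadable_ssl_paths() {
  ssl_paths "$1" | while IFS= read -r path; do
    [ -r "$path" ] || printf '%s\n' "$path"
  done
}

# Example (path as used in this article); no output means all files are readable:
# unreadable_ssl_paths /etc/clickhouse-server/config.d/metrika.xml
```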

Restart ClickHouse:

systemctl restart clickhouse-server.service

# Create the Kafka engine table

CREATE TABLE kafka_test.log_kafka

(

`CONTENT` String

)

ENGINE = Kafka

SETTINGS kafka_broker_list = '172.29.128.71:9093', kafka_topic_list = 'sasl_ssl', kafka_group_name = 'consumer-group1', kafka_format = 'TabSeparated', kafka_num_consumers = 1;

# Create the materialized view

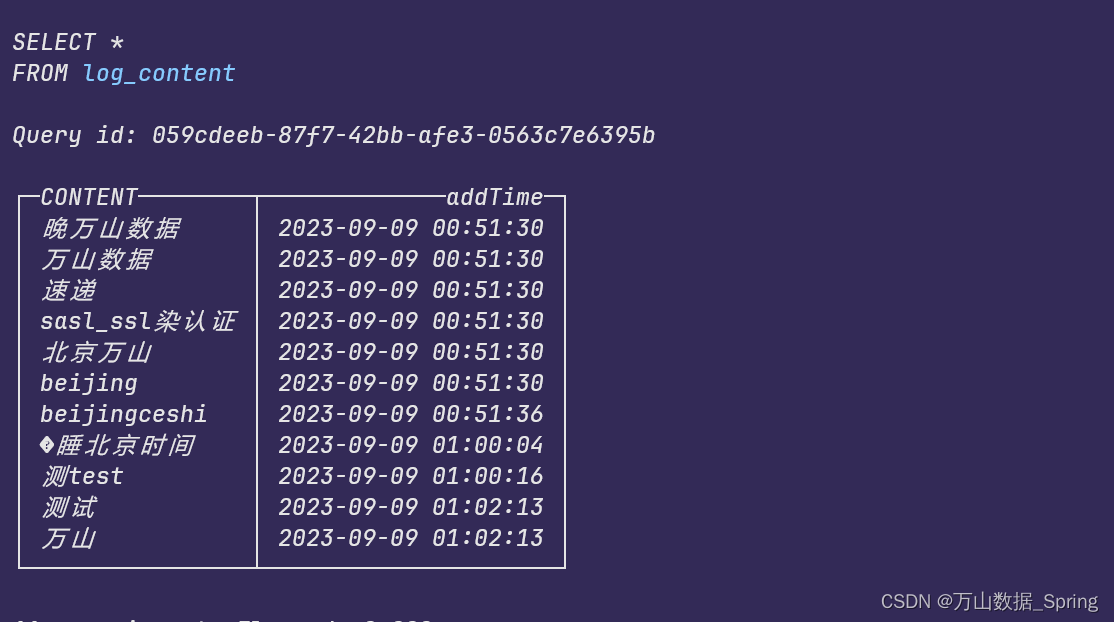

CREATE MATERIALIZED VIEW kafka_test.log_content

(

`CONTENT` Nullable(String),

`addTime` DateTime

)

ENGINE = MergeTree

ORDER BY addTime

SETTINGS index_granularity = 8192 AS

SELECT

CONTENT,

now() AS addTime

FROM kafka_test.log_kafka
