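1.1 Download and run the SDK

1.2 Configure the environment

Add the SDK to the project, either by dragging the files in or by adding it as a class library.

Put the face-resource folder one level above the root directory.

Put the activation file and all referenced DLLs in the root directory.

If that is too much trouble, you can drag every file in the SDK's root directory straight into the project's root directory.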
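A misplaced file usually only shows up later as a "DLL not found" error, so a startup check can save some debugging. The sketch below assumes "root directory" means the folder containing the built executable (`AppDomain.CurrentDomain.BaseDirectory`). It reports which expected files are missing there and whether face-resource exists one level up. `SdkLayoutCheck` is a hypothetical helper, and the caller supplies the file list because the exact set of DLLs depends on the SDK version.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical startup check for the SDK file layout described above.
public static class SdkLayoutCheck
{
    // Returns every expected path that does not exist.
    public static List<string> FindMissing(string baseDir, IEnumerable<string> requiredFiles)
    {
        var missing = new List<string>();
        foreach (string file in requiredFiles)
        {
            // DLLs and the activation file are expected next to the executable.
            string path = Path.Combine(baseDir, file);
            if (!File.Exists(path)) missing.Add(path);
        }
        // face-resource is expected one level above the executable's directory.
        string resources = Path.GetFullPath(Path.Combine(baseDir, "..", "face-resource"));
        if (!Directory.Exists(resources)) missing.Add(resources);
        return missing;
    }
}
```

Usage: `SdkLayoutCheck.FindMissing(AppDomain.CurrentDomain.BaseDirectory, new[] { "BaiduFaceApi.dll" })`, then show the returned paths to the user before calling `sdk_init`.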

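1.3 Usage

The Baidu offline face recognition SDK only runs in the Release configuration, so switch the project's build configuration to Release as well (otherwise you get errors saying that baiduapi……. and a series of other DLLs cannot be found).

The SDK references OpenCvSharp3-AnyCPU.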

```csharp
using System;
using System.Runtime.InteropServices;
using System.Windows.Forms;

static class Program
{
    [UnmanagedFunctionPointer(CallingConvention.Cdecl)]
    delegate void FaceCallback(IntPtr bytes, int size, String res);

    // SDK initialization
    [DllImport("BaiduFaceApi.dll", EntryPoint = "sdk_init", CharSet = CharSet.Ansi,
        CallingConvention = CallingConvention.Cdecl)]
    private static extern int sdk_init(bool id_card);

    // Whether the SDK is authorized
    [DllImport("BaiduFaceApi.dll", EntryPoint = "is_auth", CharSet = CharSet.Ansi,
        CallingConvention = CallingConvention.Cdecl)]
    private static extern bool is_auth();

    // Get the device fingerprint
    [DllImport("BaiduFaceApi.dll", EntryPoint = "get_device_id", CharSet = CharSet.Ansi,
        CallingConvention = CallingConvention.Cdecl)]
    private static extern IntPtr get_device_id();

    // Destroy the SDK
    [DllImport("BaiduFaceApi.dll", EntryPoint = "sdk_destroy", CharSet = CharSet.Ansi,
        CallingConvention = CallingConvention.Cdecl)]
    private static extern void sdk_destroy();

    // Test: print the device fingerprint (device_id)
    public static void test_get_device_id()
    {
        IntPtr ptr = get_device_id();
        string buf = Marshal.PtrToStringAnsi(ptr);
        Console.WriteLine("device id is:" + buf);
    }

    /// <summary>
    /// The main entry point for the application.
    /// </summary>
    [STAThread]
    static void Main()
    {
        bool id = false;
        int n = sdk_init(id);
        if (n != 0)
        {
            // A non-zero return value means initialization failed.
            Console.WriteLine("auth result is {0:D}", n);
            Console.ReadLine();
        }
        // Test whether the SDK is authorized
        bool authed = is_auth();
        Console.WriteLine("authed res is:" + authed);
        test_get_device_id();
        Application.EnableVisualStyles();
        Application.SetCompatibleTextRenderingDefault(false);
        Application.Run(new Form1());
        // Release SDK resources once the main form has closed.
        sdk_destroy();
    }
}
```

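Modify the Program class as shown above before use. Otherwise the face detection API cannot be used: it reports an illegal-parameter error and returns -1.

1.3.1 Face detection on an image file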

```csharp
// Face detection on an image file (member of the demo class)
[DllImport("BaiduFaceApi.dll", EntryPoint = "track", CharSet = CharSet.Ansi,
    CallingConvention = CallingConvention.Cdecl)]
private static extern IntPtr track(string file_name, int max_track_num);

public void test_track()
{
    // Absolute path of the image file
    string file_name = @"d:\kehu2.jpg";
    // Maximum number of faces to detect (multi-face detection); default 1, at most 10
    int max_track_num = 1;
    IntPtr ptr = track(file_name, max_track_num);
    string buf = Marshal.PtrToStringAnsi(ptr);
    Console.WriteLine("track res is:" + buf);
}
```

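This is the face detection API. To run it, put an image named "kehu2.jpg" in the root of drive D:. The method then checks whether the image contains a face.

1.3.2 Real-time face detection from a camera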
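`track` expects an absolute path, and a hard-coded `d:\kehu2.jpg` breaks on machines without that file. One option is to resolve the image against the application directory and check that it exists before the native call. This is a minimal sketch. `ImagePaths.ResolveImagePath` is a hypothetical helper, not part of the SDK.

```csharp
using System;
using System.IO;

public static class ImagePaths
{
    // Resolves imagePath against baseDir (absolute paths pass through unchanged)
    // and returns the full path, or null if the file does not exist, so the
    // caller can skip the native track() call.
    public static string ResolveImagePath(string baseDir, string imagePath)
    {
        string full = Path.GetFullPath(Path.Combine(baseDir, imagePath));
        return File.Exists(full) ? full : null;
    }
}
```

Usage: `string file = ImagePaths.ResolveImagePath(AppDomain.CurrentDomain.BaseDirectory, "kehu2.jpg");` and call `track(file, 1)` only when `file` is not null.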

```csharp
// Original SDK demo method (single camera, detection only).
// track_mat, TrackFaceInfo, bounding_box and draw_rotated_box are defined in the SDK demo.
public void usb_csharp_track_face(int dev)
{
    using (var window = new Window("face"))
    using (VideoCapture cap = VideoCapture.FromCamera(dev))
    {
        if (!cap.IsOpened())
        {
            Console.WriteLine("open camera error");
            return;
        }
        // Frame image buffer
        Mat image = new Mat();
        // When the movie playback reaches its end, Mat.data becomes NULL.
        while (true)
        {
            RotatedRect box;
            cap.Read(image); // same as cvQueryFrame
            if (image.Empty())
            {
                Console.WriteLine("mat is empty");
                continue;
            }
            int ilen = 2; // number of faces allocated
            TrackFaceInfo[] track_info = new TrackFaceInfo[ilen];
            int sizeTrack = Marshal.SizeOf(typeof(TrackFaceInfo));
            IntPtr ptT = Marshal.AllocHGlobal(sizeTrack * ilen);
            /* track_mat
             * In:  curSize = number of faces allocated by the caller (ptT must hold that many).
             * Out: curSize = number of faces detected.
             * Returns the smaller of the two, i.e. the number of faces actually returned.
             */
            int curSize = ilen;
            int faceSize = track_mat(ptT, image.CvPtr, ref curSize);
            for (int index = 0; index < faceSize; index++)
            {
                // Face `index` starts sizeTrack * index bytes into the buffer (32- and 64-bit safe).
                IntPtr ptr = IntPtr.Add(ptT, sizeTrack * index);
                track_info[index] = (TrackFaceInfo)Marshal.PtrToStructure(ptr, typeof(TrackFaceInfo));
                Console.WriteLine("in Liveness::usb_track face_id is {0}:", track_info[index].face_id);
                // Print the first 10 landmark values
                Console.WriteLine("in Liveness::usb_track landmarks is:");
                for (int k = 0; k < 10; k++)
                    Console.Write("{0},", track_info[index].landmarks[k]);
                Console.WriteLine();
                foreach (float angle in track_info[index].headPose)
                    Console.WriteLine("in Liveness::usb_track angle is:{0:f}", angle);
                Console.WriteLine("in Liveness::usb_track score is:{0:f}", track_info[index].score);
                // Rotation angle, face width, and face center (x, y)
                Console.WriteLine("in Liveness::usb_track mAngle is:{0:f}", track_info[index].box.mAngle);
                Console.WriteLine("in Liveness::usb_track mWidth is:{0:f}", track_info[index].box.mWidth);
                Console.WriteLine("in Liveness::usb_track mCenter_x is:{0:f}", track_info[index].box.mCenter_x);
                Console.WriteLine("in Liveness::usb_track mCenter_y is:{0:f}", track_info[index].box.mCenter_y);
                // Draw the face box
                box = bounding_box(track_info[index].landmarks, track_info[index].landmarks.Length);
                draw_rotated_box(ref image, ref box, new Scalar(0, 255, 0));

                // Computing attributes, quality or features on every frame can make the video
                // stutter; if you really need them, consider processing only every Nth frame.
                // Face attributes (from the tracked face info):
                //IntPtr ptrAttr = FaceAttr.face_attr_by_face(image.CvPtr, ref track_info[index]);
                //Console.WriteLine("attr res is:" + Marshal.PtrToStringAnsi(ptrAttr));
                // Face quality (from the tracked face info):
                //IntPtr ptrQua = FaceQuality.face_quality_by_face(image.CvPtr, ref track_info[index]);
                //Console.WriteLine("quality res is:" + Marshal.PtrToStringAnsi(ptrQua));
                // Face feature; a return value of 512 means the feature vector was obtained:
                //float[] feature = new float[512];
                //IntPtr ptrfea = IntPtr.Zero;
                //int count = FaceCompare.get_face_feature_by_face(image.CvPtr, ref track_info[index], ref ptrfea);
                //if (ptrfea == IntPtr.Zero) { Console.WriteLine("Get feature failed!"); continue; }
                //if (count == 512)
                //{
                //    Marshal.Copy(ptrfea, feature, 0, count); // copy all 512 floats at once
                //    for (int i = 0; i < count; i++) Console.WriteLine("feature {0} is: {1:e8}", i, feature[i]);
                //    Console.WriteLine("feature count is:{0}", count);
                //}
            }
            Marshal.FreeHGlobal(ptT);
            window.ShowImage(image);
            Console.WriteLine("mat not empty");
            // Press Esc to leave the loop so the Mat gets released.
            if (Cv2.WaitKey(1) == 27) break;
        }
        image.Release();
    }
}
```

The above is the SDK's original method.

It can only detect faces; it cannot perform liveness detection.
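Both camera methods read each detected face out of one unmanaged buffer. The buffer is sized for `ilen` structs, and face `index` starts at byte offset `index * Marshal.SizeOf(typeof(TrackFaceInfo))`. The self-contained sketch below shows that technique with a hypothetical, much smaller `DemoFace` struct, not the SDK's real `TrackFaceInfo` layout. It uses `IntPtr.Add`, so separate 32-bit and 64-bit branches are not needed. `WriteArray` only plays the role of the native side so the round trip can be tested.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical struct standing in for TrackFaceInfo; the real layout is defined by the SDK.
[StructLayout(LayoutKind.Sequential)]
public struct DemoFace
{
    public int face_id;
    public float score;
    [MarshalAs(UnmanagedType.ByValArray, SizeConst = 4)]
    public int[] landmarks;
}

public static class StructArrayMarshal
{
    // Copies `count` structs stored back to back in unmanaged memory.
    public static T[] ReadArray<T>(IntPtr buffer, int count) where T : struct
    {
        int size = Marshal.SizeOf(typeof(T));
        var result = new T[count];
        for (int i = 0; i < count; i++)
        {
            IntPtr item = IntPtr.Add(buffer, size * i); // same offset rule as ptT + sizeTrack * index
            result[i] = (T)Marshal.PtrToStructure(item, typeof(T));
        }
        return result;
    }

    // Writes structs back to back into unmanaged memory (stands in for the native code).
    public static void WriteArray<T>(IntPtr buffer, T[] items) where T : struct
    {
        int size = Marshal.SizeOf(typeof(T));
        for (int i = 0; i < items.Length; i++)
            Marshal.StructureToPtr(items[i], IntPtr.Add(buffer, size * i), false);
    }
}
```

With the SDK, `buffer` corresponds to `ptT`, `count` to the value returned by `track_mat`, and `T` to `TrackFaceInfo`.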

```csharp
static VideoCapture camera1 = null;
static VideoCapture camera2 = null;

// Runs on a worker thread. select_usb_device_id, track_mat, rgb_ir_liveness_check_faceinfo,
// TrackFaceInfo and FaceQuality come from the SDK demo; falnem, dtTo, textBox2,
// pictureBox1 and Class1 belong to the author's project.
public bool usb_csharp_track_face(int dev)
{
    string buf = "";
    long ir_time = 0;
    // Index 0 is the USB camera number assigned by the computer; in this demo 0 is the IR camera.
    // Numbering can differ between cameras and computers; it usually ranges from 0 to 10.
    int device = select_usb_device_id();
    camera1 = VideoCapture.FromCamera(device);
    if (!camera1.IsOpened())
    {
        Console.WriteLine("camera1 open error");
        return false;
    }
    camera1.Set(CaptureProperty.FrameWidth, 1440);
    camera1.Set(CaptureProperty.FrameHeight, 1080);
    camera2 = VideoCapture.FromCamera(device + 1);
    if (!camera2.IsOpened())
    {
        Console.WriteLine("camera2 open error");
        return false;
    }
    Mat frame1 = new Mat();
    Mat frame2 = new Mat();
    while (true)
    {
        camera1.Read(frame1);
        camera2.Read(frame2);
        if (frame1.Empty() || frame2.Empty())
        {
            Console.WriteLine("mat is empty");
            continue;
        }
        int ilen = 2; // number of faces allocated
        int sizeTrack = Marshal.SizeOf(typeof(TrackFaceInfo));
        IntPtr ptT = Marshal.AllocHGlobal(sizeTrack * ilen);
        // The frame with more rows is treated as RGB, the other as IR.
        Mat rgb_mat = frame2, ir_mat = frame1;
        if (frame1.Size(0) > frame2.Size(0))
        {
            rgb_mat = frame1;
            ir_mat = frame2;
        }
        float rgb_score = 0;
        float ir_score = 0;
        /* track_mat
         * In:  curSize = number of faces allocated by the caller (ptT must hold that many).
         * Out: curSize = number of faces detected.
         * Returns the smaller of the two, i.e. the number of faces actually returned.
         */
        int curSize = ilen;
        int faceSize = track_mat(ptT, rgb_mat.CvPtr, ref curSize);
        // RGB + IR liveness check; writes the scores into rgb_score and ir_score.
        rgb_ir_liveness_check_faceinfo(rgb_mat.CvPtr, ir_mat.CvPtr, ref rgb_score, ref ir_score, ref faceSize, ref ir_time, ptT);
        // Track again to refresh the face info in ptT.
        curSize = ilen;
        faceSize = track_mat(ptT, rgb_mat.CvPtr, ref curSize);
        for (int index = 0; index < faceSize; index++)
        {
            IntPtr ptr = IntPtr.Add(ptT, sizeTrack * index); // valid for 32- and 64-bit
            TrackFaceInfo info = (TrackFaceInfo)Marshal.PtrToStructure(ptr, typeof(TrackFaceInfo));
            // Face quality as JSON; buf keeps the result for the last face.
            IntPtr ptrQua = FaceQuality.face_quality_by_face(rgb_mat.CvPtr, ref info);
            buf = Marshal.PtrToStringAnsi(ptrQua);
        }
        Marshal.FreeHGlobal(ptT);
        // UI controls belong to the UI thread, so update them through Invoke.
        System.Drawing.Bitmap bitmap = rgb_mat.ToBitmap();
        string quality = buf;
        this.Invoke((Action)(() =>
        {
            textBox2.Text = quality;
            var old = pictureBox1.Image;
            pictureBox1.Image = bitmap;
            old?.Dispose();
        }));
        // Continue only when liveness produced both scores and at least one face was found.
        if (rgb_score != 0 && ir_score != 0 && faceSize >= 1)
        {
            JObject jObject = JObject.Parse(buf);
            JObject data = JObject.Parse(jObject["data"].ToString());
            JObject q = JObject.Parse(data["result"].ToString());
            // Quality thresholds; adjust them to your project's face standards.
            bool goodQuality = (double)q["bluriness"] <= 0.00001
                && (double)q["occl_r_eye"] < 0.1 && (double)q["occl_l_eye"] < 0.1
                && (double)q["occl_mouth"] <= 0 && (double)q["occl_nose"] < 0.11
                && (double)q["occl_r_contour"] < 0.1 && (double)q["occl_l_contour"] < 0.1
                && (double)q["occl_chin"] <= 0;
            if (goodQuality)
            {
                // Save the frame to img\yyyyMMdd.jpg under the application directory.
                string fileName = DateTime.Now.ToString("yyyyMMdd") + ".jpg";
                string picture = Path.Combine(AppDomain.CurrentDomain.BaseDirectory, "img", fileName);
                rgb_mat.SaveImage(picture);
                falnem = picture;
                // distinguish() identifies the saved face and returns its lock number.
                if (distinguish() != "0")
                {
                    new Class1(); // author's own class, not shown
                }
                Thread.Sleep(2000);
                dtTo = new TimeSpan(0, 0, 2);
                camera1.Release();
                camera2.Release();
                frame1.Release();
                frame2.Release();
                // Returning ends the worker thread; no Thread.Abort() is needed.
                return true;
            }
        }
        Cv2.WaitKey(1);
    }
}
```

The above is my modified version.

```csharp
// After recognition, looks up the matched user in the extension database and returns the lock number.
// falnem, dz, dbPath, ces and textBox1 belong to the author's form/project.
private string distinguish()
{
    dz = "1";
    string fileName = falnem; // image saved by usb_csharp_track_face
    FaceCompare faceCompare = new FaceCompare();
    // 1:N identification against the "group" user group
    JObject jObject = JObject.Parse(faceCompare.test_identify(fileName, "group", ""));
    JObject json = JObject.Parse(jObject["data"].ToString());
    // "result" is an array of candidates; use the first one.
    JObject json1 = (JObject)JArray.Parse(json["result"].ToString())[0];
    double score = (double)json1["score"];
    string user = json1["user_id"].ToString();
    // Called from the worker thread, so update the TextBox through Invoke.
    this.Invoke((Action)(() => textBox1.Text = "Score: " + score + "\r\nUser: " + user));
    string lockes;
    // Query the extension database
    using (var db = new ces(dbPath))
    {
        var testFaces = db.testFaces.Where(x => x.User == user).ToList();
        if (testFaces.Count == 0) return "0"; // no extension record for this user
        lockes = testFaces[0].Locks;
    }
    int lo = int.Parse(lockes);
    if (lo > 100)
    {
        // Strip the hundreds (d = how many), then dz = "0" + 2^(d-1)
        int d = 0;
        while (lo > 100) { lo -= 100; d++; }
        int z = 1;
        for (int i = 0; i < d - 1; i++) z *= 2;
        dz = "0" + z;
    }
    // Accept the match only above a score of 80; pad the lock number to two digits.
    if (score > 80)
        return lo < 10 ? "0" + lo : lo.ToString();
    return "0";
}

// 1:N identification; the SDK demo's defaults were "d:\\6.jpg", "test_group", "test_user".
public string test_identify(string str, string usr_grp, string usr_id)
{
    IntPtr ptr = identify(str, usr_grp, usr_id);
    return Marshal.PtrToStringAnsi(ptr);
}
```

After recognition, the distinguish() method looks up the matched face's data in the database.
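The lock-number arithmetic in distinguish() can be moved into a pure function, which makes it testable without a camera or database. The sketch below reproduces that arithmetic. Each hundred is stripped off and counted as `d`, and `dz` becomes "0" + 2^(d-1), or stays "1" for values up to 100. The lock number is padded to two digits. Unlike distinguish(), it pads unconditionally and leaves the score check to the caller. `LockCode` is a hypothetical name, and the source does not say what the hundreds and `dz` mean for the author's locker hardware.

```csharp
using System;

// Hypothetical helper reproducing the lock-number arithmetic from distinguish().
public static class LockCode
{
    // Returns (dz, lockNo) for a stored Locks value.
    public static (string Dz, string LockNo) Decode(int locks)
    {
        string dz = "1"; // value used when locks <= 100
        int lo = locks;
        if (lo > 100)
        {
            int d = 0;
            while (lo > 100) { lo -= 100; d++; } // count and strip the hundreds
            dz = "0" + (1 << (d - 1));           // 2^(d-1); d >= 1 here
        }
        string lockNo = lo < 10 ? "0" + lo : lo.ToString(); // pad to two digits
        return (dz, lockNo);
    }
}
```

For example, a stored value of 305 gives `dz` = "04" and lock "05", while 7 gives "1" and "07".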

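I merged real-time liveness detection and real-time face detection into one method, removed the OpenCV window and show the frames in a PictureBox control instead, and call the method on a separate thread (otherwise the infinite loop hangs the UI). Cameras are closed with the Release() method.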
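Because the detection loop never finishes on its own, it has to run off the UI thread and needs a clean way to stop. Stopping it with `Thread.Abort()` is unsafe on .NET Framework and unsupported on .NET Core and .NET 5+, where it throws `PlatformNotSupportedException`. Below is a sketch of the usual alternative: a background thread that checks a `CancellationToken` once per frame. `DetectionWorker` is hypothetical. Its `readFrame` and `onFrame` delegates stand in for the camera read and the per-frame processing. In a WinForms form, any control updates inside `onFrame` would still go through `Control.Invoke`.

```csharp
using System;
using System.Threading;

// Hypothetical background loop that can be stopped without Thread.Abort().
public sealed class DetectionWorker
{
    private readonly CancellationTokenSource _cts = new CancellationTokenSource();
    private Thread _thread;

    public bool IsRunning => _thread != null && _thread.IsAlive;

    // readFrame: returns the next frame (stand-in for VideoCapture.Read).
    // onFrame: processes a frame; returns true when work is done (e.g. a face was recognized).
    public void Start<TFrame>(Func<TFrame> readFrame, Func<TFrame, bool> onFrame)
    {
        CancellationToken token = _cts.Token;
        _thread = new Thread(() =>
        {
            while (!token.IsCancellationRequested)
            {
                TFrame frame = readFrame();
                if (onFrame(frame)) return; // finishing normally ends the thread
            }
        });
        _thread.IsBackground = true; // does not keep the process alive after the form closes
        _thread.Start();
    }

    // Waits for the loop to finish on its own.
    public void Wait() => _thread?.Join();

    // Requests a stop (e.g. from FormClosing) and waits for the loop to exit.
    public void Stop()
    {
        _cts.Cancel();
        _thread?.Join();
    }
}
```

Calling `Stop()` from the form's FormClosing handler lets the loop finish its current frame, after which the cameras can be released safely.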

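All results are returned as JSON.

See the Baidu documentation for the specific fields.

Adjust the conditions so they match your project's face quality standards.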
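The quality check boils down to reading a few fields from the quality JSON and comparing each to a threshold. The sketch below uses `System.Text.Json` rather than Json.NET's `JObject`. It assumes the `data` → `result` nesting the author's code parses, and it accepts `result` either as an object or as a JSON-encoded string. The field names and thresholds are the ones from the code above, kept in one table so they are easy to tune. `FaceQualityCheck` is a hypothetical name.

```csharp
using System;
using System.Text.Json;

// Hypothetical quality gate; assumes the quality JSON nests fields under data.result.
public static class FaceQualityCheck
{
    // (field, upper bound, whether the bound itself is allowed), as in the author's conditions
    private static readonly (string Field, double Max, bool Inclusive)[] Limits =
    {
        ("bluriness", 0.00001, true),
        ("occl_r_eye", 0.1, false),
        ("occl_l_eye", 0.1, false),
        ("occl_mouth", 0.0, true),
        ("occl_nose", 0.11, false),
        ("occl_r_contour", 0.1, false),
        ("occl_l_contour", 0.1, false),
        ("occl_chin", 0.0, true),
    };

    public static bool Passes(string qualityJson)
    {
        using JsonDocument doc = JsonDocument.Parse(qualityJson);
        JsonElement result = doc.RootElement.GetProperty("data").GetProperty("result");
        // If "result" is a string, parse its contents as JSON.
        using JsonDocument inner = result.ValueKind == JsonValueKind.String
            ? JsonDocument.Parse(result.GetString())
            : null;
        JsonElement q = inner != null ? inner.RootElement : result;
        foreach (var (field, max, inclusive) in Limits)
        {
            double value = q.GetProperty(field).GetDouble();
            bool ok = inclusive ? value <= max : value < max;
            if (!ok) return false;
        }
        return true;
    }
}
```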

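The recognition flow is:

start the thread -> open the cameras -> detect a face -> save the face image -> pass the image to the face comparison method.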
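The flow above can be written as a single loop, with each step injected as a delegate. That keeps the control flow testable apart from the SDK. This is a sketch: `RecognitionPipeline.Run` and its delegate parameters are hypothetical stand-ins for the OpenCvSharp and SDK calls shown earlier, and `TFrame` would be `Mat` in the real code. A frame limit is added so the sketch always terminates, whereas the original loop runs until a face is accepted.

```csharp
using System;

public static class RecognitionPipeline
{
    // read frame -> usable live face? -> save image -> compare.
    // Returns the comparison result, or null if maxFrames frames pass without a usable face.
    public static string Run<TFrame>(
        Func<TFrame> readFrame,
        Func<TFrame, bool> hasUsableFace,
        Func<TFrame, string> saveImage,
        Func<string, string> compare,
        int maxFrames)
    {
        for (int i = 0; i < maxFrames; i++)
        {
            TFrame frame = readFrame();
            if (!hasUsableFace(frame)) continue; // keep reading until a face qualifies
            string imagePath = saveImage(frame); // e.g. img\yyyyMMdd.jpg
            return compare(imagePath);           // e.g. 1:N identify on the saved file
        }
        return null;
    }
}
```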

1.3.3 Face registration

```csharp
// Fragment from the registration loop. falnem is the saved image path; User, group and
// info are TextBoxes on the form; faceManager wraps the SDK registration call.
string user_id = User.Text;
string group_id = "group";
string file_name = falnem;
string lockse = group.Text; // lock number, read from the TextBox named "group"
string user_info = info.Text;
string zc = faceManager.test_user_add(user_id, group_id, file_name, user_info);
JObject jObjectzc = JObject.Parse(zc);
if ((string)jObjectzc["msg"] == "success")
{
    // Also store the extra fields in the extension database
    List<TestFaceDB> testFaceDBs = new List<TestFaceDB>()
    {
        new TestFaceDB() { User = user_id, Locks = lockse, Phone = user_info }
    };
    textBox1.Text = dbPath;
    using (var db = new ces(dbPath))
    {
        int count = db.InsertAll(testFaceDBs);
    }
    msg msg = new msg();
    msg.ShowDialog();
    Thread.Sleep(5000);
    dtTo = new TimeSpan(0, 0, 2);
    camera1.Release();
    camera2.Release();
    return true;
}
Thread.Sleep(3000);
```

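This works the same way as above: first check whether the face in front of the camera is a live face and save it to an image file, then call face registration with the saved file.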
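Registration should write the extension record only when the SDK reports success. The sketch below implements that check with `System.Text.Json`. It assumes the response carries a top-level `msg` field, as the fragment above reads it. `Registration.IsSuccess` and `TryRegister` are hypothetical helpers. Their delegates stand in for `test_user_add` and the SQLite insert.

```csharp
using System;
using System.Text.Json;

public static class Registration
{
    // True when the SDK response has "msg": "success".
    public static bool IsSuccess(string responseJson)
    {
        using JsonDocument doc = JsonDocument.Parse(responseJson);
        return doc.RootElement.TryGetProperty("msg", out JsonElement msg)
            && msg.ValueKind == JsonValueKind.String
            && msg.GetString() == "success";
    }

    // Calls the SDK registration, then stores the extension record only on success.
    public static bool TryRegister(Func<string> userAdd, Action saveExtensionRecord)
    {
        if (!IsSuccess(userAdd())) return false;
        saveExtensionRecord();
        return true;
    }
}
```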

```csharp
// Face registration. The parameters replace the SDK demo's hard-coded values
// ("test_user", "test_group", "d:\\2.jpg", "user_info").
public string test_user_add(string uid, string gid, string fil, string uin)
{
    IntPtr ptr = user_add(uid, gid, fil, uin);
    return Marshal.PtrToStringAnsi(ptr);
}
```

I modified this method to take parameters.

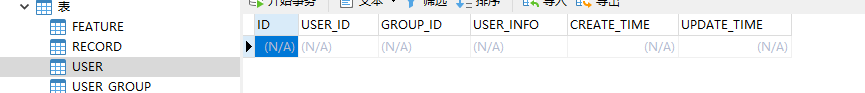

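The SQLite database bundled with the SDK has relatively few fields, and I did not spend time working out how to modify the database it creates. So I created a new database linked to the SDK's database through one shared field, and use it as an extension database.

During registration, also save a record to the new database; which fields it holds depends on your needs.

When looking up data, take the shared field from the SDK's result, then query the new database for the corresponding other fields.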
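The lookup described here is a join on the shared field, the user ID. The sketch below uses in-memory collections in place of the two SQLite databases. `ExtRecord` is a hypothetical type mirroring the `User`/`Locks`/`Phone` columns of the author's `TestFaceDB` table, and `ExtensionLookup` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical row type mirroring the User/Locks/Phone columns of TestFaceDB.
public sealed class ExtRecord
{
    public string User { get; set; }
    public string Locks { get; set; }
    public string Phone { get; set; }
}

public static class ExtensionLookup
{
    // The SDK's identify result supplies user_id (the shared field);
    // return the extension row with the same User value, or null.
    public static ExtRecord Find(string sdkUserId, IEnumerable<ExtRecord> extensionTable)
    {
        return extensionTable.FirstOrDefault(r => r.User == sdkUserId);
    }

    // For many lookups, index the table once (assumes User is unique; duplicates throw).
    public static Dictionary<string, ExtRecord> Index(IEnumerable<ExtRecord> extensionTable)
    {
        return extensionTable.ToDictionary(r => r.User);
    }
}
```

Against the real SQLite file, the same lookup is the `db.testFaces.Where(x => x.User == user)` query in distinguish().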

1.4 Packaging

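The simplest way to package: put the contents of the root directory into one folder, add it to the Application Folder, then put face-resource into the same directory. Do not install to the system drive, otherwise the API calls fail; I don't know why.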
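Since installing to the system drive breaks the API calls for an unknown reason, the application could at least warn at startup. The sketch below compares the drive letter of the install directory with that of the Windows system folder. It handles only drive-letter paths and is a guard, not a fix. `InstallLocation` is a hypothetical helper.

```csharp
using System;

public static class InstallLocation
{
    // Upper-case drive letter of a Windows path such as "C:\...", or null for other forms.
    public static char? DriveLetter(string path)
    {
        if (string.IsNullOrEmpty(path) || path.Length < 2 || path[1] != ':') return null;
        return char.ToUpperInvariant(path[0]);
    }

    // True when both paths are on the same drive letter.
    public static bool IsOnSystemDrive(string installDir, string systemDir)
    {
        char? install = DriveLetter(installDir);
        return install != null && install == DriveLetter(systemDir);
    }
}
```

Usage: `InstallLocation.IsOnSystemDrive(AppDomain.CurrentDomain.BaseDirectory, Environment.GetFolderPath(Environment.SpecialFolder.System))`, and show a warning if it returns true.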

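Each computer you install on must be activated with a Baidu offline recognition SDK serial number, otherwise the SDK cannot be used, because it checks activation against the computer's device fingerprint. If LicenseTool.exe fails to open and reports missing system DLL components, download them online; this may happen on pirated Windows installations where not all system components are installed.

You can also download the activation archive from Baidu.

When adding project outputs to the setup project, add the SDK as Localized resources and the project as Primary output, and use the Release configuration.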
