Java API

4.1 Dependencies

```xml
<!-- https://mvnrepository.com/artifact/org.apache.hbase/hbase-client -->
<dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-client</artifactId>
    <version>1.2.4</version>
</dependency>
```

4.2 Core operations

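Getting the client (`Admin`) and connection objects. The `@Before` method opens a `Connection` through ZooKeeper (`HadoopNode00:2181`) and an `Admin` before every test. The `@After` method closes both.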

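```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.junit.After;
import org.junit.Before;

// Fields of the JUnit 4 test class shared by all examples below
private Configuration configuration;
private Connection conn;   // heavyweight and thread-safe: create once, reuse
private Admin admin;       // used for DDL: namespaces and tables

@Before
public void getClient() throws Exception {
    // HBaseConfiguration.create() also loads hbase-default.xml / hbase-site.xml
    configuration = HBaseConfiguration.create();
    configuration.set("hbase.zookeeper.quorum", "HadoopNode00");
    configuration.set("hbase.zookeeper.property.clientPort", "2181");
    conn = ConnectionFactory.createConnection(configuration);
    admin = conn.getAdmin();
}

@After
public void close() throws Exception {
    admin.close();
    conn.close();
}
```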

4.3 Common namespace operations

```java
import org.apache.hadoop.hbase.NamespaceDescriptor;
import org.junit.Test;

@Test
public void createNameSpace() throws Exception {
    // Namespace "baizhi123" with one custom property admin=gjf
    NamespaceDescriptor namespaceDescriptor = NamespaceDescriptor.create("baizhi123")
            .addConfiguration("admin", "gjf")
            .build();
    admin.createNamespace(namespaceDescriptor);
}

@Test
public void listNameSpace() throws Exception {
    // Also lists the built-in "default" and "hbase" namespaces
    for (NamespaceDescriptor descriptor : admin.listNamespaceDescriptors()) {
        System.out.println(descriptor.getName());
    }
}

@Test
public void modifyNameSpace() throws Exception {
    // The descriptor passed in replaces the stored one, so the properties end up as {aa=bb}
    NamespaceDescriptor namespaceDescriptor = NamespaceDescriptor.create("baizhi123")
            .addConfiguration("aa", "bb")
            .removeConfiguration("admin")
            .build();
    admin.modifyNamespace(namespaceDescriptor);
}

@Test
public void deleteNameSpace() throws Exception {
    // Only an empty namespace can be deleted: drop its tables first
    admin.deleteNamespace("baizhi123");
}
```

4.4 Common table operations

```java
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;

@Test
public void createTables() throws Exception {
    // Table name in "namespace:table" form; namespace "baizhi" must already exist
    TableName tableName = TableName.valueOf("baizhi:t_user1");

    // Describes the table
    HTableDescriptor hTableDescriptor = new HTableDescriptor(tableName);

    // Describes column families cf1 and cf2; each cell keeps up to 3 versions
    HColumnDescriptor cf1 = new HColumnDescriptor("cf1");
    cf1.setMaxVersions(3);
    HColumnDescriptor cf2 = new HColumnDescriptor("cf2");
    cf2.setMaxVersions(3);

    // Add the column family descriptors to the table descriptor
    hTableDescriptor.addFamily(cf1);
    hTableDescriptor.addFamily(cf2);

    // Create the table
    admin.createTable(hTableDescriptor);
}

@Test
public void dropTable() throws Exception {
    TableName tableName = TableName.valueOf("baizhi:t_user1");
    // A table must be disabled before it can be deleted
    admin.disableTable(tableName);
    admin.deleteTable(tableName);
}
```
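`TableName.valueOf` expects a `namespace:qualifier` string. A name without a colon goes to the `default` namespace, so `baizhi:t_user1` only works once the `baizhi` namespace exists. The plain-Java sketch below illustrates only that naming rule. `TableNameSketch.splitTableName` is a hypothetical helper, not HBase code, and it skips the character checks that the real `TableName` performs.

```java
import java.util.Arrays;

class TableNameSketch {
    // Hypothetical helper mirroring the "namespace:qualifier" convention.
    // Returns {namespace, qualifier}; names without ':' fall into "default".
    static String[] splitTableName(String name) {
        int idx = name.indexOf(':');
        if (idx < 0) {
            return new String[] {"default", name};
        }
        return new String[] {name.substring(0, idx), name.substring(idx + 1)};
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(splitTableName("baizhi:t_user1"))); // [baizhi, t_user1]
        System.out.println(Arrays.toString(splitTableName("t_user")));         // [default, t_user]
    }
}
```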

4.5 CRUD

4.5.1 put

Inserting or updating a single record (a `Put` on an existing row key writes new cell versions)

```java
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Table;

@Test
public void testPutOne() throws Exception {
    TableName tableName = TableName.valueOf("baizhi:t_user");
    // Get a Table handle from the shared connection (lightweight, close after use)
    Table table = conn.getTable(tableName);

    // Row key "1"; every column value is stored as raw bytes
    Put put1 = new Put("1".getBytes());
    put1.addColumn("cf1".getBytes(), "name".getBytes(), "zhangsan".getBytes());
    put1.addColumn("cf1".getBytes(), "age".getBytes(), "18".getBytes());
    put1.addColumn("cf1".getBytes(), "sex".getBytes(), "false".getBytes());

    table.put(put1);
    table.close();
}
```

Inserting multiple records

```java
import java.util.ArrayList;
import org.apache.hadoop.hbase.client.BufferedMutator;

@Test
public void testPutList() throws Exception {
    TableName tableName = TableName.valueOf("baizhi:t_user");
    // Buffers mutations on the client and sends them to the servers in batches
    BufferedMutator bufferedMutator = conn.getBufferedMutator(tableName);

    // Rows "4", "5" and "6" with the same sample columns
    ArrayList<Put> puts = new ArrayList<Put>();
    for (String rowKey : new String[] {"4", "5", "6"}) {
        Put put = new Put(rowKey.getBytes());
        put.addColumn("cf1".getBytes(), "name".getBytes(), "zhangsan".getBytes());
        put.addColumn("cf1".getBytes(), "age".getBytes(), "18".getBytes());
        put.addColumn("cf1".getBytes(), "sex".getBytes(), "false".getBytes());
        puts.add(put);
    }

    bufferedMutator.mutate(puts);
    // close() flushes any mutations still in the buffer
    bufferedMutator.close();
}
```
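`BufferedMutator` collects mutations on the client and sends them in batches, either when its write buffer fills up or when `flush()` or `close()` is called. That is why the `close()` call in `testPutList` matters. The sketch below models only this buffering idea in plain Java. `WriteBufferSketch`, its byte threshold and the `sent` list (standing in for batch RPCs) are hypothetical, not HBase classes. The real buffer size comes from `hbase.client.write.buffer`, and the real flush logic differs in detail.

```java
import java.util.ArrayList;
import java.util.List;

class WriteBufferSketch {
    private final long maxBufferBytes;                  // hypothetical flush threshold
    private final List<String> buffer = new ArrayList<>();
    private long bufferedBytes = 0;
    final List<List<String>> sent = new ArrayList<>();  // each entry = one simulated batch RPC

    WriteBufferSketch(long maxBufferBytes) {
        this.maxBufferBytes = maxBufferBytes;
    }

    // Buffer one mutation; send the whole batch once the threshold is reached.
    void mutate(String rowKey, long sizeBytes) {
        buffer.add(rowKey);
        bufferedBytes += sizeBytes;
        if (bufferedBytes >= maxBufferBytes) {
            flush();
        }
    }

    // Send whatever is buffered as a single batch.
    void flush() {
        if (!buffer.isEmpty()) {
            sent.add(new ArrayList<>(buffer));
            buffer.clear();
            bufferedBytes = 0;
        }
    }

    // Like BufferedMutator.close(): pending mutations are flushed, not dropped.
    void close() {
        flush();
    }

    public static void main(String[] args) {
        WriteBufferSketch m = new WriteBufferSketch(100);
        m.mutate("4", 40);
        m.mutate("5", 40);
        m.mutate("6", 40); // 120 >= 100: first batch is sent
        m.mutate("7", 40);
        m.close();         // the remaining "7" is sent on close
        System.out.println(m.sent); // [[4, 5, 6], [7]]
    }
}
```

Without the final `close()` in this model, row `7` would never leave the buffer. The same applies to forgetting `bufferedMutator.close()` in the test.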

4.5.2 delete

Deleting a single row

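```java
import org.apache.hadoop.hbase.client.Delete;

@Test
public void testDelete() throws Exception {
    TableName tableName = TableName.valueOf("baizhi:t_user");
    Table table = conn.getTable(tableName);
    // Without addColumn/addFamily, the whole row "6" is deleted
    Delete delete = new Delete("6".getBytes());
    table.delete(delete);
    table.close();
}
```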

Batch delete

```java
@Test
public void testDeleteList() throws Exception {
    TableName tableName = TableName.valueOf("baizhi:t_user");
    BufferedMutator bufferedMutator = conn.getBufferedMutator(tableName);

    // Delete rows "1", "2" and "3"
    ArrayList<Delete> deletes = new ArrayList<Delete>();
    deletes.add(new Delete("1".getBytes()));
    deletes.add(new Delete("2".getBytes()));
    deletes.add(new Delete("3".getBytes()));

    bufferedMutator.mutate(deletes);
    bufferedMutator.close(); // flushes the pending deletes
}
```

4.5.3 get

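```java
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.util.Bytes;

@Test
public void testGet() throws Exception {
    TableName tableName = TableName.valueOf("baizhi:t_user");
    Table table = conn.getTable(tableName);

    // Read the latest version of each column in row "4"
    Get get = new Get("4".getBytes());
    Result result = table.get(get);

    byte[] name = result.getValue("cf1".getBytes(), "name".getBytes());
    byte[] age = result.getValue("cf1".getBytes(), "age".getBytes());
    byte[] sex = result.getValue("cf1".getBytes(), "sex".getBytes());

    // Bytes.toString returns null (instead of throwing) for a missing column
    System.out.println(Bytes.toString(name) + "-" + Bytes.toString(age) + "-" + Bytes.toString(sex));
    table.close();
}
```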

```java
import java.util.List;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;

@Test
public void testGet02() throws Exception {
    /*
     * Shell equivalent (t refers to table baizhi:t_user):
     * hbase(main):012:0> t.get '4',{COLUMN=>'cf1:name',VERSIONS=>3}
     * COLUMN       CELL
     * cf1:name     timestamp=1569284691440, value=zs
     * cf1:name     timestamp=1569283965094, value=zhangsan
     */
    TableName tableName = TableName.valueOf("baizhi:t_user");
    Table table = conn.getTable(tableName);

    // Ask for up to 2 versions of cf1:name only
    Get get = new Get("4".getBytes());
    get.setMaxVersions(2);
    get.addColumn("cf1".getBytes(), "name".getBytes());
    Result result = table.get(get);

    // One Cell per version, newest first
    List<Cell> cellList = result.getColumnCells("cf1".getBytes(), "name".getBytes());
    for (Cell cell : cellList) {
        // row key -- column family -- qualifier -- value -- timestamp
        byte[] rowkey = CellUtil.cloneRow(cell);
        byte[] cf = CellUtil.cloneFamily(cell);
        byte[] qualifier = CellUtil.cloneQualifier(cell);
        byte[] value = CellUtil.cloneValue(cell);
        long timestamp = cell.getTimestamp();
        System.out.println(Bytes.toString(rowkey) + "--" + Bytes.toString(cf) + "--"
                + Bytes.toString(qualifier) + "--" + Bytes.toString(value) + "--" + timestamp);
    }
    table.close();
}
```
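Each cell keeps at most the column family's maximum number of versions (set with `setMaxVersions(3)` in 4.4). `Get.setMaxVersions(2)` then returns at most the two newest, newest first, as in the shell output. The plain-Java model below illustrates only those two rules. `VersionedCell` is hypothetical. In this model, excess versions are dropped on write. Real HBase instead hides them at read time and removes them physically during major compaction.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class VersionedCell {
    private final int familyMaxVersions;
    // timestamp -> value, ordered newest first (HBase also sorts versions by descending timestamp)
    private final TreeMap<Long, String> versions = new TreeMap<>(Comparator.reverseOrder());

    VersionedCell(int familyMaxVersions) {
        this.familyMaxVersions = familyMaxVersions;
    }

    // Model only: keep at most familyMaxVersions entries, discarding the oldest.
    void put(long timestamp, String value) {
        versions.put(timestamp, value);
        while (versions.size() > familyMaxVersions) {
            versions.pollLastEntry(); // last entry = smallest timestamp = oldest
        }
    }

    // Like Get.setMaxVersions(n): up to n newest versions as "timestamp=value", newest first.
    List<String> get(int maxVersions) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<Long, String> e : versions.entrySet()) {
            if (out.size() == maxVersions) {
                break;
            }
            out.add(e.getKey() + "=" + e.getValue());
        }
        return out;
    }

    public static void main(String[] args) {
        VersionedCell name = new VersionedCell(3);
        name.put(1569283965094L, "zhangsan");
        name.put(1569284691440L, "zs");
        System.out.println(name.get(2)); // [1569284691440=zs, 1569283965094=zhangsan]
        System.out.println(name.get(1)); // [1569284691440=zs]
    }
}
```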

4.5.4 scan

```java
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.filter.FilterList;
import org.apache.hadoop.hbase.filter.PrefixFilter;

@Test
public void testScan() throws Exception {
    TableName tableName = TableName.valueOf("baizhi:t_user");
    Table table = conn.getTable(tableName);

    // Keep rows whose key starts with "4" OR "5" (MUST_PASS_ONE = logical OR)
    Scan scan = new Scan();
    PrefixFilter prefixFilter1 = new PrefixFilter("4".getBytes());
    PrefixFilter prefixFilter2 = new PrefixFilter("5".getBytes());
    FilterList list = new FilterList(FilterList.Operator.MUST_PASS_ONE, prefixFilter1, prefixFilter2);
    scan.setFilter(list);

    ResultScanner results = table.getScanner(scan);
    for (Result result : results) {
        byte[] row = result.getRow();
        byte[] name = result.getValue("cf1".getBytes(), "name".getBytes());
        byte[] age = result.getValue("cf1".getBytes(), "age".getBytes());
        byte[] sex = result.getValue("cf1".getBytes(), "sex".getBytes());
        System.out.println(Bytes.toString(row) + "--" + Bytes.toString(name) + "-"
                + Bytes.toString(age) + "-" + Bytes.toString(sex));
    }
    // Release the server-side scanner, then the table
    results.close();
    table.close();
}
```
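`FilterList.Operator.MUST_PASS_ONE` combines the two `PrefixFilter`s with a logical OR, so the scan returns rows whose key starts with `4` or `5`. The plain-Java sketch below imitates that selection over a sorted map of row keys (HBase also keeps rows sorted by key in byte order). `PrefixScanSketch` is hypothetical. In real HBase the filters run on the region servers, but the scan above still starts from the table's first row. For a single prefix, `Scan.setRowPrefixFilter` instead sets start and stop rows, so non-matching ranges are skipped.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeMap;

class PrefixScanSketch {
    // Returns row keys, in sorted order, that match at least one prefix (MUST_PASS_ONE = OR).
    static List<String> scanAnyPrefix(TreeMap<String, String> rows, List<String> prefixes) {
        List<String> hits = new ArrayList<>();
        for (String rowKey : rows.keySet()) {   // iterates in key order, like a Scan
            for (String p : prefixes) {
                if (rowKey.startsWith(p)) {
                    hits.add(rowKey);
                    break;                      // one passing filter is enough
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        TreeMap<String, String> rows = new TreeMap<>();
        for (String k : Arrays.asList("1", "10", "4", "41", "5", "6")) {
            rows.put(k, "zhangsan");
        }
        System.out.println(scanAnyPrefix(rows, Arrays.asList("4", "5"))); // [4, 41, 5]
    }
}
```

The key order also matters: in lexicographic order `"10"` sorts before `"4"`, which is something to keep in mind when designing numeric row keys.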

MapReduce on HBase

5.1 Dependencies

```xml
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
    <version>2.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>2.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-mapreduce-client-core</artifactId>
    <version>2.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-mapreduce-client-jobclient</artifactId>
    <version>2.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-client</artifactId>
    <version>1.2.4</version>
</dependency>
<dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-server</artifactId>
    <version>1.2.4</version>
</dependency>
```

5.2 Sample problem

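HBase already holds the input data in table baizhi:t_user1. Each row key has the form company:id, where the company is, for example, baidu, sina or ali. Column family cf1 holds the columns salary, name and age. The task is to write a MapReduce program that computes each company's average salary and writes it to table baizhi:t_result (column cf1:avgSalary), and then run it. The output table, with family cf1, must be created before the job runs.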
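Before running the job on the cluster, the same computation can be checked in plain Java. It groups rows by the company prefix of the row key (the part before `:`, as `UserMapper` does) and averages the salaries (as `UserReducer` does). The row keys and salaries below are made-up sample values, not data from the original setup, and `AvgSalarySketch` is a hypothetical helper.

```java
import java.util.Map;
import java.util.TreeMap;

class AvgSalarySketch {
    // rowKey ("company:id") -> salary; returns company -> average salary
    static Map<String, Double> averageByCompany(Map<String, Double> salaries) {
        Map<String, double[]> acc = new TreeMap<>(); // company -> {sum, count}
        for (Map.Entry<String, Double> e : salaries.entrySet()) {
            String company = e.getKey().split(":")[0];          // "map" step: key by company
            double[] sc = acc.computeIfAbsent(company, k -> new double[2]);
            sc[0] += e.getValue();
            sc[1] += 1;
        }
        Map<String, Double> result = new TreeMap<>();
        for (Map.Entry<String, double[]> e : acc.entrySet()) {   // "reduce" step: sum / count
            result.put(e.getKey(), e.getValue()[0] / e.getValue()[1]);
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Double> salaries = new TreeMap<>();
        salaries.put("baidu:001", 8000.0);   // made-up sample values
        salaries.put("baidu:002", 10000.0);
        salaries.put("sina:001", 6000.0);
        salaries.put("ali:001", 9000.0);
        salaries.put("ali:002", 12000.0);
        System.out.println(averageByCompany(salaries)); // {ali=10500.0, baidu=9000.0, sina=6000.0}
    }
}
```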

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.mapreduce.TableInputFormat;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.mapreduce.TableOutputFormat;
import org.apache.hadoop.io.DoubleWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.MRJobConfig;

public class JobRunner {

    public static void main(String[] args) throws Exception {
        // Submit to the cluster as user root
        System.setProperty("HADOOP_USER_NAME", "root");

        Configuration configuration = HBaseConfiguration.create();
        configuration.set("hbase.zookeeper.quorum", "HadoopNode00");
        configuration.set("hbase.zookeeper.property.clientPort", "2181");
        // Cluster config files, loaded from conf2/ on the classpath
        configuration.addResource("conf2/core-site.xml");
        configuration.addResource("conf2/hdfs-site.xml");
        configuration.addResource("conf2/mapred-site.xml");
        configuration.addResource("conf2/yarn-site.xml");
        // Packaged job jar; needed when submitting from a Windows IDE to a Linux cluster
        configuration.set(MRJobConfig.JAR, "G:\\IDEA_WorkSpace\\BigData\\HBase_Test\\target\\HBase_Test-1.0-SNAPSHOT.jar");
        configuration.set("mapreduce.app-submission.cross-platform", "true");

        Job job = Job.getInstance(configuration);
        job.setJarByClass(JobRunner.class);

        // Read from and write to HBase tables (the initTable*Job calls below also set these)
        job.setInputFormatClass(TableInputFormat.class);
        job.setOutputFormatClass(TableOutputFormat.class);

        // Full-table scan; larger caching and no block caching are usual for MapReduce scans
        Scan scan = new Scan();
        scan.setCaching(500);
        scan.setCacheBlocks(false);

        // Mapper: reads baizhi:t_user1 and emits (company, salary)
        TableMapReduceUtil.initTableMapperJob(
                "baizhi:t_user1",
                scan,
                UserMapper.class,
                Text.class,
                DoubleWritable.class,
                job);

        // Reducer: writes one Put per company into baizhi:t_result
        TableMapReduceUtil.initTableReducerJob(
                "baizhi:t_result",
                UserReducer.class,
                job);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

```java
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableMapper;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.DoubleWritable;
import org.apache.hadoop.io.Text;

import java.io.IOException;

public class UserMapper extends TableMapper<Text, DoubleWritable> {

    @Override
    protected void map(ImmutableBytesWritable key, Result value, Context context) throws IOException, InterruptedException {
        // Row key has the form "company:id"; honour the writable's offset and length
        String rowkey = Bytes.toString(key.get(), key.getOffset(), key.getLength());
        String company = rowkey.split(":")[0];

        // Assumes cf1:salary was written with Bytes.toBytes(double), i.e. 8 bytes
        byte[] salaryByte = value.getValue("cf1".getBytes(), "salary".getBytes());
        if (salaryByte == null) {
            return; // skip rows without a salary
        }
        double salary = Bytes.toDouble(salaryByte);

        context.write(new Text(company), new DoubleWritable(salary));
    }
}
```
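`Bytes.toDouble` only works if `cf1:salary` was written with `Bytes.toBytes(double)`, that is, as 8 raw bytes (IEEE 754, big-endian). A value typed in the HBase shell, such as `'8000'`, is stored as the 4 characters of a string and cannot be decoded that way. The standard-library sketch below reproduces both encodings with `ByteBuffer` (big-endian by default) to show the difference. `SalaryEncodingSketch` is an illustration, not HBase code. If the data was loaded as strings, the matching decode in the mapper would be `Double.parseDouble(Bytes.toString(salaryByte))`.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

class SalaryEncodingSketch {
    // Same layout as HBase Bytes.toBytes(double): 8 bytes, big-endian IEEE 754.
    static byte[] encodeDouble(double d) {
        return ByteBuffer.allocate(Double.BYTES).putDouble(d).array();
    }

    // Decodes only the 8-byte form; rejects anything else, e.g. a string-encoded number.
    static double decodeDouble(byte[] bytes) {
        if (bytes.length != Double.BYTES) {
            throw new IllegalArgumentException("expected 8 bytes, got " + bytes.length);
        }
        return ByteBuffer.wrap(bytes).getDouble();
    }

    public static void main(String[] args) {
        byte[] binary = encodeDouble(8000.0);
        byte[] text = "8000".getBytes(StandardCharsets.UTF_8); // what a shell put stores
        System.out.println(binary.length + " " + decodeDouble(binary)); // 8 8000.0
        System.out.println(text.length + " "
                + Double.parseDouble(new String(text, StandardCharsets.UTF_8))); // 4 8000.0
        try {
            decodeDouble(text);
        } catch (IllegalArgumentException e) {
            System.out.println("cannot decode string bytes as double: " + e.getMessage());
        }
    }
}
```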

```java
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.mapreduce.TableReducer;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.DoubleWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;

import java.io.IOException;

public class UserReducer extends TableReducer<Text, DoubleWritable, NullWritable> {

    @Override
    protected void reduce(Text key, Iterable<DoubleWritable> values, Context context) throws IOException, InterruptedException {
        double totalSalary = 0.0;
        int count = 0;
        for (DoubleWritable value : values) {
            totalSalary += value.get();
            count++;
        }

        // key.getBytes() returns the backing array, which is only valid up to getLength();
        // converting via toString() makes the row key exactly the company name
        Put put = new Put(Bytes.toBytes(key.toString()));
        // The average is stored as a string so it is readable in the HBase shell
        put.addColumn("cf1".getBytes(), "avgSalary".getBytes(), Bytes.toBytes(String.valueOf(totalSalary / count)));
        context.write(NullWritable.get(), put);
    }
}
```
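Why `key.getBytes()` is unsafe as a row key: the framework typically reuses one `Text` object for successive reduce keys, and `Text.getBytes()` returns the whole backing array, which is only valid up to `getLength()`. The standard-library model below imitates that reuse with a hypothetical `ReusableText` class (not Hadoop code). When the keys arrive in sorted order `ali`, `baidu`, `sina`, the naive bytes for `sina` come out as `sinau`.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for the buffer reuse in org.apache.hadoop.io.Text.
class ReusableText {
    private byte[] bytes = new byte[0];
    private int length = 0;

    // Grows the backing array only when needed; otherwise overwrites it in place.
    void set(String s) {
        byte[] src = s.getBytes(StandardCharsets.UTF_8);
        if (bytes.length < src.length) {
            bytes = new byte[src.length];
        }
        System.arraycopy(src, 0, bytes, 0, src.length);
        length = src.length;
    }

    byte[] getBytes() { return bytes; }  // whole backing array, may hold stale bytes
    int getLength() { return length; }   // number of valid bytes

    // Simulates reduce() being called with one reused key object; returns {naive, correct} pairs.
    static List<String[]> rowKeys(List<String> sortedKeys) {
        ReusableText key = new ReusableText();
        List<String[]> out = new ArrayList<>();
        for (String k : sortedKeys) {
            key.set(k);
            String naive = new String(key.getBytes(), StandardCharsets.UTF_8);
            String correct = new String(key.getBytes(), 0, key.getLength(), StandardCharsets.UTF_8);
            out.add(new String[] {naive, correct});
        }
        return out;
    }

    public static void main(String[] args) {
        for (String[] pair : rowKeys(Arrays.asList("ali", "baidu", "sina"))) {
            System.out.println(pair[0] + " vs " + pair[1]);
        }
        // ali vs ali
        // baidu vs baidu
        // sinau vs sina
    }
}
```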

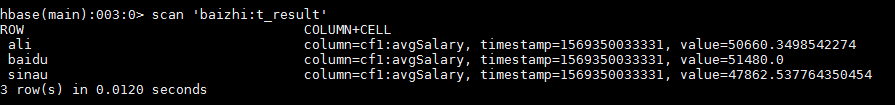

5.3 Final result

