I. Theory

1.1 The most widely used recommendation algorithm: collaborative filtering

1.2 An overview of other recommendation algorithms

1.3 Outline of a recommender system

1.4 Environment notes:

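This project runs its computations on vector data generated from the user profiles. As for the overall series, I will do my best to finish the two earlier projects. Project 0 and Project 1 may still have a few flaws, which I will fix when I have time. (I really did build the whole thing from scratch, and ran into quite a few bugs along the way.)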

Project 0: Big data development on a single-node virtual machine (complete, 40,000 words) - 林柚晞's blog - CSDN

I will write up and publish the near-real-time data warehouse project and the user profile project later.

II. Deployment

2.1 Generating the source table for ItemCF (item-based collaborative filtering)

ItemCF is built on the event table. That table holds the user id together with the user's behavior on pages: views, clicks, likes, and so on.

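The table below stores only users' actions on articles within a short recent window. The query keeps logday values from 20220321 up to, but not including, 20220323.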

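start-all.sh                   # start Hadoop (HDFS and YARN)
hive --service metastore &     # start the Hive metastore in the background
hive --service hiveserver2 &   # start HiveServer2 in the background
hive                           # open the Hive CLI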

Start Presto:

launcher start

presto-cli --server qianfeng01:8090 --catalog hive

show schemas;  -- list the databases (schemas)

Next, create the table in Presto:

create table dwb_news.user_acticle_action comment 'user article action data' with (format = 'ORC')
as
with t1 as (
    select
        distinct_id as uid,
        article_id as aid,
        -- a page view (AppPageView) is recorded as a click ('点击')
        case when event = 'AppPageView' then '点击'
             else action_type end as action,
        logday as action_date
    from ods_news.event
    where event in ('NewsAction', 'AppPageView')
      and logday >= format_datetime(cast('2022-03-21' as timestamp), 'yyyyMMdd')
      and logday <  format_datetime(cast('2022-03-23' as timestamp), 'yyyyMMdd')
      and article_id <> ''
)
-- one row per (uid, aid, action), keeping the latest date it occurred
select uid, aid, action, max(action_date) as action_date
from t1
where action <> ''
group by uid, aid, action;
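The following is a minimal Python sketch of the same transformation the CTAS query performs, applied to in-memory rows. It is meant to make the query's logic concrete. It assumes each event is a dict keyed by the column names used in the query (distinct_id, article_id, event, action_type, logday). The function name `build_user_article_action`, the sample rows, and the action_type value '点赞' are hypothetical illustrations, not the project's code or data.

```python
from typing import Dict, Iterable, List, Tuple

EventRow = Dict[str, str]

def build_user_article_action(events: Iterable[EventRow],
                              start_day: str = "20220321",
                              end_day: str = "20220323") -> List[Tuple[str, str, str, str]]:
    """Mirror the Presto CTAS on in-memory rows (hypothetical helper).

    Keeps NewsAction/AppPageView events with start_day <= logday < end_day,
    maps page views to '点击' (click), drops rows with an empty article id or
    action, and keeps the latest logday per (uid, aid, action).
    """
    latest: Dict[Tuple[str, str, str], str] = {}
    for e in events:
        if e["event"] not in ("NewsAction", "AppPageView"):
            continue
        # logday is a 'yyyyMMdd' string, so string comparison follows date order
        if not (start_day <= e["logday"] < end_day):
            continue
        if not e.get("article_id"):
            continue
        action = "点击" if e["event"] == "AppPageView" else (e.get("action_type") or "")
        if not action:
            continue
        key = (e["distinct_id"], e["article_id"], action)
        # same effect as max(action_date) ... group by uid, aid, action
        if e["logday"] > latest.get(key, ""):
            latest[key] = e["logday"]
    return sorted((uid, aid, act, day) for (uid, aid, act), day in latest.items())


if __name__ == "__main__":
    sample = [  # made-up rows; '点赞' is a hypothetical action_type value
        {"distinct_id": "u1", "article_id": "a1", "event": "AppPageView", "action_type": "", "logday": "20220321"},
        {"distinct_id": "u1", "article_id": "a1", "event": "AppPageView", "action_type": "", "logday": "20220322"},
        {"distinct_id": "u1", "article_id": "a2", "event": "NewsAction", "action_type": "点赞", "logday": "20220322"},
        {"distinct_id": "u2", "article_id": "a3", "event": "AppPageView", "action_type": "", "logday": "20220323"},
    ]
    print(build_user_article_action(sample))
    # prints [('u1', 'a1', '点击', '20220322'), ('u1', 'a2', '点赞', '20220322')]
```

The last sample row is dropped because 20220323 falls outside the half-open window, which is the same `>= ... <` boundary the query uses.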

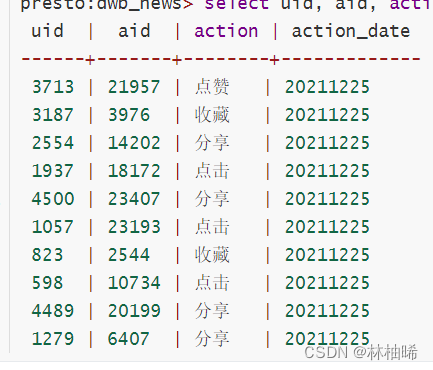

select uid, aid, action, action_date from dwb_news.user_acticle_action limit 10;

I ran this query in Hive.

What we actually need are the first three columns, converted into (uid, aid, score).

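We manually assign a weight to each action type, for example: click 0.1, share 0.15, comment 0.2, favorite (收藏) 0.25, like 0.3. Whenever a user performs an action on an article, that action is automatically converted into its score. The five weights add up to 1, so a user who performs every action on an article gets a score of 1.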
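Below is a minimal Python sketch of this weighting, using the example weights from the text. The English labels "click", "share", "comment", "collect" and "like" are hypothetical stand-ins for the values stored in the `action` column, of which only '点击' appears in the query. The names `ACTION_WEIGHTS`, `action_to_score` and `rating_table` are also illustrative. Each action type is counted once per (uid, aid), matching the one-row-per-action shape of the source table.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Example weights from the text; the keys are hypothetical labels.
ACTION_WEIGHTS: Dict[str, float] = {
    "click": 0.10,
    "share": 0.15,
    "comment": 0.20,
    "collect": 0.25,
    "like": 0.30,
}

def action_to_score(action: str) -> float:
    """Score of a single action; unknown actions contribute 0."""
    return ACTION_WEIGHTS.get(action, 0.0)

def rating_table(rows: Iterable[Tuple[str, str, str]]) -> Dict[Tuple[str, str], float]:
    """Turn (uid, aid, action) rows into {(uid, aid): score} by summing the
    weights of the distinct actions a user took on an article."""
    seen = set()
    scores: Dict[Tuple[str, str], float] = defaultdict(float)
    for uid, aid, action in rows:
        if (uid, aid, action) in seen:  # count each action type once per pair
            continue
        seen.add((uid, aid, action))
        scores[(uid, aid)] += action_to_score(action)
    # rounding hides float noise such as 0.9999999999999999
    return {key: round(value, 4) for key, value in scores.items()}


if __name__ == "__main__":
    print(rating_table([("u2", "a1", "click"), ("u2", "a1", "like")]))
    # prints {('u2', 'a1'): 0.4}
```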

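On top of the score there is also a time function that lowers the weight. The older an action is, the lower its weight, because the user's interest is assumed to fade over time.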
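The text does not say which time function the project uses. The sketch below assumes exponential decay with a half-life: an action that is d days old keeps a fraction 0.5^(d / half_life) of its weight. The 7-day half-life and the function names are hypothetical. Dates use the same 'yyyyMMdd' format as logday / action_date.

```python
from datetime import datetime

def _parse_day(day: str) -> datetime:
    """Parse a 'yyyyMMdd' string such as logday / action_date."""
    return datetime.strptime(day, "%Y%m%d")

def time_decay(action_day: str, today: str, half_life_days: float = 7.0) -> float:
    """Assumed exponential decay: 1.0 for an action on `today`, 0.5 after one
    half-life, 0.25 after two half-lives, and so on."""
    elapsed = (_parse_day(today) - _parse_day(action_day)).days
    if elapsed < 0:
        raise ValueError("action_day is later than today")
    return 0.5 ** (elapsed / half_life_days)

def decayed_score(weight: float, action_day: str, today: str,
                  half_life_days: float = 7.0) -> float:
    """Action weight (e.g. 0.3 for a like) scaled by the age of the action."""
    return weight * time_decay(action_day, today, half_life_days)


if __name__ == "__main__":
    # a like (0.3) from 7 days ago keeps half its weight with a 7-day half-life
    print(decayed_score(0.3, "20220315", "20220322"))  # prints 0.15
```

A half-life is used here because it makes the decay rate easy to reason about. A linear or stepwise decay would fit the description in the text equally well.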

Here we define a UDF that converts each action into a score.

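The boxed part of the diagram shows the conversions between data types, from top to bottom. The final DataFrame must then be joined with the rating table.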
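Once the (uid, aid, score) rating table exists, item-based collaborative filtering compares articles by the users who scored them. The text does not say which similarity measure the project uses. The sketch below assumes cosine similarity between article score vectors and is written in plain Python on a small dict rather than in Spark. The function name `item_cosine_similarity` is hypothetical.

```python
from collections import defaultdict
from itertools import combinations
from math import sqrt
from typing import Dict, Tuple

def item_cosine_similarity(ratings: Dict[Tuple[str, str], float]) -> Dict[Tuple[str, str], float]:
    """Given {(uid, aid): score}, return {(aid_a, aid_b): cosine similarity}
    for every pair of articles scored by at least one common user (aid_a < aid_b)."""
    # article -> {user: score}
    by_item: Dict[str, Dict[str, float]] = defaultdict(dict)
    for (uid, aid), score in ratings.items():
        by_item[aid][uid] = score
    # Euclidean norm of each article's score vector over all its users
    norms = {aid: sqrt(sum(s * s for s in users.values())) for aid, users in by_item.items()}
    sims: Dict[Tuple[str, str], float] = {}
    for a, b in combinations(sorted(by_item), 2):
        common = by_item[a].keys() & by_item[b].keys()
        if not common or norms[a] == 0 or norms[b] == 0:
            continue
        dot = sum(by_item[a][u] * by_item[b][u] for u in common)
        sims[(a, b)] = dot / (norms[a] * norms[b])
    return sims
```

Only users who scored both articles contribute to the dot product, while the norms use all of an article's scores. Articles that are popular with many unrelated users are therefore not rated as similar to everything.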

2.2 UDF: reading the source data to build the rating table

Open IDEA and create a new Maven project.

pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.qf.bigdata</groupId>
    <artifactId>recommend</artifactId>
    <version>1.0</version>

    <properties>
        <scala.version>2.11.12</scala.version>
        <play-json.version>2.3.9</play-json.version>
        <maven-scala-plugin.version>2.10.1</maven-scala-plugin.version>
        <scala-maven-plugin.version>3.2.0</scala-maven-plugin.version>
        <maven-assembly-plugin.version>2.6</maven-assembly-plugin.version>
        <spark.version>2.4.5</spark.version>
        <scope.type>compile</scope.type>
        <json.version>1.2.3</json.version>
        <hbase.version>1.3.6</hbase.version>
        <hadoop.version>2.8.1</hadoop.version>
        <!-- scope.type: compile or provided -->
    </properties>

    <dependencies>
        <!-- JSON library -->
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>${json.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.11</artifactId>
            <version>${spark.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_2.11</artifactId>
            <version>${spark.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-hive_2.11</artifactId>
            <version>${spark.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-mllib_2.11</artifactId>
            <version>${spark.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.47</version>
        </dependency>
        <dependency>
            <groupId>log4j</groupId>
            <artifactId>log4j</artifactId>
            <version>1.2.17</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>commons-codec</groupId>
            <artifactId>commons-codec</artifactId>
            <version>1.6</version>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
            <version>${scala.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-reflect</artifactId>
            <version>${scala.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>com.github.scopt</groupId>
            <artifactId>scopt_2.11</artifactId>
            <version>4.0.0-RC2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-avro_2.11</artifactId>
            <version>${spark.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-jdbc</artifactId>
            <version>2.3.7</version>
            <scope>${scope.type}</scope>
            <exclusions>
                <exclusion>
                    <groupId>javax.mail</groupId>
                    <artifactId>mail</artifactId>
                </exclusion>
                <exclusion>
                    <groupId>org.eclipse.jetty.aggregate</groupId>
                    <artifactId>*</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-server</artifactId>
            <version>${hbase.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>${hbase.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-hadoop2-compat</artifactId>
            <version>${hbase.version}</version>
            <scope>${scope.type}</scope>
        </dependency>
        <dependency>
            <groupId>org.jpmml</groupId>
            <artifactId>jpmml-sparkml</artifactId>
            <version>1.5.9</version>
        </dependency>
    </dependencies>

    <repositories>
        <repository>
            <id>alimaven</id>
            <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
            <releases>
                <updatePolicy>never</updatePolicy>
            </releases>
            <snapshots>
                <updatePolicy>never</updatePolicy>
            </snapshots>
        </repository>
    </repositories>

    <build>
        <sourceDirectory>src/main/scala</sourceDirectory>
        <testSourceDirectory>src/test/scala</testSourceDirectory>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <ar
