1. Kibana

1.1 Kibana overview

1.2 Installing Kibana

Kibana requires an existing Elasticsearch cluster, and that cluster must already hold data for Kibana to display.

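1.2.1 Problem

This exercise requires you to:

Install Kibana

Configure and start the service, then check that port 5601 is listening

Access Kibana through its web interface

1.2.2 Steps

Follow these steps to complete the exercise: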

Step 1: Install Kibana

1) On another host, set the IP address to 192.168.1.56, configure the yum repository, and change the hostname.

2) Install Kibana

[root@kibana ~]# yum -y install kibana

[root@kibana ~]# rpm -qc kibana

/opt/kibana/config/kibana.yml

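[root@kibana ~]# vim /opt/kibana/config/kibana.yml # uncomment the settings below and remove the leading space

The +N prefix on each line below is the line number in the stock file; it is not part of the setting.

+2 server.port: 5601

Note: if you change the port to 80, Kibana appears to start, but ss shows nothing listening on port 80. The packaged service most likely runs as an unprivileged user, which cannot bind ports below 1024, so keep port 5601.

+5 server.host: "0.0.0.0" # address the server listens on

+15 elasticsearch.url: http://192.168.1.51:9200 # Elasticsearch node to query; any node in the cluster will do

+23 kibana.index: ".kibana" # index that Kibana creates for its own data

+26 kibana.defaultAppId: "discover" # page opened by default when Kibana loads (Discover)

+53 elasticsearch.pingTimeout: 1500 # ping timeout (ms)

+57 elasticsearch.requestTimeout: 30000 # request timeout (ms)

+64 elasticsearch.startupTimeout: 5000 # startup timeout (ms)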
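The edit above amounts to uncommenting selected `key: value` lines. As a rough illustration only, here is a Python sketch that strips the leading `#` and spaces from the commented-out lines whose keys you name. It assumes the stock file writes each disabled setting as `# key: value` on one line. The function name `uncomment_settings` and the sample text are hypothetical.

```python
import re

# Matches a commented-out setting such as "# server.port: 5601".
_COMMENTED = re.compile(r"^\s*#\s*([A-Za-z_.]+):(.*)$")


def uncomment_settings(text: str, keys: set[str]) -> str:
    """Uncomment the 'key: value' lines whose key is in `keys`.

    The '#' and surrounding spaces are removed so the key starts in
    column 0 (a stray leading space may break YAML indentation);
    every other line is returned unchanged.
    """
    out = []
    for line in text.splitlines():
        m = _COMMENTED.match(line)
        if m and m.group(1) in keys:
            out.append(f"{m.group(1)}:{m.group(2)}")
        else:
            out.append(line)
    return "\n".join(out)


# Hypothetical excerpt of a stock file.
stock = '# server.port: 5601\n# server.host: "0.0.0.0"\n# logging.dest: stdout'
print(uncomment_settings(stock, {"server.port", "server.host"}))
```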

[root@kibana ~]# systemctl restart kibana

[root@kibana ~]# systemctl enable kibana

Created symlink from /etc/systemd/system/multi-user.target.wants/kibana.service to /usr/lib/systemd/system/kibana.service.

[root@kibana ~]# ss -ntulp | grep 5601 # check that port 5601 is listening

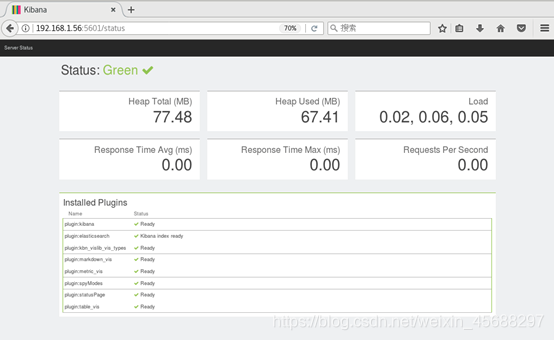
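`ss` is the check this lab uses on the Kibana host itself. To test reachability from another machine, a minimal Python sketch can simply attempt a TCP connection. The helper name `is_listening` is hypothetical. A successful connection only shows that something accepts connections on the port, not that it is Kibana.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

For example, `is_listening("192.168.1.56", 5601)` run from the browser host should return True once Kibana is up.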

3) Open Kibana in a browser, as shown in the figure:

[student@room9pc01 ~]$ firefox 192.168.1.56:5601

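4) Click Status to confirm the installation. If Status shows Green and every entry under Installed Plugins has a green check mark, the installation succeeded, as shown in the figure: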

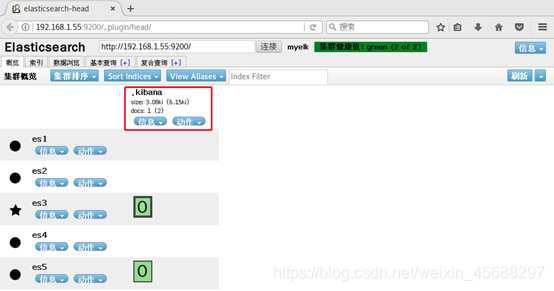

5) The head plugin now shows a .kibana index, as shown in the figure:

[student@room9pc01 ~]$ firefox http://192.168.1.55:9200/_plugin/head

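Step 2: Use Kibana to check whether the data was imported

1) After importing the data, check whether the logs were imported successfully, as shown in the figure: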

[student@room9pc01 ~]$ firefox http://192.168.1.55:9200/_plugin/head

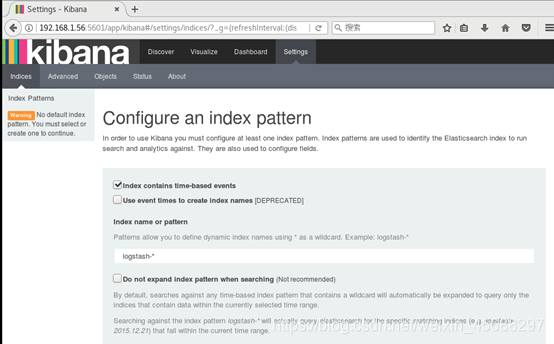

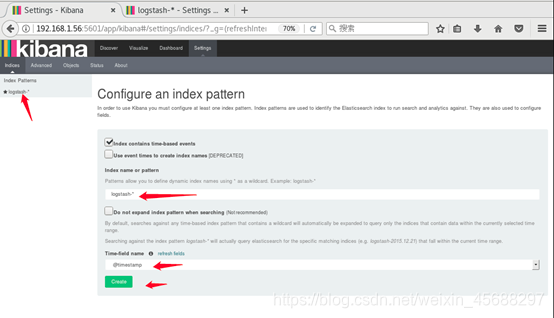

2) Add the imported data to Kibana, as shown in the figure:

[student@room9pc01 ~]$ firefox http://192.168.1.56:5601

Enter logstash-* as the index pattern and choose @timestamp as the time field name.

3) Once the pattern is created, logstash-* appears in the list, as shown in the figure:

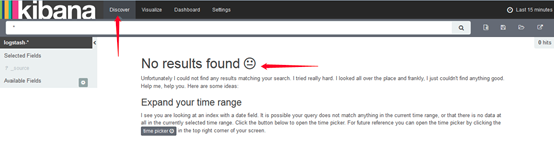

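4) After the import succeeds, select Discover, as shown in the figure:

Note: no data is shown because the imported logs fall outside the selected time range. The default range is the last 15 minutes; change the time range here to display the data.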

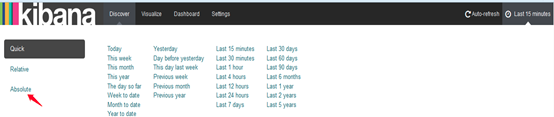

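5) To change the time range, click Last 15 minutes, as shown in the figure:

6) Select Absolute (a custom time range), as shown in the figure:

7) Set the range from 2015-5-15 to 2015-5-22, as shown in the figure:

8) View the results, as shown in the figure:

Hover over the chart and the cursor turns into a crosshair. Hold down the left mouse button and drag from the left edge of the bars to the right edge. Kibana picks up the selected time range and redraws the chart for that interval.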

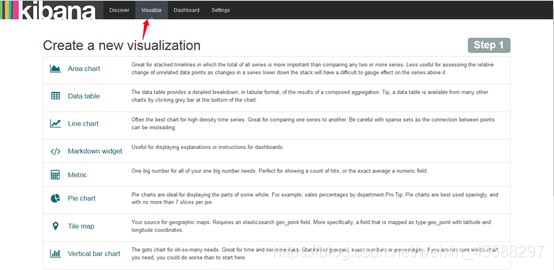

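9) Besides bar charts, Kibana supports many other visualizations. Select Visualize, as shown in the figure:

10) To build a pie chart, select Pie chart, as shown in the figure: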

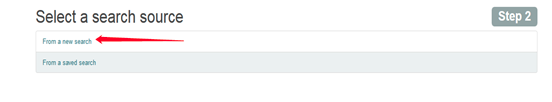

11) Select From a new search to start from scratch. The other option, From a saved search, modifies a saved search. See the figure:

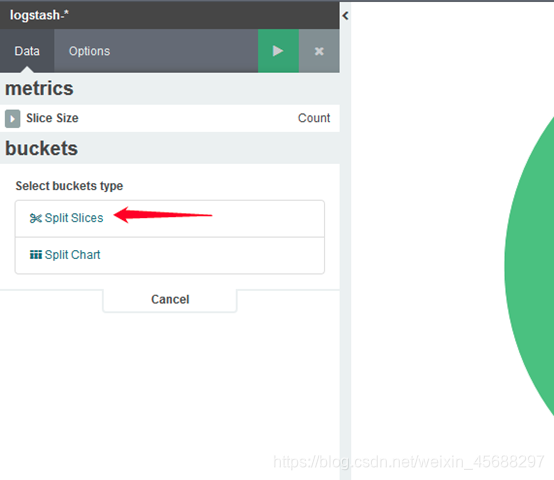

12) Select Split Slices, as shown in the figure:

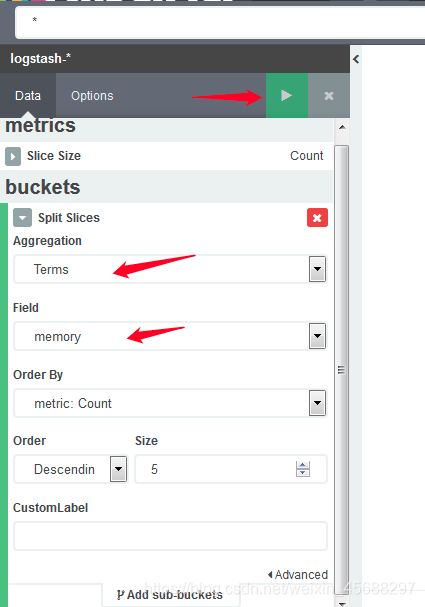

13) Select Terms and the Memory field (any other field works too), then click the play button, as shown in the figure:

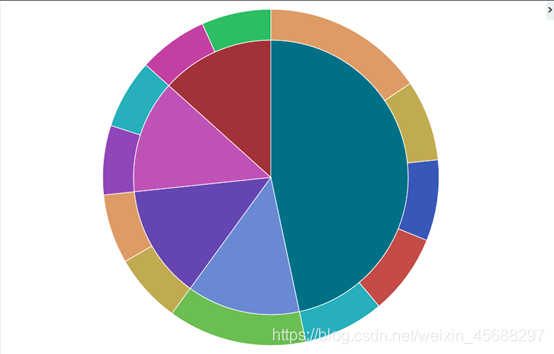
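A Terms split groups documents by the values of one field and turns the most frequent values into slices. The Python sketch below mimics that bucketing on in-memory documents. The field name memory follows the step above. The sample documents and the default size limit of 5 are hypothetical.

```python
from collections import Counter


def terms_buckets(docs: list[dict], field: str, size: int = 5) -> list[tuple[object, int]]:
    """Count documents per value of `field` and keep the `size` most frequent values."""
    counts = Counter(doc[field] for doc in docs if field in doc)
    return counts.most_common(size)


def slice_shares(buckets: list[tuple[object, int]]) -> dict[object, float]:
    """Turn bucket counts into pie-slice fractions of the buckets shown."""
    total = sum(count for _, count in buckets)
    if total == 0:
        return {}
    return {term: count / total for term, count in buckets}


# Hypothetical documents; only the field name comes from the step above.
docs = [{"memory": 0}, {"memory": 0}, {"memory": 1024}, {"bytes": 10}]
buckets = terms_buckets(docs, "memory")
print(buckets)  # [(0, 2), (1024, 1)]
print(slice_shares(buckets))
```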

14) The result, as shown in the figure:

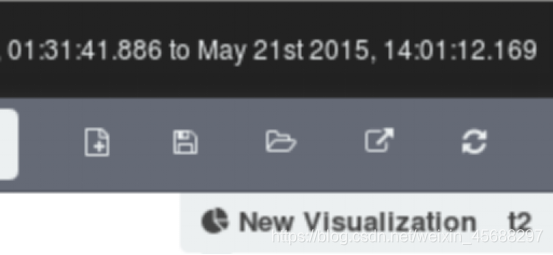

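The first button on the left creates a new visualization and the second saves it.

Give the saved visualization a name of your choice.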

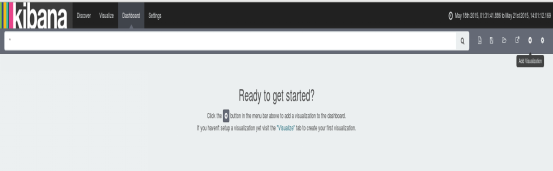

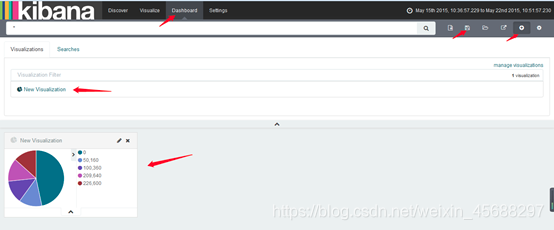

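15) After saving, you can view the visualization on the Dashboard, as shown in the figure:

Click the + sign in the upper-right corner, select the name of the saved visualization, and open it.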

2. Logstash

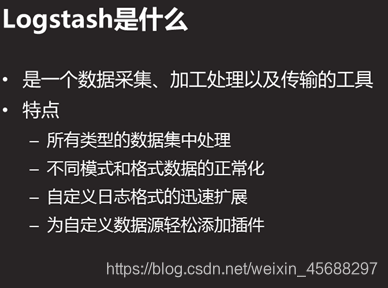

2.1 What is Logstash?

2.2 Installing Logstash

2.2.1 Steps

Follow these steps to complete the exercise.

Step 1: Install Logstash

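1) Configure the hostname, IP address, and yum repository, and set up /etc/hosts. Give es1-es5 and the kibana host the same /etc/hosts as the logstash host.

[root@logstash ~]# eip 57 # eip can set the IP only once; after that, edit /etc/sysconfig/network-scripts/ifcfg-eth0 instead

[root@es1 ~]# rsync -av /etc/yum.repos.d/local.repo root@192.168.1.57:/etc/yum.repos.d/ # copy the yum repository file to the logstash host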

Warning: Permanently added '192.168.1.57' (ECDSA) to the list of known hosts.

root@192.168.1.57's password:

sending incremental file list

local.repo

sent 382 bytes received 41 bytes 282.00 bytes/sec

total size is 286 speedup is 0.68

[root@logstash ~]# vim /etc/hosts

192.168.1.51 es1

192.168.1.52 es2

192.168.1.53 es3

192.168.1.54 es4

192.168.1.55 es5

192.168.1.56 kibana

192.168.1.57 logstash

2) Install java-1.8.0-openjdk and logstash

[root@logstash ~]# yum -y install java-1.8.0-openjdk

[root@logstash ~]# yum -y install logstash

[root@logstash ~]# java -version

openjdk version "1.8.0_161"

OpenJDK Runtime Environment (build 1.8.0_161-b14)

OpenJDK 64-Bit Server VM (build 25.161-b14, mixed mode)

[root@logstash ~]# touch /etc/logstash/logstash.conf

[root@logstash ~]# /opt/logstash/bin/logstash --version

logstash 2.3.4

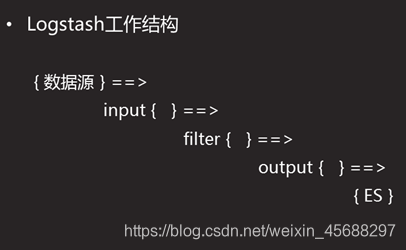

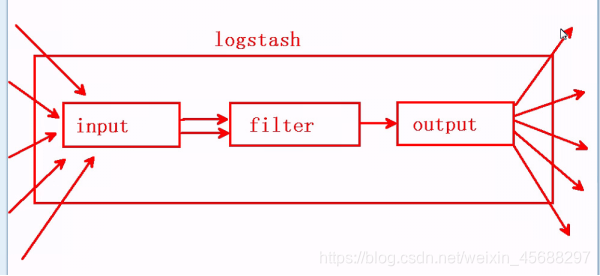

2.3 Logstash pipeline structure

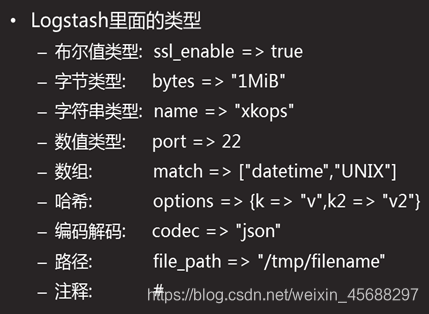

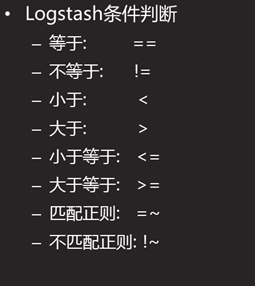

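A pipeline has three stages: input, filter, and output. stdin is standard input and stdout is standard output. Generic key-value notation writes key: value, but Logstash configuration writes key => value.

The input stage only receives data from outside and passes it on to the filter stage. The filter stage processes the data and hands the result to the output stage. The output stage then sends the processed events, unchanged, to the destination (here, Elasticsearch).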
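To make the three-stage flow concrete, here is a toy Python model of an input, filter, output pipeline. It is only an analogy under simplifying assumptions. Real Logstash events also carry @timestamp, @version and host, and Logstash processes events with several pipeline workers. The function names are hypothetical.

```python
from typing import Callable, Iterable, Iterator

Event = dict


def stdin_input(lines: Iterable[str]) -> Iterator[Event]:
    """Input stage: wrap each raw line in an event, unchanged apart from the newline."""
    for line in lines:
        yield {"message": line.rstrip("\n")}


def run_pipeline(source: Iterable[Event],
                 filters: list[Callable[[Event], Event]],
                 output: Callable[[Event], None]) -> None:
    """Pass every event through the filters in order, then hand it to the output."""
    for event in source:
        for f in filters:
            event = f(event)
        output(event)


# With an empty filter list the pipeline echoes its input, like an empty filter{} block.
run_pipeline(stdin_input(["nkndkna\n"]), [], print)
```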

[root@logstash ~]# /opt/logstash/bin/logstash-plugin list # list the installed plugins

…

logstash-input-stdin # standard input plugin

logstash-output-stdout # standard output plugin

…

[root@logstash ~]# vim /etc/logstash/logstash.conf # only the three sections, no processing, so data passes through unchanged

input{

stdin{}

}

filter{

}

output{

stdout{}

}

[root@logstash ~]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

# start and test; Logstash has no default configuration file, so pass one explicitly with -f

Settings: Default pipeline workers: 2

Pipeline main started

nkndkna # typed input

2019-10-16T03:05:52.215Z logstash nkndkna # echoed back unchanged; press Ctrl+C once to start shutting down

SIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"} # press Ctrl+C a second time to force quit

Received shutdown signal, but pipeline is still waiting for in-flight events

to be processed. Sending another ^C will force quit Logstash, but this may cause

data loss. {:level=>:warn}

SIGINT received. Terminating immediately.. {:level=>:fatal}

[root@logstash ~]#

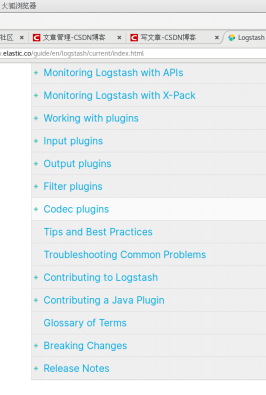

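Note: if you are unsure how to write a configuration, consult the plugin documentation:

https://github.com/logstash-plugins (open the first repository, logstash-integration-kafka, and follow its link to the central documentation location)

https://www.elastic.co/guide/en/logstash/current/index.html (you can also go straight to this site)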

[root@logstash ~]# /opt/logstash/bin/logstash-plugin list

Ignoring ffi-1.9.13 because its extensions are not built. Try: gem pristine ffi --version 1.9.13

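logstash-codec-***** codec plugins: specify how the data is encoded

logstash-filter-***** filter plugins: specify how the data is processed

logstash-input-***** input plugins: specify where the data is read from

logstash-output-***** output plugins: specify where and how the data is written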

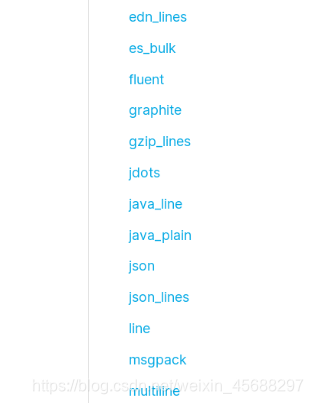

3) Codec plugins

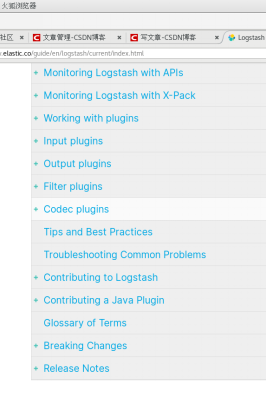

Open https://www.elastic.co/guide/en/logstash/current/index.html, find Codec plugins, and click the + in front of it to expand the menu.

In the menu, click json. Its page has no ready-made example, so simply write codec => "json".

[root@logstash ~]# vim /etc/logstash/logstash.conf

input{

stdin{ codec => "json" }

}

filter{

}

output{

stdout{}

}

[root@logstash bin]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

Settings: Default pipeline workers: 2

Pipeline main started

dffsfwewgt

2019-10-16T05:10:04.376Z logstash dffsfwewgt # not valid JSON, so it is printed as a plain message

{"a": 1}

2019-10-16T05:10:23.466Z logstash %{message} # the decoded event has no message field, so the default stdout format prints the literal %{message}
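The literal %{message} is what an unresolved field reference looks like. The Python sketch below imitates that substitution rule. Judging from the output above, it assumes the default stdout line format is along the lines of `%{@timestamp} %{host} %{message}`. `sprintf` here is a hypothetical helper, not the Logstash implementation.

```python
import re

_FIELD_REF = re.compile(r"%\{([^}]+)\}")


def sprintf(template: str, event: dict) -> str:
    """Replace each %{field} with the event's value; leave unknown references untouched."""
    def substitute(m: re.Match) -> str:
        key = m.group(1)
        return str(event[key]) if key in event else m.group(0)
    return _FIELD_REF.sub(substitute, template)


# Assumed default line format, inferred from the output above.
LINE_FORMAT = "%{@timestamp} %{host} %{message}"

plain = {"@timestamp": "2019-10-16T05:10:04.376Z", "host": "logstash", "message": "dffsfwewgt"}
decoded = {"@timestamp": "2019-10-16T05:10:23.466Z", "host": "logstash", "a": 1}
print(sprintf(LINE_FORMAT, plain))    # 2019-10-16T05:10:04.376Z logstash dffsfwewgt
print(sprintf(LINE_FORMAT, decoded))  # 2019-10-16T05:10:23.466Z logstash %{message}
```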

[root@logstash bin]#vim /etc/logstash/logstash.conf

input{

stdin{ codec => "json" }

}

filter{

}

output{

stdout{ codec => "json" }

}

[root@logstash bin]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

Settings: Default pipeline workers: 2

Pipeline main started

hjijoo

{"message":"hjijoo","tags":["_jsonparsefailure"],"@version":"1","@timestamp":"2019-10-16T05:32:48.631Z","host":"logstash"} # not valid JSON, so decoding fails

{"a": 1}

{"a":1,"@version":"1","@timestamp":"2019-10-16T05:33:00.611Z","host":"logstash"} # decoded successfully, but hard to read on one line
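Judging from these two runs, the json codec's behavior can be modelled roughly as follows. The Python sketch covers only the two cases seen above: a JSON object is merged into the event, and anything unparsable becomes message plus the _jsonparsefailure tag. It also treats every other JSON value (a bare number or list) as a failure. That is an assumption of the sketch and may not match Logstash.

```python
import json


def json_decode(line: str) -> dict:
    """Decode one input line the way the runs above suggest the json codec behaves."""
    try:
        data = json.loads(line)
    except json.JSONDecodeError:
        data = None
    if isinstance(data, dict):
        # A JSON object: its keys become event fields; no message field is added.
        return data
    # Not a JSON object (assumption for this sketch): keep the raw line and tag it.
    return {"message": line, "tags": ["_jsonparsefailure"]}


print(json_decode('{"a": 1}'))  # {'a': 1}
print(json_decode("hjijoo"))    # {'message': 'hjijoo', 'tags': ['_jsonparsefailure']}
```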

[root@logstash bin]#vim /etc/logstash/logstash.conf

input{

stdin{ codec => "json" }

}

filter{

}

output{

stdout{ codec => "rubydebug" } # prints one field per line, easy to read

}

[root@logstash bin]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

Settings: Default pipeline workers: 2

Pipeline main started

bdjakdlk

{

"message" => "bdjakdlk",

"tags" => [

[0] "_jsonparsefailure" # decoding failed

],

"@version" => "1",

"@timestamp" => "2019-10-16T05:40:27.121Z",

"host" => "logstash"

}

{"a": 1}

{

"a" => 1,

"@version" => "1",

"@timestamp" => "2019-10-16T05:40:37.844Z",

"host" => "logstash"

}

[root@logstash bin]#vim /etc/logstash/logstash.conf

input{

}

filter{

}

output{

stdout{ codec => "rubydebug" }

}

4) The file input plugin

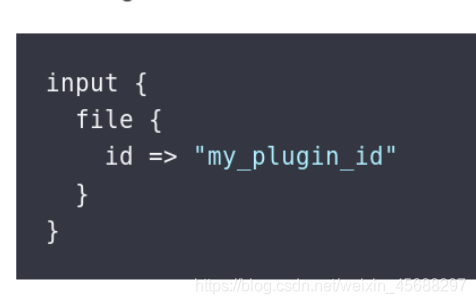

On https://www.elastic.co/guide/en/logstash/current/index.html, find the plugin category you need (for example Input plugins) and click the + in front of it.

In the menu, click file to open its page, which includes a usage example.

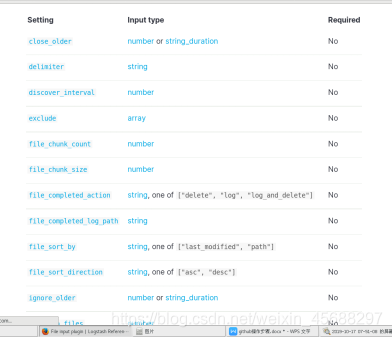

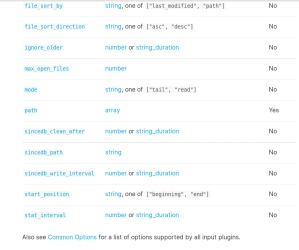

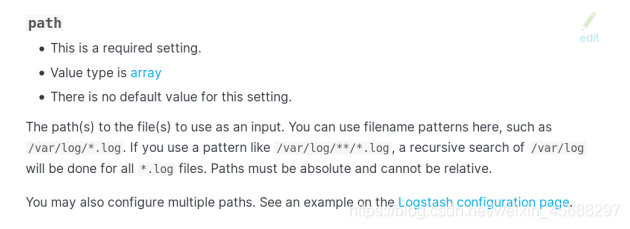

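Copy the example into the configuration file, then check the options table for the required settings. No in the Required column means the option is optional and can be omitted; Yes means it must be set. Input type is the value type: string, number, or array. The table shows that only path is required; click path for details.

The details show that path must list the paths of the files to read.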

[root@logstash bin]# vim /etc/logstash/logstash.conf

input{

file {

path => ["/tmp/a.log", "/var/tmp/b.log"]

}

}

filter{

}

output{

stdout{ codec => "rubydebug" }

}

Open another terminal to test:

[root@logstash tmp]# echo A_${RANDOM} >> /tmp/a.log

[root@logstash tmp]# echo A_${RANDOM} >> /var/tmp/b.log

[root@logstash ~]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

{

"message" => "A_5801",

"@version" => "1",

"@timestamp" => "2019-10-16T06:43:47.180Z",

"path" => "/tmp/a.log",

"host" => "logstash",

}

{

"message" => "A_2745",

"@version" => "1",

"@timestamp" => "2019-10-16T06:43:47.174Z",

"path" => "/var/tmp/b.log",

"host" => "logstash",

}

[root@logstash bin]# vim /etc/logstash/logstash.conf

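sincedb_path (default /root/.sincedb-xxxxxxxxxxxx) stores a read pointer. When Logstash stops, it writes how far it has read into this file. On the next start it reads the file and resumes from that position.

start_position takes one of two values, beginning or end. It applies only when Logstash sees a file for the first time, that is, when there is no sincedb record for it yet. The default is end; set it to beginning to read the existing content.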
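The bookkeeping behind sincedb_path and start_position can be sketched in Python as a per-file byte offset saved between runs. This is a simplification. The JSON file format and the helper `read_new_lines` are hypothetical, and Logstash itself identifies files by inode rather than by path.

```python
import json
import os


def read_new_lines(path: str, sincedb: str, start_position: str = "end") -> list[str]:
    """Return the lines appended to `path` since the offset saved in `sincedb`.

    Hypothetical model: offsets are stored as JSON keyed by path, whereas
    Logstash keys its sincedb by inode.
    """
    offsets: dict[str, int] = {}
    if os.path.exists(sincedb):
        with open(sincedb) as f:
            offsets = json.load(f)

    if path in offsets:
        offset = offsets[path]          # resume where the last run stopped
    elif start_position == "beginning":
        offset = 0                      # first time seen: read the existing content
    else:
        offset = os.path.getsize(path)  # first time seen ("end"): only new lines

    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
        offsets[path] = f.tell()        # the read pointer saved for the next run

    with open(sincedb, "w") as f:
        json.dump(offsets, f)
    return data.decode().splitlines()
```

Deleting the sincedb file makes the next call behave like a first run again, so start_position applies once more.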

input{

file {

path => ["/tmp/a.log", "/var/tmp/b.log"]

sincedb_path => "/var/lib/sincedb"

start_position => "beginning"

}

}

filter{

}

output{

stdout { codec => "rubydebug" }

}

[root@logstash ~]# echo A_${RANDOM} >> /tmp/a.log

[root@logstash ~]# echo A_${RANDOM} >> /var/tmp/b.log

[root@logstash ~]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

{

"message" => "A_5801",

"@version" => "1",

"@timestamp" => "2019-10-16T06:43:47.180Z",

"path" => "/tmp/a.log",

"host" => "logstash",

}

{

"message" => "A_2745",

"@version" => "1",

"@timestamp" => "2019-10-16T06:43:47.174Z",

"path" => "/var/tmp/b.log",

"host" => "logstash",

}

{

"message" => "A_2601",

"@version" => "1",

"@timestamp" => "2019-10-16T06:43:47.180Z",

"path" => "/tmp/a.log",

"host" => "logstash",

}

{

"message" => "A_3745",

"@version" => "1",

"@timestamp" => "2019-10-16T06:43:47.174Z",

"path" => "/var/tmp/b.log",

"host" => "logstash",

}

[root@logstash ~]# vim /etc/logstash/logstash.conf

input{

file {

path => ["/tmp/a.log"]

sincedb_path => "/var/lib/sincedb"

start_position => "beginning"

type => "testlog" # works like a tag: marks events read from this file with type testlog

}

file {

path => ["/var/tmp/b.log"]

sincedb_path => "/var/lib/sincedb"

start_position => "beginning"

type => "tsd1906"

}

}

filter{

}

output{

stdout{ codec => "rubydebug" }

}

[root@logstash ~]# echo A_${RANDOM} >> /tmp/a.log

[root@logstash ~]# echo A_${RANDOM} >> /var/tmp/b.log

[root@logstash ~]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

Settings: Default pipeline workers: 2

Pipeline main started

{

"message" => "A_7201",

"@version" => "1",

"@timestamp" => "2019-10-16T06:43:47.180Z",

"path" => "/tmp/a.log",

"host" => "logstash",

"type" => "testlog"

}

{

"message" => "A_1345",

"@version" => "1",

"@timestamp" => "2019-10-16T06:43:47.174Z",

"path" => "/var/tmp/b.log",

"host" => "logstash",

"type" => "tsd1906"

}

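5) The grok filter plugin

The grok plugin:

parses all kinds of unstructured log data

uses regular expressions to turn unstructured data into structured fields

captures fields with named groups, so the regular expression must be written to fit the specific data format

is hard to write, but applies almost universally

Parsing Apache logs

Use the grok plugin to parse the contents of the Apache access log.

Set up a new httpd server (192.168.1.58), start httpd, and set the default page to hello world; the setup steps are omitted here.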

[root@room ~]# curl 192.168.1.58

hello world

[root@web ~]# cat /var/log/httpd/access_log

192.168.1.254 - - [15/Sep/2018:18:25:46 +0800] "GET /noindex/css/open-sans.css HTTP/1.1" 200 5081 "http://192.168.1.65/" "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0"

[root@logstash ~]# vim /tmp/a.log # delete the existing lines and paste in the access_log line

192.168.1.254 - - [15/Sep/2018:18:25:46 +0800] "GET /noindex/css/open-sans.css HTTP/1.1" 200 5081 "http://192.168.1.65/" "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0"

[root@logstash ~]#rm -rf /var/tmp/b.log

[root@logstash ~]#vim /etc/logstash/logstash.conf

input{

file {

path => [ "/tmp/a.log" ]

sincedb_path => "/var/lib/logstash/sincedb"

start_position => "beginning"

type => "testlog"

}

}

filter{

grok{

match => [ "message", "(?<key>reg)" ] # generic syntax: store the text matched by the regex reg in a field named key

}

}

output{

stdout{ codec => "rubydebug" }

}

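[root@logstash ~]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

# the event contains the raw message but no parsed fields: the placeholder pattern matches nothing, so grok adds the _grokparsefailure tag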

Settings: Default pipeline workers: 2

Pipeline main started

{

"message" => "192.168.1.254 - - [15/Sep/2018:18:25:46 +0800] \"GET /noindex/css/open-sans.css HTTP/1.1\" 200 5081 \"http://192.168.1.65/\" \"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0\"",

"@version" => "1",

"@timestamp" => "2018-09-15T10:26:51.335Z",

"path" => "/tmp/a.log",

"host" => "logstash",

"type" => "testlog",

"tags" => [

[0] "_grokparsefailure"

]

}
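Python's re module can illustrate the same match-or-tag behavior. Python writes a named group as (?P<name>...), whereas the pattern above uses the (?<name>...) form. The helper `grok_like` is a hypothetical stand-in, not grok itself, and the sample line is shortened.

```python
import re


def grok_like(message: str, pattern: str) -> dict:
    """Apply a named-group regex the way the grok filter behaves in the runs above:
    a match adds one field per named group, no match adds the _grokparsefailure tag."""
    event = {"message": message}
    m = re.search(pattern, message)  # unanchored: the pattern may match anywhere
    if m:
        event.update(m.groupdict())
    else:
        event["tags"] = ["_grokparsefailure"]
    return event


# Shortened sample line (referrer and agent omitted).
line = '192.168.1.254 - - [15/Sep/2018:18:25:46 +0800] "GET /noindex/css/open-sans.css HTTP/1.1" 200 5081'
print(grok_like(line, r"(?P<key>reg)"))            # tagged _grokparsefailure
print(grok_like(line, r"(?P<client_ip>[0-9.]+)"))  # adds a client_ip field
```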

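To get the line parsed, keep the log line in /tmp/a.log and change the grok pattern in logstash.conf.

Locate the directory of predefined grok patterns (macros):

[root@logstash ~]# cd /opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-2.0.5/patterns

[root@logstash patterns]# vim grok-patterns # find COMBINEDAPACHELOG, the macro that defines the httpd combined log format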

COMBINEDAPACHELOG %{COMMONAPACHELOG} %{QS:referrer} %{QS:agent}
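COMBINEDAPACHELOG is built from other macros, and grok expands each %{NAME:field} reference recursively into a regular expression with named groups. The Python sketch below shows that expansion with a tiny, made-up pattern table. The definitions, including the REQUEST name, are simplified stand-ins, not the contents of grok-patterns.

```python
import re

# Made-up, simplified pattern table; the real definitions live in grok-patterns.
PATTERNS = {
    "IP": r"[0-9.]+",
    "WORD": r"\w+",
    "NOTSPACE": r"\S+",
    "QS": r'"[^"]*"',                                # quoted string, quotes included
    "REQUEST": r"%{WORD:verb} %{NOTSPACE:request}",  # nested macro references
}

_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(pattern: str, depth: int = 0) -> str:
    """Recursively replace %{NAME} / %{NAME:field} with the regex they stand for.

    %{NAME:field} becomes a named group (?P<field>...); %{NAME} becomes a
    non-capturing group, so only named references produce fields.
    """
    if depth > 20:
        raise ValueError("pattern nesting too deep")

    def substitute(m: re.Match) -> str:
        body = expand(PATTERNS[m.group(1)], depth + 1)
        name = m.group(2)
        return f"(?P<{name}>{body})" if name else f"(?:{body})"

    return _REF.sub(substitute, pattern)


regex = expand('%{IP:clientip} "%{REQUEST}" %{QS:referrer}')
m = re.match(regex, '192.168.1.254 "GET /index.html" "http://192.168.1.65/"')
print(m.groupdict())
```

As with QS here, a quoted-string macro keeps the quotes, which is why referrer and agent in the output below still contain escaped quotes.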

[root@logstash ~]# vim /etc/logstash/logstash.conf

...

filter{

grok {

match => ["message", "%{COMBINEDAPACHELOG}"]

}

}

...

[root@logstash ~]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

Settings: Default pipeline workers: 2

Pipeline main started

{

"message" => "192.168.1.254 - - [15/Sep/2018:18:25:46 +0800] \"GET /noindex/css/open-sans.css HTTP/1.1\" 200 5081 \"http://192.168.1.65/\" \"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0\"",

"@version" => "1",

"@timestamp" => "2018-09-15T10:55:57.743Z",

"path" => "/tmp/a.log",

"host" => "logstash",

"type" => "testlog",

"clientip" => "192.168.1.254",

"ident" => "-",

"auth" => "-",

"timestamp" => "15/Sep/2018:18:25:46 +0800",

"verb" => "GET",

"request" => "/noindex/css/open-sans.css",

"httpversion" => "1.1",

"response" => "200",

"bytes" => "5081",

"referrer" => "\"http://192.168.1.65/\"",

"agent" => "\"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0\""

}

Describing the httpd log format with a hand-written regular expression

[root@logstash ~]# vim /etc/logstash/logstash.conf

input{

file {

path => "/tmp/a.log"

sincedb_path => "/dev/null" # /dev/null stores no read pointer, so every start reads from the beginning

start_position => "beginning"

type => "testlog"

}

}

filter{

grok {

match => { "message" => "(?<client_ip>[0-9.]+) (?<login_name>\S+) (?<username>\S+) \[(?<time>.+)\] \"(?<method>[A-Z]+) (?<url>\S+) (?<proto>[A-Z]+).(?<ver>[0-9.]+)\" (?<rc>\d+) (?<byte>\d+) \"(?<ref>.+)\" \"(?<agent>.+)\"" }

}

}

output{

stdout{ codec => "rubydebug" }

}

[root@logstash ~]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

Settings: Default pipeline workers: 2

Pipeline main started

{

"message" => "192.168.1.254 - - [15/Sep/2018:18:25:46 +0800] \"GET /noindex/css/open-sans.css HTTP/1.1\" 200 5081 \"http://192.168.1.65/\" \"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0\"",

"@version" => "1",

"@timestamp" => "2018-09-15T10:55:57.743Z",

"path" => "/tmp/a.log",

"host" => "logstash",

"type" => "testlog",

"client_ip" => "192.168.1.254",

"login_name" => "-",

"username" => "-",

"time" => "15/Sep/2018:18:25:46 +0800",

"method" => "GET",

"url" => "/noindex/css/open-sans.css",

"proto" => "HTTP",

"ver" => "1.1",

"rc" => "200",

"byte" => "5081",

"ref" => "http://192.168.1.65/",

"agent" => "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0"

}
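To test the hand-written pattern outside Logstash, translate it to Python by rewriting each (?<name>...) as (?P<name>...). The sketch below does that and parses the sample access_log line. It assumes the line is stored without surrounding quotes or backslash escapes. `parse_access_line` is a hypothetical helper name.

```python
import re
from typing import Optional

# The hand-written grok pattern from logstash.conf, with (?<name>...) written as (?P<name>...).
APACHE_RE = re.compile(
    r'(?P<client_ip>[0-9.]+) (?P<login_name>\S+) (?P<username>\S+) '
    r'\[(?P<time>.+)\] "(?P<method>[A-Z]+) (?P<url>\S+) (?P<proto>[A-Z]+).(?P<ver>[0-9.]+)" '
    r'(?P<rc>\d+) (?P<byte>\d+) "(?P<ref>.+)" "(?P<agent>.+)"'
)


def parse_access_line(line: str) -> Optional[dict]:
    """Return the named fields of one access_log line, or None if it does not match."""
    m = APACHE_RE.search(line)
    return m.groupdict() if m else None


LINE = ('192.168.1.254 - - [15/Sep/2018:18:25:46 +0800] "GET /noindex/css/open-sans.css HTTP/1.1" '
        '200 5081 "http://192.168.1.65/" '
        '"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0"')
print(parse_access_line(LINE))
```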

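3. Comprehensive exercise

3.1 Problem

This exercise requires you to:

Install and configure the beats plugin

Install and configure an Apache server

Collect the Apache server's logs with filebeat

Process the logs sent by filebeat with grok

Store them in Elasticsearch

Visualize them with Kibana

1) Install filebeat on the host where Apache was installed earlier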

[root@web ~]# yum -y install filebeat

[root@web ~]# vim /etc/filebeat/filebeat.yml

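+14 paths:

+15 - /var/log/httpd/access_log # path of the httpd log; in YAML a list item is a dash followed by a space

+72 document_type: apache_log # document type

+183 #elasticsearch: # comment this line out

+188 #hosts: ["localhost:9200"] # comment this line out

+278 logstash: # uncomment: send the access logs collected on this host (192.168.1.58) to Logstash for processing

+280 hosts: ["192.168.1.57:5044"] # uncomment; IP of the logstash host

[root@web ~]# grep -Pv "^(\s*#|$)" /etc/filebeat/filebeat.yml # show only the lines that are neither comments nor blank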

filebeat:
  prospectors:
    -
      paths:
        - /var/log/httpd/access_log # path of the httpd log
      input_type: log
      document_type: apache_log
  registry_file: /var/lib/filebeat/registry
output:
  logstash:
    hosts: ["192.168.1.57:5044"]
shipper:
logging:
  files:
    rotateeverybytes: 10485760 # = 10MB

[root@web ~]# systemctl start filebeat

[root@web ~]#systemctl enable filebeat

2) Modify logstash.conf

[root@logstash ~]# vim /etc/logstash/logstash.conf

input{

file {

path => ["/tmp/a.log"]

sincedb_path => "/var/lib/logstash/since.db"

start_position => "beginning"

type => "testlog"

}

beats{

port => 5044 # beats listens on port 5044 for the httpd access logs; look up the beats options the same way as above

}

}

filter{

if [type] == "apache_log" { # run the following only when the event type is apache_log

grok{

match => ["message", "%{COMBINEDAPACHELOG}"] # parse the Apache log format with the predefined macro

}}

}

output{

# stdout{ codec => "rubydebug" } # commented out so events are not printed continuously

if [type] == "apache_log"{ # send only apache_log events to Elasticsearch

elasticsearch { # send the processed events to Elasticsearch

hosts => ["es1:9200", "es2:9200"] # Elasticsearch cluster nodes; listing two avoids a single point of failure

index => "apache_log" # creates the new index apache_log, visible at es[1-5]:9200/_plugin/head (provided no index of that name exists yet; otherwise delete it first, e.g. curl -X DELETE http://es1:9200/apache_log)

flush_size => 2000 # send a bulk request once 2000 events are buffered (a count of events, not bytes)

idle_flush_time => 10 # force a flush if 10 seconds pass without one

}}

}
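The output block combines a type condition with bulk batching. The Python sketch below models both. It assumes flush_size counts events and idle_flush_time is measured from the last flush. The class `BulkBuffer` and its `send` callback are hypothetical, and the real elasticsearch output plugin's flushing logic differs in detail.

```python
import time
from typing import Callable


class BulkBuffer:
    """Buffer events and send them as one batch when `flush_size` events are
    queued, or when `idle_flush_time` seconds have passed since the last flush."""

    def __init__(self, send: Callable[[list], None], flush_size: int = 2000,
                 idle_flush_time: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.send = send
        self.flush_size = flush_size
        self.idle_flush_time = idle_flush_time
        self.clock = clock
        self.buffer: list = []
        self.last_flush = clock()

    def add(self, event: dict) -> None:
        # Mirrors the output-side condition: only apache_log events are sent on.
        if event.get("type") != "apache_log":
            return
        self.buffer.append(event)
        if len(self.buffer) >= self.flush_size:
            self.flush()

    def tick(self) -> None:
        """Call periodically; forces a flush once the idle interval has elapsed."""
        if self.buffer and self.clock() - self.last_flush >= self.idle_flush_time:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.send(self.buffer)
        self.buffer = []
        self.last_flush = self.clock()
```

Injecting `clock` keeps the idle-time behavior testable without real waiting.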

[root@logstash ~]# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf

Settings: Default pipeline workers: 2

Pipeline main started # no further output appears

Open another terminal and check that port 5044 is listening:

[root@logstash ~]# netstat -antup | grep 5044

tcp6 0 0 :::5044 :::* LISTEN 23776/java

[student@room9pc01 ~]$ firefox http://192.168.1.55:9200/_plugin/head
