目录

1 作用

利用当前参考关键帧(

mpReferenceKF)中的地图点和当前帧图像进行匹配,通过 PnP + 优化估计当前帧位姿。

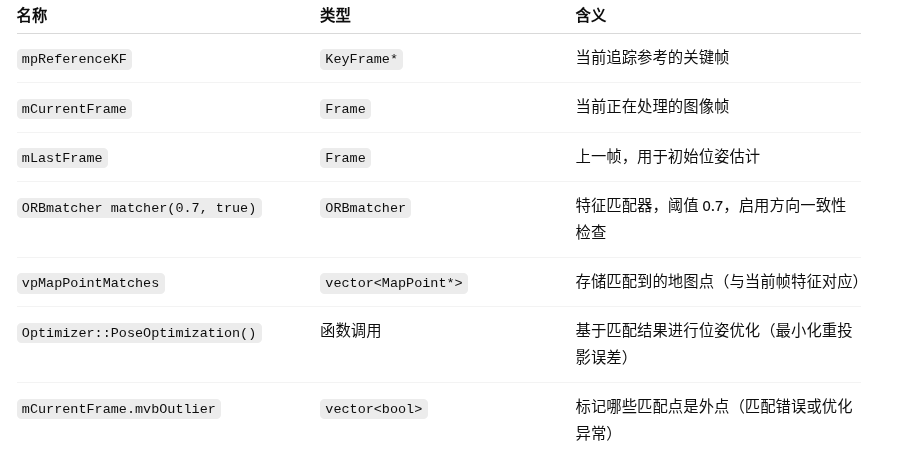

2 参数及含义

3 代码

bool Tracking::TrackReferenceKeyFrame() { // Compute Bag of Words vector mCurrentFrame.ComputeBoW(); // We perform first an ORB matching with the reference keyframe // If enough matches are found we setup a PnP solver ORBmatcher matcher(0.7,true); vector<MapPoint*> vpMapPointMatches; int nmatches = matcher.SearchByBoW(mpReferenceKF,mCurrentFrame,vpMapPointMatches); if(nmatches<15) return false; mCurrentFrame.mvpMapPoints = vpMapPointMatches; mCurrentFrame.SetPose(mLastFrame.mTcw); Optimizer::PoseOptimization(&mCurrentFrame); // Discard outliers int nmatchesMap = 0; for(int i =0; i<mCurrentFrame.N; i++) { if(mCurrentFrame.mvpMapPoints[i]) { if(mCurrentFrame.mvbOutlier[i]) { MapPoint* pMP = mCurrentFrame.mvpMapPoints[i]; mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL); mCurrentFrame.mvbOutlier[i]=false; pMP->mbTrackInView = false; pMP->mnLastFrameSeen = mCurrentFrame.mnId; nmatches--; } else if(mCurrentFrame.mvpMapPoints[i]->Observations()>0) nmatchesMap++; } } return nmatchesMap>=10; }

4 解析

4.1 计算词袋向量

// Compute Bag of Words vector mCurrentFrame.ComputeBoW();

4.1.1 ComputeBoW() 函数

把当前帧的ORB特征描述子(Descriptors)转化为词袋向量(BoW向量和特征向量),

用于快速特征匹配、重定位、回环检测等任务。该处调用的是Frame.cc

4.1.1.1 Frame::ComputeBoW()

(1)声明:Frame.h

// Compute Bag of Words representation. void ComputeBoW();(2)定义:Frame.cc

void Frame::ComputeBoW() { if(mBowVec.empty()) { //转换描述子格式:N*32矩阵转化为vector<cv::Mat>数组形式 vector<cv::Mat> vCurrentDesc = Converter::toDescriptorVector(mDescriptors); //使用 ORB 词典量化生成 BoW 向量 mpORBvocabulary->transform(vCurrentDesc,mBowVec,mFeatVec,4); } }(3)参数及含义

(4)解析

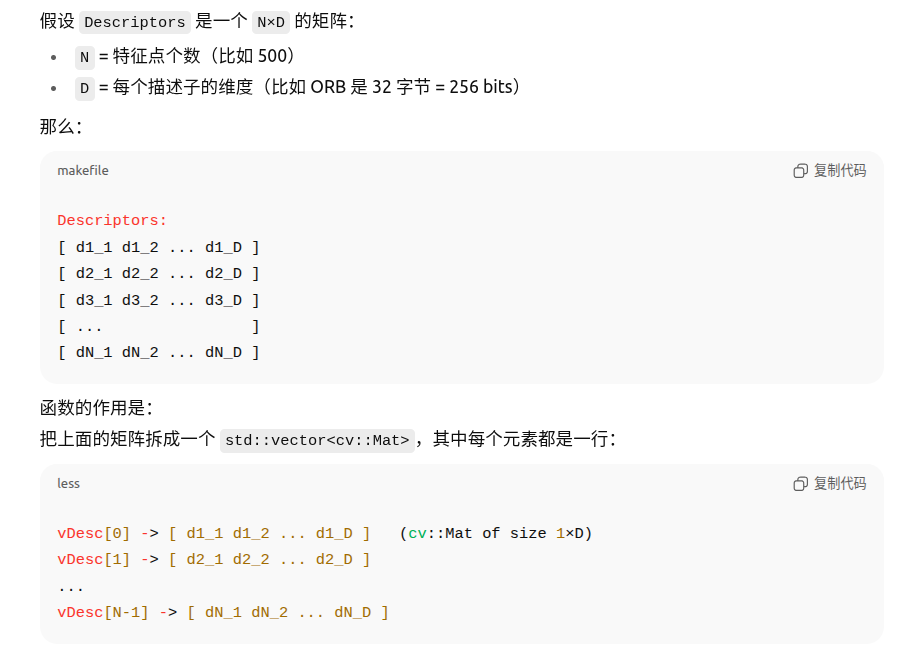

a.vector<cv::Mat> vCurrentDesc = Converter::toDescriptorVector(mDescriptors);

std::vector<cv::Mat> Converter::toDescriptorVector(const cv::Mat &Descriptors) { //vDesc为浅拷贝,如引用的矩阵数据被销毁,则vDesc也消失 std::vector<cv::Mat> vDesc; //将描述子矩阵每行拆解,分别放入vDesc vDesc.reserve(Descriptors.rows); for (int j=0;j<Descriptors.rows;j++) vDesc.push_back(Descriptors.row(j)); return vDesc; }

4.1.1.2 KeyFrame::ComputeBoW()

(1)声明:KeyFrame.h

// Bag of Words Representation void ComputeBoW();(2)定义:KeyFrame.cc

void KeyFrame::ComputeBoW() { //如果BoW向量或特征向量为空 if(mBowVec.empty() || mFeatVec.empty()) { vector<cv::Mat> vCurrentDesc = Converter::toDescriptorVector(mDescriptors); // Feature vector associate features with nodes in the 4th level (from leaves up) // We assume the vocabulary tree has 6 levels, change the 4 otherwise mpORBvocabulary->transform(vCurrentDesc,mBowVec,mFeatVec,4); } }与4.1.1.2基本一致,

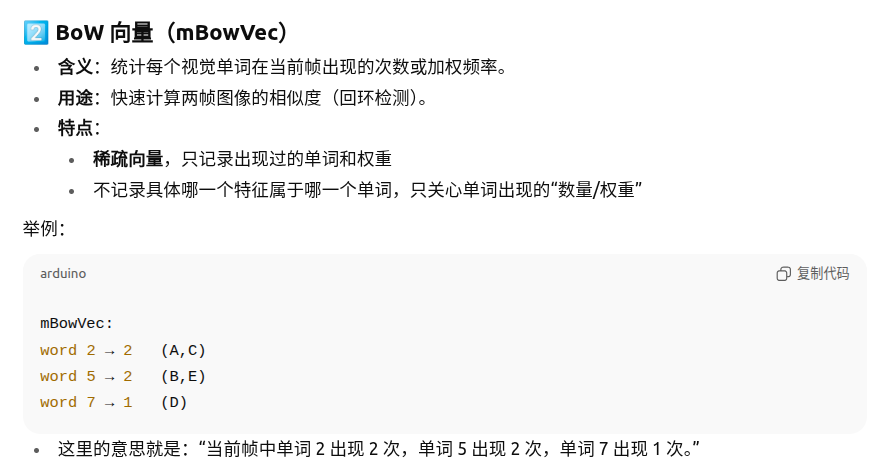

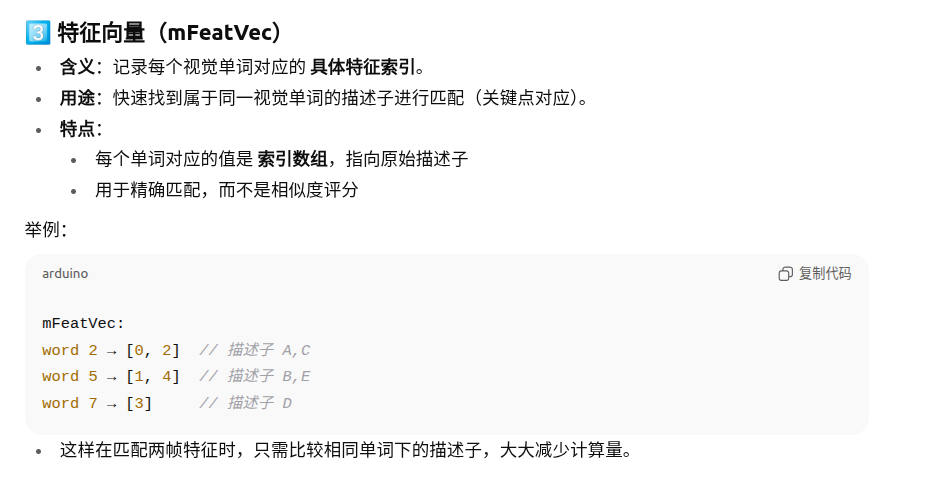

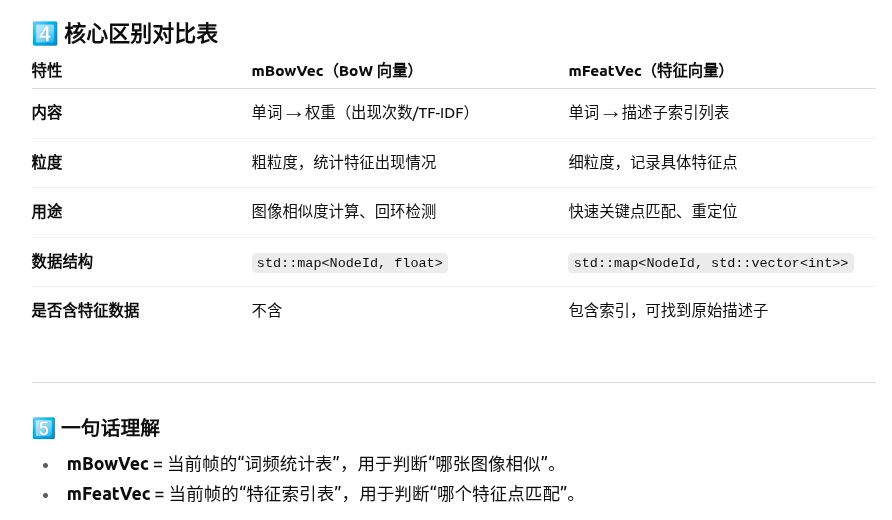

PS.BoW向量 VS 特征向量

4.2 词袋与参考关键帧匹配

// We perform first an ORB matching with the reference keyframe // If enough matches are found we setup a PnP solver //ORB匹配器,匹配阀值0.7,使用弱约束(允许较远距离匹配) ORBmatcher matcher(0.7,true); vector<MapPoint*> vpMapPointMatches; //匹配成功的地图点数=地图点参考关键帧与当前帧BOW向量匹配成功存入地图点配对成功数量 int nmatches = matcher.SearchByBoW(mpReferenceKF,mCurrentFrame,vpMapPointMatches);

4.3 检查匹配数量

if(nmatches<15) return false;

4.4 绑定匹配结果并设定初始位姿

mCurrentFrame.mvpMapPoints = vpMapPointMatches; mCurrentFrame.SetPose(mLastFrame.mTcw);

4.5 优化当前位姿(PnP+BA)

Optimizer::PoseOptimization(&mCurrentFrame);

4.5.1 PoseOptimization() 函数

(1)作用

单帧相机的位姿(Tcw)进行优化,使该帧在当前的地图点观测下最符合重投影误差最小化。

(2)声明:Optimizer.h

int static PoseOptimization(Frame* pFrame);(3)定义:Optimizer.cc

int Optimizer::PoseOptimization(Frame *pFrame) { g2o::SparseOptimizer optimizer; g2o::BlockSolver_6_3::LinearSolverType * linearSolver; linearSolver = new g2o::LinearSolverDense<g2o::BlockSolver_6_3::PoseMatrixType>(); g2o::BlockSolver_6_3 * solver_ptr = new g2o::BlockSolver_6_3(linearSolver); g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr); optimizer.setAlgorithm(solver); int nInitialCorrespondences=0; // Set Frame vertex g2o::VertexSE3Expmap * vSE3 = new g2o::VertexSE3Expmap(); vSE3->setEstimate(Converter::toSE3Quat(pFrame->mTcw)); vSE3->setId(0); vSE3->setFixed(false); optimizer.addVertex(vSE3); // Set MapPoint vertices const int N = pFrame->N; vector<g2o::EdgeSE3ProjectXYZOnlyPose*> vpEdgesMono; vector<size_t> vnIndexEdgeMono; vpEdgesMono.reserve(N); vnIndexEdgeMono.reserve(N); vector<g2o::EdgeStereoSE3ProjectXYZOnlyPose*> vpEdgesStereo; vector<size_t> vnIndexEdgeStereo; vpEdgesStereo.reserve(N); vnIndexEdgeStereo.reserve(N); const float deltaMono = sqrt(5.991); const float deltaStereo = sqrt(7.815); { unique_lock<mutex> lock(MapPoint::mGlobalMutex); for(int i=0; i<N; i++) { MapPoint* pMP = pFrame->mvpMapPoints[i]; if(pMP) { // Monocular observation if(pFrame->mvuRight[i]<0) { nInitialCorrespondences++; pFrame->mvbOutlier[i] = false; Eigen::Matrix<double,2,1> obs; const cv::KeyPoint &kpUn = pFrame->mvKeysUn[i]; obs << kpUn.pt.x, kpUn.pt.y; g2o::EdgeSE3ProjectXYZOnlyPose* e = new g2o::EdgeSE3ProjectXYZOnlyPose(); e->setVertex(0, dynamic_cast<g2o::OptimizableGraph::Vertex*>(optimizer.vertex(0))); e->setMeasurement(obs); const float invSigma2 = pFrame->mvInvLevelSigma2[kpUn.octave]; e->setInformation(Eigen::Matrix2d::Identity()*invSigma2); g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber; e->setRobustKernel(rk); rk->setDelta(deltaMono); e->fx = pFrame->fx; e->fy = pFrame->fy; e->cx = pFrame->cx; e->cy = pFrame->cy; cv::Mat Xw = pMP->GetWorldPos(); e->Xw[0] = Xw.at<float>(0); e->Xw[1] = Xw.at<float>(1); e->Xw[2] = Xw.at<float>(2); optimizer.addEdge(e); vpEdgesMono.push_back(e); vnIndexEdgeMono.push_back(i); } else // Stereo observation { nInitialCorrespondences++; pFrame->mvbOutlier[i] = false; //SET EDGE Eigen::Matrix<double,3,1> obs; const cv::KeyPoint &kpUn = pFrame->mvKeysUn[i]; const float &kp_ur = pFrame->mvuRight[i]; obs << kpUn.pt.x, kpUn.pt.y, kp_ur; g2o::EdgeStereoSE3ProjectXYZOnlyPose* e = new g2o::EdgeStereoSE3ProjectXYZOnlyPose(); e->setVertex(0, dynamic_cast<g2o::OptimizableGraph::Vertex*>(optimizer.vertex(0))); e->setMeasurement(obs); const float invSigma2 = pFrame->mvInvLevelSigma2[kpUn.octave]; Eigen::Matrix3d Info = Eigen::Matrix3d::Identity()*invSigma2; e->setInformation(Info); g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber; e->setRobustKernel(rk); rk->setDelta(deltaStereo); e->fx = pFrame->fx; e->fy = pFrame->fy; e->cx = pFrame->cx; e->cy = pFrame->cy; e->bf = pFrame->mbf; cv::Mat Xw = pMP->GetWorldPos(); e->Xw[0] = Xw.at<float>(0); e->Xw[1] = Xw.at<float>(1); e->Xw[2] = Xw.at<float>(2); optimizer.addEdge(e); vpEdgesStereo.push_back(e); vnIndexEdgeStereo.push_back(i); } } } } if(nInitialCorrespondences<3) return 0; // We perform 4 optimizations, after each optimization we classify observation as inlier/outlier // At the next optimization, outliers are not included, but at the end they can be classified as inliers again. const float chi2Mono[4]={5.991,5.991,5.991,5.991}; const float chi2Stereo[4]={7.815,7.815,7.815, 7.815}; const int its[4]={10,10,10,10}; int nBad=0; for(size_t it=0; it<4; it++) { vSE3->setEstimate(Converter::toSE3Quat(pFrame->mTcw)); optimizer.initializeOptimization(0); optimizer.optimize(its[it]); nBad=0; for(size_t i=0, iend=vpEdgesMono.size(); i<iend; i++) { g2o::EdgeSE3ProjectXYZOnlyPose* e = vpEdgesMono[i]; const size_t idx = vnIndexEdgeMono[i]; if(pFrame->mvbOutlier[idx]) { e->computeError(); } const float chi2 = e->chi2(); if(chi2>chi2Mono[it]) { pFrame->mvbOutlier[idx]=true; e->setLevel(1); nBad++; } else { pFrame->mvbOutlier[idx]=false; e->setLevel(0); } if(it==2) e->setRobustKernel(0); } for(size_t i=0, iend=vpEdgesStereo.size(); i<iend; i++) { g2o::EdgeStereoSE3ProjectXYZOnlyPose* e = vpEdgesStereo[i]; const size_t idx = vnIndexEdgeStereo[i]; if(pFrame->mvbOutlier[idx]) { e->computeError(); } const float chi2 = e->chi2(); if(chi2>chi2Stereo[it]) { pFrame->mvbOutlier[idx]=true; e->setLevel(1); nBad++; } else { e->setLevel(0); pFrame->mvbOutlier[idx]=false; } if(it==2) e->setRobustKernel(0); } if(optimizer.edges().size()<10) break; } // Recover optimized pose and return number of inliers g2o::VertexSE3Expmap* vSE3_recov = static_cast<g2o::VertexSE3Expmap*>(optimizer.vertex(0)); g2o::SE3Quat SE3quat_recov = vSE3_recov->estimate(); cv::Mat pose = Converter::toCvMat(SE3quat_recov); pFrame->SetPose(pose); return nInitialCorrespondences-nBad; }(4)解析

a.构建优化器

//创建g2o优化器 g2o::SparseOptimizer optimizer; //定义优化变量维度 g2o::BlockSolver_6_3::LinearSolverType * linearSolver; //LinearSolverDense,使用稠密线性求解器 linearSolver = new g2o::LinearSolverDense<g2o::BlockSolver_6_3::PoseMatrixType>(); g2o::BlockSolver_6_3 * solver_ptr = new g2o::BlockSolver_6_3(linearSolver); //选择非线性优化算法:Levenberg-Marquardt(LM)算法 g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr); optimizer.setAlgorithm(solver);b.设置相机位姿

// Set Frame vertex //创建一个 SE3 类型的顶点 g2o::VertexSE3Expmap * vSE3 = new g2o::VertexSE3Expmap(); //将当前帧位姿矩阵由cv::Mat转为SE3Quat,告诉优化器这个顶点的初始估计值是这帧的当前位姿 vSE3->setEstimate(Converter::toSE3Quat(pFrame->mTcw)); //给顶点赋予唯一ID vSE3->setId(0); //顶点不固定,需要优化 vSE3->setFixed(false); //把顶点加入优化器 optimizer.addVertex(vSE3);c.添加观测边(投影误差)

// Set MapPoint vertices const int N = pFrame->N; //定义容器 //vpEdgesMono存放单目观测边 vector<g2o::EdgeSE3ProjectXYZOnlyPose*> vpEdgesMono; vector<size_t> vnIndexEdgeMono; vpEdgesMono.reserve(N); vnIndexEdgeMono.reserve(N); vector<g2o::EdgeStereoSE3ProjectXYZOnlyPose*> vpEdgesStereo; vector<size_t> vnIndexEdgeStereo; vpEdgesStereo.reserve(N); vnIndexEdgeStereo.reserve(N); //定义鲁棒核参数 const float deltaMono = sqrt(5.991); const float deltaStereo = sqrt(7.815); { unique_lock<mutex> lock(MapPoint::mGlobalMutex); //遍历当前帧特征点 for(int i=0; i<N; i++) { MapPoint* pMP = pFrame->mvpMapPoints[i]; if(pMP) { // Monocular observation if(pFrame->mvuRight[i]<0) { //计算特征匹配有效数 nInitialCorrespondences++; //将该特征点标记为非外点 pFrame->mvbOutlier[i] = false; //构造观测值 Eigen::Matrix<double,2,1> obs; const cv::KeyPoint &kpUn = pFrame->mvKeysUn[i]; obs << kpUn.pt.x, kpUn.pt.y; //创建边对象,优化相机位姿、已知地图点世界坐标、观测像素坐标、存在投影误差 g2o::EdgeSE3ProjectXYZOnlyPose* e = new g2o::EdgeSE3ProjectXYZOnlyPose(); //设置顶点与观测 e->setVertex(0, dynamic_cast<g2o::OptimizableGraph::Vertex*>(optimizer.vertex(0))); e->setMeasurement(obs); //设置权重 const float invSigma2 = pFrame->mvInvLevelSigma2[kpUn.octave]; e->setInformation(Eigen::Matrix2d::Identity()*invSigma2); //设置鲁棒核 g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber; e->setRobustKernel(rk); rk->setDelta(deltaMono); //相机参数 e->fx = pFrame->fx; e->fy = pFrame->fy; e->cx = pFrame->cx; e->cy = pFrame->cy; cv::Mat Xw = pMP->GetWorldPos(); e->Xw[0] = Xw.at<float>(0); e->Xw[1] = Xw.at<float>(1); e->Xw[2] = Xw.at<float>(2); //加入优化器 optimizer.addEdge(e); vpEdgesMono.push_back(e); vnIndexEdgeMono.push_back(i); } else // Stereo observation { nInitialCorrespondences++; pFrame->mvbOutlier[i] = false; //SET EDGE Eigen::Matrix<double,3,1> obs; const cv::KeyPoint &kpUn = pFrame->mvKeysUn[i]; const float &kp_ur = pFrame->mvuRight[i]; obs << kpUn.pt.x, kpUn.pt.y, kp_ur; g2o::EdgeStereoSE3ProjectXYZOnlyPose* e = new g2o::EdgeStereoSE3ProjectXYZOnlyPose(); e->setVertex(0, dynamic_cast<g2o::OptimizableGraph::Vertex*>(optimizer.vertex(0))); e->setMeasurement(obs); const float invSigma2 = pFrame->mvInvLevelSigma2[kpUn.octave]; Eigen::Matrix3d Info = Eigen::Matrix3d::Identity()*invSigma2; e->setInformation(Info); g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber; e->setRobustKernel(rk); rk->setDelta(deltaStereo); e->fx = pFrame->fx; e->fy = pFrame->fy; e->cx = pFrame->cx; e->cy = pFrame->cy; e->bf = pFrame->mbf; cv::Mat Xw = pMP->GetWorldPos(); e->Xw[0] = Xw.at<float>(0); e->Xw[1] = Xw.at<float>(1); e->Xw[2] = Xw.at<float>(2); optimizer.addEdge(e); vpEdgesStereo.push_back(e); vnIndexEdgeStereo.push_back(i); } } } }d.过滤匹配数量

//有效匹配数小于3,退出 if(nInitialCorrespondences<3) return 0;e.迭代优化+外点剔除

// We perform 4 optimizations, after each optimization we classify observation as inlier/outlier // At the next optimization, outliers are not included, but at the end they can be classified as inliers again. //chi2Mono单目观测误差的卡方阈值,阀值根据自由度置信度决定 const float chi2Mono[4]={5.991,5.991,5.991,5.991}; const float chi2Stereo[4]={7.815,7.815,7.815, 7.815}; //每次优化最大迭代次数10 const int its[4]={10,10,10,10}; int nBad=0; for(size_t it=0; it<4; it++) { //设置当前位姿并执行优化 vSE3->setEstimate(Converter::toSE3Quat(pFrame->mTcw)); optimizer.initializeOptimization(0); optimizer.optimize(its[it]); nBad=0; for(size_t i=0, iend=vpEdgesMono.size(); i<iend; i++) { //取出边和索引 g2o::EdgeSE3ProjectXYZOnlyPose* e = vpEdgesMono[i]; const size_t idx = vnIndexEdgeMono[i]; //如果上一轮判断为外点,重新再进行判断 if(pFrame->mvbOutlier[idx]) { e->computeError(); } //计算重投影误差平方 const float chi2 = e->chi2(); //观测的重投影误差太大 if(chi2>chi2Mono[it]) { //标记为外点 pFrame->mvbOutlier[idx]=true; //下一轮优化时排除这条边 e->setLevel(1); nBad++; } else { pFrame->mvbOutlier[idx]=false; e->setLevel(0); } //第三轮后关闭鲁棒核,进行精调 if(it==2) e->setRobustKernel(0); } //双目 for(size_t i=0, iend=vpEdgesStereo.size(); i<iend; i++) { g2o::EdgeStereoSE3ProjectXYZOnlyPose* e = vpEdgesStereo[i]; const size_t idx = vnIndexEdgeStereo[i]; if(pFrame->mvbOutlier[idx]) { e->computeError(); } const float chi2 = e->chi2(); if(chi2>chi2Stereo[it]) { pFrame->mvbOutlier[idx]=true; e->setLevel(1); nBad++; } else { e->setLevel(0); pFrame->mvbOutlier[idx]=false; } if(it==2) e->setRobustKernel(0); } if(optimizer.edges().size()<10) break; }f.更新相机位姿

// Recover optimized pose and return number of inliers //获取优化后的相机顶点 g2o::VertexSE3Expmap* vSE3_recov = static_cast<g2o::VertexSE3Expmap*>(optimizer.vertex(0)); //获取优化后位姿 g2o::SE3Quat SE3quat_recov = vSE3_recov->estimate(); //格式转换 cv::Mat pose = Converter::toCvMat(SE3quat_recov); //写回当前帧 pFrame->SetPose(pose);g.返回内点数量

return nInitialCorrespondences-nBad;

4.6 剔除外点

// Discard outliers int nmatchesMap = 0; for(int i =0; i<mCurrentFrame.N; i++) { if(mCurrentFrame.mvpMapPoints[i]) { if(mCurrentFrame.mvbOutlier[i]) { MapPoint* pMP = mCurrentFrame.mvpMapPoints[i]; mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL); //重置外点标记,用于下一帧重新计算 mCurrentFrame.mvbOutlier[i]=false; //该点不被当前帧跟踪 pMP->mbTrackInView = false; pMP->mnLastFrameSeen = mCurrentFrame.mnId; nmatches--; } else if(mCurrentFrame.mvpMapPoints[i]->Observations()>0) nmatchesMap++; } }

4.7 返回结果

return nmatchesMap>=10;

1309

1309

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?