I. Environment Setup

1. Tools used: Python 3.8.3 and PyCharm.

Before creating the project, install the third-party package that manages virtual environments:

pip install virtualenv -i https://pypi.douban.com/simple

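2. Pick a location and create a folder to hold the project.

3. Enter that folder.

4. Create another folder inside it and enter that one too.

5. Type cmd into the File Explorer address bar to open a command prompt in that folder.

6. Create the virtual environment.

7. cd into the environment's Scripts folder and activate the virtual environment.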
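The commands for steps 6 and 7 were shown only as screenshots. As a rough equivalent, the sketch below creates an environment with Python's built-in `venv` module instead of `virtualenv` (a swapped-in tool, not the author's command) and returns the folder that holds the activation scripts: `Scripts` on Windows, `bin` elsewhere. The helper name `create_env` is hypothetical.

```python
import os
import venv
from pathlib import Path


def create_env(env_dir):
    """Create a virtual environment and return its scripts folder."""
    env_dir = Path(env_dir)
    # symlinks follows the `python -m venv` default: copy on Windows, symlink elsewhere.
    # with_pip=False keeps the sketch fast; pass with_pip=True for an environment you can pip install into.
    venv.create(env_dir, symlinks=(os.name != 'nt'), with_pip=False)
    # Windows keeps python.exe and activate.bat in Scripts; other systems use bin
    return env_dir / ('Scripts' if os.name == 'nt' else 'bin')
```

For example, `create_env('venv')` followed by running `venv\Scripts\activate` in the command prompt mirrors steps 6 and 7 on Windows.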

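8. Install the third-party packages the project needs (already installed on the author's machine; pymysql is needed by the pipeline):

pip install fake-useragent scrapy requests selenium pymysql -i https://pypi.douban.com/simple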

9. Create the Scrapy project.

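10. Open the project in PyCharm.

Important: open the project from the folder that sits at the same directory level as the virtual environment!

Directory structure after opening: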

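11. Configure the interpreter

PyCharm usually adds the newly created environment automatically at this point. If it does not, add it manually: browse to the virtual environment's Scripts folder and select python.exe.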

12、

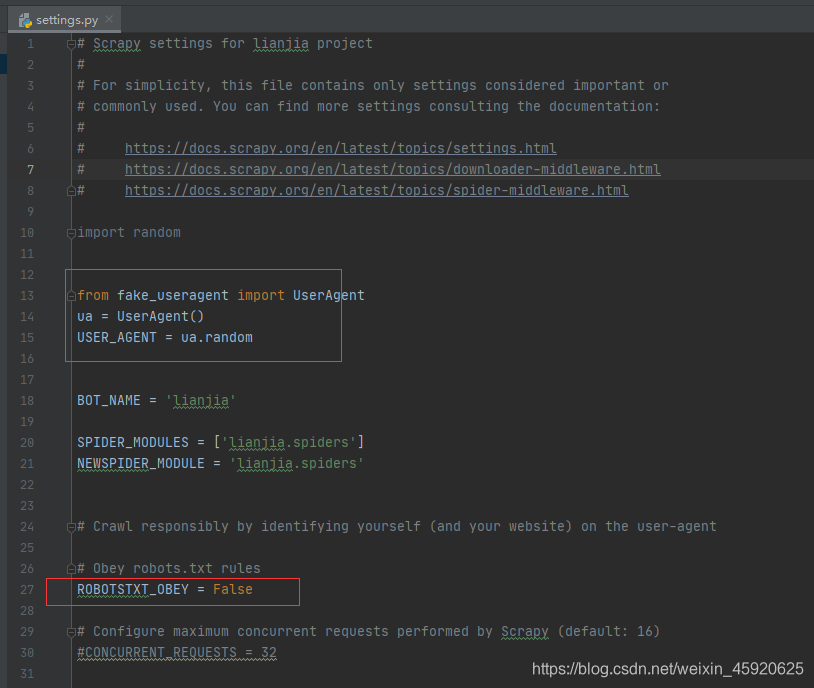

打开setting文件配置设置

from fake_useragent import UserAgent

ua = UserAgent()
USER_AGENT = ua.random  # random User-Agent, picked once when the settings are loaded
ROBOTSTXT_OBEY = False  # ignore robots.txt
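Because settings.py is evaluated once when Scrapy starts, `USER_AGENT = ua.random` yields one random User-Agent per run, not per request. If per-request rotation is wanted, a downloader middleware is the usual place for it. The sketch below assumes a hypothetical `RandomUserAgentMiddleware` class in `lianjia/middlewares.py` and uses a small hard-coded list in place of fake_useragent so it runs on its own.

```python
import random

# Hypothetical pool of browser User-Agent strings (stand-in for fake_useragent)
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.72 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.1 Safari/605.1.15',
    'Mozilla/5.0 (X11; Linux x86_64; rv:88.0) Gecko/20100101 Firefox/88.0',
]


class RandomUserAgentMiddleware:
    """Downloader middleware that sets a randomly chosen User-Agent on every request."""

    def __init__(self, user_agents=None):
        self.user_agents = list(user_agents or USER_AGENTS)

    def process_request(self, request, spider):
        # Assign (rather than setdefault) so each request gets a fresh pick
        request.headers['User-Agent'] = random.choice(self.user_agents)
        return None  # None tells Scrapy to continue processing the request
```

It could be enabled with `DOWNLOADER_MIDDLEWARES = {'lianjia.middlewares.RandomUserAgentMiddleware': 400}`; a value below 500, where Scrapy's built-in UserAgentMiddleware sits, makes it run first.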

13. Create the spider

14. Create a start file used to launch the project

from scrapy import cmdline

cmdline.execute(['scrapy', 'crawl', 'vip'])

II. Problem Analysis

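Viewing the page source shows that the HTML does not contain the data we want, which suggests it is loaded dynamically via Ajax or JavaScript.

So search the network requests for JSON: the product data comes back as JSON, wrapped in a JSONP callback such as getMerchandiseDroplets1(...).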
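Because the payload arrives wrapped in a callback, it has to be unwrapped before `json.loads` can parse it. The spider does this with one regex per callback name; a slightly more general helper might look like this (the names `unwrap_jsonp` and `JSONP_PATTERN` are hypothetical):

```python
import json
import re

# callbackName( ...payload... ) with an optional trailing semicolon;
# re.S lets the payload span several lines
JSONP_PATTERN = re.compile(r'^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$', re.S)


def unwrap_jsonp(text):
    """Parse the JSON inside a JSONP response such as getMerchandiseIds({...})."""
    match = JSONP_PATTERN.match(text)
    if match is None:
        raise ValueError('not a JSONP response')
    return json.loads(match.group(1))
```

In a Scrapy callback this would be `jsons = unwrap_jsonp(response.text)`.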

Analyze how the request URL is built.

The request carries productIds=…, i.e. the pids of the products we want.

Next, find the request that returns those pids (the getMerchandiseIds ranking API).
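The first-page URL passes 50 comma-separated pids in one productIds parameter, while the spider requests one pid at a time. Assuming the API keeps accepting batches of that size (only the first page demonstrates it), the pids from the ranking response could be grouped as below; `product_id_batches` is a hypothetical helper.

```python
from urllib.parse import urlencode


def product_id_batches(pids, size=50):
    """Yield urlencoded 'productIds=...' fragments with `size` pids each."""
    pids = list(pids)
    for start in range(0, len(pids), size):
        batch = pids[start:start + size]
        # urlencode turns the commas into %2C, as in the captured URL
        yield urlencode({'productIds': ','.join(batch)})
```

Each fragment would replace the `productIds={pid["pid"]}` part of the detail URL, cutting the number of detail requests roughly fifty-fold.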

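Pagination: the pid request takes pageOffset and batchSize=120, so each page moves pageOffset forward by 120.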
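Instead of pasting the whole URL into an f-string, the pageOffset parameter of the captured URL can be rewritten with `urllib.parse`. A minimal sketch (function names hypothetical), generating offsets the same way as the spider's `range(120, 28*120, 120)`:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_page_offset(url, offset):
    """Return `url` with its pageOffset query parameter set to `offset`."""
    parts = urlsplit(url)
    # keep_blank_values preserves empty parameters such as lv3CatIds=
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query['pageOffset'] = str(offset)
    return urlunsplit(parts._replace(query=urlencode(query)))


def page_urls(url, pages=28, batch_size=120):
    """One URL per page after the first, batch_size pids per page."""
    return [with_page_offset(url, n) for n in range(batch_size, pages * batch_size, batch_size)]
```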

Finally, the site also screens requests, so copy the cookie, referer and user-agent from the browser into the request headers.
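Scrapy's documentation notes that cookies set through the Cookie header are not considered by its CookiesMiddleware and recommends the `cookies` argument of `scrapy.Request` instead. Turning the copied browser string into a dict is a small split; `cookie_header_to_dict` is a hypothetical helper.

```python
def cookie_header_to_dict(header):
    """Split a browser 'name=value; name2=value2' cookie string into a dict."""
    cookies = {}
    for pair in header.split(';'):
        # partition splits on the first '=' only, so values may themselves contain '='
        name, sep, value = pair.strip().partition('=')
        if sep:
            cookies[name] = value
    return cookies
```

Usage: `scrapy.Request(url, cookies=cookie_header_to_dict(raw_cookie))`, where `raw_cookie` is the string copied from the browser.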

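The fields to scrape (JSON key in parentheses) are:

Product title (title)

Brand (brandShowName)

Stock (stockLabel.value)

Current sale price (price.salePrice)

Coupon price (price.couponPrice)

Discount (price.saleDiscount)

Original price (price.marketPrice)

Product description (the values of the first four attrs)

Coupon (labels[0].value)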
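Some of these keys are missing on some products (stockLabel appears only for low-stock items, labels may be empty), which the spider handles with try/except. The same lookups can be expressed as paths followed by a small helper; `dig`, `FIELD_PATHS` and `extract_fields` are hypothetical names, and the paths mirror the keys listed above.

```python
def dig(obj, *path, default=''):
    """Follow dict keys / list indexes; return `default` if a step is missing or the value is None."""
    for key in path:
        try:
            obj = obj[key]
        except (KeyError, IndexError, TypeError):
            return default
    return default if obj is None else obj


# Item field -> path inside one element of data.products
FIELD_PATHS = {
    'goods_title': ('title',),
    'goods_brandName': ('brandShowName',),
    'goods_stock': ('stockLabel', 'value'),
    'goods_salePrice': ('price', 'salePrice'),
    'goods_couponPrice': ('price', 'couponPrice'),
    'goods_saleDiscount': ('price', 'saleDiscount'),
    'goods_marketPrice': ('price', 'marketPrice'),
    'goods_coupon': ('labels', 0, 'value'),
}


def extract_fields(product):
    fields = {name: dig(product, *path) for name, path in FIELD_PATHS.items()}
    # Description: values of the first four attrs, comma-separated
    attrs = dig(product, 'attrs', default=[])
    fields['goods_detail'] = ','.join(str(dig(attr, 'value')) for attr in attrs[:4])
    return fields
```

In the spider, `yield vip_Item(extract_fields(json_product))` would replace the per-field assignments.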

With the pattern worked out, we can start writing the crawler.

III. Spider

import json
import re

import requests
import scrapy

from lianjia.items import vip_Item


class VipSpider(scrapy.Spider):
    name = 'vip'
    # allowed_domains takes bare domain names, not URL paths
    allowed_domains = ['category.vip.com', 'mapi.vip.com']
    # Product details for the first batch of pids
    start_urls = ['https://mapi.vip.com/vips-mobile/rest/shopping/pc/product/module/list/v2?callback=getMerchandiseDroplets1&app_name=shop_pc&app_version=4.0&warehouse=VIP_NH&fdc_area_id=104104103&client=pc&mobile_platform=1&province_id=104104&api_key=70f71280d5d547b2a7bb370a529aeea1&user_id=460579443&mars_cid=1617348704851_6b3755f845b09e9dd849dab8e08d3def&wap_consumer=b&productIds=6918280773714633166%2C6919269347502569224%2C6919280038768963651%2C6918885016940643930%2C6919071994858488649%2C6919038672613470427%2C6918882160631488842%2C6918944418440356620%2C6918657420873328142%2C6919228828850383940%2C6918961803150259853%2C6918709860697376973%2C6919240213138614543%2C6919209651872870738%2C6919196552415286617%2C6919194325836784216%2C6918712916393076509%2C6919225847387002266%2C6919210128307013331%2C6917920034126091456%2C6919277800534602909%2C6918880877665560279%2C6919206873399311069%2C6918787804532167757%2C6919217392772102349%2C6919210128323851987%2C6918803863670351821%2C6919195528701215319%2C6919125909840774746%2C6919146118144910145%2C6919234540818978640%2C6919210125982622042%2C6918895204979993664%2C6919056471484139018%2C6919037482518575834%2C6918840358797634383%2C6919225847387010458%2C6918996718319520475%2C6919199922610960832%2C6918575143040210899%2C6918838847877534477%2C6918792607319747469%2C6918605636189480593%2C6918941973275163476%2C6919195144384309124%2C6918712848504084507%2C6918730080246712157%2C6919256286245991952%2C6919134732356604044%2C6919153169612501840%2C&scene=search&standby_id=nature&extParams=%7B%22stdSizeVids%22%3A%22%22%2C%22preheatTipsVer%22%3A%223%22%2C%22couponVer%22%3A%22v2%22%2C%22exclusivePrice%22%3A%221%22%2C%22iconSpec%22%3A%222x%22%2C%22ic2label%22%3A1%7D&context=&_=1620194960239']

    # Headers copied from the browser, used for the pid (ranking) requests
    headers = {
        'cookie': 'vip_address=%257B%2522pid%2522%253A%2522104104%2522%252C%2522cid%2522%253A%2522104104103%2522%252C%2522pname%2522%253A%2522%255Cu5e7f%255Cu4e1c%255Cu7701%2522%252C%2522cname%2522%253A%2522%255Cu6df1%255Cu5733%255Cu5e02%2522%257D; vip_province=104104; vip_province_name=%E5%B9%BF%E4%B8%9C%E7%9C%81; vip_city_name=%E6%B7%B1%E5%9C%B3%E5%B8%82; vip_city_code=104104103; vip_wh=VIP_NH; mars_pid=0; cps=adp%3Accyfv6er%3A%3A%3A%3A; mars_sid=8c589482bcc7ace51ef3c34ed5be66b5; PHPSESSID=imgcjoljcmad3qoep0bcrr5v43; visit_id=88E436B3D2FABF7D8B5D4770B4937E9C; vip_access_times=%7B%22list%22%3A0%2C%22detail%22%3A3%7D; vipshop_passport_src=https%3A%2F%2Fcategory.vip.com%2F; _jzqco=%7C%7C%7C%7C%7C1.2119763071.1620185453935.1620185453935.1620185453935.1620185453935.1620185453935.0.0.0.1.1; VipRUID=460579443; VipUID=c4852fba2af788c3d79ddc84e3e2cba4; VipRNAME=177*****296; VipDegree=D1; user_class=b; PASSPORT_ACCESS_TOKEN=60CB80AF8E17F8B42D155476D7996D9C61D0C1A4; VipLID=0%7C1620185504%7Cb6023f; VipUINFO=luc%3Ab%7Csuc%3Ab%7Cbct%3Ac_new%7Chct%3Ac_new%7Cbdts%3A0%7Cbcts%3A0%7Ckfts%3A0%7Cc10%3A0%7Crcabt%3A0%7Cp2%3A0%7Cp3%3A1%7Cp4%3A0%7Cp5%3A1%7Cul%3A3105; pg_session_no=14; vip_tracker_source_from=; mars_cid=1617348704851_6b3755f845b09e9dd849dab8e08d3def; waitlist=%7B%22pollingId%22%3A%2223DBDE3C-ED51-414A-9DE2-C9C6CE3AA34B%22%2C%22pollingStamp%22%3A1620186762305%7D',
        'referer': 'https://category.vip.com/suggest.php?keyword=%E7%94%B7%E8%BF%90%E5%8A%A8%E9%9E%8B&ff=235|12|1|1',
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.72 Safari/537.36'
    }

    def start_requests(self):
        for url in self.start_urls:
            yield scrapy.Request(url=url, callback=self.parse)
        # Remaining pages: fetch the pids 120 at a time, once per crawl rather than once per parsed response.
        # requests.get is blocking, but here it runs only once per page.
        for number in range(120, 28 * 120, 120):
            url = f'https://mapi.vip.com/vips-mobile/rest/shopping/pc/search/product/rank?callback=getMerchandiseIds&app_name=shop_pc&app_version=4.0&warehouse=VIP_NH&fdc_area_id=104104103&client=pc&mobile_platform=1&province_id=104104&api_key=70f71280d5d547b2a7bb370a529aeea1&user_id=460579443&mars_cid=1617348704851_6b3755f845b09e9dd849dab8e08d3def&wap_consumer=b&standby_id=nature&keyword=%E7%94%B7%E8%BF%90%E5%8A%A8%E9%9E%8B&lv3CatIds=&lv2CatIds=&lv1CatIds=&brandStoreSns=&props=&priceMin=&priceMax=&vipService=&sort=0&pageOffset={number}&channelId=1&gPlatform=PC&batchSize=120&_=1620197821047'
            res = requests.get(url=url, headers=self.headers, timeout=10)
            # JSONP response: getMerchandiseIds({...})
            jsons = json.loads(re.findall(r'getMerchandiseIds\((.*)\)', res.text)[0])
            for pid in jsons.get('data').get('products'):
                next_page_url = f'https://mapi.vip.com/vips-mobile/rest/shopping/pc/product/module/list/v2?callback=getMerchandiseDroplets1&app_name=shop_pc&app_version=4.0&warehouse=VIP_NH&fdc_area_id=104104103&client=pc&mobile_platform=1&province_id=104104&api_key=70f71280d5d547b2a7bb370a529aeea1&user_id=460579443&mars_cid=1617348704851_6b3755f845b09e9dd849dab8e08d3def&wap_consumer=b&productIds={pid["pid"]}&scene=search&standby_id=nature&extParams=%7B%22stdSizeVids%22%3A%22%22%2C%22preheatTipsVer%22%3A%223%22%2C%22couponVer%22%3A%22v2%22%2C%22exclusivePrice%22%3A%221%22%2C%22iconSpec%22%3A%222x%22%2C%22ic2label%22%3A1%7D&context=&_=1620197821050'
                yield scrapy.Request(url=next_page_url, callback=self.parse)

    def parse(self, response):
        # JSONP response: getMerchandiseDroplets1({...})
        jsons = json.loads(re.findall(r'getMerchandiseDroplets\d+\((.*)\)', response.text)[0])
        for json_product in jsons.get('data').get('products'):
            item = vip_Item()  # a fresh item for every product
            price = json_product.get('price') or {}
            item['goods_title'] = json_product.get('title')
            item['goods_brandName'] = json_product.get('brandShowName')
            # stockLabel is missing on most products
            try:
                item['goods_stock'] = json_product.get('stockLabel').get('value')
            except AttributeError:
                item['goods_stock'] = ''
            item['goods_salePrice'] = price.get('salePrice')
            item['goods_couponPrice'] = price.get('couponPrice')
            item['goods_saleDiscount'] = price.get('saleDiscount')
            item['goods_marketPrice'] = price.get('marketPrice')
            # Description: function, sole softness, selling point, closure type (first four attrs)
            attrs = json_product.get('attrs') or []
            item['goods_detail'] = ','.join(str(attr.get('value') or '') for attr in attrs[:4])
            # Coupon text is the first label, when there is one
            try:
                item['goods_coupon'] = json_product.get('labels')[0].get('value')
            except (TypeError, IndexError, AttributeError):
                item['goods_coupon'] = ''
            yield item

IV. Item

import scrapy


class vip_Item(scrapy.Item):
    # Fields for one scraped product
    goods_title = scrapy.Field()
    goods_brandName = scrapy.Field()
    goods_stock = scrapy.Field()
    goods_salePrice = scrapy.Field()
    goods_couponPrice = scrapy.Field()
    goods_saleDiscount = scrapy.Field()
    goods_marketPrice = scrapy.Field()
    goods_detail = scrapy.Field()
    goods_coupon = scrapy.Field()

V. Settings

from fake_useragent import UserAgent

ua = UserAgent()

USER_AGENT = ua.random

ROBOTSTXT_OBEY = False

DEFAULT_REQUEST_HEADERS = {

'cookie': 'vip_address=%257B%2522pid%2522%253A%2522104104%2522%252C%2522cid%2522%253A%2522104104103%2522%252C%2522pname%2522%253A%2522%255Cu5e7f%255Cu4e1c%255Cu7701%2522%252C%2522cname%2522%253A%2522%255Cu6df1%255Cu5733%255Cu5e02%2522%257D; vip_province=104104; vip_province_name=%E5%B9%BF%E4%B8%9C%E7%9C%81; vip_city_name=%E6%B7%B1%E5%9C%B3%E5%B8%82; vip_city_code=104104103; vip_wh=VIP_NH; mars_pid=0; cps=adp%3Accyfv6er%3A%3A%3A%3A; mars_sid=8c589482bcc7ace51ef3c34ed5be66b5; PHPSESSID=imgcjoljcmad3qoep0bcrr5v43; visit_id=88E436B3D2FABF7D8B5D4770B4937E9C; vip_access_times=%7B%22list%22%3A0%2C%22detail%22%3A3%7D; vipshop_passport_src=https%3A%2F%2Fcategory.vip.com%2F; _jzqco=%7C%7C%7C%7C%7C1.2119763071.1620185453935.1620185453935.1620185453935.1620185453935.1620185453935.0.0.0.1.1; VipRUID=460579443; VipUID=c4852fba2af788c3d79ddc84e3e2cba4; VipRNAME=177*****296; VipDegree=D1; user_class=b; PASSPORT_ACCESS_TOKEN=60CB80AF8E17F8B42D155476D7996D9C61D0C1A4; VipLID=0%7C1620185504%7Cb6023f; VipUINFO=luc%3Ab%7Csuc%3Ab%7Cbct%3Ac_new%7Chct%3Ac_new%7Cbdts%3A0%7Cbcts%3A0%7Ckfts%3A0%7Cc10%3A0%7Crcabt%3A0%7Cp2%3A0%7Cp3%3A1%7Cp4%3A0%7Cp5%3A1%7Cul%3A3105; pg_session_no=14; vip_tracker_source_from=; mars_cid=1617348704851_6b3755f845b09e9dd849dab8e08d3def; waitlist=%7B%22pollingId%22%3A%2223DBDE3C-ED51-414A-9DE2-C9C6CE3AA34B%22%2C%22pollingStamp%22%3A1620186762305%7D',

'referer': 'https://category.vip.com/suggest.php?keyword=%E7%94%B7%E8%BF%90%E5%8A%A8%E9%9E%8B&ff=235|12|1|1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.72 Safari/537.36'

}

ITEM_PIPELINES = {

'lianjia.pipelines.vip_Pipeline': 300,

}

VI. Pipelines

import pymysql


class vip_Pipeline:
    def open_spider(self, spider):
        # Connect once when the spider starts
        self.conn = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='your_password', database='pachong_save')
        self.cursor = self.conn.cursor()
        print('Connected')

    def process_item(self, item, spider):
        # Parameterized query: pymysql escapes each value, so quotes in a title cannot break the SQL
        insert_sql = ('insert into vip_goods(goods_title, goods_brandName, goods_stock, goods_salePrice, goods_couponPrice, '
                      'goods_saleDiscount, goods_marketPrice, goods_detail, goods_coupon) '
                      'values (%s, %s, %s, %s, %s, %s, %s, %s, %s)')
        values = (item['goods_title'], item['goods_brandName'], item['goods_stock'], item['goods_salePrice'],
                  item['goods_couponPrice'], item['goods_saleDiscount'], item['goods_marketPrice'],
                  item['goods_detail'], item['goods_coupon'])
        print('Executing SQL')
        try:
            self.cursor.execute(insert_sql, values)
            self.conn.commit()
            print('Succeeded')
        except Exception as e:
            self.conn.rollback()
            print('Failed:', e)
        return item

    def close_spider(self, spider):
        # Release the connection when the crawl ends
        self.cursor.close()
        self.conn.close()

Check the data in the MySQL database.
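The article does not show the vip_goods table schema. To illustrate why the pipeline should pass values separately instead of formatting them into the SQL string, here is a self-contained sketch using the standard-library sqlite3 module as a stand-in for MySQL (sqlite3 uses `?` placeholders where pymysql uses `%s`). The all-TEXT schema and the helper names are assumptions.

```python
import sqlite3

COLUMNS = ('goods_title', 'goods_brandName', 'goods_stock', 'goods_salePrice',
           'goods_couponPrice', 'goods_saleDiscount', 'goods_marketPrice',
           'goods_detail', 'goods_coupon')


def make_table(conn):
    # Hypothetical schema: every column stored as TEXT
    conn.execute(f"create table vip_goods ({', '.join(c + ' text' for c in COLUMNS)})")


def insert_item(conn, item):
    placeholders = ', '.join('?' for _ in COLUMNS)
    sql = f"insert into vip_goods ({', '.join(COLUMNS)}) values ({placeholders})"
    # The driver quotes each value, so a title containing " or ' is stored intact
    conn.execute(sql, tuple(item[c] for c in COLUMNS))
    conn.commit()
```

With string formatting, a title containing a double quote would end the SQL literal early; with placeholders it round-trips unchanged.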

VII. Simple Data Processing and Analysis in Jupyter

import re
import jieba
import wordcloud
import pandas as pd
import numpy as np
from PIL import Image
import seaborn as sns
import matplotlib.pyplot as plt

# Plot style; SimHei so Chinese labels render
sns.set_style('darkgrid')
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False  # draw minus signs correctly with SimHei

# Load the scraped data
df_vip = pd.read_excel('./vip_goods.xlsx')

# Overview of the data
df_vip.info()

<class 'pandas.core.frame.DataFrame'>
RangeIndex: 1065 entries, 0 to 1064
Data columns (total 9 columns):
 #   Column              Non-Null Count  Dtype
---  ------              --------------  -----
 0   goods_title         1065 non-null   object
 1   goods_brandName     1065 non-null   object
 2   goods_stock         102 non-null    object
 3   goods_salePrice     1065 non-null   int64
 4   goods_couponPrice   1065 non-null   int64
 5   goods_saleDiscount  1058 non-null   object
 6   goods_marketPrice   1065 non-null   int64
 7   goods_detail        1065 non-null   object
 8   goods_coupon        1065 non-null   object
dtypes: int64(3), object(6)
memory usage: 75.0+ KB

# Stock: keep the number N from labels such as "剩N件" (N left)
def convert_stock(s):
    match = re.search(r'剩(\d+)件', str(s))
    return match.group(1) if match else None

df_vip['goods_stock'] = df_vip['goods_stock'].map(convert_stock)

# Coupon: keep the amount N from "券¥N"
def convert_coupon(s):
    match = re.search(r'券¥(\d+)', str(s))
    return match.group(1) if match else None

df_vip['goods_coupon'] = df_vip['goods_coupon'].map(convert_coupon)

# Discount: keep the number from "N.N折" (e.g. 4.4折 means 44% of the original price)
def convert_saleDiscount(s):
    match = re.search(r'(\d+\.\d+)折', str(s))
    return match.group(1) if match else None

df_vip['goods_saleDiscount'] = df_vip['goods_saleDiscount'].map(convert_saleDiscount)
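The three converters differ only in their regular expression. A single helper (the name `extract_number` is hypothetical) can serve all three and return real numbers, NaN when no label matches, so pandas treats the columns as numeric. If you adopt it, compare stock numerically later (`goods_stock > 0`) rather than against the string "0". The sample labels in the test-style calls are illustrative, not taken from the scraped data.

```python
import math
import re


def extract_number(pattern, value):
    """Return the first group captured by `pattern` in str(value) as a float, else NaN."""
    match = re.search(pattern, str(value))
    return float(match.group(1)) if match else math.nan


# Usage with the article's DataFrame:
# df_vip['goods_stock'] = df_vip['goods_stock'].map(lambda s: extract_number(r'剩(\d+)件', s))
# df_vip['goods_coupon'] = df_vip['goods_coupon'].map(lambda s: extract_number(r'券¥(\d+)', s))
# df_vip['goods_saleDiscount'] = df_vip['goods_saleDiscount'].map(lambda s: extract_number(r'(\d+\.\d+)折', s))
```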

# Which brands are there?

df_vip['goods_brandName'].unique()

array(['adidas', 'CBA', '高蒂', '鸿星尔克', 'Z.SUO', '双星八特', '骆驼', '南极人', '特步',

'Lee Cooper', '公牛巨人', '红蜻蜓', '卡帝乐鳄鱼', '法派', '固特异', '彪马', '乔丹',

'澳伦', 'Nike', "I'm David", '稻草人', 'CAMELJEANS', '木林森',

'POLO SPORT', "L'ALPINA", '回力', '斯凯奇', '皓顿', '奥康', 'JEEP',

"CHARLES JANG'S HAPPY HEART", '卡宾', '花花公子', 'SUPERRUN', '保罗·盖帝',

'公牛世家', '高尔普', '金贝勒', 'adidas NEO', '森马', 'CLAW MONEY', '马克华菲',

'安踏', '361°', '欧利萨斯', '奇安达', 'PLOVER', 'Kappa', '绅诺',

'LA CHAPELLE HOMME', '名典', 'New Balance', '荣仕', '卡丹路', '大洋洲•袋鼠',

'回力1927', '意尔康', 'EXR', 'adidas三叶草', '喜得龙', 'BELLE', 'NAVIGARE',

'J&M', 'ONITSUKA TIGER', 'spider king', '卓诗尼', '贵人鸟',

'Rifugio Vo’', 'FILA', '匹克', 'HLA海澜之家', '歌妮璐', '森达', 'Reebok',

'龙狮戴尔', '与狼共舞', 'Joma', 'VANS', 'Sprandi', 'VEXX', '热风', '环球',

'拓路者', 'Jordan', '杉杉', '沙驰', '暇步士', 'UNDER ARMOUR', 'Trumppipe',

'人本', 'MIZUNO', 'UGG', 'KEKAFU', 'nautica', 'teenmix',

'TIMBERLAND', 'KELME', '他她', 'LILANZ', '康奈', '梦特娇', '千百度', '李宁',

'ALPINE PRO', 'The North Face', '米菲', '百思图', 'luogoks', '凯撒',

'七匹狼', '乐卡克', 'PELLIOT', '凯撒大帝', '百事', 'GXG', 'Bata', 'GOG',

'飞跃1931', '康龙', '富贵鸟', 'AIRWALK', 'H8', 'LAIKAJINDUN',

'ROBERTA DI CAMERINO', '金利来', 'converse', 'Satchi Sport',

'Artsdon', 'JEEP SPIRIT 1941 ESTD', '老爷车', '真维斯', 'BOSSSUNWEN',

'足力健', '斯米尔', '皮尔·卡丹', '比音勒芬', '兽霸', 'DIENJI', '乐步', '图思龙', '枫叶',

'健足乐', 'ASICS', '哥仑步', 'M 60', 'Columbia', 'Ecco', '飞跃', '波尔谛奇',

'TWEAK', '火枪手', 'clarks', '鳄鱼恤', '卡文', 'ZERO'], dtype=object)

# Price distribution
plt.figure(figsize=(10, 8), dpi=100)
ax = sns.boxenplot(y='goods_salePrice', data=df_vip)
ax.set_title('Price distribution')
ax.get_figure().savefig('price_distribution.png', dpi=400)
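A boxen plot is easier to read next to the numbers behind it. As a stdlib-only sketch (the helper name `price_summary` is hypothetical), the quartiles of goods_salePrice can be computed with `statistics.quantiles`; with the DataFrame loaded, `df_vip['goods_salePrice'].describe()` gives a similar summary.

```python
import statistics


def price_summary(prices):
    """Min, quartiles and max of a sequence of prices."""
    prices = sorted(prices)
    # method='inclusive' uses the same linear interpolation as pandas' default quantiles
    q1, median, q3 = statistics.quantiles(prices, n=4, method='inclusive')
    return {'min': prices[0], 'q1': q1, 'median': median, 'q3': q3, 'max': prices[-1]}
```

For example, `price_summary(df_vip['goods_salePrice'].tolist())`.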

# Brands with a sale price of at least 1000 RMB
plt.figure(figsize=(10, 8), dpi=100)
ax = sns.barplot(x='goods_brandName', y='goods_salePrice', data=df_vip.query('goods_salePrice >= 1000').copy(), hue='goods_detail')
ax.set_title('Brands priced at 1000 RMB or more, with details')
ax.get_figure().savefig('brands_over_1000rmb.png', dpi=400)

# Sale price vs. coupon amount (jointplot creates its own figure)
df_vip['goods_coupon'] = df_vip['goods_coupon'].astype('float64')
g = sns.jointplot(x='goods_salePrice', y='goods_coupon', data=df_vip, kind='reg', truncate=False, color='m', height=10)
g.fig.savefig('price_vs_coupon.png')

# Put the product descriptions in a list
ls = df_vip['goods_detail'].to_list()

# Replace everything that is not a Latin letter or Chinese character with a space
feature_points = [re.sub(r'[^a-zA-Z\u4E00-\u9FA5]+', ' ', str(feature)) for feature in ls]

# Load the stopword list
stop_words = list(pd.read_csv('./百度停用词表.txt', engine='python', encoding='utf-8', names=['stopwords'])['stopwords'])

feature_points2 = []
for feature in feature_points:  # each product description
    words = jieba.lcut(feature)  # precise mode (no overlapping segments): tokenize the description
    ind1 = np.array([len(word) > 1 for word in words])  # is each token longer than one character?
    ser1 = pd.Series(words)
    ser2 = ser1[ind1]  # keep tokens longer than one character
    ind2 = ~ser2.isin(stop_words)  # note the ~ (negation)
    ser3 = ser2[ind2].unique()  # drop stopwords and de-duplicate
    if len(ser3) > 0:
        feature_points2.append(list(ser3))

# Flatten all tokens into one list
wordlist = [word for feature in feature_points2 for word in feature]

# Join the tokens into one space-separated string
feature_str = ' '.join(wordlist)

# Word cloud of the description keywords
font_path = r'./simhei.ttf'
shoes_box_jpg = np.array(Image.open('./home.jpg'))  # mask image as a NumPy array

wc = wordcloud.WordCloud(
    background_color='black',
    mask=shoes_box_jpg,
    font_path=font_path,
    min_font_size=5,
    max_font_size=50,
    width=260,
    height=260,
)
wc.generate(feature_str)

plt.figure(figsize=(10, 8), dpi=100)
plt.imshow(wc)
plt.axis('off')
plt.savefig('description_keywords.png')
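The filtering inside that loop (drop one-character tokens, drop stopwords, de-duplicate within each description) does not need NumPy or pandas. A plain-Python sketch that takes already-segmented tokens as input (function names hypothetical); `collections.Counter` then gives a text ranking of the keywords to read alongside the word cloud:

```python
from collections import Counter


def filter_tokens(tokens, stop_words):
    """Keep tokens longer than one character that are not stopwords, first occurrence only."""
    kept = []
    seen = set()
    for word in tokens:
        if len(word) > 1 and word not in stop_words and word not in seen:
            seen.add(word)
            kept.append(word)
    return kept


def top_keywords(token_lists, stop_words, n=10):
    """Count how many descriptions mention each keyword and return the n most common."""
    counts = Counter()
    for tokens in token_lists:
        counts.update(filter_tokens(tokens, stop_words))
    return counts.most_common(n)
```

With the notebook's variables this would be `top_keywords((jieba.lcut(f) for f in feature_points), set(stop_words))`.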

# Low-stock products: rows whose stock label holds a number
df_vip_stock = df_vip[pd.to_numeric(df_vip['goods_stock'], errors='coerce') > 0].copy()

df_vip_stock

| | goods_title | goods_brandName | goods_stock | goods_salePrice | goods_couponPrice | goods_saleDiscount | goods_marketPrice | goods_detail | goods_coupon |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 中性款boost运动鞋透气缓震休闲时尚跑步鞋小黑鞋 | adidas | 3 | 473 | 473 | 4.4 | 1099 | 透气/耐磨,适中,专柜同款,系带 | 10.0 |
| 20 | RS-X TOYS 男女款拼接透气休闲运动鞋男鞋女鞋老爹鞋 | 彪马 | 1 | 222 | 222 | 2.3 | 999 | 运动鞋,平底,无,偏硬 | 25.0 |
| 45 | 春秋上新运动鞋气垫减震透气飞织跑步鞋休闲鞋男鞋 | 红蜻蜓 | 6 | 142 | 142 | 2.4 | 599 | 跑步鞋,平底,无,适中 | 7.0 |
| 111 | 新款百搭网面鞋时尚跑步鞋简约休闲鞋潮流男鞋透气网面鞋老爹鞋男 | I'm David | 5 | 93 | 93 | 1.6 | 599 | 圆头,系带,防滑/耐磨/透气,低帮 | 5.0 |
| 134 | 【透气飞织】21夏季新款透气运动鞋男百搭轻便跑步鞋男鞋椰子鞋 | CLAW MONEY | 9 | 139 | 139 | 2.7 | 519 | 休闲鞋/跑步鞋/运动鞋,平底,无,适中 | 10.0 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 1024 | 新品男士百搭休闲布鞋套脚男鞋时尚简约运动休闲鞋男板鞋 | 鳄鱼恤 | 6 | 134 | 134 | 1.1 | 1280 | 圆头,套脚,休闲,耐磨 | 7.0 |
| 1031 | 夏季男鞋透气网面男士休闲鞋时尚运动鞋男老爹鞋男舒适跑步鞋子男 | 马克华菲 | 9 | 284 | 284 | 2.6 | 1095 | 老爹鞋/休闲鞋/跑步鞋/网鞋/运动鞋,平底,无,适中 | 15.0 |
| 1041 | 【潮流热销】小白鞋男潮流百搭鞋子男运动鞋男板鞋男休闲鞋男鞋 | 欧利萨斯 | 3 | 48 | 48 | 1.5 | 329 | 圆头,系带,低帮,小白鞋 | 3.0 |
| 1045 | 头层牛皮休闲鞋低帮拉链板鞋男女潮鞋情侣款休闲鞋 防滑耐磨 | TWEAK | 2 | 73 | 73 | 1.7 | 439 | 帆布鞋,平底,无,适中 | 5.0 |
| 1054 | 零度男鞋男士时尚格纹经典百搭运动休闲鞋板鞋 | ZERO | 2 | 539 | 539 | 4.2 | 1299 | 圆头,系带,简约风/运动风/休闲,防滑/透气/吸汗/轻便 | 30.0 |

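This article walked through scraping product data from Vipshop (唯品会): environment setup, problem analysis and the crawler implementation, followed by some basic processing and analysis of the scraped data.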
