Cloud Computing Course Project (monitoring a Windows server with Prometheus + Grafana, monitoring a log system with Ganglia + Flume, aggregating MySQL data with Flume)

Preparation

- Prometheus download: https://github.com/prometheus/prometheus/releases/download/v2.52.0-rc.1/prometheus-2.52.0-rc.1.windows-amd64.zip
- Grafana download: https://dl.grafana.com/enterprise/release/grafana-enterprise-10.4.2.windows-amd64.zip
- windows_exporter download: https://github.com/prometheus-community/windows_exporter/releases/download/v0.25.1/windows_exporter-0.25.1-amd64.msi
- Flume download: https://archive.apache.org/dist/flume/1.7.0/apache-flume-1.7.0-bin.tar.gz
- JDK (Linux) download: https://cola-yunos-1305721388.cos.ap-guangzhou.myqcloud.com/20210813/jdk-8u401-linux-x64.tar.gz
- MySQL downloads:
  - https://downloads.mysql.com/archives/get/p/23/file/mysql-community-server-5.7.26-1.el7.x86_64.rpm
  - https://downloads.mysql.com/archives/get/p/23/file/mysql-community-client-5.7.26-1.el7.x86_64.rpm
  - https://downloads.mysql.com/archives/get/p/23/file/mysql-community-common-5.7.26-1.el7.x86_64.rpm
  - https://downloads.mysql.com/archives/get/p/23/file/mysql-community-libs-5.7.26-1.el7.x86_64.rpm
- MySQL JDBC driver download: https://downloads.mysql.com/archives/get/p/3/file/mysql-connector-java-5.1.16.tar.gz
- IntelliJ IDEA download: https://www.jetbrains.com/zh-cn/idea/download/download-thanks.html?platform=windows
- Maven download: https://dlcdn.apache.org/maven/maven-3/3.8.8/binaries/apache-maven-3.8.8-bin.zip
- JDK (Windows) download: https://cola-yunos-1305721388.cos.ap-guangzhou.myqcloud.com/20210813/jdk-8u411-windows-x64.exe

监视面板搭建

-

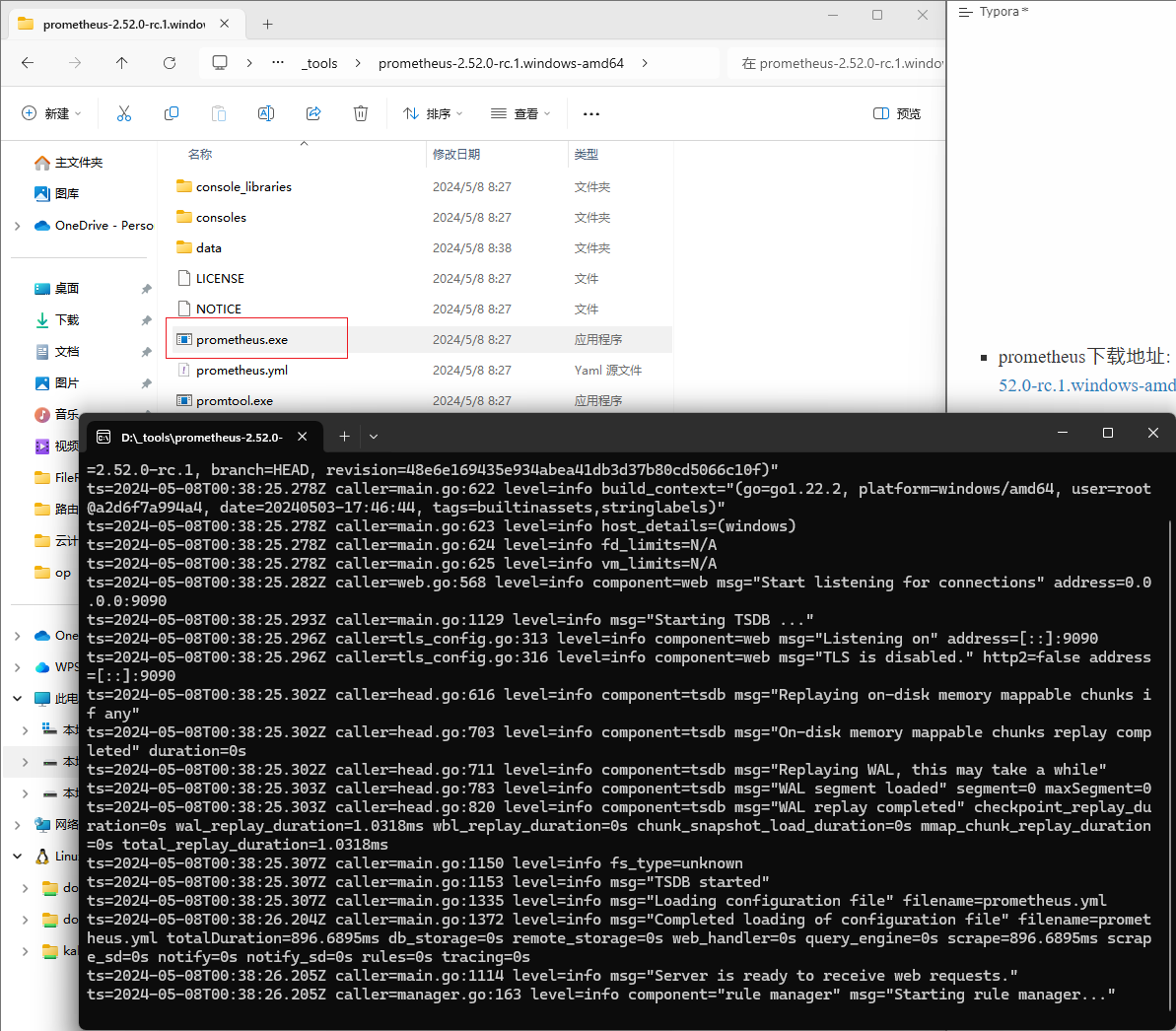

解压Prometheus,运行prometheus.exe

-

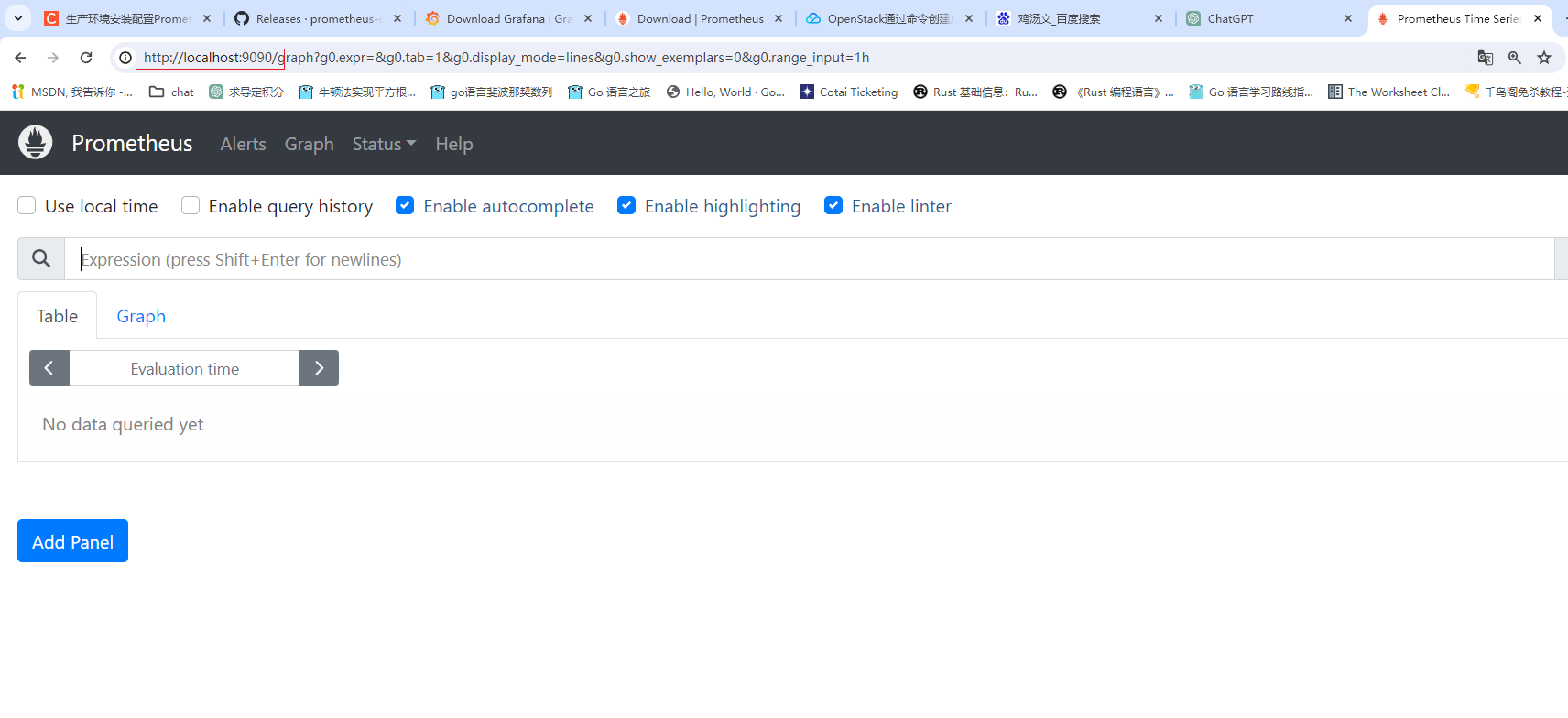

打开网址

localhost:9090

-

配置windows服务器监视

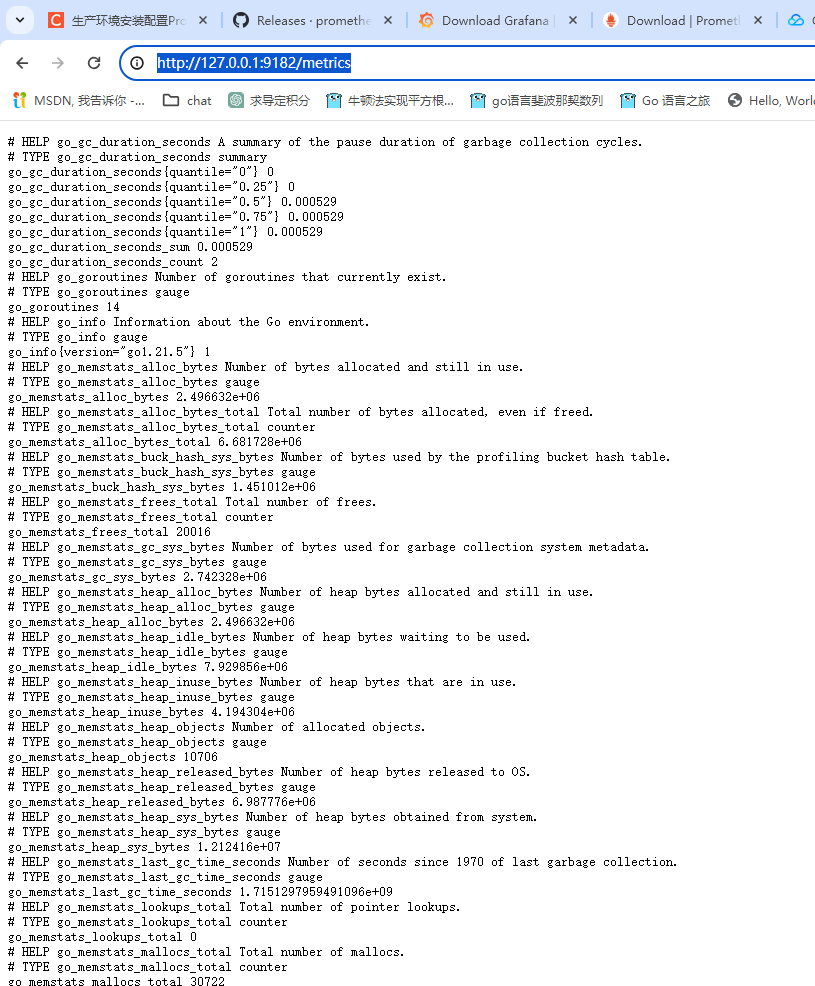

双击运行windows_exporter-0.25.1-amd64.msi,在浏览器进入以下地址

http://127.0.0.1:9182/metrics出现如下页面即为运行成功

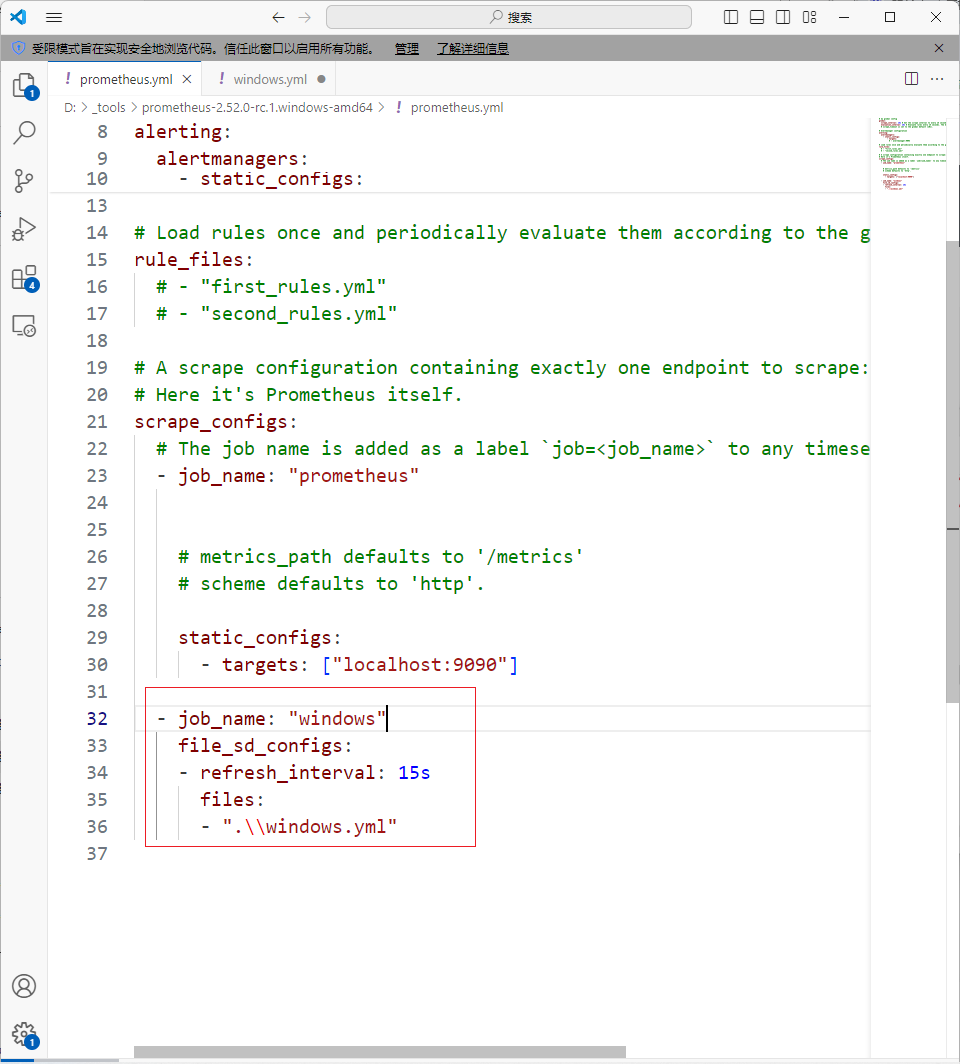

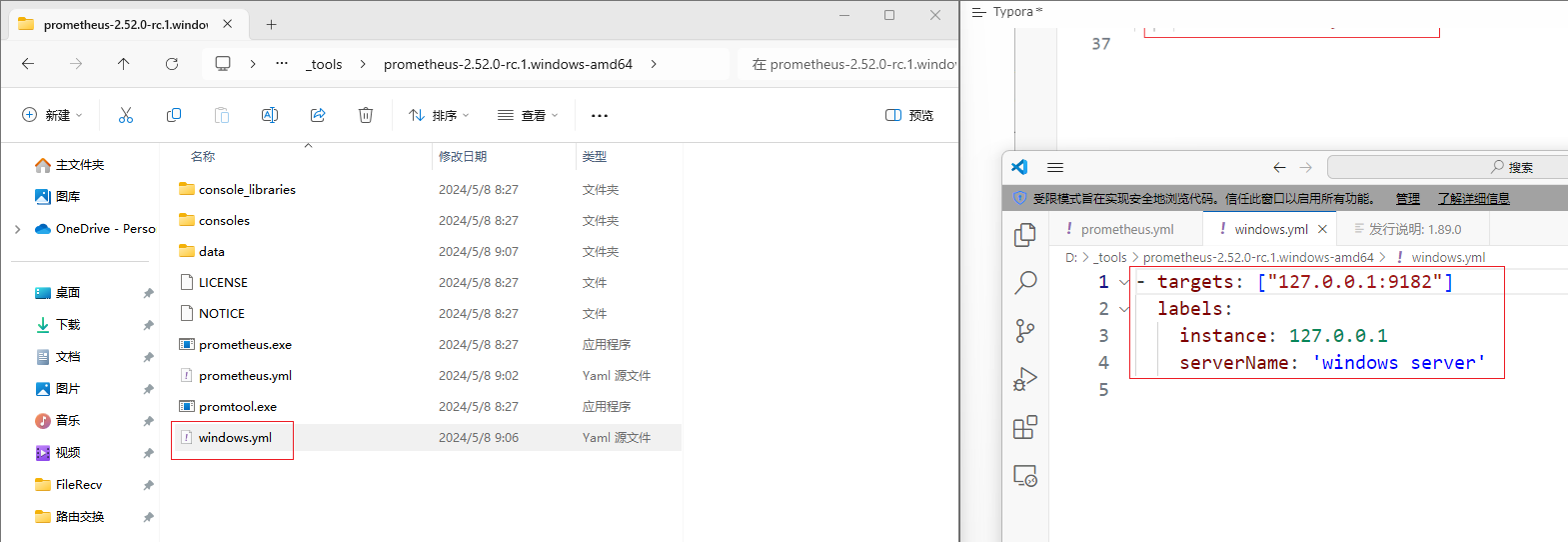
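The /metrics page is plain text in the Prometheus exposition format: comment lines start with `#`, and every other line is a series name (optionally with labels in braces) followed by a value. As a rough sketch of how that output could be read programmatically, the class below parses such text into a map. The class name `MetricsTextParser` and the `demo_*` metric names are made up for illustration and are not taken from windows_exporter.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: parses Prometheus text-format samples into a map. */
final class MetricsTextParser {

    /** Returns each series (name plus labels) mapped to its sample value. */
    static Map<String, Double> parse(String body) {
        Map<String, Double> samples = new LinkedHashMap<>();
        for (String raw : body.split("\n")) {
            String line = raw.trim();
            // Skip blank lines and "# HELP" / "# TYPE" comment lines.
            if (line.isEmpty() || line.startsWith("#")) {
                continue;
            }
            // The series ends at the closing brace when labels are present,
            // otherwise at the first space.
            int brace = line.lastIndexOf('}');
            int end = brace >= 0 ? brace + 1 : line.indexOf(' ');
            if (end <= 0 || end >= line.length()) {
                continue; // not a "series value" line
            }
            String series = line.substring(0, end);
            // The value is the first token after the series; an optional
            // timestamp may follow and is ignored here.
            String[] rest = line.substring(end).trim().split("\\s+");
            if (rest[0].isEmpty()) {
                continue;
            }
            samples.put(series, toDouble(rest[0]));
        }
        return samples;
    }

    /** The text format writes infinities as +Inf / -Inf, which Java does not parse. */
    private static double toDouble(String token) {
        switch (token) {
            case "+Inf":
            case "Inf":
                return Double.POSITIVE_INFINITY;
            case "-Inf":
                return Double.NEGATIVE_INFINITY;
            default:
                return Double.parseDouble(token);
        }
    }

    public static void main(String[] args) {
        // Made-up sample input, not real exporter output.
        String sample = "# TYPE demo_up gauge\n"
                + "demo_up 1\n"
                + "demo_temperature{core=\"0 1\"} 42.5 1700000000000\n";
        System.out.println(parse(sample));
    }
}
```

In practice Prometheus does this parsing itself when it scrapes the exporter; the sketch is only meant to make the page's format easier to read.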

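Edit prometheus.yml (it is in the extracted Prometheus directory) and add the following job under scrape_configs.

Important: YAML is indentation-sensitive, so copy the indentation exactly!

      - job_name: "windows"
        file_sd_configs:
          - refresh_interval: 15s
            files:
              - ".\\windows.yml"

Create windows.yml in the same directory:

    - targets: ["127.0.0.1:9182"]
      labels:
        instance: 127.0.0.1
        serverName: 'windows server'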

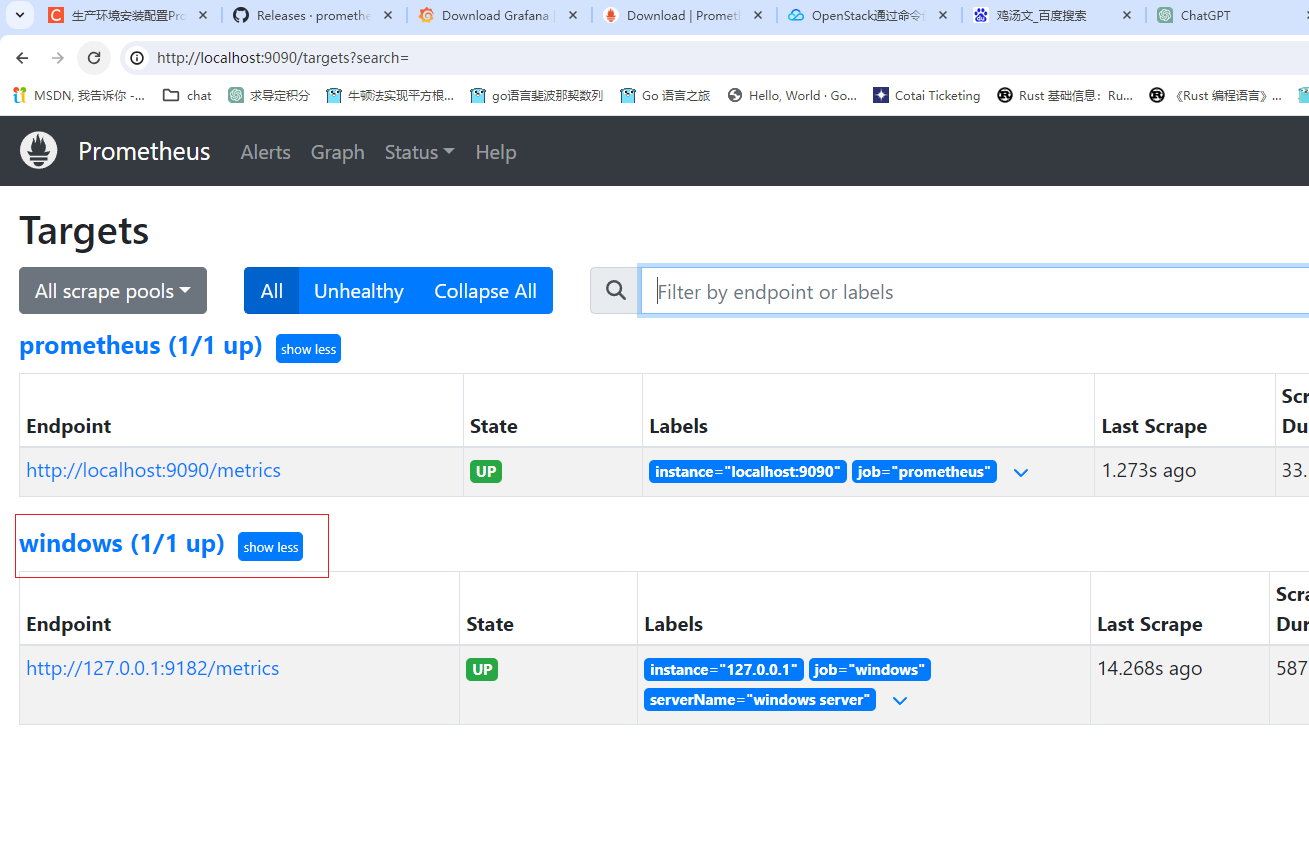

Restart Prometheus (close the console window, then double-click prometheus.exe again).

Open the following address in a browser:

http://localhost:9090/targets

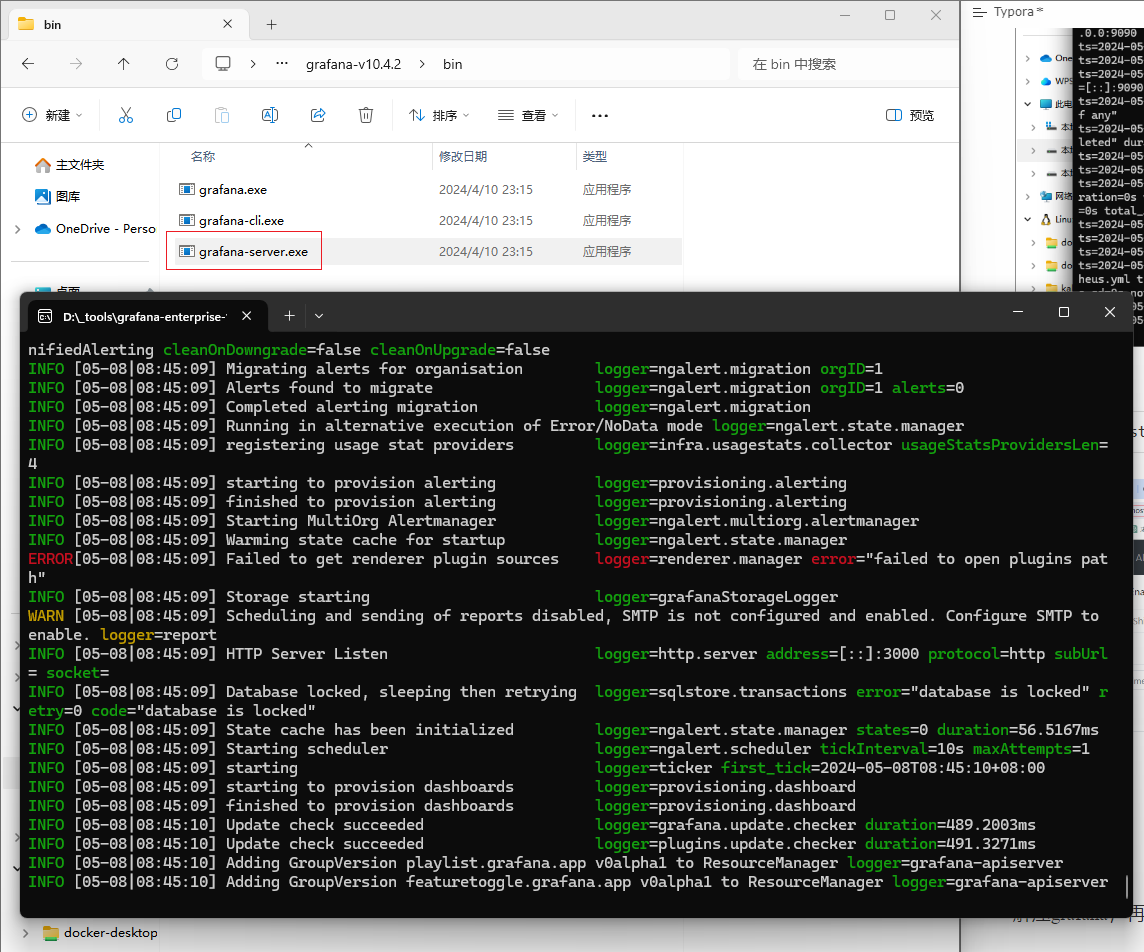

Extract the Grafana archive and run grafana-server.exe from the bin directory.

Open the following address; the default username and password are both admin.

http://127.0.0.1:3000
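At this point three services should be listening on the Windows machine: windows_exporter on 9182, Prometheus on 9090 and Grafana on 3000. If one of the pages does not load, a quick TCP check shows whether the process is listening at all. The following is a minimal sketch; `PortCheck` is a hypothetical helper name, and the ports are the ones used in the steps above.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical helper: reports whether a TCP port accepts connections. */
final class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unreachable.
            return false;
        }
    }

    public static void main(String[] args) {
        String[] names = {"windows_exporter", "Prometheus", "Grafana"};
        int[] ports = {9182, 9090, 3000};
        for (int i = 0; i < ports.length; i++) {
            boolean open = isOpen("127.0.0.1", ports[i], 1000);
            System.out.println(names[i] + " (" + ports[i] + "): " + (open ? "open" : "closed"));
        }
    }
}
```

An open port only shows that something is listening; the browser pages are still the real confirmation that each service works.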

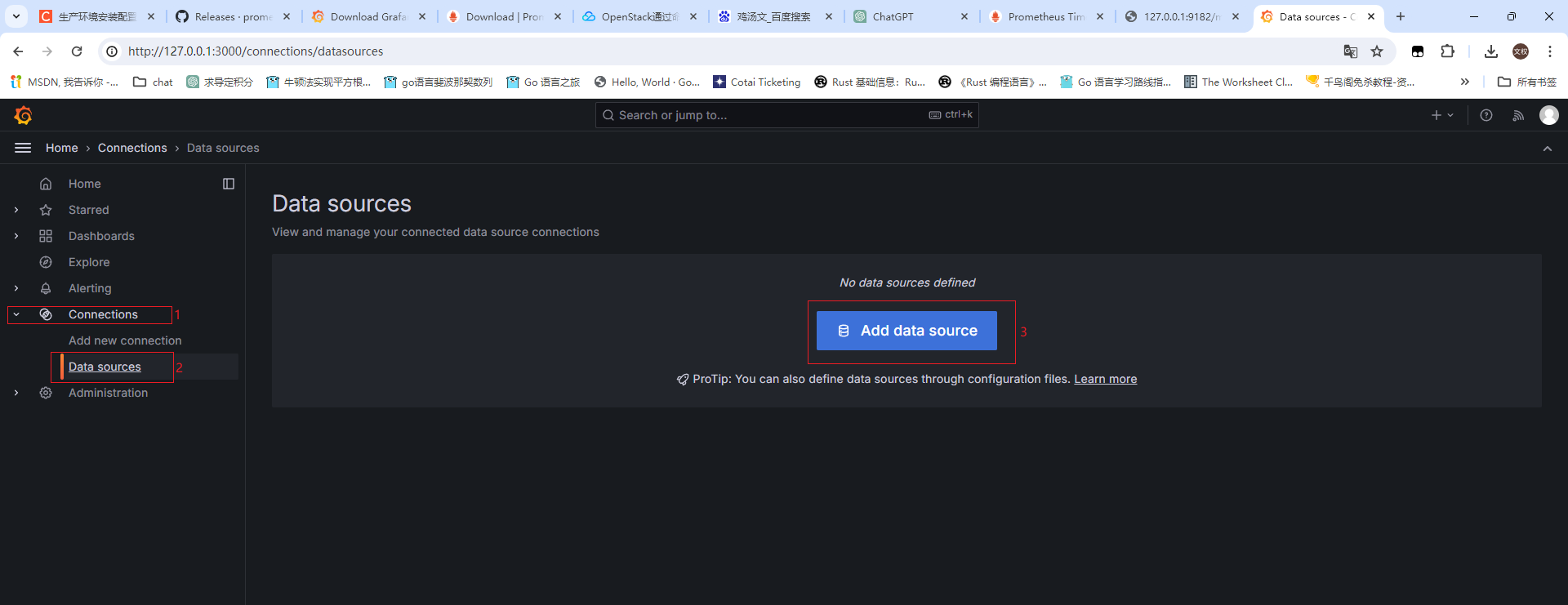

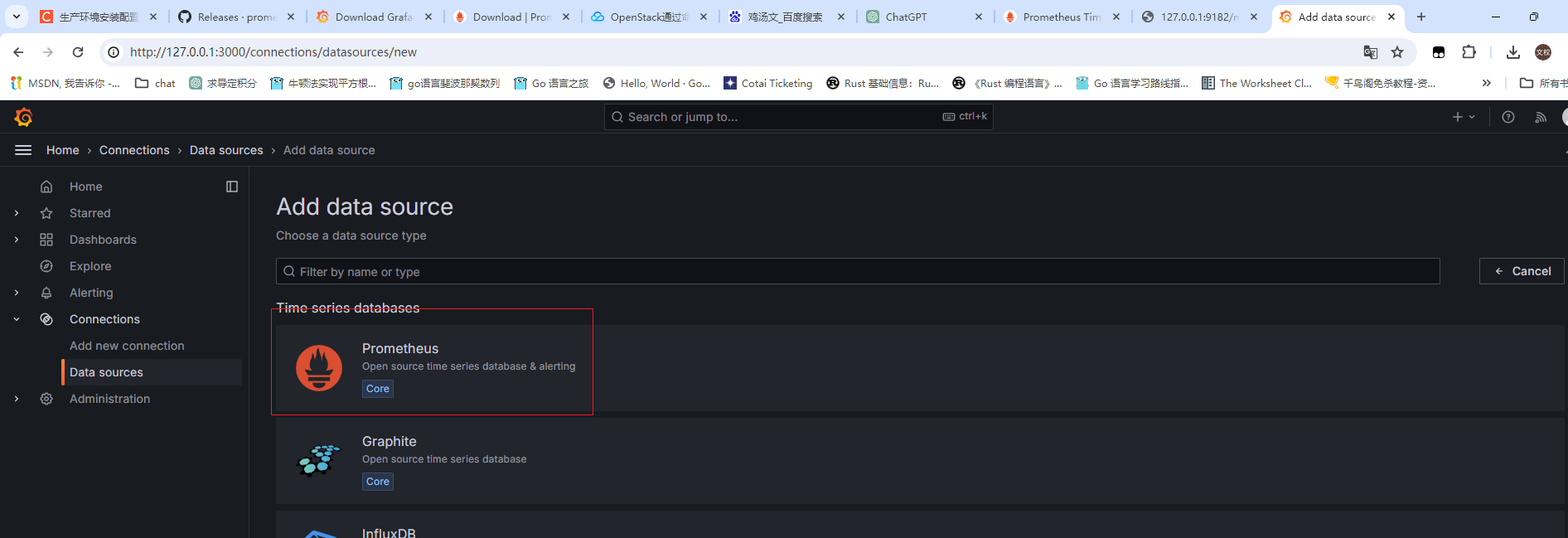

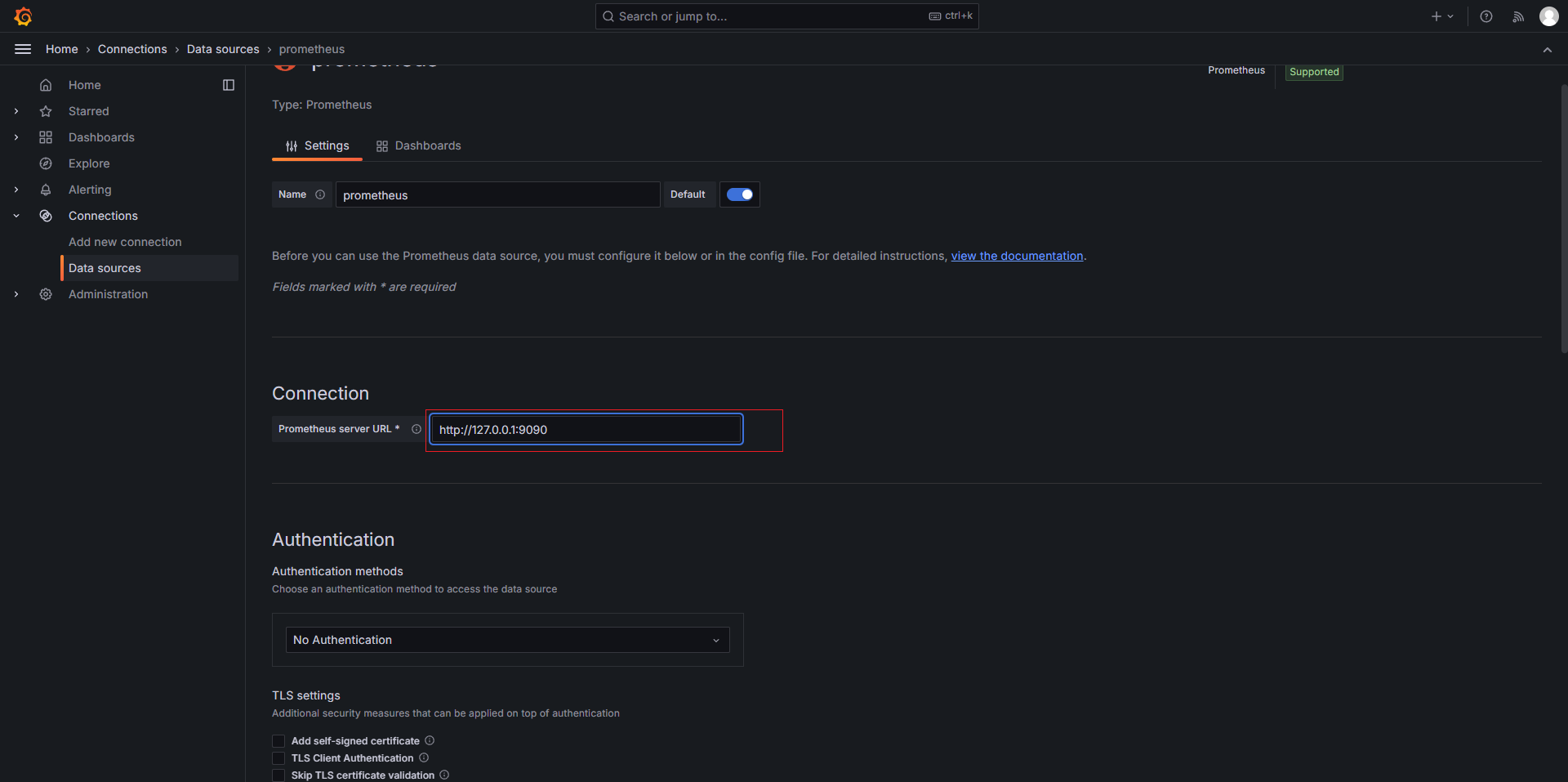

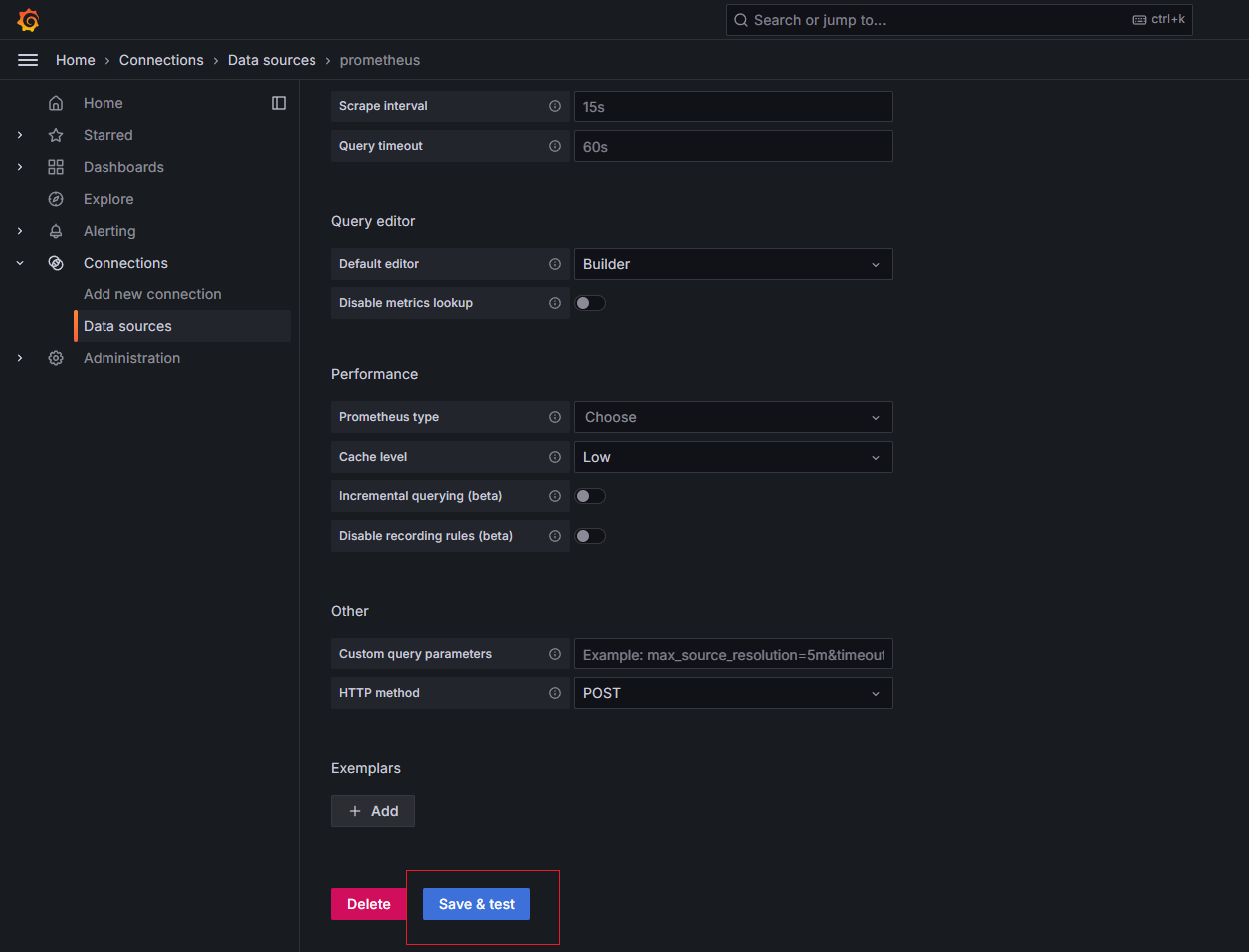

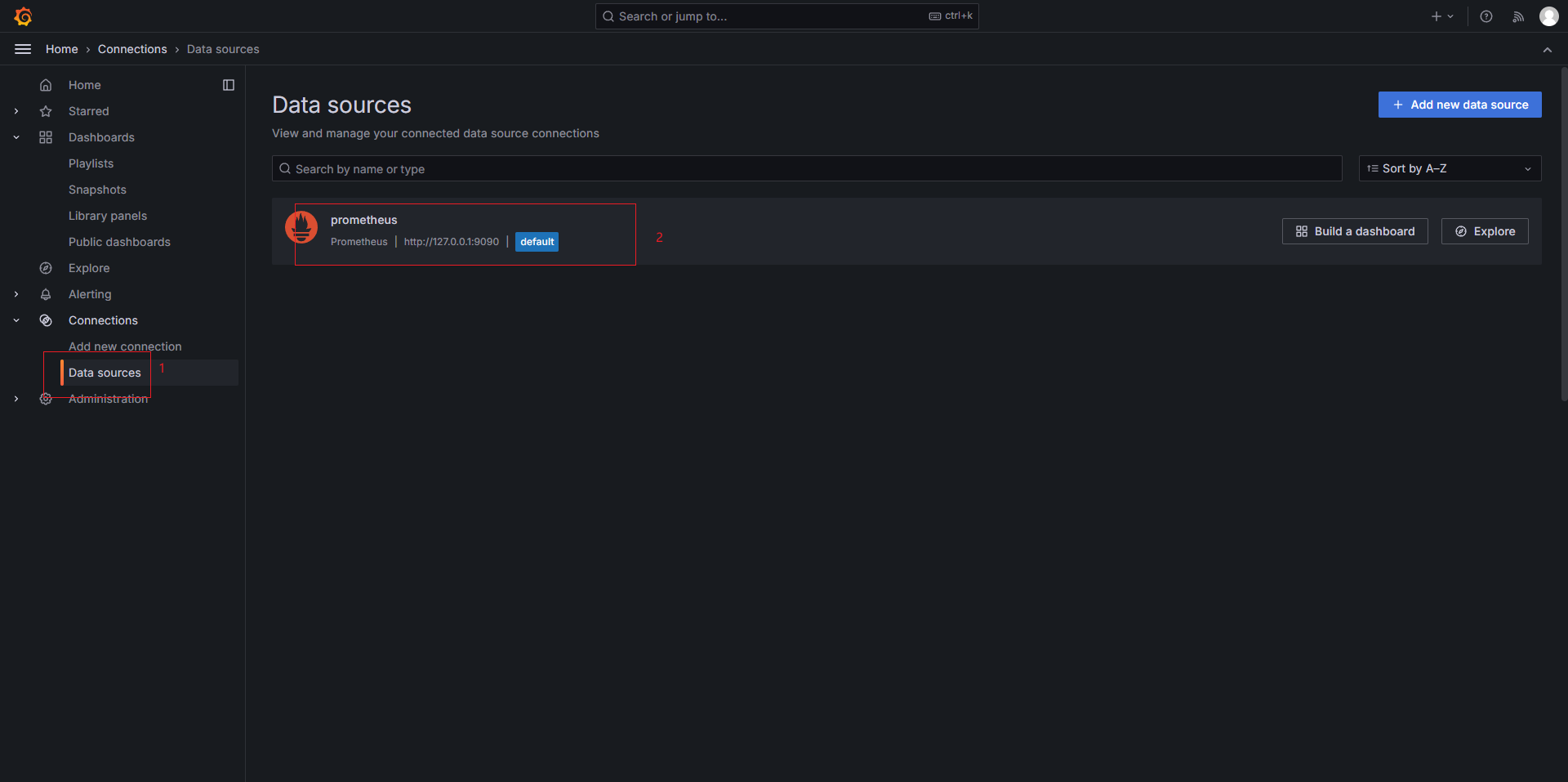

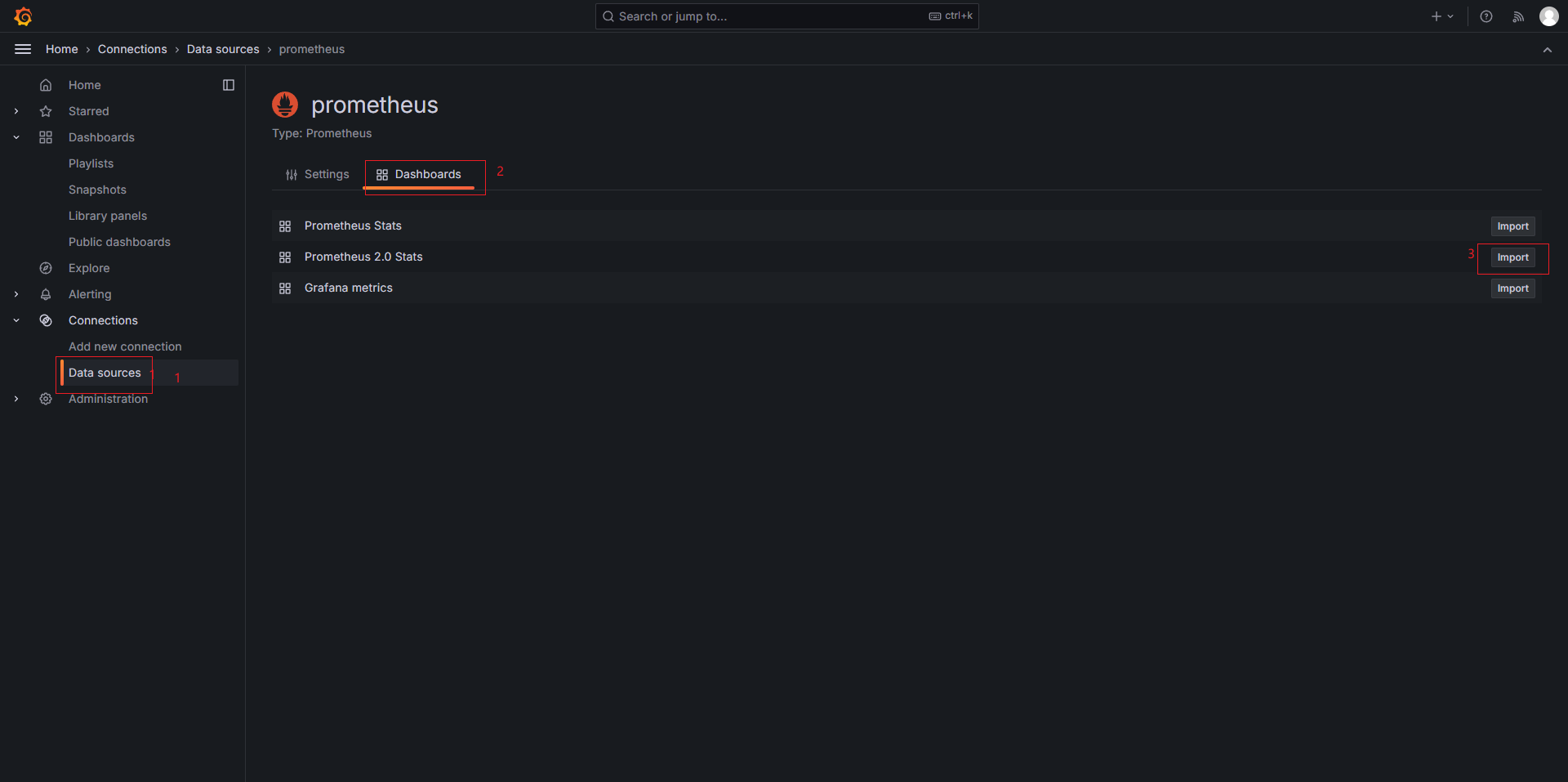

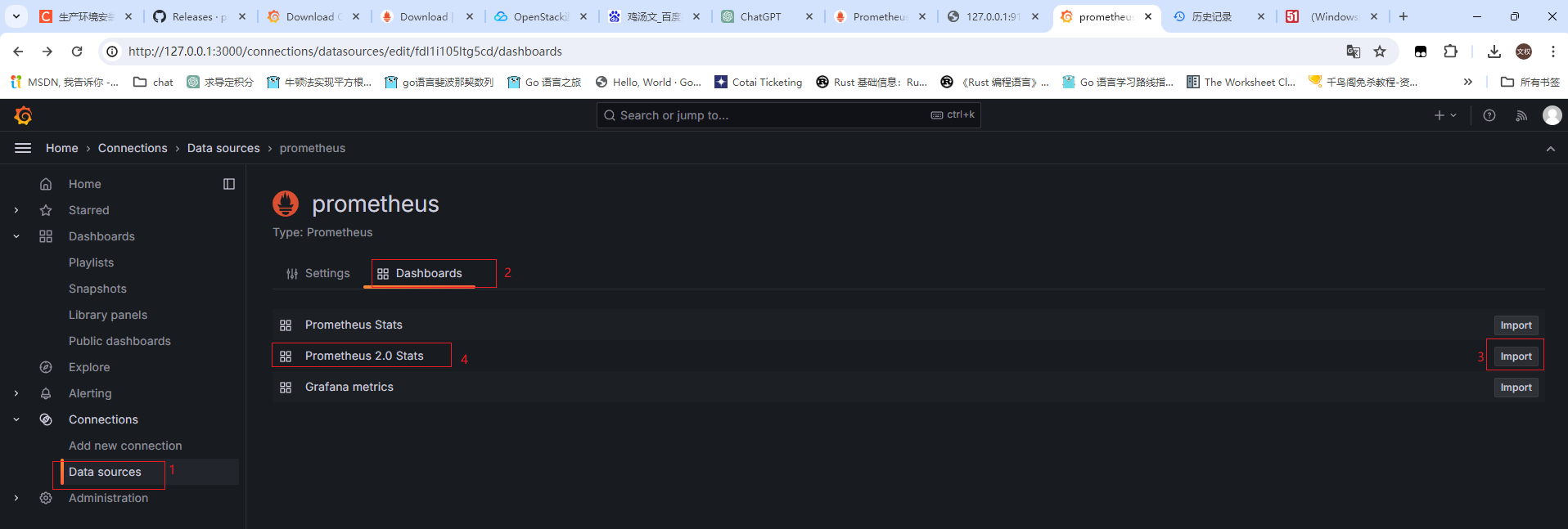

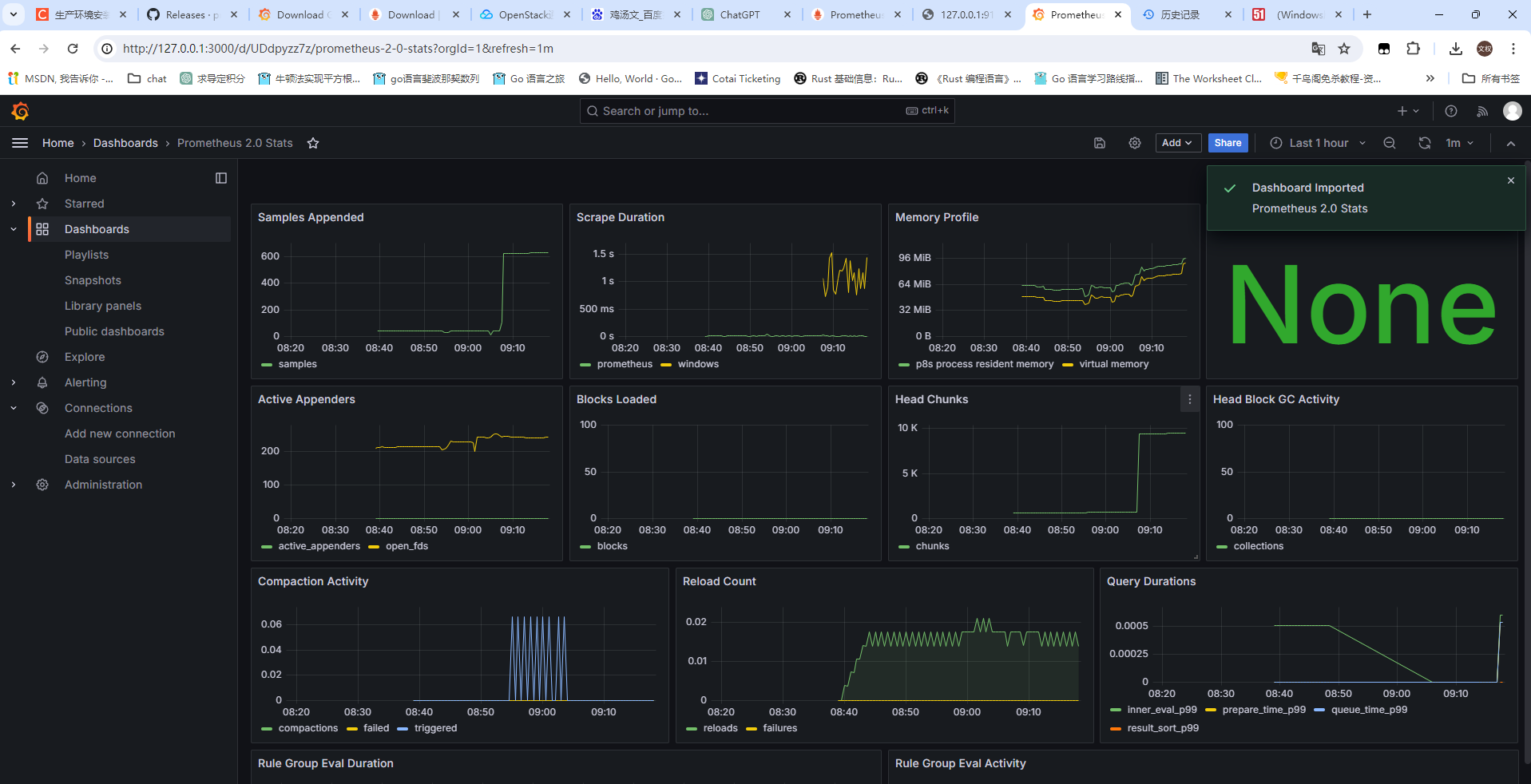

Add Prometheus as a data source.

If a page like the one shown in the screenshots appears, the dashboard is set up.

Flume日志聚集搭建

-

打开一台centos7虚拟机

-

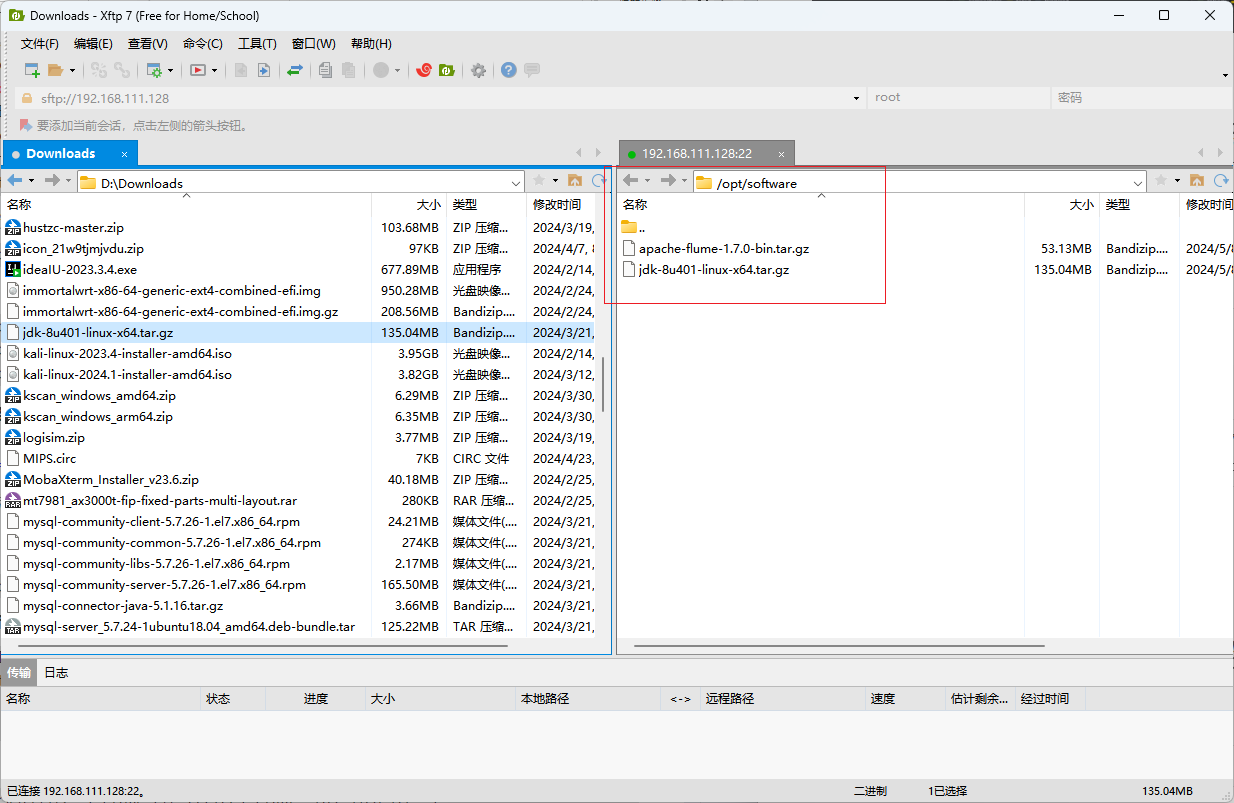

使用xtfp上传flume、JDK安装包

mkdir /opt/software && cd /opt/software && mkdir /opt/module

-

解压

tar -zxvf /opt/software/apache-flume-1.7.0-bin.tar.gz -C /opt/module/ && mv /opt/module/apache-flume-1.7.0-bin/ /opt/module/flumetar -zxvf jdk-8u401-linux-x64.tar.gz -C /opt/module/ && mv /opt/module/jdk1.8.0_401/ /opt/module/jdk -

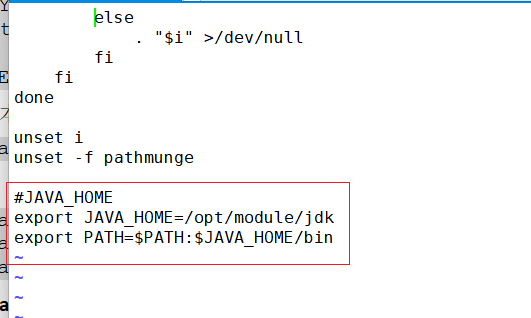

Configure the environment variables:

    vi /etc/profile

Append the following lines:

    #JAVA_HOME
    export JAVA_HOME=/opt/module/jdk
    export PATH=$PATH:$JAVA_HOME/bin

Reload the environment variables:

    source /etc/profile

Enable the Flume configuration file:

    mv /opt/module/flume/conf/flume-env.sh.template /opt/module/flume/conf/flume-env.sh

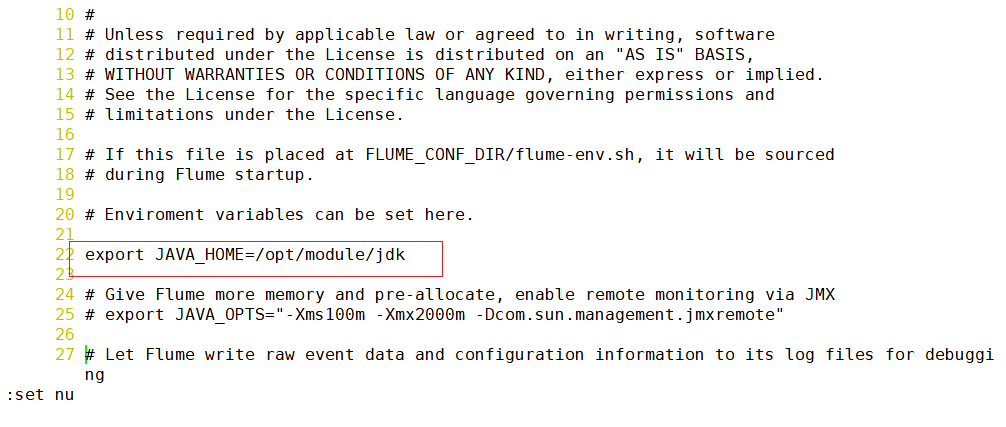

Edit the configuration file:

    vi /opt/module/flume/conf/flume-env.sh

Tip: in vi command mode, type :set nu to show line numbers.

Setting up Ganglia monitoring

Install httpd and PHP:

    yum -y install httpd php
    yum -y install rrdtool perl-rrdtool rrdtool-devel
    yum -y install apr-devel

Install Ganglia:

    yum install -y epel-release
    yum -y install ganglia-gmetad
    yum -y install ganglia-web
    yum install -y ganglia-gmond

Install telnet:

    yum install telnet -y

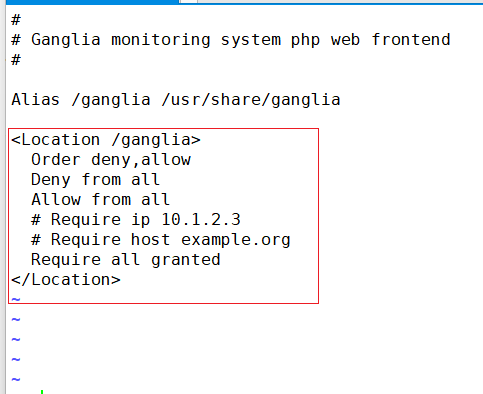

Edit the Ganglia web configuration:

    vi /etc/httpd/conf.d/ganglia.conf

The file should read:

    # Ganglia monitoring system php web frontend
    #
    Alias /ganglia /usr/share/ganglia
    <Location /ganglia>
      Order deny,allow
      Deny from all
      Allow from all
      # Require ip 10.1.2.3
      # Require host example.org
      Require all granted
    </Location>

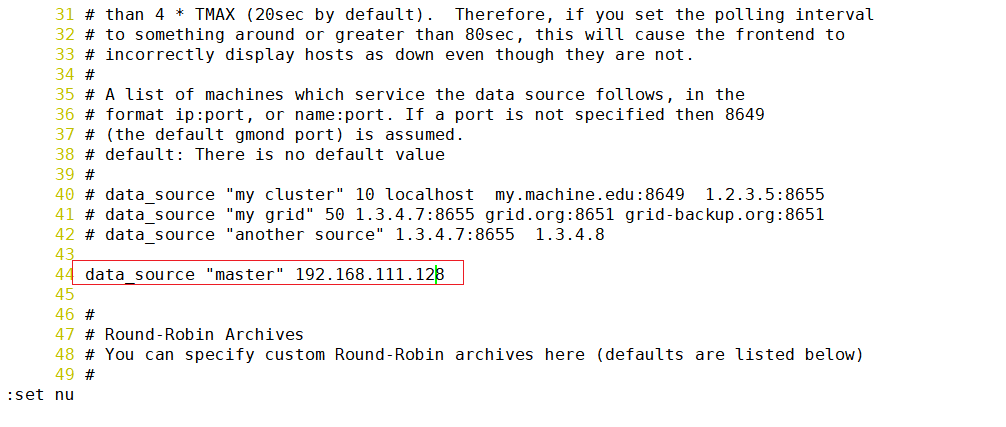

修改配置文件

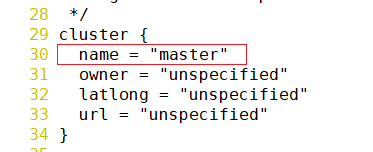

vi /etc/ganglia/gmetad.conf

-

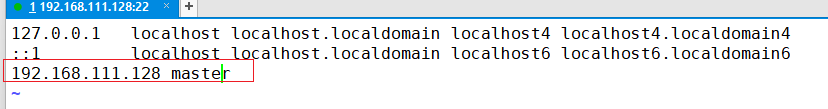

修改hosts文件

vi /etc/hosts

-

修改配置文件

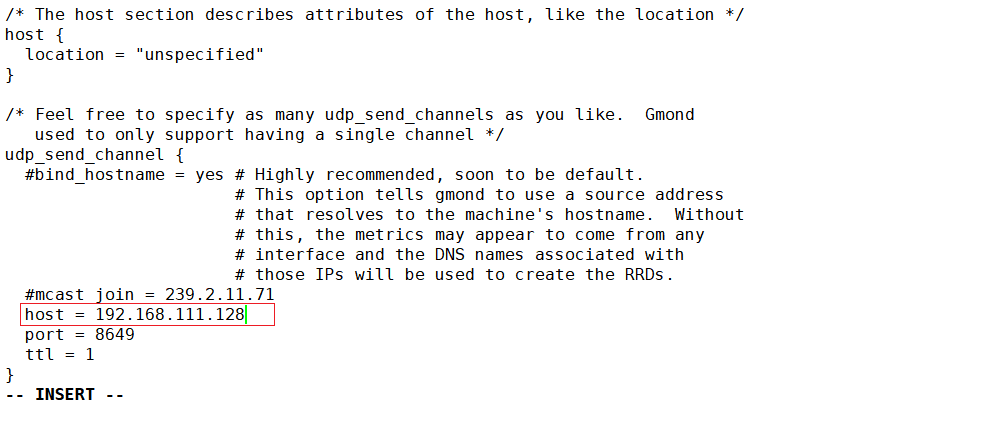

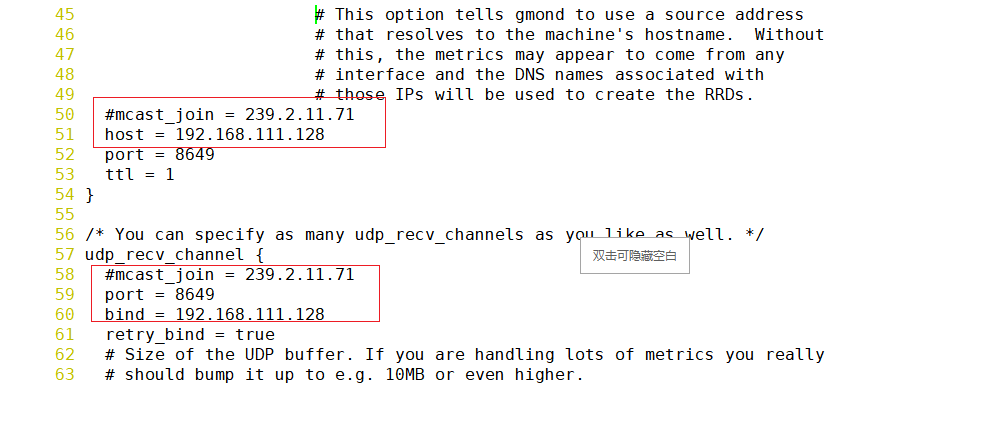

vi /etc/ganglia/gmond.conf

尖叫提示! 这里一定不要漏掉注释!!(也就是井号)

-

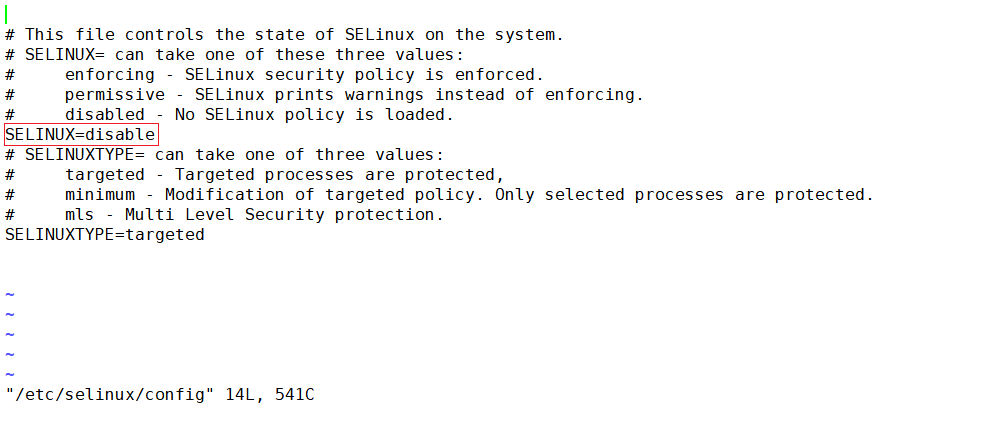

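Disable SELinux (set SELINUX=disabled in the file):

    vi /etc/selinux/config

Enable the services at boot:

    systemctl enable httpd && systemctl enable gmetad && systemctl enable gmond

Stop and disable the firewall:

    systemctl stop firewalld && systemctl disable firewalld

Grant permissions on the Ganglia data directory:

    chmod -R 777 /var/lib/ganglia

Reboot the virtual machine:

    init 6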

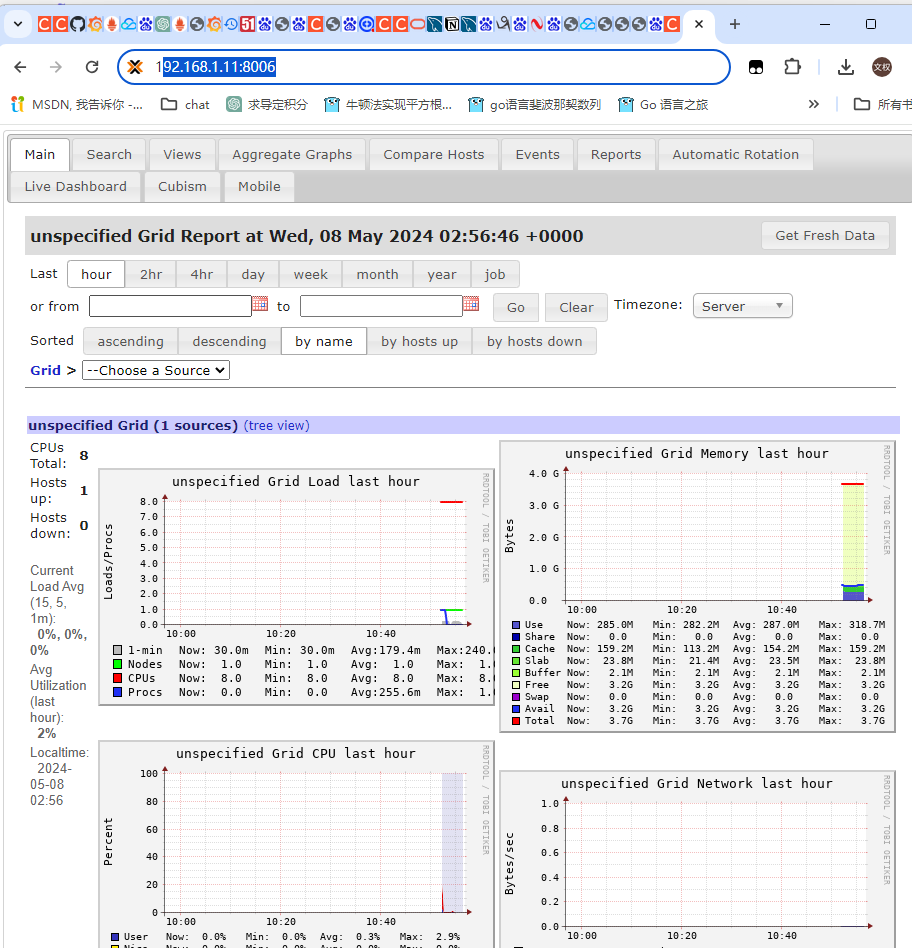

Open the Ganglia web page:

http://192.168.111.128/ganglia/

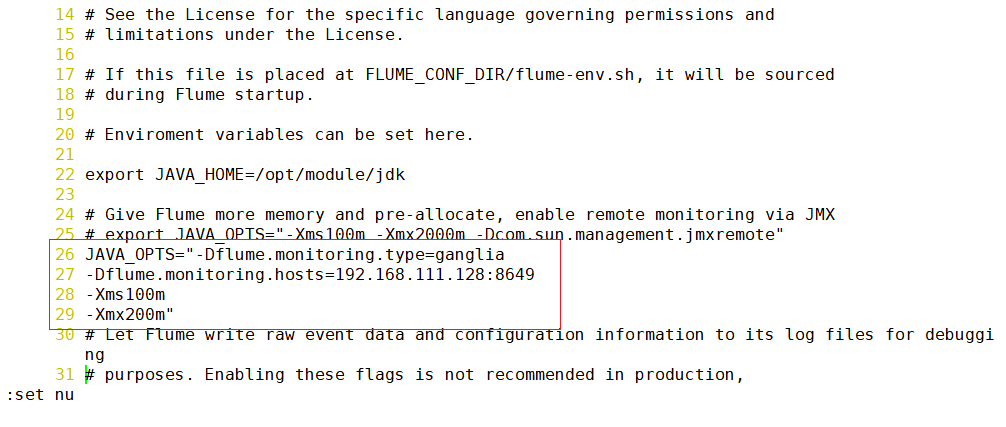

Edit the Flume environment file:

    vi /opt/module/flume/conf/flume-env.sh

Set JAVA_OPTS so that Flume reports its metrics to Ganglia:

    JAVA_OPTS="-Dflume.monitoring.type=ganglia -Dflume.monitoring.hosts=192.168.111.128:8649 -Xms100m -Xmx200m"

Create the job file:

    mkdir /opt/module/flume/job
    vi /opt/module/flume/job/flume-telnet-logger.conf

with the following content:

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = netcat
    a1.sources.r1.bind = localhost
    a1.sources.r1.port = 44444

    # Describe the sink
    a1.sinks.k1.type = logger

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
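A frequent mistake in a job file like this is a mismatch in the last section: a source or sink bound to a channel name that was never declared. Because the file uses Java properties syntax, the wiring can be checked with a few lines of code. The sketch below is only an illustration of that idea; `FlumeConfCheck` is a hypothetical helper and not part of Flume, and it checks only the channel bindings, nothing else.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Properties;
import java.util.Set;

/** Hypothetical helper: checks that sources and sinks use declared channels. */
final class FlumeConfCheck {

    /** Returns a list of wiring problems for the given agent (empty if none found). */
    static List<String> findProblems(String confText, String agent) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(confText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> problems = new ArrayList<>();
        Set<String> channels = new HashSet<>(names(props, agent + ".channels"));
        if (channels.isEmpty()) {
            problems.add("no channels declared for agent " + agent);
        }
        // A source may feed several channels (property "channels", plural).
        for (String source : names(props, agent + ".sources")) {
            List<String> bound = names(props, agent + ".sources." + source + ".channels");
            if (bound.isEmpty()) {
                problems.add("source " + source + " is not bound to any channel");
            }
            for (String channel : bound) {
                if (!channels.contains(channel)) {
                    problems.add("source " + source + " uses undeclared channel " + channel);
                }
            }
        }
        // A sink drains exactly one channel (property "channel", singular).
        for (String sink : names(props, agent + ".sinks")) {
            String channel = props.getProperty(agent + ".sinks." + sink + ".channel", "").trim();
            if (!channels.contains(channel)) {
                problems.add("sink " + sink + " uses undeclared channel '" + channel + "'");
            }
        }
        return problems;
    }

    /** Splits a whitespace-separated list of component names. */
    private static List<String> names(Properties props, String key) {
        String value = props.getProperty(key, "").trim();
        return value.isEmpty() ? new ArrayList<String>() : Arrays.asList(value.split("\\s+"));
    }

    public static void main(String[] args) {
        String conf = "a1.sources = r1\na1.sinks = k1\na1.channels = c1\n"
                + "a1.sources.r1.channels = c1\na1.sinks.k1.channel = c1\n";
        System.out.println(findProblems(conf, "a1"));
    }
}
```

Note the asymmetry the check relies on: the source property is `channels` (plural) while the sink property is `channel` (singular), exactly as in the job file above.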

Start the Flume job:

    cd /opt/module/flume && /opt/module/flume/bin/flume-ng agent --conf conf/ --name a1 --conf-file job/flume-telnet-logger.conf -Dflume.root.logger=INFO,console -Dflume.monitoring.type=ganglia -Dflume.monitoring.hosts=192.168.111.128:8649

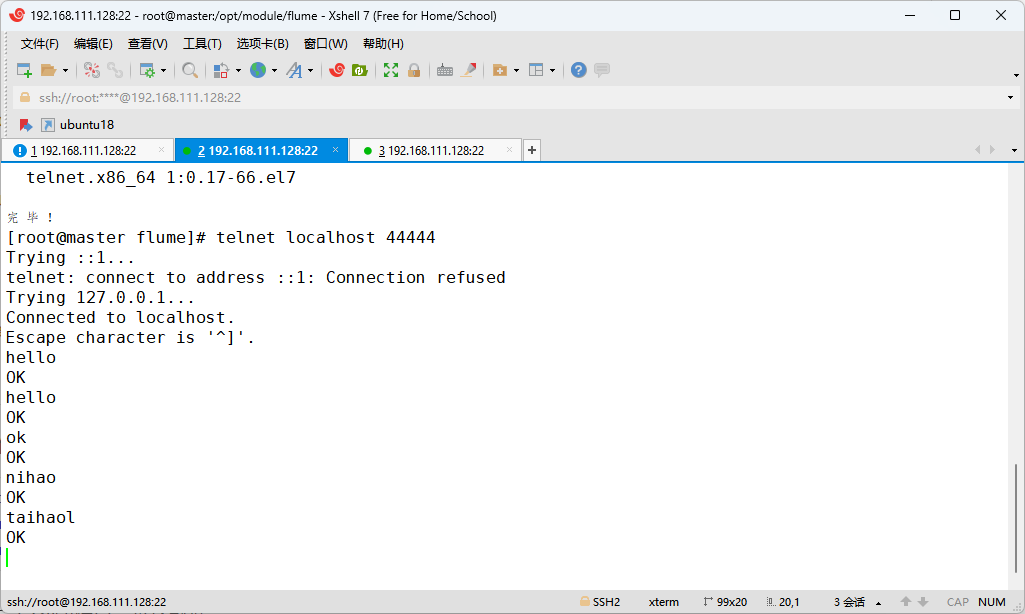

Open a new Xshell session and send some data:

    telnet localhost 44444

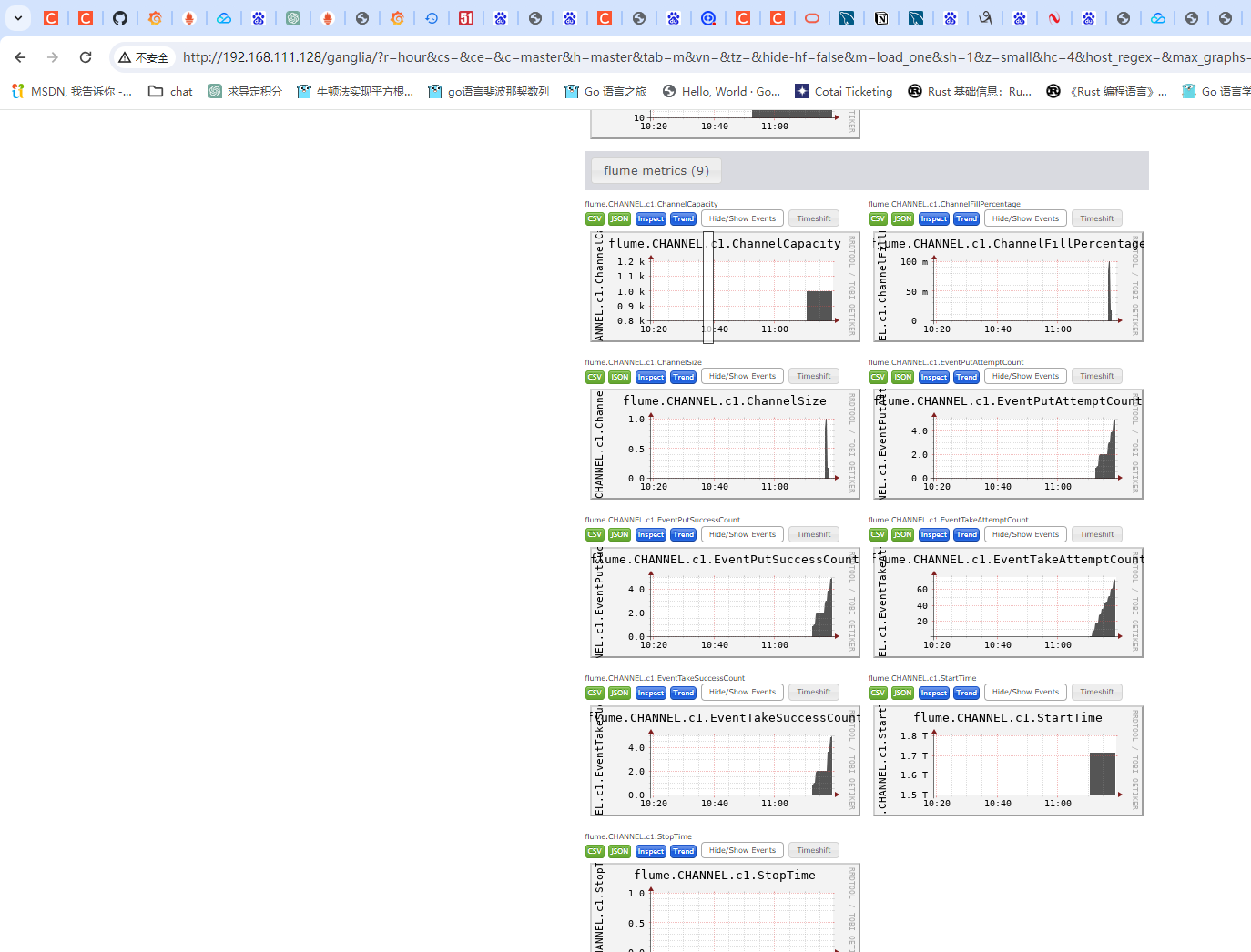
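If telnet is not available, the same test can be done from code: the netcat source simply reads newline-terminated text from a TCP connection. The sketch below sends one line and returns the first line of the reply; with its default settings the netcat source is expected to acknowledge each event with `OK`, but treat that as an assumption to verify against your Flume version. `NetcatSend` is a hypothetical class name.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: sends one line to a line-oriented TCP service. */
final class NetcatSend {

    /** Sends a single line and returns the first line of the reply (null if none). */
    static String send(String host, int port, String line) {
        try (Socket socket = new Socket(host, port)) {
            socket.setSoTimeout(3000); // do not wait forever for the reply
            OutputStream out = socket.getOutputStream();
            out.write((line + "\n").getBytes(StandardCharsets.UTF_8));
            out.flush();
            BufferedReader in = new BufferedReader(
                    new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8));
            return in.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        if (args.length == 0) {
            System.out.println("usage: java NetcatSend <message>");
            return;
        }
        // Host and port match a1.sources.r1.bind / a1.sources.r1.port in the job file.
        System.out.println(send("localhost", 44444, args[0]));
    }
}
```

Each message sent this way should appear in the console of the running agent, since the sink type is `logger`.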

Check the monitoring data on the web page:

http://192.168.111.128/ganglia/?r=hour&cs=&ce=&c=master&h=master&tab=m&vn=&tz=&hide-hf=false&m=load_one&sh=1&z=small&hc=4&host_regex=&max_graphs=0&s=by+name

Flume log aggregation (MySQL)

Installing MySQL

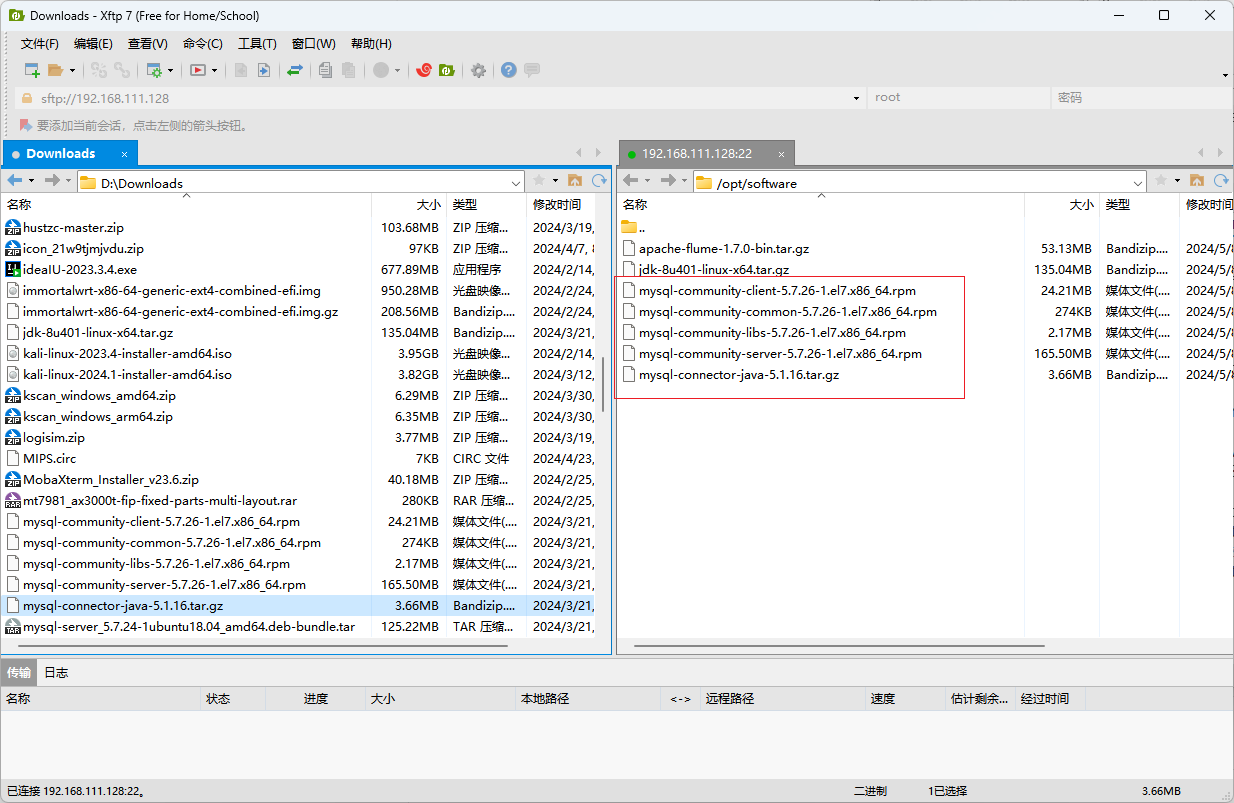

Upload the MySQL packages to /opt/software with Xftp.

Remove the mariadb dependency:

    rpm -e mariadb-libs-5.5.68-1.el7.x86_64 --nodeps

Install the MySQL packages:

    cd /opt/software
    rpm -ivh mysql-community-common-5.7.26-1.el7.x86_64.rpm --nodeps
    rpm -ivh mysql-community-libs-5.7.26-1.el7.x86_64.rpm --nodeps
    rpm -ivh mysql-community-client-5.7.26-1.el7.x86_64.rpm
    rpm -ivh mysql-community-server-5.7.26-1.el7.x86_64.rpm --nodeps

Start the MySQL service:

    systemctl start mysqld

Look up the temporary root password:

    grep "password" /var/log/mysqld.log
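The line that grep finds has the form `... [Note] A temporary password is generated for root@localhost: <password>`, and the password is everything after the final colon and space. As a small sketch of pulling it out programmatically, the class below uses a regular expression; `TempPassword` is a hypothetical name, and the sample log line in `main` is made up.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: extracts the MySQL 5.7 temporary root password from log text. */
final class TempPassword {

    private static final Pattern LINE = Pattern.compile(
            "A temporary password is generated for root@localhost: (\\S+)");

    /** Returns the most recent temporary password found in the log text, if any. */
    static Optional<String> find(String logText) {
        String found = null;
        Matcher matcher = LINE.matcher(logText);
        while (matcher.find()) {
            found = matcher.group(1); // later entries override earlier ones
        }
        return Optional.ofNullable(found);
    }

    public static void main(String[] args) {
        // Made-up log line for demonstration; a real one comes from /var/log/mysqld.log.
        String sample = "2024-05-01T08:00:00.000000Z 1 [Note] "
                + "A temporary password is generated for root@localhost: Abc,123xyz\n";
        System.out.println(find(sample).orElse("(no temporary password found)"));
    }
}
```

The temporary password often contains punctuation, so copy it exactly when logging in.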

Log in to MySQL. Note: the password is not echoed while you type it.

    mysql -uroot -p

Relax the password policy:

    set global validate_password_policy=LOW;
    set global validate_password_length=4;

Change the password; here it is set to root:

    alter user 'root'@'localhost' identified by 'root';

Allow remote access:

    GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'root' WITH GRANT OPTION;

Exit MySQL:

    exit

Building the MySQL source JAR

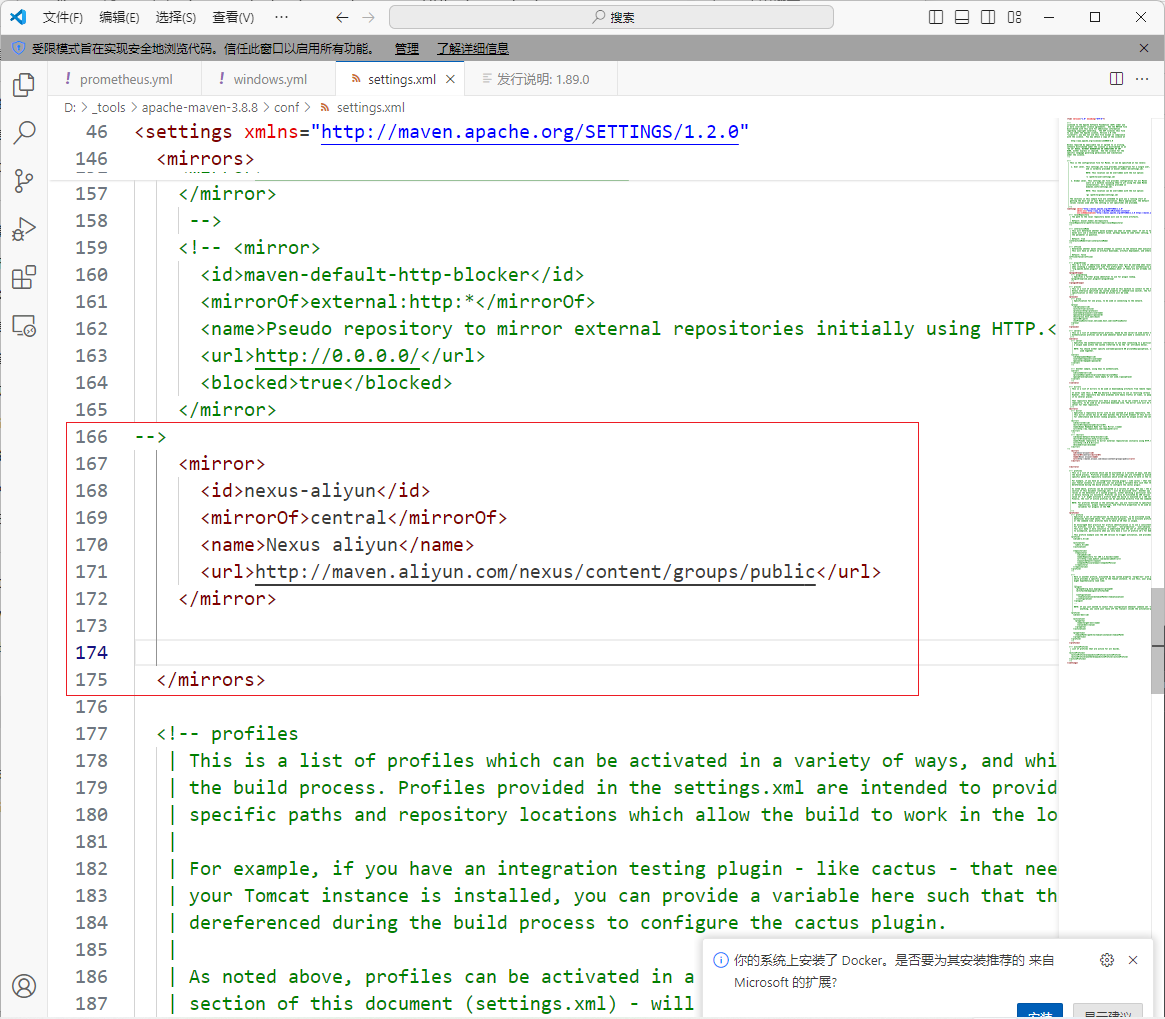

Install Maven

Extract the archive on the Windows host, open settings.xml in the conf directory, and add the Aliyun mirror.

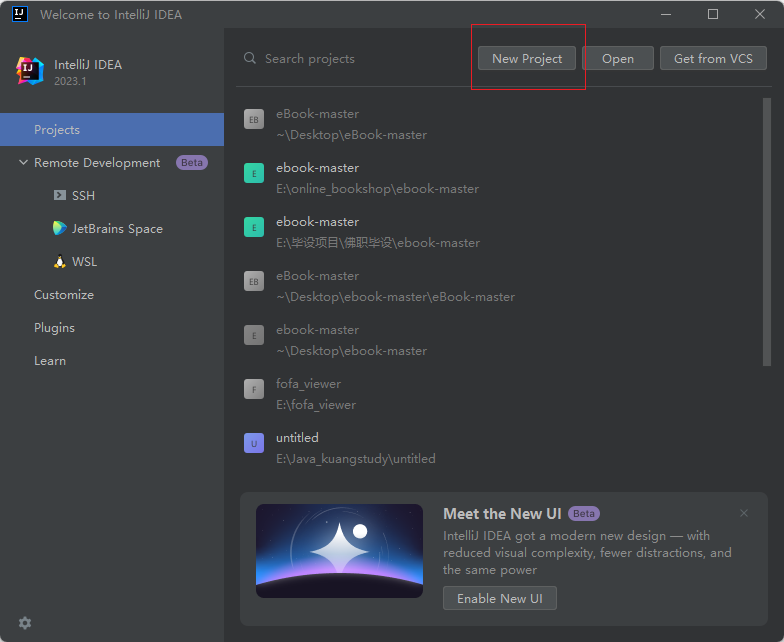

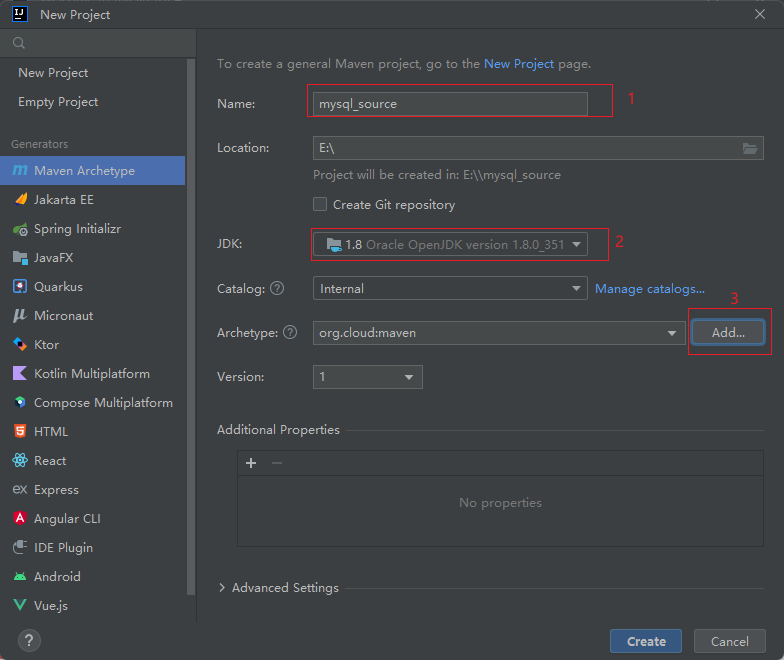

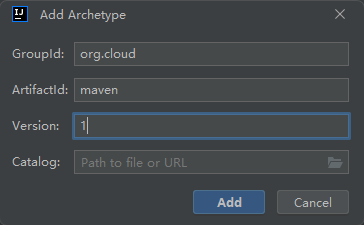

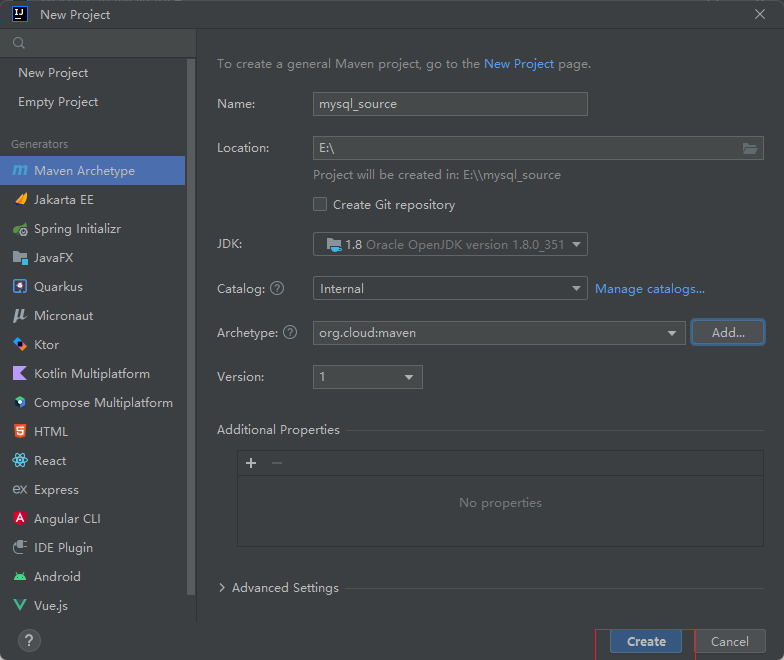

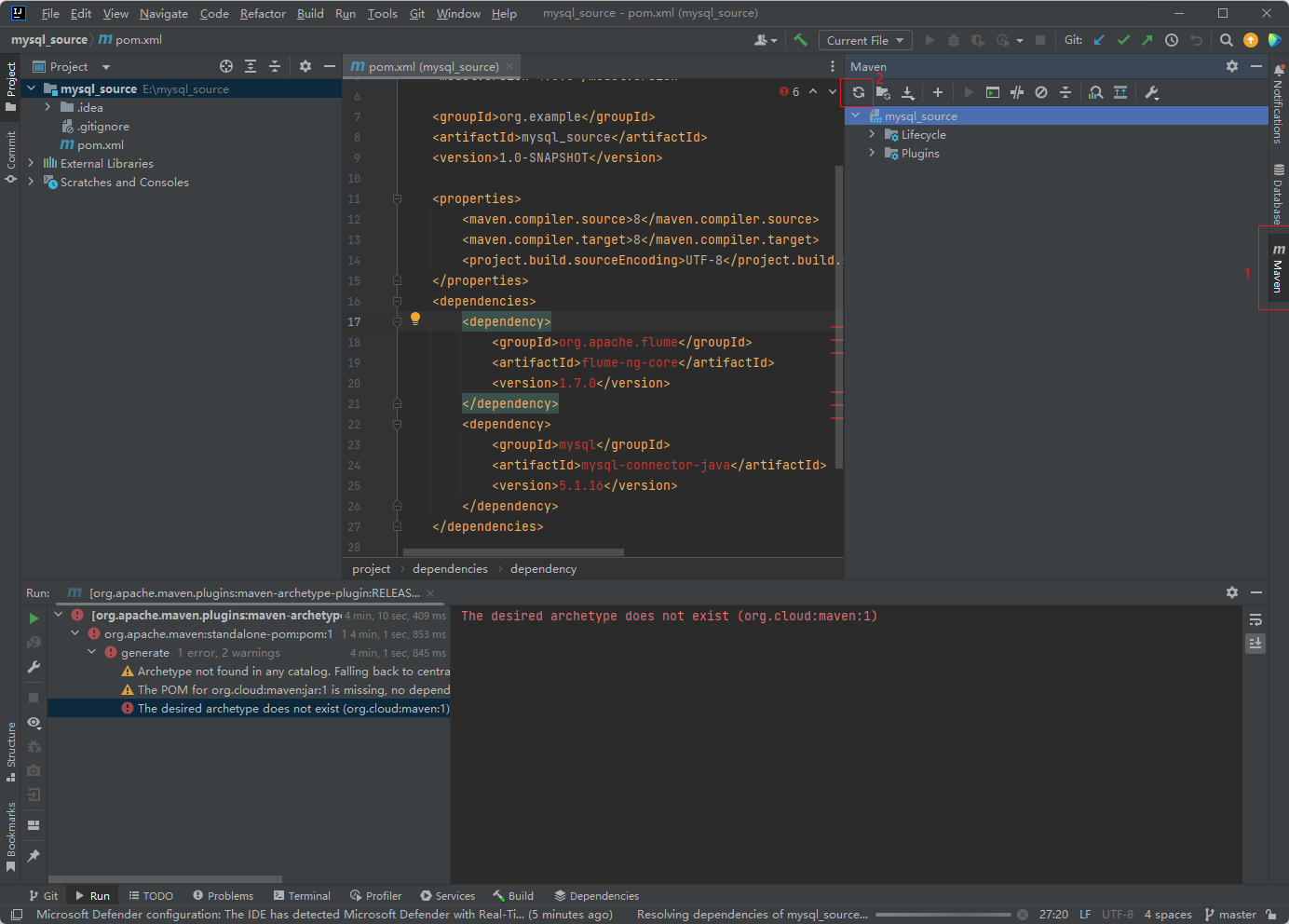

Open IDEA and create a new Maven project.

Fill in the project details. If no JDK is installed on the machine, install one first.

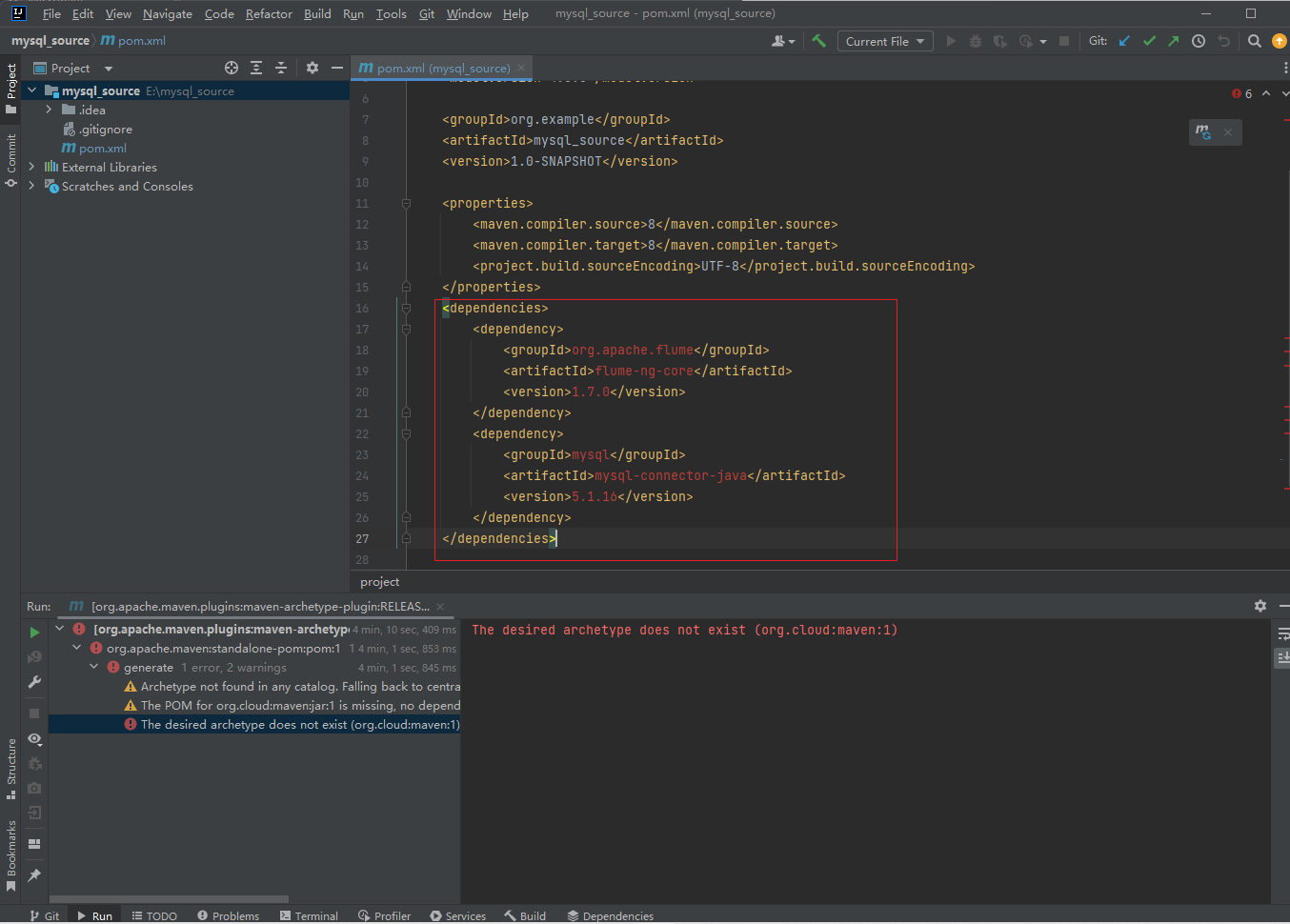

Add the dependencies to pom.xml:

    <dependencies>
        <dependency>
            <groupId>org.apache.flume</groupId>
            <artifactId>flume-ng-core</artifactId>
            <version>1.7.0</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.16</version>
        </dependency>
        <dependency>
            <groupId>log4j</groupId>
            <artifactId>log4j</artifactId>
            <version>1.2.17</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
            <version>1.7.12</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.12</version>
        </dependency>
    </dependencies>

Reload the Maven project. Once the dependencies are imported successfully, the red error highlighting disappears.

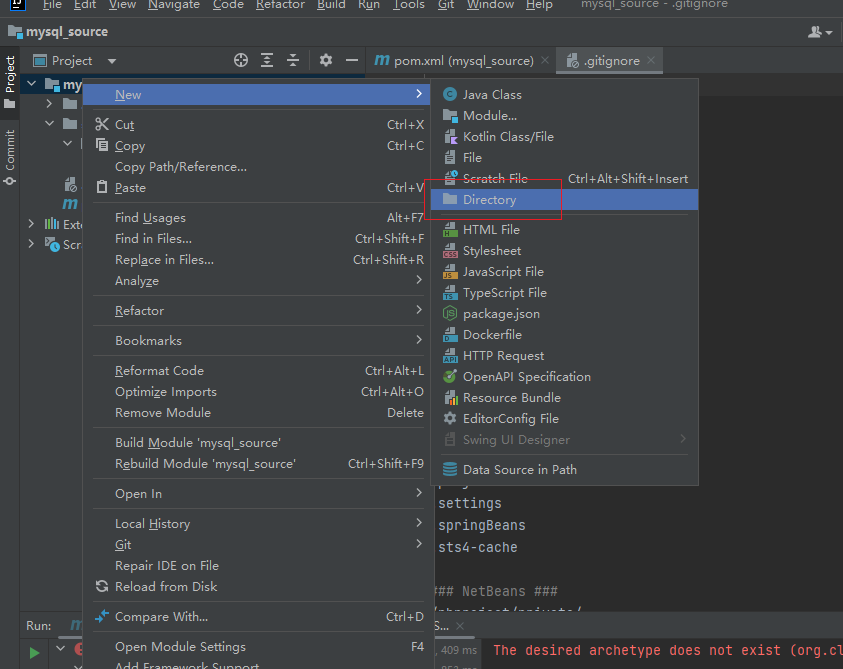

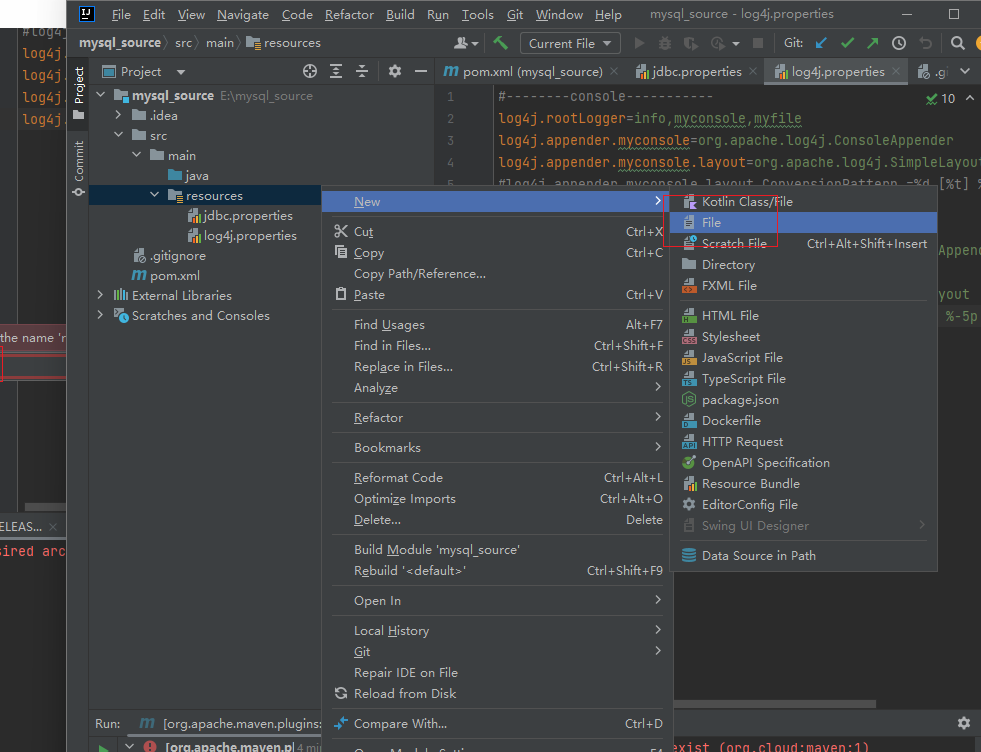

Right-click the project root and create a directory.

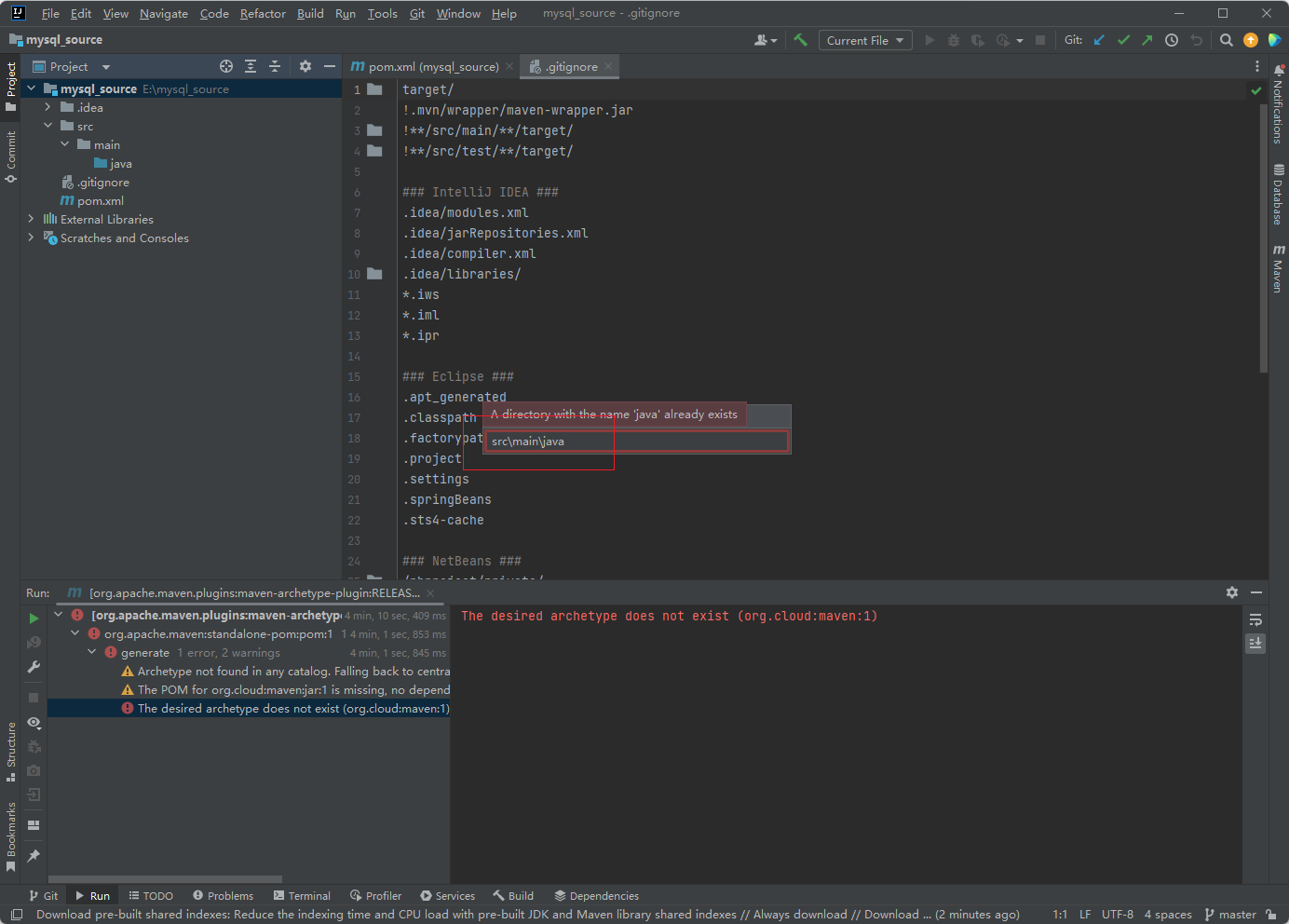

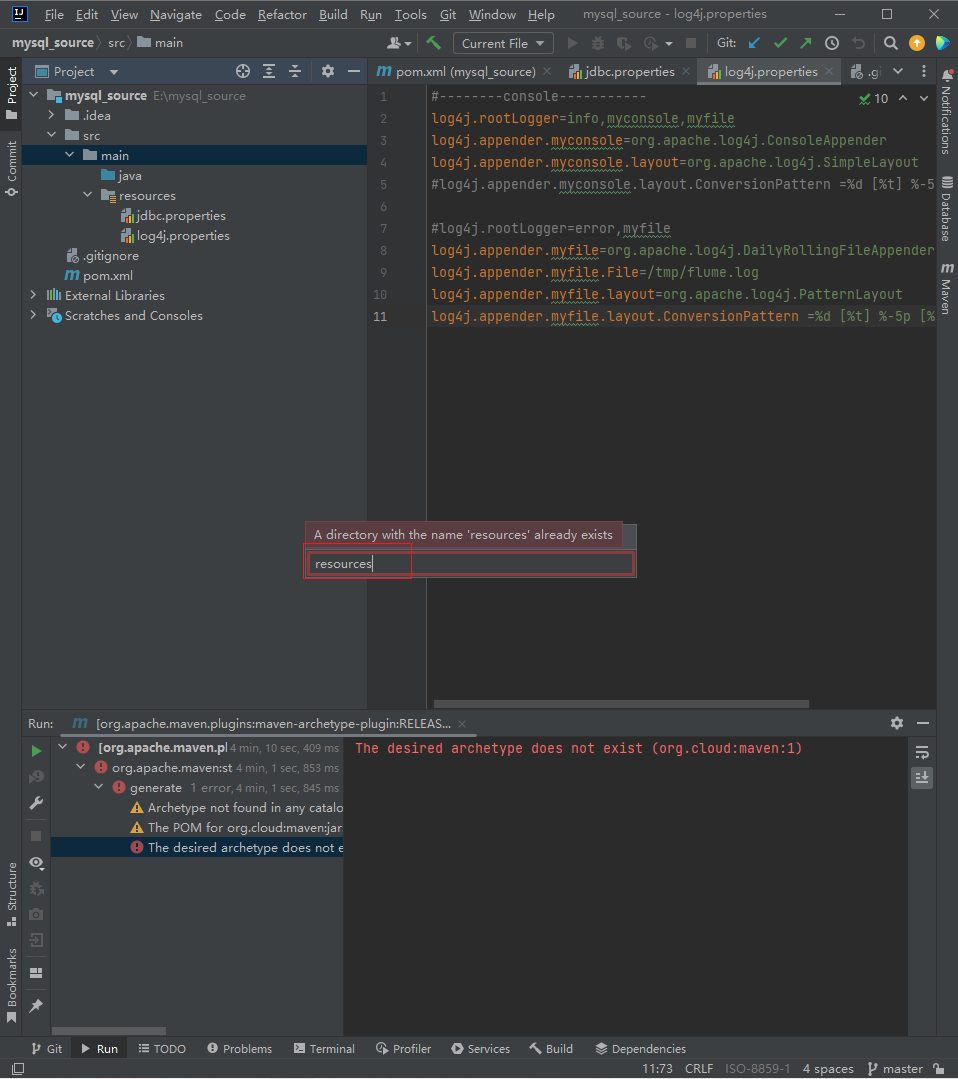

创建资源文件夹

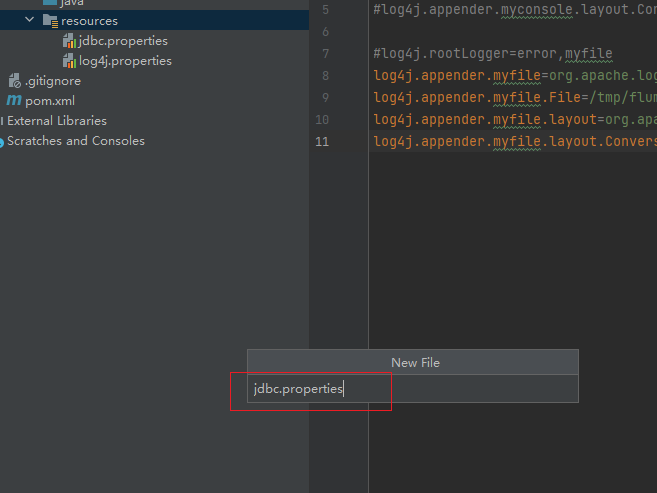

创建两个资源文件jdbc.properties和log4j.properties

log4j.properties操作同上

添加配置

-

jdbc.properties

dbDriver=com.mysql.jdbc.Driver dbUrl=jdbc:mysql://192.168.111.128:3306/mysqlsource?useUnicode=true&characterEncoding=utf-8 dbUser=root dbPassword=root -

log4j.properties

#--------console----------- log4j.rootLogger=info,myconsole,myfile log4j.appender.myconsole=org.apache.log4j.ConsoleAppender log4j.appender.myconsole.layout=org.apache.log4j.SimpleLayout #log4j.appender.myconsole.layout.ConversionPattern =%d [%t] %-5p [%c] - %m%n #log4j.rootLogger=error,myfile log4j.appender.myfile=org.apache.log4j.DailyRollingFileAppender log4j.appender.myfile.File=/tmp/flume.log log4j.appender.myfile.layout=org.apache.log4j.PatternLayout log4j.appender.myfile.layout.ConversionPattern =%d [%t] %-5p [%c] - %m%n

-

-
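Both files use Java properties syntax, where every entry must sit on its own line: the key ends at the first `=`, and everything after it, including further `=` and `&` characters in the JDBC URL, belongs to the value. The sketch below shows this with `java.util.Properties`, the same class the helper uses to read jdbc.properties; `JdbcPropsDemo` is a hypothetical name, and the file content is passed in as a string to keep the example self-contained.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Hypothetical demo: how java.util.Properties reads the jdbc.properties entries. */
final class JdbcPropsDemo {

    /** Parses properties-file text into a Properties object. */
    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        String text = "dbDriver=com.mysql.jdbc.Driver\n"
                + "dbUrl=jdbc:mysql://192.168.111.128:3306/mysqlsource"
                + "?useUnicode=true&characterEncoding=utf-8\n"
                + "dbUser=root\n"
                + "dbPassword=root\n";
        Properties props = load(text);
        // The value keeps the query string, '=' and '&' included.
        System.out.println(props.getProperty("dbUrl"));

        // If the entries end up on a single line, only the first key is
        // recognised and the rest becomes part of its value.
        Properties broken = load(text.replace("\n", " "));
        System.out.println(broken.stringPropertyNames());
    }
}
```

If the helper logs a class-loading or connection error at startup, a file with merged lines is worth ruling out first.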

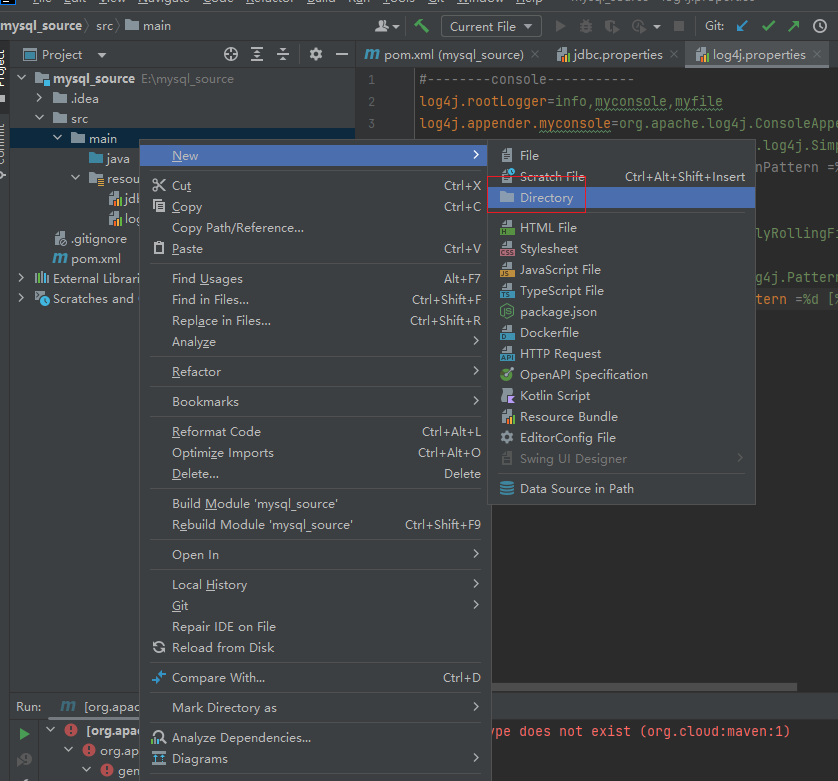

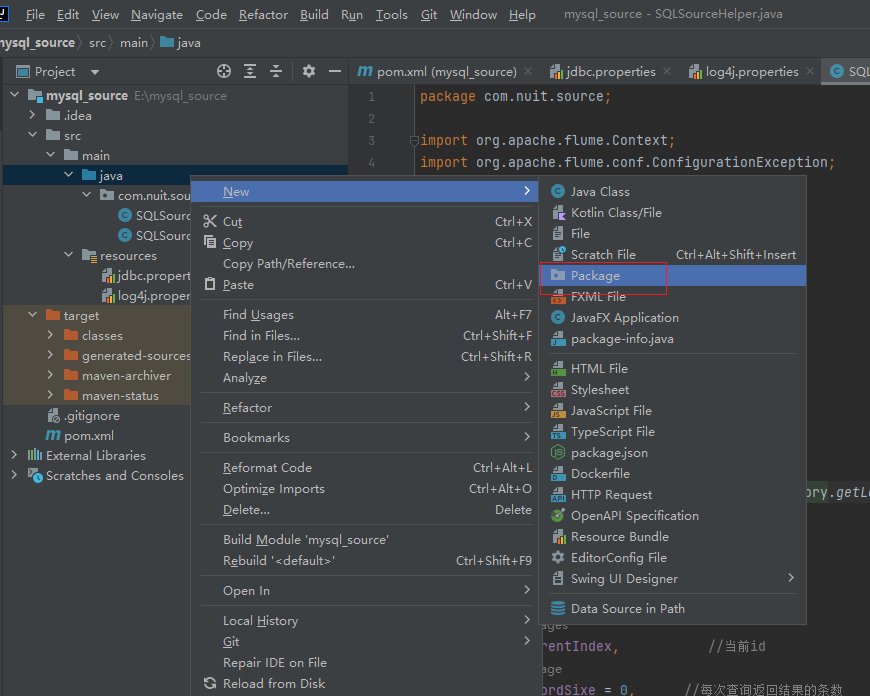

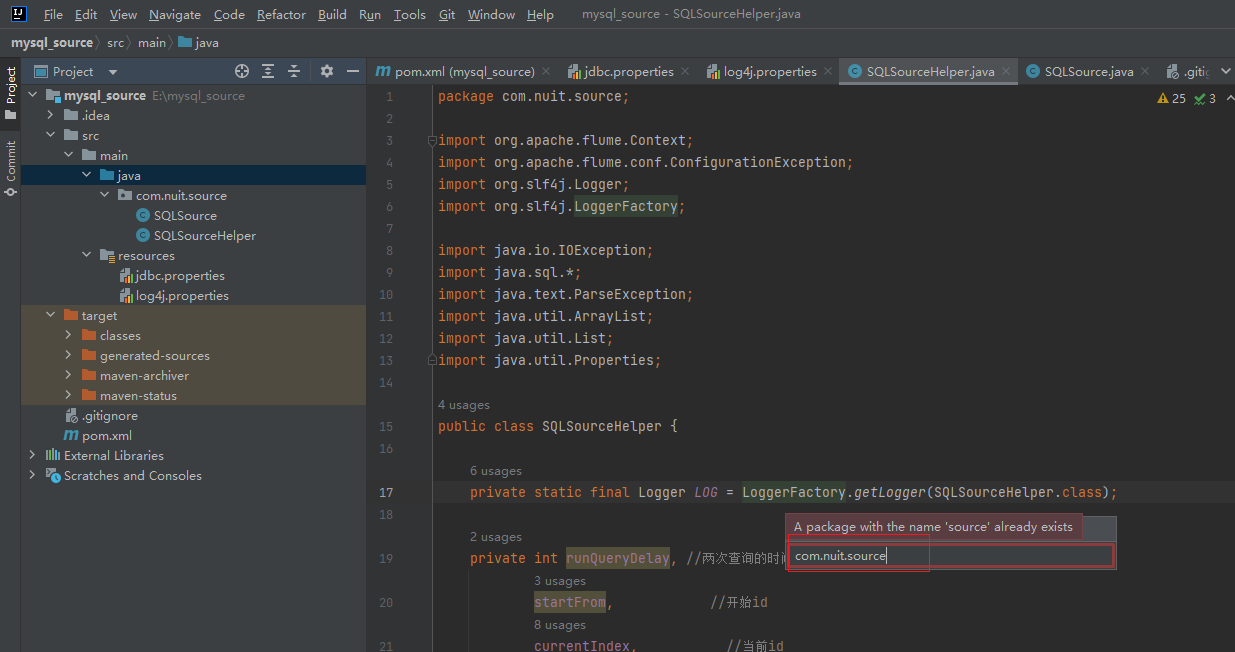

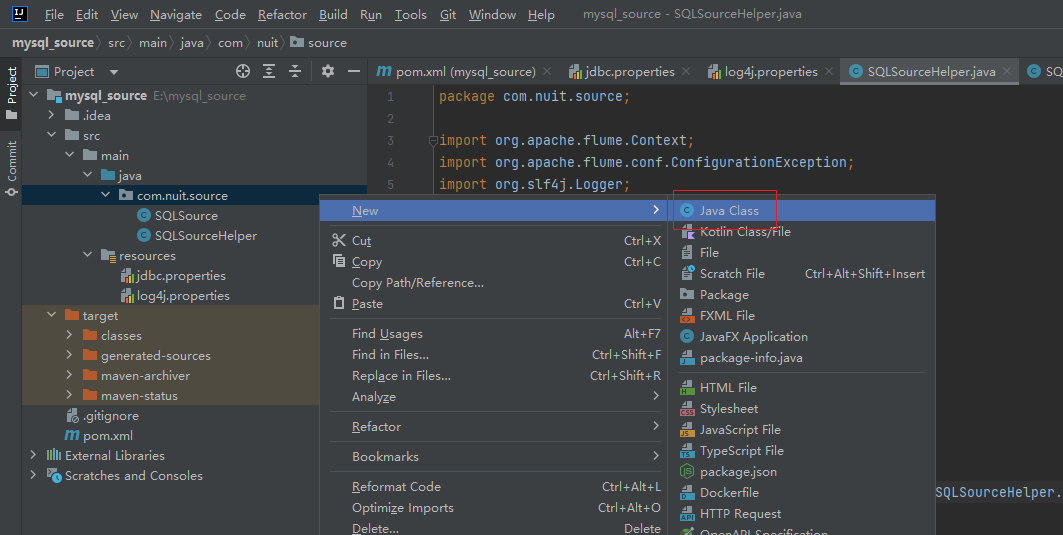

Right-click the java directory and create a package.

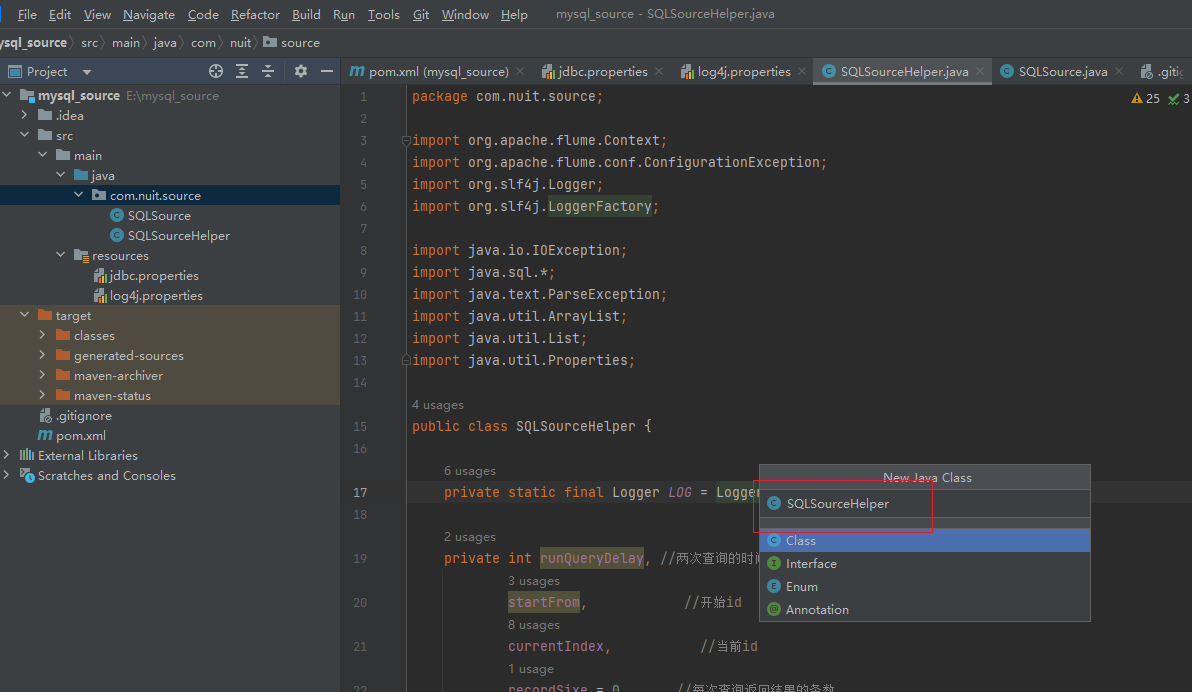

Create two classes in the package: SQLSourceHelper first, then SQLSource in the same way.

Write the code.

SQLSourceHelper

    package com.nuit.source;

    import org.apache.flume.Context;
    import org.apache.flume.conf.ConfigurationException;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.io.IOException;
    import java.sql.*;
    import java.text.ParseException;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.Properties;

    public class SQLSourceHelper {

        private static final Logger LOG = LoggerFactory.getLogger(SQLSourceHelper.class);

        private int runQueryDelay, // interval between two queries (ms)
                startFrom,         // starting id
                currentIndex,      // current id
                recordSixe = 0,    // number of rows returned by the queries so far
                maxRow;            // maximum number of rows per query

        private String table,            // table to read
                columnsToSelect,         // columns requested by the user
                customQuery,             // query supplied by the user
                query,                   // generated query
                defaultCharsetResultSet; // character set

        // Context used to read the job configuration
        private Context context;

        // Default values; they can be overridden in the Flume job configuration
        private static final int DEFAULT_QUERY_DELAY = 10000;
        private static final int DEFAULT_START_VALUE = 0;
        private static final int DEFAULT_MAX_ROWS = 2000;
        private static final String DEFAULT_COLUMNS_SELECT = "*";
        private static final String DEFAULT_CHARSET_RESULTSET = "UTF-8";

        private static Connection conn = null;
        private static PreparedStatement ps = null;
        private static String connectionURL, connectionUserName, connectionPassword;

        // Load jdbc.properties from the classpath and register the driver
        static {
            Properties p = new Properties();
            try {
                p.load(SQLSourceHelper.class.getClassLoader().getResourceAsStream("jdbc.properties"));
                connectionURL = p.getProperty("dbUrl");
                connectionUserName = p.getProperty("dbUser");
                connectionPassword = p.getProperty("dbPassword");
                Class.forName(p.getProperty("dbDriver"));
            } catch (IOException | ClassNotFoundException e) {
                LOG.error(e.toString());
            }
        }

        // Open a JDBC connection
        private static Connection InitConnection(String url, String user, String pw) {
            try {
                Connection conn = DriverManager.getConnection(url, user, pw);
                if (conn == null)
                    throw new SQLException();
                return conn;
            } catch (SQLException e) {
                e.printStackTrace();
            }
            return null;
        }

        // Constructor
        SQLSourceHelper(Context context) throws ParseException {
            // Keep the context
            this.context = context;

            // Parameters with defaults: read from the job configuration, fall back to the default
            this.columnsToSelect = context.getString("columns.to.select", DEFAULT_COLUMNS_SELECT);
            this.runQueryDelay = context.getInteger("run.query.delay", DEFAULT_QUERY_DELAY);
            this.startFrom = context.getInteger("start.from", DEFAULT_START_VALUE);
            this.defaultCharsetResultSet = context.getString("default.charset.resultset", DEFAULT_CHARSET_RESULTSET);

            // Parameters without defaults: read from the job configuration
            this.table = context.getString("table");
            this.customQuery = context.getString("custom.query");
            connectionURL = context.getString("connection.url");
            connectionUserName = context.getString("connection.user");
            connectionPassword = context.getString("connection.password");
            conn = InitConnection(connectionURL, connectionUserName, connectionPassword);

            // Fail if a mandatory parameter is missing
            checkMandatoryProperties();
            // Read the current offset
            currentIndex = getStatusDBIndex(startFrom);
            // Build the query
            query = buildQuery();
        }

        // Validate the mandatory settings (table and connection parameters)
        private void checkMandatoryProperties() {
            if (table == null) {
                throw new ConfigurationException("property table not set");
            }
            if (connectionURL == null) {
                throw new ConfigurationException("connection.url property not set");
            }
            if (connectionUserName == null) {
                throw new ConfigurationException("connection.user property not set");
            }
            if (connectionPassword == null) {
                throw new ConfigurationException("connection.password property not set");
            }
        }

        // Build the SQL statement
        private String buildQuery() {
            String sql = "";
            // Read the current offset
            currentIndex = getStatusDBIndex(startFrom);
            LOG.info(currentIndex + "");
            if (customQuery == null) {
                sql = "SELECT " + columnsToSelect + " FROM " + table;
            } else {
                sql = customQuery;
            }
            StringBuilder execSql = new StringBuilder(sql);
            // Use id as the offset
            if (!sql.contains("where")) {
                execSql.append(" where ");
                execSql.append("id").append(">").append(currentIndex);
                return execSql.toString();
            } else {
                // The query already ends with the previous offset. Strip those digits,
                // however many there are, and append the current offset. (Cutting a fixed
                // number of characters breaks the SQL once the offset gains a digit.)
                return sql.replaceAll("\\d+$", "") + currentIndex;
            }
        }

        // Run the query
        List<List<Object>> executeQuery() {
            try {
                // Rebuild the SQL on every call because the offset changes
                customQuery = buildQuery();
                // Result rows
                List<List<Object>> results = new ArrayList<>();
                // Prepare the freshly built query before executing it
                ps = conn.prepareStatement(customQuery);
                ResultSet result = ps.executeQuery();
                while (result.next()) {
                    // One row (several columns)
                    List<Object> row = new ArrayList<>();
                    // Copy the column values into the row
                    for (int i = 1; i <= result.getMetaData().getColumnCount(); i++) {
                        row.add(result.getObject(i));
                    }
                    results.add(row);
                }
                LOG.info("execSql:" + customQuery + "\nresultSize:" + results.size());
                return results;
            } catch (SQLException e) {
                LOG.error(e.toString());
                // Reconnect
                conn = InitConnection(connectionURL, connectionUserName, connectionPassword);
            }
            return null;
        }

        // Convert the result set to strings: each row (a list) becomes one comma-separated string
        List<String> getAllRows(List<List<Object>> queryResult) {
            List<String> allRows = new ArrayList<>();
            if (queryResult == null || queryResult.isEmpty())
                return allRows;
            StringBuilder row = new StringBuilder();
            for (List<Object> rawRow : queryResult) {
                Object value = null;
                for (Object aRawRow : rawRow) {
                    value = aRawRow;
                    if (value == null) {
                        row.append(",");
                    } else {
                        row.append(aRawRow.toString()).append(",");
                    }
                }
                allRows.add(row.toString());
                row = new StringBuilder();
            }
            return allRows;
        }

        // Update the offset metadata after each result set, so that an interrupted
        // job can resume where it stopped; id is used as the offset
        void updateOffset2DB(int size) {
            // source_tab is the key: insert if absent, update if present (one record per source table)
            String sql = "insert into flume_meta(source_tab,currentIndex) VALUES('"
                    + this.table
                    + "','" + (recordSixe += size)
                    + "') on DUPLICATE key update source_tab=values(source_tab),currentIndex=values(currentIndex)";
            LOG.info("updateStatus Sql:" + sql);
            execSql(sql);
        }

        // Execute a SQL statement
        private void execSql(String sql) {
            try {
                ps = conn.prepareStatement(sql);
                LOG.info("exec::" + sql);
                ps.execute();
            } catch (SQLException e) {
                e.printStackTrace();
            }
        }

        // Read the current offset
        private Integer getStatusDBIndex(int startFrom) {
            // Look up the current offset in the flume_meta table
            String dbIndex = queryOne("select currentIndex from flume_meta where source_tab='" + table + "'");
            if (dbIndex != null) {
                return Integer.parseInt(dbIndex);
            }
            // No record yet: this is the first query, so return the configured start value
            return startFrom;
        }

        // Run a query that returns a single value (the current offset)
        private String queryOne(String sql) {
            ResultSet result = null;
            try {
                ps = conn.prepareStatement(sql);
                result = ps.executeQuery();
                while (result.next()) {
                    return result.getString(1);
                }
            } catch (SQLException e) {
                e.printStackTrace();
            }
            return null;
        }

        // Release resources
        void close() {
            try {
                ps.close();
                conn.close();
            } catch (SQLException e) {
                e.printStackTrace();
            }
        }

        int getCurrentIndex() {
            return currentIndex;
        }

        void setCurrentIndex(int newValue) {
            currentIndex = newValue;
        }

        int getRunQueryDelay() {
            return runQueryDelay;
        }

        String getQuery() {
            return query;
        }

        String getConnectionURL() {
            return connectionURL;
        }

        private boolean isCustomQuerySet() {
            return (customQuery != null);
        }

        Context getContext() {
            return context;
        }

        public String getConnectionUserName() {
            return connectionUserName;
        }

        public String getConnectionPassword() {
            return connectionPassword;
        }

        String getDefaultCharsetResultSet() {
            return defaultCharsetResultSet;
        }
    }
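The core idea of the helper is incremental polling: each query filters on `id>offset`, and the offset stored in flume_meta moves forward after every batch. The query-building step can be tried on its own, without Flume or MySQL. The following is a simplified sketch and not the helper itself; `IncrementalQuery` and `build` are hypothetical names. It assumes, like the helper, that a query containing `where` ends with the previous offset, and it replaces whatever digits the query ends with so that the offset can grow from one digit to two.

```java
/** Hypothetical sketch of the offset-based query building used for polling. */
final class IncrementalQuery {

    /**
     * Builds the polling query. If customQuery is null, a SELECT over the table
     * is generated; otherwise the trailing offset of customQuery is replaced.
     */
    static String build(String table, String columns, String customQuery, int offset) {
        String base = customQuery == null
                ? "SELECT " + columns + " FROM " + table
                : customQuery;
        if (!base.contains("where")) {
            // First call: add the offset filter.
            return base + " where id>" + offset;
        }
        // Later calls: drop the old trailing offset and append the new one.
        return base.replaceAll("\\d+$", "") + offset;
    }

    public static void main(String[] args) {
        String q = build("student", "*", null, 0);
        System.out.println(q); // SELECT * FROM student where id>0
        q = build("student", "*", q, 4);
        System.out.println(q); // SELECT * FROM student where id>4
        q = build("student", "*", q, 12);
        System.out.println(q); // SELECT * FROM student where id>12
    }
}
```

The third call is the interesting one: the offset changes from one digit to two, which is why the sketch strips the old digits with a pattern instead of cutting a fixed number of characters.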

SQLSource

    package com.nuit.source;

    import org.apache.flume.Context;
    import org.apache.flume.Event;
    import org.apache.flume.EventDeliveryException;
    import org.apache.flume.PollableSource;
    import org.apache.flume.conf.Configurable;
    import org.apache.flume.event.SimpleEvent;
    import org.apache.flume.source.AbstractSource;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.text.ParseException;
    import java.util.ArrayList;
    import java.util.HashMap;
    import java.util.List;

    public class SQLSource extends AbstractSource implements Configurable, PollableSource {

        // Logger
        private static final Logger LOG = LoggerFactory.getLogger(SQLSource.class);

        // SQL helper
        private SQLSourceHelper sqlSourceHelper;

        @Override
        public long getBackOffSleepIncrement() {
            return 0;
        }

        @Override
        public long getMaxBackOffSleepInterval() {
            return 0;
        }

        @Override
        public void configure(Context context) {
            try {
                // Initialise the helper from the job configuration
                sqlSourceHelper = new SQLSourceHelper(context);
            } catch (ParseException e) {
                e.printStackTrace();
            }
        }

        @Override
        public Status process() throws EventDeliveryException {
            try {
                // Query the table
                List<List<Object>> result = sqlSourceHelper.executeQuery();
                // Events to deliver
                List<Event> events = new ArrayList<>();
                // Event headers
                HashMap<String, String> header = new HashMap<>();
                // If rows were returned, wrap each one in an event
                // (executeQuery returns null when the query failed)
                if (result != null && !result.isEmpty()) {
                    List<String> allRows = sqlSourceHelper.getAllRows(result);
                    Event event = null;
                    for (String row : allRows) {
                        event = new SimpleEvent();
                        event.setBody(row.getBytes());
                        event.setHeaders(header);
                        events.add(event);
                    }
                    // Write the events to the channel
                    this.getChannelProcessor().processEventBatch(events);
                    // Update the offset stored in the database
                    sqlSourceHelper.updateOffset2DB(result.size());
                }
                // Wait before the next poll
                Thread.sleep(sqlSourceHelper.getRunQueryDelay());
                return Status.READY;
            } catch (InterruptedException e) {
                LOG.error("Error procesing row", e);
                return Status.BACKOFF;
            }
        }

        @Override
        public synchronized void stop() {
            LOG.info("Stopping sql source {} ...", getName());
            try {
                // Release resources
                sqlSourceHelper.close();
            } finally {
                super.stop();
            }
        }
    }

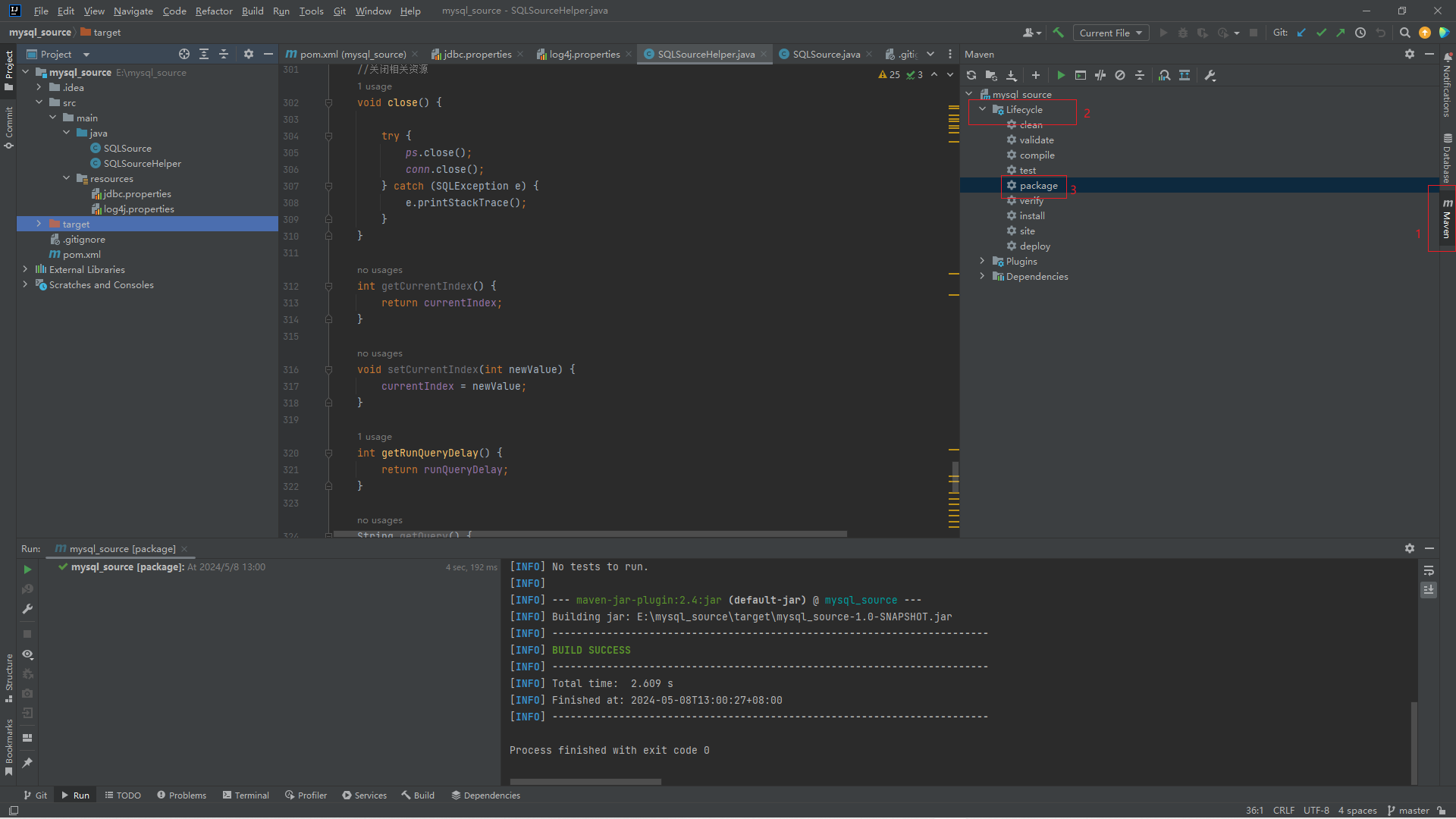

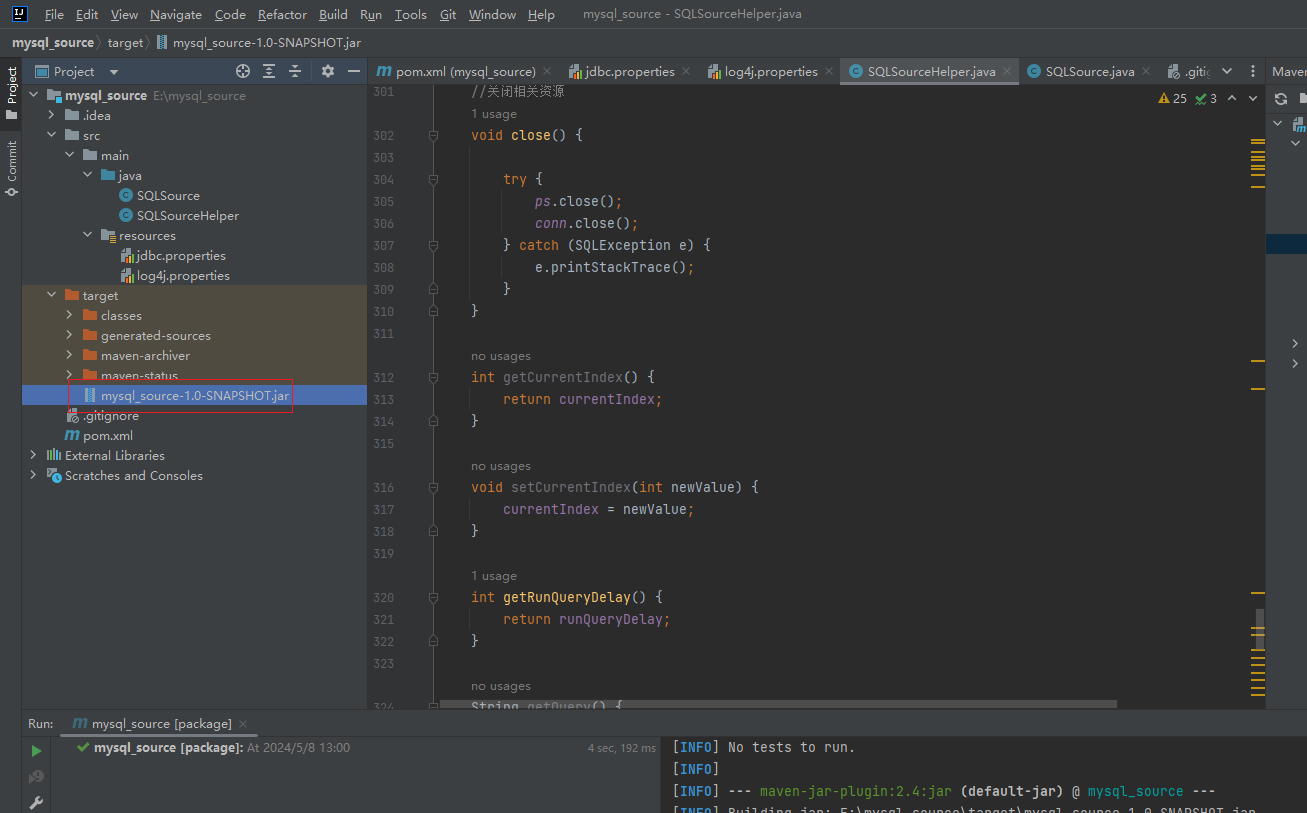

打Jar包

打包成功后,target目录会出现一个jar包

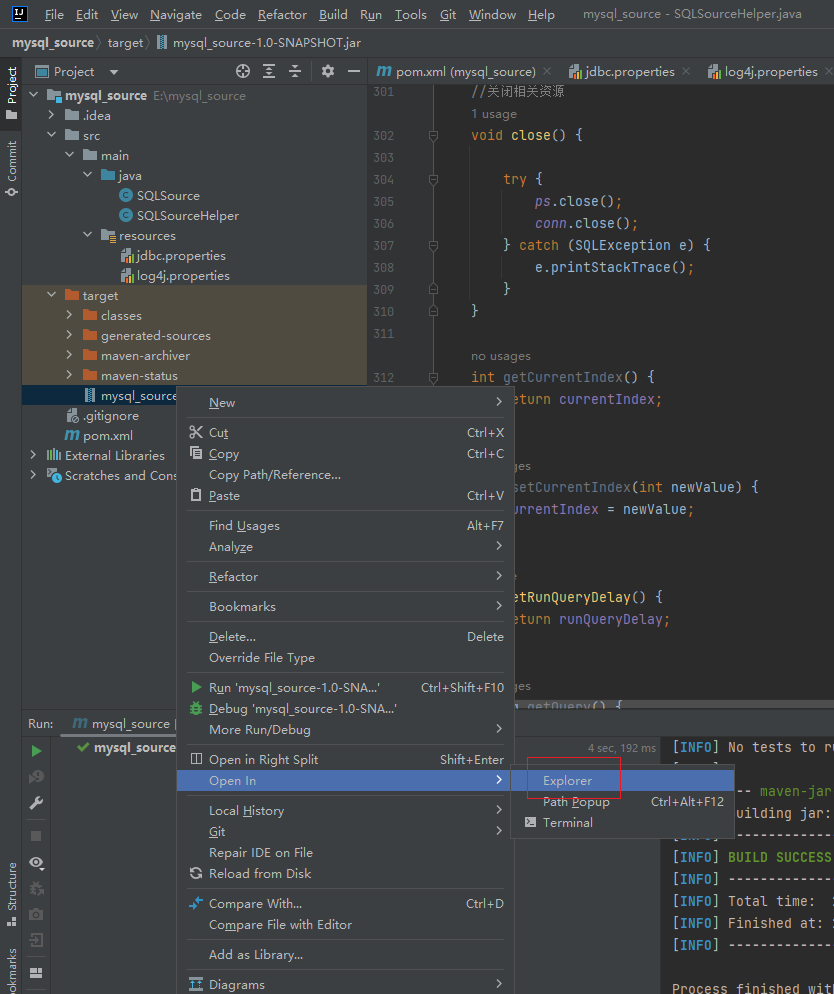

右键jar包,在文件资源管理器打开

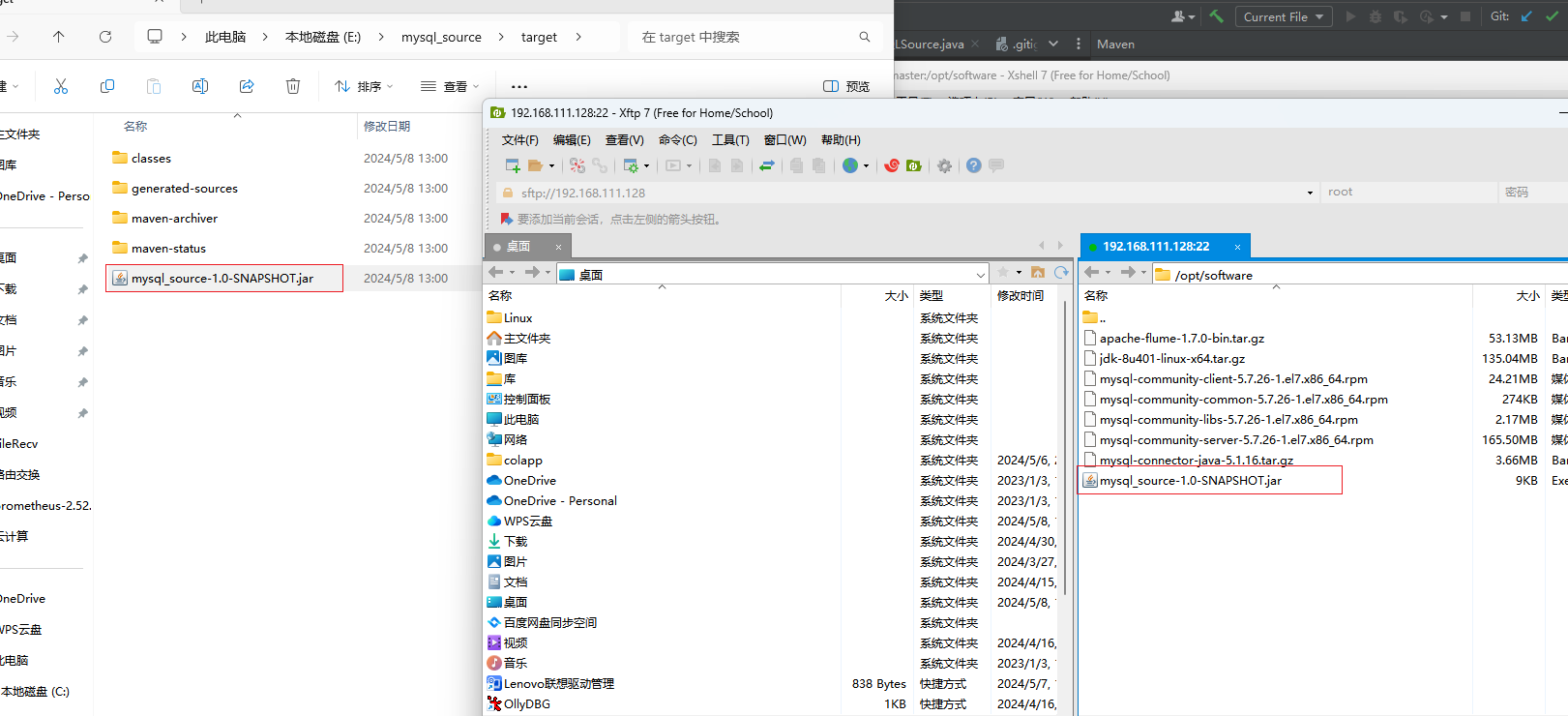

Upload the JAR to the virtual machine with Xftp.

Add the JAR dependencies

On the virtual machine, extract the MySQL driver and copy both JARs into Flume's lib directory:

    tar -zxvf /opt/software/mysql-connector-java-5.1.16.tar.gz -C /opt/software/
    cp /opt/software/mysql-connector-java-5.1.16/mysql-connector-java-5.1.16-bin.jar /opt/module/flume/lib/
    cp /opt/software/mysql_source-1.0-SNAPSHOT.jar /opt/module/flume/lib/

Create the job file:

    vi /opt/module/flume/job/mysql.conf

with the following content:

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = com.nuit.source.SQLSource
    a1.sources.r1.connection.url = jdbc:mysql://192.168.111.128:3306/mysqlsource
    a1.sources.r1.connection.user = root
    a1.sources.r1.connection.password = root
    a1.sources.r1.table = student
    a1.sources.r1.columns.to.select = *
    #a1.sources.r1.incremental.column.name = id
    #a1.sources.r1.incremental.value = 0
    a1.sources.r1.run.query.delay=5000

    # Describe the sink
    a1.sinks.k1.type = logger

    # Describe the channel
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1

Create the MySQL tables:

    mysql -uroot -proot

    CREATE DATABASE mysqlsource;
    use mysqlsource;
    CREATE TABLE `student` (
      `id` int(11) NOT NULL AUTO_INCREMENT,
      `name` varchar(255) NOT NULL,
      PRIMARY KEY (`id`)
    );
    CREATE TABLE `flume_meta` (
      `source_tab` varchar(255) NOT NULL,
      `currentIndex` varchar(255) NOT NULL,
      PRIMARY KEY (`source_tab`)
    );

Insert some rows:

    insert into student values(1,'zhangsan');
    insert into student values(2,'lisi');
    insert into student values(3,'wangwu');
    insert into student values(4,'zhaoliu');

Exit:

    exit

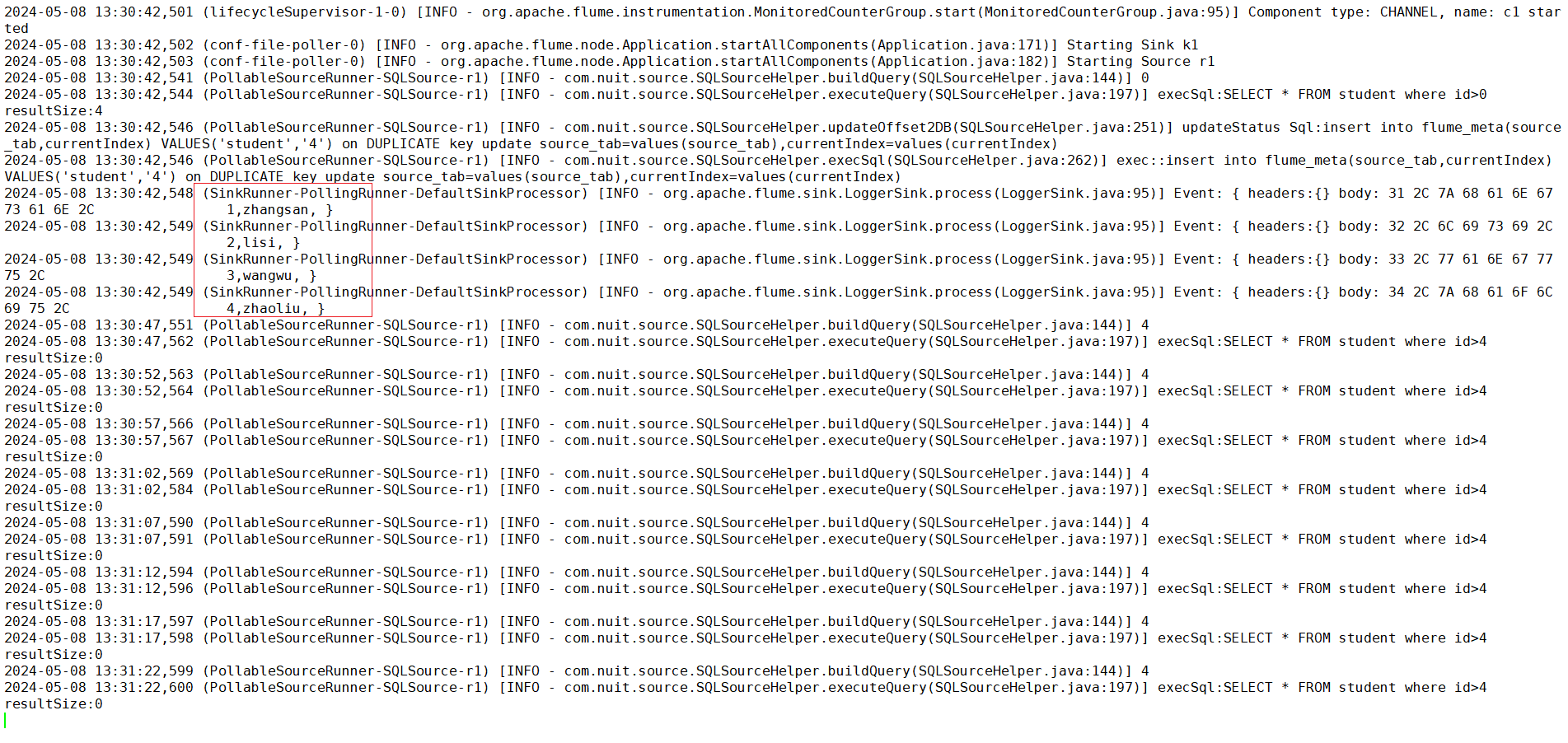

Start the Flume job:

    cd /opt/module/flume
    bin/flume-ng agent --conf conf/ --name a1 \
      --conf-file job/mysql.conf -Dflume.root.logger=INFO,console

The rows we inserted appear in the log output. The setup is now complete!
