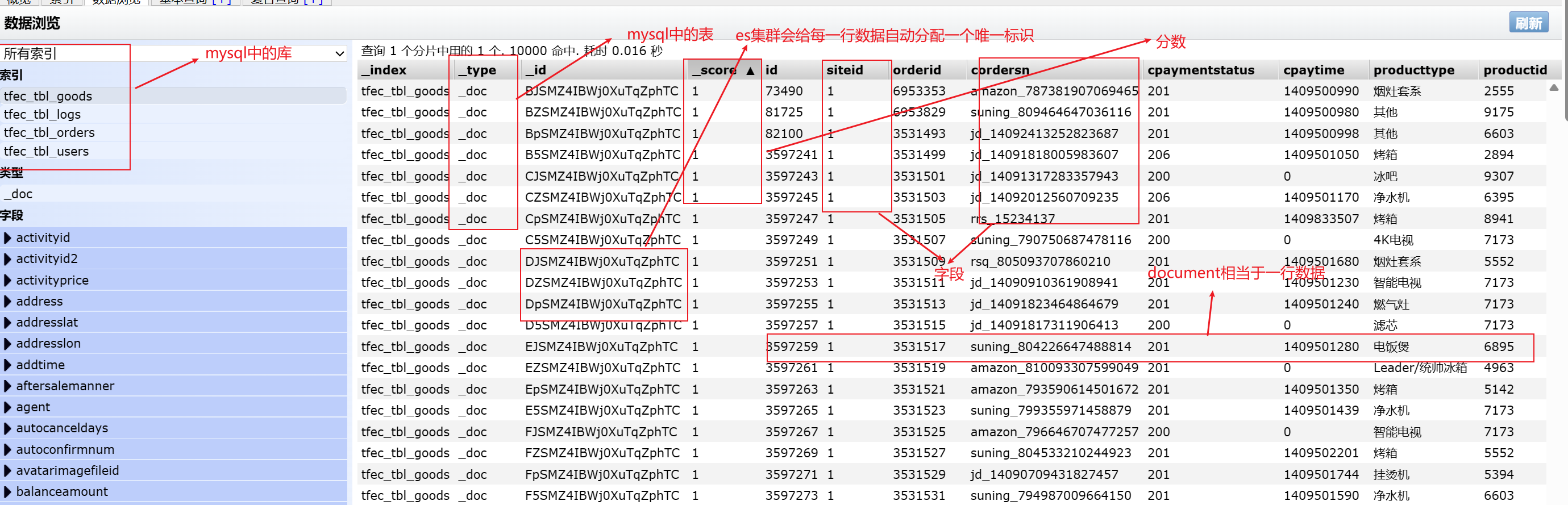

Mapping between SQL and Elasticsearch concepts (※)

| SQL | Elasticsearch |
| --- | --- |
| column | field |
| row | document |
| table | index |
| schema | mapping |
| database | Elasticsearch cluster instance |

Working with Elasticsearch from Python

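As noted above, Elasticsearch is a database, so just as MySQL can be driven from Python through a client library such as pymysql, Elasticsearch can be operated through a similar component.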

However, native Elasticsearch commands are almost all RESTful API calls, which makes them somewhat harder to learn.

The native RESTful API was covered in the previous article, so it is not repeated here.

Creating (configuring) a virtual environment

If your virtual environment already has the elasticsearch package installed, just activate it.

If not, install it manually:

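# Use the Anaconda environment manager to avoid version conflicts
conda create -n es_env python==3.7.13
# Activate the environment:
conda activate es_env
# Install the Python Elasticsearch client (here from the Tsinghua PyPI mirror):
pip install elasticsearch==7.17.3 -i https://pypi.tuna.tsinghua.edu.cn/simple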

Connecting Python to the remote virtual environment

This was covered in the ETL notes, so it is omitted here.

Elasticsearch operations in Python (※)

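- Step one: import the package

from elasticsearch import Elasticsearch

- Wrap the data to be sent in a class, so that inconsistent parameters cannot prevent a document (row) from being created: create an object, then call object.method() to produce a standard dict.

from UserProfile.jobclazz import JobClazz

- In summary: insert, delete, update and get-by-id all share the same form: es.<operation>(index='table name', id='id of the document to operate on')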

from elasticsearch import Elasticsearch
from UserProfile.jobclazz import JobClazz

if __name__ == '__main__':
    # Connect to ES (with only a host given, the 7.x client uses port 9200)
    es = Elasticsearch(hosts='192.168.88.166')
    print(es)

    job_clazz = JobClazz("00001", "工作地区:郑州-航空港区", "应届毕业生", "博士", "¥ 2千/月", "全职", "链家",
                         "9236人浏览过 / 8000人正在关注", "销售姐姐",
                         "做销售选链家五大理由 一、百分百真房源,放心买房找链家 二、科技化平台支持:链家网、贝壳找房网 三、市场占有率全市前列 四、没有空降兵,所有的管理者都是内部员工晋升 五、我们的理念:客户至上.诚实可信")

    # Insert a document
    res_insert = es.index(index='job_idx', id='0001', document=job_clazz.getJobClazzDict())
    print(res_insert)

    # Delete a document (deleting a document that does not exist raises an error)
    # res_delete = es.delete(index='job_idx', id='0001')
    # print(res_delete)

    # Update a document
    res_update = es.update(index='job_idx', id='0001', doc={'salary': '$ 2k/月'})
    print(res_update)

    # Get a document by id
    res_query = es.get(index='job_idx', id='0001')
    print(res_query)

    # Search by field
    res_query_fields = es.search(index='job_idx', query={
        'match': {
            "title": "销售姐姐"
        }
    }, from_=0, size=5)
    print(res_query_fields)

    # Highlighting: call search with the index name, the query (what to match) and the fields to highlight
    res_highlight = es.search(
        index='job_idx',
        query={"match": {"jd": "销售"}},
        highlight={"fields": {"jd": {}, "title": {}}})
    print(res_highlight)
    # { 'took': 9,
    #   'timed_out': False,
    #   '_shards':
    #     { 'total': 1,
    #       'successful': 1,
    #       'skipped': 0,
    #       'failed': 0
    #     },
    #   'hits':
    #     {
    #       'total': {'value': 1, 'relation': 'eq'},
    #       'max_score': 0.18461011,
    #       'hits': [
    #         { '_index': 'job_idx',
    #           '_type': '_doc',
    #           '_id': '0001',
    #           '_score': 0.18461011,
    #           '_source': {'id': '00001', 'area': '工作地区:郑州-航空港区', 'exp': '应届毕业生', 'edu': '博士', 'salary': '$ 2k/月', 'job_type': '全职', 'cmp': '链家', 'pv': '9236人浏览过 / 8000人正在关注', 'title': '销售姐姐', 'jd': '做销售选链家五大理由 一、百分百真房源,放心买房找链家 二、科技化平台支持:链家网、贝壳找房网 三、市场占有率全市前列 四、没有空降兵,所有的管理者都是内部员工晋升 五、我们的理念:客户至上.诚实可信'},
    #           'highlight': {'jd': ['做<em>销售</em>选链家五大理由 一、百分百真房源,放心买房找链家 二、科技化平台支持:链家网、贝壳找房网 三、市场占有率全市前列 四、没有空降兵,所有的管理者都是内部员工晋升 五、我们的理念:客户至上.诚实可信']}}]}}

    # Close the connection
    es.close()
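The `UserProfile.jobclazz` module imported above is not shown in this post. The sketch below is a hypothetical reconstruction, assuming the class simply stores the ten constructor arguments in order and that its field names match the `_source` keys in the sample search output (`id`, `area`, `exp`, ...); the real class may differ.

```python
from dataclasses import dataclass, asdict


@dataclass
class JobClazz:
    """Hypothetical stand-in for UserProfile.jobclazz.JobClazz.

    Field order follows the constructor call above; field names are taken
    from the _source of the sample search output.
    """
    id: str
    area: str
    exp: str
    edu: str
    salary: str
    job_type: str
    cmp: str
    pv: str
    title: str
    jd: str

    def getJobClazzDict(self) -> dict:
        # asdict() returns every field, so each document always has the same keys
        return asdict(self)
```

Because the dataclass fixes the set of fields, a missing argument fails at construction time (`TypeError`) instead of silently producing an incomplete document.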

Highlighting

# coding:utf-8
from elasticsearch import Elasticsearch

if __name__ == '__main__':
    # Create the ES client
    es = Elasticsearch(hosts='192.168.88.166:9200')
    # Run a highlighted search across both fields
    res = es.search(index='job_idx', query={"multi_match": {
        "fields": ["jd", "title"],
        "query": "销售"
    }}, highlight={'fields': {'title': {}, 'jd': {}}})
    # print(res['hits']['hits'])
    for document in res['hits']['hits']:
        # print(document['_source'])
        # print(document['highlight'])
        # A hit is not guaranteed to carry a 'highlight' key, so fall back to an empty dict
        highlight = document.get('highlight', {})
        # Replace the original values with the highlighted fragments (a list of strings)
        if 'title' in highlight:
            document['_source']['title'] = highlight['title']
        if 'jd' in highlight:
            document['_source']['jd'] = highlight['jd']
        print(document['_source'])
    # Close the connection
    es.close()
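The loop above copies highlighted fragments back into `_source` in place. A variant that works on any search-response dict, without a live cluster, is sketched below; `apply_highlight` is a hypothetical helper, and joining multiple fragments with `" ... "` is an assumption (ES returns highlights as a list of fragments per field).

```python
from typing import Any, Dict, List


def apply_highlight(response: Dict[str, Any], sep: str = " ... ") -> List[Dict[str, Any]]:
    """Return each hit's _source with highlighted fields swapped in."""
    results = []
    for hit in response["hits"]["hits"]:
        source = dict(hit["_source"])  # shallow copy: do not mutate the response
        for field, fragments in hit.get("highlight", {}).items():
            source[field] = sep.join(fragments)  # fragments is a list of strings
        results.append(source)
    return results
```

Usage with the client response: `for doc in apply_highlight(res): print(doc)`. Returning copies keeps the raw response intact in case you also need the unhighlighted text.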

Elasticsearch read/write flow and near-real-time indexing

How ES writes data

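- Request

- Routing

- Write the data to the primary shard

- 1. The client sends the write request to any data node; that node then acts as the coordinating node.

- 2. The coordinating node hashes the document's (a row, in MySQL terms) routing value and takes the remainder modulo the number of primary shards to find the target shard.

- The formula is: shard = hash(routing) % number_of_primary_shards, where routing defaults to the document's id but can be set to another value.

- 3. The request goes to that primary shard, which writes the data into the index (the original JSON is stored in the _source field, and an inverted index is built from the analyzed terms).

- 4. The primary shard forwards the operation to its replica shards, which apply it.

- 5. Once the primary and replica shards have all written the data successfully, the coordinating node returns the result to the client.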
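The routing rule in step 2 can be sketched in a few lines of Python. This is a simplified model: Elasticsearch actually hashes `_routing` with Murmur3 (and, in 7.x, also factors in the number of routing shards), whereas the sketch uses `zlib.crc32` as a stand-in because Python's built-in `hash()` for strings changes between processes. `route` is a hypothetical helper.

```python
import zlib
from typing import Optional


def route(doc_id: str, num_primary_shards: int, routing: Optional[str] = None) -> int:
    """shard = hash(routing) % num_primary_shards; routing defaults to the document id."""
    key = routing if routing is not None else doc_id
    # Stand-in hash: deterministic across runs, unlike hash(str) in Python
    h = zlib.crc32(key.encode("utf-8"))
    return h % num_primary_shards


for doc_id in ["0001", "0002", "0003"]:
    print(doc_id, "->", route(doc_id, 3))
```

The modulo is also why the number of primary shards cannot be changed in place after an index is created: with a different divisor, existing ids would map to different shards.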

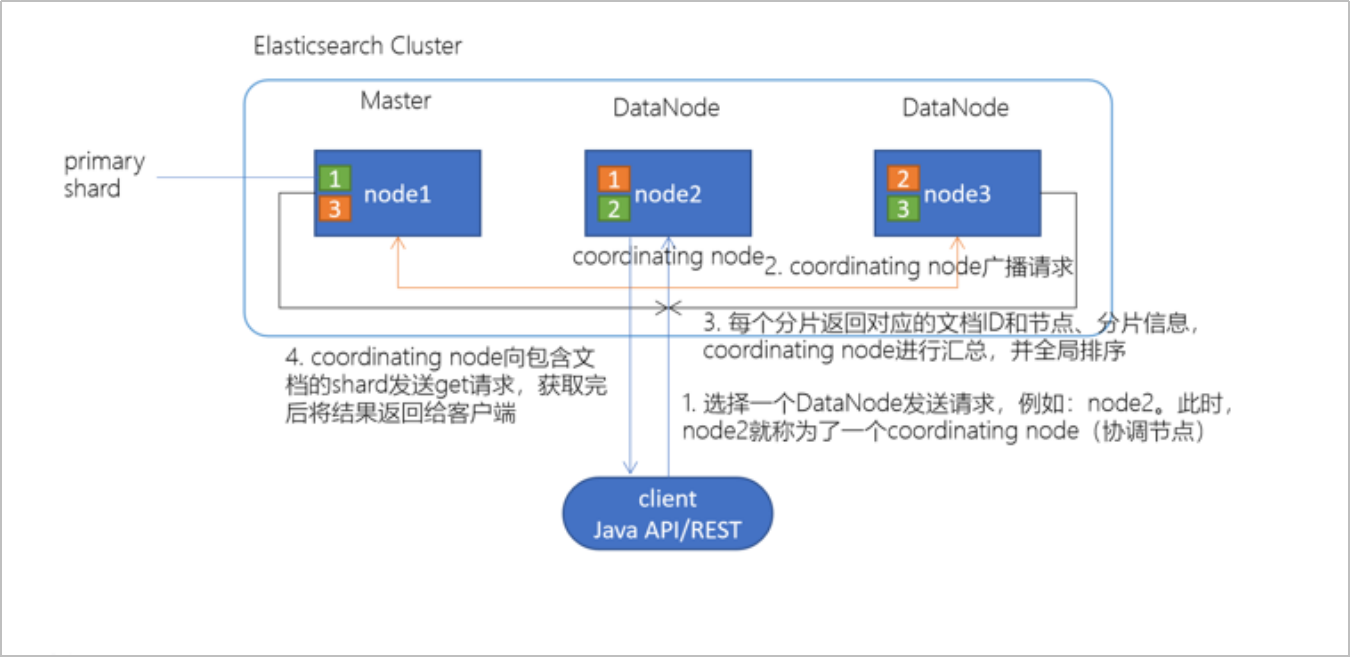

How ES search (reads) works

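- 1. The client sends the search request to any data node; that node then acts as the coordinating node.

- 2. The coordinating node forwards the query to one copy (primary or replica) of every shard in the index.

- 3. Each shard (made up of multiple segment files, each with its own inverted index) runs the query against its inverted indexes and returns the matching document IDs, scores and shard information to the coordinating node.

- 4. The coordinating node merges and sorts the results from all shards, then issues get-by-ID requests for the winning documents and returns the final result to the client.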
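Steps 3 and 4 form a two-phase "query then fetch": shards first return only ids and scores, and full documents are fetched only for the global winners. A toy in-memory sketch, with dicts standing in for shards (function names and data are hypothetical; real ES asks each shard for `from + size` hits and scores with BM25):

```python
import heapq
from typing import Dict, List, Tuple

Hit = Tuple[float, str, int]  # (score, doc_id, shard_no)


def query_phase(shards: Dict[int, Dict[str, float]], size: int) -> List[Hit]:
    """Query phase: each shard returns only ids + scores of its local top hits."""
    candidates: List[Hit] = []
    for shard_no, scores in shards.items():
        local = heapq.nlargest(size, ((score, doc_id, shard_no) for doc_id, score in scores.items()))
        candidates.extend(local)
    # Coordinating node: merge all shard results and keep the global top `size`
    return heapq.nlargest(size, candidates)


def fetch_phase(top: List[Hit], stores: Dict[int, Dict[str, dict]]) -> List[dict]:
    """Fetch phase: load full documents by id, only for the global winners."""
    return [stores[shard_no][doc_id] for _score, doc_id, shard_no in top]
```

Splitting the work this way keeps network traffic small: only the `size` winning documents are ever transferred in full.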

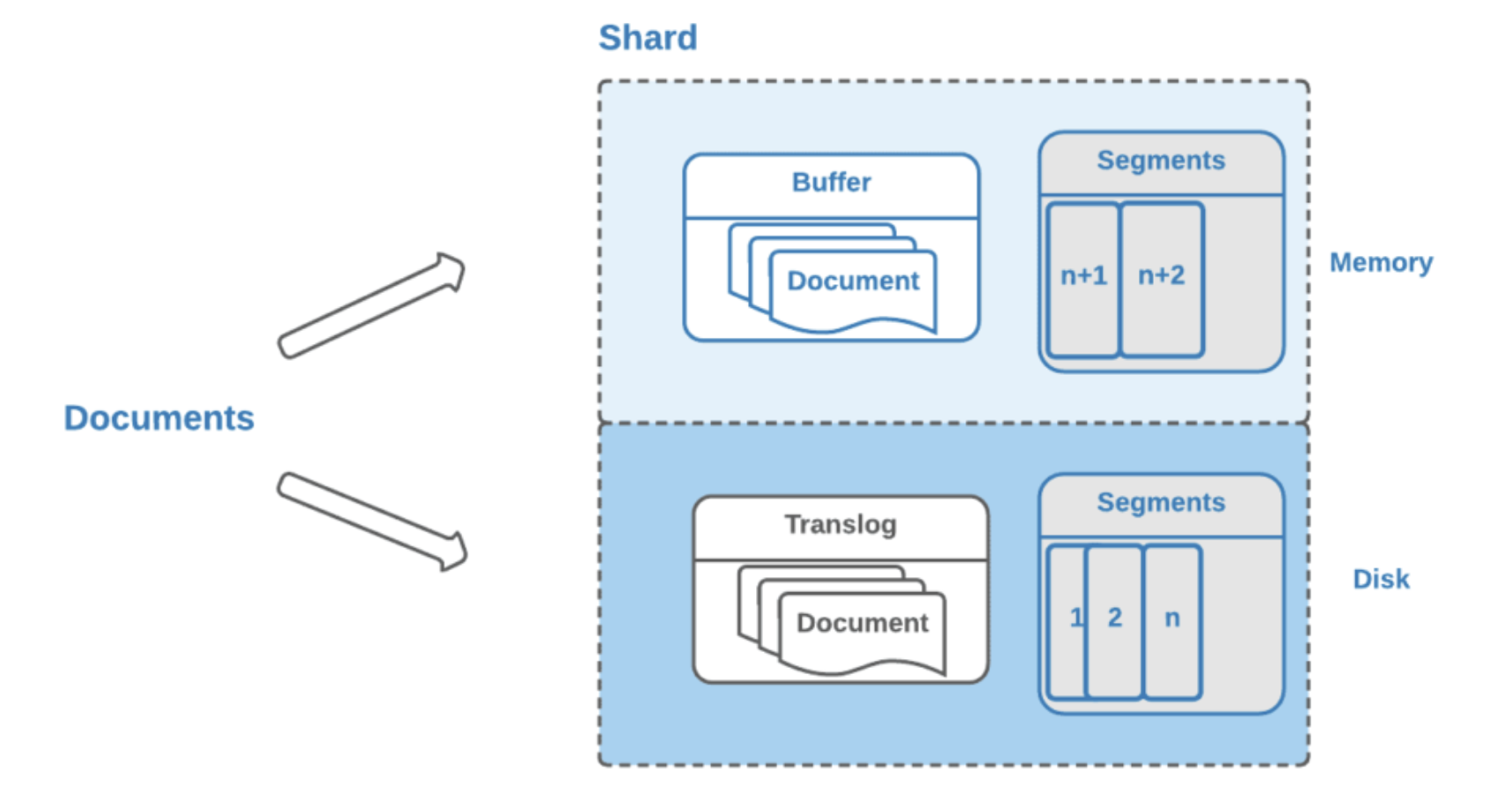

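Implementing near-real-time indexing

Refreshing into the filesystem cache

- When data is written to an ES shard, it first goes into an in-memory buffer. A refresh turns the buffer into a new segment (each segment is its own inverted index) in the filesystem cache, at which point the data becomes searchable (note that it has not yet been written to disk).

- By default ES refreshes every 1 second (a refresh writes the buffer's contents into the filesystem cache as a new segment). In 7.x, shards that have not received a search for 30 seconds skip these periodic refreshes unless refresh_interval is set explicitly.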

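Writing the translog for fault tolerance

- While data is written to the in-memory buffer, the operation is also appended to the translog. If a failure occurs before the data has been flushed to disk, ES replays the translog to recover it. Once the segments in the filesystem cache have been flushed to disk, the translog is cleared.

Flushing to disk

- A flush writes the segments in the filesystem cache to disk. The classic description is a flush every 30 minutes; in ES 7.x a flush is mainly triggered once the translog grows past index.translog.flush_threshold_size (512mb by default).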
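The refresh / translog / flush cycle described above can be modelled as a toy class. This is a deliberately simplified sketch: real segments are Lucene files, the translog is fsynced according to `index.translog.durability`, and real crash recovery is more involved; `ToyShard` and its methods are hypothetical.

```python
from typing import List


class ToyShard:
    """Toy model of one shard's write path (not how Lucene really stores data)."""

    def __init__(self) -> None:
        self.buffer: List[str] = []          # in-memory indexing buffer, not searchable
        self.translog: List[str] = []        # operations since the last flush
        self.fs_cache: List[List[str]] = []  # segments in the filesystem cache, searchable
        self.disk: List[List[str]] = []      # segments persisted to disk

    def index(self, doc: str) -> None:
        self.buffer.append(doc)
        self.translog.append(doc)  # logged at the same time as the buffer write

    def refresh(self) -> None:
        # Turn the buffer into a new segment in the filesystem cache
        if self.buffer:
            self.fs_cache.append(list(self.buffer))
            self.buffer.clear()

    def flush(self) -> None:
        self.refresh()
        self.disk.extend(self.fs_cache)  # persist cached segments
        self.fs_cache.clear()
        self.translog.clear()            # safe now: everything is on disk

    def search(self) -> List[str]:
        # Only segments are searchable; the buffer is not
        return [doc for seg in self.disk + self.fs_cache for doc in seg]

    def crash_and_recover(self) -> None:
        # Simulate losing memory and the filesystem cache (e.g. power loss),
        # then rebuild by replaying the translog
        self.buffer, self.fs_cache = [], []
        pending, self.translog = self.translog, []
        for doc in pending:
            self.index(doc)
        self.refresh()


shard = ToyShard()
shard.index("doc-1")
print(shard.search())  # -> [] (not visible before a refresh)
shard.refresh()
print(shard.search())  # -> ['doc-1']
```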

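Segment merging

- When there are too many segments, ES periodically merges several segments into a larger one to reduce the I/O cost of queries. This is the stage where ES physically removes documents that were deleted earlier (until then they are only marked as deleted).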
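A toy illustration of why deleted documents only disappear physically at merge time: before the merge they still occupy their segments and are filtered out at search time. The segments are plain lists of ids, and `live_docs` and `merge_segments` are hypothetical helpers (Lucene really tracks deletions with a per-segment live-docs bitset).

```python
from typing import List, Set


def live_docs(segments: List[List[str]], deleted: Set[str]) -> List[str]:
    """Before a merge: deleted docs still sit in their segments and are only filtered at search time."""
    return [doc for seg in segments for doc in seg if doc not in deleted]


def merge_segments(segments: List[List[str]], deleted: Set[str]) -> List[List[str]]:
    """Merge many small segments into one, physically dropping deleted docs."""
    return [live_docs(segments, deleted)]


segments = [["1", "2"], ["3"], ["4", "5"]]
deleted = {"2", "4"}
merged = merge_segments(segments, deleted)
print(sum(len(s) for s in segments), "stored docs before,", sum(len(s) for s in merged), "after")
```

Search results are the same before and after the merge; only the storage (and the number of segments to visit per query) shrinks.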

Summary

ES supports SQL

through the native REST API

and through the Python elasticsearch client

Native API

- paginated query / translating SQL to DSL / match query

-- Paginated (LIMIT) query
GET /_sql?format=txt
{
  "query": "SELECT * FROM job_idx limit 1"
}

-- Translate SQL into DSL
GET /_sql/translate
{
  "query": "SELECT * FROM job_idx limit 1"
}

-- Match query
GET /_sql?format=txt
{
  "query": "select * from job_idx where MATCH(title, 'hadoop') or MATCH(jd, 'hadoop') limit 10"
}

Python client

# Run an SQL query
res_sql = es.sql.query(body={"query": "select payway, count(*) from order_idx group by payway"})
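The SQL endpoint's JSON response holds a `columns` list (name and type of each column) and a `rows` list of value arrays. A small hypothetical helper turns that into a list of dicts; the sample response below is made up for illustration.

```python
from typing import Any, Dict, List


def sql_rows_to_dicts(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Zip ES SQL 'columns' names with each entry of 'rows'."""
    names = [col["name"] for col in response["columns"]]
    return [dict(zip(names, row)) for row in response["rows"]]


# Made-up response in the shape returned by the _sql endpoint (JSON format)
sample = {
    "columns": [{"name": "payway", "type": "byte"}, {"name": "count(*)", "type": "long"}],
    "rows": [[1, 10], [2, 5]],
}
print(sql_rows_to_dicts(sample))
```

With the client above this would be `sql_rows_to_dicts(res_sql)`. If a result is paged with a cursor, later pages may carry only `rows`, so keep the column names from the first page.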

Example

Create the index (with the REST API, e.g. from a Chrome or VS Code extension)

PUT /order_idx/
{
  "mappings": {
    "properties": {
      "id": {
        "type": "keyword",
        "store": true
      },
      "status": {
        "type": "keyword",
        "store": true
      },
      "pay_money": {
        "type": "double",
        "store": true
      },
      "payway": {
        "type": "byte",
        "store": true
      },
      "userid": {
        "type": "keyword",
        "store": true
      },
      "operation_date": {
        "type": "date",
        "format": "yyyy-MM-dd HH:mm:ss",
        "store": true
      },
      "category": {
        "type": "keyword",
        "store": true
      }
    }
  }
}

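Import test data

- First put order_data.json in the root user's home directory on the Linux machine

- Then run cd ~ (since you are logged in as root, this takes you to /root)

- Run the following command (afterwards the order_idx index in Elasticsearch will contain the data)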

curl -H "Content-Type: application/json" -XPOST "up01:9200/order_idx/_bulk?pretty&refresh" --data-binary "@order_data.json"
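The `_bulk` endpoint expects newline-delimited JSON: an action line followed by the document source line, with the whole body ending in a newline. Since the index is already given in the URL (`order_idx/_bulk`), the action line can omit `_index`. A hypothetical generator for a file like `order_data.json` (the sample document values are invented):

```python
import json
from typing import Iterable, Optional


def to_bulk_ndjson(docs: Iterable[dict], id_field: Optional[str] = None) -> str:
    """Build a _bulk request body: one action line + one source line per document."""
    lines = []
    for doc in docs:
        action: dict = {"index": {}}
        if id_field is not None:
            action["index"]["_id"] = str(doc[id_field])  # reuse a data field as _id
        lines.append(json.dumps(action))
        lines.append(json.dumps(doc, ensure_ascii=False))
    # The bulk API requires the body to end with a newline
    return "\n".join(lines) + "\n" if lines else ""


# Hypothetical sample order using the fields from the order_idx mapping above
orders = [
    {"id": "1", "status": "paid", "pay_money": 99.5, "payway": 1,
     "userid": "u1", "operation_date": "2022-06-20 10:00:00", "category": "phone"},
]
print(to_bulk_ndjson(orders, id_field="id"), end="")
```

To produce the file, write the returned string with `open("order_data.json", "w", encoding="utf-8")`; passing `id_field` makes re-imports overwrite documents instead of duplicating them.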

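Count orders per payment method

Elasticsearch SQL currently has some limitations: FROM supports only a single table, JOIN is not supported, and more complex subqueries are not supported either. Somewhat more complex functionality therefore still has to be implemented with the DSL.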

-- SQL
GET /_sql?format=txt
{
  "query": "select payway, count(*) as order_cnt from order_idx group by payway"
}

-- DSL
GET /order_idx/_search
{
  "size": 0,
  "aggs": {
    "group_by_state": {
      "terms": {
        "field": "payway"
      }
    }
  }
}
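The DSL query is a `terms` aggregation: `"size": 0` suppresses the hits, and the counts come back under `aggregations.<name>.buckets`, each bucket holding a `key` and a `doc_count`. A sketch of building that body and reading the buckets in Python; the helper names are hypothetical and the response in the usage is made up. Note that a terms aggregation returns only the top 10 buckets by default, so set `size` if there may be more payment methods.

```python
from collections import Counter
from typing import Any, Dict, List


def payway_count_body(agg_name: str = "group_by_state", size: int = 10) -> Dict[str, Any]:
    """Request body equivalent to the DSL above, with an explicit bucket count."""
    return {"size": 0, "aggs": {agg_name: {"terms": {"field": "payway", "size": size}}}}


def buckets_to_counts(response: Dict[str, Any], agg_name: str = "group_by_state") -> Dict[Any, int]:
    """Turn terms-aggregation buckets into {payway: order_count}."""
    buckets = response["aggregations"][agg_name]["buckets"]
    return {b["key"]: b["doc_count"] for b in buckets}


def local_counts(docs: List[Dict[str, Any]]) -> Dict[Any, int]:
    """What the terms aggregation computes, done locally for comparison."""
    return dict(Counter(d["payway"] for d in docs))


fake_response = {"aggregations": {"group_by_state": {"buckets": [
    {"key": 1, "doc_count": 3}, {"key": 0, "doc_count": 2}]}}}
print(buckets_to_counts(fake_response))
```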

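Integrating Elasticsearch with Hive

What does integrating with Hive mean? (Think about what two data stores can do with each other: move data back and forth.) In practice it means importing Hive table data into Elasticsearch.

Creating the ES tables (as Hive external tables)

After a table is created, querying ES (e.g. with the ES browser plugin) will not show it yet; the index (table) appears in ES only after data has been inserted into the table.

Before creating the tables, run the following in DataGrip or in the Hive CLI (run it in the same place where you create the tables, since the jar is added per session):

add jar hdfs:///libs/es-hadoop/elasticsearch-hadoop-7.10.2.jar;

- Create the ES tables from Hive, specifying the storage handler and the table properties: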

create external table XXX (...)
stored by 'org.elasticsearch.hadoop.hive.EsStorageHandler'  -- the es-hadoop class that handles the data transfer
tblproperties(
  'es.resource'='tfec_tbl_goods',              -- the ES index to write the data into
  'es.nodes'='up01:9200',                       -- address of the ES cluster
  'es.index.auto.create'='TRUE',                -- create the index automatically if it does not exist in ES
  'es.index.refresh_interval' = '-1',           -- turn off refresh: do not build searchable segments while importing
  'es.index.number_of_replicas' = '0',          -- number of replicas; only takes effect on ES 8, has no effect on the current ES 7
  'es.batch.write.retry.count' = '6',           -- number of retries when a transfer fails
  'es.batch.write.retry.wait' = '60s',          -- how long to wait without a response before retrying (here 60s, then reconnect)
  'es.mapping.names' = 'id:id,siteid:siteid'    -- Hive-to-ES field mapping; not needed when the names match, since ES then uses the Hive column names and types by default; to rename: hive_col1:es_field1,hive_col2:es_field2,...
);
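The `es.mapping.names` value is a comma-separated list of `hive_column:es_field` pairs, and for wide tables hand-typed strings of this length are easy to get wrong. A hypothetical Python helper that generates it from the column list, keeping names unchanged unless a rename is given:

```python
from typing import Dict, List, Optional


def build_mapping_names(columns: List[str], renames: Optional[Dict[str, str]] = None) -> str:
    """Build an es.mapping.names value: 'hive_col:es_field,...' in column order."""
    renames = renames or {}
    unknown = set(renames) - set(columns)
    if unknown:
        # Catch typos in rename keys instead of silently ignoring them
        raise ValueError(f"renames refer to unknown columns: {sorted(unknown)}")
    return ",".join(f"{col}:{renames.get(col, col)}" for col in columns)


log_columns = ["id", "log_id", "remote_ip", "site_global_ticket", "site_global_session",
               "global_user_id", "cookie_text", "user_agent", "ref_url", "loc_url", "log_time"]
print(build_mapping_names(log_columns))
```

Paste the printed string into the `tblproperties` clause; when every name maps to itself, the property can also simply be omitted.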

-- Goods table

create external table if not exists tags_tfec_userprofile.tfec_tbl_goods(

`id` bigint,

`siteid` bigint,

`istest` boolean comment '是否是测试网单',

`hasread` boolean comment '是否已读,测试字段',

`supportonedaylimit` boolean comment '是否支持24小时限时达',

`orderid` bigint comment '订单ID',

`cordersn` string comment 'child order sn 子订单编码 C0919293',

`isbook` boolean comment '是否是预订网单',

`cpaymentstatus` int comment '子订单付款状态',

`cpaytime` bigint comment '子订单付款时间',

`producttype` string comment '商品类型',

`productid` bigint comment '抽象商品id(可能是商品规格,也可能是套装,由商品类型决定)',

`productname` string comment '商品名称:可能是商品名称加颜色规格,也可能是套装名称',

`sku` string comment '货号',

`price` double comment '商品单价',

`number` int comment '数量',

`lockednumber` bigint comment '曾经锁定的库存数量',

`unlockednumber` bigint comment '经解锁的库存数量',

`productamount` double comment '此字段专为同步外部订单而加,商品总金额=price*number+shippingFee-优惠金额,但优惠金额没在本系统存储',

`balanceamount` double comment '余额扣减',

`couponamount` double comment '优惠券抵扣金额',

`esamount` double comment '节能补贴金额',

`giftcardnumberid` bigint comment '礼品卡号ID,关联GiftCardNumbers表的主键',

`usedgiftcardamount` double comment '礼品卡抵用的金额',

`couponlogid` bigint comment '使用的优惠券记录ID',

`activityprice` double comment '活动价,当有活动价时price的值来源于activityPrice',

`activityid` bigint comment '活动ID',

`cateid` int comment '分类ID',

`brandid` int comment '品牌ID',

`netpointid` int comment '网点id',

`shippingfee` double comment '配送费用',

`settlementstatus` boolean comment '结算状态0 未结算 1已结算',

`receiptorrejecttime` bigint comment '确认收货时间或拒绝收货时间',

`iswmssku` boolean comment '是否淘宝小家电',

`scode` string comment '库位编码',

`tscode` string comment '转运库房编码',

`tsshippingtime` int comment '转运时效(小时)',

`status` int comment '状态',

`productsn` string comment '商品条形码',

`invoicenumber` string comment '运单号',

`expressname` string comment '快递公司',

`invoiceexpressnumber` string comment '发票快递单号',

`postman` string comment '送货人',

`postmanphone` string comment '送货人电话',

`isnotice` int comment '是否开启预警',

`noticetype` int,

`noticeremark` string,

`noticetime` string comment '预警时间',

`shippingtime` int comment '发货时间',

`lessordersn` string comment '订单号',

`waitgetlesshippinginfo` boolean comment '是否等待获取LES物流配送节点信息',

`getlesshippingcount` bigint comment '已获取LES配送节点信息的次数',

`outping` string comment '出库凭证',

`lessshiptime` int comment 'less出库时间',

`closetime` bigint comment '网单完成关闭或取消关闭时间',

`isreceipt` bigint comment '是否需要发票',

`ismakereceipt` int comment '开票状态',

`receiptnum` string comment '发票号',

`receiptaddtime` string comment '开票时间',

`makereceipttype` tinyint comment '开票类型 0:初始值 1:库房开票 2:共享开票',

`shippingmode` string comment '物流模式,值为B2B2C或B2C',

`lasttimeforshippingmode` bigint comment '最后修改物流模式的时间',

`lasteditorforshippingmode` string comment '最后修改物流模式的管理员',

`systemremark` string comment '系统备注,不给用户显示',

`tongshuaiworkid` int comment '统帅定制作品ID',

`orderpromotionid` bigint comment '下单立减活动ID',

`orderpromotionamount` double comment '下单立减金额',

`externalsalesettingid` bigint comment '淘宝套装设置ID',

`recommendationid` bigint comment '推荐购买ID',

`hassendalertnum` boolean comment '是否已发送了购买数据报警邮件(短信)',

`isnolimitstockproduct` boolean comment '是否是无限制库存量的商品',

`hpregisterdate` int comment 'HP注册时间',

`hpfaildate` int comment 'HP派工失败时间',

`hpfinishdate` int comment 'HP派工成功时间',

`hpreservationdate` int comment 'HP回传预约送货时间',

`shippingopporunity` tinyint comment '活动订单发货时机,0:定金发货 1:尾款发货',

`istimeoutfree` tinyint comment '是否超时免单 0:未设置 1:是 2:否',

`itemshareamount` double comment '订单优惠价格分摊',

`lessshiptintime` int comment 'less转运入库时间',

`lessshiptouttime` int comment 'less转运出库时间',

`cbsseccode` string comment 'cbs库位',

`points` int comment '网单使用积分',

`modified` string comment '最后更新时间',

`splitflag` tinyint comment '拆单标志,0:未拆单;1:拆单后旧单;2:拆单后新单',

`splitrelatecordersn` string comment '拆单关联单号',

`channelid` tinyint comment '区分EP和商城',

`activityid2` int comment '运营活动id',

`pdorderstatus` int comment '日日单状态',

`omsordersn` string comment '集团OMS单号',

`couponcode` string comment '优惠码编码',

`couponcodevalue` double comment '优惠码优惠金额',

`storeid` bigint comment '店铺ID',

`storetype` tinyint comment '店铺类型',

`stocktype` string,

`o2otype` tinyint comment 'o2o网单类型 1:非O2O网单 2:线下用户分销商城 3:商城分销旗舰店 4:创客',

`brokeragetype` string,

`ogcolor` string comment '算法预留字段'

) comment '商品详情es外部表'

stored by 'org.elasticsearch.hadoop.hive.EsStorageHandler'

tblproperties('es.resource'='tfec_tbl_goods/_doc',

'es.nodes'='up01:9200',

'es.index.auto.create'='TRUE',

'es.index.refresh_interval' = '-1',

'es.index.number_of_replicas' = '0',

'es.batch.write.retry.count' = '6',

'es.batch.write.retry.wait' = '60s',

'es.mapping.names' = 'id:id,siteid:siteid,istest:istest,hasread:hasread,supportonedaylimit:supportonedaylimit,orderid:orderid,cordersn:cordersn,isbook:isbook,cpaymentstatus:cpaymentstatus,cpaytime:cpaytime,producttype:producttype,productid:productid,productname:productname,sku:sku,price:price,number:number,lockednumber:lockednumber,unlockednumber:unlockednumber,productamount:productamount,balanceamount:balanceamount,couponamount:couponamount,esamount:esamount,giftcardnumberid:giftcardnumberid,usedgiftcardamount:usedgiftcardamount,couponlogid:couponlogid,activityprice:activityprice,activityid:activityid,cateid:cateid,brandid:brandid,netpointid:netpointid,shippingfee:shippingfee,settlementstatus:settlementstatus,receiptorrejecttime:receiptorrejecttime,iswmssku:iswmssku,scode:scode,tscode:tscode,tsshippingtime:tsshippingtime,status:status,productsn:productsn,invoicenumber:invoicenumber,expressname:expressname,invoiceexpressnumber:invoiceexpressnumber,postman:postman,postmanphone:postmanphone,isnotice:isnotice,noticetype:noticetype,noticeremark:noticeremark,noticetime:noticetime,shippingtime:shippingtime,lessordersn:lessordersn,waitgetlesshippinginfo:waitgetlesshippinginfo,getlesshippingcount:getlesshippingcount,outping:outping,lessshiptime:lessshiptime,closetime:closetime,isreceipt:isreceipt,ismakereceipt:ismakereceipt,receiptnum:receiptnum,receiptaddtime:receiptaddtime,makereceipttype:makereceipttype,shippingmode:shippingmode,lasttimeforshippingmode:lasttimeforshippingmode,lasteditorforshippingmode:lasteditorforshippingmode,systemremark:systemremark,tongshuaiworkid:tongshuaiworkid,orderpromotionid:orderpromotionid,orderpromotionamount:orderpromotionamount,externalsalesettingid:externalsalesettingid,recommendationid:recommendationid,hassendalertnum:hassendalertnum,isnolimitstockproduct:isnolimitstockproduct,hpregisterdate:hpregisterdate,hpfaildate:hpfaildate,hpfinishdate:hpfinishdate,hpreservationdate:hpreservationdate,shippingopporunity:shippingopporunity,istimeoutfree:istimeoutfree,itemshareamount:itemshareamount,lessshiptintime:lessshiptintime,lessshiptouttime:lessshiptouttime,cbsseccode:cbsseccode,points:points,modified:modified,splitflag:splitflag,splitrelatecordersn:splitrelatecordersn,channelid:channelid,activityid2:activityid2,pdorderstatus:pdorderstatus,omsordersn:omsordersn,couponcode:couponcode,couponcodevalue:couponcodevalue,storeid:storeid,storetype:storetype,stocktype:stocktype,o2otype:o2otype,brokeragetype:brokeragetype,ogcolor:ogcolor'

);

-- Orders table

create external table if not exists tags_tfec_userprofile.tfec_tbl_orders(

`id` bigint,

`siteid` bigint,

`istest` boolean comment '是否是测试订单',

`hassync` boolean comment '是否已同步(临时添加)',

`isbackend` tinyint comment '是否为后台添加的订单',

`isbook` tinyint,

`iscod` boolean comment '是否是货到付款订单',

`notautoconfirm` boolean comment '是否是非自动确认订单',

`ispackage` boolean comment '是否为套装订单',

`packageid` bigint comment '套装ID',

`ordersn` string comment '订单号',

`relationordersn` string comment '关联订单编号',

`memberid` bigint comment '会员ID',

`predictid` bigint comment '会员购买预测ID',

`memberemail` string comment '会员邮件',

`addtime` bigint,

`synctime` bigint comment '同步到此表中的时间',

`orderstatus` int comment '订单状态',

`paytime` bigint comment '在线付款时间',

`paymentstatus` int comment '付款状态,0:买家未付款 1:买家已付款',

`receiptconsignee` string comment '发票收件人',

`receiptaddress` string comment '发票地址',

`receiptzipcode` string comment '发票邮编',

`receiptmobile` string comment '发票联系电话',

`productamount` double comment '商品金额,等于订单中所有的商品的单价乘以数量之和',

`orderamount` double comment '订单总金额,等于商品总金额+运费',

`paidbalance` double comment '金额账户支付总金额',

`giftcardamount` double comment '礼品卡抵用金额',

`paidamount` double comment '已支付金额',

`shippingamount` double comment '淘宝运费',

`totalesamount` double comment '网单中总的节能补贴金额之和',

`usedcustomerbalanceamount` double comment '使用的客户的余额支付金额',

`customerid` bigint comment '用余额支付的客户ID',

`bestshippingtime` string comment '最佳配送时间描述',

`paymentcode` string comment '支付方式code',

`paybankcode` string comment '网银代码',

`paymentname` string comment '支付方式名称',

`consignee` string comment '收货人',

`originregionname` string comment '原淘宝收货地址信息',

`originaddress` string comment '原淘宝收货人详细收货信息',

`province` bigint comment '收货地址中国省份',

`city` bigint comment '收货地址中的城市',

`region` bigint comment '收货地址中城市中的区',

`street` bigint comment '街道ID',

`markbuilding` int comment '标志建筑物',

`poiid` string comment '标建ID',

`poiname` string comment '标建名称',

`regionname` string comment '地区名称(如:北京 北京 昌平区 兴寿镇)',

`address` string comment '收货地址中用户输入的地址,一般是区以下的详细地址',

`zipcode` string comment '收货地址中的邮编',

`mobile` string comment '收货人手机号',

`phone` string comment '收货人固定电话号',

`receiptinfo` string comment '发票信息,序列化数组array(title =>.., receiptType =>..,needReceipt => ..,companyName =>..,taxSpotNum =>..,regAddress=>..,regPhone=>..,bank=>..,bankAccount=>..)',

`delayshiptime` bigint comment '延迟发货日期',

`remark` string comment '订单备注',

`bankcode` string comment '银行代码,用于银行直链支付',

`agent` string comment '处理人',

`confirmtime` int comment '确认时间',

`firstconfirmtime` bigint comment '首次确认时间',

`firstconfirmperson` string comment '第一次确认人',

`finishtime` int comment '订单完成时间',

`tradesn` string comment '在线支付交易流水号',

`signcode` string comment '收货确认码',

`source` string comment '订单来源',

`sourceordersn` string comment '外部订单号',

`onedaylimit` tinyint comment '是否支持24小时限时达',

`logisticsmanner` int comment '物流评价',

`aftersalemanner` int comment '售后评价',

`personmanner` int comment '人员态度',

`visitremark` string comment '回访备注',

`visittime` int comment '回访时间',

`visitperson` string comment '回访人',

`sellpeople` string comment '销售代表',

`sellpeoplemanner` int comment '销售代表服务态度',

`ordertype` tinyint comment '订单类型 默认:0 团购预付款:1 团购正式单:2',

`hasreadtaobaoordercomment` boolean comment '是否已读取过淘宝订单评论',

`memberinvoiceid` bigint comment '订单发票ID,MemberInvoices表的主键',

`taobaogroupid` bigint comment '淘宝万人团活动ID',

`tradetype` string comment '交易类型,值参考淘宝',

`steptradestatus` string comment '分阶段付款的订单状态,值参考淘宝',

`steppaidfee` double comment '分阶段付款的已付金额',

`depositamount` double comment '定金应付金额',

`balanceamount` double comment '尾款应付金额',

`autocanceldays` bigint comment '未付款过期的天数',

`isnolimitstockorder` boolean comment '是否是无库存限制订单',

`ccborderreceivedlogid` bigint comment '建行订单接收日志ID',

`ip` string comment '订单来源IP,针对商城前台订单',

`isgiftcardorder` boolean comment '是否为礼品卡订单',

`giftcarddownloadpassword` string comment '礼品卡下载密码',

`giftcardfindmobile` string comment '礼品卡密码找回手机号',

`autoconfirmnum` bigint comment '已自动确认的次数',

`codconfirmperson` string comment '货到付款确认人',

`codconfirmtime` int comment '货到付款确认时间',

`codconfirmremark` string comment '货到付款确认备注',

`codconfirmstate` boolean comment '货到侍确认状态0无需未确认,1待确认,2确认通过可以发货,3确认无效,订单可以取消',

`paymentnoticeurl` string comment '付款结果通知URL',

`addresslon` double comment '地址经度',

`addresslat` double comment '地址纬度',

`smconfirmstatus` tinyint comment '标建确认状态。1 = 初始状态;2 = 已发HP,等待确认;3 = 待人工处理;4 = 待自动处理;5 = 已确认',

`smconfirmtime` int comment '请求发送HP时间,格式为时间戳',

`smmanualtime` int comment '转人工确认时间',

`smmanualremark` string comment '转人工确认备注',

`istogether` tinyint comment '货票通行',

`isnotconfirm` boolean comment '是否是无需确认的订单',

`tailpaytime` int comment '尾款付款时间',

`points` int comment '网单使用积分',

`modified` string comment '最后更新时间',

`channelid` tinyint comment '区分EP和商城',

`isproducedaily` int comment '是否日日单(1:是,0:否)',

`couponcode` string comment '优惠码编码',

`couponcodevalue` double comment '优惠码优惠金额',

`ckcode` string

) comment '订单es外部表'

stored by 'org.elasticsearch.hadoop.hive.EsStorageHandler'

tblproperties('es.resource'='tfec_tbl_orders/_doc',

'es.nodes'='up01:9200',

'es.index.auto.create'='TRUE',

'es.index.refresh_interval' = '-1',

'es.index.number_of_replicas' = '0',

'es.batch.write.retry.count' = '6',

'es.batch.write.retry.wait' = '60s',

'es.mapping.names' = 'id:id,siteid:siteid,istest:istest,hassync:hassync,isbackend:isbackend,isbook:isbook,iscod:iscod,notautoconfirm:notautoconfirm,ispackage:ispackage,packageid:packageid,ordersn:ordersn,relationordersn:relationordersn,memberid:memberid,predictid:predictid,memberemail:memberemail,addtime:addtime,synctime:synctime,orderstatus:orderstatus,paytime:paytime,paymentstatus:paymentstatus,receiptconsignee:receiptconsignee,receiptaddress:receiptaddress,receiptzipcode:receiptzipcode,receiptmobile:receiptmobile,productamount:productamount,orderamount:orderamount,paidbalance:paidbalance,giftcardamount:giftcardamount,paidamount:paidamount,shippingamount:shippingamount,totalesamount:totalesamount,usedcustomerbalanceamount:usedcustomerbalanceamount,customerid:customerid,bestshippingtime:bestshippingtime,paymentcode:paymentcode,paybankcode:paybankcode,paymentname:paymentname,consignee:consignee,originregionname:originregionname,originaddress:originaddress,province:province,city:city,region:region,street:street,markbuilding:markbuilding,poiid:poiid,poiname:poiname,regionname:regionname,address:address,zipcode:zipcode,mobile:mobile,phone:phone,receiptinfo:receiptinfo,delayshiptime:delayshiptime,remark:remark,bankcode:bankcode,agent:agent,confirmtime:confirmtime,firstconfirmtime:firstconfirmtime,firstconfirmperson:firstconfirmperson,finishtime:finishtime,tradesn:tradesn,signcode:signcode,source:source,sourceordersn:sourceordersn,onedaylimit:onedaylimit,logisticsmanner:logisticsmanner,aftersalemanner:aftersalemanner,personmanner:personmanner,visitremark:visitremark,visittime:visittime,visitperson:visitperson,sellpeople:sellpeople,sellpeoplemanner:sellpeoplemanner,ordertype:ordertype,hasreadtaobaoordercomment:hasreadtaobaoordercomment,memberinvoiceid:memberinvoiceid,taobaogroupid:taobaogroupid,tradetype:tradetype,steptradestatus:steptradestatus,steppaidfee:steppaidfee,depositamount:depositamount,balanceamount:balanceamount,autocanceldays:autocanceldays,isnolimitstockorder:isnolimitstockorder,ccborderreceivedlogid:ccborderreceivedlogid,ip:ip,isgiftcardorder:isgiftcardorder,giftcarddownloadpassword:giftcarddownloadpassword,giftcardfindmobile:giftcardfindmobile,autoconfirmnum:autoconfirmnum,codconfirmperson:codconfirmperson,codconfirmtime:codconfirmtime,codconfirmremark:codconfirmremark,codconfirmstate:codconfirmstate,paymentnoticeurl:paymentnoticeurl,addresslon:addresslon,addresslat:addresslat,smconfirmstatus:smconfirmstatus,smconfirmtime:smconfirmtime,smmanualtime:smmanualtime,smmanualremark:smmanualremark,istogether:istogether,isnotconfirm:isnotconfirm,tailpaytime:tailpaytime,points:points,modified:modified,channelid:channelid,isproducedaily:isproducedaily,couponcode:couponcode,couponcodevalue:couponcodevalue,ckcode:ckcode'

);

-- Users table

create external table if not exists tags_tfec_userprofile.tfec_tbl_users(

`id` bigint,

`siteid` bigint,

`avatarimagefileid` string,

`email` string,

`username` string comment '用户名',

`password` string comment '密码',

`salt` string comment '扰码',

`registertime` bigint comment '注册时间',

`lastlogintime` bigint comment '最后登录时间',

`lastloginip` string comment '最后登录ip',

`memberrankid` bigint comment '特殊会员等级id,0表示非特殊会员等级',

`bigcustomerid` bigint comment '所属的大客户ID',

`lastaddressid` bigint comment '上次使用的收货地址',

`lastpaymentcode` string comment '上次使用的支付方式',

`gender` tinyint comment '性别, 0:保密 1:男 2:女',

`birthday` string comment '生日',

`qq` string comment '',

`job` string comment '职业;1学生、2公务员、3军人、4警察、5教师、6白领',

`mobile` string comment '手机',

`politicalface` bigint comment '政治面貌:1群众、2党员、3无党派人士',

`nationality` string comment '国籍:1中国大陆、2中国香港、3中国澳门、4中国台湾、5其他',

`validatecode` string comment '找回密码时的验证code',

`pwderrcount` tinyint comment '密码输入错误次数',

`source` string comment '会员来源',

`marriage` string comment '婚姻状况:1未婚、2已婚、3离异',

`money` double comment '账户余额',

`moneypwd` string comment '余额支付密码',

`isemailverify` boolean comment '是否验证email',

`issmsverify` boolean comment '是否验证短信',

`smsverifycode` string comment '短信验证码',

`emailverifycode` string comment '邮件验证码',

`verifysendcoupon` boolean comment '是否验证发送优惠券',

`canreceiveemail` boolean comment '是否接收邮件',

`modified` string comment '最后更新时间',

`channelid` tinyint,

`grade_id` bigint comment '等级ID',

`nick_name` string comment '昵称',

`is_blacklist` boolean comment '是否黑名单,0:非黑名单 1:黑名单'

) comment '用户es外部表'

stored by 'org.elasticsearch.hadoop.hive.EsStorageHandler'

tblproperties('es.resource'='tfec_tbl_users/_doc',

'es.nodes'='up01:9200',

'es.index.auto.create'='TRUE',

'es.index.refresh_interval' = '-1',

'es.index.number_of_replicas' = '0',

'es.batch.write.retry.count' = '6',

'es.batch.write.retry.wait' = '60s',

'es.mapping.names' = 'id:id,siteid:siteid,avatarimagefileid:avatarimagefileid,email:email,username:username,password:password,salt:salt,registertime:registertime,lastlogintime:lastlogintime,lastloginip:lastloginip,memberrankid:memberrankid,bigcustomerid:bigcustomerid,lastaddressid:lastaddressid,lastpaymentcode:lastpaymentcode,gender:gender,birthday:birthday,qq:qq,job:job,mobile:mobile,politicalface:politicalface,nationality:nationality,validatecode:validatecode,pwderrcount:pwderrcount,source:source,marriage:marriage,money:money,moneypwd:moneypwd,isemailverify:isemailverify,issmsverify:issmsverify,smsverifycode:smsverifycode,emailverifycode:emailverifycode,verifysendcoupon:verifysendcoupon,canreceiveemail:canreceiveemail,modified:modified,channelid:channelid,grade_id:grade_id,nick_name:nick_name,is_blacklist:is_blacklist'

);

-- Logs table

create external table if not exists tags_tfec_userprofile.tfec_tbl_logs(

`id` bigint,

`log_id` string comment '日志ID',

`remote_ip` string comment '访问IP',

`site_global_ticket` string comment '访问的站点入口',

`site_global_session` string comment '站点会话信息',

`global_user_id` string comment '用户ID',

`cookie_text` string comment '客户端信息',

`user_agent` string comment '用户客户端详细信息',

`ref_url` string comment '访问的关联url',

`loc_url` string comment '访问的本地url',

`log_time` string comment '访问的时间'

) comment '日志es外部表'

stored by 'org.elasticsearch.hadoop.hive.EsStorageHandler'

tblproperties('es.resource'='tfec_tbl_logs/_doc',

'es.nodes'='up01:9200',

'es.index.auto.create'='TRUE',

'es.index.refresh_interval' = '-1',

'es.index.number_of_replicas' = '0',

'es.batch.write.retry.count' = '6',

'es.batch.write.retry.wait' = '60s',

'es.mapping.names' = 'id:id,log_id:log_id,remote_ip:remote_ip,site_global_ticket:site_global_ticket,site_global_session:site_global_session,global_user_id:global_user_id,cookie_text:cookie_text,user_agent:user_agent,ref_url:ref_url,loc_url:loc_url,log_time:log_time'

);

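Inserting data into the ES tables

An ES table is just a Hive external table, so a plain insert overwrite table is all it takes.

Add the jar

-- Import data from a Hive table into ES
-- Load the es-hadoop dependency jar
add jar hdfs:///libs/es-hadoop/elasticsearch-hadoop-7.10.2.jar;
insert overwrite table external_table select columns from hive_table;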

-- Incremental import

-- insert into table insurance_userprofile.claim_info select pol_no, user_id, buy_datetime, insur_code, claim_date, claim_item, claim_mnt from insurance_ods.claim_info where dt = '2022-06-20';

-- Full import

-- Insert data into the tfec_tbl_goods (goods) table

insert overwrite table tags_tfec_userprofile.tfec_tbl_goods select id, siteid, istest, hasread, supportonedaylimit, orderid, cordersn, isbook, cpaymentstatus, cpaytime, producttype, productid, productname, sku, price, number, lockednumber, unlockednumber, productamount, balanceamount, couponamount, esamount, giftcardnumberid, usedgiftcardamount, couponlogid, activityprice, activityid, cateid, brandid, netpointid, shippingfee, settlementstatus, receiptorrejecttime, iswmssku, scode, tscode, tsshippingtime, status, productsn, invoicenumber, expressname, invoiceexpressnumber, postman, postmanphone, isnotice, noticetype, noticeremark, noticetime, shippingtime, lessordersn, waitgetlesshippinginfo, getlesshippingcount, outping, lessshiptime, closetime, isreceipt, ismakereceipt, receiptnum, receiptaddtime, makereceipttype, shippingmode, lasttimeforshippingmode, lasteditorforshippingmode, systemremark, tongshuaiworkid, orderpromotionid, orderpromotionamount, externalsalesettingid, recommendationid, hassendalertnum, isnolimitstockproduct, hpregisterdate, hpfaildate, hpfinishdate, hpreservationdate, shippingopporunity, istimeoutfree, itemshareamount, lessshiptintime, lessshiptouttime, cbsseccode, points, modified, splitflag, splitrelatecordersn, channelid, activityid2, pdorderstatus, omsordersn, couponcode, couponcodevalue, storeid, storetype, stocktype, o2otype, brokeragetype, ogcolor from tags_dat.tbl_goods;

-- Insert data into the orders table

insert overwrite table tags_tfec_userprofile.tfec_tbl_orders select id, siteid, istest, hassync, isbackend, isbook, iscod, notautoconfirm, ispackage, packageid, ordersn, relationordersn, memberid, predictid, memberemail, addtime, synctime, orderstatus, paytime, paymentstatus, receiptconsignee, receiptaddress, receiptzipcode, receiptmobile, productamount, orderamount, paidbalance, giftcardamount, paidamount, shippingamount, totalesamount, usedcustomerbalanceamount, customerid, bestshippingtime, paymentcode, paybankcode, paymentname, consignee, originregionname, originaddress, province, city, region, street, markbuilding, poiid, poiname, regionname, address, zipcode, mobile, phone, receiptinfo, delayshiptime, remark, bankcode, agent, confirmtime, firstconfirmtime, firstconfirmperson, finishtime, tradesn, signcode, source, sourceordersn, onedaylimit, logisticsmanner, aftersalemanner, personmanner, visitremark, visittime, visitperson, sellpeople, sellpeoplemanner, ordertype, hasreadtaobaoordercomment, memberinvoiceid, taobaogroupid, tradetype, steptradestatus, steppaidfee, depositamount, balanceamount, autocanceldays, isnolimitstockorder, ccborderreceivedlogid, ip, isgiftcardorder, giftcarddownloadpassword, giftcardfindmobile, autoconfirmnum, codconfirmperson, codconfirmtime, codconfirmremark, codconfirmstate, paymentnoticeurl, addresslon, addresslat, smconfirmstatus, smconfirmtime, smmanualtime, smmanualremark, istogether, isnotconfirm, tailpaytime, points, modified, channelid, isproducedaily, couponcode, couponcodevalue, ckcode from tags_dat.tbl_orders;

-- Insert data into the users table

insert overwrite table tags_tfec_userprofile.tfec_tbl_users select id, siteid, avatarimagefileid, email, username, password, salt, registertime, lastlogintime, lastloginip, memberrankid, bigcustomerid, lastaddressid, lastpaymentcode, gender, birthday, qq, job, mobile, politicalface, nationality, validatecode, pwderrcount, source, marriage, money, moneypwd, isemailverify, issmsverify, smsverifycode, emailverifycode, verifysendcoupon, canreceiveemail, modified, channelid, grade_id, nick_name, is_blacklist from tags_dat.tbl_users;

-- Insert data into the logs table

insert overwrite table tags_tfec_userprofile.tfec_tbl_logs select id, log_id, remote_ip, site_global_ticket, site_global_session, global_user_id, cookie_text, user_agent, ref_url, loc_url, log_time from tags_dat.tbl_logs;
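Each of these statements selects exactly the target table's column list, so they can be generated from one list of column names instead of being typed by hand. `insert_overwrite_sql` is a hypothetical helper; it only builds the SQL string, which you would then run in Hive after the `add jar` line.

```python
from typing import List


def insert_overwrite_sql(target: str, source: str, columns: List[str]) -> str:
    """Build 'insert overwrite table <target> select <cols> from <source>;'."""
    if not columns:
        raise ValueError("columns must not be empty")
    return f"insert overwrite table {target} select {', '.join(columns)} from {source};"


print(insert_overwrite_sql("tags_tfec_userprofile.tfec_tbl_logs", "tags_dat.tbl_logs",
                           ["id", "log_id", "remote_ip"]))
```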

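Problems when integrating ES with Hive, and their fixes

Problem: cluster health warning

Fix: set the number of replicas to 0 (replica shards that cannot be assigned, e.g. on a single-node cluster, keep the cluster status yellow)

# The ES cluster warning can be fixed with the following command (the official approach; in testing, it could not be fixed through the config file):

curl -XPUT http://192.168.88.166:9200/_settings?pretty -d '{ "index": { "number_of_replicas": 0 } }' -H "Content-Type: application/json"

Problem: duplicate data

Fix: enable upsert mode

'es.write.operation' = 'upsert'

- Upsert mode requires an id to be specified:

'es.mapping.id' = 'id'    (pick a field whose values are unique in the data to serve as _id)

- If es.mapping.id is not specified, ES auto-generates a new unique _id for every document, so re-running an import writes the same rows again.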
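Why auto-generated ids cause duplicates, while a data-derived id plus upsert does not, can be shown with a toy model in which a dict stands in for the ES index. `load` is a hypothetical helper, and the uuid-based id only mimics ES's auto-generated `_id`.

```python
import uuid
from typing import Dict, List, Optional


def load(index: Dict[str, dict], rows: List[dict], mapping_id: Optional[str] = None) -> None:
    """Simulate one Hive -> ES import run into a dict standing in for an index."""
    for row in rows:
        if mapping_id is None:
            # No es.mapping.id: every write gets a brand-new auto-generated _id
            index[uuid.uuid4().hex] = dict(row)
        else:
            # es.mapping.id + upsert: _id comes from the data, so re-runs hit the same doc
            doc_id = str(row[mapping_id])
            index.setdefault(doc_id, {}).update(row)


rows = [{"id": 1, "a": "x"}, {"id": 2, "a": "y"}]
auto: Dict[str, dict] = {}
load(auto, rows)
load(auto, rows)  # second run of the same import
keyed: Dict[str, dict] = {}
load(keyed, rows, mapping_id="id")
load(keyed, rows, mapping_id="id")
print(len(auto), "docs with auto ids,", len(keyed), "docs with es.mapping.id")
```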

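Problem: the index does not refresh (newly imported data cannot be searched)

Fix: set refresh_interval back to a positive value (the tables above were created with 'es.index.refresh_interval' = '-1' to disable refresh during the import)

PUT tfec_tbl_orders/_settings
{
  "index" : {
    "refresh_interval" : "1m"
  }
}

PUT tfec_tbl_goods/_settings
{
  "index" : {
    "refresh_interval" : "1m"
  }
}

PUT tfec_tbl_logs/_settings
{
  "index" : {
    "refresh_interval" : "1m"
  }
}

PUT tfec_tbl_users/_settings
{
  "index" : {
    "refresh_interval" : "1m"
  }
}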

Integrating Elasticsearch with Spark

In Python, use a SparkSession to read data from Elasticsearch into a DataFrame and work with it there.

First put the ES-Hadoop jar into Spark's jars directory:

/root/anaconda3/envs/pyspark_env/lib/python3.7/site-packages/pyspark/jars

If you don't have the jar, download it from Maven:

https://mvnrepository.com/artifact/org.elasticsearch/elasticsearch-hadoop

Once the data is loaded, it can be cleaned and transformed.

Demo

from pyspark.sql import SparkSession
from pyspark.sql.types import StructType, StringType, DoubleType

if __name__ == '__main__':
    # Create the Spark runtime environment
    sparkSession = SparkSession\
        .builder\
        .appName("spark read ES data")\
        .master("local[*]")\
        .config("spark.sql.shuffle.partitions", 2)\
        .getOrCreate()

    # Schema of the two fields of interest (defined for reference; not applied to the reader below)
    schema = StructType()\
        .add("userid", StringType())\
        .add("pay_money", DoubleType())

    # Connect to ES and read the order_idx index
    es_df = sparkSession\
        .read\
        .format("es")\
        .option("es.nodes", "192.168.88.166:9200")\
        .option("es.resource", "order_idx")\
        .option("es.index.read.missing.as.empty", "yes")\
        .option("es.read.field.include", "userid,pay_money,category,name")\
        .load()

    es_df.show()
    # es_df.printSchema()

    sparkSession.stop()
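The read options in the demo can also be collected in a dict and passed in one call with `DataFrameReader.options(**opts)`. This is a minimal sketch, and `es_read_options` is a hypothetical helper. It joins the field list with plain commas, matching the form the official demo uses ("userId,tagsId").

```python
from typing import Dict, Iterable


def es_read_options(nodes: str, resource: str, fields: Iterable[str],
                    missing_as_empty: bool = True) -> Dict[str, str]:
    """Collect the es-hadoop read options used in the demo into a dict."""
    return {
        "es.nodes": nodes,
        "es.resource": resource,
        "es.index.read.missing.as.empty": "yes" if missing_as_empty else "no",
        # Strip stray spaces and join with bare commas.
        "es.read.field.include": ",".join(f.strip() for f in fields),
    }


if __name__ == "__main__":
    opts = es_read_options("192.168.88.166:9200", "order_idx",
                           ["userid", "pay_money", "category", "name"])
    print(opts)
```

With a SparkSession available, usage would be `sparkSession.read.format("es").options(**opts).load()`.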

Required options for sparkSession.read.format("es").option(...):

- es.resource: name of the index to read
- es.nodes: connection address of the ES cluster

Optional options:

es.index.auto.create (default yes)

Whether elasticsearch-hadoop should create an index (if it's missing) when writing data to Elasticsearch or fail. By default, a missing index is created automatically.

---------------------------------------------------------------

es.index.read.missing.as.empty (default no)

Whether elasticsearch-hadoop will allow reading of non-existing indices (and return an empty data set) or not (and throw an exception). The default is no, so reading a missing index throws an exception.

---------------------------------------------------------------

es.query (default none)

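Holds the query used for reading data from the specified es.resource. By default it is not set/empty, meaning the entire data under the specified index/type is returned. es.query can have three forms:

uri query

using the form ?uri_query, one can specify a query string. Notice the leading ?.

query dsl

using the form query_dsl; note that the query dsl needs to start with { and end with }.

external resource

if neither of the above matches, elasticsearch-hadoop tries to interpret the parameter as a path to a resource (for example on HDFS) that contains a uri query or a query dsl.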
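es.query accepts either a URI query string with a leading ? or a query DSL JSON object. The sketch below produces both forms as strings for `.option("es.query", ...)`. The helper names are hypothetical. Wrapping the DSL in a top-level "query" key follows the form shown in the es-hadoop documentation examples. Building the JSON with `json.dumps` avoids hand-escaping quotes.

```python
import json
from typing import Any, Dict


def uri_query(q: str) -> str:
    """es.query in URI form: a query string with the leading '?'."""
    return f"?q={q}"


def dsl_query(query: Dict[str, Any]) -> str:
    """es.query in query-DSL form: a JSON object starting with '{'."""
    return json.dumps({"query": query})


if __name__ == "__main__":
    print(uri_query("*"))                 # ?q=*
    print(dsl_query({"match_all": {}}))   # {"query": {"match_all": {}}}
```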

----------------------------------------------------------------------

es.write.operation (default index)

The write operation elasticsearch-hadoop should perform - can be any of:

(1) index (default)

new data is added while existing data (based on its id) is replaced (reindexed).

(2) create

adds new data - if the data already exists (based on its id), an exception is thrown.

(3) update

updates existing data (based on its id). If no data is found, an exception is thrown.

(4) upsert

known as merge: inserts the data if it does not exist, updates it if it exists (based on its id).

(5) delete

deletes existing data (based on its id). If no data is found, an exception is thrown.
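To make the differences between the five operations concrete, here is a pure in-memory simulation of the semantics listed above, with a dict standing in for the index and keyed by _id. It models the documented behaviour only and does not talk to Elasticsearch. `apply_write` is a hypothetical name.

```python
from typing import Any, Dict, Optional

# In-memory "index": maps _id -> document (a dict of fields).
Index = Dict[str, Dict[str, Any]]


def apply_write(index: Index, op: str, doc_id: str,
                doc: Optional[Dict[str, Any]] = None) -> None:
    """Model the documented es.write.operation semantics on a dict keyed by _id."""
    exists = doc_id in index
    doc = doc or {}
    if op == "index":        # insert, or replace the whole existing document
        index[doc_id] = dict(doc)
    elif op == "create":     # insert; fail if the id is already present
        if exists:
            raise KeyError(f"document {doc_id!r} already exists")
        index[doc_id] = dict(doc)
    elif op == "update":     # merge fields into an existing document; fail if missing
        if not exists:
            raise KeyError(f"document {doc_id!r} not found")
        index[doc_id].update(doc)
    elif op == "upsert":     # merge if present, insert otherwise
        index.setdefault(doc_id, {}).update(doc)
    elif op == "delete":     # remove; fail if missing
        if not exists:
            raise KeyError(f"document {doc_id!r} not found")
        del index[doc_id]
    else:
        raise ValueError(f"unknown write operation: {op!r}")


if __name__ == "__main__":
    idx: Index = {}
    apply_write(idx, "index", "1", {"name": "a", "pay": 10})
    apply_write(idx, "upsert", "1", {"pay": 20})    # merged into doc 1
    apply_write(idx, "upsert", "2", {"name": "b"})  # inserted as doc 2
    print(idx)  # {'1': {'name': 'a', 'pay': 20}, '2': {'name': 'b'}}
```

The simulation also shows why upsert fixes the duplicate-data problem described earlier: with a stable _id, rewriting a row merges into the existing document instead of adding a new one.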

Official demo

#!/usr/bin/env python
# @desc :
__coding__ = "utf-8"
__author__ = "itcast team"

import os

from pyspark import SparkContext
from pyspark.sql import SparkSession
from pyspark.sql.types import StructType, StringType

# Note: ESMeta is a helper class from the course project (not shown here);
# it must be imported for this script to run.

# 0. Prepare the Spark environment
# Spark code can run on a local PySpark installation or on the one in the VM; choose via os.environ
# 1 - local paths
# SPARK_HOME = 'F:\\ProgramCJ\\spark-2.4.8-bin-hadoop2.7'
# PYSPARK_PYTHON = 'F:\\ProgramCJ\\Python\\Python37\\python'
# 2 - server paths
SPARK_HOME = '/export/server/spark'
PYSPARK_PYTHON = '/root/anaconda3/envs/pyspark_env/bin/python'
# Export the paths (must happen before the SparkSession is created)
os.environ['SPARK_HOME'] = SPARK_HOME
os.environ["PYSPARK_PYTHON"] = PYSPARK_PYTHON

spark = SparkSession.builder \
    .master("local[*]") \
    .appName("AgeModel") \
    .config("es.index.auto.create", "true") \
    .getOrCreate()
sc: SparkContext = spark.sparkContext
sc.setLogLevel("WARN")

# Field types should follow the ES mapping (this schema is not applied to the reader below)
schema = StructType() \
    .add("userId", StringType(), nullable=True) \
    .add("tagsId", StringType(), nullable=True)

# Configuration describing which ES data to read
ruleDict = {"inType": "ElasticSearch",
            "esNodes": "192.168.88.166:9200",
            "esIndex": "tfec_userprofile_result",
            "esType": "_doc",
            "selectFields": "userId,tagsId"}

# ESMeta cannot be initialized directly from a dict, so use fromDict;
# the resulting esMeta object exposes the settings as attributes
esMeta = ESMeta.fromDict(ruleDict)

# Three ways to read all data in an index (official docs:
# https://www.elastic.co/guide/en/elasticsearch/reference/7.10/search-search.html#search-api-query-params-q)
# 1. Default: with no es.query set, all documents are returned
# 2. URI query, where * matches all data (after ?q= you can also use _all):
#    .option("es.query", "?q=*") \
# 3. Query string using the match_all syntax:
#    .option("es.query", "{\"match_all\":{}}") \
# Without an explicit schema, Spark uses the field types inferred from the index mapping
df = spark.read \
    .format("es") \
    .option("es.resource", f"{esMeta.esIndex}/{esMeta.esType}") \
    .option("es.nodes", f"{esMeta.esNodes}") \
    .option("es.index.read.missing.as.empty", "yes") \
    .option("es.query", "?q=*") \
    .option("es.read.field.include", f"{esMeta.selectFields}") \
    .load()

df.printSchema()
df.show()
# True if the DataFrame contains at least one row
print(len(df.head(1)) > 0)
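The demo depends on `ESMeta.fromDict`, whose definition is not shown. If you want to run the demo without the project code, a hypothetical stand-in could look like the dataclass below. It is not the project's real class. The field names simply mirror the keys of ruleDict, and unknown keys are ignored.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict


@dataclass
class ESMeta:
    """Hypothetical stand-in for the project's ESMeta helper:
    holds the ES connection settings taken from a rule dict."""
    inType: str
    esNodes: str
    esIndex: str
    esType: str
    selectFields: str

    @classmethod
    def fromDict(cls, rule: Dict[str, Any]) -> "ESMeta":
        # Keep only the keys that correspond to dataclass fields.
        names = {f.name for f in fields(cls)}
        return cls(**{k: v for k, v in rule.items() if k in names})


if __name__ == "__main__":
    meta = ESMeta.fromDict({"inType": "ElasticSearch",
                            "esNodes": "192.168.88.166:9200",
                            "esIndex": "tfec_userprofile_result",
                            "esType": "_doc",
                            "selectFields": "userId,tagsId"})
    print(f"{meta.esIndex}/{meta.esType}")  # tfec_userprofile_result/_doc
```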

Integrating Elasticsearch with MySQL

That is, reading content from MySQL into Elasticsearch.

Core code

from pyspark import SparkContext
from pyspark.sql import SparkSession

# Create the Spark runtime environment
spark = SparkSession\
    .builder\
    .appName("tag-4 process")\
    .master('local[*]')\
    .config("spark.sql.shuffle.partitions", 1)\
    .getOrCreate()
sc: SparkContext = spark.sparkContext

# Connect to MySQL and read the rule of the level-4 tag
# (the MySQL JDBC driver jar must be in Spark's jars directory)
url = "jdbc:mysql://192.168.88.166:3306/tfec_tags?useUnicode=true&characterEncoding=UTF-8&serverTimezone=UTC&useSSL=false&user=root&password=123456"
tableName = "tbl_basic_tag"
# Filter on id first, then keep only the rule column
tag_mate_df = spark\
    .read\
    .jdbc(url=url, table=tableName)\
    .where('id=4')\
    .select('rule')
tag_mate_df.show()
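The JDBC URL above packs the connection parameters and the credentials into one string. The sketch below builds the parameter part with `urllib.parse.urlencode`, so the credentials can be kept out of the URL. `mysql_jdbc_url` is a hypothetical helper.

```python
from typing import Dict
from urllib.parse import urlencode


def mysql_jdbc_url(host: str, port: int, database: str,
                   params: Dict[str, str]) -> str:
    """Build a MySQL JDBC URL of the form jdbc:mysql://host:port/db?k=v&..."""
    query = urlencode(params)
    base = f"jdbc:mysql://{host}:{port}/{database}"
    return f"{base}?{query}" if query else base


if __name__ == "__main__":
    print(mysql_jdbc_url("192.168.88.166", 3306, "tfec_tags", {
        "useUnicode": "true",
        "characterEncoding": "UTF-8",
        "serverTimezone": "UTC",
        "useSSL": "false",
    }))
```

The credentials can then go into the `properties` argument of `spark.read.jdbc(url=..., table=..., properties={"user": ..., "password": ..., "driver": ...})`. The driver class depends on the Connector/J version you use; `com.mysql.cj.jdbc.Driver` for 8.x is an assumption here.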
