Common commands

| Command | Purpose |
|---|---|
| etcdctl mk /etc/ylm 12345 | Create a key in etcd (read it back with etcdctl get) |
| kubectl get componentstatus | Show component status |
| kubectl get node | Show node status |
| kubectl describe pod nginx | Show a resource's detailed description (useful for troubleshooting) |
| kubectl get pod | List pods |
| kubectl get pod -o wide | Show pod IPs |
| kubectl create -f $*.yaml | Create resources from a YAML file |
| kubectl get pod (or: kubectl get rc) | List pods or rc |
| kubectl delete pod nginx | Delete a pod |
| kubectl delete -f $*.yaml | Delete the resources defined in a file |
| kubectl edit pod nginx | Edit a resource's configuration |
| kubectl delete rc --all | Delete all rc |
| kubectl rollout history deployment nginx-deployment | Show the revision history of a deployment |
| kubectl rollout undo deployment nginx-deployment | Roll back; undo goes to the previous revision by default |
| kubectl expose rc readiness --port=80 --type=NodePort | Expose port 80 of the rc named readiness through a NodePort |
| kubectl get all -n kube-system | List the resources in a given namespace |
| kubectl -n kube-system describe pod heapster-bdt5v | Describe a pod in a given namespace |
| kubectl apply -f $name.yaml | Apply a file; typically used after the file has been updated |
| kubectl autoscale -n ylm deployment nginx-deployment --max=8 --min=1 --cpu-percent=7 | Autoscale nginx-deployment between 1 and 8 replicas, scaling out once CPU usage exceeds 7% |
| kubectl edit -n ylm hpa/nginx-deployment | Edit the autoscaler in the ylm namespace |
| kubectl explain pv.spec.persistentVolumeReclaimPolicy | Show the documentation for a field |
| mount -t glusterfs 10.0.0.47:/ylm /mnt | Mount the GlusterFS volume ylm on local /mnt |

1. K8s architecture

K8s core components

Two roles:

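- Master node
  - Database: etcd (a NoSQL key-value store)
  - API server: every operation on k8s goes through the API server
  - Scheduler: picks a suitable node for each new container. Once a node is chosen, the API server hands the work to the kubelet on that node, and the kubelet calls Docker on that machine to start the container. OpenStack works the same way: it has no virtualization technology of its own and calls libvirt on the compute nodes to start virtual machines.
  - Controller manager: checks container state every second and restarts anything that has died; if a node goes down, its containers are moved to an available node. It reads the database, and whatever is missing gets started, so the service stays highly available.
- Node: controlled by the master
  - kube-proxy: sets up port mapping for containers
  - kubelet: calls Docker to start containers
  - Users access a node, and kube-proxy maps the port to the containers automatically
  - cAdvisor: collects container metrics
  - It shows the resource usage of every container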

K8s add-ons

- Heapster (monitoring): used later when autoscaling containers

- Dashboard: a web UI; the cluster can be controlled with a few clicks

- Federation: manages multiple clusters

2. Installing K8s

1. Set the IP addresses, hostnames, and hosts-file name resolution

10.0.0.61 k8s-m01

10.0.0.62 k8s-n01

10.0.0.63 k8s-n02

cat >>/etc/hosts<<EOF

10.0.0.61 k8s-m01

10.0.0.62 k8s-n01

10.0.0.63 k8s-n02

EOF

- Use only the base repo (disable EPEL)

cp /etc/yum.repos.d/epel.repo{,.bak}

rm -rf /etc/yum.repos.d/epel.repo

2. Install etcd on the master

- Run on 10.0.0.61

yum install etcd -y

[root@k8s-m01 ~]# vim /etc/etcd/etcd.conf

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.61:2379"

systemctl enable etcd.service

systemctl start etcd.service

# Write a value with mk or set

[root@k8s-m01 ~]# etcdctl mk /test/oldboy 123456

123456

# Read the value back with get

[root@k8s-m01 ~]# etcdctl get /test/oldboy

123456

etcdctl -C http://10.0.0.61:2379 cluster-health # check the health of the etcd cluster

PS: etcd supports clustering natively.

3. Install Kubernetes on the master

- Run on 10.0.0.61

yum install kubernetes-master.x86_64 -y

[root@k8s-m01 ~]# vim /etc/kubernetes/apiserver

# The address on the local server to listen to.

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080"

# Port minions listen on (minion is the old name for a node; the kubelet is controlled through port 10250)

KUBELET_PORT="--kubelet-port=10250"

# Address and port of etcd

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.61:2379"

# Range the Service VIPs (cluster IPs) are taken from

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# Remove ServiceAccount from the default list below, otherwise things break

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

[root@k8s-m01 ~]# vim /etc/kubernetes/config

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://10.0.0.61:8080"

# Address and port of the API server

systemctl enable kube-apiserver.service

systemctl restart kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl restart kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl restart kube-scheduler.service

[root@k8s-m01 ~]# kubectl get componentstatus # check component status

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

4. Install the nodes

- Run on 10.0.0.62 and 10.0.0.63

# Run on both 62 and 63

yum install kubernetes-node.x86_64 -y

docker -v

Docker version 1.13.1, build 64e9980/1.13.1

vim /etc/kubernetes/config

KUBE_MASTER="--master=http://10.0.0.61:8080"

[root@k8s-n01 ~]# vim /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_PORT="--port=10250"

KUBELET_HOSTNAME="--hostname-override=10.0.0.62"

KUBELET_API_SERVER="--api-servers=http://10.0.0.61:8080"

[root@k8s-n02 ~]# egrep -v "^$|#" /etc/kubernetes/kubelet |head -4

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_PORT="--port=10250"

KUBELET_HOSTNAME="--hostname-override=10.0.0.63"

KUBELET_API_SERVER="--api-servers=http://10.0.0.61:8080"

systemctl enable kubelet.service

systemctl start kubelet.service

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

[root@k8s-m01 ~]# kubectl get node

NAME STATUS AGE

10.0.0.62 Ready 3m

10.0.0.63 Ready 3m

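5. Install flannel on all nodes

- What flannel is

Flannel is essentially an overlay network: it wraps the original (for example TCP) packets inside another network packet for routing, forwarding, and communication.

- How it works
  - A packet leaving the source container is forwarded by the host's docker0 virtual interface to the flannel0 virtual interface. flannel0 is a point-to-point virtual interface, and the flanneld service listens on its other end.
  - Through etcd, flannel maintains a routing table between nodes that records each node's subnet.
  - The source host's flanneld wraps the original data in UDP and delivers it, according to its routing table, to flanneld on the destination node. There the packet is unwrapped, enters the destination node's flannel0 interface, is forwarded to the destination host's docker0 interface, and is finally routed by docker0 to the target container, just like traffic between local containers.
  - This lets containers on all nodes reach each other, that is, cross-host container communication.
  - Flannel stores its configuration in etcd, which keeps the configuration consistent across flannel instances.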

yum install flannel -y

sed -i 's#http://127.0.0.1:2379#http://10.0.0.61:2379#g' /etc/sysconfig/flanneld

On the master:

etcdctl mk /atomic.io/network/config '{"Network":"172.16.0.0/16"}'

yum install docker -y

systemctl enable flanneld.service

systemctl restart flanneld.service

service docker restart

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service

On the nodes:

systemctl enable flanneld.service

systemctl restart flanneld.service

service docker restart

systemctl restart kubelet.service

systemctl restart kube-proxy.service

# Restart docker after restarting flannel; docker's default network then comes from flannel

ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.16.25.1 netmask 255.255.255.0 broadcast 0.0.0.0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 172.16.25.0 netmask 255.255.0.0 destination 172.16.25.0
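As a rough illustration of the addressing in this output: flannel splits the 172.16.0.0/16 network into one subnet per node, and docker0 on each node hands out container addresses from that node's subnet. The sketch below assumes a /24 per node, which is what the docker0 netmask 255.255.255.0 suggests; the helper names node_subnet and same_node are made up for this example.

```shell
# node_subnet IP -> the per-node /24 subnet the address belongs to
# (assumption: flannel gives every node a /24, as the docker0 netmask above suggests)
node_subnet() {
  # drop the last octet and append .0/24
  echo "${1%.*}.0/24"
}

# same_node IP1 IP2 -> exit status 0 when both addresses sit in the same node subnet
same_node() {
  [ "$(node_subnet "$1")" = "$(node_subnet "$2")" ]
}

# Usage:
#   node_subnet 172.16.25.1                         # prints 172.16.25.0/24
#   same_node 172.16.25.2 172.16.25.7 && echo "same host, no overlay hop needed"
```

Traffic between two addresses for which same_node fails is the traffic that flanneld has to encapsulate and send to another host.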

- Start a container on every host and test that ping works across hosts

# On all nodes

docker run -it docker.io/busybox:latest

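# Check the IP addresses and ping each other: it fails. The cause is a bug in the docker 1.13.1 we installed, which changes our iptables rules

# If ping fails, edit this file and restart docker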

vim /usr/lib/systemd/system/docker.service

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

systemctl daemon-reload

systemctl restart docker

PS: this method may not always work

$ iptables -L -n

Chain FORWARD (policy DROP) # change the policy to ACCEPT

target prot opt source destination

7. Set up the master as the image registry

- Run on all nodes

vim /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=10.0.0.61:5000'

systemctl restart docker

- On the master

docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry:latest

3. History of K8s

https://kubernetes.io

K8s is a management tool for clusters of Docker containers.

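1. Core features of K8s

- Self-healing: restarts failed containers; replaces and reschedules containers when a node becomes unavailable; kills containers that do not respond to a user-defined health check; and does not advertise a container to clients until it is ready to serve.
  - In short: when a node or pod goes down, the workload is restarted automatically.
- Autoscaling: monitors the containers' CPU load; if the average rises above 80%, the number of containers is increased, and if it falls below 10%, the number is reduced.
  - Under high concurrency containers are added automatically; when the load drops, the corresponding resources are released.
- Service discovery and load balancing: there is no need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives containers their own IP addresses and a single DNS name for a set of containers, and can load-balance across them.
  - When a matching resource starts, it is added to the load-balancing pool automatically.
- Rolling upgrade and one-command rollback: Kubernetes rolls out changes to an application or its configuration gradually while monitoring application health, so that it never kills all instances at once. If something goes wrong, Kubernetes rolls the change back for you, and you can take advantage of a growing ecosystem of deployment solutions.
  - Instances are upgraded one at a time; problems surface immediately and can be rolled back with one command.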
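The autoscaling rule above can be read as simple arithmetic. A minimal sketch, assuming the proportional rule described in the upstream Horizontal Pod Autoscaler documentation (desired = ceil(current replicas x current CPU% / target CPU%), clamped to the min and max), rather than the two fixed 80% / 10% thresholds mentioned in these notes; the function name desired_replicas is made up for this example.

```shell
# desired_replicas CURRENT_REPLICAS CURRENT_CPU_PERCENT TARGET_CPU_PERCENT MIN MAX
# Prints the replica count a proportional autoscaler would aim for.
desired_replicas() {
  current=$1; usage=$2; target=$3; min=$4; max=$5
  # ceil(current * usage / target) with integer arithmetic
  want=$(( (current * usage + target - 1) / target ))
  # clamp to the configured bounds (--min / --max of kubectl autoscale)
  if [ "$want" -lt "$min" ]; then want=$min; fi
  if [ "$want" -gt "$max" ]; then want=$max; fi
  echo "$want"
}

# Usage:
#   desired_replicas 3 90 50 1 8    # 3 pods at 90% CPU against a 50% target
```

The real controller also applies a tolerance and reacts with some delay, so treat the result as the direction of travel rather than an exact prediction.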

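2. History of K8s

2014: started as an orchestration tool for Docker containers

July 2015: Kubernetes 1.0 released; the project joins the CNCF

2016 1.1 1.2 1.4

2017

2018: K8s graduates from the CNCF

2019 1.15

CNCF: Cloud Native Computing Foundation

Kubernetes (k8s): Greek for helmsman; it steers the container orchestration field.

It builds on Google's 16 years of experience running containers on the Borg container management platform; Kubernetes is Borg reworked in Go.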

3. Ways to install K8s

- yum install: version 1.5; the easiest to get working and the best for learning

[root@k8s-m01 ~]# rpm -qa| grep kubernetes

kubernetes-client-1.5.2-0.7.git269f928.el7.x86_64

kubernetes-master-1.5.2-0.7.git269f928.el7.x86_64

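- Build from source: the hardest; can install the latest version

- Binary install: tedious steps; can install the latest version; can be automated with shell, Ansible, or SaltStack

- kubeadm: the easiest; needs network access; can install the latest version

- minikube: suited to developers trying out k8s; needs network access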

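4. Where k8s fits

k8s is best suited to running microservices. Take JD.com as an example: each module gets its own virtual machine, and each function gets its own domain name, its own cluster architecture, and its own database.

Microservices support higher concurrency, shorten release and update times, lower development difficulty, and make the cluster more robust.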

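4. Common K8s resources

1. Creating a pod

A pod is the smallest resource unit in k8s.

A pod consists of at least two containers: the infrastructure (base) container and one or more application containers.

- Main parts of a k8s YAML file

apiVersion: v1    # API version

kind: Pod         # resource type

metadata:         # metadata (name, labels)

spec:             # detailed specification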

- k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.61:5000/nginx:1.13
    ports:
    - containerPort: 80

- Run the pod

[root@k8s-m01 pod]# kubectl create -f k8s_pod.yaml

pod "nginx" created

[root@k8s-m01 pod]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 26m

Fix for a pod stuck in ContainerCreating: https://blog.csdn.net/weixin_46380571/article/details/109790532

[root@k8s-m01 pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 27m 172.16.86.2 10.0.0.63

[root@k8s-m01 pod]# curl -I 172.16.86.2

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Wed, 18 Nov 2020 15:32:00 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

- Pod: config file 2

[root@k8s-m01 pod]# cat k8s_pod2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.61:5000/nginx:1.13
    ports:
    - containerPort: 80
  - name: busybox
    image: 10.0.0.61:5000/busybox:v62
    command: ["sleep","1000"]

PS: the three containers (the base container, nginx, and busybox) share one IP.

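2. ReplicationController

Keeps pods highly available.

rc: guarantees that the specified number of pods is always running; an rc finds its pods through a label selector.

Put simply, the rc decides how many pods are running.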
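A small sketch of the two ideas above, the label selector and the replica count, as thin wrappers around kubectl. The function names are made up for this example; the label app=myweb and the rc name nginx are the ones used later in these notes, and the commands need a running cluster.

```shell
# pods_for_label LABEL -> list the pods an rc with this selector would count
pods_for_label() {
  kubectl get pod -l "$1" -o wide
}

# scale_rc NAME COUNT -> change how many pods the rc keeps alive
scale_rc() {
  kubectl scale rc "$1" --replicas="$2"
}

# Usage (against a running cluster):
#   pods_for_label app=myweb
#   scale_rc nginx 3
```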

Common operations on k8s resources:

kubectl create -f xxx.yaml

kubectl get pod (or: kubectl get rc)

kubectl describe pod nginx

kubectl delete pod nginx, or kubectl delete -f xxx.yaml

kubectl edit pod nginx

- Define an rc:

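apiVersion: v1                 # API version
kind: ReplicationController    # resource type
metadata:                      # metadata, including the name
  name: nginx
spec:                          # detailed specification
  replicas: 5                  # 5 replicas
  selector:                    # label selector
    app: myweb                 # exactly 5 pods labelled app=myweb may exist; any extra one is removed (demonstrated below)
  template:                    # pod template
    metadata:                  # metadata of the pods
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.61:5000/nginx:1.13
        ports:
        - containerPort: 80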

- Create the rc

[root@k8s-m01 rc]# kubectl create -f k8s_rc.yaml

replicationcontroller "nginx" created

[root@k8s-m01 rc]# kubectl get rc

NAME DESIRED CURRENT READY AGE

nginx 5 5 5 27s

# Columns: desired / created / ready / running for 27s

# 5 pods were started, spread across the different nodes

[root@k8s-m01 rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 10m 172.16.86.2 10.0.0.63

nginx-80sxq 1/1 Running 0 2m 172.16.86.5 10.0.0.63

nginx-gs310 1/1 Running 0 2m 172.16.86.4 10.0.0.63

nginx-rtw14 1/1 Running 0 2m 172.16.86.3 10.0.0.63

nginx-t0jdq 1/1 Running 0 2m 172.16.100.4 10.0.0.62

nginx-xmwll 1/1 Running 0 2m 172.16.100.3 10.0.0.62

test 2/2 Running 0 10m 172.16.100.2 10.0.0.62

- Self-healing demo: delete a pod and a new one is created automatically

[root@k8s-m01 rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 10m 172.16.86.2 10.0.0.63

nginx-80sxq 1/1 Running 0 2m 172.16.86.5 10.0.0.63

nginx-gs310 1/1 Running 0 2m 172.16.86.4 10.0.0.63

nginx-rtw14 1/1 Running 0 2m 172.16.86.3 10.0.0.63

nginx-t0jdq 1/1 Running 0 2m 172.16.100.4 10.0.0.62

nginx-xmwll 1/1 Running 0 2m 172.16.100.3 10.0.0.62

test 2/2 Running 0 10m 172.16.100.2 10.0.0.62

[root@k8s-m01 rc]# kubectl delete pod nginx-gs310

pod "nginx-gs310" deleted

[root@k8s-m01 rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 10m 172.16.86.2 10.0.0.63

nginx-80sxq 1/1 Running 0 2m 172.16.86.5 10.0.0.63

nginx-rtw14 1/1 Running 0 2m 172.16.86.3 10.0.0.63

nginx-s306l 1/1 Running 0 2s 172.16.100.5 10.0.0.62

nginx-t0jdq 1/1 Running 0 2m 172.16.100.4 10.0.0.62

nginx-xmwll 1/1 Running 0 2m 172.16.100.3 10.0.0.62

test 2/2 Running 0 10m 172.16.100.2 10.0.0.62

- Demo: delete a node; its pods are restarted automatically on a node that is still available

[root@k8s-m01 rc]# kubectl delete node 10.0.0.63

node "10.0.0.63" deleted

# All pods have moved to 62

[root@k8s-m01 rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-dq6bf 1/1 Running 0 4s 172.16.100.7 10.0.0.62

nginx-jt0xk 1/1 Running 0 4s 172.16.100.6 10.0.0.62

nginx-s306l 1/1 Running 0 4m 172.16.100.5 10.0.0.62

nginx-t0jdq 1/1 Running 0 7m 172.16.100.4 10.0.0.62

nginx-xmwll 1/1 Running 0 7m 172.16.100.3 10.0.0.62

test 2/2 Running 0 15m 172.16.100.2 10.0.0.62

- The deleted node rejoins automatically once its kubelet is restarted

[root@k8s-m03 ~]# systemctl restart kubelet.service

[root@k8s-m01 rc]# kubectl get node

NAME STATUS AGE

10.0.0.62 Ready 11h

10.0.0.63 Ready 4

- Change the label of the pod named nginx to myweb

[root@k8s-m01 rc]# kubectl edit pod nginx

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: 2020-11-19T00:16:58Z

labels:

app: myweb

- The rc now matches one pod too many and automatically deletes the youngest one

[root@k8s-m01 rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-65r4g 1/1 Running 0 8m 172.16.86.2 10.0.0.63

nginx-dq6bf 1/1 Running 0 11m 172.16.100.7 10.0.0.62

nginx-jt0xk 1/1 Running 0 11m 172.16.100.6 10.0.0.62

nginx-t0jdq 1/1 Running 0 19m 172.16.100.4 10.0.0.62

nginx-xmwll 1/1 Running 0 19m 172.16.100.3 10.0.0.62

test 2/2 Running 1 26m 172.16.100.2 10.0.0.62

3. Rolling upgrade and one-command rollback with rc

Upgrade

- Write the rc config file for the new version

[root@k8s-m01 rc]# cat k8s_rc2.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx2
spec:
  replicas: 5
  selector:
    app: myweb2
  template:
    metadata:
      labels:
        app: myweb2
    spec:
      containers:
      - name: myweb2
        image: 10.0.0.61:5000/nginx:1.15
        ports:
        - containerPort: 80

- Run the rolling upgrade

[root@k8s-m01 rc]# kubectl rolling-update nginx -f k8s_rc2.yaml --update-period=10s

# --update-period=10s upgrades one pod every 10 seconds; if it is not given, the default is one pod per minute

[root@k8s-m01 rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx2-0fjvh 1/1 Running 0 56s 172.16.86.4 10.0.0.63

nginx2-7nz71 1/1 Running 0 1m 172.16.100.5 10.0.0.62

nginx2-8v1x0 1/1 Running 0 1m 172.16.86.3 10.0.0.63

nginx2-jfwgg 1/1 Running 0 1m 172.16.86.2 10.0.0.63

nginx2-jzhrh 1/1 Running 0 1m 172.16.100.7 10.0.0.62

test 2/2 Running 47 13h 172.16.100.2 10.0.0.62

# Verify

[root@k8s-m01 rc]# curl -I 172.16.100.7 2>/dev/null|head -3

HTTP/1.1 200 OK

Server: nginx/1.15.5 # now on 1.15

Date: Thu, 19 Nov 2020 13:07:38 GMT

Rollback

[root@k8s-m01 rc]# kubectl rolling-update nginx2 -f k8s_rc.yaml --update-period=1s

[root@k8s-m01 rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-rcxpk 1/1 Running 0 21s 172.16.86.4 10.0.0.63

nginx-tfmrd 1/1 Running 0 17s 172.16.86.2 10.0.0.63

nginx-w84v1 1/1 Running 0 23s 172.16.100.3 10.0.0.62

nginx-xp9hk 1/1 Running 0 19s 172.16.100.4 10.0.0.62

nginx-znsw5 1/1 Running 0 25s 172.16.86.5 10.0.0.63

test 2/2 Running 48 13h 172.16.100.2 10.0.0.62

[root@k8s-m01 rc]# curl -I 172.16.100.4 2>/dev/null|head -3

HTTP/1.1 200 OK

Server: nginx/1.13.12 # rolled back to 1.13

Date: Thu, 19 Nov 2020 13:14:04 GMT

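4. Service

Provides layer-4 load balancing inside the cluster.

A Service relies on kube-proxy to do the port mapping, which is how clients outside the cluster reach a pod.

A Service exposes the ports of pods.

Create a Service

- nginx_svc.yaml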

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
  - port: 80           # port on the cluster IP
    nodePort: 30000    # port opened on every node
    targetPort: 80     # port on the pod
  selector:
    app: myweb

kubectl create -f nginx_svc.yaml

[root@k8s-m01 svc]# kubectl describe svc myweb

Name: myweb

Namespace: default

Labels: <none>

Selector: app=myweb

Type: NodePort

IP: 10.254.219.175

Port: <unset> 80/TCP

NodePort: <unset> 30000/TCP

Endpoints: 172.16.100.3:80,172.16.100.4:80,172.16.86.2:80 + 2 more...

Session Affinity: None

No events.

Access port 30000 on 10.0.0.62 or 10.0.0.63.

Changing the NodePort range

[root@k8s-m01 ~]# vim /etc/kubernetes/apiserver

# Add your own!

KUBE_API_ARGS="--service-node-port-range=3000-50000"
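A quick way to reason about this setting is a range check. The sketch below assumes the upstream default range of 30000-32767 when --service-node-port-range is not set; port_in_range is a made-up helper name.

```shell
# port_in_range PORT LOW HIGH -> exit status 0 when LOW <= PORT <= HIGH
port_in_range() {
  [ "$1" -ge "$2" ] && [ "$1" -le "$3" ]
}

# Usage:
#   port_in_range 3000 30000 32767 || echo "3000 is rejected with the default range"
#   port_in_range 3000 3000 50000  && echo "3000 is accepted after the change above"
```

After editing /etc/kubernetes/apiserver the API server has to be restarted (systemctl restart kube-apiserver.service) before the new range applies.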

By default a Service implements load balancing with iptables; newer versions use LVS (layer-4 load balancing).

5. Deployment

A rolling upgrade done with an rc interrupts the service, so k8s introduced the Deployment resource.

- Create a deployment

- k8s_deploy.yaml

apiVersion: extensions/v1beta1   # the extensions v1beta1 API group
kind: Deployment                 # resource type
metadata:                        # metadata, including the name
  name: nginx-deployment
spec:                            # detailed specification
  replicas: 3
  template:
    metadata:
      labels:                    # labels
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.61:5000/nginx:1.13
        ports:
        - containerPort: 80
        resources:               # resource settings
          limits:                # upper limit
            cpu: 100m
          requests:              # amount requested
            cpu: 100m

- Start a svc as well and the deployment becomes reachable

vim nginx_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-nginx
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 3000    # without nodePort a random port is assigned
    targetPort: 80
  selector:
    app: nginx

[root@k8s-m01 deploy]# kubectl get all -o wide

svc/my-nginx 10.254.223.210 <nodes> 80:25516/TCP 4s app=nginx

Upgrade

Upgrade the version by editing the configuration

[root@k8s-m01 deploy]# kubectl edit deployment nginx-deployment

deployment "nginx-deployment" edited

[root@k8s-m01 deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 3 13m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d <none>

svc/my-nginx 10.254.223.210 <nodes> 80:25516/TCP 5m app=nginx

svc/myweb 10.254.219.175 <nodes> 80:30000/TCP 2h app=myweb2

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deployment-3330225768 0 0 0 13m nginx 10.0.0.61:5000/nginx:1.13 app=nginx,pod-template-hash=3330225768

rs/nginx-deployment-3537057386 3 3 3 3s nginx 10.0.0.61:5000/nginx:1.15 app=nginx,pod-template-hash=3537057386 # a new rs is created and it starts the new pods

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deployment-3537057386-njfq9 1/1 Running 0 2s 172.16.86.4 10.0.0.63

po/nginx-deployment-3537057386-r2dr0 1/1 Running 0 3s 172.16.86.3 10.0.0.63

po/nginx-deployment-3537057386-xc0n2 1/1 Running 0 3s 172.16.100.4 10.0.0.62

[root@k8s-m01 deploy]# curl -I 10.0.0.63:25516

HTTP/1.1 200 OK

Server: nginx/1.15.5 # the version has been upgraded

Date: Thu, 19 Nov 2020 16:41:27 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

Rollback

- undo rolls back to the previous revision by default

[root@k8s-m01 deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

1 <none>

2 <none>

[root@k8s-m01 deploy]# kubectl rollout undo deployment nginx-deployment

deployment "nginx-deployment" rolled back

[root@k8s-m01 deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

2 <none> # we cannot tell what was changed

3 <none>

[root@k8s-m01 deploy]# curl -I 10.0.0.63:25516

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Thu, 19 Nov 2020 16:48:21 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

- Roll back to a specific revision

[root@k8s-m01 deploy]# kubectl rollout undo deployment nginx-deployment --to-revision=2

deployment "nginx-deployment" rolled back

[root@k8s-m01 deploy]# curl -I 10.0.0.63:25516

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Thu, 19 Nov 2020 16:51:11 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

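Recording upgrade and rollback commands

- With --record the history does record each operation, but for kubectl edit it does not show what was actually changed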

[root@k8s-m01 deploy]# kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

deployment "nginx" created

[root@k8s-m01 deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

3 <none>

4 <none>

[root@k8s-m01 deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

- Upgrade succeeded

[root@k8s-m01 deploy]# kubectl edit deployment nginx

deployment "nginx" edited

[root@k8s-m01 deploy]# curl -I 10.0.0.63:25516

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Thu, 19 Nov 2020 16:57:57 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

[root@k8s-m01 deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl edit deployment nginx

- Use run and set image so the history shows what the upgrade did

[root@k8s-m01 deploy]# kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

deployment "nginx" created

[root@k8s-m01 deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

# If the deployment has several containers, name the container to update

[root@k8s-m01 deploy]# kubectl set image deploy nginx nginx=10.0.0.61:5000/nginx:1.15

deployment "nginx" image updated

[root@k8s-m01 deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl set image deploy nginx nginx=10.0.0.61:5000/nginx:1.15

Demo: a deployment with two containers

[root@k8s-m01 deploy]# cat k8s_deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.61:5000/nginx:1.13
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 100m
          requests:
            cpu: 100m
      - name: busybox
        image: 10.0.0.61:5000/busybox:v62
        command: ["sleep","1000"]

[root@k8s-m01 deploy]# kubectl create -f k8s_deploy.yaml

deployment "nginx-deployment" created

[root@k8s-m01 deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 3 3 3 3 9m

deploy/nginx-deployment 3 3 3 3 5s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d <none>

svc/my-nginx 10.254.223.210 <nodes> 80:25516/TCP 6h app=nginx

svc/myweb 10.254.219.175 <nodes> 80:30000/TCP 9h app=myweb2

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-1377738351 3 3 3 7m nginx 10.0.0.61:5000/nginx:1.15 pod-template-hash=1377738351,run=nginx

rs/nginx-847814248 0 0 0 9m nginx 10.0.0.11:5000/nginx:1.13 pod-template-hash=847814248,run=nginx

rs/nginx-deployment-2437158682 3 3 3 5s nginx,busybox 10.0.0.61:5000/nginx:1.13,10.0.0.61:5000/busybox:v62 app=nginx,pod-template-hash=2437158682

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-1377738351-0g25j 1/1 Running 0 7m 172.16.100.3 10.0.0.62

po/nginx-1377738351-1ld76 1/1 Running 0 7m 172.16.86.4 10.0.0.63

po/nginx-1377738351-29hhc 1/1 Running 0 7m 172.16.100.2 10.0.0.62

po/nginx-deployment-2437158682-l74vs 2/2 Running 0 5s 172.16.86.3 10.0.0.63

po/nginx-deployment-2437158682-r3s0s 2/2 Running 0 5s 172.16.100.4 10.0.0.62

po/nginx-deployment-2437158682-wsj2c 2/2 Running 0 5s 172.16.86.2 10.0.0.63

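- Because the pod has two images, name the container when upgrading one of them. The form is kubectl set image deploy <deployment> <container>=<image>: the deployment name comes first, followed by the container to update (nginx or busybox here).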
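To make the argument order concrete, here is a minimal wrapper around the same kubectl set image form used earlier in these notes. The function name set_container_image is made up, and the image tag in the usage line is a placeholder, not a tag known to exist in the registry.

```shell
# set_container_image DEPLOYMENT CONTAINER IMAGE
# Updates one named container of a deployment and leaves the others untouched.
set_container_image() {
  kubectl set image deploy "$1" "$2=$3"
}

# Usage (against a running cluster; <new-tag> is a placeholder):
#   set_container_image nginx-deployment busybox 10.0.0.61:5000/busybox:<new-tag>
```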

Deployment upgrade and rollback commands

Create a deployment from the command line

kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

Upgrade the image from the command line

kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15

Show all revisions of a deployment

kubectl rollout history deployment nginx

Roll back to the previous revision

kubectl rollout undo deployment nginx

Roll back to a specific revision

kubectl rollout undo deployment nginx --to-revision=2

Nowadays we always use deployments rather than rc.
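When a change is made with kubectl edit, the history above shows either <none> or just the edit command. One possible workaround, sketched here as an assumption rather than something these notes tested, is to set the kubernetes.io/change-cause annotation by hand, since that annotation is what the CHANGE-CAUSE column displays. The function name set_change_cause is made up.

```shell
# set_change_cause DEPLOYMENT MESSAGE
# Stores a human-readable reason on the deployment; rollout history shows it
# in the CHANGE-CAUSE column of the current revision.
set_change_cause() {
  kubectl annotate deployment "$1" kubernetes.io/change-cause="$2" --overwrite
}

# Usage (against a running cluster):
#   set_change_cause nginx "upgrade nginx image to 1.15"
#   kubectl rollout history deployment nginx
```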

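6. tomcat + mysql

Applications must not reach each other by pod IP: when a pod dies, that address stops working. Use the cluster IP (VIP) instead.

A nodeSelector can be used to schedule a resource onto a particular node.

- Start mysql first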

[root@k8s-m01 tomcat-demo]# cat mysql-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: 10.0.0.61:5000/mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'

[root@k8s-m01 tomcat-demo]# kubectl create -f mysql-rc.yml

[root@k8s-m01 tomcat-demo]# kubectl get pod -o wide

- Create a Service

It exposes the pod's port.

[root@k8s-m01 tomcat-demo]# vim mysql-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:            # label selector, same as the rc's selector
    app: mysql

The default svc type is ClusterIP. Because the mysql database should not be reachable from outside, we leave the type unset so it stays ClusterIP.

- Get the IP of the mysql svc

[root@k8s-m01 tomcat-demo]# kubectl get all -o wide

- Put that IP address into the tomcat rc

[root@k8s-m01 tomcat-demo]# cat tomcat-rc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 1
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      nodeSelector:
        disktype: 10.0.0.62
      containers:
      - name: myweb
        image: 10.0.0.61:5000/tomcat-app:v2
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: '10.254.214.216'    # the cluster IP of the mysql svc goes here
        - name: MYSQL_SERVICE_PORT
          value: '3306'

# Create the tomcat rc

kubectl create -f tomcat-rc.yml

# Reports that it already exists

[root@k8s-m01 tomcat-demo]# kubectl create -f mysql-svc.yml

Error from server (AlreadyExists): error when creating "mysql-svc.yml": services "mysql" already exists

# A svc/myweb already exists as well

[root@k8s-m01 tomcat-demo]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 3 3 3 3 1d

deploy/nginx-deployment 3 3 3 3 1d

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 12h mysql 10.0.0.61:5000/mysql:5.7 app=mysql

rc/myweb 1 1 0 1d myweb 10.0.0.61:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 2d <none>

svc/my-nginx 10.254.223.210 <nodes> 80:25516/TCP 1d app=nginx

svc/mysql 10.254.214.216 <none> 3306/TCP 12h app=mysql

svc/myweb 10.254.219.175 <nodes> 80:30000/TCP 1d app=myweb2

# Use edit to modify the existing one

[root@k8s-m01 tomcat-demo]# kubectl edit svc myweb

Change the selector to app=myweb

# Once the svc selector and the rc label are both myweb, the app should be reachable from outside

[root@k8s-m01 tomcat-demo]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 3 3 3 3 1d

deploy/nginx-deployment 3 3 3 3 1d

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 12h mysql 10.0.0.61:5000/mysql:5.7 app=mysql

rc/myweb 1 1 0 1d myweb 10.0.0.61:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 2d <none>

svc/my-nginx 10.254.223.210 <nodes> 80:25516/TCP 1d app=nginx

svc/mysql 10.254.214.216 <none> 3306/TCP 12h app=mysql

svc/myweb 10.254.219.175 <nodes> 80:30000/TCP 1d app=myweb

How to delete a pod properly: https://blog.csdn.net/qq_38695182/article/details/84988901

spec:

nodeSelector:

disktype: 10.0.0.62

- At this point it is still unreachable

[root@k8s-m01 tomcat-demo]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 3 3 3 3 1d

deploy/nginx-deployment 3 3 3 3 1d

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 13h mysql 10.0.0.61:5000/mysql:5.7 app=mysql

rc/myweb 1 1 1 19s myweb 10.0.0.61:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 2d <none>

svc/my-nginx 10.254.223.210 <nodes> 80:25516/TCP 1d app=nginx

svc/mysql 10.254.214.216 <none> 3306/TCP 12h app=mysql

svc/myweb 10.254.219.175 <nodes> 80:30000/TCP 1d app=myweb

# The mapping is still 80:30000 because this svc was edited from the nginx svc, while tomcat listens on 8080, so it cannot be reached

[root@k8s-m01 tomcat-demo]# curl -I 10.0.0.63:30000

curl: (7) Failed connect to 10.0.0.63:30000; Connection refused

[root@k8s-m01 tomcat-demo]# curl -I 172.16.86.9:8080

HTTP/1.1 200 OK

Server: Apache-Coyote/1.1

Content-Type: text/html;charset=UTF-8

Transfer-Encoding: chunked

Date: Sat, 21 Nov 2020 03:22:58 GMT

Because we modified the original svc, the port is wrong.

Delete the svc and create a new one.

[root@k8s-m01 tomcat-demo]# kubectl delete -f tomcat-svc.yml

[root@k8s-m01 tomcat-demo]# kubectl create -f tomcat-svc.yml

[root@k8s-m01 tomcat-demo]# kubectl get all -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/myweb 10.254.118.239 <nodes> 8080:30008/TCP 8s app=myweb

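- Access port 30008
- Verify that the app is connected to the database
  - Submit some data
  - Check the data in the database

The database can only be entered by naming a specific pod.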

[root@k8s-m01 tomcat-demo]# kubectl exec -it mysql-bf8gw /bin/bash

root@mysql-bf8gw:/# mysql -uroot -p123456 # the password is the one defined in the rc file

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 5

Server version: 5.7.15 MySQL Community Server (GPL)

Copyright (c) 2000, 2016, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| HPE_APP |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

mysql> use HPE_APP;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+-------------------+

| Tables_in_HPE_APP |

+-------------------+

| T_USERS |

+-------------------+

1 row in set (0.00 sec)

mysql> select * from T_USERS;

+----+-----------+----------+

| ID | USER_NAME | LEVEL |

+----+-----------+----------+

| 1 | me | 100 |

| 2 | our team | 100 |

| 3 | HPE | 100 |

| 4 | teacher | 100 |

| 5 | docker | 100 |

| 6 | google | 100 |

| 7 | lm | 10000000 | # the submitted row is here, so the setup works

+----+-----------+----------+

7 rows in set (0.00 sec)

PS: applications connect to each other through the VIP, which is how containers reach one another.

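5. K8s add-ons

1. DNS service

Its job is to resolve names to VIP addresses.

Every time a svc is deleted and recreated its VIP may change. With DNS that no longer matters: we refer to the svc by its name, and the name resolves to whatever its current VIP is, so no VIP address has to be written down and nothing breaks when it changes.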
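For reference, the names follow a fixed pattern. A minimal sketch, assuming the cluster domain cluster.local that the kube-dns manifest in these notes passes via --domain, and the default namespace unless another one is given; svc_fqdn is a made-up helper name.

```shell
# svc_fqdn SERVICE [NAMESPACE] [CLUSTER_DOMAIN]
# Prints the DNS name under which a Service is published by the cluster DNS.
svc_fqdn() {
  svc=$1
  ns=${2:-default}
  domain=${3:-cluster.local}
  echo "$svc.$ns.svc.$domain"
}

# Usage:
#   svc_fqdn mysql                  # name for the mysql svc in the default namespace
#   svc_fqdn kube-dns kube-system   # name for the DNS svc itself
```

With DNS running, the MYSQL_SERVICE_HOST value in the tomcat rc could in principle be the svc name instead of a hard-coded VIP.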

docker pull docker.io/gysan/kubedns-amd64

docker pull docker.io/gysan/kube-dnsmasq-amd64

docker pull docker.io/gysan/dnsmasq-metrics-amd64

docker pull docker.io/gysan/exechealthz-amd64

- skydns

- coredns

[root@k8s-m01 dns]# ls

skydns-rc.yaml skydns-svc.yaml

[root@k8s-m01 dns]# cat skydns-rc.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 1
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    rollingUpdate:          # rolling-update strategy
      maxSurge: 10%         # how many extra pods may be created above the desired count during an update
      maxUnavailable: 0     # number of pods allowed to be unavailable during an update: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
    spec:
      containers:
      - name: kubedns
        image: myhub.fdccloud.com/library/kubedns-amd64:1.9
        resources:
          # TODO: Set memory limits when we've profiled the container for large
          # clusters, then set request = limit to keep this container in
          # guaranteed class. Currently, this container falls into the
          # "burstable" category so the kubelet doesn't backoff from restarting it.
          limits:
            memory: 170Mi    # capped at 170Mi; the container is restarted if it goes over
          requests:          # will not run without 70Mi of memory available
            cpu: 100m
            memory: 70Mi
        livenessProbe:       # health check: is the container still alive?
          httpGet:           # this probe uses httpGet
            path: /healthz-kubedns
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:      # readiness check: is the container ready to serve?
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          # we poll on pod startup for the Kubernetes master service and
          # only setup the /readiness HTTP server once that's available.
          initialDelaySeconds: 3
          timeoutSeconds: 5
        args:                # arguments passed to the container's command
        - --domain=cluster.local.
        - --dns-port=10053
        - --config-map=kube-dns
        - --kube-master-url=http://10.0.0.61:8080    # address of the master
        # This should be set to v=2 only after the new image (cut from 1.5) has
        # been released, otherwise we will flood the logs.
        - --v=0
        #__PILLAR__FEDERATIONS__DOMAIN__MAP__
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
      - name: dnsmasq        # dnsmasq has two functions: DHCP and DNS
        image: myhub.fdccloud.com/library/kube-dnsmasq-amd64:1.4    # change the image to your own
        livenessProbe:
          httpGet:
            path: /healthz-dnsmasq
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --cache-size=1000
        - --no-resolv
        - --server=127.0.0.1#10053
        #- --log-facility=-
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
        resources:
          requests:
            cpu: 150m
            memory: 10Mi
      - name: dnsmasq-metrics
        image: myhub.fdccloud.com/library/dnsmasq-metrics-amd64:1.0
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 10Mi
      - name: healthz
        image: myhub.fdccloud.com/library/exechealthz-amd64:1.2
        resources:
          limits:
            memory: 50Mi
          requests:
            cpu: 10m
            # Note that this container shouldn't really need 50Mi of memory. The
            # limits are set higher than expected pending investigation on #29688.
            # The extra memory was stolen from the kubedns container to keep the
            # net memory requested by the pod constant.
            memory: 50Mi
        args:
        - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1 >/dev/null
        - --url=/healthz-dnsmasq
        - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1:10053 >/dev/null
        - --url=/healthz-kubedns
        - --port=8080
        - --quiet
        ports:
        - containerPort: 8080
          protocol: TCP
      dnsPolicy: Default # Don't use cluster DNS.

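- Container overview

The pod runs four containers:

[root@k8s-m01 dns]# grep -i 'name' skydns-rc.yaml

- name: kubedns # answers the lookups that k8s makes for service names

- name: dnsmasq # serves DNS on port 53 and forwards to kubedns

- name: dnsmasq-metrics # exposes metrics for Prometheus monitoring

- name: healthz # health checks for the DNS containers

- Service resource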

[root@k8s-m01 dns]# vim skydns-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "KubeDNS"

spec: # no type is specified, so the Service is of type ClusterIP

selector:

k8s-app: kube-dns

clusterIP: 10.254.230.254

ports:

- name: dns # when there are several ports, each one is distinguished by its name

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

- Create the resources

[root@k8s-m01 dns]# kubectl create -f skydns-rc.yaml

[root@k8s-m01 dns]# kubectl create -f skydns-svc.yaml

[root@k8s-m01 dns]# kubectl get all --namespace=kube-system

- Update the kubelet configuration

[root@k8s-m03 ~]# vim /etc/kubernetes/kubelet

KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"

[root@k8s-m03 ~]# systemctl restart kubelet.service

- Using the Tomcat demo as an example, access the database by its service name

[root@k8s-m01 tomcat-demo]# kubectl delete -f .

[root@k8s-m01 tomcat-demo]# cat mysql-svc.yml

apiVersion: v1

kind: Service

metadata:

name: mysql # the Service is named mysql

spec:

ports:

- port: 3306

targetPort: 3306

selector:

app: mysql

[root@k8s-m01 tomcat-demo]# cat tomcat-rc.yml

apiVersion: v1

kind: ReplicationController

metadata:

name: myweb

spec:

replicas: 1

selector:

app: myweb

template:

metadata:

labels:

app: myweb

spec:

containers:

- name: myweb

image: 10.0.0.61:5000/tomcat-app:v2

ports:

- containerPort: 8080

env:

- name: MYSQL_SERVICE_HOST

value: 'mysql' # the IP address is replaced by the service name mysql

- name: MYSQL_SERVICE_PORT

value: '3306'

- Create the resources, then verify that Tomcat can connect to the database

[root@k8s-m01 tomcat-demo]# kubectl create -f .

- Verify that the DNS service works

[root@k8s-m01 tomcat-demo]# kubectl exec -it myweb-5wlbf /bin/bash

root@myweb-5wlbf:/usr/local/tomcat# cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local

nameserver 10.254.230.254

nameserver 223.5.5.5

nameserver 223.6.6.6

options ndots:5


root@myweb-5wlbf:/usr/local/tomcat# ping mysql

PING mysql.default.svc.cluster.local (10.254.121.26): 56 data bytes

[root@k8s-m01 tomcat-demo]# kubectl get all

svc/mysql 10.254.121.26 <none> 3306/TCP 6m

# DNS resolves a Service name to its ClusterIP (the VIP), so clients no longer need a hard-coded IP address
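The `search` and `ndots:5` lines in `/etc/resolv.conf` above explain why the short name `mysql` resolved to `mysql.default.svc.cluster.local`. The following is a minimal sketch of the usual resolver rule (names with fewer dots than `ndots` are tried with each search domain first); the function name `candidate_names` is made up for illustration and real resolvers have more corner cases.

```python
def candidate_names(name, search, ndots=5):
    """Return the names a resolver would try, in order (simplified model)."""
    # A trailing dot marks the name as fully qualified: no search list is applied.
    if name.endswith("."):
        return [name]
    expanded = [f"{name}.{domain}" for domain in search]
    # With at least `ndots` dots the name is tried as-is first, otherwise last.
    if name.count(".") >= ndots:
        return [name] + expanded
    return expanded + [name]


search_list = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
for candidate in candidate_names("mysql", search_list):
    print(candidate)
```

The first candidate is the name that `ping mysql` reported in the transcript above.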

2.namespace

Resource isolation

Let's look at the effect of resource isolation:

[root@k8s-m01 tomcat-demo]# kubectl get all

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 3 3 3 3 1d

deploy/nginx-deployment 3 3 3 3 1d

NAME DESIRED CURRENT READY AGE

rc/mysql 1 1 1 16m

rc/myweb 1 1 1 16m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 2d

svc/my-nginx 10.254.223.210 <nodes> 80:25516/TCP 1d

svc/mysql 10.254.121.26 <none> 3306/TCP 16m

svc/myweb 10.254.160.187 <nodes> 8080:30008/TCP 16m

NAME DESIRED CURRENT READY AGE

rs/nginx-1377738351 3 3 3 1d

rs/nginx-847814248 0 0 0 1d

rs/nginx-deployment-2437158682 3 3 3 1d

NAME READY STATUS RESTARTS AGE

po/mysql-rjpvm 1/1 Running 0 16m

po/myweb-5wlbf 1/1 Running 0 16m

po/nginx-1377738351-073hs 1/1 Running 0 19h

po/nginx-1377738351-1ld76 1/1 Running 0 1d

po/nginx-1377738351-5xd5w 1/1 Running 0 19h

po/nginx-deployment-2437158682-f3wht 2/2 Running 68 19h

po/nginx-deployment-2437158682-l74vs 2/2 Running 121 1d

po/nginx-deployment-2437158682-wsj2c 2/2 Running 121 1d

[root@k8s-m01 tomcat-demo]# kubectl get all --namespace=kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 2h

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.254.230.254 <none> 53/UDP,53/TCP 2h

NAME DESIRED CURRENT READY AGE

rs/kube-dns-88051145 1 1 1 2h

NAME READY STATUS RESTARTS AGE

po/kube-dns-88051145-qk158 4/4 Running 0 2h

[root@k8s-m01 tomcat-demo]#

1.Create a namespace

[root@k8s-m01 tomcat-demo]# kubectl create namespace ylm

namespace "ylm" created

[root@k8s-m01 tomcat-demo]# kubectl get namespace

NAME STATUS AGE

default Active 2d

kube-system Active 2d

ylm Active 29s

Create resources inside the namespace

[root@k8s-m01 tomcat-demo]# head -4 *.yml

[root@k8s-m01 tomcat-demo]# sed -i '3a \ \ namespace: ylm' *.yml

[root@k8s-m01 tomcat-demo]# head -5 *.yml
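The `sed` expression `3a` appends a line after line 3 of every file, which in these manifests is the `metadata:` line. As a rough illustration of the same edit, here is a small sketch; the helper name `append_after_line` is hypothetical and it works on a string rather than editing files in place.

```python
def append_after_line(text, line_number, new_line):
    """Insert new_line after the given 1-based line number, like sed 'Na'."""
    lines = text.splitlines()
    # Like sed, do nothing when the file is shorter than the addressed line.
    if line_number > len(lines):
        return text
    lines.insert(line_number, new_line)
    return "\n".join(lines) + "\n"


manifest = "apiVersion: v1\nkind: Service\nmetadata:\n  name: mysql\n"
print(append_after_line(manifest, 3, "  namespace: ylm"))
```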

[root@k8s-m01 tomcat-demo]# cat tomcat-rc.yml

apiVersion: v1

kind: ReplicationController

metadata:

namespace: ylm

name: myweb

spec:

replicas: 1

selector:

app: myweb

template:

metadata:

labels:

app: myweb

spec:

containers:

- name: myweb

image: 10.0.0.61:5000/tomcat-app:v2

ports: # note: the fixed nodePort was removed from the Service file so that a random port is used

- containerPort: 8080

env:

- name: MYSQL_SERVICE_HOST

value: 'mysql'

- name: MYSQL_SERVICE_PORT

value: '3306'

[root@k8s-m01 tomcat-demo]# kubectl create -f .

[root@k8s-m01 tomcat-demo]# kubectl get all -n ylm

NAME DESIRED CURRENT READY AGE

rc/mysql 1 1 1 58s

rc/myweb 1 1 1 58s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/mysql 10.254.245.83 <none> 3306/TCP 58s

svc/myweb 10.254.98.188 <nodes> 8080:16046/TCP 35s

NAME READY STATUS RESTARTS AGE

po/mysql-xg386 1/1 Running 0 58s

po/myweb-hgq04 1/1 Running 0 58s

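3.Health checks for pod resources

1.Types of probes

livenessProbe: health check. It periodically checks whether the service is alive; if the check fails, the container is restarted.

readinessProbe: availability check. It periodically checks whether the service can serve requests; if it cannot, the pod is removed from the Service's endpoints.

[root@k8s-m01 tomcat-demo]# kubectl describe svc mysql

Endpoints: 172.16.86.5:3306 # the pod passed its check, so it was added to this Service's backends automatically

The readiness probe asks the pod "are you ready?". Until the answer is yes, the pod is not added to the Service, so the Service cannot route to it and no traffic reaches it.

A ClusterIP is associated with its backend pods automatically. Suppose two pods are running normally and the replica count is raised so that a third pod is created. While the new pod starts, the readiness check runs first, and the pod joins the load balancer only after the check passes. If a pod later fails the check, it is removed from the load balancer and stops receiving traffic. readiness is the probe used most often, especially during rolling upgrades: a pod that is not ready receives no traffic.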

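2.Probe methods

- exec: run a command inside the container; exit code 0 means success

- httpGet: send an HTTP request and check the returned status code

- tcpSocket: test whether a port accepts connections (similar to a telnet check)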
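To make the tcpSocket method concrete, the sketch below performs the same kind of check by hand: it tries to open a TCP connection and reports success or failure. This is only an illustration of the idea, not the kubelet's implementation; the function name `tcp_probe` and the one-second timeout are assumptions.

```python
import socket


def tcp_probe(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError on refusal, timeout or unreachable host.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Example: check whether something listens on port 80 of this machine.
    print(tcp_probe("127.0.0.1", 80))
```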

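3.Using the liveness probe with exec

The probe checks for a file; once the file is gone, the pod is judged unhealthy and the container is restarted.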

[root@k8s-m01 liveness]# cat pod_nginx_exec.yaml

apiVersion: v1

kind: Pod

metadata:

name: exec

spec:

containers:

- name: nginx

image: 10.0.0.61:5000/nginx:1.13

ports:

- containerPort: 80

args:

- /bin/sh

- -c

- touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600

livenessProbe:

exec:

command:

- cat

- /tmp/healthy

initialDelaySeconds: 5 # wait 5 seconds before the first check

periodSeconds: 5 # check every 5 seconds

[root@k8s-m01 health]# kubectl create -f pod_nginx_exec.yaml

pod "exec" created

[root@k8s-m01 health]# kubectl get pod # note the RESTARTS column: the container has already been restarted

NAME READY STATUS RESTARTS AGE

exec 1/1 Running 1 1m

# show the details

[root@k8s-m01 health]# kubectl describe pod exec

29s 29s 1 {kubelet 10.0.0.63} spec.containers{nginx} Normal Killing Killing container with docker id 9f1d08a882a5: pod "exec_default(86444142-2bf0-11eb-97a7-000c299b7352)" container "nginx" is unhealthy, it will be killed and re-created.
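The timing of that restart can be estimated with a simplified model: probes run at fixed intervals after the initial delay, and the container is killed after a number of consecutive failures. The sketch below assumes the default `failureThreshold` of 3 (the manifest above does not set it) and ignores probe timeouts and kubelet jitter; `first_restart_time` is a made-up helper name.

```python
def first_restart_time(healthy_until, initial_delay=5, period=5, failure_threshold=3):
    """Return the probe time (seconds) at which the container would be killed.

    healthy_until: second from which the probe starts failing
    (30 in the example above, when /tmp/healthy is removed).
    """
    failures = 0
    t = initial_delay
    while True:
        if t < healthy_until:
            failures = 0  # a successful probe resets the failure counter
        else:
            failures += 1
            if failures == failure_threshold:
                return t
        t += period


print(first_restart_time(healthy_until=30))
```

Under these assumptions the kill happens a few probe periods after the file disappears, which is consistent with the single restart seen after about one minute.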

4.Using the liveness probe with httpGet

The probe checks the home page file.

[root@k8s-m01 health]# cat pod_nginx_httpget.yaml

apiVersion: v1

kind: Pod

metadata:

name: httpget

spec:

containers:

- name: nginx

image: 10.0.0.61:5000/nginx:1.13

ports:

- containerPort: 80

livenessProbe:

httpGet:

path: /index.html # check the home page file

port: 80

initialDelaySeconds: 3

periodSeconds: 3

[root@k8s-m01 health]# kubectl create -f pod_nginx_httpget.yaml

[root@k8s-m01 health]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-rjpvm 1/1 Running 0 3h

- Enter the container and delete index.html

[root@k8s-m01 health]# kubectl exec -it httpget /bin/bash

root@httpget:/# cd /usr/share/nginx/html/

root@httpget:/usr/share/nginx/html# ls

50x.html index.html

root@httpget:/usr/share/nginx/html# rm -rf index.html

root@httpget:/usr/share/nginx/html# exit

[root@k8s-m01 health]# kubectl describe pod httpget

11s 5s 3 {kubelet 10.0.0.63} spec.containers{nginx} Warning Unhealthy Liveness probe failed: HTTP probe failed with statuscode: 404

5s 5s 1 {kubelet 10.0.0.63} spec.containers{nginx} Normal Killing Killing container with docker id 732e1f45b632: pod "httpget_default(80572412-2bf2-11eb-97a7-000c299b7352)" container "nginx" is unhealthy, it will be killed and re-created.

[root@k8s-m01 health]# kubectl get pod # the container has been restarted once

NAME READY STATUS RESTARTS AGE

httpget 1/1 Running 1 2m

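5.Using the liveness probe with tcpSocket

To see the effect, change the port in the configuration file to 81 so that the probed port and the listening port no longer match; the probe then fails.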

[root@k8s-m01 health]# cat pod_nginx_tcpSocket.yaml

apiVersion: v1

kind: Pod

metadata:

name: tcpsocket

spec:

containers:

- name: nginx

image: 10.0.0.61:5000/nginx:1.13

ports:

- containerPort: 80

livenessProbe:

tcpSocket:

port: 80

initialDelaySeconds: 3

periodSeconds: 3

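6.Using the readiness probe with httpGet

When a container starts, the probe checks whether the service is available. If it is not, the pod is not added to the Service's backends and receives no traffic.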

[root@k8s-m01 health]# cat nginx-rc-httpGet.yaml

apiVersion: v1

kind: ReplicationController

metadata:

name: readiness

spec:

replicas: 2

selector:

app: readiness

template:

metadata:

labels:

app: readiness

spec:

containers:

- name: readiness

image: 10.0.0.61:5000/nginx:1.13

ports:

- containerPort: 80

readinessProbe:

httpGet:

path: /ylm.html # the container counts as ready once this file exists

port: 80

initialDelaySeconds: 3

periodSeconds: 3

- Create

[root@k8s-m01 health]# kubectl create -f nginx-rc-httpGet.yaml

# STATUS shows Running, but the pods are not ready (READY is 0/1)

[root@k8s-m01 health]# kubectl get pod

NAME READY STATUS RESTARTS AGE

readiness-2jr24 0/1 Running 0 4m

readiness-xhgfq 0/1 Running 0 4m

- Expose the port manually

[root@k8s-m01 health]# kubectl expose rc readiness --port=80 --type=NodePort

service "readiness" exposed


[root@k8s-m01 health]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 3d

my-nginx 10.254.223.210 <nodes> 80:25516/TCP 1d

mysql 10.254.121.26 <none> 3306/TCP 4h

myweb 10.254.160.187 <nodes> 8080:30008/TCP 4h

readiness 10.254.49.115 <nodes> 80:22113/TCP 10s

- Inspect the Service

While the backend pods are not ready, the Service has no backend endpoints either:

[root@k8s-m01 health]# kubectl describe svc readiness

Name: readiness

Namespace: default

Labels: app=readiness

Selector: app=readiness

Type: NodePort

IP: 10.254.49.115

Port: <unset> 80/TCP

NodePort: <unset> 22113/TCP

Endpoints: # none

Session Affinity: None

No events.

This is because the readiness condition defined in the rc is that ylm.html exists.

- Create ylm.html

Do this inside one of the pods:

[root@k8s-m01 health]# kubectl exec -it readiness-2jr24 /bin/bash

root@readiness-2jr24:/# cd /usr/share/nginx/html/

root@readiness-2jr24:/usr/share/nginx/html# echo 1234 >ylm.html

root@readiness-2jr24:/usr/share/nginx/html# ls

50x.html index.html ylm.html

root@readiness-2jr24:/usr/share/nginx/html# exit

exit

# the pod in which ylm.html was written is now ready

[root@k8s-m01 health]# kubectl get pod

NAME READY STATUS RESTARTS AGE

readiness-2jr24 1/1 Running 0 14m

readiness-xhgfq 0/1 Running 0 14m

- The Service now has one backend endpoint

[root@k8s-m01 health]# kubectl describe svc readiness

Endpoints: 172.16.86.16:80
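The behaviour seen here can be summarised as a filter: a Service's endpoints are the pods that match its selector and are currently ready. The sketch below models that rule with plain dictionaries; it is an illustration only, `service_endpoints` is a hypothetical helper, and the IP of the second pod is invented because the transcript does not show it.

```python
def service_endpoints(pods, selector, port):
    """Return 'ip:port' for every ready pod whose labels match the selector."""
    return [
        f"{pod['ip']}:{port}"
        for pod in pods
        if pod["ready"]
        and all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


pods = [
    {"name": "readiness-2jr24", "ip": "172.16.86.16", "ready": True,
     "labels": {"app": "readiness"}},
    # Hypothetical IP: this pod has no ylm.html yet, so it is not ready.
    {"name": "readiness-xhgfq", "ip": "172.16.86.17", "ready": False,
     "labels": {"app": "readiness"}},
]
print(service_endpoints(pods, {"app": "readiness"}, 80))
```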

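In the same way, a tcpSocket probe can check port 3306 of a backend mysql pod; if the port cannot be reached, the pod is kept out of the Service and receives no traffic.

See the liveness examples for the syntax.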

4.dashboard service

A web UI for managing k8s without using the command line.

- Upload and load the image, then tag it

- Create the dashboard deployment and service

- Open http://10.0.0.61:8080/ui/

[root@k8s-m01 dashboard]# cat dashboard-deploy.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

# Keep the name in sync with image version and

# gce/coreos/kube-manifests/addons/dashboard counterparts

name: kubernetes-dashboard-latest

namespace: kube-system

spec:

replicas: 1

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

version: latest

kubernetes.io/cluster-service: "true"

spec:

containers:

- name: kubernetes-dashboard

image: 10.0.0.61:5000/kubernetes-dashboard-amd64:v1.4.1

resources:

# keep request = limit to keep this container in guaranteed class

limits:

cpu: 100m

memory: 50Mi

requests:

cpu: 100m

memory: 50Mi

ports:

- containerPort: 9090

args:

- --apiserver-host=http://10.0.0.61:8080 # the web UI manages the cluster by calling the apiserver

livenessProbe:

httpGet:

path: /

port: 9090

initialDelaySeconds: 30

timeoutSeconds: 30

[root@k8s-m01 dashboard]# kubectl get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 7h

deploy/kubernetes-dashboard-latest 1 1 1 1 2m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.254.230.254 <none> 53/UDP,53/TCP 7h

NAME DESIRED CURRENT READY AGE

rs/kube-dns-88051145 1 1 1 7h

rs/kubernetes-dashboard-latest-2566485142 1 1 1 2m

NAME READY STATUS RESTARTS AGE

po/kube-dns-88051145-qk158 4/4 Running 0 7h

po/kubernetes-dashboard-latest-2566485142-7cjh6 1/1 Running 0 2m

[root@k8s-m01 dashboard]# cat dashboard-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

spec:

selector:

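k8s-app: kubernetes-dashboard # the Service finds its pods through this label, which must be unique to them

ports:

- port: 80 # no type is specified, so the Service is of type ClusterIP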

targetPort: 9090

[root@k8s-m01 dashboard]# kubectl create -f dashboard-svc.yaml

service "kubernetes-dashboard" created

- Open http://10.0.0.61:8080/ui/

- Launch an app from the web UI

- Check the result

http://10.0.0.61:8080/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard/

http://10.0.0.61:8080: the api-server

proxy: the proxy endpoint

namespaces: kube-system

services: kubernetes-dashboard

The dashboard has no port mapping; it is reached through a reverse proxy provided by the apiserver.

A Service of type ClusterIP can be accessed through this reverse proxy.
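The proxy URL is assembled from the four parts listed above. The sketch below builds it for any namespace and service; `service_proxy_url` is a made-up helper, and the path layout is the one used by the cluster in these notes (newer Kubernetes releases use `/api/v1/namespaces/<ns>/services/<svc>/proxy/` instead).

```python
def service_proxy_url(apiserver, namespace, service):
    """Build the apiserver reverse-proxy URL for a Service (old-style path)."""
    base = apiserver.rstrip("/")
    return f"{base}/api/v1/proxy/namespaces/{namespace}/services/{service}/"


print(service_proxy_url("http://10.0.0.61:8080", "kube-system", "kubernetes-dashboard"))
```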

Option 1: NodePort type

type: NodePort

ports:

- port: 80

targetPort: 80

nodePort: 30008

Option 2: ClusterIP type

type: ClusterIP

ports:

- port: 80

targetPort: 80

- Demonstrating access through the proxy

[root@k8s-m01 proxy]# cat k8s_rc.yaml

apiVersion: v1

kind: ReplicationController

metadata:

name: nginx

spec:

replicas: 2

selector:

app: myweb

template:

metadata:

labels:

app: myweb

spec:

containers:

- name: myweb

image: 10.0.0.61:5000/nginx:1.13

ports:

- containerPort: 80

[root@k8s-m01 proxy]# cat nginx_svc.yaml

apiVersion: v1

kind: Service

metadata:

name: myweb2

spec :

ports:

- port: 80

targetPort: 80

selector:

app: myweb # label of the pods managed by the backend rc

- Access the service through the reverse proxy

http://10.0.0.61:8080/api/v1/proxy/namespaces/default/services/myweb2

- If Nginx cannot be reached, compare with the following configuration

[root@k8s-m01 proxy]# kubectl edit rc nginx

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: v1

kind: ReplicationController

metadata:

creationTimestamp: 2020-11-21T14:59:57Z

generation: 2

labels:

app: nginx

name: nginx

namespace: default

resourceVersion: "346447"

selfLink: /api/v1/namespaces/default/replicationcontrollers/nginx

uid: 38a106ff-2c0a-11eb-97a7-000c299b7352

spec:

replicas: 2

selector:

app: nginx

template:

metadata:

creationTimestamp: null

labels:

app: nginx

spec:

containers:

- image: 10.0.0.61:5000/nginx:1.13

imagePullPolicy: IfNotPresent

name: myweb

ports:

- containerPort: 80

protocol: TCP

resources: {}

terminationMessagePath: /dev/termination-log

dnsPolicy: ClusterFirst

restartPolicy: Always

securityContext: {}

terminationGracePeriodSeconds: 30

status:

availableReplicas: 2

fullyLabeledReplicas: 2

observedGeneration: 2

readyReplicas: 2

replicas: 2


--------

[root@k8s-m01 proxy]# kubectl edit svc myweb2

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: v1

kind: Service

metadata:

creationTimestamp: 2020-11-21T15:01:32Z

name: myweb2

namespace: default

resourceVersion: "346543"

selfLink: /api/v1/namespaces/default/services/myweb2

uid: 7171fe18-2c0a-11eb-97a7-000c299b7352

spec:

clusterIP: 10.254.130.110

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: nginx

sessionAffinity: None

type: ClusterIP

status:

loadBalancer: {}


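6.Autoscaling

Autoscaling: monitor the containers' CPU load; if the average rises above 80%, add containers; if it falls below 10%, remove containers.

- Under high concurrency the number of containers grows automatically; when concurrency drops, the corresponding resources are released automatically.

Autoscaling requires monitoring of the pods' CPU usage. When usage is high, another pod is started and added to the load balancer. Because every pod connects to the same MySQL, the new pod joins seamlessly. When the load drops, pods are removed until only the minimum count remains, so the pod count stretches and contracts like a spring.

1.Install heapster monitoring

- Upload the images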

#!/bin/bash

for n in *.tar.gz

do

docker load -i $n;

done


docker tag docker.io/kubernetes/heapster_grafana:v2.6.0 10.0.0.61:5000/heapster_grafana:v2.6.0

docker tag docker.io/kubernetes/heapster_influxdb:v0.5 10.0.0.61:5000/heapster_influxdb:v0.5

docker tag docker.io/kubernetes/heapster:canary 10.0.0.61:5000/heapster:canary

docker push 10.0.0.61:5000/heapster_grafana:v2.6.0

docker push 10.0.0.61:5000/heapster_influxdb:v0.5

docker push 10.0.0.61:5000/heapster:canary

- Upload the configuration files

[root@k8s-m01 heapster]# ls

grafana-service.yaml heapster-service.yaml influxdb-service.yaml

heapster-controller.yaml influxdb-grafana-controller.yaml

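InfluxDB is an open-source time-series database developed by InfluxData and written in Go.

Grafana is a multi-platform open-source web application for analytics and interactive visualization.

heapster collects the data.

- Brief walkthrough of the configuration files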

[root@k8s-m01 heapster]# cat influxdb-grafana-controller.yaml

apiVersion: v1

kind: ReplicationController #RC

metadata:

labels:

name: influxGrafana

name: influxdb-grafana

namespace: kube-system # namespace

spec:

replicas: 1 # number of replicas

selector: # this is an RC, so a label selector is defined

name: influxGrafana

template: # pod template; it must carry labels

metadata:

labels:

name: influxGrafana # labels come in pairs: this one matches the selector above

spec:

containers:

- name: influxdb # container

image: kubernetes/heapster_influxdb:v0.5

imagePullPolicy: IfNotPresent

volumeMounts: # mount a volume

- mountPath: /data # /data in the container is backed by the influxdb-storage volume

name: influxdb-storage

- name: grafana

imagePullPolicy: IfNotPresent

image: 10.0.0.61:5000/heapster_grafana:v2.6.0

env:

- name: INFLUXDB_SERVICE_URL

value: http://monitoring-influxdb:8086

# The following env variables are required to make Grafana accessible via

# the kubernetes api-server proxy. On production clusters, we recommend

# removing these env variables, setup auth for grafana, and expose the grafana

# service using a LoadBalancer or a public IP.

- name: GF_AUTH_BASIC_ENABLED

value: "false"

- name: GF_AUTH_ANONYMOUS_ENABLED

value: "true"

- name: GF_AUTH_ANONYMOUS_ORG_ROLE

value: Admin

- name: GF_SERVER_ROOT_URL

value: /api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/

volumeMounts:

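- mountPath: /var # /var in the container is backed by the volume named below

name: grafana-storage

nodeSelector: # delete this block: version 1.5 does not support starting the pod on the master

kubernetes.io/hostname: k8s-master

volumes: # this yaml file uses two volumes, so two volumes are created

- name: influxdb-storage # first volume

emptyDir: {} # an empty directory on the host

- name: grafana-storage # second volume

emptyDir: {}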

[root@k8s-m01 heapster]# vim heapster-controller.yaml

apiVersion: v1

kind: ReplicationController

metadata:

labels:

k8s-app: heapster

name: heapster

version: v6

name: heapster

namespace: kube-system

spec:

replicas: 1

selector:

k8s-app: heapster

version: v6

template:

metadata:

labels:

k8s-app: heapster

version: v6

spec:

nodeSelector: # delete this block: version 1.5 does not support starting the pod on the master

kubernetes.io/hostname: k8s-master

containers:

- name: heapster

image: 10.0.0.61:5000/heapster:canary

imagePullPolicy: IfNotPresent # do not pull the image if it already exists locally

command:

- /heapster

- --source=kubernetes:http://10.0.0.61:8080?inClusterConfig=false

- --sink=influxdb:http://monitoring-influxdb:8086

[root@k8s-m01 heapster]# cat grafana-service.yaml

apiVersion: v1

kind: Service

metadata:

labels:

kubernetes.io/cluster-service: 'true'

kubernetes.io/name: monitoring-grafana

name: monitoring-grafana

namespace: kube-system

spec:

ports:

- port: 80

targetPort: 3000

selector:

name: influxGrafana

[root@k8s-m01 heapster]# cat heapster-service.yaml

apiVersion: v1

kind: Service

metadata:

labels:

kubernetes.io/cluster-service: 'true'

kubernetes.io/name: Heapster

name: heapster

namespace: kube-system

spec:

ports:

- port: 80

targetPort: 8082

selector:

k8s-app: heapster

[root@k8s-m01 heapster]# cat influxdb-service.yaml

apiVersion: v1

kind: Service

metadata:

labels: null

name: monitoring-influxdb

namespace: kube-system

spec:

ports:

- name: http

port: 8083

targetPort: 8083

- name: api

port: 8086

targetPort: 8086

selector:

name: influxGrafana

- Start

[root@k8s-m01 heapster]# kubectl create -f .

[root@k8s-m01 heapster]# kubectl get all -o wide -n kube-system

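- The web UI now shows data

cAdvisor: the source of the resource data, embedded in the kubelet (http://10.0.0.63:4194)

heapster: pulls the data from cAdvisor

influxdb: stores the data

grafana: draws the graphs

2.Autoscaling (HPA)

To use autoscaling, resource limits must be set in the yaml file;

without them the autoscaler has no CPU request to measure utilization against, so autoscaling is hard to use.

- Modify the configuration file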

[root@k8s-m01 hpa]# cat k8s_deploy.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: nginx-deployment

namespace: ylm

spec:

replicas: 3

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: 10.0.0.61:5000/nginx:1.13

ports:

- containerPort: 80

resources:

limits:

cpu: 100m

requests:

cpu: 100m

- Create the autoscaling (hpa) rule

kubectl autoscale -n ylm deployment nginx-deployment --max=8 --min=1 --cpu-percent=7

At most 8 containers, at least 1 container, target CPU utilization 7%.
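How the autoscaler picks a replica count from these three numbers can be sketched with the basic formula from the Kubernetes documentation: desired = ceil(current replicas × current utilization / target utilization), clamped to the min and max. The code below is a simplified model that ignores the tolerance band and cooldown periods of the real controller; `desired_replicas` is a name chosen for this sketch, and utilization is taken as a percentage of the pod's CPU request (100m in the deployment above).

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas, max_replicas):
    """Simplified HPA rule: scale proportionally, then clamp to [min, max]."""
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))


# Idle deployment with 3 replicas and a 7% target: shrink to the minimum.
print(desired_replicas(3, 0, 7, min_replicas=1, max_replicas=8))
# One pod at 50% of its CPU request: grow, capped at the maximum.
print(desired_replicas(1, 50, 7, min_replicas=1, max_replicas=8))
```

A target as low as 7% is convenient for a demo because even light load pushes the deployment towards the maximum.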

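- The rule takes effect as soon as the command runs. Because the load is low right now, the deployment shrinks to one pod. Next, run a stress test to see how many pods it scales up to.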

hpa

- Stress test

ab -n 200000 -c 50 http://172.16.86.12/index.html

-n: total number of requests

-c: number of concurrent requests

- Under high load

CURRENT: the current CPU load

- Under low load

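7.Persistent storage

We use a PV of type NFS as the example:

- First install the NFS server;

- The server backs the PV

- The PV is offered to a PVC

- The PVC is mounted in the RC at a specific directory;

pv: persistent volume, a global (cluster-wide) resource, like a node

pvc: persistent volume claim, a local (namespaced) resource, like a pod, rc or svc

1.Install the NFS server (10.0.0.61)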

yum install nfs-utils.x86_64 -y

mkdir /data

vim /etc/exports

/data 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)

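async: asynchronous writes; the server replies before data reaches the disk, which is faster but risks losing data if the server crashes (sync writes to disk before replying)

no_root_squash # do not map the root user to the anonymous uid

no_all_squash # do not map other users to the anonymous uid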

[root@k8s-m01 ~]# systemctl restart rpcbind.service

[root@k8s-m01 ~]# systemctl restart nfs.service

[root@k8s-m01 ~]# showmount -e 10.0.0.61

Export list for 10.0.0.61:

/data 10.0.0.0/24

2.Install the NFS client on the node

yum install nfs-utils.x86_64 -y

[root@k8s-m03 heapster]# showmount -e 10.0.0.61

Export list for 10.0.0.61:

/data 10.0.0.0/24

3.Create the PV and PVC

- Create the pv

apiVersion: v1

kind: PersistentVolume

metadata:

name: tomcat-mysql2 # name of the pv, unique cluster-wide

labels:

type: nfs001 # label describing the pv type

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteMany

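persistentVolumeReclaimPolicy: Recycle # the volume is reclaimed after release

nfs:

path: "/data/tomcat-mysql2" # directory to mount

server: 10.0.0.61 # NFS server address

readOnly: false # not read-only

# use explain to look up the values a field accepts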

[root@k8s-m01 volume]# kubectl explain pv.spec.persistentVolumeReclaimPolicy

FIELD: persistentVolumeReclaimPolicy <string>

DESCRIPTION:

What happens to a persistent volume when released from its claim. Valid

options are Retain (default) and Recycle. Recycling must be supported by

Retain: keep the data

Recycle: do not keep the data; reclaim the volume

# create the PV

[root@k8s-m01 volume]# kubectl get pv

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM REASON AGE

tomcat-mysql 6Gi RWX Recycle Available 19s

CLAIM: empty means the PV has not been assigned to a claim yet

- Create the pvc

[root@k8s-m01 volume]# cat mysql_pvc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: tomcat-mysql

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 10Gi
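Whether a claim like this one can bind to a given PV depends on a few comparisons: the PV must be at least as large as the request, it must offer the requested access modes, and it must carry any labels the claim selects on. The sketch below models only those three checks with plain dictionaries; `can_bind` and `parse_gi` are hypothetical helpers, only `Gi` quantities are handled, and storage classes and other binding rules are left out.

```python
def parse_gi(quantity):
    """Parse a quantity such as '10Gi' into an integer number of GiB."""
    if not quantity.endswith("Gi"):
        raise ValueError(f"only Gi quantities are handled in this sketch: {quantity}")
    return int(quantity[:-2])


def can_bind(pv, pvc):
    """Return True if the PV satisfies the claim's size, modes and label selector."""
    big_enough = parse_gi(pv["capacity"]) >= parse_gi(pvc["request"])
    modes_ok = set(pvc["access_modes"]) <= set(pv["access_modes"])
    labels = pv.get("labels", {})
    labels_ok = all(labels.get(k) == v for k, v in pvc.get("selector", {}).items())
    return big_enough and modes_ok and labels_ok


pv = {"capacity": "20Gi", "access_modes": ["ReadWriteMany"], "labels": {"type": "nfs001"}}
pvc = {"request": "10Gi", "access_modes": ["ReadWriteMany"]}
print(can_bind(pv, pvc))
```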

# if the file shows garbled characters, convert it with the following commands

yum install dos2unix.x86_64 -y &>/dev/null

[root@k8s-m01 volume]# dos2unix mysql-rc-pvc.yml

dos2unix: converting file mysql-rc-pvc.yml to Unix format ...


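4.Create the mysql rc, using the volume in the pod template

volumeMounts:

- name: mysql # name of the volume used by the container

mountPath: /var/lib/mysql

volumes:

- name: mysql

persistentVolumeClaim:

claimName: tomcat-mysql # name of the pvc: tomcat-mysql

A pod may run several containers; the volume name ties each container's mount to the right volume.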

[root@k8s-m01 volume]# cat mysql-rc-pvc.yml

apiVersion: v1

kind: ReplicationController

metadata:

name: mysql

spec:

replicas: 1

selector:

app: mysql

template:

metadata:

labels:

app: mysql

spec:

containers:

- name: mysql

image: 10.0.0.61:5000/mysql:5.7

ports:

- containerPort: 3306

env:

- name: MYSQL_ROOT_PASSWORD

value: '123456'

volumeMounts:

- name: mysql

mountPath: /var/lib/mysql

volumes:

- name: mysql

persistentVolumeClaim:

claimName: tomcat-mysql

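# the rc already exists, so we apply the change directly instead of deleting it

[root@k8s-m01 volume]# kubectl apply -f mysql-rc-pvc.yml

# the running pod has not changed yet; delete it so that the recreated pod picks up the modified yaml file

# the directory now contains data, which shows that the storage is persistent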

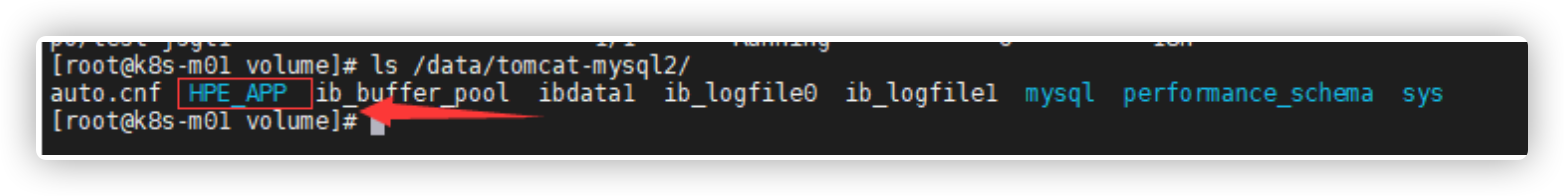

[root@k8s-m01 volume]# ls /data/tomcat-mysql2/

auto.cnf ib_buffer_pool ibdata1 ib_logfile0 ib_logfile1 ibtmp1 mysql performance_schema sys

5.Verify persistence

- Submit a form entry

- Delete the pod

[root@k8s-m01 volume]# kubectl delete po/mysql-xlb1p

pod "mysql-xlb1p" deleted

- Check the data

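6.Distributed storage with glusterfs

It is file-based storage.

Every node is a master node.

Glusterfs is an open-source distributed file system with strong scale-out capability. It supports several petabytes of storage and thousands of clients, joined over the network into one parallel network file system. It offers scalability, high performance and high availability.

| Host name | IP address | Environment |

|---|---|---|

| glusterfs01 | 10.0.0.47 | CentOS 7.7, 512M memory, two added 10G disks, hosts resolution |

| glusterfs02 | 10.0.0.48 | CentOS 7.7, 512M memory, two added 10G disks, hosts resolution |

| glusterfs03 | 10.0.0.49 | CentOS 7.7, 512M memory, two added 10G disks, hosts resolution |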

Environment preparation

- On all nodes

- Switch to the Aliyun yum mirror; the epel repository is not used

https://developer.aliyun.com/mirror/centos?spm=a2c6h.13651102.0.0.3e221b11Xwx3vU

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

- Install gluster

yum install centos-release-gluster -y

yum install glusterfs-server -y

- Host name resolution

cat >>/etc/hosts<<EOF

10.0.0.61 k8s-m01

10.0.0.62 k8s-m02

10.0.0.63 k8s-m03

10.0.0.47 glusterfs01

10.0.0.48 glusterfs02

10.0.0.49 glusterfs03

EOF

- Start the service

systemctl start glusterd.service

systemctl enable glusterd.service

mkdir -p /gfs/test1

mkdir -p /gfs/test2

- Format the two new disks and mount them

fdisk -l

mkfs.xfs /dev/sdb

mkfs.xfs /dev/sdc

# look up the UUIDs

blkid

# mount automatically at boot

[root@glusterfs01 ~]# cat /etc/fstab

/dev/mapper/centos-root / xfs defaults 0 0

UUID=ab74d5bf-4c43-40d0-81b8-bff0f76c593d /gfs/test1 xfs defaults 0 0

UUID=241af1a7-c4ed-4ede-b9e3-7ae4fecf34ec /gfs/test2 xfs defaults 0 0

# mount everything listed in fstab

mount -a

# check

df -h

- Add nodes to the storage pool

- Run on the master node

[root@glusterfs01 ~]# gluster pool list

UUID Hostname State

5a56d08e-7098-48e8-b842-a33f527b809e localhost Connected

[root@glusterfs01 ~]# gluster peer probe glusterfs02

peer probe: success.

[root@glusterfs01 ~]# gluster peer probe glusterfs03

peer probe: success.

[root@glusterfs01 ~]# gluster pool list

UUID Hostname State

104b6fae-9cfe-4c68-a4b8-4fe008d26c88 glusterfs02 Connected

51b50aa7-61fd-4e77-ad3a-09ed510f8983 glusterfs03 Connected

5a56d08e-7098-48e8-b842-a33f527b809e localhost Connected

- Create a distributed replicated volume

[root@glusterfs01 ~]# gluster volume create ylm replica 2 glusterfs01:/gfs/test1 glusterfs01:/gfs/test2 glusterfs02:/gfs/test1 glusterfs02:/gfs/test2 force

# check the status

[root@glusterfs01 ~]# gluster volume info ylm

# start the volume

[root@glusterfs01 ~]# gluster volume start ylm

[root@glusterfs02 ~]# gluster volume info ylm

Volume Name: ylm

Type: Distributed-Replicate

Volume ID: 9860defe-5fab-41d0-bc1e-418ca85dbe40

Status: Started # once the status is Started, the volume can be mounted

- Write data and verify

[root@glusterfs03 /]# mount -t glusterfs 10.0.0.47:/ylm /mnt

[root@glusterfs03 /]# df -h

10.0.0.47:/ylm 20G 270M 20G 2% /mnt

# write data

[root@glusterfs03 /]# cp -a /etc/* /mnt

[root@glusterfs03 /]# ls /mnt

# check on glusterfs01: there are two copies

[root@glusterfs01 ~]# find /gfs -name "crontab"

/gfs/test1/crontab

/gfs/test2/crontab

- Expand the volume by adding bricks

[root@glusterfs01 ~]# gluster volume add-brick ylm glusterfs03:/gfs/test1 glusterfs03:/gfs/test2 force

[root@glusterfs01 ~]# gluster volume info ylm

Number of Bricks: 3 x 2 = 6

Transport-type: tcp

Bricks:

Brick1: glusterfs01:/gfs/test1

Brick2: glusterfs01:/gfs/test2

Brick3: glusterfs02:/gfs/test1

Brick4: glusterfs02:/gfs/test2

Brick5: glusterfs03:/gfs/test1

Brick6: glusterfs03:/gfs/test2

[root@glusterfs03 ~]# ls /gfs/test1/

[root@glusterfs03 ~]# ls /gfs/test2/

# rebalance the data across the bricks

[root@glusterfs01 ~]# gluster volume rebalance ylm start

# the new bricks now contain files

[root@glusterfs03 ~]# ls /gfs/test1/

[root@glusterfs03 ~]# ls /gfs/test2/

- When capacity runs out, add a storage node and then add its bricks

# check the capacity before expanding

df -h

# expand

gluster volume add-brick ylm glusterfs03:/gfs/test1 glusterfs03:/gfs/test2 force

# check the capacity after expanding

df -h

pool: storage resource pool

peer: node

volume: volume

brick: storage unit
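One detail of the volume created above is worth spelling out: with `replica 2`, gluster groups the bricks into replica sets in the order they are listed, so consecutive bricks mirror each other. The sketch below reproduces that grouping and flags replica sets whose copies all sit on one host, which is the layout used here (and why `find` showed both copies of `crontab` on glusterfs01). The helper names are made up for this illustration.

```python
def replica_sets(bricks, replica):
    """Group bricks into replica sets in listing order."""
    if len(bricks) % replica != 0:
        raise ValueError("the number of bricks must be a multiple of the replica count")
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


def sets_on_single_host(bricks, replica):
    """Return the replica sets whose bricks all live on the same host."""
    return [
        group for group in replica_sets(bricks, replica)
        if len({brick.split(":")[0] for brick in group}) == 1
    ]


bricks = [
    "glusterfs01:/gfs/test1", "glusterfs01:/gfs/test2",
    "glusterfs02:/gfs/test1", "glusterfs02:/gfs/test2",
]
for group in sets_on_single_host(bricks, 2):
    print("both copies on one host:", group)
```

Listing the bricks alternately by host (for example `glusterfs01:/gfs/test1 glusterfs02:/gfs/test1 ...`) would place each pair of copies on two different hosts.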

7.Connecting k8s to glusterfs

The key point is how the pv is defined.

# look up the parameters of the field

kubectl explain pv.spec.glusterfs

- Create the endpoints; they are linked to the service by name

[root@glusterfs02 ~]# netstat -ntlp

tcp 0 0 0.0.0.0:49152 0.0.0.0:* LISTEN 2647/glusterfsd

[root@k8s-m01 glusterfs]# cat glusterfs-ep.yaml

apiVersion: v1

kind: Endpoints

metadata:

  name: glusterfs

  namespace: default

subsets:

- addresses:

  - ip: 10.0.0.47

  - ip: 10.0.0.48

  - ip: 10.0.0.49

  ports:

  - port: 49152 # this is the glusterfs port

    protocol: TCP

[root@k8s-m01 glusterfs]# kubectl create -f glusterfs-ep.yaml

[root@k8s-m01 glusterfs]# kubectl get endpoints

NAME ENDPOINTS AGE

glusterfs 10.0.0.47:49152,10.0.0.48:49152,10.0.0.49:49152 3s

- Create the service

vim glusterfs-svc.yaml

apiVersion: v1

kind: Service

metadata:

  name: glusterfs

  namespace: default

spec:

  ports:

  - port: 49152

    protocol: TCP

    targetPort: 49152

  sessionAffinity: None

  type: ClusterIP

[root@k8s-m01 glusterfs]# kubectl create -f glusterfs-svc.yaml

# show the resource that was created

[root@k8s-m01 glusterfs]# kubectl describe svc glusterfs

Name: glusterfs

Namespace: default

Labels: <none>

Selector: <none>

Type: ClusterIP

IP: 10.254.49.37

Port: <unset> 49152/TCP

Endpoints: 10.0.0.47:49152,10.0.0.48:49152,10.0.0.49:49152

Session Affinity: None

No events.

- Create the gluster pv

vim gluster_pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

  name: gluster

  labels:

    type: glusterfs

spec:

  capacity:

    storage: 10Gi

  accessModes:

  - ReadWriteMany

  glusterfs:

    endpoints: "glusterfs"

    path: "ylm"

    readOnly: false

[root@k8s-m01 glusterfs]# kubectl get pv

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM REASON AGE

gluster 10Gi RWX Retain Available 48s

Retain: keep the data (the default)

- Create the pvc

[root@k8s-m01 glusterfs]# cat gluster_pvc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

  name: gluster

spec:

  selector:

    matchLabels:

      type: glusterfs

  accessModes:

  - ReadWriteMany

  resources:

    requests:

      storage: 10Gi

[root@k8s-m01 glusterfs]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESSMODES AGE

gluster Bound gluster 10Gi RWX 7s

- Create the project

If the file shows garbled characters:

[root@k8s-m01 glusterfs]# dos2unix mysql-rc.yml

- Create the rc

[root@k8s-m01 glusterfs]# cat mysql-rc.yml

apiVersion: v1

kind: ReplicationController

metadata:

  name: mysql

spec:

  replicas: 1

  selector:

    app: mysql

  template:

    metadata:

      labels:

        app: mysql

    spec:

      volumes:

      - name: mysql

        persistentVolumeClaim:

          claimName: gluster

      containers:

      - name: mysql

        image: 10.0.0.61:5000/mysql:5.7

        volumeMounts:

        - name: mysql

          mountPath: /var/lib/mysql

        ports:

        - containerPort: 3306

        env:

        - name: MYSQL_ROOT_PASSWORD

          value: '123456'

- Install the glusterfs client on the node (on 10.0.0.63)

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum install centos-release-gluster -y

yum install glusterfs-server -y

- The glusterfs volume must not contain any files; delete them if there are any

[root@glusterfs03 mnt]# ls /mnt

- Apply the file

[root@k8s-m01 glusterfs]# kubectl apply -f mysql-rc.yml

[root@k8s-m01 glusterfs]# kubectl delete pod mysql-lcw21

[root@k8s-m01 glusterfs]# kubectl get pod

NAME READY STATUS RESTARTS AGE

exec 0/1 CrashLoopBackOff 453 1d

httpget 1/1 Running 1 1d

mysql-4x9ss 1/1 Running 0 4m

[root@glusterfs03 mnt]# ls

auto.cnf ib_buffer_pool ibdata1 ib_logfile0 ib_logfile1 ibtmp1 mysql performance_schema sys

- Verify

- Delete the pod

- Delete the mysql pod again and refresh the page; if the data is still there, persistence works

8.与jenkins集成实现CI/CD

| ip地址 | 服务 | 内存 |

|---|---|---|

| 10.0.0.61 | kube-apiserver 8080 | 4G |

| 10.0.0.63 | k8s-m03 | 3G |

| 10.0.0.48 | jenkins(tomcat+jdk)8080 +kubectl | 1G |

| 10.0.0.49 | gitlab 8080,80 | 2G |

1.Install GitLab

#a: Install

wget https://mirrors.tuna.tsinghua.edu.cn/gitlab-ce/yum/el7/gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm

yum localinstall gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm -y

#b: Configure

$ egrep -v "^$|#" /etc/gitlab/gitlab.rb

external_url 'http://10.0.0.49'

prometheus_monitoring['enable'] = false # disable Prometheus monitoring to reduce memory usage

#c: Apply the configuration and start the services

gitlab-ctl reconfigure

Web UI account: root, password: 12345678

2.Upload the code

[root@glusterfs03 srv]# pwd

/srv

[root@glusterfs03 srv]# ls

2000.png 21.js icon.png img index.html sound1.mp3

[root@glusterfs03 srv]# git config --global user.email "ylmcr7@163.com"

[root@glusterfs03 srv]# git config --global user.name "ylmcr7"

[root@glusterfs03 srv]# git init

[root@glusterfs03 srv]# git remote add origin http://10.0.0.49/root/xiaoniao.git

[root@glusterfs03 srv]# git add .

[root@glusterfs03 srv]# git commit -m "Initial commit"

[root@glusterfs03 srv]# git push -u origin master

Username for 'http://10.0.0.49': root

Password for 'http://root@10.0.0.49': 12345678

3.Write the Dockerfile and test it

[root@glusterfs03 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@glusterfs03 ~]# yum install docker -y

[root@glusterfs03 ~]# vim /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=10.0.0.61:5000'

[root@glusterfs03 ~]# systemctl restart docker.service

[root@glusterfs03 srv]# ls

2000.png 21.js Dockerfile icon.png img index.html sound1.mp3

[root@glusterfs03 srv]# cat Dockerfile

FROM 10.0.0.61:5000/nginx:1.13

ADD . /usr/share/nginx/html/

[root@glusterfs03 srv]# docker build -t xiaoniaov1 ./

[root@glusterfs03 srv]# docker run --name xiaoniao -p 88:80 -itd xiaoniaov1:latest

4.Push the Dockerfile to GitLab

[root@glusterfs03 srv]# git add .

[root@glusterfs03 srv]# git commit -m "dockerfile"

[root@glusterfs03 srv]# git push

5.Install Jenkins

[root@glusterfs02 ~]# ls

anaconda-ks.cfg jenkins.war apache-tomcat-8.0.27.tar.gz

gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm jdk-8u102-linux-x64.rpm

[root@glusterfs02 ~]# rpm -ivh jdk-8u102-linux-x64.rpm

[root@glusterfs02 ~]# mkdir /app

[root@glusterfs02 ~]# tar xf apache-tomcat-8.0.27.tar.gz -C /app

[root@glusterfs02 ~]# rm -rf /app/apache-tomcat-8.0.27/webapps/*

[root@glusterfs02 ~]# mv jenkins.war /app/apache-tomcat-8.0.27/webapps/ROOT.war

[root@glusterfs02 ~]# tar xf jenkin-data.tar.gz -C /root

[root@glusterfs02 ~]# /app/apache-tomcat-8.0.27/bin/startup.sh

[root@glusterfs02 ~]# netstat -lntup

6.Configure key-based (passwordless) access between GitLab and Jenkins

- Add the Jenkins server's public key to GitLab

[root@glusterfs02 ~]# ssh-keygen -t rsa

[root@glusterfs02 ~]# ls /root/.ssh/

id_rsa id_rsa.pub

[root@glusterfs02 ~]# cat /root/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDkiJclz5K5uwepP9NIY/VWjauYQ3Z7tVGrcliCjCKC44hjtiLp0dZDo+ZomHPWKO2LPidchfUV3YPjJNSykt0ewGyfakwqt0xFOokin+6fve4qFQioQjNSNWRRrEjyqSIIQIPorL6OEXoBNJAc3LcLAbnqclkNKQsRtGXxqmauL3rRc8jntQSOT62PVBF4Yjx41xBE5TxhT4dGUQLQU3otAvVUY2/3vqBLpLWU+0UrtBA7iC7VnoSRCRSjeGeABHLS5sea96R2xJW2Q8pKA2KfXSjnw/fF5iWwrP5CeuYV0NpaM9MEroiZnUeIzRp28qNFj0fyQhqtaDKmpvlFJMuL root@glusterfs02

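- Add the Jenkins server's private key to Jenkins

7.Create a test project to check that the code can be pulled

- After the build finishes, check the console output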

8.Build images with Jenkins

[root@glusterfs02 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@glusterfs02 ~]# yum install docker -y

# Allow Docker to pull from the private registry on 10.0.0.61

[root@glusterfs02 ~]# scp -rp 10.0.0.63:/etc/sysconfig/docker /etc/sysconfig/docker

[root@glusterfs02 ~]# systemctl restart docker
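With Docker available on the Jenkins host, a job's "Execute shell" step can build and push an image for each build. The following is only a sketch under stated assumptions: the image name `xiaoniao`, the `v<BUILD_ID>` tag scheme, and the `DRY_RUN` switch are illustrative choices, not taken from the original setup. `BUILD_ID` is set by Jenkins; the default of 1 only lets the script run by hand.

```shell
#!/bin/sh
# Sketch of a Jenkins "Execute shell" build step.
REGISTRY="10.0.0.61:5000"      # private registry used in this walkthrough
IMAGE_NAME="xiaoniao"          # assumed image name
BUILD_ID="${BUILD_ID:-1}"      # provided by Jenkins; default for manual runs
DRY_RUN="${DRY_RUN:-1}"        # 1 = print the commands instead of running them

# image_ref BUILD: print the full image reference for a given build id.
image_ref() {
    printf '%s/%s:v%s\n' "$REGISTRY" "$IMAGE_NAME" "$1"
}

# run CMD...: execute CMD, or only print it when DRY_RUN=1.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

IMAGE=$(image_ref "$BUILD_ID")
run docker build -t "$IMAGE" .
run docker push "$IMAGE"
```

Tagging by build id keeps every build addressable in the registry, which makes a later rollback to a known image possible. Set `DRY_RUN=0` in the job to execute the commands for real.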

9.Automated release to K8s with Jenkins

- Install the kubectl command on the Jenkins server

[root@glusterfs02 ~]# yum install kubernetes-client.x86_64 -y
[root@glusterfs02 ~]# kubectl -s 10.0.0.61:8080 get nodes
NAME        STATUS     AGE
10.0.0.62   NotReady   5d
10.0.0.63   Ready      4d

- Automatically deploy the application to K8s from Jenkins
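One way to wire this up is a deploy step that creates the deployment on the first release and updates its image on later builds. The following is a hedged sketch, not the original job configuration: the deployment name `xiaoniao`, the image tag scheme, and the `DRY_RUN` switch are assumptions, and the subcommands available depend on the installed kubectl version.

```shell
#!/bin/sh
# Sketch of a Jenkins deploy step (all names below are assumptions).
API_SERVER="10.0.0.61:8080"
APP="xiaoniao"                                   # deployment and container name
IMAGE="10.0.0.61:5000/$APP:v${BUILD_ID:-1}"      # image pushed by the build step
DRY_RUN="${DRY_RUN:-1}"                          # 1 = only print the commands

# run CMD...: execute CMD, or only print it when DRY_RUN=1.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# deploy EXISTS: EXISTS=yes updates the image, anything else creates the deployment.
deploy() {
    if [ "$1" = "yes" ]; then
        run kubectl -s "$API_SERVER" set image "deployment/$APP" "$APP=$IMAGE"
    else
        run kubectl -s "$API_SERVER" create deployment "$APP" --image="$IMAGE"
    fi
}

if [ "$DRY_RUN" = "1" ]; then
    deploy no     # show the first-release command
    deploy yes    # show the update command
elif kubectl -s "$API_SERVER" get deployment "$APP" >/dev/null 2>&1; then
    deploy yes
else
    deploy no
fi
```

If a release misbehaves, `kubectl rollout undo deployment xiaoniao` returns to the previous version, as listed in the command table at the top of this document.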