
1. First, check the current cluster version

```bash
root@k8s-deploy:~# kubectl get node
NAME           STATUS                     ROLES    AGE     VERSION
172.31.7.101   Ready,SchedulingDisabled   master   5h22m   v1.24.2
172.31.7.102   Ready,SchedulingDisabled   master   5h22m   v1.24.2
172.31.7.103   Ready,SchedulingDisabled   master   3h8m    v1.24.2
172.31.7.111   Ready                      node     5h20m   v1.24.2
172.31.7.112   Ready                      node     5h20m   v1.24.2
172.31.7.113   Ready                      node     3h22m   v1.24.2
root@k8s-deploy:~#
```
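Before picking the target release, a small check can confirm that it only raises the patch number, which is what the note below recommends. This is a sketch that assumes plain `vMAJOR.MINOR.PATCH` strings; the function name `is_patch_upgrade` is hypothetical and not part of kubeasz or kubectl.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) only if TARGET is a patch upgrade of CURRENT,
# i.e. same major.minor and a higher patch number. Versions look like "v1.24.2".
is_patch_upgrade() {
    local cur="${1#v}" tgt="${2#v}"
    local cur_maj cur_min cur_pat tgt_maj tgt_min tgt_pat
    IFS=. read -r cur_maj cur_min cur_pat <<< "$cur"
    IFS=. read -r tgt_maj tgt_min tgt_pat <<< "$tgt"
    [ "$cur_maj" = "$tgt_maj" ] && [ "$cur_min" = "$tgt_min" ] && [ "$tgt_pat" -gt "$cur_pat" ]
}

# Example usage against the running cluster (the VERSION column is the kubelet version):
# for v in $(kubectl get node -o jsonpath='{.items[*].status.nodeInfo.kubeletVersion}'); do
#     is_patch_upgrade "$v" v1.24.3 || echo "not a patch upgrade from $v"
# done
```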

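Note: when upgrading a cluster, it is best to stay within the same minor release rather than jump across minor releases. For example, 1.24.2 can be upgraded to 1.24.x, but you should avoid going directly to 1.25, because the server components of different versions are not necessarily 100% compatible. The upgrade works mainly by replacing binaries: the kube-apiserver, kubelet, kube-proxy and other binaries are swapped for the new versions.

I. Download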

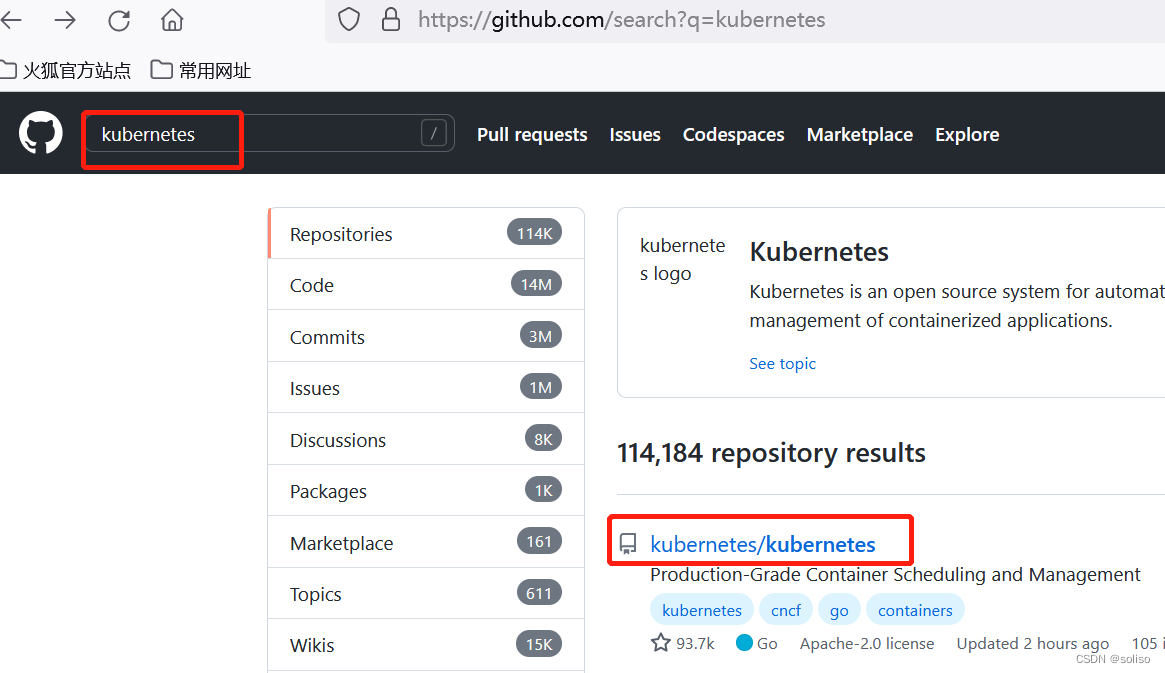

Go to GitHub and download the packages for the new version.

1. Search for kubernetes, then click the kubernetes repository.

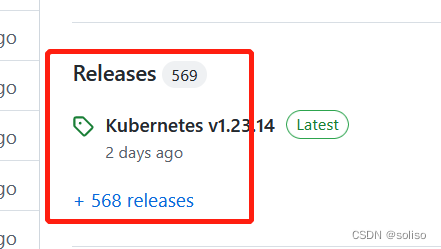

2. On the page opened in the previous step, click Releases in the box at the lower right.

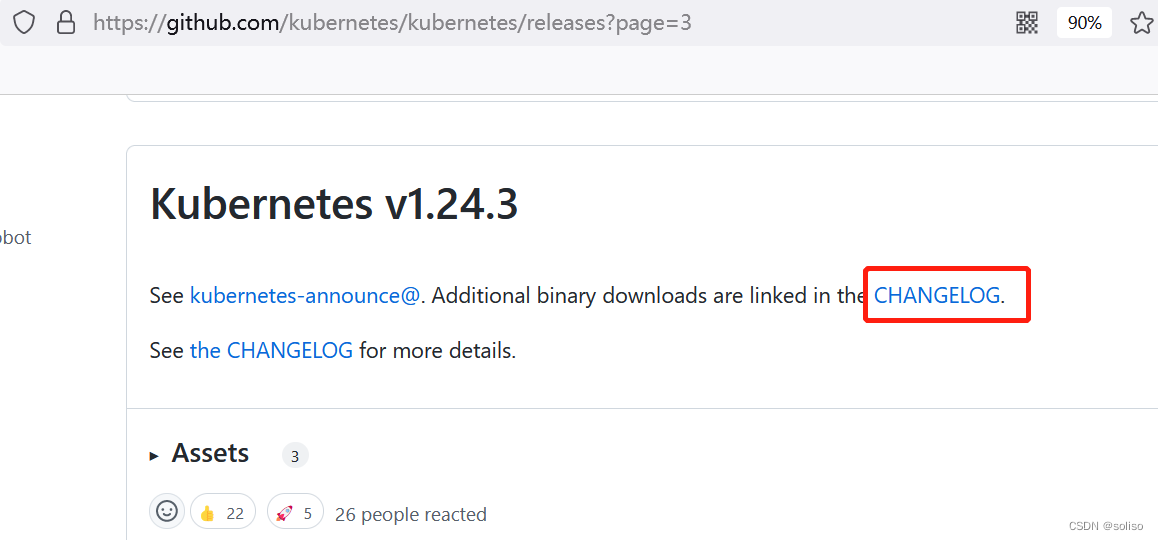

3. Find the target version, 1.24.3.

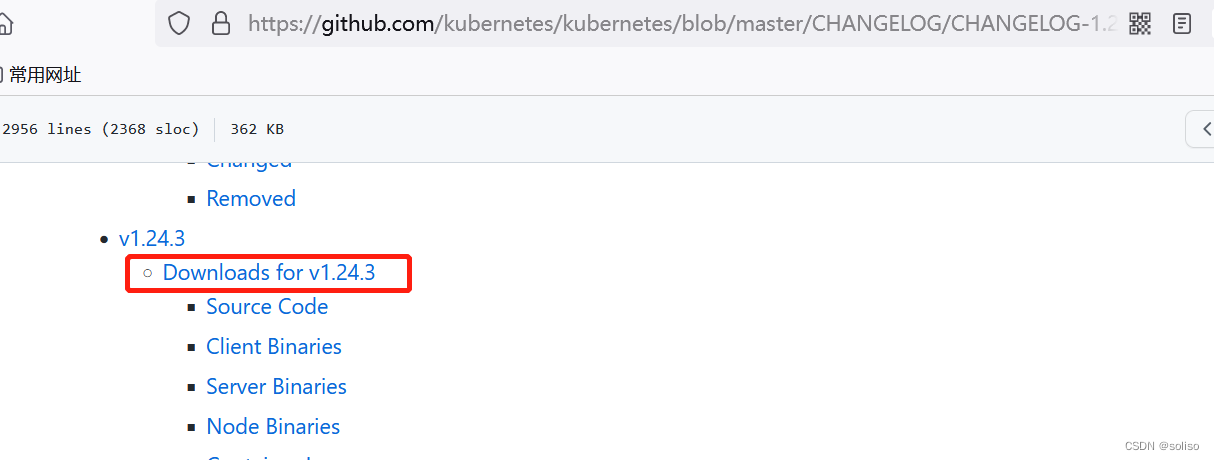

4. Click the download link.

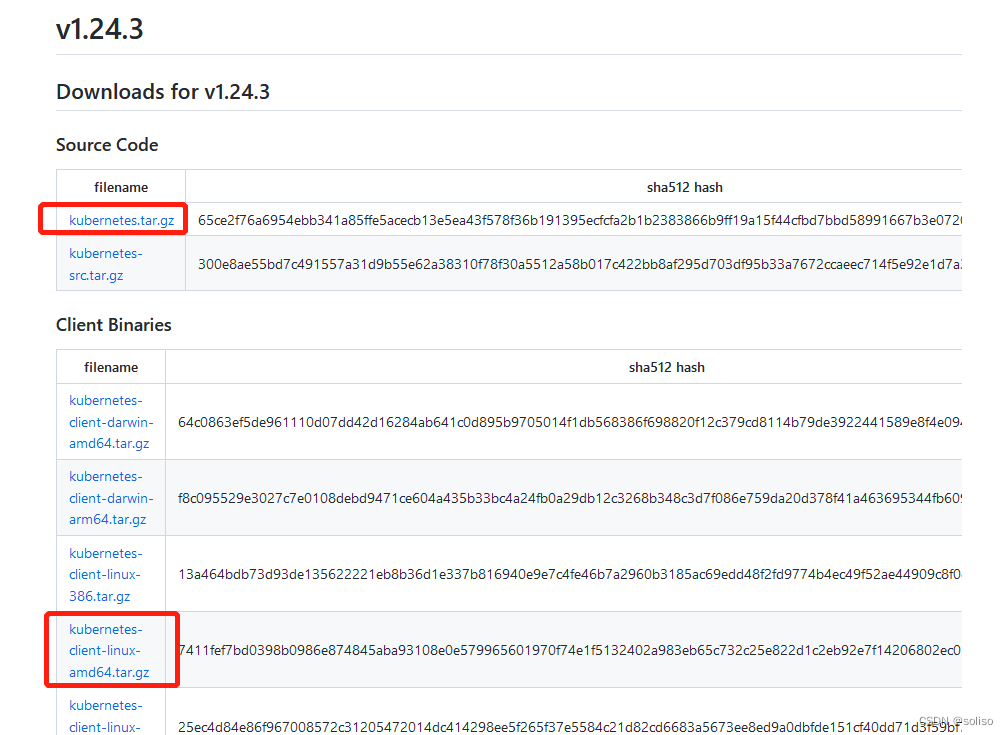

5. Download the four packages needed for the upgrade.

Download links and screenshot:

https://dl.k8s.io/v1.24.3/kubernetes.tar.gz

https://dl.k8s.io/v1.24.3/kubernetes-client-linux-amd64.tar.gz

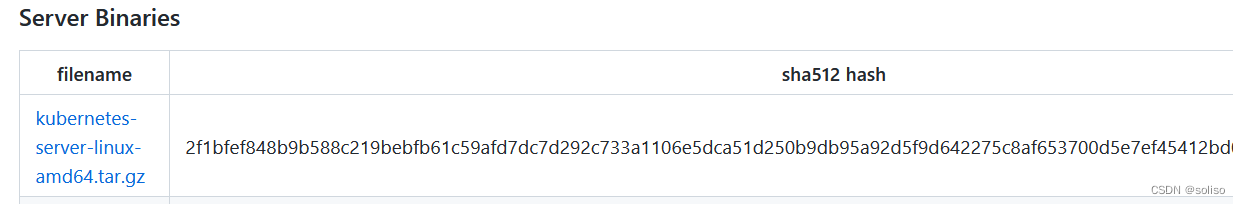

Download link and screenshot:

https://dl.k8s.io/v1.24.3/kubernetes-server-linux-amd64.tar.gz

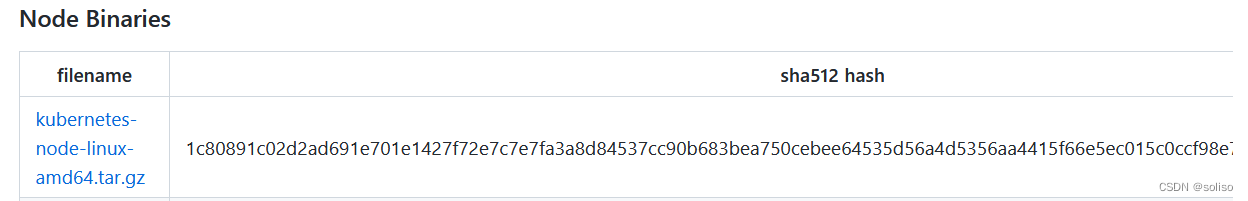

Download link:

https://dl.k8s.io/v1.24.3/kubernetes-node-linux-amd64.tar.gz
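The four downloads can also be scripted. A minimal sketch, assuming `curl` is available on the deploy host and that the URL pattern shown above holds for the version you pass in; `release_urls` and `download_release` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Print the four release tarball URLs used in this article for a given version.
release_urls() {
    local version="$1" pkg
    for pkg in kubernetes kubernetes-client-linux-amd64 kubernetes-server-linux-amd64 kubernetes-node-linux-amd64; do
        echo "https://dl.k8s.io/${version}/${pkg}.tar.gz"
    done
}

# Download all four into the current directory
# (-f: fail on HTTP errors, -L: follow redirects, -O: keep the remote file name).
download_release() {
    local url
    for url in $(release_urls "$1"); do
        curl -fLO "$url" || return 1
    done
}

# Usage: download_release v1.24.3
```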

II. Upload

Upload the four downloaded packages to the deployment server.

1. Upload to the deployment server

```bash
root@k8s-deploy:~# ls
ezdown  kubernetes-client-linux-amd64.tar.gz  kubernetes-server-linux-amd64.tar.gz  ls
kubernetes-node-linux-amd64.tar.gz  kubernetes.tar.gz  snap
```

2. Extract

```bash
root@k8s-deploy:~# tar xf kubernetes-client-linux-amd64.tar.gz
root@k8s-deploy:~# tar xf kubernetes-node-linux-amd64.tar.gz
root@k8s-deploy:~# tar xf kubernetes-server-linux-amd64.tar.gz
root@k8s-deploy:~# tar xf kubernetes.tar.gz
```
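Equivalently, all tarballs can be unpacked in one loop. A sketch (the helper name `extract_release` is hypothetical) that also checks that the server binaries ended up in `kubernetes/server/bin`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract every kubernetes*.tar.gz in DIR (default: current directory).
# All four tarballs unpack into the same top-level "kubernetes/" directory.
extract_release() {
    local dir="${1:-.}" f
    for f in "$dir"/kubernetes*.tar.gz; do
        [ -e "$f" ] || { echo "no tarballs found in $dir" >&2; return 1; }
        tar -xzf "$f" -C "$dir" || return 1
    done
    # Succeed only if the server binaries are present after extraction.
    [ -d "$dir/kubernetes/server/bin" ]
}

# Usage: extract_release ~
```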

After extraction there is a kubernetes directory:

```bash
root@k8s-deploy:~# ls
ezdown  kubernetes-client-linux-amd64.tar.gz  kubernetes-server-linux-amd64.tar.gz  ls
kubernetes  kubernetes-node-linux-amd64.tar.gz  kubernetes.tar.gz  snap
root@k8s-deploy:~# cd kubernetes/
```

The kubernetes/cluster/addons/ directory contains many add-ons:

```bash
root@k8s-deploy:~/kubernetes# ll cluster/addons/
total 80
drwxr-xr-x 18 root root 4096 Jul 13 14:48 ./
drwxr-xr-x  9 root root 4096 Jul 13 14:48 ../
drwxr-xr-x  2 root root 4096 Jul 13 14:48 addon-manager/
drwxr-xr-x  3 root root 4096 Jul 13 14:48 calico-policy-controller/
drwxr-xr-x  3 root root 4096 Jul 13 14:48 cluster-loadbalancing/
drwxr-xr-x  3 root root 4096 Jul 13 14:48 device-plugins/
drwxr-xr-x  5 root root 4096 Jul 13 14:48 dns/
drwxr-xr-x  2 root root 4096 Jul 13 14:48 dns-horizontal-autoscaler/
drwxr-xr-x  4 root root 4096 Jul 13 14:48 fluentd-gcp/
drwxr-xr-x  3 root root 4096 Jul 13 14:48 ip-masq-agent/
drwxr-xr-x  2 root root 4096 Jul 13 14:48 kube-proxy/
drwxr-xr-x  3 root root 4096 Jul 13 14:48 metadata-agent/
drwxr-xr-x  3 root root 4096 Jul 13 14:48 metadata-proxy/
drwxr-xr-x  2 root root 4096 Jul 13 14:48 metrics-server/
drwxr-xr-x  5 root root 4096 Jul 13 14:48 node-problem-detector/
-rw-r--r--  1 root root  104 Jul 13 14:48 OWNERS
drwxr-xr-x  8 root root 4096 Jul 13 14:48 rbac/
-rw-r--r--  1 root root 1655 Jul 13 14:48 README.md
drwxr-xr-x  8 root root 4096 Jul 13 14:48 storage-class/
drwxr-xr-x  4 root root 4096 Jul 13 14:48 volumesnapshots/
```

For example, the dns directory contains the official YAML files. Since we use CoreDNS, look one level further down:

```bash
root@k8s-deploy:~/kubernetes# ll cluster/addons/dns
total 24
drwxr-xr-x  5 root root 4096 Jul 13 14:48 ./
drwxr-xr-x 18 root root 4096 Jul 13 14:48 ../
drwxr-xr-x  2 root root 4096 Jul 13 14:48 coredns/
drwxr-xr-x  2 root root 4096 Jul 13 14:48 kube-dns/
drwxr-xr-x  2 root root 4096 Jul 13 14:48 nodelocaldns/
-rw-r--r--  1 root root  141 Jul 13 14:48 OWNERS
```

You only need to copy the YAML file from the coredns directory and modify it:

```bash
root@k8s-deploy:~/kubernetes# ll cluster/addons/dns/coredns/
total 44
drwxr-xr-x 2 root root 4096 Jul 13 14:48 ./
drwxr-xr-x 5 root root 4096 Jul 13 14:48 ../
-rw-r--r-- 1 root root 5060 Jul 13 14:48 coredns.yaml.base
-rw-r--r-- 1 root root 5110 Jul 13 14:48 coredns.yaml.in
-rw-r--r-- 1 root root 5112 Jul 13 14:48 coredns.yaml.sed
-rw-r--r-- 1 root root 1075 Jul 13 14:48 Makefile
-rw-r--r-- 1 root root  344 Jul 13 14:48 transforms2salt.sed
-rw-r--r-- 1 root root  287 Jul 13 14:48 transforms2sed.sed
```

We won't modify it for now; it will be used later.

Next, find the following binaries: kube-apiserver, kube-controller-manager, kube-scheduler, kube-proxy, kubectl and kubelet. These are the main ones, and all of them are prebuilt by the Kubernetes project:

```bash
root@k8s-deploy:~/kubernetes# ll server/bin/
total 1089112
drwxr-xr-x 2 root root      4096 Jul 13 14:43 ./
drwxr-xr-x 3 root root      4096 Jul 13 14:48 ../
-rwxr-xr-x 1 root root  55402496 Jul 13 14:43 apiextensions-apiserver*
-rwxr-xr-x 1 root root  44376064 Jul 13 14:43 kubeadm*
-rwxr-xr-x 1 root root  49496064 Jul 13 14:43 kube-aggregator*
-rwxr-xr-x 1 root root 125865984 Jul 13 14:43 kube-apiserver*
-rw-r--r-- 1 root root         8 Jul 13 14:41 kube-apiserver.docker_tag
-rw------- 1 root root 131066880 Jul 13 14:41 kube-apiserver.tar
-rwxr-xr-x 1 root root 115515392 Jul 13 14:43 kube-controller-manager*
-rw-r--r-- 1 root root         8 Jul 13 14:41 kube-controller-manager.docker_tag
-rw------- 1 root root 120716288 Jul 13 14:41 kube-controller-manager.tar
-rwxr-xr-x 1 root root  45711360 Jul 13 14:43 kubectl*
-rwxr-xr-x 1 root root  55036584 Jul 13 14:43 kubectl-convert*
-rwxr-xr-x 1 root root 116013432 Jul 13 14:43 kubelet*
-rwxr-xr-x 1 root root   1486848 Jul 13 14:43 kube-log-runner*
-rwxr-xr-x 1 root root  41762816 Jul 13 14:43 kube-proxy*
-rw-r--r-- 1 root root         8 Jul 13 14:41 kube-proxy.docker_tag
-rw------- 1 root root 111863808 Jul 13 14:41 kube-proxy.tar
-rwxr-xr-x 1 root root  47144960 Jul 13 14:43 kube-scheduler*
-rw-r--r-- 1 root root         8 Jul 13 14:41 kube-scheduler.docker_tag
-rw------- 1 root root  52345856 Jul 13 14:41 kube-scheduler.tar
-rwxr-xr-x 1 root root   1413120 Jul 13 14:43 mounter*
```
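Rather than checking only kube-apiserver, the control-plane and node binaries can be checked in one pass. A sketch, assuming each binary prints `Kubernetes vX.Y.Z` for `--version`, as kube-apiserver does in the check that follows; `check_versions` is a hypothetical name, and kubectl is left out because its version is normally checked with `kubectl version --client`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run "<binary> --version" for each Kubernetes binary in BIN_DIR
# and report any whose output is not exactly "Kubernetes <expected version>".
check_versions() {
    local bin_dir="$1" expected="$2" b out rc=0
    for b in kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet; do
        out="$("$bin_dir/$b" --version 2>/dev/null)"
        if [ "$out" != "Kubernetes $expected" ]; then
            echo "$b: got '${out}', expected 'Kubernetes $expected'" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Usage: check_versions ~/kubernetes/server/bin v1.24.3
```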

Check the version to confirm it is the one we need:

```bash
root@k8s-deploy:~/kubernetes# ./server/bin/kube-apiserver --version
Kubernetes v1.24.3
```

III. Upgrade

1. Upgrade master1

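Before a master is upgraded, its API server has to be removed from the load balancer (kube-lb) on every node, so that no more requests are forwarded to that machine.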

We start with master1, 172.31.7.101.

1. Edit the configuration file on node1

```bash
root@node1:~# vim /etc/kube-lb/conf/kube-lb.conf
```

Comment out the master being upgraded:

```nginx
upstream backend {
    server 172.31.7.103:6443 max_fails=2 fail_timeout=3s;
#    server 172.31.7.101:6443 max_fails=2 fail_timeout=3s;
    server 172.31.7.102:6443 max_fails=2 fail_timeout=3s;
}
```

```bash
root@node1:~# systemctl reload kube-lb.service
```
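The manual edit can also be done with `sed`. Note that the substitution used on node2 and node3 in the next steps, `s/server 172.31.7.101/#server 172.31.7.101/`, adds another `#` every time it is run. A sketch of an idempotent variant, assuming GNU sed (for `-i`) and the `server IP:port` line format shown above; `lb_disable` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out the "server <ip>:..." line for one API server in
# kube-lb.conf. The pattern only matches a line that is not commented out yet,
# so running it twice does not produce "##".
lb_disable() {
    local ip="$1" conf="${2:-/etc/kube-lb/conf/kube-lb.conf}"
    local ip_re
    ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')   # escape dots so they match literally
    sed -i -E "s/^([[:space:]]*)server ${ip_re}:/\1# server ${ip}:/" "$conf"
}

# Usage on a node: lb_disable 172.31.7.101 && systemctl reload kube-lb.service
```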

2. Remove the API server on node2

```bash
root@node2:~# sed -i 's/server 172.31.7.101/#server 172.31.7.101/' /etc/kube-lb/conf/kube-lb.conf
root@node2:~# systemctl reload kube-lb.service
```

3. Remove the API server on node3

```bash
root@node3:~# sed -i 's/server 172.31.7.101/#server 172.31.7.101/' /etc/kube-lb/conf/kube-lb.conf
root@node3:~# systemctl reload kube-lb.service
```

Once this is done, no more requests are forwarded to master1, which is effectively the same as taking this API server offline.
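Steps 1 to 3 can be run from the deploy host in one loop. A sketch, assuming root SSH access from the deploy host to the nodes (the article already uses scp this way) and the node IPs of this cluster; the `NODES` array and `remove_apiserver_from_lb` are hypothetical names, and `DRY_RUN=1` only prints the commands.

```bash
#!/usr/bin/env bash
# Hypothetical helper: take one API server out of kube-lb on every node over SSH,
# then reload kube-lb. Set DRY_RUN=1 to only print the commands.
NODES=(172.31.7.111 172.31.7.112 172.31.7.113)

remove_apiserver_from_lb() {
    local ip="$1" node ip_re
    ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')   # escape dots for the sed regex
    # Only matches uncommented lines, so re-running it is harmless.
    local cmd="sed -i -E 's/^([[:space:]]*)server ${ip_re}:/\\1# server ${ip}:/' /etc/kube-lb/conf/kube-lb.conf && systemctl reload kube-lb.service"
    for node in "${NODES[@]}"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "ssh $node \"$cmd\""
        else
            ssh "$node" "$cmd" || return 1
        fi
    done
}

# Usage: remove_apiserver_from_lb 172.31.7.101
```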

Next, stop the control-plane services on master1; otherwise the running processes keep the binaries in use and they cannot be replaced.

Stop the following five services:

- kube-apiserver.service
- kube-controller-manager.service
- kubelet.service
- kube-proxy.service
- kube-scheduler.service

4. Stop the services

```bash
root@master1:~# systemctl stop kube-apiserver.service kube-controller-manager.service kubelet.service kube-proxy.service kube-scheduler.service
root@master1:~#
```

These binaries are all located in /usr/local/bin/:

```bash
root@master1:~# ll /usr/local/bin/
total 826520
drwxr-xr-x  2 root root      4096 Nov 12 12:02 ./
drwxr-xr-x 10 root root      4096 Aug 24  2021 ../
-rwxr-xr-x  1 root root   4159518 Nov 12 12:02 bandwidth*
-rwxr-xr-x  1 root root   4221977 Nov 11 07:46 bridge*
-rwxr-xr-x  1 root root  41472000 Nov 12 12:02 calico*
-rwxr-xr-x  1 root root  44978176 Nov 11 08:51 calicoctl*
-rwxr-xr-x  1 root root  41472000 Nov 12 12:02 calico-ipam*
-rwxr-xr-x  1 root root  59584224 Nov 11 03:51 containerd*
-rwxr-xr-x  1 root root   7389184 Nov 11 03:51 containerd-shim*
-rwxr-xr-x  1 root root   9555968 Nov 11 03:51 containerd-shim-runc-v1*
-rwxr-xr-x  1 root root   9580544 Nov 11 03:51 containerd-shim-runc-v2*
-rwxr-xr-x  1 root root  33228961 Nov 11 03:52 crictl*
-rwxr-xr-x  1 root root  30256896 Nov 11 03:52 ctr*
-rwxr-xr-x  1 root root   3069556 Nov 12 12:02 flannel*
-rwxr-xr-x  1 root root   3614480 Nov 12 12:02 host-local*
-rwxr-xr-x  1 root root  41472000 Nov 12 12:02 install*
-rwxr-xr-x  1 root root 125865984 Nov 11 07:45 kube-apiserver*
-rwxr-xr-x  1 root root 115507200 Nov 11 07:45 kube-controller-manager*
-rwxr-xr-x  1 root root  45711360 Nov 11 07:45 kubectl*
-rwxr-xr-x  1 root root 116353400 Nov 11 07:46 kubelet*
-rwxr-xr-x  1 root root  41762816 Nov 11 07:46 kube-proxy*
-rwxr-xr-x  1 root root  47144960 Nov 11 07:45 kube-scheduler*
-rwxr-xr-x  1 root root   3209463 Nov 12 12:02 loopback*
-rwxr-xr-x  1 root root   3939867 Nov 12 12:02 portmap*
-rwxr-xr-x  1 root root   9419136 Nov 11 03:52 runc*
-rwxr-xr-x  1 root root   3356587 Nov 12 12:02 tuning*
```

They are all currently at v1.24.2:

```bash
root@master1:~# /usr/local/bin/kube-apiserver --version
Kubernetes v1.24.2
```

Copying the new binaries into /usr/local/bin/ completes the upgrade.

5. Copy the binaries to perform the upgrade

```bash
root@k8s-deploy:~/kubernetes/server/bin# scp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl 172.31.7.101:/usr/local/bin/
kube-apiserver            100%  120MB  74.6MB/s   00:01
kube-controller-manager   100%  110MB  80.7MB/s   00:01
kube-scheduler            100%   45MB  54.7MB/s   00:00
kube-proxy                100%   40MB  73.5MB/s   00:00
kubelet                   100%  111MB  60.1MB/s   00:01
kubectl                   100%   44MB  68.0MB/s   00:00
```

6. Back on master1, verify that the version has been upgraded

```bash
root@master1:~# /usr/local/bin/kube-apiserver --version
Kubernetes v1.24.3
```

7. Start the services

```bash
root@master1:~# systemctl start kube-apiserver.service kube-controller-manager.service kubelet.service kube-proxy.service kube-scheduler.service
root@master1:~#
```
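Steps 4 to 7 can be wrapped into one function per master. A sketch, assuming it runs from `~/kubernetes/server/bin` on the deploy host with root SSH access to the masters, and that the master has already been removed from kube-lb on every node; `run`, `upgrade_master` and the two arrays are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring steps 4-7 for one master: stop the five services,
# copy the new binaries with scp, then start the services again.
# Set DRY_RUN=1 to only print the commands.
MASTER_SERVICES=(kube-apiserver.service kube-controller-manager.service kubelet.service kube-proxy.service kube-scheduler.service)
MASTER_BINARIES=(kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl)

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

upgrade_master() {
    local host="$1"
    run ssh "$host" "systemctl stop ${MASTER_SERVICES[*]}" || return 1
    run scp "${MASTER_BINARIES[@]}" "$host:/usr/local/bin/" || return 1
    run ssh "$host" "systemctl start ${MASTER_SERVICES[*]}"
}

# Usage: upgrade_master 172.31.7.101
```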

8. Check the cluster version again: 172.31.7.101 is now at v1.24.3

```bash
root@k8s-deploy:~/kubernetes/server/bin# kubectl get node
NAME           STATUS                     ROLES    AGE     VERSION
172.31.7.101   Ready,SchedulingDisabled   master   6h36m   v1.24.3
172.31.7.102   Ready,SchedulingDisabled   master   6h36m   v1.24.2
172.31.7.103   Ready,SchedulingDisabled   master   4h21m   v1.24.2
172.31.7.111   Ready                      node     6h33m   v1.24.2
172.31.7.112   Ready                      node     6h33m   v1.24.2
172.31.7.113   Ready                      node     4h36m   v1.24.2
```

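The version shown here is the one reported by the kubelet: as soon as the kubelet has been updated, the node shows v1.24.3, even if kube-proxy has not been updated yet.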
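Because the VERSION column is the kubelet version, it can also be read directly from `.status.nodeInfo.kubeletVersion`. A sketch that lists the nodes not yet on the target version; `nodes_not_at` is a hypothetical helper that reads `NAME VERSION` lines on stdin:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the names of nodes whose kubelet version differs from
# the target. Input is "NAME VERSION" lines, e.g. the output of:
#   kubectl get node --no-headers \
#     -o custom-columns=NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion
nodes_not_at() {
    local target="$1"
    awk -v t="$target" '$2 != t { print $1 }'
}

# Usage:
# kubectl get node --no-headers \
#   -o custom-columns=NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion \
#   | nodes_not_at v1.24.3
```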

2. Upgrade master2 and master3

1. Remove master2 and master3 from the load balancer; in the meantime the nodes keep working normally through master1

```bash
root@node1:~# vim /etc/kube-lb/conf/kube-lb.conf
```

```nginx
#    server 172.31.7.103:6443 max_fails=2 fail_timeout=3s;
    server 172.31.7.101:6443 max_fails=2 fail_timeout=3s;
#    server 172.31.7.102:6443 max_fails=2 fail_timeout=3s;
```

```bash
root@node1:~# systemctl reload kube-lb.service
```

2. Make the same change on the other two nodes and reload kube-lb there as well

Stop the five services on master2 and master3:

```bash
root@master2:~# systemctl stop kube-apiserver.service kube-controller-manager.service kubelet.service kube-proxy.service kube-scheduler.service
root@master3:~# systemctl stop kube-apiserver.service kube-controller-manager.service kubelet.service kube-proxy.service kube-scheduler.service
```

3. Copy the new binaries from the deployment node to master2 and master3

```bash
root@k8s-deploy:~/kubernetes/server/bin# scp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl 172.31.7.102:/usr/local/bin/
root@k8s-deploy:~/kubernetes/server/bin# scp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl 172.31.7.103:/usr/local/bin/
```

4. Once the files are copied, start the services on master2 and master3

```bash
root@master2:~# systemctl start kube-apiserver.service kube-controller-manager.service kubelet.service kube-proxy.service kube-scheduler.service
root@master3:~# systemctl start kube-apiserver.service kube-controller-manager.service kubelet.service kube-proxy.service kube-scheduler.service
```

5. Check the cluster version: all three master nodes have been upgraded

```bash
root@k8s-deploy:~/kubernetes/server/bin# kubectl get node
NAME           STATUS                     ROLES    AGE     VERSION
172.31.7.101   Ready,SchedulingDisabled   master   6h56m   v1.24.3
172.31.7.102   Ready,SchedulingDisabled   master   6h56m   v1.24.3
172.31.7.103   Ready,SchedulingDisabled   master   4h41m   v1.24.3
172.31.7.111   Ready                      node     6h53m   v1.24.2
172.31.7.112   Ready                      node     6h53m   v1.24.2
172.31.7.113   Ready                      node     4h56m   v1.24.2
```

3. Upgrade the worker nodes

Upgrading a node is a little different from upgrading a master: the masters run no application workloads, but the nodes do.

So each node should be drained first. Once its workloads have been evicted, the node has no application containers left and can be upgraded.

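Every node runs DaemonSet pods, such as calico-node, which cannot be evicted. Since one such pod runs on every node, it cannot be moved to another node; it also does not need to be recreated elsewhere, because every node already has one. These pods can therefore be ignored during the drain.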

```bash
root@k8s-deploy:~/kubernetes/server/bin# kubectl get pod -o wide -n kube-system
NAME                                       READY   STATUS    RESTARTS        AGE     IP             NODE           NOMINATED NODE   READINESS GATES
calico-kube-controllers-69f77c86d8-2bzzt   1/1     Running   1 (103m ago)    7h1m    172.31.7.111   172.31.7.111   <none>           <none>
calico-node-9cg2k                          1/1     Running   1 (4h59m ago)   4h59m   172.31.7.111   172.31.7.111   <none>           <none>
calico-node-dctqp                          1/1     Running   1 (102m ago)    5h1m    172.31.7.102   172.31.7.102   <none>           <none>
calico-node-l7zf8                          1/1     Running   0               5h4m    172.31.7.113   172.31.7.113   <none>           <none>
calico-node-qw6c6                          1/1     Running   1 (10m ago)     4h50m   172.31.7.103   172.31.7.103   <none>           <none>
calico-node-rcvkr                          1/1     Running   2 (102m ago)    4h59m   172.31.7.101   172.31.7.101   <none>           <none>
calico-node-vgvzm                          1/1     Running   0               5h4m    172.31.7.112   172.31.7.112   <none>           <none>
```

1. Drain the node

```bash
root@k8s-deploy:~# kubectl drain 172.31.7.111 --ignore-daemonsets --force
root@k8s-deploy:~# kubectl get node
NAME           STATUS                     ROLES    AGE   VERSION
172.31.7.101   Ready,SchedulingDisabled   master   25h   v1.24.3
172.31.7.102   Ready,SchedulingDisabled   master   25h   v1.24.3
172.31.7.103   Ready,SchedulingDisabled   master   23h   v1.24.3
172.31.7.111   Ready,SchedulingDisabled   node     25h   v1.24.2
172.31.7.112   Ready                      node     25h   v1.24.2
172.31.7.113   Ready                      node     23h   v1.24.2
```

172.31.7.111 is now marked SchedulingDisabled as well: its workloads have been moved away, and no new pods will be scheduled onto this host.
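A sketch of the drain step as a reusable function. `--ignore-daemonsets` and `--force` are the flags used above; `--delete-emptydir-data` and `--timeout=300s` are additions assumed here (the former is needed when pods use emptyDir volumes, and that data is discarded), so drop them if they do not fit your workloads. `drain_node` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around "kubectl drain":
#   --ignore-daemonsets     skip DaemonSet pods such as calico-node
#   --force                 also evict pods that are not managed by a controller
#   --delete-emptydir-data  allow evicting pods with emptyDir volumes (data is lost)
#   --timeout               give up after the given duration
# Set DRY_RUN=1 to only print the command.
drain_node() {
    local node="$1"
    local cmd=(kubectl drain "$node" --ignore-daemonsets --force --delete-emptydir-data --timeout=300s)
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "${cmd[*]}"; else "${cmd[@]}"; fi
}

# Usage: drain_node 172.31.7.111
# After the upgrade: kubectl uncordon 172.31.7.111
```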

2. Stop the services on the node and upgrade it

```bash
root@node1:~# systemctl stop kubelet.service kube-proxy.service
```

3. Copy the new binaries to the node

```bash
root@k8s-deploy:~/kubernetes/server/bin# scp kubelet kube-proxy kubectl 172.31.7.111:/usr/local/bin/
```

4. Start the services

```bash
root@node1:~# systemctl start kubelet.service kube-proxy.service
```

5. Check node1's version

```bash
root@node1:~# kubectl get node
NAME           STATUS                     ROLES    AGE   VERSION
172.31.7.101   Ready,SchedulingDisabled   master   26h   v1.24.3
172.31.7.102   Ready,SchedulingDisabled   master   26h   v1.24.3
172.31.7.103   Ready,SchedulingDisabled   master   23h   v1.24.3
172.31.7.111   Ready,SchedulingDisabled   node     25h   v1.24.3
172.31.7.112   Ready                      node     25h   v1.24.2
172.31.7.113   Ready                      node     24h   v1.24.2
```

6. Make node1 schedulable again

```bash
root@k8s-deploy:~/kubernetes/server/bin# kubectl uncordon 172.31.7.111
node/172.31.7.111 uncordoned
```

7. Check the scheduling status of the cluster nodes

```bash
root@k8s-deploy:~/kubernetes/server/bin# kubectl get node
NAME           STATUS                     ROLES    AGE   VERSION
172.31.7.101   Ready,SchedulingDisabled   master   26h   v1.24.3
172.31.7.102   Ready,SchedulingDisabled   master   26h   v1.24.3
172.31.7.103   Ready,SchedulingDisabled   master   23h   v1.24.3
172.31.7.111   Ready                      node     26h   v1.24.3
172.31.7.112   Ready                      node     26h   v1.24.2
172.31.7.113   Ready                      node     24h   v1.24.2
```

Upgrade the other two nodes

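The steps above show the more careful approach for a production environment with live workloads: drain first, then upgrade. For the remaining two nodes I skip the drain and upgrade them directly: stop the services, copy over the new binaries, then start the services again.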
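The stop/copy/start sequence for the remaining nodes can be looped. A sketch, assuming it runs from `~/kubernetes/server/bin` on the deploy host with root SSH access to the nodes; `DRAIN=1` adds the drain and uncordon steps used for node1, and `DRY_RUN=1` only prints the commands. `run` and `upgrade_node` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper for one worker node: optionally drain, stop kubelet/kube-proxy,
# copy the new binaries, start the services, optionally uncordon.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

upgrade_node() {
    local node="$1"
    if [ "${DRAIN:-0}" = 1 ]; then
        run kubectl drain "$node" --ignore-daemonsets --force || return 1
    fi
    run ssh "$node" "systemctl stop kubelet.service kube-proxy.service" || return 1
    run scp kubelet kube-proxy kubectl "$node:/usr/local/bin/" || return 1
    run ssh "$node" "systemctl start kubelet.service kube-proxy.service" || return 1
    if [ "${DRAIN:-0}" = 1 ]; then
        run kubectl uncordon "$node"
    fi
}

# Usage: for n in 172.31.7.112 172.31.7.113; do upgrade_node "$n" || break; done
```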

1. Upgrade node2

```bash
root@node2:~# systemctl stop kubelet.service kube-proxy.service
root@k8s-deploy:~/kubernetes/server/bin# scp kubelet kube-proxy kubectl 172.31.7.112:/usr/local/bin/
root@node2:~# systemctl start kubelet.service kube-proxy.service
```

2. Upgrade node3

```bash
root@node3:~# systemctl stop kubelet.service kube-proxy.service
root@k8s-deploy:~/kubernetes/server/bin# scp kubelet kube-proxy kubectl 172.31.7.113:/usr/local/bin/
root@node3:~# systemctl start kubelet.service kube-proxy.service
```

Check the cluster version:

```bash
root@node3:~# kubectl get node
NAME           STATUS                     ROLES    AGE   VERSION
172.31.7.101   Ready,SchedulingDisabled   master   26h   v1.24.3
172.31.7.102   Ready,SchedulingDisabled   master   26h   v1.24.3
172.31.7.103   Ready,SchedulingDisabled   master   23h   v1.24.3
172.31.7.111   Ready                      node     26h   v1.24.3
172.31.7.112   Ready                      node     26h   v1.24.3
172.31.7.113   Ready                      node     24h   v1.24.3
```

The cluster upgrade is complete. Now uncomment all API server entries in /etc/kube-lb/conf/kube-lb.conf on every node again:
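Instead of editing the file with vim on every node, the comments can be removed with sed. A sketch, assuming GNU sed and that the API servers listen on port 6443 as in this cluster; `lb_enable_all` is a hypothetical helper that handles both the `# server` and `#server` forms produced earlier:

```bash
#!/usr/bin/env bash
# Hypothetical helper: re-enable every commented-out "server <ip>:6443" line in
# kube-lb.conf, whether it was commented as "# server" or "#server".
lb_enable_all() {
    local conf="${1:-/etc/kube-lb/conf/kube-lb.conf}"
    sed -i -E 's/^([[:space:]]*)#[[:space:]]*server ([0-9.]+:6443)/\1server \2/' "$conf"
}

# Usage on each node: lb_enable_all && systemctl reload kube-lb.service
```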

```bash
root@node1:~# vim /etc/kube-lb/conf/kube-lb.conf
root@node1:~# systemctl reload kube-lb.service
root@node2:~# vim /etc/kube-lb/conf/kube-lb.conf
root@node2:~# systemctl reload kube-lb.service
root@node3:~# vim /etc/kube-lb/conf/kube-lb.conf
root@node3:~# systemctl reload kube-lb.service
```

Finally, copy these binaries into /etc/kubeasz/bin/ as well, so that any node added later also gets the new binaries and therefore the new version.

```bash
root@k8s-deploy:~/kubernetes/server/bin# \cp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl /etc/kubeasz/bin/
```

This completes the upgrade of the masters and nodes. I hope it helps, thanks!
