The resource files used in this article can be downloaded from: https://download.csdn.net/download/weixin_46560589/87403746

I. Integrating K8s with Ceph RBD for Data Persistence

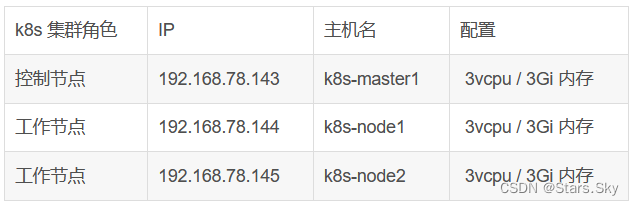

- k8s-v1.23 environment

1.1 Installing Ceph on K8s

For Kubernetes to use Ceph, ceph-common must be installed on every k8s node. Copy the ceph.repo file from a Ceph node into /etc/yum.repos.d/ on each k8s node, then run yum install ceph-common -y on each k8s node:

[root@master1-admin ceph]# scp /etc/yum.repos.d/ceph.repo 192.168.78.143:/etc/yum.repos.d/

[root@master1-admin ceph]# scp /etc/yum.repos.d/ceph.repo 192.168.78.144:/etc/yum.repos.d/

[root@master1-admin ceph]# scp /etc/yum.repos.d/ceph.repo 192.168.78.145:/etc/yum.repos.d/

yum install ceph-common -y

# Copy the Ceph configuration files to every k8s node

[root@master1-admin ceph]# scp /etc/ceph/* 192.168.78.143:/etc/ceph/

[root@master1-admin ceph]# scp /etc/ceph/* 192.168.78.144:/etc/ceph/

[root@master1-admin ceph]# scp /etc/ceph/* 192.168.78.145:/etc/ceph/
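
The copy and install steps above are the same for every node, so they can be expressed as a loop. The following is a minimal dry-run sketch that only prints the commands instead of executing them. The node IPs come from the commands above; the function name print_node_setup and the use of ssh to run yum remotely are assumptions made for illustration (the article runs yum directly on each node).

```shell
#!/bin/sh
# Dry run: print (do not execute) the per-node commands from the steps above.
# NODES holds the k8s node IPs used in this article; adjust for your cluster.
NODES="192.168.78.143 192.168.78.144 192.168.78.145"

# Hypothetical helper: emit the three setup commands for each node.
print_node_setup() {
    for node in $NODES; do
        echo "scp /etc/yum.repos.d/ceph.repo ${node}:/etc/yum.repos.d/"
        # Assumption: yum is invoked over ssh rather than typed on the node.
        echo "ssh ${node} yum install ceph-common -y"
        echo "scp /etc/ceph/* ${node}:/etc/ceph/"
    done
}

print_node_setup
```

Reviewing the printed commands before running them makes it easier to catch a wrong IP before files land on the wrong host.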

# Create the Ceph RBD pool and image

[root@master1-admin ceph]# ceph osd pool create k8srbd1 6

pool 'k8srbd1' created

[root@master1-admin ceph]# rbd create rbda -s 1024 -p k8srbd1

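# Disable features that are not needed (the kernel RBD client on these nodes may not support them)

[root@master1-admin ceph]# rbd feature disable k8srbd1/rbda object-map fast-diff deep-flatten

1.2 Creating a pod that mounts the Ceph RBD image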

[root@k8s-master1 ~]# mkdir ceph

[root@k8s-master1 ~]# cd ceph/

[root@k8s-master1 ceph]# vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: testrbd
spec:
  containers:
    - image: nginx
      name: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: testrbd
          mountPath: /mnt
  volumes:
    - name: testrbd
      rbd:
        monitors:
          - '192.168.78.135:6789'   # Ceph cluster nodes
          - '192.168.78.136:6789'
          - '192.168.78.137:6789'
        pool: k8srbd1
        image: rbda
        fsType: xfs
        readOnly: false
        user: admin
        keyring: /etc/ceph/ceph.client.admin.keyring

[root@k8s-master1 ceph]# kubectl apply -f pod.yaml

[root@k8s-master1 ceph]# kubectl get pods testrbd -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

testrbd 1/1 Running 0 34s 10.244.36.96 k8s-node1 <none> <none>

Note: the image rbda in pool k8srbd1 is now mounted by this pod, so other pods can no longer use it.

II. Creating a PV from Ceph RBD

2.1 Creating ceph-secret

This k8s Secret object lets the k8s volume plugin access the Ceph cluster. Get the keyring value of client.admin and base64-encode it. Run this on master1-admin (the Ceph admin node):

# The value is unique to each cluster, so yours will differ

[root@master1-admin ceph]# ceph auth get-key client.admin | base64

QVFES0hOWmpaWHVHQkJBQTM1NGFSTngwcGloUWxWYjhVblM2dUE9PQ==

2.2 Creating the Secret for Ceph

Run on the k8s control-plane node:

[root@k8s-master1 ceph]# vim ceph-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
data:
  key: QVFES0hOWmpaWHVHQkJBQTM1NGFSTngwcGloUWxWYjhVblM2dUE9PQ==

[root@k8s-master1 ceph]# kubectl apply -f ceph-secret.yaml

secret/ceph-secret created

[root@k8s-master1 ceph]# kubectl get secrets

NAME TYPE DATA AGE

ceph-secret Opaque 1 16s

2.3 Creating the pool

[root@master1-admin ceph]# ceph osd pool create k8stest 6

pool 'k8stest' created

[root@master1-admin ceph]# rbd create rbda -s 1024 -p k8stest

[root@master1-admin ceph]# rbd feature disable k8stest/rbda object-map fast-diff deep-flatten

2.4 Creating the PV

[root@k8s-master1 ceph]# vim pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: ceph-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  rbd:
    monitors:
      - '192.168.78.135:6789'
      - '192.168.78.136:6789'
      - '192.168.78.137:6789'
    pool: k8stest
    image: rbda
    user: admin
    secretRef:
      name: ceph-secret
    fsType: xfs
    readOnly: false
  persistentVolumeReclaimPolicy: Recycle

[root@k8s-master1 ceph]# kubectl apply -f pv.yaml

persistentvolume/ceph-pv created

[root@k8s-master1 ceph]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

ceph-pv 1Gi RWO Recycle Available 3s

2.5 Creating the PVC

[root@k8s-master1 ceph]# vim pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

[root@k8s-master1 ceph]# kubectl apply -f pvc.yaml

persistentvolumeclaim/ceph-pvc created

[root@k8s-master1 ceph]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

ceph-pvc Bound ceph-pv 1Gi RWO 4s

2.6 Testing a pod that mounts the PVC

[root@k8s-master1 ceph]# vim pod-pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2   # tells deployment to run 2 pods matching the template
  template:     # create pods using pod definition in this template
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: "/ceph-data"
              name: ceph-data
      volumes:
        - name: ceph-data
          persistentVolumeClaim:
            claimName: ceph-pvc

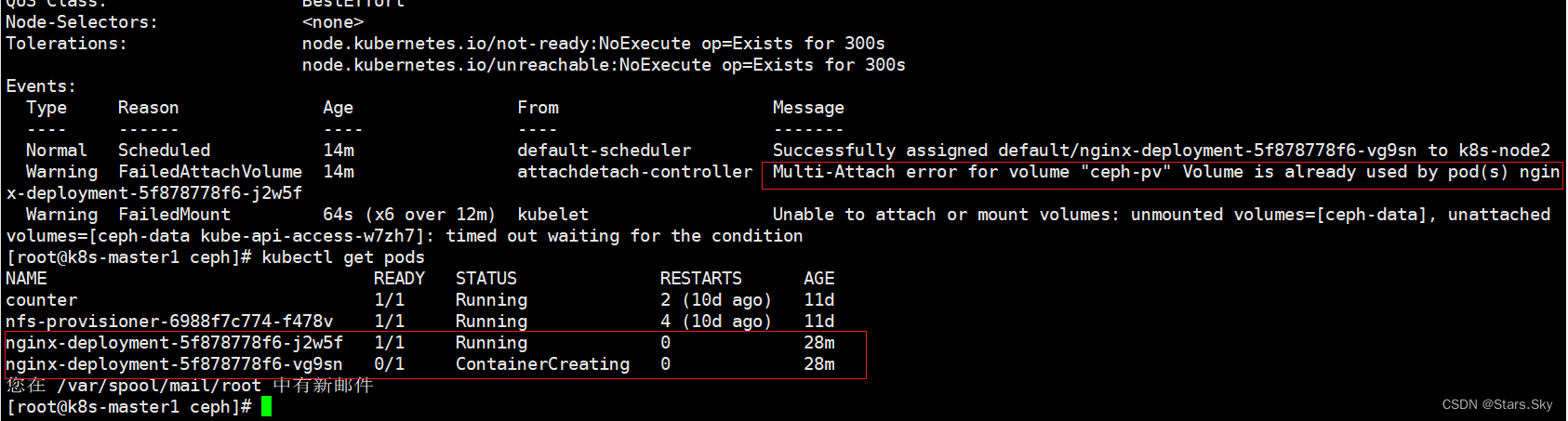

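[root@k8s-master1 ceph]# kubectl apply -f pod-pvc.yaml

Note: the pods generated by the deployment must all run on the same node, the one where the PV's RBD image is mounted. Otherwise you run into the following situation:

One of the pods stays in ContainerCreating because the PV is already in use on another node. An RBD-backed PV cannot be mounted across nodes.

Solution:

# Add a nodeSelector to the existing manifest

# Label the target node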

[root@k8s-master1 ceph]# kubectl label nodes k8s-node1 project=ceph-pv

[root@k8s-master1 ceph]# vim pod-pvc.yaml

······
          volumeMounts:
            - mountPath: "/ceph-data"
              name: ceph-data
      nodeSelector:
        project: ceph-pv
      volumes:
······

[root@k8s-master1 ceph]# kubectl delete -f pod-pvc.yaml

[root@k8s-master1 ceph]# kubectl apply -f pod-pvc.yaml

[root@k8s-master1 ceph]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-75b94569dc-6fgxp 1/1 Running 0 70s 10.244.36.103 k8s-node1 <none> <none>

nginx-deployment-75b94569dc-6w9m7 1/1 Running 0 70s 10.244.36.104 k8s-node1 <none> <none>

The experiment above shows that pods on the same node can share the same PVC in ReadWriteOnce mode.

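2.7 Caveats

1) Characteristics of Ceph RBD block storage

- Ceph RBD block storage can be mounted ReadWriteOnce and shared by multiple pods on the same node;

- Ceph RBD block storage can be mounted ReadWriteOnce and shared by multiple containers of the same pod on the same node;

- Ceph RBD block storage cannot be mounted ReadWriteOnce and shared across nodes.

If the node running a pod that uses Ceph RBD goes down, the pod is rescheduled to another node. However, because RBD cannot be mounted on more than one node and the failed pod cannot release its PV automatically, the new pod will not run normally.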

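2) Deployment update behavior

When a deployment triggers an update, it ensures that at least 75% of the desired pods are running (maxUnavailable defaults to 25%). With a single pod, for example, a new pod is always created first, and the old pod is shut down only after the new one is running normally.

By default, it also ensures that the number of pods started exceeds the desired count by at most 25% (maxSurge).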
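
To see what those percentages mean for a small deployment, the arithmetic can be worked through directly. Kubernetes converts the maxUnavailable percentage to a pod count by rounding down and the maxSurge percentage by rounding up. The sketch below applies that rounding with shell integer arithmetic; the function names are hypothetical and the 25% values are the defaults described above.

```shell
#!/bin/sh
# maxUnavailable: percentage of the replica count, rounded down.
max_unavailable() { echo $(( $1 * $2 / 100 )); }

# maxSurge: percentage of the replica count, rounded up.
max_surge() { echo $(( ($1 * $2 + 99) / 100 )); }

replicas=2   # the replica count of nginx-deployment in section 2.6
echo "replicas=$replicas maxUnavailable=$(max_unavailable $replicas 25) maxSurge=$(max_surge $replicas 25)"
# prints: replicas=2 maxUnavailable=0 maxSurge=1
```

With 2 replicas no existing pod may be taken down first and one extra pod may be started, which is why the new pod has to come up while the old pods still hold the volume.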

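3) The problem:

Combining the shared-mount behavior of Ceph RBD with the update behavior of a deployment, we can identify the cause:

When a deployment update is triggered, the deployment keeps the service available by first creating a new pod and waiting until it runs normally before deleting the old pod. If the new pod and the old pod land on different nodes, the failure described above occurs.

Solutions:

- Use shared storage that supports mounting across nodes and pods, such as CephFS or GlusterFS;

- Label a node so that the pods managed by the deployment are only scheduled onto that one fixed node. (Not recommended: if that node goes down, the service fails.)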

III. Dynamically Provisioning PVs from Ceph with a StorageClass

3.1 Preparation

Run the following commands on every node of both the Ceph cluster and the k8s cluster:

chmod 777 -R /etc/ceph/*

mkdir /root/.ceph/

cp -ar /etc/ceph/ /root/.ceph/

3.2 Creating the RBD provisioner

Upload rbd-provisioner.tar.gz to node1 and node2 and load the image manually:

[root@k8s-node1 ~]# docker load -i rbd-provisioner.tar.gz

[root@k8s-node2 ~]# docker load -i rbd-provisioner.tar.gz

[root@k8s-master1 ceph]# vim rbd-provisioner.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns","coredns"]
    verbs: ["list", "get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: default
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
spec:
  selector:
    matchLabels:
      app: rbd-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
        - name: rbd-provisioner
          image: quay.io/xianchao/external_storage/rbd-provisioner:v1
          imagePullPolicy: IfNotPresent
          env:
            - name: PROVISIONER_NAME
              value: ceph.com/rbd
      serviceAccount: rbd-provisioner
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-provisioner

[root@k8s-master1 ceph]# kubectl apply -f rbd-provisioner.yaml

[root@k8s-master1 ceph]# kubectl get pods

NAME READY STATUS RESTARTS AGE

rbd-provisioner-5d58f65ff5-9c8q2 1/1 Running 0 2s

3.3 Creating ceph-secret

# Create the pool

[root@master1-admin ~]# ceph osd pool create k8stest1 6

[root@master1-admin ~]# ceph auth get-key client.admin | base64

QVFES0hOWmpaWHVHQkJBQTM1NGFSTngwcGloUWxWYjhVblM2dUE9PQ==

[root@k8s-master1 ceph]# vim ceph-secret-1.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret-1
type: "ceph.com/rbd"
data:
  key: QVFES0hOWmpaWHVHQkJBQTM1NGFSTngwcGloUWxWYjhVblM2dUE9PQ==

[root@k8s-master1 ceph]# kubectl apply -f ceph-secret-1.yaml

[root@k8s-master1 ceph]# kubectl get secrets

NAME TYPE DATA AGE

ceph-secret Opaque 1 17h

ceph-secret-1 ceph.com/rbd 1 44s

rbd-provisioner-token-fknvw kubernetes.io/service-account-token 3 6m35s

3.4 Creating the StorageClass

[root@k8s-master1 ceph]# vim storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: k8s-rbd
provisioner: ceph.com/rbd
parameters:
  monitors: 192.168.78.135:6789,192.168.78.136:6789,192.168.78.137:6789
  adminId: admin
  adminSecretName: ceph-secret-1
  pool: k8stest1
  userId: admin
  userSecretName: ceph-secret-1
  fsType: xfs
  imageFormat: "2"
  imageFeatures: "layering"

[root@k8s-master1 ceph]# kubectl apply -f storageclass.yaml
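
One detail that is easy to miss: the monitors parameter of the StorageClass is a single comma-separated string, whereas the static PV in section 2.4 lists the monitors as separate YAML list items. The following is a small sketch for building that string from individual addresses; the function name join_monitors is hypothetical.

```shell
#!/bin/sh
# Join monitor addresses (one per argument) into the comma-separated form
# expected by the StorageClass "monitors" parameter.
join_monitors() {
    printf '%s\n' "$@" | paste -sd, -
}

join_monitors 192.168.78.135:6789 192.168.78.136:6789 192.168.78.137:6789
# prints: 192.168.78.135:6789,192.168.78.136:6789,192.168.78.137:6789
```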

Note: on k8s v1.20 and later, dynamically provisioning a PV through the rbd provisioner fails with an error:

[root@k8s-master1 ceph]# kubectl logs rbd-provisioner-5d58f65ff5-kl2df

E0418 15:50:09.610071 1 controller.go:1004] provision "default/rbd-pvc" class "k8s-rbd": unexpected error getting claim reference: selfLink was empty, can't make reference

The cause is that version 1.20 disables selfLink. The fix is as follows:
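
Whether this workaround applies depends on the cluster's minor version. The sketch below encodes that decision under an assumption about the upstream feature-gate history: RemoveSelfLink is on by default from v1.20 and is locked on from v1.24, so it can only be switched off in between. The function name and its output labels are hypothetical; verify the boundaries against the release notes for your version.

```shell
#!/bin/sh
# Hypothetical helper: classify a Kubernetes 1.<minor> release with respect to
# the RemoveSelfLink workaround. The version boundaries are assumptions taken
# from the upstream feature-gate history, not from this article.
selflink_workaround() {
    minor=$1
    if [ "$minor" -lt 20 ]; then
        echo "not-needed"      # selfLink is still populated by default
    elif [ "$minor" -lt 24 ]; then
        echo "set-gate"        # add --feature-gates=RemoveSelfLink=false
    else
        echo "gate-locked"     # the flag can no longer re-enable selfLink
    fi
}

selflink_workaround 23   # the v1.23 cluster used in this article
```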

# Add this line: - --feature-gates=RemoveSelfLink=false (skip this step if it is already present)

[root@k8s-master1 ceph]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

······
spec:
  containers:
    - command:
        - kube-apiserver
        - --feature-gates=RemoveSelfLink=false   # add this line
        - --advertise-address=192.168.78.143
        - --allow-privileged=true
······

[root@k8s-master1 ceph]# systemctl restart kubelet

[root@k8s-master1 ceph]# kubectl get pods -n kube-system

3.5 Creating the PVC

[root@k8s-master1 ceph]# vim rbd-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: k8s-rbd

[root@k8s-master1 ceph]# kubectl apply -f rbd-pvc.yaml

persistentvolumeclaim/rbd-pvc created

[root@k8s-master1 ceph]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

ceph-pvc Bound ceph-pv 1Gi RWO 17h

rbd-pvc Bound pvc-881076c6-7cac-427b-a29f-43320847cde1 1Gi RWO k8s-rbd 5s

3.6 Creating a pod that mounts the PVC

[root@k8s-master1 ceph]# vim pod-sto.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: rbd-pod
  name: ceph-rbd-pod
spec:
  containers:
    - name: ceph-rbd-nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: ceph-rbd
          mountPath: /mnt
          readOnly: false
  volumes:
    - name: ceph-rbd
      persistentVolumeClaim:
        claimName: rbd-pvc

[root@k8s-master1 ceph]# kubectl apply -f pod-sto.yaml

[root@k8s-master1 ceph]# kubectl get pods

NAME READY STATUS RESTARTS AGE

ceph-rbd-pod 1/1 Running 0 41s

rbd-provisioner-5d58f65ff5-kl2df 1/1 Running 0 21m

IV. Mounting CephFS in K8s for Data Persistence

# List the Ceph file systems

[root@master1-admin ~]# ceph fs ls

name: test, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

4.1 Creating a CephFS subdirectory

# Create a secretfile first so that CephFS can be mounted elsewhere

[root@master1-admin ~]# cat /etc/ceph/ceph.client.admin.keyring |grep key|awk -F" " '{print $3}' > /etc/ceph/admin.secret
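
The pipeline above keeps the third whitespace-separated field of the line containing "key". The same extraction can be written as a single awk call that matches only lines whose first field is exactly key. The sketch below runs on a temporary sample file rather than the real keyring; the function name extract_key is hypothetical and the sample contents are copied from the keyring printed in section 4.2.

```shell
#!/bin/sh
# Print the key from a Ceph keyring file: the third field of the "key = ..." line.
extract_key() {
    awk '$1 == "key" { print $3 }' "$1"
}

# Demo on a throwaway sample file instead of /etc/ceph/ceph.client.admin.keyring.
sample=$(mktemp)
cat > "$sample" <<'EOF'
[client.admin]
    key = AQDKHNZjZXuGBBAA354aRNx0pihQlVb8UnS6uA==
    caps mds = "allow *"
EOF
extract_key "$sample"   # prints the key value only
rm -f "$sample"
```

Matching on the first field avoids picking up any other line that merely contains the substring "key".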

# Mount the CephFS root onto a directory on a cluster mon node, for example test_data. Once it is mounted, subdirectories can be created under test_data with ordinary Linux commands

[root@master1-admin ~]# mkdir test_data

[root@master1-admin ~]# mount -t ceph 192.168.78.135:6789:/ /root/test_data/ -o name=admin,secretfile=/etc/ceph/admin.secret

[root@master1-admin ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

······

192.168.78.135:6789:/ ceph 15G 388M 15G 3% /root/test_data

# Create a subdirectory named lucky in the CephFS root; k8s can mount this directory later

[root@master1-admin ~]# cd test_data/

[root@master1-admin test_data]# mkdir lucky

[root@master1-admin test_data]# chmod 0777 lucky/

4.2 Testing a pod that mounts CephFS

1) Create the Secret that k8s uses to connect to Ceph

# Convert the key value in /etc/ceph/ceph.client.admin.keyring to base64; the Secret will not work otherwise

[root@master1-admin test_data]# cat /etc/ceph/ceph.client.admin.keyring

[client.admin]

key = AQDKHNZjZXuGBBAA354aRNx0pihQlVb8UnS6uA==

caps mds = "allow *"

caps mon = "allow *"

caps osd = "allow *"

[root@master1-admin test_data]# echo "AQDKHNZjZXuGBBAA354aRNx0pihQlVb8UnS6uA==" | base64

QVFES0hOWmpaWHVHQkJBQTM1NGFSTngwcGloUWxWYjhVblM2dUE9PQo=
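
Note that this value ends in PQo=, while the value produced by ceph auth get-key client.admin | base64 in section 2.1 ends in PQ==, even though both encode the same key. The difference is that echo appends a newline, which is then encoded as part of the secret. The sketch below reproduces both results; the function names are hypothetical and the key is the one shown in the keyring above.

```shell
#!/bin/sh
# Key value copied from the keyring shown above.
KEY='AQDKHNZjZXuGBBAA354aRNx0pihQlVb8UnS6uA=='

# echo appends a newline, so the newline is encoded as part of the secret.
encode_with_newline() { echo "$1" | base64; }

# printf '%s' writes the key bytes only, with no trailing newline.
encode_exact() { printf '%s' "$1" | base64; }

encode_with_newline "$KEY"   # ends in PQo=
encode_exact "$KEY"          # ends in PQ==
```

If a Secret built from the echo form is rejected by Ceph, encoding without the newline is the first thing to try.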

[root@k8s-master1 ceph]# vim cephfs-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: cephfs-secret
data:
  key: QVFES0hOWmpaWHVHQkJBQTM1NGFSTngwcGloUWxWYjhVblM2dUE9PQo=

[root@k8s-master1 ceph]# kubectl apply -f cephfs-secret.yaml

[root@k8s-master1 ceph]# kubectl get secrets

NAME TYPE DATA AGE

ceph-secret Opaque 1 18h

ceph-secret-1 ceph.com/rbd 1 68m

cephfs-secret Opaque 1 61s

·····

[root@k8s-master1 ceph]# vim cephfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cephfs-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  cephfs:
    monitors:
      - 192.168.78.135:6789
    path: /lucky
    user: admin
    readOnly: false
    secretRef:
      name: cephfs-secret
  persistentVolumeReclaimPolicy: Recycle

[root@k8s-master1 ceph]# kubectl apply -f cephfs-pv.yaml

persistentvolume/cephfs-pv created

[root@k8s-master1 ceph]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

ceph-pv 1Gi RWO Recycle Bound default/ceph-pvc 18h

cephfs-pv 1Gi RWX Recycle Available 4s

······

[root@k8s-master1 ceph]# vim cephfs-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  volumeName: cephfs-pv
  resources:
    requests:
      storage: 1Gi

[root@k8s-master1 ceph]# kubectl apply -f cephfs-pvc.yaml

persistentvolumeclaim/cephfs-pvc created

[root@k8s-master1 ceph]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

ceph-pvc Bound ceph-pv 1Gi RWO 18h

cephfs-pvc Bound cephfs-pv 1Gi RWX 4s

rbd-pvc Bound pvc-881076c6-7cac-427b-a29f-43320847cde1 1Gi RWO k8s-rbd 33m

2) Create the first pod that mounts cephfs-pvc

[root@k8s-master1 ceph]# vim cephfs-pod-1.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cephfs-pod-1
spec:
  containers:
    - image: nginx
      name: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: test-v1
          mountPath: /mnt
  volumes:
    - name: test-v1
      persistentVolumeClaim:
        claimName: cephfs-pvc

[root@k8s-master1 ceph]# kubectl apply -f cephfs-pod-1.yaml

pod/cephfs-pod-1 created

[root@k8s-master1 ceph]# kubectl get pods

NAME READY STATUS RESTARTS AGE

ceph-rbd-pod 1/1 Running 0 33m

cephfs-pod-1 1/1 Running 0 5s

······

3) Create the second pod that mounts cephfs-pvc

[root@k8s-master1 ceph]# vim cephfs-pod-2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cephfs-pod-2
spec:
  containers:
    - image: nginx
      name: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: test-v1
          mountPath: /mnt
  volumes:
    - name: test-v1
      persistentVolumeClaim:
        claimName: cephfs-pvc

[root@k8s-master1 ceph]# kubectl apply -f cephfs-pod-2.yaml

pod/cephfs-pod-2 created

[root@k8s-master1 ceph]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ceph-rbd-pod 1/1 Running 0 35m 10.244.169.171 k8s-node2 <none> <none>

cephfs-pod-1 1/1 Running 0 2m9s 10.244.169.172 k8s-node2 <none> <none>

cephfs-pod-2 1/1 Running 0 5s 10.244.36.107 k8s-node1 <none> <none>

[root@k8s-master1 ceph]# kubectl exec -it cephfs-pod-1 -- sh

# cd /mnt

# ls

# touch 1.txt

# exit

[root@k8s-master1 ceph]# kubectl exec -it cephfs-pod-2 -- sh

# cd /mnt

# ls

1.txt

# touch 2.txt

# ls

1.txt 2.txt

# exit

# Back on master1-admin, the CephFS directory now contains the files created from the pods

[root@master1-admin test_data]# pwd

/root/test_data

[root@master1-admin test_data]# ls lucky/

1.txt 2.txt

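As shown above, CephFS can be shared as storage across nodes: the two pods ran on k8s-node2 and k8s-node1 and saw each other's files.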

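Previous article: [Kubernetes Enterprise Project in Practice] 05. K8s Data Persistence with the Cloud-Native Distributed Storage Ceph (Part 1), Stars.Sky's blog

Next article: [Kubernetes Enterprise Project in Practice] 06. Building a DevOps Automated Operations Platform with Jenkins + K8s (Part 1), Stars.Sky's blog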
