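Install and start the NFS server, then create the export directories:

```shell
yum install rpcbind nfs-utils -y
systemctl enable rpcbind
systemctl enable nfs
systemctl start rpcbind
systemctl start nfs
mkdir -p /root/data/sc-data
mkdir -p /root/data/nginx/pv
```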

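On the NFS server (master), export both directories in `/etc/exports`, then reload the export table with `exportfs -ra`:

```
/root/data/sc-data 192.168.1.0/24(rw,no_root_squash)
/root/data/nginx/pv 192.168.1.0/24(rw,no_root_squash)
```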
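Whitespace matters in these entries: per exports(5), a parenthesised option list attaches to the host written immediately before it, and an option list with no host in front of it applies to every host. The Python sketch below uses a hypothetical, simplified `parse_export_line` helper (it ignores comments, quoting and line continuations) to show how a stray space before `(` changes the meaning of an entry.

```python
def parse_export_line(line: str) -> tuple[str, list[tuple[str, list[str]]]]:
    """Split one /etc/exports entry into (path, [(client, options), ...]).

    Simplified sketch: no comments, quoting or backslash continuations.
    A client spec with nothing before "(" is recorded as "*" (every host).
    """
    path, *specs = line.split()
    clients = []
    for spec in specs:
        host, paren, rest = spec.partition("(")
        # Options only exist if the spec contains "("; strip the closing ")".
        options = rest.rstrip(")").split(",") if paren else []
        clients.append((host or "*", options))
    return path, clients


good = parse_export_line("/root/data/sc-data 192.168.1.0/24(rw,no_root_squash)")
bad = parse_export_line("/root/data/sc-data 192.168.1.0/24 (rw,no_root_squash)")
print(good)  # ('/root/data/sc-data', [('192.168.1.0/24', ['rw', 'no_root_squash'])])
print(bad)   # ('/root/data/sc-data', [('192.168.1.0/24', []), ('*', ['rw', 'no_root_squash'])])
```

In the second form the subnet falls back to the default options (read-only, root squashing) while every host gets `rw,no_root_squash`.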

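Check from another server that the exports are usable (every node that will mount these volumes also needs `nfs-utils`, which provides `showmount`):

```shell
[root@node1 ~]# showmount -e 192.168.1.90
Export list for 192.168.1.90:
/root/data/sc-data  192.168.1.0/24
/root/data/nginx/pv 192.168.1.0/24
```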

Create the PV:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nginx
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /root/data/nginx/pv
    server: 192.168.1.90
```

Create the PVC:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nginx
  namespace: dev
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 4Gi
```
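The PVC does not name a PV; Kubernetes binds it to a compatible one. The sketch below is a simplified model of that matching, not the real controller: it checks only phase, storage class, access modes and capacity (the real PV controller also considers label selectors, `volumeMode`, node affinity and pre-bound `claimRef`s) and picks the smallest PV that fits. It illustrates why the 4Gi claim reports 5Gi once bound, and why a claim that carries a `storageClassName` (for example one filled in by a default StorageClass) would not match `pv-nginx`, which has none. The class and function names are hypothetical, and the quantity parser handles only binary suffixes.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Binary suffixes only; a simplification of Kubernetes resource quantities.
_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    for suffix, factor in _SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


@dataclass
class PersistentVolume:
    name: str
    capacity: str
    access_modes: frozenset
    storage_class: str = ""
    phase: str = "Available"


@dataclass
class PersistentVolumeClaim:
    name: str
    request: str
    access_modes: frozenset
    storage_class: str = ""


def find_match(claim: PersistentVolumeClaim,
               volumes: list[PersistentVolume]) -> Optional[PersistentVolume]:
    """Return the smallest Available PV that satisfies the claim, or None."""
    candidates = [
        pv for pv in volumes
        if pv.phase == "Available"
        and pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes  # every requested mode is offered
        and to_bytes(pv.capacity) >= to_bytes(claim.request)
    ]
    return min(candidates, key=lambda pv: to_bytes(pv.capacity), default=None)


pv = PersistentVolume("pv-nginx", "5Gi", frozenset({"ReadWriteMany"}))
pvc = PersistentVolumeClaim("pvc-nginx", "4Gi", frozenset({"ReadWriteMany"}))
print(find_match(pvc, [pv]).name)  # pv-nginx
```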

Create a ConfigMap with the nginx configuration:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configmap
  namespace: dev
data:
  default.conf: |-
    server {
        listen 80;
        server_name localhost;

        location / {
            root /usr/share/nginx/html/account;
            index index.html index.htm;
        }

        location /account {
            proxy_pass http://192.168.1.130:8088/account;
        }

        error_page 405 =200 $uri;
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }

    server {
        listen 8081;
        server_name localhost;

        location / {
            root /usr/share/nginx/html/org;
            index index.html index.htm;
        }

        location /org {
            proxy_pass http://192.168.1.130:8082/org;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "Upgrade";
            proxy_set_header X-Real-IP $remote_addr;
            proxy_read_timeout 600s;
        }

        location ~ ^/V1.0/(.*) {
            rewrite /(.*)$ /org/$1 break;
            proxy_pass http://192.168.1.130:8082;
            proxy_set_header Host $proxy_host;
        }
    }
```
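The `/V1.0/` location forwards requests to the `/org` backend by rewriting the URI before proxying. The Python sketch below mimics only that rewrite step with the `re` module (Python and PCRE agree on these two simple patterns; nginx's location-matching precedence is not modelled). The hypothetical helper `upstream_uri` returns the path the upstream at 192.168.1.130:8082 would receive. Note that the `.` in `V1.0` is unescaped, so it matches any character.

```python
import re
from typing import Optional

LOCATION = re.compile(r"^/V1.0/(.*)")  # location ~ ^/V1.0/(.*)
REWRITE = re.compile(r"/(.*)$")        # rewrite /(.*)$ /org/$1 break;


def upstream_uri(uri: str) -> Optional[str]:
    """Return the URI sent upstream, or None if this location does not match."""
    if not LOCATION.search(uri):
        return None
    # "break" stops rewriting; proxy_pass has no URI part of its own,
    # so the rewritten path is forwarded as is.
    return REWRITE.sub(r"/org/\1", uri, count=1)


print(upstream_uri("/V1.0/user/list"))  # /org/V1.0/user/list
```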

Create an HPA to improve availability (not needed for this walkthrough; you can skip it):

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: pc-hpa
  namespace: dev
spec:
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 10
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deploy
```
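The HPA's documented scaling rule is `desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization)`, skipped when the ratio is within a tolerance (0.1 by default) and clamped to `minReplicas`/`maxReplicas`. Utilization is measured against the container's CPU request, so with the Deployment's `requests.cpu: 500m` and a 10% target the HPA aims at roughly 50m of CPU per pod. A minimal sketch of that arithmetic, ignoring pod readiness, missing metrics and stabilization windows (`desired_replicas` is a hypothetical name):

```python
import math


def desired_replicas(current_replicas: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA formula; utilization values are percentages of the CPU request."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 1 pod using 125m of its 500m request = 25% utilization against a 10% target.
print(desired_replicas(1, 25, 10))  # 3
```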

Create a pod instance to verify the setup (a NodePort Service plus a Deployment that mounts the ConfigMap and the PVC):

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-service
  name: nginx-service
  namespace: dev
spec:
  ports:
    - name: account-nginx
      port: 80
      protocol: TCP
      targetPort: 80
      nodePort: 30013
    - name: org-nginx
      port: 8081
      protocol: TCP
      targetPort: 8081
      nodePort: 30014
  selector:
    app: nginx-pod1
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy
  name: nginx-deploy
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-pod1
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: nginx-pod1
      namespace: dev
    spec:
      containers:
        - image: nginx:1.17.1
          name: nginx
          ports:
            - containerPort: 80
              protocol: TCP
            - containerPort: 8081
              protocol: TCP
          resources:
            limits:
              cpu: "1"
            requests:
              cpu: "500m"
          volumeMounts:
            - name: nginx-config
              mountPath: /etc/nginx/conf.d/
              readOnly: true
            - name: nginx-html
              mountPath: /usr/share/nginx/html/
              readOnly: false
      volumes:
        - name: nginx-config
          configMap:
            name: nginx-configmap
        - name: nginx-html
          persistentVolumeClaim:
            claimName: pvc-nginx
            readOnly: false
```
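Both the Service and the Deployment find their pods through equality-based label selectors, so both must select `app: nginx-pod1` (the pod template's label), not `app: nginx-deploy`, which labels only the Deployment object itself. A minimal sketch of that matching, with the values copied from the manifests above (`selector_matches` is a hypothetical helper):

```python
def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every key in the selector must have the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


pod_template_labels = {"app": "nginx-pod1"}
service_selector = {"app": "nginx-pod1"}
deployment_selector = {"app": "nginx-pod1"}
deployment_labels = {"app": "nginx-deploy"}

print(selector_matches(service_selector, pod_template_labels))     # True
print(selector_matches(deployment_selector, pod_template_labels))  # True
print(selector_matches(deployment_labels, pod_template_labels))    # False
```

Once the pod matches, the Service exposes it on every node at ports 30013 (account) and 30014 (org).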

Check the binding status between the PV and the PVC:

```shell
[root@master ~]# kubectl get pv,pvc -n dev
NAME                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM           STORAGECLASS   REASON   AGE
persistentvolume/pv-nginx   5Gi        RWX            Retain           Bound    dev/pvc-nginx                           2d6h

NAME                              STATUS   VOLUME     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/pvc-nginx   Bound    pv-nginx   5Gi        RWX                           2d6h
```

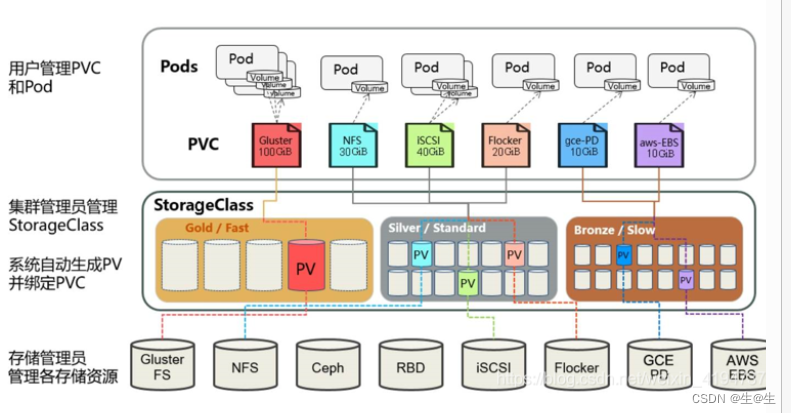

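That completes the verification with a pod instance. Everything demonstrated so far was created manually; next, volumes are provisioned dynamically through an NFS provisioner and a StorageClass.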

Create the NFS provisioner:

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: kube-system
spec:
  replicas: 2
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                topologyKey: kubernetes.io/hostname
                labelSelector:
                  matchLabels:
                    app: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:v3.1.0-k8s1.11
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: nfs-client-provisioner
            - name: NFS_SERVER
              value: 192.168.1.90
            - name: NFS_PATH
              value: /root/data/sc-data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.1.90
            path: /root/data/sc-data
```
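The provisioner mounts `NFS_PATH` at `/persistentvolumes` and creates one subdirectory per dynamically provisioned PV. Going by the source of `nfs-client-provisioner` in the kubernetes-incubator external-storage repository (an assumption to verify against the version you run), the subdirectory name joins the claim's namespace, the claim's name and the generated PV name with `-`, which is why the directories in the cases below start with `default`. A sketch of that naming with a made-up PV name (`provisioned_dir` is a hypothetical helper):

```python
import posixpath


def provisioned_dir(nfs_path: str, namespace: str, pvc_name: str, pv_name: str) -> str:
    """Directory created on the NFS export for one PV (naming assumed from provisioner v3)."""
    return posixpath.join(nfs_path, "-".join([namespace, pvc_name, pv_name]))


# "pvc-0a1b2c3d" is a hypothetical generated PV name.
print(provisioned_dir("/root/data/sc-data", "default", "nginx", "pvc-0a1b2c3d"))
# /root/data/sc-data/default-nginx-pvc-0a1b2c3d
```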

Create the RBAC authorization resources (ServiceAccount, ClusterRole, Role and their bindings):

```yaml
kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: kube-system
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: kube-system
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: kube-system
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```
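RBAC rules are purely additive: a request is allowed if any rule bound to the ServiceAccount lists its API group, resource and verb. The sketch below encodes the ClusterRole above and checks a few requests. It is a simplification (no `resourceNames`, subresources or non-resource URLs), and the `Rule`/`allowed` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    api_groups: tuple[str, ...]
    resources: tuple[str, ...]
    verbs: tuple[str, ...]


def allowed(rules: list[Rule], api_group: str, resource: str, verb: str) -> bool:
    """True if any rule grants the verb on the resource ("*" acts as a wildcard)."""
    return any(
        (api_group in r.api_groups or "*" in r.api_groups)
        and (resource in r.resources or "*" in r.resources)
        and (verb in r.verbs or "*" in r.verbs)
        for r in rules
    )


# Rules of the nfs-client-provisioner-runner ClusterRole ("" = core API group).
RUNNER = [
    Rule(("",), ("persistentvolumes",), ("get", "list", "watch", "create", "delete")),
    Rule(("",), ("persistentvolumeclaims",), ("get", "list", "watch", "update")),
    Rule(("storage.k8s.io",), ("storageclasses",), ("get", "list", "watch")),
    Rule(("",), ("events",), ("create", "update", "patch")),
]

print(allowed(RUNNER, "", "persistentvolumes", "create"))       # True
print(allowed(RUNNER, "", "persistentvolumeclaims", "delete"))  # False
```

So the provisioner can create and delete PVs but never delete claims; the namespaced Role's `endpoints` permissions serve leader election between the two provisioner replicas.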

Create the StorageClass:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"nfs"},"provisioner":"nfs-client-provisioner","reclaimPolicy":"Delete"}
    storageclass.beta.kubernetes.io/is-default-class: "true"
    storageclass.kubernetes.io/is-default-class: "true"
  name: nfs
provisioner: nfs-client-provisioner
parameters:
  archiveOnDelete: "true"
reclaimPolicy: Retain
```

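1. First case

```yaml
parameters:
  archiveOnDelete: "true"
reclaimPolicy: Delete
```

The directory is renamed with an `arch...` prefix and the data is kept, but a newly created pod does not reference the data in the old directory.

2. Second case

```yaml
parameters:
  archiveOnDelete: "false"
reclaimPolicy: Delete
```

The data in the NFS directory is deleted entirely.

3. Third case

```yaml
parameters:
  archiveOnDelete: "false"
reclaimPolicy: Retain
```

With the reclaim policy set to Retain, deleting the pod and then the PVC and PV does not remove the files on NFS; the directory still exists under its `default...` name, and a pod created again references the previous data.

4. Fourth case

```yaml
parameters:
  archiveOnDelete: "true"
reclaimPolicy: Retain
```

The PVC's directory under the data directory keeps its name (still starting with `default`); a newly created pod does not reference the retained data, and the PV's status changes to Released.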
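Going by the `nfs-client-provisioner` v3 source (an assumption to check against the version you run), the provisioner only touches the directory when a PV is deleted under `reclaimPolicy: Delete`: a value that parses as false removes the directory, while true or a missing parameter renames it with an `archived-` prefix. Under `Retain` the PV is simply left `Released` and the directory is untouched. The hypothetical `on_volume_deleted` helper below sketches that decision only; it says nothing about which PV a later claim binds to.

```python
from typing import Optional

# Same spellings as Go's strconv.ParseBool, which the provisioner uses.
_TRUE = {"1", "t", "T", "TRUE", "true", "True"}
_FALSE = {"0", "f", "F", "FALSE", "false", "False"}


def parse_bool(value: str) -> bool:
    if value in _TRUE:
        return True
    if value in _FALSE:
        return False
    raise ValueError(f"invalid boolean: {value!r}")


def on_volume_deleted(reclaim_policy: str, archive_on_delete: Optional[str],
                      directory: str) -> tuple[str, Optional[str]]:
    """Return (action, resulting directory name) for a released PV's directory."""
    if reclaim_policy == "Retain":
        return "kept", directory  # the provisioner is never asked to delete
    if archive_on_delete is not None and not parse_bool(archive_on_delete):
        return "deleted", None
    return "archived", "archived-" + directory


d = "default-nginx-pvc-0a1b2c3d"  # hypothetical directory name
for policy, archive in [("Delete", "true"), ("Delete", "false"),
                        ("Retain", "false"), ("Retain", "true")]:
    print(policy, archive, on_volume_deleted(policy, archive, d))
```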

Create a test pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80
      volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumes:
    - name: www
      persistentVolumeClaim:
        claimName: nginx
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx
spec:
  storageClassName: "nfs"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```
