【Hadoop Pseudo-Distributed Installation and Configuration】

Prerequisite: complete the Hadoop preliminary setup first (required).

Contents

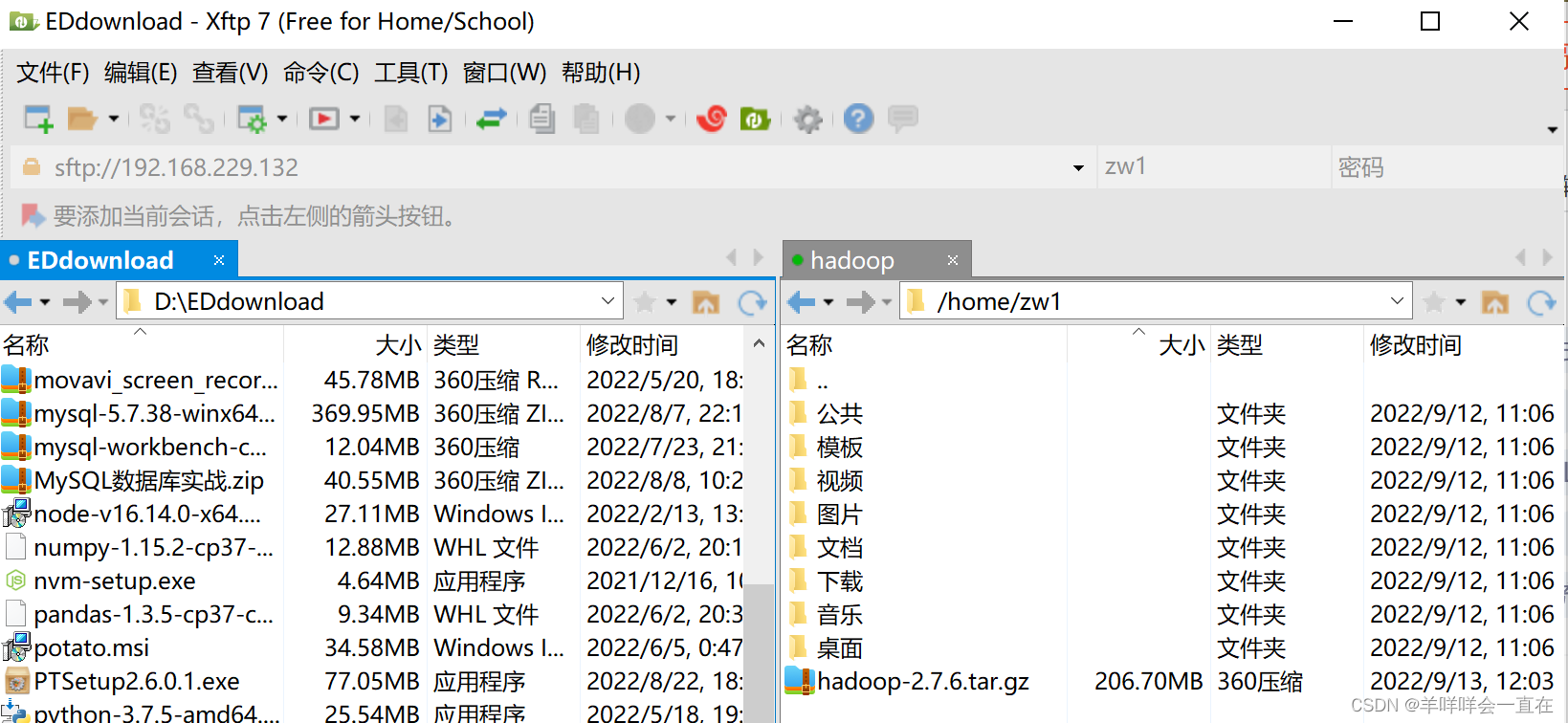

1.1 Download and Install Hadoop

Package: hadoop-2.7.6.tar.gz

After downloading it on Windows, transfer it to the CentOS system with Xftp, then extract it:

tar -zxvf hadoop-2.7.6.tar.gz -C /opt/software

cd /opt/software/hadoop-2.7.6

pwd # print the full path (used as HADOOP_HOME below)
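The download and extraction can also be scripted. Below is a minimal sketch. extract_hadoop is a hypothetical helper (not part of Hadoop) that assumes the tarball name and the /opt/software target used above. It checks that bin/hadoop exists after extraction and prints the absolute path that pwd reports, which is the value to use for HADOOP_HOME.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): extract a Hadoop tarball and print HADOOP_HOME.
# Defaults mirror this tutorial; pass other paths as arguments if yours differ.

extract_hadoop() {
  local tarball="${1:-hadoop-2.7.6.tar.gz}"
  local dest="${2:-/opt/software}"

  if [ ! -f "$tarball" ]; then
    echo "tarball not found: $tarball" >&2
    return 1
  fi

  mkdir -p "$dest" || return 1
  tar -zxf "$tarball" -C "$dest" || return 1

  # Assumes the archive's top-level directory matches the file name,
  # e.g. hadoop-2.7.6.tar.gz -> hadoop-2.7.6
  local name
  name="$(basename "$tarball" .tar.gz)"
  if [ ! -x "$dest/$name/bin/hadoop" ]; then
    echo "expected $dest/$name/bin/hadoop after extraction" >&2
    return 1
  fi

  # Absolute path to use as HADOOP_HOME
  (cd "$dest/$name" && pwd)
}

# Usage: extract_hadoop hadoop-2.7.6.tar.gz /opt/software
```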

1.2 Configure Environment Variables

sudo vim /etc/profile

Append the following to the end of the file:

# set java environment

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/jre/lib/rt.jar

# set hadoop environment

export HADOOP_HOME=/opt/software/hadoop-2.7.6

export PATH=$PATH:$JAVA_HOME/bin:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin

source /etc/profile

hadoop version # check the result: if a version number is printed, the setup works
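You can also write the same variables to a separate file instead of editing /etc/profile by hand. This sketch relies on one assumption: on CentOS, /etc/profile runs every /etc/profile.d/*.sh file for login shells, so a file such as /etc/profile.d/hadoop.sh (a name chosen here for illustration) is picked up at the next login. The helper name write_hadoop_env is hypothetical. The generated PATH line appends $PATH only once.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): write the Java/Hadoop variables to their own
# profile file. Assumes /etc/profile sources /etc/profile.d/*.sh (CentOS default).

write_hadoop_env() {
  local target="${1:-/etc/profile.d/hadoop.sh}"
  local java_home="${2:-/usr/lib/jvm/java-1.8.0-openjdk}"
  local hadoop_home="${3:-/opt/software/hadoop-2.7.6}"

  # The paths are expanded now; \$PATH etc. are written literally so they
  # are expanded later, when the file is sourced at login.
  cat > "$target" <<EOF
# set java environment
export JAVA_HOME=${java_home}
# set hadoop environment
export HADOOP_HOME=${hadoop_home}
export PATH=\$PATH:\$JAVA_HOME/bin:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin
EOF
}

# Usage (as root): write_hadoop_env && . /etc/profile.d/hadoop.sh && hadoop version
```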

1.3 Modify the Hadoop Configuration

sudo mkdir -p /opt/software/hadoop-2.7.6/tmp

cd /opt/software/hadoop-2.7.6/etc/hadoop/

sudo hostname hadoop # change the hostname to the one chosen in the preliminary setup

cat /etc/sysconfig/network

hostname # confirm the change

exit # log out

# reconnect in Xshell
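Two details about the hostname step come from general Linux behavior rather than the original steps. First, the hostname command changes the name only until the next reboot; on CentOS 7, sudo hostnamectl set-hostname hadoop makes the change persistent. Second, Hadoop's scripts reach the node by name, so hadoop must map to the machine's current IP in /etc/hosts. The hypothetical helper host_mapped below is a sketch that prints the address(es) /etc/hosts lists for a name and fails if it finds none.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): look up a hostname in /etc/hosts (or another
# hosts-format file) and print its address; return 1 if it is not listed.

host_mapped() {
  local name="$1"
  local hosts_file="${2:-/etc/hosts}"
  # Skip comment lines; fields 2..NF are the names for the address in field 1.
  awk -v n="$name" '
    $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; found = 1 } }
    END { exit (found ? 0 : 1) }
  ' "$hosts_file"
}

# Usage:
#   sudo hostnamectl set-hostname hadoop
#   host_mapped hadoop || echo "add '<this machine's IP> hadoop' to /etc/hosts"
```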

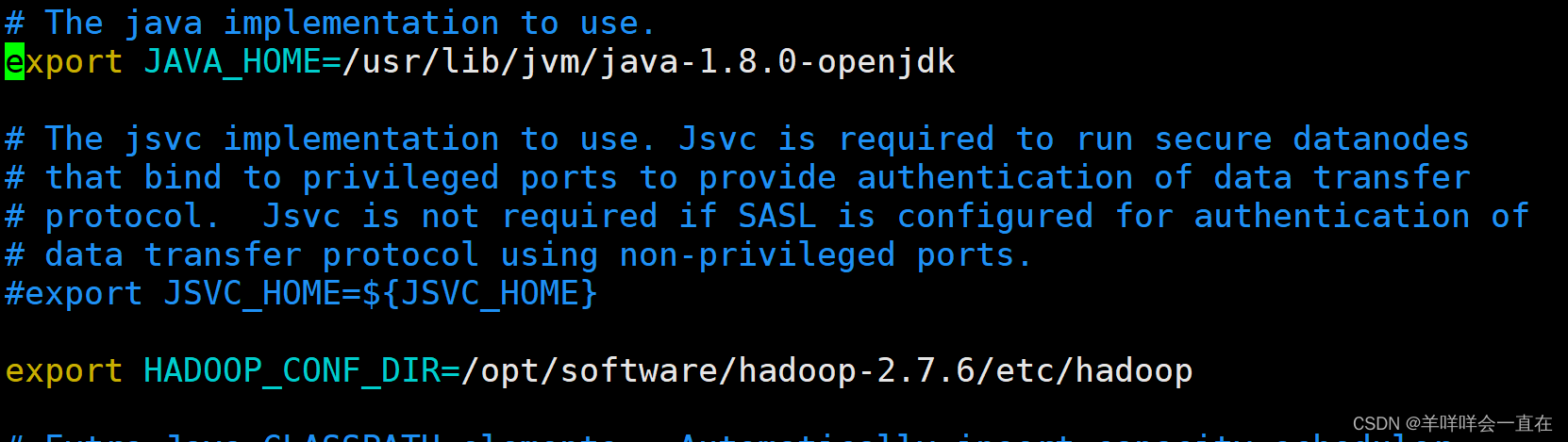

1. hadoop-env.sh

echo $JAVA_HOME # print the JAVA_HOME path

echo $HADOOP_HOME # print the HADOOP_HOME path

sudo vim hadoop-env.sh

In the file, change the export JAVA_HOME= line to the absolute path printed by echo $JAVA_HOME above.
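Here is a scripted version of this edit, as a sketch. The hypothetical set_hadoop_java_home function rewrites the export JAVA_HOME= line with an absolute path and keeps a .bak copy. It assumes GNU sed (as on CentOS) for sed -i. An absolute path here is a common precaution: daemons launched over SSH by start-dfs.sh may not read /etc/profile, so they may not see JAVA_HOME.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): set an absolute JAVA_HOME in hadoop-env.sh.
# Assumes GNU sed for in-place editing (sed -i without a suffix argument).

set_hadoop_java_home() {
  local env_file="$1"
  local java_home="$2"
  if [ ! -f "$env_file" ] || [ -z "$java_home" ]; then
    echo "usage: set_hadoop_java_home <hadoop-env.sh> <java_home>" >&2
    return 1
  fi
  # Keep a backup, then rewrite only lines starting with "export JAVA_HOME=".
  cp "$env_file" "$env_file.bak" || return 1
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
}

# Usage: set_hadoop_java_home /opt/software/hadoop-2.7.6/etc/hadoop/hadoop-env.sh "$JAVA_HOME"
```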

2. core-site.xml

sudo vim core-site.xml

In 'hdfs://hadoop:9000', replace hadoop with your own hostname.

<configuration>

<!-- default file system name (the NameNode address) -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop:9000</value>

</property>

<!-- temporary directory used by HDFS at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/software/hadoop-2.7.6/tmp</value>

</property>

</configuration>
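The XML files in this section can also be generated instead of edited in vim. This is a minimal sketch built on a hypothetical helper, write_site_xml, which takes name=value pairs and writes a complete file. It overwrites the target, including the template's license header, and writes values verbatim, so values must not contain the XML special characters &, < or >. The usage comment reproduces the core-site.xml above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): write a complete Hadoop *-site.xml from
# name=value pairs. Values are not XML-escaped.

write_site_xml() {
  if [ "$#" -lt 1 ]; then
    echo "usage: write_site_xml <file> [name=value]..." >&2
    return 1
  fi
  local file="$1"
  shift
  {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    local pair
    for pair in "$@"; do
      # Split at the first "=": property name on the left, value on the right.
      echo '  <property>'
      echo "    <name>${pair%%=*}</name>"
      echo "    <value>${pair#*=}</value>"
      echo '  </property>'
    done
    echo '</configuration>'
  } > "$file"
}

# Usage ("hadoop" is the hostname; paths as in this tutorial):
#   write_site_xml /opt/software/hadoop-2.7.6/etc/hadoop/core-site.xml \
#     fs.defaultFS=hdfs://hadoop:9000 \
#     hadoop.tmp.dir=/opt/software/hadoop-2.7.6/tmp
```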

3. hdfs-site.xml

sudo vim hdfs-site.xml

<configuration>

<!-- where the NameNode stores its files; several comma-separated directories can be given for redundancy -->

<property>

<name>dfs.name.dir</name>

<value>file:///opt/software/hadoop-2.7.6/dfs/namenode_data</value>

</property>

<!-- where the DataNode stores its data -->

<property>

<name>dfs.data.dir</name>

<value>file:///opt/software/hadoop-2.7.6/dfs/datanode_data</value>

</property>

<!-- HDFS replication factor, counting the original copy; the default is 3, use 1 in pseudo-distributed mode -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<!-- HDFS permission checking; false means any user can operate on files in HDFS -->

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>
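The property names used above (dfs.name.dir, dfs.data.dir, dfs.permissions) still work in Hadoop 2.7.6, but they are deprecated aliases. The current names are dfs.namenode.name.dir, dfs.datanode.data.dir and dfs.permissions.enabled, and Hadoop logs a deprecation notice when it reads the old ones. The hypothetical helper below is a sketch that lists which of these three old names a file uses. It covers only these three keys, not Hadoop's full deprecation table.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): report the deprecated HDFS keys used in this
# tutorial's hdfs-site.xml, with their Hadoop 2.x replacements.

report_deprecated_hdfs_keys() {
  local file="$1"
  local old new
  # Fixed-string match on the whole <name>...</name> tag, so that
  # dfs.permissions does not also match dfs.permissions.enabled.
  while read -r old new; do
    if grep -qF "<name>$old</name>" "$file"; then
      echo "$old -> $new"
    fi
  done <<'EOF'
dfs.name.dir dfs.namenode.name.dir
dfs.data.dir dfs.datanode.data.dir
dfs.permissions dfs.permissions.enabled
EOF
}

# Usage: report_deprecated_hdfs_keys /opt/software/hadoop-2.7.6/etc/hadoop/hdfs-site.xml
```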

4. mapred-site.xml

sudo cp mapred-site.xml.template mapred-site.xml

sudo vim mapred-site.xml

<configuration>

<!-- run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
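The copy-then-edit steps above can be made repeatable. This sketch uses two hypothetical helpers. ensure_mapred_site copies the template only if mapred-site.xml does not exist yet. add_property then inserts a <property> block just before </configuration>, unless the name is already present, so re-running the setup never duplicates or overwrites the setting.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): create mapred-site.xml from its template if
# missing, then add mapreduce.framework.name=yarn unless it is already set.

add_property() {
  local file="$1" name="$2" value="$3"
  if grep -qF "<name>$name</name>" "$file"; then
    echo "$name already set in $file"
    return 0
  fi
  # Insert the new property just before the closing </configuration> tag.
  local tmp="$file.tmp.$$"
  awk -v n="$name" -v v="$value" '
    /<\/configuration>/ && !done {
      print "<property>"
      print "<name>" n "</name>"
      print "<value>" v "</value>"
      print "</property>"
      done = 1
    }
    { print }
  ' "$file" > "$tmp" && mv "$tmp" "$file"
}

ensure_mapred_site() {
  local conf_dir="$1"
  local target="$conf_dir/mapred-site.xml"
  if [ ! -f "$target" ]; then
    cp "$conf_dir/mapred-site.xml.template" "$target" || return 1
  fi
  add_property "$target" mapreduce.framework.name yarn
}

# Usage: ensure_mapred_site /opt/software/hadoop-2.7.6/etc/hadoop
```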

5. yarn-site.xml

sudo vim yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop</value>

</property>

<property>

<!-- how the NodeManager fetches data (the MapReduce shuffle service) -->

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
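To confirm what a config file contains without opening it in vim, the hypothetical get_property helper below prints the <value> that follows a given <name>. It is a line-based sketch that assumes the simple layout used in this tutorial (one tag per line, or name and value on one line). It is not a real XML parser. For core and HDFS keys, once Hadoop is on the PATH, hdfs getconf -confKey fs.defaultFS reports the value Hadoop itself resolves.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the value of a property from a
# *-site.xml file laid out like the ones in this tutorial. Not an XML parser.

get_property() {
  local file="$1" name="$2"
  awk -v want="$name" '
    /<name>/ {
      n = $0
      sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n)
      hit = (n == want)
    }
    hit && /<value>/ {
      v = $0
      sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
      print v; found = 1; hit = 0
    }
    END { exit (found ? 0 : 1) }
  ' "$file"
}

# Usage:
#   get_property /opt/software/hadoop-2.7.6/etc/hadoop/yarn-site.xml yarn.nodemanager.aux-services
```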

6. slaves

sudo vim slaves

Delete localhost and replace it with your hostname (hadoop in my case).
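The same edit can be done without vim. In this sketch, the hypothetical write_slaves helper overwrites the slaves file with one hostname per line, which removes the default localhost entry. In pseudo-distributed mode the only worker listed is the machine itself.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): overwrite etc/hadoop/slaves with the given
# worker hostnames, one per line.

write_slaves() {
  if [ "$#" -lt 2 ]; then
    echo "usage: write_slaves <conf_dir> <host>..." >&2
    return 1
  fi
  local conf_dir="$1"
  shift
  # Overwriting the file drops the default "localhost" line.
  printf '%s\n' "$@" > "$conf_dir/slaves"
}

# Usage: write_slaves /opt/software/hadoop-2.7.6/etc/hadoop hadoop
```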

1.4 Check the Firewall

systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

"Active: inactive (dead)" means the firewall is off.
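Checking the Active: line can also be scripted. The hypothetical firewalld_inactive helper below reads systemctl status output on stdin and succeeds only when the state is inactive. The commented usage shows the standard systemctl commands for the case where firewalld is running: stop it now and keep it disabled after a reboot. This is a sketch; in a real setup you might open only the Hadoop ports instead of disabling the firewall.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): succeed only if the "Active:" line of
# "systemctl status firewalld" output (read from stdin) says "inactive".

firewalld_inactive() {
  local state
  state="$(awk '$1 == "Active:" { print $2; exit }')"
  [ "$state" = "inactive" ]
}

# Usage:
#   systemctl status firewalld | firewalld_inactive || {
#     sudo systemctl stop firewalld      # stop it now
#     sudo systemctl disable firewalld   # keep it off after a reboot
#   }
```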

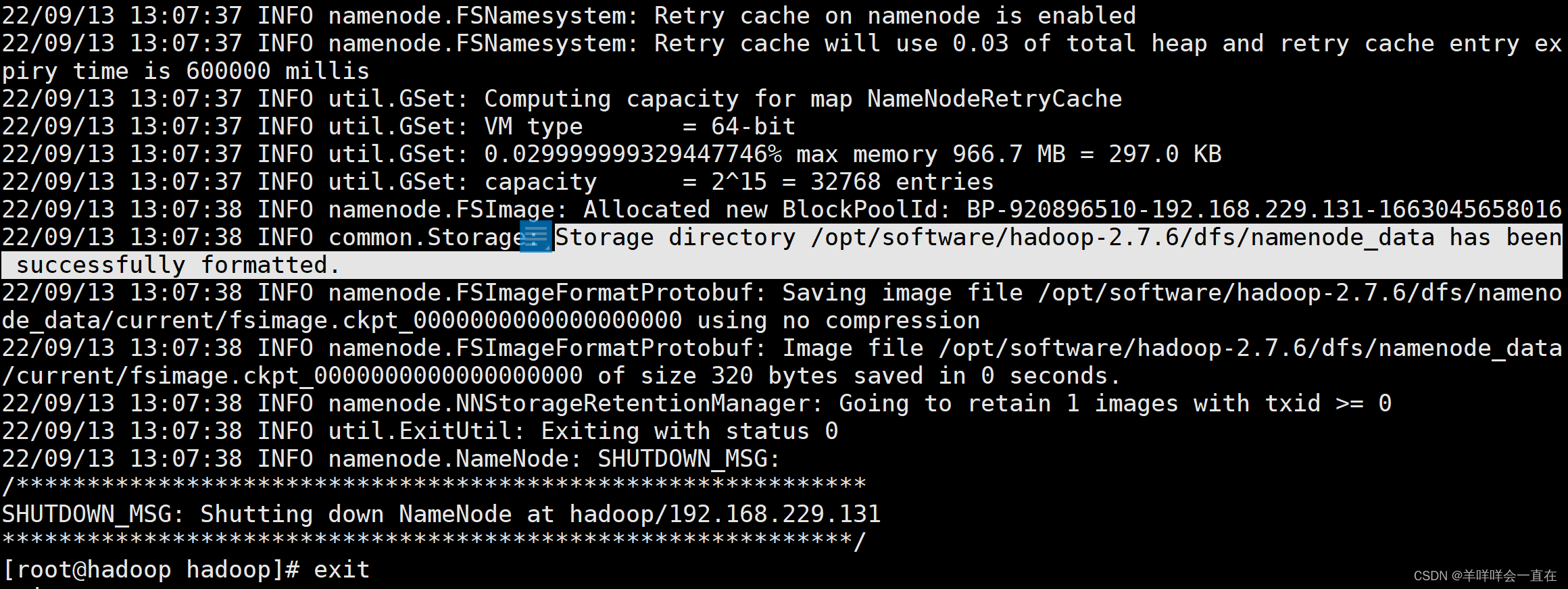

1.5 Start and Stop HDFS and YARN

su root

hdfs namenode -format # initialize (format) HDFS, only before the very first start

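If the output contains a line saying the storage directory "has been successfully formatted", the configuration is correct.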
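Formatting is meant to happen only once. A common pitfall is that re-formatting generates a new clusterID, after which an existing DataNode directory no longer matches and the DataNode will not start. The sketch below uses hypothetical helpers. It assumes the NameNode directory from dfs.name.dir in hdfs-site.xml above, and treats the presence of current/VERSION in that directory as the sign that it was already formatted.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): run "hdfs namenode -format" only if the
# NameNode directory has not been formatted yet.

namenode_formatted() {
  # A formatted NameNode storage directory contains current/VERSION.
  [ -f "$1/current/VERSION" ]
}

format_once() {
  # Default path taken from dfs.name.dir in this tutorial's hdfs-site.xml.
  local name_dir="${1:-/opt/software/hadoop-2.7.6/dfs/namenode_data}"
  if namenode_formatted "$name_dir"; then
    echo "already formatted: $name_dir (skipping)"
    return 0
  fi
  hdfs namenode -format
}

# Usage: format_once
```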

[root@hadoop hadoop]# hadoop-daemon.sh start namenode

starting namenode, logging to /opt/software/hadoop-2.7.6/logs/hadoop-zw1-namenode-hadoop.out

[root@hadoop hadoop]# jps

8594 Jps

8557 NameNode

[root@hadoop hadoop]# hadoop-daemon.sh start datanode

starting datanode, logging to /opt/software/hadoop-2.7.6/logs/hadoop-zw1-datanode-hadoop.out

[root@hadoop hadoop]# jps

8665 DataNode

8719 Jps

[root@hadoop hadoop]# hadoop-daemon.sh stop datanode

stopping datanode

[root@hadoop hadoop]# jps

8758 Jps

[root@hadoop hadoop]# hadoop-daemon.sh start secondarynamenode

starting secondarynamenode, logging to /opt/software/hadoop-2.7.6/logs/hadoop-zw1-secondarynamenode-hadoop.out

[root@hadoop hadoop]# jps

8919 Jps

8878 SecondaryNameNode

[root@hadoop hadoop]# hadoop-daemon.sh stop secondarynamenode

stopping secondarynamenode

[root@hadoop hadoop]# yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /opt/software/hadoop-2.7.6/logs/yarn-zw1-resourcemanager-hadoop.out

[root@hadoop hadoop]# jps

9025 Jps

8988 ResourceManager

[root@hadoop hadoop]# yarn-daemon.sh start nodemanager

starting nodemanager, logging to /opt/software/hadoop-2.7.6/logs/yarn-zw1-nodemanager-hadoop.out

[root@hadoop hadoop]# jps

9070 NodeManager

9102 Jps

[root@hadoop hadoop]# yarn-daemon.sh stop nodemanager

stopping nodemanager

nodemanager did not stop gracefully after 5 seconds: killing with kill -9
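In the session above, jps output is checked by eye after each step. The hypothetical check_daemons helper below does the same check mechanically. It reads jps output on stdin and reports any expected daemon that is not listed. For example, the jps output right after hadoop-daemon.sh start datanode lists DataNode but no longer NameNode, which this check would flag. The daemon list in the usage comment is an assumption for this single-node setup.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): read jps output on stdin, print each expected
# daemon that is missing, and return 1 if any is missing.

check_daemons() {
  local running missing=0 d
  # jps prints "<pid> <MainClass>"; keep the class names only.
  running="$(awk '{ print $2 }')"
  for d in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx "$d"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   jps | check_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager
```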

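Start all HDFS daemons at once with start-dfs.sh. In the run below, SSH to hadoop failed with "No route to host", so jps shows only the SecondaryNameNode: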

[root@hadoop hadoop]# start-dfs.sh

Starting namenodes on [hadoop]

hadoop: ssh: connect to host hadoop port 22: No route to host

hadoop: ssh: connect to host hadoop port 22: No route to host

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is SHA256:G7MdQeYjKESMoY+E9oQ3uCnjUy+QIti6XvpBrd/CDcQ.

ECDSA key fingerprint is MD5:90:07:84:0f:10:72:13:af:c9:70:5a:fb:e0:45:67:d3.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

root@0.0.0.0's password:

0.0.0.0: starting secondarynamenode, logging to /opt/software/hadoop-2.7.6/logs/hadoop-root-secondarynamenode-hadoop.out

[root@hadoop hadoop]# jps

9563 Jps

9437 SecondaryNameNode

[root@hadoop hadoop]# stop-dfs.sh

Stopping namenodes on [hadoop]

hadoop: ssh: connect to host hadoop port 22: No route to host

hadoop: ssh: connect to host hadoop port 22: No route to host

Stopping secondary namenodes [0.0.0.0]

root@0.0.0.0's password:

0.0.0.0: stopping secondarynamenode
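The start-dfs.sh and stop-dfs.sh output above shows two SSH problems. Connecting to hadoop fails with "No route to host", and there is a password prompt for 0.0.0.0, which comes from the default secondary NameNode address. Even on one machine these scripts start each daemon through ssh. The usual remedies are to map hadoop to the machine's current IP in /etc/hosts and to set up passwordless SSH for the user running the scripts. The sketch below covers the second remedy. The function name is hypothetical, and it assumes an RSA key at ~/.ssh/id_rsa.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): authorize the current user's own public key so
# that "ssh hadoop" and "ssh 0.0.0.0" no longer ask for a password.

setup_local_ssh_trust() {
  local ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1

  # Generate an RSA key pair without a passphrase, only if none exists yet.
  if [ ! -f "$ssh_dir/id_rsa.pub" ]; then
    ssh-keygen -t rsa -N "" -f "$ssh_dir/id_rsa" -q || return 1
  fi

  local auth="$ssh_dir/authorized_keys"
  touch "$auth" && chmod 600 "$auth" || return 1
  # Append the public key unless that exact line is already authorized.
  if ! grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$auth"; then
    cat "$ssh_dir/id_rsa.pub" >> "$auth"
  fi
}

# Usage, then check that no password is requested:
#   setup_local_ssh_trust
#   ssh hadoop hostname
#   ssh 0.0.0.0 hostname
```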

Reference: https://www.bilibili.com/video/BV1k7411G7Re?spm_id_from=333.851.header_right.history_list.click
