ELK Log Analysis Platform -- Logstash Data Collection: Introduction and Configuration

1. Introduction to Logstash

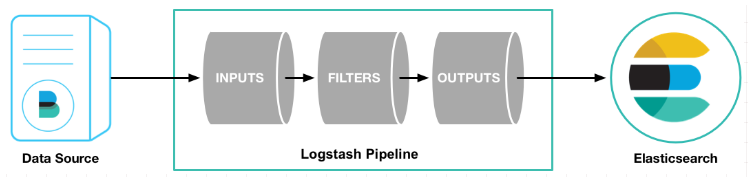

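Logstash is a tool for collecting, parsing and filtering logs, and it supports a large number of ways to ingest data. It usually works in a client/server arrangement: the client side is installed on every host whose logs need to be collected, and the server side filters and modifies the logs received from all nodes before sending them on to Elasticsearch.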

Logstash is an open-source, server-side data processing pipeline.

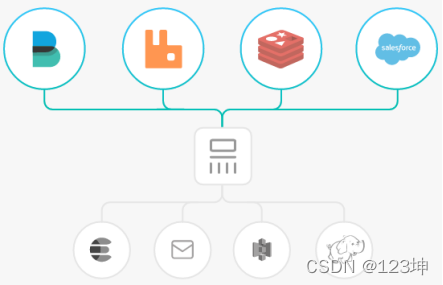

With more than 200 plugins, it can ingest data from many sources at the same time, transform it, and then send it to your favorite “stash” (most often Elasticsearch).

2. Logstash components

A Logstash pipeline has two required elements, inputs and outputs, and one optional element, filters.

Inputs:

Ingest data of all shapes, sizes and sources.

Logstash supports a wide variety of inputs that capture events from many common sources at the same time.

It can easily ingest data from your logs, metrics, web applications, data stores and various AWS services in a continuous, streaming fashion.

Filters:

Parse and transform data in real time.

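As data travels from source to stash, Logstash filters parse each event, identify named fields to build structure, and transform them into a common format, making analysis easier and faster and helping to derive business value.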

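Derive structure from unstructured data with Grok

Decipher geographic coordinates from IP addresses

Anonymize PII data and exclude sensitive fields completely

Simplify overall processing, independent of the data source, format or schema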

Outputs:

Choose your stash and export your data.

Although Elasticsearch is the preferred output and opens up endless possibilities for search and analytics, it is not the only choice.

Logstash offers many outputs, so you can route data wherever you want and flexibly unlock a range of downstream use cases.

3. Installing and configuring Logstash

Software download: https://elasticsearch.cn/download/

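When downloading, keep the Logstash version the same as your Elasticsearch version wherever possible. Logstash also needs a Java runtime; to match Elasticsearch, at least Java 8 is required here.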

[root@server4 ~]# rpm -ivh jdk-8u181-linux-x64.rpm

[root@server4 ~]# which java

/usr/bin/java

[root@server4 ~]# rpm -ivh logstash-7.6.1.rpm

3.1 Running Logstash

Any flags set on the command line override the corresponding settings in logstash.yml, but the file itself is not changed; it stays as-is for subsequent Logstash runs.

Logstash is run by executing its binary script. /usr/share/logstash/bin contains both the logstash startup script and logstash-plugin, which is used to install plugins.

Official documentation: https://www.elastic.co/guide/en/logstash/current/running-logstash-command-line.html

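Run a stdin-to-stdout pipeline directly from the command line: whatever is typed in the terminal is read and printed back to the terminal as an event.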

[root@server4 conf.d]# pwd

/etc/logstash/conf.d

[root@server4 conf.d]# ls

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -e 'input { stdin { } } output { stdout {} }'

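# With -e the pipeline configuration is passed as a string on the command line, so no configuration file is needed (conf.d is still empty). The warnings below only mean that logstash.yml was not found because --path.settings was not given; the defaults are used.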

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2021-12-11 16:11:40.800 [main] writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}

[INFO ] 2021-12-11 16:11:40.837 [main] writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}

[WARN ] 2021-12-11 16:11:41.547 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2021-12-11 16:11:41.553 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"7.6.1"}

[INFO ] 2021-12-11 16:11:41.604 [LogStash::Runner] agent - No persistent UUID file found. Generating new UUID {:uuid=>"0b48306f-7137-49f0-b8f1-a3c6939b459b", :path=>"/usr/share/logstash/data/uuid"}

[INFO ] 2021-12-11 16:11:44.243 [Converge PipelineAction::Create<main>] Reflections - Reflections took 93 ms to scan 1 urls, producing 20 keys and 40 values

[WARN ] 2021-12-11 16:11:46.354 [[main]-pipeline-manager] LazyDelegatingGauge - A gauge metric of an unknown type (org.jruby.RubyArray) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[INFO ] 2021-12-11 16:11:46.365 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["config string"], :thread=>"#<Thread:0x414aebed run>"}

[INFO ] 2021-12-11 16:11:47.863 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

The stdin plugin is now waiting for input:

[INFO ] 2021-12-11 16:11:48.015 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2021-12-11 16:11:48.419 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

westos

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

{

"@timestamp" => 2021-12-11T08:12:23.940Z,

"message" => "westos",

"@version" => "1",

"host" => "server4"

}

hellp

{

"@timestamp" => 2021-12-11T08:12:29.051Z,

"message" => "hellp",

"@version" => "1",

"host" => "server4"

}

world

{

"@timestamp" => 2021-12-11T08:12:34.675Z,

"message" => "world",

"@version" => "1",

"host" => "server4"

}
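Every event above carries the same four fields: message, @timestamp (in UTC), @version and host. The Python sketch below models what the stdin input does with each typed line, assuming one event per line and the local hostname as host. It only illustrates the event shape and is not Logstash's actual implementation; the function name stdin_event is hypothetical.

```python
import socket
from datetime import datetime, timezone
from typing import Dict, Optional


def stdin_event(line: str, host: Optional[str] = None) -> Dict[str, str]:
    """Build a dict shaped like the events the stdin input printed above (sketch)."""
    now = datetime.now(timezone.utc)
    return {
        "message": line.rstrip("\n"),  # the typed text, without its trailing newline
        "@version": "1",               # event format version, "1" in every event above
        # ISO 8601 in UTC with millisecond precision, e.g. 2021-12-11T08:12:23.940Z
        "@timestamp": now.isoformat(timespec="milliseconds").replace("+00:00", "Z"),
        "host": host if host is not None else socket.gethostname(),
    }


if __name__ == "__main__":
    # Roughly what "input { stdin {} } output { stdout {} }" does with each typed line.
    for typed in ["westos\n", "hellp\n", "world\n"]:
        print(stdin_event(typed, host="server4"))
```

Note that @timestamp is UTC: the 08:12Z values above correspond to 16:12 local time on these UTC+8 hosts.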

3.2 The file output plugin

-f, --path.config CONFIG_PATH

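Loads the Logstash configuration from a specific file or directory. If a directory is given, all files in it are concatenated in lexicographical order and then parsed as a single configuration file.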
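Because -f with a directory merges every file into one configuration, all inputs feed all filters and outputs unless conditionals separate them; for example, test.conf and the apache.conf created later in the same directory would end up in a single pipeline. The Python sketch below mimics only the ordering and concatenation step, assuming plain files and Python's string ordering of file names as the lexicographical order; config_files and load_config_dir are hypothetical helper names.

```python
from pathlib import Path
from typing import List


def config_files(conf_dir: str) -> List[Path]:
    """Regular files in conf_dir, sorted lexicographically by file name."""
    return sorted((p for p in Path(conf_dir).iterdir() if p.is_file()), key=lambda p: p.name)


def load_config_dir(conf_dir: str) -> str:
    """Concatenate the files into one configuration text, as -f <directory> does (sketch)."""
    return "\n".join(p.read_text() for p in config_files(conf_dir))


if __name__ == "__main__":
    conf_dir = Path("/etc/logstash/conf.d")
    if conf_dir.is_dir():
        # Print the order in which the files would be concatenated.
        for path in config_files(str(conf_dir)):
            print(path.name)
```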

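The same stdin-to-stdout pipeline can also be run from a file: instead of passing the configuration on the command line, write it to a file and point -f at it by its absolute path.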

[root@server4 conf.d]# pwd

/etc/logstash/conf.d

[root@server4 conf.d]# vim test.conf

[root@server4 conf.d]# cat test.conf

input {

stdin {} # read what is typed in the terminal

}

output {

stdout {} # print events to the terminal

}

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2021-12-11 16:15:43.802 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2021-12-11 16:15:43.844 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"7.6.1"}

[INFO ] 2021-12-11 16:15:47.104 [Converge PipelineAction::Create<main>] Reflections - Reflections took 83 ms to scan 1 urls, producing 20 keys and 40 values

[WARN ] 2021-12-11 16:15:49.358 [[main]-pipeline-manager] LazyDelegatingGauge - A gauge metric of an unknown type (org.jruby.RubyArray) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[INFO ] 2021-12-11 16:15:49.368 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["/etc/logstash/conf.d/test.conf"], :thread=>"#<Thread:0x7281b76f run>"}

[INFO ] 2021-12-11 16:15:51.525 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

The stdin plugin is now waiting for input:

[INFO ] 2021-12-11 16:15:51.661 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2021-12-11 16:15:52.085 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

hello

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

{

"message" => "hello",

"@timestamp" => 2021-12-11T08:16:13.477Z,

"host" => "server4",

"@version" => "1"

}

zxk

{

"message" => "zxk",

"@timestamp" => 2021-12-11T08:16:18.370Z,

"host" => "server4",

"@version" => "1"

}

[root@server4 conf.d]# vim test.conf

[root@server4 conf.d]# cat test.conf

input {

stdin {}

}

output {

file {

path => "/tmp/logstash.txt" #输出的文件路径

codec => line { format => "custom format: %{message}"}

#定制数据格式,message是输入的格式

#codec就是用来decode,encode 事件的。所以codec常用在input和output中

#codec => line就是输出一行内容

}

}

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

zxk

[INFO ] 2021-12-11 16:20:49.885 [[main]>worker0] file - Opening file {:path=>"/tmp/logstash.txt"}

hello

[INFO ] 2021-12-11 16:21:09.860 [[main]>worker1] file - Closing file /tmp/logstash.txt

[WARN ] 2021-12-11 16:21:38.799 [SIGINT handler] runner - SIGINT received. Shutting down.

[INFO ] 2021-12-11 16:21:39.179 [Converge PipelineAction::Stop<main>] javapipeline - Pipeline terminated {"pipeline.id"=>"main"}

[INFO ] 2021-12-11 16:21:39.915 [LogStash::Runner] runner - Logstash shut down.

[root@server4 conf.d]# cat /tmp/logstash.txt

custom format: zxk

custom format: hello

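With this configuration nothing is printed to the terminal; events are written straight to the file. Several output plugins can be combined, for example by adding stdout {} to also show the events in the terminal.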
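The format string uses Logstash's %{field} reference syntax: each %{name} is replaced by that field of the event, so the input zxk became "custom format: zxk", and the line codec writes it as one line. A minimal Python sketch of that substitution, assuming plain top-level field names only (no [nested][field] references and no %{+date} formats); sprintf and line_codec_encode are hypothetical names. Like Logstash, the sketch leaves a reference to a missing field as literal text.

```python
import re
from typing import Any, Dict

# Matches %{name}; nested references and %{+date} formats are not interpreted here.
FIELD_REF = re.compile(r"%\{([^}]+)\}")


def sprintf(fmt: str, event: Dict[str, Any]) -> str:
    """Replace each %{field} in fmt with the event's value (sketch)."""
    def substitute(match):
        name = match.group(1)
        # Unknown fields are left as the literal %{name}.
        return str(event[name]) if name in event else match.group(0)
    return FIELD_REF.sub(substitute, fmt)


def line_codec_encode(fmt: str, event: Dict[str, Any]) -> str:
    """One formatted line per event, terminated by the line codec's default "\\n" delimiter."""
    return sprintf(fmt, event) + "\n"


if __name__ == "__main__":
    for typed in ("zxk", "hello"):
        print(line_codec_encode("custom format: %{message}", {"message": typed}), end="")
```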

3.3 The elasticsearch output plugin

To ship the logs to Elasticsearch, edit the configuration file:

[root@server4 conf.d]# vim test.conf

[root@server4 conf.d]# cat test.conf

input {

stdin {}

}

output {

stdout {}

elasticsearch {

hosts => "172.25.25.21:9200" #输出到的ES主机与端口

index => "logstash-%{+YYYY.MM.dd}"

# custom index name; a new index is created every day

}

}
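The %{+YYYY.MM.dd} part of the index name is formatted from the event's @timestamp, which is stored in UTC. On hosts running at UTC+8, as in this article, events logged before 08:00 local time therefore land in the previous day's index. A small Python sketch of the name computation under that assumption; daily_index is a hypothetical name.

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix: str, timestamp: datetime) -> str:
    """Mimic index => "<prefix>-%{+YYYY.MM.dd}": the date is taken from @timestamp in UTC."""
    utc = timestamp.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


if __name__ == "__main__":
    cst = timezone(timedelta(hours=8))  # the hosts in this article run at UTC+8
    # 16:38 local = 08:38Z on the same day
    print(daily_index("logstash", datetime(2021, 12, 11, 16, 38, 54, tzinfo=cst)))
    # 07:00 local on Dec 12 = 23:00Z on Dec 11, so it goes into the Dec 11 index
    print(daily_index("logstash", datetime(2021, 12, 12, 7, 0, tzinfo=cst)))
```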

After changing the configuration file, run Logstash again:

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

hello

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

{

"host" => "server4",

"@version" => "1",

"message" => "hello",

"@timestamp" => 2021-12-11T08:38:54.875Z

}

westos

{

"host" => "server4",

"@version" => "1",

"message" => "westos",

"@timestamp" => 2021-12-11T08:38:58.282Z

}

zxk

{

"host" => "server4",

"@version" => "1",

"message" => "zxk",

"@timestamp" => 2021-12-11T08:38:59.443Z

}

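Checking in Elasticsearch shows that the index and its shards were created automatically and that the collected documents match the input.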

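When Logstash is started through systemd, it runs as the logstash user by default, which has no permission to read the log file. So grant read permission first, then configure the file input: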

[root@server4 conf.d]# ll /var/log/messages

-rw------- 1 root root 14294 Dec 11 16:31 /var/log/messages

[root@server4 conf.d]# ll /var/log/ -d

drwxr-xr-x. 8 root root 4096 Dec 11 16:09 /var/log/

[root@server4 conf.d]# chmod 644 /var/log/messages

[root@server4 conf.d]# ll /var/log/messages

-rw-r--r-- 1 root root 14294 Dec 11 16:31 /var/log/messages

[root@server4 conf.d]# vim test.conf

[root@server4 conf.d]# cat test.conf

input {

file { # read from /var/log/messages, starting at the beginning of the file

path => "/var/log/messages"

start_position => "beginning"

}

}

output {

stdout {}

elasticsearch {

hosts => "172.25.25.21:9200"

index => "logstash-%{+YYYY.MM.dd}"

}

}

After the change, run Logstash again:

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

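When it has finished, delete the logstash index from the Elasticsearch web UI and run Logstash again: this time only newly added data is written (use the logger "message" command to generate log entries). One might expect a restart to ship the whole file again, but the file input records how far it has read in a .sincedb file that stores the read offset, so data that has already been shipped is not sent again. To re-read a file, delete this file; with start_position => "beginning" as configured above, the file is then read from the start again.

The sincedb file is kept in the file input's data directory: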

[root@server4 file]# pwd

/usr/share/logstash/data/plugins/inputs/file

[root@server4 file]# l.

. .. .sincedb_452905a167cf4509fd08acb964fdb20c

[root@server4 file]# cat .sincedb_452905a167cf4509fd08acb964fdb20c

52394392 0 64768 14294 1639212758.972494 /var/log/messages

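How Logstash tells devices, file names and file versions apart: Logstash saves its progress in the sincedb file. Each record has 6 fields: the inode number, the major device number of the file system, the minor device number of the file system, the current byte offset within the file, the last active timestamp (a floating-point number), and the last known path this record was matched to.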
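A record can be read back mechanically. The Python sketch below splits one line of the 6-field format shown above into named fields; it assumes exactly that format (older sincedb files may have fewer columns) and that the path is the last column, possibly containing spaces. SincedbRecord and parse_sincedb_line are hypothetical names.

```python
from typing import NamedTuple


class SincedbRecord(NamedTuple):
    inode: int
    major: int          # major device number of the file system
    minor: int          # minor device number of the file system
    offset: int         # current byte offset, i.e. bytes already read
    last_active: float  # last active timestamp, seconds since the Unix epoch
    path: str           # last known path matched to this record


def parse_sincedb_line(line: str) -> SincedbRecord:
    """Parse one 6-field record; the path may contain spaces, so split at most 5 times."""
    inode, major, minor, offset, last_active, path = line.rstrip("\n").split(" ", 5)
    return SincedbRecord(int(inode), int(major), int(minor), int(offset), float(last_active), path)


if __name__ == "__main__":
    print(parse_sincedb_line("52394392 0 64768 14294 1639212758.972494 /var/log/messages"))
```

Applied to the record above, the offset is 14294, the same as the size of /var/log/messages in the earlier ll output, so the whole file had been read at that point.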

3.4 The syslog input plugin

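To collect logs from many servers, deploying Logstash on every one of them is tedious. Instead, Logstash can act as a syslog server and receive remote logs directly from each node.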

Change the input again so that it accepts remote logs from each node:

[root@server4 conf.d]# ls

test.conf

[root@server4 conf.d]# vim test.conf

[root@server4 conf.d]# cat test.conf

input {

syslog {}

}

output {

stdout {}

elasticsearch {

hosts => "172.25.25.21:9200"

index => "syslog-%{+YYYY.MM.dd}"

}

}

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

[root@server4 ~]# netstat -antlup | grep :514

tcp6 0 0 :::514 :::* LISTEN 8200/java

udp 0 0 0.0.0.0:514 0.0.0.0:* 8200/java

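Once started, the syslog input listens on port 514 over both TCP and UDP by default, as the netstat output shows. Other hosts, here the ES nodes, can now send their logs to this host.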

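On the ES host server1, edit the rsyslog configuration file /etc/rsyslog.conf. The $ModLoad imudp and $UDPServerRun 514 lines make server1 itself accept UDP syslog on port 514 (they are not needed just to forward). The rule *.* @@172.25.25.24:514 is what forwards all messages to the Logstash host; @@ means TCP, while a single @ would mean UDP.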

[root@server1 ~]# vim /etc/rsyslog.conf

# Provides UDP syslog reception

$ModLoad imudp

$UDPServerRun 514

# ... (further down in the file)

*.* @@172.25.25.24:514

[root@server1 ~]# systemctl restart rsyslog.service

After the restart, messages from server1 immediately show up in the output of the running Logstash:

{

"message" => "Unregistered Authentication Agent for unix-process:4421:9009625 (system bus name :1.66, object path /org/freedesktop/PolicyKit1/AuthenticationAgent, locale en_US.UTF-8) (disconnected from bus)\n",

"pid" => "2939",

"facility" => 10,

"program" => "polkitd",

"logsource" => "server1",

"priority" => 85,

"host" => "172.25.25.21", #该主机

"severity_label" => "Notice",

"facility_label" => "security/authorization",

"severity" => 5,

"@version" => "1",

"timestamp" => "Dec 12 15:10:21",

"@timestamp" => 2021-12-12T07:10:21.000Z

}

To test how new log entries arrive, generate one by hand:

[root@server1 ~]# logger hello zxk # writes an entry to the system log (/var/log/messages), which rsyslog forwards

{

"message" => "hello zxk\n",

"facility" => 1,

"program" => "root",

"logsource" => "server1",

"priority" => 13,

"host" => "172.25.25.21",

"severity_label" => "Notice",

"facility_label" => "user-level",

"severity" => 5,

"@version" => "1",

"timestamp" => "Dec 12 15:12:39",

"@timestamp" => 2021-12-12T07:12:39.000Z

}
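The priority, facility and severity fields are related: in syslog (RFC 3164 / RFC 5424) the PRI value is facility * 8 + severity. Here 85 = 10 * 8 + 5 (security/authorization, Notice) and 13 = 1 * 8 + 5 (user-level, Notice). A small Python sketch of the decoding; the label list is an assumption based on the standard syslog severity names (only Notice appears in the output above), and decode_priority is a hypothetical name.

```python
from typing import Tuple

# Standard syslog severities 0..7 (assumed spelling; "Notice" matches the output above).
SEVERITY_LABELS = ["Emergency", "Alert", "Critical", "Error",
                   "Warning", "Notice", "Informational", "Debug"]


def decode_priority(priority: int) -> Tuple[int, int, str]:
    """Split a syslog PRI value into (facility, severity, severity label)."""
    facility, severity = divmod(priority, 8)  # PRI = facility * 8 + severity
    return facility, severity, SEVERITY_LABELS[severity]


if __name__ == "__main__":
    for pri in (85, 13):
        print(pri, decode_priority(pri))
```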

3.5 The multiline codec plugin

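When reading logs, related information is often spread over many lines, which makes it hard to locate what you need quickly. The multiline codec merges such lines into a single event: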

[root@server4 conf.d]# vim multiline.conf

[root@server4 conf.d]# cat multiline.conf

input {

stdin {

codec => multiline {

pattern => "EOF" #以什么结尾

negate => "true"

what => "previous" #向上合并

}

}

}

output {

stdout {}

}

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/multiline.conf

1

w

z

2

4

EOF

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

{

"message" => "1\nw\nz\n2\n4",

"tags" => [

[0] "multiline"

],

"@timestamp" => 2021-12-12T07:18:59.540Z,

"@version" => "1",

"host" => "server4"

}

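Multiline handling is commonly needed for Java application logs and for log analysis on cloud platforms. The next configuration reads an Elasticsearch log file: every new entry starts with a "[" character, so any line that does not (for example a Java stack trace line) is appended to the previous entry.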

[root@server4 conf.d]# vim test.conf

[root@server4 conf.d]# cat test.conf

input {

file {

path => "/var/log/my-es-2021-12-11-1.log"

start_position => "beginning"

codec => multiline {

pattern => "^\["

negate => "true"

what => "previous"

}

}

}

output {

stdout {}

elasticsearch {

hosts => "172.25.25.21:9200"

index => "syslog-%{+YYYY.MM.dd}"

}

}

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf

The lines belonging to one entry are now merged into a single event, which is much easier to read.
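Both configurations use negate => "true" with what => "previous": a line that does NOT match the pattern is appended to the line before it, while a matching line starts a new event and thereby flushes the one being collected. That is why the EOF run printed "1\nw\nz\n2\n4" without EOF: the EOF line opened the next event. A Python sketch of that rule, assuming line-at-a-time input; multiline_previous and the sample log lines are hypothetical. The real codec keeps the last event buffered until more input arrives (or auto_flush_interval expires), whereas the sketch simply flushes it at the end of input.

```python
import re
from typing import Iterable, Iterator, List


def multiline_previous(lines: Iterable[str], pattern: str, negate: bool) -> Iterator[str]:
    """Sketch of the multiline codec with what => "previous"."""
    regex = re.compile(pattern)
    buffer: List[str] = []
    for line in lines:
        matched = regex.search(line) is not None
        # negate flips the test: with negate, NON-matching lines join the previous line.
        joins_previous = matched != negate
        if joins_previous and buffer:
            buffer.append(line)
        else:
            if buffer:
                yield "\n".join(buffer)  # a line that starts a new event flushes the old one
            buffer = [line]
    if buffer:
        yield "\n".join(buffer)  # flush the last event at end of input


if __name__ == "__main__":
    # Hypothetical Elasticsearch log excerpt: continuation lines do not start with "[".
    sample = [
        "[2021-12-11T10:00:00,000][WARN ][o.e.x.Foo] something failed",
        "java.lang.IllegalStateException: example",
        "\tat org.example.Foo.bar(Foo.java:42)",
        "[2021-12-11T10:00:01,000][INFO ][o.e.n.Node] started",
    ]
    for event in multiline_previous(sample, r"^\[", negate=True):
        print(repr(event))
```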

3.6 The grok filter plugin

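Grok splits unstructured log lines into named fields; here the result is printed to the terminal.

Install httpd and access it once from another machine; its access log is then used to extract fields such as the client IP.

The predefined patterns can be found in /usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-patterns-core-4.1.2/patterns (the httpd file holds the Apache patterns):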

[root@server4 patterns]# pwd

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-patterns-core-4.1.2/patterns

[root@server4 patterns]# ll httpd

-rw-r--r-- 1 logstash logstash 987 Feb 29 2020 httpd

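Apache's access log is used as grok's input. Since the file is read as the logstash user, the log file needs read permission and its directory /var/log/httpd needs read and execute permission: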

[root@server4 conf.d]# ll /var/log/httpd/access_log

-rw-r--r-- 1 root root 168 Dec 12 16:00 /var/log/httpd/access_log

[root@server4 conf.d]# ll -d /var/log/httpd/

drwx------ 2 root root 41 Dec 12 15:59 /var/log/httpd/

[root@server4 conf.d]# chmod 755 /var/log/httpd/

[root@server4 conf.d]# ll -d /var/log/httpd/

drwxr-xr-x 2 root root 41 Dec 12 15:59 /var/log/httpd/
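The rule applied to /var/log/messages and /var/log/httpd can be checked mechanically: a user that is neither the owner nor in the owning group (the logstash user, for these root:root files) relies on the "other" permission bits, needing r on the file and, as described above, r plus x on its directory. A Python sketch under those assumptions, ignoring ACLs and SELinux and checking only the immediate parent directory; readable_by_others and check_path are hypothetical names.

```python
import os
import stat


def readable_by_others(file_mode: int, dir_mode: int) -> bool:
    """True if the 'other' bits allow reading the file and listing/entering its directory."""
    dir_ok = bool(dir_mode & stat.S_IROTH) and bool(dir_mode & stat.S_IXOTH)
    file_ok = bool(file_mode & stat.S_IROTH)
    return dir_ok and file_ok


def check_path(path: str) -> bool:
    """Apply the check to a real file and its parent directory."""
    parent = os.path.dirname(os.path.abspath(path))
    return readable_by_others(os.stat(path).st_mode, os.stat(parent).st_mode)


if __name__ == "__main__":
    for log in ("/var/log/messages", "/var/log/httpd/access_log"):
        try:
            print(log, check_path(log))
        except OSError as err:  # missing file, or the directory cannot be entered
            print(log, "cannot stat:", err)
```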

Edit the configuration file:

[root@server4 conf.d]# cat apache.conf

input {

file {

path => "/var/log/httpd/access_log"

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{HTTPD_COMBINEDLOG}" }

}

}

output {

stdout {}

elasticsearch {

hosts => "172.25.25.21:9200"

index => "apachelog-%{+YYYY.MM.dd}"

}

}

[root@server4 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/apache.conf

{

"httpversion" => "1.1",

"@version" => "1",

"request" => "/",

"response" => "200",

"verb" => "GET",

"clientip" => "172.25.25.250",

"ident" => "-",

"bytes" => "12",

"@timestamp" => 2021-12-12T08:11:34.862Z,

"host" => "server4",

"message" => "172.25.25.250 - - [12/Dec/2021:16:00:08 +0800] \"GET / HTTP/1.1\" 200 12 \"-\" \"curl/7.61.1\"",

"timestamp" => "12/Dec/2021:16:00:08 +0800",

"path" => "/var/log/httpd/access_log",

"agent" => "\"curl/7.61.1\"",

"referrer" => "\"-\"",

"auth" => "-"

}
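HTTPD_COMBINEDLOG is defined in the httpd patterns file listed above. As a rough illustration of what it extracts, here is a simplified Python regular expression with named groups that yields the same fields for the access-log line above. It is a hypothetical stand-in, much stricter than the real grok pattern, and like grok without type conversion it returns every value as a string (referrer and agent keep their quotes, as in the output). Lines that do not match get a _grokparsefailure tag, which is what the grok filter adds on failure.

```python
import os
import re
from collections import Counter
from typing import Dict, List, Union

# Simplified stand-in for %{HTTPD_COMBINEDLOG}; the real grok pattern accepts more variants.
COMBINED = re.compile(
    r'^(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>[A-Z]+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")$'
)


def parse_combined(line: str) -> Dict[str, Union[str, List[str]]]:
    """Return the named fields, or a failure tag like grok's _grokparsefailure."""
    match = COMBINED.match(line)
    if match is None:
        return {"message": line, "tags": ["_grokparsefailure"]}
    return {"message": line, **match.groupdict()}


if __name__ == "__main__":
    log = "/var/log/httpd/access_log"
    if os.path.exists(log):
        with open(log) as fh:
            # Count requests per client IP (None for lines that failed to parse).
            hits = Counter(parse_combined(l.rstrip("\n")).get("clientip") for l in fh)
        print(hits.most_common(5))
```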

After one test request, the corresponding apachelog index is created.

You can also run a load test against httpd and then count the requests.
