Environment overview

- Kubernetes version: 1.15.2, three hosts
- Installation method: kubeadm

Hosts:

| OS | IP address | Role | CPUs | Memory | Hostname |
| --- | --- | --- | --- | --- | --- |
| CentOS 7 | 192.168.50.13 | master | 2 | 2 | k8s-master |
| CentOS 7 | 192.168.50.14 | node | 2 | 2 | k8s-node1 |
| CentOS 7 | 192.168.50.15 | node | 2 | 2 | k8s-node2 |

Namespace

[root@k8s-master ~]# cd prometheus/prometheus/
[root@k8s-master prometheus]# vim ns.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring

[root@k8s-master prometheus]# kubectl apply -f ns.yaml

Deploy Prometheus

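RBAC authorization

This is roughly equivalent to creating a user with permissions inside Kubernetes. Prometheus authenticates as this ServiceAccount to read data from the API server.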
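After rbac.yaml below has been applied, the ServiceAccount's permissions can be checked with `kubectl auth can-i` and impersonation (run it as the cluster admin). The sketch below only prints the check commands so they can be reviewed first. The username format `system:serviceaccount:<namespace>:<name>` is standard Kubernetes; `sa_user` and `can_i_cmds` are hypothetical helper names.

```shell
# Build the username Kubernetes uses for a ServiceAccount.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Print one `kubectl auth can-i` command per verb/resource pair.
# $1 = namespace, $2 = ServiceAccount name, remaining args = resources to check
can_i_cmds() {
  user=$(sa_user "$1" "$2")
  shift 2
  for res in "$@"; do
    for verb in get list watch; do
      echo "kubectl auth can-i $verb $res --as=$user"
    done
  done
}
```

For example, `can_i_cmds monitoring prometheus nodes endpoints pods | sh` runs the checks, and each one should print `yes` if the ClusterRoleBinding took effect.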

[root@k8s-master prometheus]# vim rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - services
  - endpoints
  - pods
  - nodes/proxy
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "extensions"
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  - nodes/metrics
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: monitoring
--- # Secret holding a long-lived token for the prometheus ServiceAccount
apiVersion: v1
kind: Secret
metadata:
  name: prometheus-secrets
  namespace: monitoring # must be the same namespace as the ServiceAccount
  annotations:
    kubernetes.io/service-account.name: "prometheus"
type: kubernetes.io/service-account-token

View the token
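The token printed by the `kubectl describe` command that follows is a JWT: three base64url segments separated by dots. Decoding the middle segment shows which ServiceAccount the token belongs to, which is a quick sanity check before pasting it into the k8s-config ConfigMap in prometheus.yaml. This is a minimal sketch that assumes GNU coreutils `base64` (as on CentOS 7). `jwt_payload` is a hypothetical helper, and it only decodes the token; it does not verify the signature.

```shell
# Print the decoded payload (second segment) of a JWT.
jwt_payload() {
  # take the middle segment and map the base64url alphabet back to plain base64
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the "=" padding; restore it so base64 -d accepts the input
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}
```

Usage: `jwt_payload "$(kubectl -n monitoring get secret prometheus-secrets -o jsonpath='{.data.token}' | base64 -d)"`. The output should contain `"sub":"system:serviceaccount:monitoring:prometheus"`.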

kubectl describe secret prometheus-secrets -n monitoring

Create the alert rule file and the configuration file with ConfigMaps

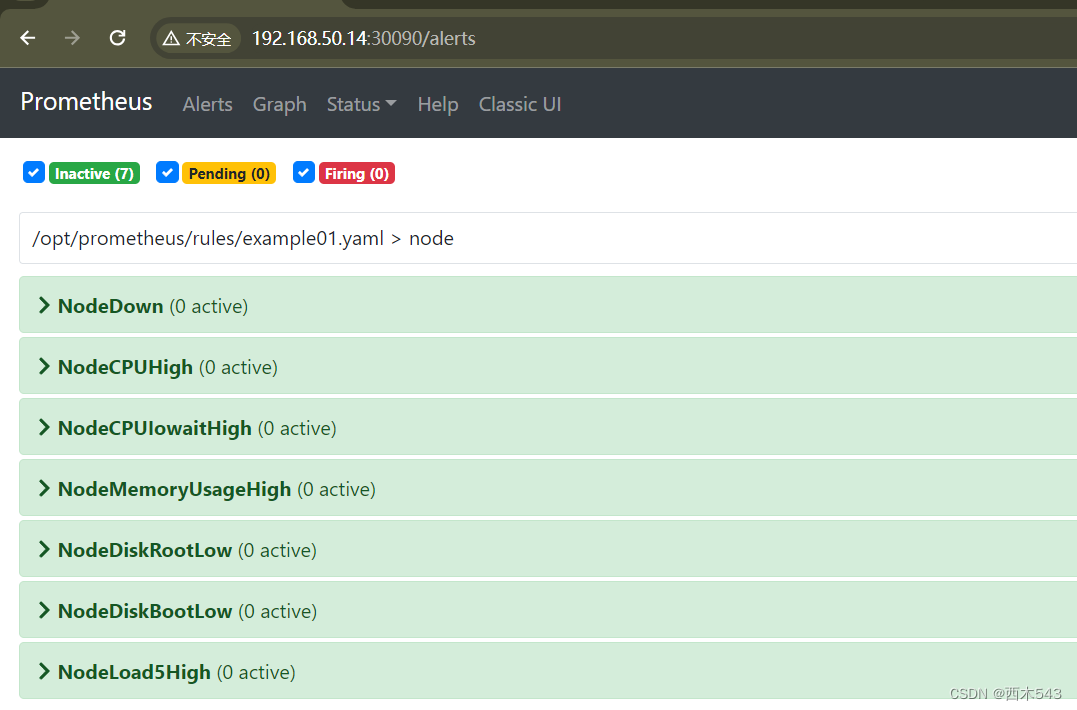

[root@k8s-master prometheus]# vim prometheus-rule.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rule
  namespace: monitoring
data:
  example01.yaml: |
    groups:
    - name: node
      rules:
      - alert: NodeDown
        expr: up == 0
        for: 3m
        labels:
          severity: critical
        annotations:
          summary: "{{ $labels.instance }}: down"
          description: "{{ $labels.instance }} has been down for more than 3m"
          value: "{{ $value }}"
      - alert: NodeCPUHigh
        expr: (1 - avg by (instance) (irate(node_cpu_seconds_total{mode="idle"}[5m]))) * 100 > 75
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: High CPU usage"
          description: "{{$labels.instance}}: CPU usage is above 75%"
          value: "{{ $value }}"
      - alert: NodeCPUIowaitHigh
        expr: avg by (instance) (irate(node_cpu_seconds_total{mode="iowait"}[5m])) * 100 > 50
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: High CPU iowait usage"
          description: "{{$labels.instance}}: CPU iowait usage is above 50%"
          value: "{{ $value }}"
      - alert: NodeMemoryUsageHigh
        expr: (1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100 > 90
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: High memory usage"
          description: "{{$labels.instance}}: Memory usage is above 90%"
          value: "{{ $value }}"
      - alert: NodeDiskRootLow
        expr: (1 - node_filesystem_avail_bytes{fstype=~"ext.*|xfs",mountpoint="/"} / node_filesystem_size_bytes{fstype=~"ext.*|xfs",mountpoint="/"}) * 100 > 80
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: Low disk (the / partition) space"
          description: "{{$labels.instance}}: Disk (the / partition) usage is above 80%"
          value: "{{ $value }}"
      - alert: NodeDiskBootLow
        expr: (1 - node_filesystem_avail_bytes{fstype=~"ext.*|xfs",mountpoint="/boot"} / node_filesystem_size_bytes{fstype=~"ext.*|xfs",mountpoint="/boot"}) * 100 > 80
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: Low disk (the /boot partition) space"
          description: "{{$labels.instance}}: Disk (the /boot partition) usage is above 80%"
          value: "{{ $value }}"
      - alert: NodeLoad5High
        expr: node_load5 > on (instance) (count by (instance) (node_cpu_seconds_total{mode='system'}) * 2)
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: Load(5m) High"
          description: "{{$labels.instance}}: Load(5m) is more than 2 times the number of CPU cores"
          value: "{{ $value }}"
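Here is how the NodeCPUHigh expression works. `irate` over the idle counter takes the increase between the last two samples and divides it by the time between them, which gives the fraction of each second a CPU spent idle. `avg by (instance)` averages that fraction across the node's CPUs, and `(1 - idle) * 100` turns it into a busy percentage. The awk sketch below reproduces that arithmetic for hand-made samples. The function name and the sample values are illustrative only.

```shell
# Reproduce the NodeCPUHigh arithmetic by hand.
# stdin: one line per CPU, "<previous idle seconds> <latest idle seconds>"
# $1   : seconds between the two samples (what irate divides by)
cpu_usage_pct() {
  awk -v dt="$1" '
    { idle += ($2 - $1) / dt; n++ }   # per-CPU idle fraction, as irate computes it
    END { if (n) printf "%.1f\n", (1 - idle / n) * 100 }   # average, then busy percent
  '
}
```

Consider two CPUs sampled 15s apart whose idle counters grew by 3s and 1.5s: `printf '1000 1003\n500 501.5\n' | cpu_usage_pct 15` prints 85.0. That is above the 75 threshold, so the alert goes pending and fires if the value stays above it for the 5m `for` period.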

[root@k8s-master prometheus]# vim prometheus-configMap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitoring
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    alerting:
      alertmanagers:
      - static_configs:
        - targets:
          - alertmanager.monitoring.svc.cluster.local:9093
    rule_files:
    - "rules/*.yaml"
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'kubernetes-nodes-cadvisor'
      metrics_path: /metrics
      scheme: https
      kubernetes_sd_configs:
      - role: node
        api_server: https://192.168.50.13:6443
        bearer_token_file: /opt/prometheus/k8s.token
        tls_config:
          insecure_skip_verify: true
      bearer_token_file: /opt/prometheus/k8s.token
      tls_config:
        insecure_skip_verify: true
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.*)
      - action: replace
        regex: (.*)
        source_labels: ["__address__"]
        target_label: __address__
        replacement: 192.168.50.13:6443
      - action: replace
        source_labels: [__meta_kubernetes_node_name]
        target_label: __metrics_path__
        regex: (.*)
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
        api_server: https://192.168.50.13:6443
        bearer_token_file: /opt/prometheus/k8s.token
        tls_config:
          insecure_skip_verify: true
      bearer_token_file: /opt/prometheus/k8s.token
      tls_config:
        insecure_skip_verify: true
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scrape
      - action: replace
        regex: (https?)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scheme
        target_label: __scheme__
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_service_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_service_name
        target_label: kubernetes_service_name
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
        api_server: https://192.168.50.13:6443
        bearer_token_file: /opt/prometheus/k8s.token
        tls_config:
          insecure_skip_verify: true
      bearer_token_file: /opt/prometheus/k8s.token
      tls_config:
        insecure_skip_verify: true
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_scrape
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_pod_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: kubernetes_pod_name
    - job_name: 'kubernetes-kubelet'
      scheme: https
      tls_config:
        insecure_skip_verify: true
      bearer_token_file: /opt/prometheus/k8s.token
      kubernetes_sd_configs:
      - role: node
        # same API server credentials as the other jobs; without them Prometheus falls back to
        # its Pod's own ServiceAccount (default), which has no RBAC permissions here
        api_server: https://192.168.50.13:6443
        bearer_token_file: /opt/prometheus/k8s.token
        tls_config:
          insecure_skip_verify: true
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)

Deploy Prometheus and reference the token

[root@k8s-master prometheus]# vim prometheus.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: k8s-config
  namespace: monitoring
data:
  # the token printed by kubectl describe secret prometheus-secrets -n monitoring
  k8s.token: |
    eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJtb25pdG9yaW5nIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InByb21ldGhldXMtdG9rZW4tenpzd2siLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoicHJvbWV0aGV1cyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjRjMzI0OGU3LWQyMTItNGE0MC04ZWJlLTFlOGJjYWU1Y2VlOCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDptb25pdG9yaW5nOnByb21ldGhldXMifQ.xMwaMScYCQT6BoeqDyXxvjRJhHgbGTt99oLY50V8CzksDAaJfHAtHVZ39SoXcgpxw5pJryYwnArRHdPFalsGpgO_AZgZRFho1L9MjDoZo-NyNfUvzZAALtryT4QfIXXAWP1oFcY38jzIl6TlzR9Uu9ONn6813K3uTIUU0WTlswDv-FxvjaaCdZgBSupylzMHEUxuVojHpuhP_oCytanC0ZcN_n_migDT-0PypB7jFE_Iq51i49xD6g4pV3EIGvIcFadYjuZ6l6JWWXX5n6QIpQS012acvn6Qels67Y7KzmyNSkOeh2ps9W-FYxCKUmMD8GI06MidVXNxwYRkJD3Krw
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: monitoring
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - name: prometheus
        image: 192.168.50.19:5000/prometheus:2.31.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9090
        volumeMounts:
        - name: prometheus-config-volume
          mountPath: /opt/prometheus/prometheus.yml
          subPath: prometheus.yml
        - name: prometheus-data
          mountPath: /opt/prometheus/data
        - name: k8s-config-volume
          mountPath: /opt/prometheus/k8s.token
          subPath: k8s.token
        - name: prometheus-rule-config
          mountPath: /opt/prometheus/rules/example01.yaml
          subPath: example01.yaml
      volumes:
      - name: prometheus-config-volume
        configMap:
          name: prometheus-config
      - name: k8s-config-volume
        configMap:
          name: k8s-config
      - name: prometheus-data
        hostPath:
          path: /opt/prometheus-data
      - name: prometheus-rule-config
        configMap:
          name: prometheus-rule
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 30090
  selector:
    app: prometheus

[root@k8s-master prometheus]# kubectl apply -f rbac.yaml
[root@k8s-master prometheus]# kubectl apply -f prometheus-configMap.yaml
[root@k8s-master prometheus]# kubectl apply -f prometheus-rule.yaml
[root@k8s-master prometheus]# kubectl apply -f prometheus.yaml

node_exporter

[root@k8s-master prometheus]# vim node-exporter.yaml
apiVersion: v1
kind: Service
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    app: node-exporter
  annotations:
    prometheus.io/scrape: 'true'
spec:
  selector:
    app: node-exporter
  ports:
  - name: node-exporter
    port: 9100
    protocol: TCP
    targetPort: 9100
  clusterIP: None
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    app: node-exporter
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      name: node-exporter
      labels:
        app: node-exporter
    spec:
      containers:
      - name: node-exporter
        image: 192.168.50.19:5000/node_exporter:1.2.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9100
          hostPort: 9100
      hostNetwork: true
      hostPID: true
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule

kube-state-metrics

Exposes the state of Kubernetes objects (Pods, Deployments, Nodes, and so on) as metrics.
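As an example of what kube-state-metrics exports, its `kube_pod_status_phase` series carries `namespace`, `pod` and `phase` labels. The value is 1 for the phase a Pod is currently in and 0 for the others. The sketch below counts Pods per phase from that text output. `pods_by_phase` is a hypothetical helper, and the sample lines in its test are made up.

```shell
# Count Pods per phase from kube-state-metrics text output read on stdin.
pods_by_phase() {
  awk '
    /^kube_pod_status_phase[{]/ && $NF == 1 {       # keep only the current phase of each Pod
      match($0, /phase="[^"]*"/)
      phase = substr($0, RSTART + 7, RLENGTH - 8)   # drop the phase= prefix and the quotes
      count[phase]++
    }
    END { for (p in count) print p, count[p] }
  ' | sort
}
```

Once kube-state-metrics.yaml below is applied, the Service can be queried from the master through its cluster IP: `curl -s "http://$(kubectl -n monitoring get svc kube-state-metrics -o jsonpath='{.spec.clusterIP}'):8080/metrics" | pods_by_phase`.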

[root@k8s-master prometheus]# vim kube-state-metrics.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: monitoring
  labels:
    app: kube-state-metrics
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
  labels:
    app: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - daemonsets
  - deployments
  - replicasets
  - ingresses
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
- apiGroups:
  - authentication.k8s.io
  resources:
  - tokenreviews
  verbs:
  - create
- apiGroups:
  - authorization.k8s.io
  resources:
  - subjectaccessreviews
  verbs:
  - create
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - certificates.k8s.io
  resources:
  - certificatesigningrequests
  verbs:
  - list
  - watch
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  - volumeattachments
  verbs:
  - list
  - watch
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - mutatingwebhookconfigurations
  - validatingwebhookconfigurations
  verbs:
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - networkpolicies
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
  labels:
    app: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: monitoring
---
apiVersion: v1
kind: Service
metadata:
  name: kube-state-metrics
  namespace: monitoring
  labels:
    app: kube-state-metrics
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/http-probe: 'true'
    prometheus.io/http-probe-path: '/healthz'
    prometheus.io/http-probe-port: '8080'
spec:
  selector:
    app: kube-state-metrics
  ports:
  - name: kube-state-metrics
    port: 8080
    protocol: TCP
    targetPort: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: monitoring
  labels:
    app: kube-state-metrics
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kube-state-metrics
  template:
    metadata:
      labels:
        app: kube-state-metrics
    spec:
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        image: 192.168.50.19:5000/kube-state-metrics:v1.8.0 # use kube-state-metrics:v1.9.7 for Kubernetes 1.16 and later
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
      nodeSelector:
        node-role.kubernetes.io/master: ""
        kubernetes.io/hostname: "k8s-master"
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule

Kubernetes components

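Because the cluster was deployed with kubeadm, the control-plane components run as containers (static Pods in kube-system). All you need is a Service for each component, and Kubernetes fills in its Endpoints from the Pods that match the selector. If a component listens only on 127.0.0.1 (check its flags in /etc/kubernetes/manifests/), Prometheus cannot reach it. This is the same problem described for kube-proxy below.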
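These Services are discovered by the `kubernetes-service-endpoints` job in prometheus-configMap.yaml. That job keeps only targets annotated `prometheus.io/scrape: 'true'`. If a `prometheus.io/port` annotation is present, it also rewrites the target address to use that port: Prometheus joins the `source_labels` with `;` and applies the fully anchored regex `([^:]+)(?::\d+)?;(\d+)`. The sketch below emulates that single rule with `sed -E`. POSIX ERE has no `(?:...)`, so it uses a plain group instead. Treat it as an approximation of Prometheus' RE2 matching, not Prometheus itself.

```shell
# Emulate the __address__ relabel rule:
#   source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
#   regex: ([^:]+)(?::\d+)?;(\d+)   replacement: $1:$2
# $1 = discovered address (host or host:port), $2 = port annotation (may be empty)
relabel_address() {
  out=$(printf '%s;%s\n' "$1" "$2" |
    sed -nE 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/p')
  # if the regex does not match, Prometheus leaves __address__ unchanged
  if [ -n "$out" ]; then echo "$out"; else echo "$1"; fi
}
```

For example, if an endpoint is discovered at a hypothetical 10.244.1.5:8080 on a Service annotated `prometheus.io/port: '9100'`, Prometheus scrapes it at 10.244.1.5:9100. Without the annotation, it is scraped at the discovered address.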

kube-controller-manager

[root@k8s-master prometheus]# vim kube-controller-manager-prometheus-discovery.yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-controller-manager-prometheus-discovery
  namespace: kube-system
  labels:
    component: kube-controller-manager
  annotations:
    prometheus.io/scrape: 'true'
spec:
  selector:
    component: kube-controller-manager
  ports:
  - name: http-metrics
    port: 10252
    targetPort: 10252
    protocol: TCP
  clusterIP: None

kube-scheduler

[root@k8s-master prometheus]# vim kube-scheduler-prometheus-discovery.yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-scheduler-prometheus-discovery
  namespace: kube-system
  labels:
    component: kube-scheduler
  annotations:
    prometheus.io/scrape: 'true'
spec:
  selector:
    component: kube-scheduler
  ports:
  - name: http-metrics
    port: 10251
    protocol: TCP
    targetPort: 10251
  clusterIP: None

kube-proxy

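kube-proxy's metrics listen address must be changed from 127.0.0.1:10249 to an address Prometheus can reach, for example 0.0.0.0:10249. If it is not changed, Prometheus cannot reach the metrics URL and the target shows as Down.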
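On a kubeadm cluster this setting is the `metricsBindAddress` field in the `kube-proxy` ConfigMap in kube-system (key `config.conf`). Here is a sketch of the change, assuming that field is present in the ConfigMap; depending on the kubeadm version its value may be `127.0.0.1:10249` or empty. `open_metrics_bind` is a hypothetical helper name.

```shell
# Rewrite the metricsBindAddress line of a kube-proxy configuration read on stdin
# so the metrics endpoint listens on all interfaces; other lines pass through unchanged.
open_metrics_bind() {
  sed -E 's/^([[:space:]]*metricsBindAddress:).*/\1 0.0.0.0:10249/'
}
```

Usage: `kubectl -n kube-system get configmap kube-proxy -o yaml | open_metrics_bind | kubectl replace -f -`. Then run `kubectl -n kube-system delete pod -l k8s-app=kube-proxy` so the DaemonSet recreates the kube-proxy Pods with the new setting.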

[root@k8s-master prometheus]# vim kube-proxy-prometheus-discovery.yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-proxy-prometheus-discovery
  namespace: kube-system
  labels:
    k8s-app: kube-proxy
  annotations:
    prometheus.io/scrape: 'true'
spec:
  selector:
    k8s-app: kube-proxy
  ports:
  - name: http-metrics
    port: 10249
    protocol: TCP
    targetPort: 10249
  clusterIP: None

blackbox-exporter

A black-box probing tool that can probe services over HTTP, TCP, and ICMP.
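blackbox_exporter is driven through its `/probe` endpoint. The `module` parameter names a module from blackbox.yml (http_2xx, http_post_2xx, tcp_connect, or icmp in the ConfigMap below), and `target` is what to probe. The response is a set of metrics such as `probe_success`. Once the NodePort Service below (30115) is up, a probe can be tried by hand. The helper is hypothetical and does no URL encoding, so pass targets that contain `?` or `&` with `curl -G --data-urlencode` instead.

```shell
# Build a blackbox_exporter probe URL.
# $1 = exporter base URL, $2 = module name from blackbox.yml, $3 = target (not URL-encoded)
probe_url() {
  printf '%s/probe?module=%s&target=%s\n' "${1%/}" "$2" "$3"
}
```

For example, `curl -s "$(probe_url http://192.168.50.13:30115 tcp_connect 192.168.50.14:9100)" | grep '^probe_success'` checks whether node_exporter on k8s-node1 accepts TCP connections. The result should be `probe_success 1` if it does.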

[root@k8s-master prometheus]# vim blackbox-exporter.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: blackbox-exporter
  namespace: monitoring
  labels:
    app: blackbox-exporter
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
        timeout: 10s
        http:
          valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
          valid_status_codes: []
          method: GET
          preferred_ip_protocol: "ip4"
      http_post_2xx:
        prober: http
        timeout: 10s
        http:
          valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
          method: POST
          preferred_ip_protocol: "ip4"
      tcp_connect:
        prober: tcp
        timeout: 10s
      icmp:
        prober: icmp
        timeout: 10s
        icmp:
          preferred_ip_protocol: "ip4"
---
apiVersion: v1
kind: Service
metadata:
  name: blackbox-exporter
  namespace: monitoring
  labels:
    app: blackbox-exporter
  annotations:
    prometheus.io/scrape: 'true'
spec:
  selector:
    app: blackbox-exporter
  ports:
  - name: blackbox
    port: 9115
    protocol: TCP
    targetPort: 9115
    nodePort: 30115
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blackbox-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      containers:
      - name: blackbox-exporter
        image: 192.168.50.19:5000/blackbox-exporter:0.19.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9115
        readinessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 10
          timeoutSeconds: 5
        resources:
          requests:
            memory: 50Mi
            cpu: 100m
          limits:
            memory: 60Mi
            cpu: 200m
        volumeMounts:
        - name: config
          mountPath: /etc/blackbox_exporter
        args:
        - '--config.file=/etc/blackbox_exporter/blackbox.yml'
        - '--web.listen-address=:9115'
      volumes:
      - name: config
        configMap:
          name: blackbox-exporter
      nodeSelector:
        node-role.kubernetes.io/master: ""
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule

[root@k8s-master prometheus]# kubectl apply -f node-exporter.yaml
[root@k8s-master prometheus]# kubectl apply -f kube-state-metrics.yaml
[root@k8s-master prometheus]# kubectl apply -f kube-controller-manager-prometheus-discovery.yaml
[root@k8s-master prometheus]# kubectl apply -f kube-scheduler-prometheus-discovery.yaml
[root@k8s-master prometheus]# kubectl apply -f kube-proxy-prometheus-discovery.yaml
[root@k8s-master prometheus]# kubectl apply -f blackbox-exporter.yaml

alertmanager
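Alertmanager evaluates the `route` block in the alertmanager.yaml below top-down. An alert goes to the first child route whose `match` labels it carries; if none matches, it falls back to the top-level `receiver` (email). Reduced to the `severity` label alone, the decision looks like the `case` statement below. This simplification ignores grouping, timing and inhibition. Note that the rules in prometheus-rule.yaml only emit `critical` and `warning`, so with those rules the `error` route is never used.

```shell
# Simplified model of this Alertmanager route tree, keyed on the severity label.
route_receiver() {
  case "$1" in
    critical) echo webhook1 ;;  # first child route: match severity=critical
    error)    echo webhook2 ;;  # second child route: match severity=error
    *)        echo email ;;     # no child route matched: top-level receiver
  esac
}
```

So `route_receiver warning` prints `email`. With the inhibit rule in the same file, a warning alert is also suppressed while a critical alert with the same `instance` label is firing.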

[root@k8s-master prometheus]# vim alertmanager.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager-config
  namespace: monitoring
data:
  alertmanager.yml: |
    global:
      resolve_timeout: 5m
      smtp_smarthost: '****.com:25'
      smtp_from: '****.com'
      smtp_auth_username: '****.com'
      smtp_auth_password: '****'
      smtp_require_tls: false
    templates:
    - /opt/alertmanager/template/*.tmpl
    route:
      group_by: ['alertname']
      group_wait: 10s
      group_interval: 10s
      repeat_interval: 1m
      receiver: 'email'
      routes:
      - match:
          severity: critical
        receiver: webhook1
      - match:
          severity: error
        receiver: webhook2
    receivers:
    - name: 'email'
      email_configs:
      - to: '****@qq.com'
    - name: 'webhook1'
      webhook_configs:
      - send_resolved: true
        url: 'http://dingtalk.monitoring.svc.cluster.local:8060/dingtalk/webhook1/send'
    - name: 'webhook2'
      webhook_configs:
      - send_resolved: true
        # the path segment must match a target name in the dingtalk-config ConfigMap
        url: 'http://dingtalk.monitoring.svc.cluster.local:8060/dingtalk/webhook_mention_all/send'
    inhibit_rules:
    - source_match:
        severity: 'critical'
      target_match:
        severity: 'warning'
      equal: ['instance']
---
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: monitoring
  labels:
    name: alertmanager
  annotations:
    prometheus.io/scrape: 'true'
spec:
  selector:
    app: alertmanager
  ports:
  - name: alertmanager
    port: 9093
    protocol: TCP
    targetPort: 9093
    nodePort: 30093
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: alertmanager
  template:
    metadata:
      name: alertmanager
      labels:
        app: alertmanager
    spec:
      containers:
      - name: alertmanager
        image: 192.168.50.19:5000/alertmanager:0.23.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9093
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        volumeMounts:
        - name: config
          mountPath: /opt/alertmanager/alertmanager.yml
          subPath: alertmanager.yml
        - name: dingtalk-template-config
          mountPath: /opt/alertmanager/template/webhook.tmpl
          subPath: webhook.tmpl
        - name: alertmanager
          mountPath: /opt/alertmanager/data/
      volumes:
      - name: config
        configMap:
          name: alertmanager-config
      - name: alertmanager
        hostPath:
          path: /data
      - name: dingtalk-template-config
        configMap:
          name: dingtalk-template

[root@k8s-master prometheus]# kubectl apply -f alertmanager.yaml

[root@k8s-master prometheus]# vim webhook-config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: dingtalk-template
  namespace: monitoring
data:
  webhook.tmpl: |
    {{ define "dingtalk.text" -}}
    {{ if eq .Status "firing" }}
    【Alert】{{ .CommonAnnotations.summary }}
    > Severity: {{ .CommonLabels.severity }}
    > Instance: {{ .CommonLabels.instance }}
    > Description: {{ .CommonAnnotations.description }}
    > Time: {{ .CommonAnnotations.startsAt }}
    {{ else if eq .Status "resolved" }}
    【Resolved】{{ .CommonAnnotations.summary }}
    > Instance: {{ .CommonLabels.instance }}
    > Description: {{ .CommonAnnotations.description }}
    > Duration: {{ .Duration }}
    {{ end }}
    {{- end }}
    {{ define "dingtalk.title" -}}
    {{ if eq .Status "firing" }}
    【Alert】{{ .CommonAnnotations.summary }}
    {{ else if eq .Status "resolved" }}
    【Resolved】{{ .CommonAnnotations.summary }}
    {{ end }}
    {{- end }}

[root@k8s-master prometheus]# kubectl apply -f webhook-config.yaml

dingtalk

[root@k8s-master prometheus]# vim prometheus-webhook-dingtalk.yaml

---

apiVersion: v1

kind: ConfigMap

metadata:

name: dingtalk-config

namespace: monitoring

data:

config.yml: |

timeout: 5s

templates:

- /opt/prometheus-webhook-dingtalk/template/webhook.tmpl

targets:

webhook1:

url: https://oapi.dingtalk.com/robot/send?access_token=4c8948d70ed1db17e2b4daae36535258e781599acd01b1c1cbadf84c2b18fd05

secret: SECb019847b6d707a1958943e0fdffdcc6fce5b886d6506cf97788cef059b0f3005

webhook_mention_all:

url: https://oapi.dingtalk.com/robot/send?access_token=4c8948d70ed1db17e2b4daae36535258e781599acd01b1c1cbadf84c2b18fd05

mention:

all: true

---

apiVersion: v1

kind: Service

metadata:

name: dingtalk

namespace: monitoring

labels:

app: dingtalk

annotations:

prometheus.io/scrape: 'false'

spec:

selector:

app: dingtalk

ports:

- name: dingtalk

port: 8060

protocol: TCP

targetPort: 8060

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: dingtalk

namespace: monitoring

spec:

replicas: 1

selector:

matchLabels:

app: dingtalk

template:

metadata:

name: dingtalk

labels:

app: dingtalk

spec:

containers:

- name: dingtalk

image: 192.168.50.19:5000/prometheus-webhook-dingtalk:1.4.0

imagePullPolicy: IfNotPresent

ports:

- containerPort: 8060

volumeMounts:

- name: config

mountPath: /opt/prometheus-webhook-dingtalk/config.yml

subPath: config.yml

- name: dingtalk-template-config

mountPath: /opt/prometheus-webhook-dingtalk/template/webhook.tmpl

subPath: webhook.tmpl

volumes:

- name: config

configMap:

name: dingtalk-config

- name: dingtalk-template-config

configMap:

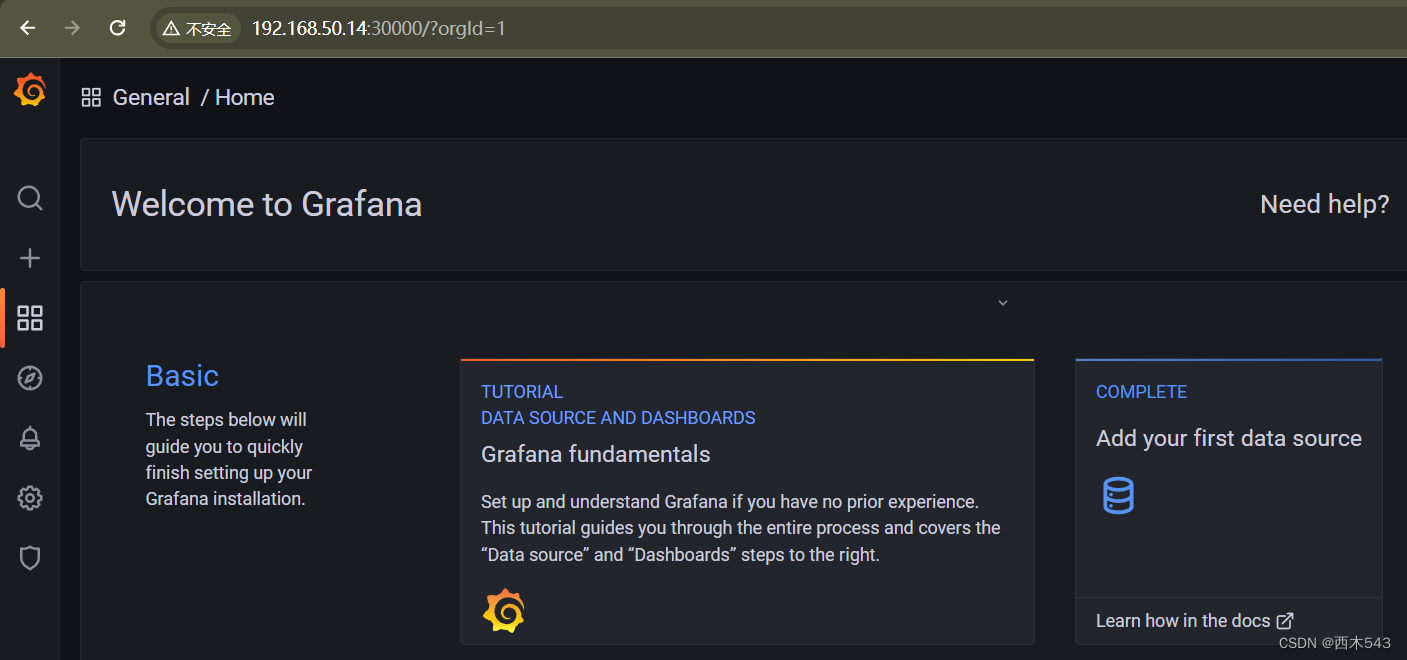

name: dingtalk-template[root@k8s-master prometheus]# kubectl apply -f prometheus-webhook-dingtalk.yamlgrafana

[root@k8s-master prometheus]# vim grafana-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: grafana
  namespace: monitoring
data:
  admin-password: YWRtaW4= # base64-encoded value of "admin"
  admin-username: YWRtaW4=
type: Opaque
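Values under `data:` in a Secret must be base64-encoded, and `YWRtaW4=` is simply `admin`. When generating or checking a value, use `printf '%s'` (or `echo -n`) so that no trailing newline ends up inside the encoded password. The helper names below are hypothetical.

```shell
# Encode a Secret value without a trailing newline, and decode one back.
b64enc() { printf '%s' "$1" | base64; }
b64dec() { printf '%s' "$1" | base64 -d; }
```

`b64enc admin` prints `YWRtaW4=`, while `echo admin | base64` prints `YWRtaW4K`, which decodes to "admin" plus a newline. Alternatively, `kubectl -n monitoring create secret generic grafana --from-literal=admin-username=admin --from-literal=admin-password=admin` does the encoding itself.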

[root@k8s-master prometheus]# kubectl apply -f grafana-secret.yaml
[root@k8s-master prometheus]# vim grafana.yaml
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
  labels:
    app: grafana
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/path: '/metrics'
spec:
  selector:
    app: grafana
  ports:
  - name: grafana
    port: 3000
    protocol: TCP
    targetPort: 3000
    nodePort: 30000
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: monitoring
  labels:
    app: grafana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      nodeName: k8s-node2
      containers:
      - name: grafana
        image: grafana/grafana:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          name: grafana
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 100Mi
        env:
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_DASHBOARDS_JSON_ENABLED
          value: "true"
        - name: GF_INSTALL_PLUGINS
          value: grafana-kubernetes-app
        - name: GF_SECURITY_ADMIN_USER
          valueFrom:
            secretKeyRef:
              name: grafana
              key: admin-username
        - name: GF_SECURITY_ADMIN_PASSWORD
          valueFrom:
            secretKeyRef:
              name: grafana
              key: admin-password
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: grafana-storage
          mountPath: /var/lib/grafana
        - name: grafana-log
          mountPath: /var/log/grafana
        - name: grafana-plugins
          mountPath: /var/lib/grafana/plugins
      volumes:
      - name: grafana-storage
        hostPath:
          path: /grafana # the directory needs sufficient permissions; the grafana/grafana image runs as UID 472 by default
      - name: grafana-log
        hostPath:
          path: /grafana/log
      - name: grafana-plugins
        hostPath:
          path: /grafana/plugins

[root@k8s-master prometheus]# kubectl apply -f grafana.yaml

结果查看

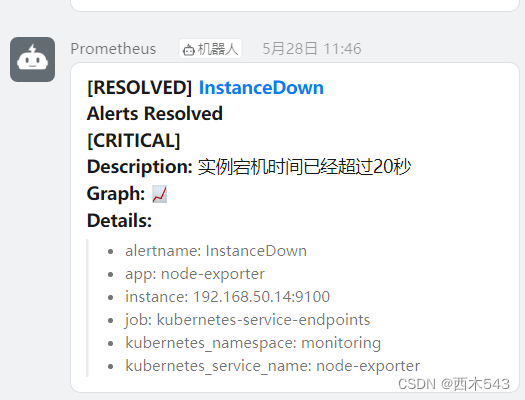

告警测试

[root@k8s-node1 ~]# vim kill_node-exporter.sh
#!/bin/bash
# Find the PID(s) listening on TCP port 9100 (node_exporter), ignoring the pause container,
# and kill them so the node_exporter target goes Down.
nodepid=$(netstat -lntp | awk '$4 ~ /:9100$/ && $NF !~ /pause/ {split($NF, a, "/"); print a[1]}' | sort -u)
if [ -z "$nodepid" ]; then
    # nothing is listening on 9100
    exit 0
fi
kill -9 $nodepid

# Run the script
sh kill_node-exporter.sh

